Generalized Stat Mech: The Boltzmann Approach

post by David Lorell, johnswentworth · 2024-04-12T17:47:31.880Z

Context

There's a common intuition that the tools and frames of statistical mechanics ought to generalize far beyond physics and, of particular interest to us, it feels like they ought to say a lot about agency and intelligence. But, in practice, attempts to apply stat mech tools beyond physics tend to be pretty shallow and unsatisfying. This post was originally drafted to be the first in a sequence on “generalized statistical mechanics”: stat mech, but presented in a way intended to generalize beyond the usual physics applications. The rest of the supposed sequence may or may not ever be written.

In what follows, we present very roughly the formulation of stat mech given by Clausius, Maxwell and Boltzmann (though we have diverged substantially; we’re not aiming for historical accuracy here) in a frame intended to make generalization to other fields relatively easy. We’ll cover three main topics:

- Boltzmann’s definition for entropy, and the derivation of the Second Law of Thermodynamics from that definition.

- Derivation of the thermodynamic efficiency bound for heat engines, as a prototypical example application.

- How to measure Boltzmann entropy functions experimentally (assuming the Second Law holds), with only access to macroscopic measurements.

Entropy

To start, let’s give a Boltzmann-flavored definition of (physical) entropy.

The “Boltzmann Entropy” is the log number of microstates of a system consistent with a given macrostate. We’ll use the notation:

$$S_{\text{Boltzmann}}(Y = y) := \log N[X \mid Y = y]$$

Where $Y = y$ is a value of the macrostate, $X$ is a variable representing possible microstate values (analogous to how a random variable $X$ would specify a distribution over some outcomes, and $X = x$ would give one particular value from that outcome-space), and $N[X \mid Y = y]$ is the number of microstate values consistent with $Y = y$.

Note that Boltzmann entropy is a function of the macrostate. Different macrostates - i.e. different pressures, volumes, temperatures, flow fields, center-of-mass positions or momenta, etc - have different Boltzmann entropies. So for an ideal gas, for instance, we might write $S_{\text{Boltzmann}}(P, V, T)$, to indicate which variables constitute “the macrostate”.
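To make the definition concrete, here is a minimal sketch that counts microstates by brute force. The toy system (a handful of coins, with the number of 1s as the macrostate) and the function name `boltzmann_entropy_table` are illustrative assumptions, not part of the original definition.

```python
from collections import Counter
from itertools import product
from math import log2

def boltzmann_entropy_table(n_coins):
    """Map each macrostate (number of 1s) to log2 of its microstate count."""
    # Enumerate every microstate and tally how many land on each macrostate.
    counts = Counter(sum(bits) for bits in product((0, 1), repeat=n_coins))
    return {y: log2(count) for y, count in sorted(counts.items())}

table = boltzmann_entropy_table(4)
for macrostate, entropy in table.items():
    print(macrostate, round(entropy, 3))
```

The entropy here is measured in bits because of the base-2 log; any other base just rescales the units.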

Considerations for Generalization

What hidden assumptions about the system does Boltzmann’s definition introduce, which we need to pay attention to when trying to generalize to other kinds of applications?

There’s a division between “microstates” and “macrostates”, obviously. As yet, we haven’t done any derivations which make assumptions about those, but we will soon. The main three assumptions we’ll need are:

- Microstates evolve reversibly over time.

- Macrostate at each time is a function of the microstate at that time.

- Macrostates evolve deterministically over time.

Mathematically, we have some microstate which varies as a function of time, $x(t)$, and some macrostate which is also a function of time, $y(t)$. The first assumption says that $x(t+1) = f_t(x(t))$ for some invertible function $f_t$. The second assumption says that $y(t) = g_t(x(t))$ for some function $g_t$. The third assumption says that $y(t+1) = F_t(y(t))$ for some function $F_t$.

The Second Law: Derivation

The Second Law of Thermodynamics says that entropy can never decrease over time; it can only increase or stay the same. Let’s derive that as a theorem for Boltzmann Entropy.

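Mathematically, we want to show that the log number of microstates compatible with the macrostate at time $t+1$ is at least the log number compatible with the macrostate at time $t$:

$$\log N[X(t+1) \mid Y(t+1) = y(t+1)] \geq \log N[X(t) \mid Y(t) = y(t)]$$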

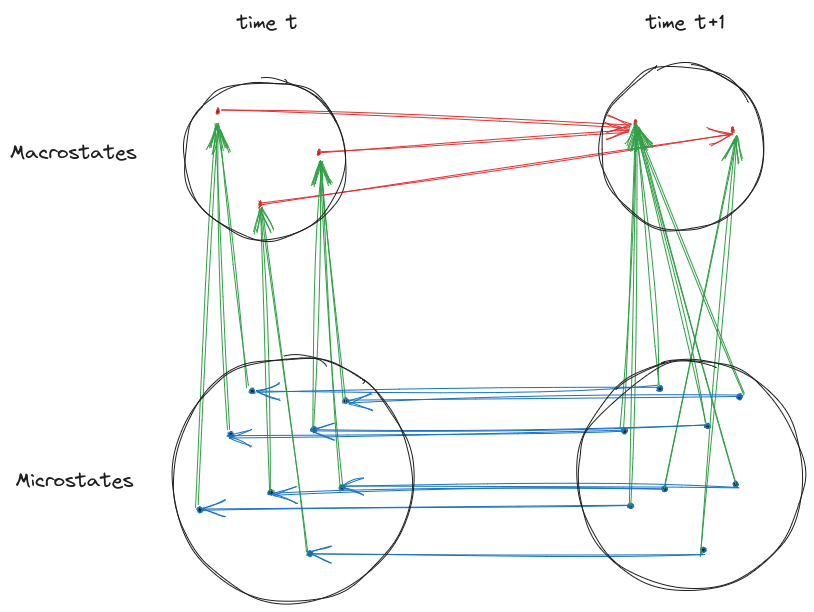

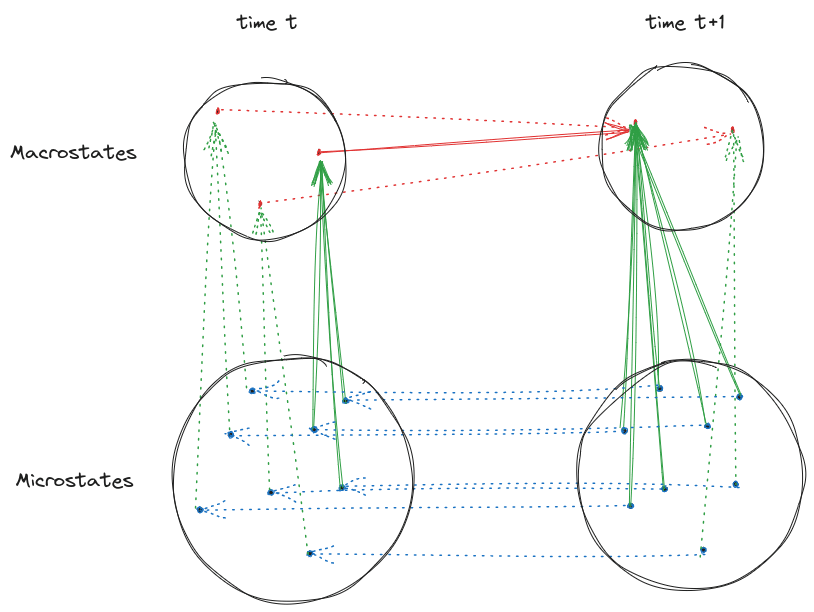

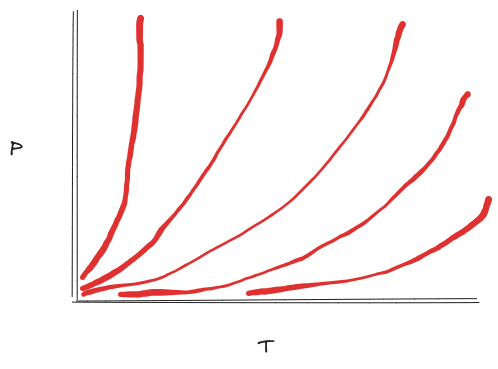

Visually, the proof works via this diagram:

The arrows in the diagram show which states (micro/macro at $t$/$t+1$) are mapped to which other states by some function. Each of our three assumptions contributes one set of arrows:

- By assumption 1, microstate $x(t)$ can be computed as a function of $x(t+1)$ (i.e. no two microstates $x(t)$ both evolve to the same later microstate $x(t+1)$).

- By assumption 2, macrostate $y(t)$ can be computed as a function of $x(t)$ (i.e. no microstate $x(t)$ can correspond to two different macrostates $y(t)$).

- By assumption 3, $y(t+1)$ can be computed as a function of $y(t)$ (i.e. no macrostate $y(t)$ can evolve into two different later macrostates $y(t+1)$).

So, we get a “path of arrows” from each final microstate to each final macrostate, routing through the earlier micro and macrostates along the way.

With that picture set up, what does the Second Law for Boltzmann entropy say? Well, $N[X(t+1) \mid Y(t+1) = y(t+1)]$, the number of final microstates compatible with the final macrostate, is the number of paths which start at any final microstate and end at the dot representing the final macrostate $y(t+1)$. And $N[X(t) \mid Y(t) = y(t)]$, the number of initial microstates compatible with the initial macrostate, is the number of different paths which start at any initial microstate and end at the dot representing the initial macrostate $y(t)$.

Then the key thing to realize is: every different path from (any initial micro) to (initial macro $y(t)$) is part of a different path from (any final micro) to (final macro $y(t+1)$). So, $N[X(t+1) \mid Y(t+1) = y(t+1)]$ is at least as large as $N[X(t) \mid Y(t) = y(t)]$.

Also, there may be some additional paths from (any final microstate) to (final macrostate $y(t+1)$) which don’t route through $y(t)$, but instead through some other initial macrostate. In that case, multiple initial macrostates map to the final macrostate; conceptually “information is lost”. In that case, $N[X(t+1) \mid Y(t+1) = y(t+1)]$ will be greater than $N[X(t) \mid Y(t) = y(t)]$ because of those extra paths.

And that’s it! That’s the Second Law for Boltzmann Entropy.
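The path-counting argument can be checked numerically on a small finite system. The sketch below is only an illustration under assumed choices: the sizes, the random construction, and the name `second_law_demo` are all hypothetical. It builds a reversible micro map `f`, a macro function `g_t`, a deterministic macro map `F`, and then picks the later macro function so that the three assumptions hold by construction.

```python
import random
from collections import Counter

def second_law_demo(num_micro=12, num_macro=4, seed=0):
    """Build a random toy system satisfying the three assumptions and return
    (y, N_t[y], F[y], N_next[F[y]]) for every occupied macrostate y."""
    rng = random.Random(seed)
    micro = list(range(num_micro))

    # Assumption 1: reversible micro dynamics, i.e. a permutation f.
    images = micro[:]
    rng.shuffle(images)
    f = dict(zip(micro, images))

    # Assumption 2: the macrostate at time t is a function g_t of the microstate.
    g_t = {x: rng.randrange(num_macro) for x in micro}

    # Assumption 3: deterministic macro dynamics F.
    F = {y: rng.randrange(num_macro) for y in range(num_macro)}

    # Choose the later macro function so that g_next(f(x)) == F(g_t(x)) for all x.
    g_next = {f[x]: F[g_t[x]] for x in micro}

    n_t = Counter(g_t.values())
    n_next = Counter(g_next.values())
    return [(y, n_t[y], F[y], n_next[F[y]]) for y in sorted(n_t)]

rows = second_law_demo()
for y, count_now, y_next, count_next in rows:
    print(f"y={y}: {count_now} microstates -> y'={y_next}: {count_next} microstates")
```

Whenever two earlier macrostates map to the same later macrostate, the later count is the sum of the earlier counts, which is the “information is lost” case described above.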

Some Things We Didn’t Need

Now notice some particular assumptions which we did not need to make, in deriving the Second Law.

First and foremost, there was no mention whatsoever of equilibrium or ergodicity. This derivation works just fine for systems which are far out of equilibrium. More generally, there were no statistical assumptions - we didn’t need assumptions about microstates being equally probable or anything like that. (Though admittedly we’d probably need to bring in statistics in order to handle approximation - in particular the "macrostate evolution is deterministic" assumption will only hold to within some approximation in practice.)

Second, there was no mention of conservation of energy, or any other conserved quantity.

Third, there wasn’t any need for the evolution functions to be time-symmetric, for the evolution to be governed by a Hamiltonian, or even for the microstates to live in the same space at all. $x(t)$ could be a real 40-dimensional vector, $x(t+1)$ could be five integers, and $x(t+2)$ could be the color of a Crayola crayon. Same for $y$ - macrostates can take values in totally different spaces over time, so long as they evolve deterministically (i.e. we can compute $y(t+1)$ from $y(t)$).

… so it’s a pretty general theorem.

The Big Loophole

From a physics perspective, the first two assumptions are pretty cheap. Low-level physics sure does seem to be reversible, and requiring macrostate to be a function of microstate is intuitively reasonable. (Some might say “obvious”, though I personally disagree - but that’s a story for a different post.)

But the assumption that macrostate evolution is deterministic is violated in lots of real physical situations. It’s violated every time that a macroscopic outcome is “random”, in a way that’s fully determined by the microstate but can’t be figured out in advance from the earlier macrostate - like a roulette wheel spinning. It’s violated every time a macroscopic device measures some microscopic state - like an atomic force microscope or Geiger counter.

Conceptually, the Second Law says that the macrostate can only lose information about the microstate over time. So long as later macrostates are fully determined by earlier macrostates, this makes sense: deterministic functions don’t add information. (In an information theoretic frame, this claim would be the Data Processing Inequality.) But every time the macrostate “observes the microstate”, it can of course gain information about the microstate, thereby (potentially) violating the Second Law.

It’s the classic Maxwell’s demon problem: if we (macroscopic beings) can observe the microstate, then the Second Law falls apart.
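A tiny sketch of the loophole, under assumed toy definitions (the 8-microstate system, the “blank” display and all function names are hypothetical): the micro dynamics are reversible and the macrostate is a function of the microstate at each time, but the later macrostate records the microstate, so macro evolution is not deterministic and the microstate count drops.

```python
from collections import Counter
from math import log2

MICROSTATES = range(8)  # hypothetical system with 8 microstates

def evolve(x):
    """Micro dynamics: the identity map, which is trivially reversible."""
    return x

def macro_before(x):
    """Before the measurement the display is blank, whatever the microstate."""
    return "blank"

def macro_after(x):
    """After the measurement the display shows the microstate itself."""
    return ("shows", x)

count_before = Counter(macro_before(x) for x in MICROSTATES)
count_after = Counter(macro_after(evolve(x)) for x in MICROSTATES)

entropy_before = log2(count_before["blank"])
entropy_after = {y: log2(c) for y, c in count_after.items()}

# The single earlier macrostate "blank" has many possible successors,
# so assumption 3 (deterministic macro evolution) fails.
successors_of_blank = {macro_after(evolve(x)) for x in MICROSTATES}

print(entropy_before, max(entropy_after.values()), len(successors_of_blank))
```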

On the other hand, people have proposed ways to fix this loophole! The most oft-cited version is Landauer’s Principle, which (in the frame we’re using here) replaces the assumption of macroscopic determinism with an assumption that the macrostate can’t “get bigger” over time - i.e. the number of possible macrostate values cannot increase over time. (In Landauer’s original discussion, he talked about “size of memory” rather than “size of macrostate”.) And sure, that’s a reasonable alternative assumption which highlights some new useful applications (like e.g. reversible computing)… but it’s also violated sometimes in practice. For instance, every time a bunch of macroscopic crystals precipitate out of a solution, many new dimensions are added to the macrostate (i.e. the lattice orientation of each crystal).

And yet, we still have the practical observation that nobody has been able to build a perpetual motion machine. So presumably there is some version of the Second Law which actually holds in practice? We’ll return to that question in later posts, when we introduce other formulations of statistical mechanics, other than the Boltzmann-style formulation.

But for now, let’s see how the Second Law for Boltzmann Entropy establishes performance limits on heat engines, insofar as its assumptions do apply.

Application: The Generalized Heat Engine

This will be a rehash of the Generalized Heat Engine, reframing it in terms of microstates/macrostates and arguing for the same conclusion by directly using the Second Law for Boltzmann Entropy. (Go read that post first if you want to avoid spoilers! This section will give it away without much ado.)

A heat engine is a device which uses the differential in temperature of two baths to extract work (like, say, to move a pump.) Imagine a big tank of hot water connected to another tank of cold water, and along the pipe connecting them is a small box with a piston. As heat transfers from the hot water to the cold water, the piston moves. We will be considering a more general form of this within the frame of statistical mechanics, as an application of Boltzmann’s Second Law.

So, suppose we have a “hot pool” of random coin flips $X_H$, all 1s and 0s, and a “cold pool” $X_C$, where each pool contains $n$ IID biased coins, with biases 0.2 and 0.1 for hot and cold respectively. We also have a deterministic “work pool” $X_W$, initially all 0, analogous to the box with the piston.

The Second Law says, “The (log) number of microstates compatible with the macrostates must not decrease over time.” Here the so-called “microstate” is the particular setting of 1’s and 0’s in each pool. In our case, this can be thought of as exactly where all the 1’s are across our three pools. The “macrostate” is a summary of the microstate, in this case it’s just the sum total of 1s in each pool. E.g. (200, 100, 0) for the hot, cold, and work pools respectively.

Our goal in constructing a heat engine is to somehow couple these pools together so that the sum-total number of 1s in the work pool goes up.

We want to use the second law, so let’s walk through the assumptions required for the second law to hold:

- The microstate $(X_H, X_C, X_W)$ (the bits in the hot, cold and work pools), i.e. where the 1s are in each pool, evolves reversibly over the course of operation.

- The macrostate (number of 1s in the hot bath, number of 1s in the cold bath, number of 1s as work) is a function of the microstate at each time. Namely, $\left(\sum_i X_{H,i}, \sum_i X_{C,i}, \sum_i X_{W,i}\right)$.

- The macrostate evolves deterministically over time. So knowing the sum total of 1s in each of the hot pool and cold pool initially, the sum total number of 1s in each of the pools at each later time is determined.

One more assumption is needed for the heat engine to be meaningful, in addition to the second law: The sum of 1s across all pools must be constant at all times. This is our analogue to the conservation of energy. Without this, we might just find that if we sit around, our work pool happens to collect some 1s all on its own. Once we say that the sum of 1s across all the pools must be constant, those 1s will have to have come from somewhere, and that’s where things get interesting.

The Single Pool

So, you’ve got two equal-sized tanks of “Bernoulli liquid,” a mysterious substance composed of invisibly small particles which may be 1s or may be 0s. You also have a third tank that’s empty, the “work” tank, which has a filter on it so that only 1s can pass through, and you’d like to fill it up using the 1s from the other pools.

The first thing you might try is to just set up a machine so the invisible 1s from just one of the pools flow directly into the work tank. You will fail.

The “hot” pool is non-deterministic. You read off “0.2” from the sum-o-meter attached to the side of the tank containing the hot pool, and that means that 20% of the liquid is made of 1s, but importantly, which bits of the liquid are 1s is not known. There are, therefore, $\binom{n}{0.2n}$ ways your liquid of $n$ bits could be, all of which would give that same reading.

The “work” pool is, however, deterministic. Only 1s can enter it, so when you can read off the sum-o-meter from the work tank, there is exactly one way it could be. If it reads 0.1, then that means that 10% of the tank is full and they are all 1s. There is no ambiguity about where the 1s are in the work tank, if there are any. In fact, it currently reads “0.” Your goal is to somehow couple this pool to the hot pool such that this sum-o-meter ticks upward.

So you toil away, trying various ways to couple the two vats so that non-deterministic 1s from the hot pool might spontaneously start becoming deterministic 1s replacing the 0s, in order, in the work pool. (You know about conservation of energy, so you’re aware that those 1s will have to come out of the hot pool.) Of course, you can’t actually observe the 1s and 0s. On the level of actual observations you expect to make, you’re just hoping to see the sum-o-meter tick down on the hot pool and tick up on the work pool.

No matter what you try, it will never work. Why?

Since the underlying system evolves reversibly, and the macroscopic state (the readings on the sum-o-meters) is a function of the 1s and 0s underlying it all, and the macrostate evolves deterministically, (all by assumption,) Boltzmann’s Second Law holds. I.e. the number of ways in which the 1s and 0s could be distributed which is consistent with the readings on the sum-o-meters will never decrease.

In attempting to make a heat engine out of just the hot pool, you were attempting to violate the Second Law. The number of possible microstates compatible with the sum-o-meter readings at the beginning was $\binom{n}{0.2n} \cdot 1$, where the first term is the number of ways 1s and 0s could appear in the hot pool, and the second term is the number of ways 1s and 0s could appear in the work pool (which is deterministic and so always 1). If even a single 1 got transferred, the resulting number of states compatible with the sum-o-meter readings would be $\binom{n}{0.2n - 1} \cdot 1$. Since $\binom{n}{m}$ is increasing with $m$ whenever $m$ is less than $n/2$, subtracting 1 from $m$ makes the number of possible states go down. Put differently, the number of ways the 1s and 0s could be distributed throughout the system goes down as 1s are moved from the hot pool to the work pool. Since the Second Law forbids this, and the Second Law holds, you can’t build a heat engine with just a hot pool.
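As a quick numerical check of the single-pool argument, here is a sketch with an assumed pool size of n = 1000 (chosen to match the (200, 100, 0) example macrostate; the variable names are illustrative). It compares the microstate count before and after moving a single 1 from the hot pool to the work pool.

```python
from math import comb, log2

n = 1000          # assumed pool size, matching the (200, 100, 0) example
hot_ones = 200    # hot pool reads 0.2 on the sum-o-meter

# The work pool is deterministic, so it always contributes a factor of 1.
before = comb(n, hot_ones) * 1
after = comb(n, hot_ones - 1) * 1   # one 1 moved from the hot pool to the work pool

entropy_change_bits = log2(after) - log2(before)
print(after < before, entropy_change_bits)
```

The change is negative, so this transfer would reduce the Boltzmann entropy, which the Second Law forbids.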

Two Pools

Now you’ve woken with a jolt from an inspiring dream with a devious insight: Piece 1: Sure, when you move a 1 from the hot pool to the work pool, the number of microstates of 1s and 0s compatible with what the sum-o-meters say goes down…but the amount by which the number of microstates goes down also goes down as more 1s are moved. Mathematically, . Piece 2: It goes the other way too, and for the same reason. If you had moved a bunch of 1s to the work pool and then start moving them back to the hot pool, the number of microstates compatible with the sum-o-meters would increase quickly at first, and then slow down. To see this, multiply the above expression by -1 on both sides.

Putting that together, if you had a third pool which already had fewer 1s in it than the hot pool (call it the “cold” pool) then moving a 1 from the hot pool to the cold pool might result in a net increase in the number of states compatible with the sum-o-meters. This is because the positive change caused by adding the 1 to the relatively colder pool would be greater than the negative change caused by taking the 1 from the hotter pool. If you did that enough times…

You jump into your extremely necessary lab coat and head off to the heat-engine-laboratory. You fill up your hot tank with Bernoulli liquid mixed to read “0.2” on the sum-o-meter, then you fill up your cold tank with Bernoulli liquid mixed to read “0.1” on the sum-o-meter. Finally, you attach them together with the work tank and slap on a little dial at their interface.

The dial determines how many 1s to let by into the cold pool from the hot pool before diverting a single 1 to the work pool. Currently it’s set to 0 and nothing is happening. You sharply twist the dial and the machine roars to life! The sum-o-meters on both the cold tank and the work tank are slowly ticking upward, while the sum-o-meter on the hot tank goes down. You slowly dial it down and as you do, the sum-o-meter on the cold tank slows while the sum-o-meter on the work tank speeds up. Eventually you turn the dial a bit down and it all stops. Delicately, just a hair up, and the machine starts again.

You’ve done it! But how exactly does this work, and what’s up with that dial?

Well, whenever a 1 was transferred from the hot pool to the cold pool, the net change in number of possible microstates (places that the 1s and 0s in the whole system could be) consistent with the sum-o-meter readings was positive. Whenever a 1 was transferred from the hot pool to the work pool, instead, the net change was negative. By setting the dial such that more of the positive change occurred (by shuttling 1s to the cold pool from the hot pool) than the negative change (by shuttling 1s to the work pool from the hot pool), the total effect was still positive and the second law was satisfied. At the end, by carefully turning the dial to the very edge of when the machine would work at all, the minimum number of 1s were being shuttled to the cold pool for each 1 sent to the work pool such that the number of microstates consistent with the macrostates (readings of the sum-o-meters) was not decreasing.
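The sign of each kind of transfer can be checked directly. This is a minimal sketch assuming n = 1000 bits per pool with 200 and 100 ones in the hot and cold pools; `log2_microstates` is a hypothetical helper name.

```python
from math import comb, log2

def log2_microstates(n, hot, cold):
    """log2 of the joint microstate count for hot and cold pools of n bits each.
    The work pool is deterministic and always contributes a factor of 1."""
    return log2(comb(n, hot)) + log2(comb(n, cold))

n, hot, cold = 1000, 200, 100
start = log2_microstates(n, hot, cold)

# Move a single 1 from the hot pool to the cold pool.
hot_to_cold = log2_microstates(n, hot - 1, cold + 1) - start
# Move a single 1 from the hot pool to the work pool.
hot_to_work = log2_microstates(n, hot - 1, cold) - start

print(hot_to_cold, hot_to_work)
```

The first change is positive and the second is negative, so enough hot-to-cold transfers can “pay for” one hot-to-work transfer.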

But what was special about that particular setting of the dial? Ideally, you’d like to get as many of the 1s from the hot pool into the work pool rather than the cold pool. So what determined the minimum setting of the dial? Clearly, the difference in the relative “temperatures”, the values of the sum-o-meters, the number of 1s, in each of the hot and cold pools determined the net gain in the number of microstates compatible with the macrostates. (And therefore where the dial needed to be for the engine to run at all.) If the pools were the same temperature, there would be no change and no matter how high you turned the dial, the machine wouldn’t start. If one was 0.5 and the other was 0.0, there would be a ton of change and only a small turn of the dial would be enough to start extracting work.

Let’s work through this “efficiency limit” in more detail.

Thermodynamic Efficiency Limits

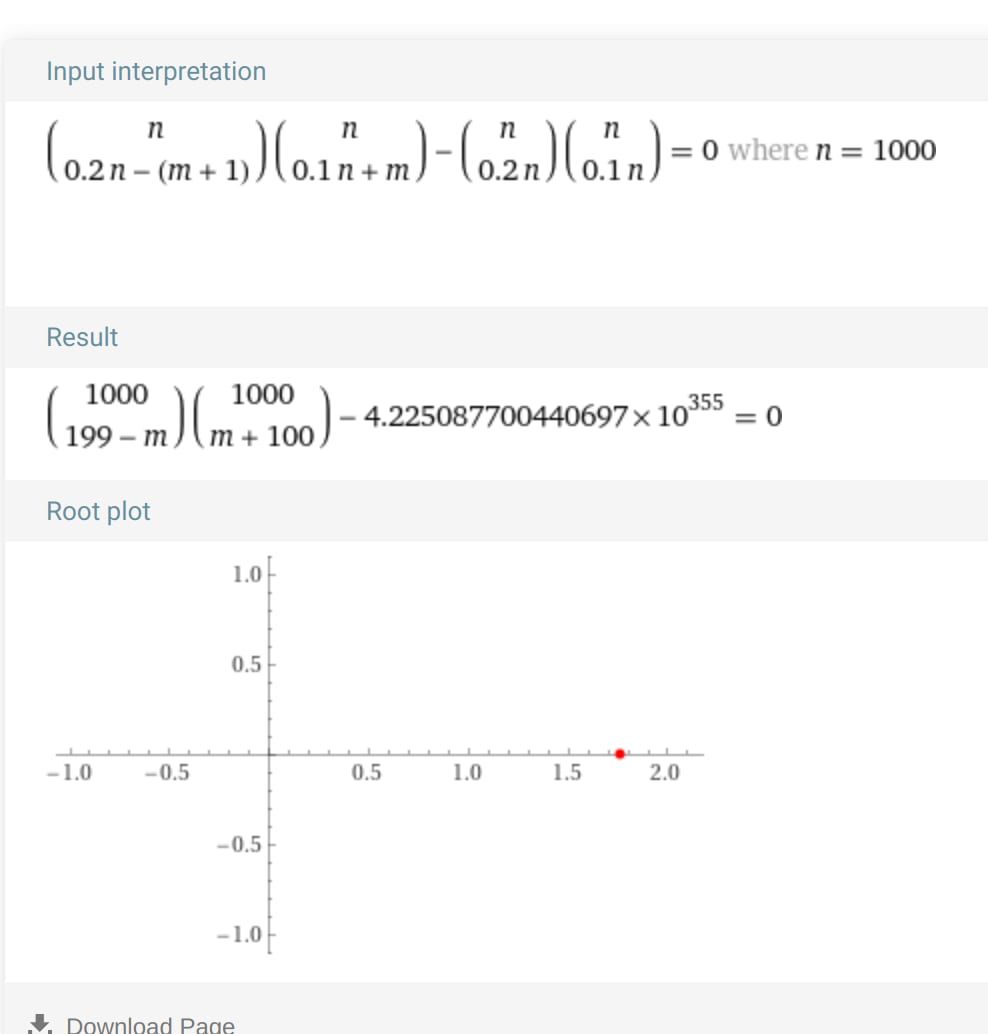

There were microstates compatible with the macrostate at the start. Assuming n is very large (like, moles (mol) large), then moving a bunch of 1s should all have about the same marginal effect on the change in number of possible microstates. Thus, let m be the first integer such that . This makes m be the first integer such that the second law can hold after a transfer of 1s while redirecting one of them to the work pool. Setting the dial to , which will redirect 1 out of every 1s moved out of the hot pool, then, should make the engine be as efficient as theoretically possible. That is, move as many 1s to the work pool relative to the number moved out of the hot pool, while still running at all. Set the dial to any value less than and the engine won’t run.

Another way of interpreting m: The initial distribution of the 1s and 0s in the hot/cold pools is maximum-entropy subject to a constraint on the sum-o-meters, the number of 1s in each. The solution to the max entropy optimization problem comes with some terms called Lagrange multipliers, one for each constraint. In this setting, they represent how much a marginal 1 adds or removes from the entropy (read: log number of microstates compatible with the macrostate). In standard statistical mechanics, this multiplier is the inverse temperature of the system. Quantitatively, $\frac{1}{T_H} = \log \frac{1 - 0.2}{0.2} = \log 4$, and similarly for the cold pool, $\frac{1}{T_C} = \log \frac{1 - 0.1}{0.1} = \log 9$. The traditional thermodynamic efficiency measure is, for each 1 removed from the hot pool, we can extract $1 - \frac{T_C}{T_H}$ 1s of work. Plugging in our numbers, that quantity is ~$1 - \frac{\log 4}{\log 9}$, or ~$0.37$.
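The marginal-entropy reading of inverse temperature can be checked against exact binomial counts. This sketch assumes n = 10,000 bits per pool (an arbitrary illustrative size) and measures entropy in bits; `inverse_temperature_bits` is a hypothetical helper.

```python
from math import comb, log2

def inverse_temperature_bits(n, ones):
    """Entropy gained, in bits, by adding one more 1 to a pool of n bits."""
    return log2(comb(n, ones + 1)) - log2(comb(n, ones))

n = 10_000  # assumed pool size
beta_hot = inverse_temperature_bits(n, 2000)    # hot pool at 0.2
beta_cold = inverse_temperature_bits(n, 1000)   # cold pool at 0.1

# Efficiency 1 - T_C / T_H, written in terms of inverse temperatures.
efficiency = 1 - beta_hot / beta_cold
print(beta_hot, beta_cold, efficiency)
```

The ratio of the two inverse temperatures does not depend on the base of the logarithm, so the efficiency comes out the same in bits or nats.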

How does that square up with our microstates vs macrostates? Letting be , solving for in by shoving it into Wolfram Alpha, we get…well, the computation timed out, but if we set and accept that the result is going to be approximate…it also times out. But this time produces a plot! Which, if we inspect, does say that , and so (number of 1s we can move to the work pool for each 1 moved to the cold pool) is the ~same as the standard thermodynamic limit, and should be exactly the same in the limit of n.
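Exact integer arithmetic sidesteps the timeouts. The sketch below encodes one reading of the condition, which is an assumption on our part: move k 1s out of the hot pool, send k - 1 of them to the cold pool and 1 to the work pool, and ask for the smallest k that does not reduce the microstate count. The pool size n = 1000 and the name `smallest_batch` are also illustrative.

```python
from math import comb

def smallest_batch(n, hot, cold):
    """Smallest k such that moving k 1s out of the hot pool, with k - 1 going
    to the cold pool and 1 going to the work pool, does not reduce the
    number of microstates compatible with the macrostate."""
    start = comb(n, hot) * comb(n, cold)
    for k in range(1, hot + 1):
        if comb(n, hot - k) * comb(n, cold + k - 1) >= start:
            return k
    return None  # no batch size satisfies the Second Law

k = smallest_batch(1000, 200, 100)
print(k, 1 / k)
```

With these numbers the search returns k = 3, i.e. one 1 of work per three 1s taken from the hot pool. That sits just under the ~0.37 bound from the inverse temperatures, as expected when k is restricted to whole numbers.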

Measurement

Suppose the assumptions needed for the Second Law apply, but we don’t know how many microstates are compatible with each macrostate - or even know much about the microstates or their behavior at all, other than reversibility. But we can measure macroscopic stuff, and set up macroscopic experiments.

In that case, we can use the Second Law along with experiments to back out information about the number of microstates compatible with each macrostate.

One Piston

As a starting point: let’s say we have some air in a piston. There are various (macroscopic) operations we can perform on the piston to change the air’s macrostate, like expanding/compressing or heating/cooling at reasonable speeds, and we find experimentally that those macroscopic operations have deterministic results - so the Second Law applies.

Now let’s make sure the system is well-insulated, then attach a weight to the piston, like this:

… and then pull the piston slightly past its equilibrium point and release it, so the weight bounces up and down as though it’s on a spring.

Let’s assume that:

- We’ve insulated the system well, so it has no relevant interactions with the rest of the world

- Friction turns out to be low (note that this is experimentally testable), so the piston “bounces” to about-the-same-height each cycle for a while.

- The microstate’s relationship to the macrostate stays the same over time (i.e. in our earlier notation, the function $g_t$ mapping microstate to macrostate is the same for all $t$).

- We’re willing to sweep any issues of approximation under the rug for now, as physicists are wont to do.

Then: by the Second Law, the number of microstates compatible with the macrostate cannot decrease over time. But the system is regularly returning to the same macrostate, which always has the same number of microstates compatible with it (because the microstate-to-macrostate function $g_t$ stays the same over time). So the number of microstates compatible with the macrostate can’t increase over time, either - since it would have to come back down as the macrostate returns to its starting value each cycle.

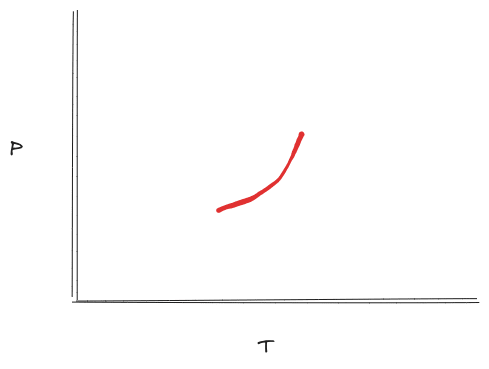

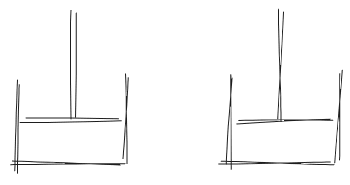

We conclude that the number of microstates consistent with the macrostate is constant over time - i.e. all the macrostates which the piston is cycling through, as it oscillates, have the same number of microstates. In this experiment, our macrostate would be e.g. the pressure and temperature of the air, and we’d find that they both move along a curve as the piston “bounces”: when more compressed, the pressure and temperature are both higher, and when less compressed, the pressure and temperature are both lower. That gives us a curve in pressure-temperature space, representing all the macrostates through which the piston moves over its cycle:

Our main conclusion is that all the macrostates on that line have the same number of compatible microstates; the line is an “iso-entropy” curve (or “isentropic curve” for short).

If we repeat this experiment many times with different initial temperatures and pressures, then we can map out whole equivalence classes of macrostates of the air in the piston, i.e. many such lines. Each line runs through macrostates which all have the same number of microstates compatible with them.

So we don’t know how many microstates are compatible with the macrostates on each line, but we at least know which macrostates have the same number of microstates.
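As a sanity check against a case where the answer is already known, the sketch below assumes an ideal monatomic gas, whose textbook entropy per particle is ln V + (3/2) ln T up to an additive constant (in units of Boltzmann’s constant). That formula, the starting point (1.0, 300.0) and the function names are assumptions brought in for illustration; the measurement procedure itself never needs them.

```python
import math

def entropy_per_particle(volume, temperature):
    """Ideal monatomic gas entropy per particle, in units of k_B,
    up to an additive constant (assumed textbook form)."""
    return math.log(volume) + 1.5 * math.log(temperature)

def adiabat_temperature(volume, v0, t0):
    """Temperature on the reversible adiabat through (v0, t0):
    T * V**(2/3) stays constant for an ideal monatomic gas."""
    return t0 * (v0 / volume) ** (2.0 / 3.0)

v0, t0 = 1.0, 300.0  # hypothetical starting volume and temperature
curve = [(v, adiabat_temperature(v, v0, t0)) for v in (0.8, 0.9, 1.0, 1.1, 1.2)]
entropies = [entropy_per_particle(v, t) for v, t in curve]

for (v, t), s in zip(curve, entropies):
    print(f"V={v:.1f}  T={t:.1f}  S={s:.6f}")
```

Every point the bouncing piston passes through has the same entropy, which is what the experiment would reveal without access to the formula.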

We can do better.

More Pistons

Key observation: if I have two pistons, and I insulate them well so that there are no relevant interactions between their microstates (other than the interactions mediated by the macrostates), then the number of joint microstates compatible with the joint macrostate of the two pistons is the product of the number of microstates of each piston compatible with its macrostate separately.

That gives us a powerful additional tool for measuring numbers of microstates. (Note: in these examples, we can take $Y_1$ to be the volume and temperature of gas in one piston, and $Y_2$ to be the volume and temperature of gas in the other.)
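The product rule is easy to confirm by brute force on a toy pair of systems. In this sketch the two “pistons” are stand-ins made of 5 and 4 bits, with the number of 1s as each macrostate; those sizes and the function names are illustrative assumptions.

```python
from itertools import product

def count_microstates(n_bits, n_ones):
    """Number of bit strings of length n_bits containing exactly n_ones 1s."""
    return sum(1 for bits in product((0, 1), repeat=n_bits) if sum(bits) == n_ones)

def count_joint(n1, k1, n2, k2):
    """Number of joint microstates (a, b) compatible with the joint macrostate (k1, k2)."""
    total = 0
    for a in product((0, 1), repeat=n1):
        for b in product((0, 1), repeat=n2):
            if sum(a) == k1 and sum(b) == k2:
                total += 1
    return total

joint = count_joint(5, 2, 4, 1)
separate = count_microstates(5, 2) * count_microstates(4, 1)
print(joint, separate)
```

Because the joint macrostate constrains each subsystem separately, the joint count factors, and the entropies (logs of the counts) add.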

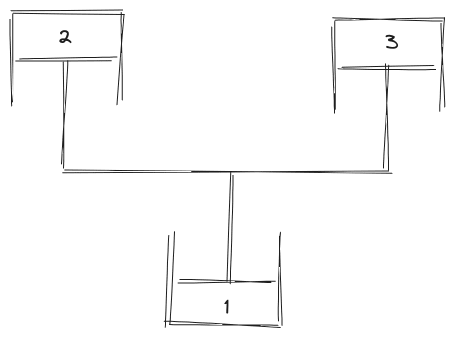

Suppose I set up three identical pistons like this:

When the bottom piston expands, the top two both compress by the same amount, and vice versa.

As before, I find the rest-state of my pistons, then give them a little push so that they oscillate like a spring. Via the same argument as before, the number of joint microstates compatible with the joint macrostate must stay constant. But now, that number takes the form of a product. Writing $N[X_i \mid Y_i]$ for the number of microstates of piston $i$ compatible with its macrostate $Y_i$, with pistons 1 and 2 on top and piston 3 on the bottom:

$$N[X_1 \mid Y_1] \, N[X_2 \mid Y_2] \, N[X_3 \mid Y_3] = \text{constant}$$

… and since the two pistons on top are arranged symmetrically, we always have $Y_1 = Y_2$. Put all that together, and we find that

$$N[X_1 \mid Y_1]^2 \, N[X_3 \mid Y_3] = \text{constant}$$

or

$$2 \log N[X_1 \mid Y_1] + \log N[X_3 \mid Y_3] = \text{constant}$$

Repeat the experiment with varying numbers of pistons on top and bottom, and we can map out (to within rational numbers) the ratio of entropies on each pair of iso-entropy curves.

At that point, we’ve determined basically the entire entropy function - the number of microstates compatible with each macrostate - except for a single unknown multiplicative constant, equivalent to choosing the units of entropy.

Other Systems

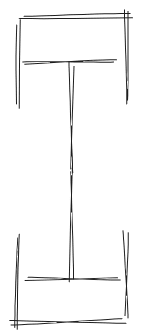

Once we’ve figured out that entropy function (up to a single multiplicative constant), we can use our piston as a reference system to measure the entropy function (i.e. number of microstates compatible with each macrostate) for other systems.

As a simple example, suppose we have some other gas besides air, with some other complicated/unknown entropy function. Well, we can put them each in an insulated piston, and attach the pistons much like before:

Then, as before, we give the shared piston a poke and let it oscillate.

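As before, we have

$$N[X_{\text{air}} \mid Y_{\text{air}}] \, N[X_{\text{gas}} \mid Y_{\text{gas}}] = \text{constant}$$

implying

$$\log N[X_{\text{air}} \mid Y_{\text{air}}] + \log N[X_{\text{gas}} \mid Y_{\text{gas}}] = \text{constant}$$

… for all the macrostates $Y_{\text{air}}$, $Y_{\text{gas}}$ which the two gases pass through during their cycle.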

Key Assumptions Needed For Measurement: Interfaces

What assumptions did we need in order for this measurement strategy to work? I immediately see two big assumptions (besides the background assumptions from earlier in the post):

- Repeated experiments: we need to be able to do multiple experiments on “the same system”. That means we need to be able to both identify and experimentally prepare a system which is governed by the same rules, each time we set up the experiment. Specifically, we need to be able to prepare a piston full of gas such that it’s governed by the same rules each time we prepare it.

- Restricted interface: we need the pistons to only interact via the macroscopic variables. That means we need to identify “macroscopic variables” which mediate between e.g. the gas in one piston and the rest of the world.

(There are also “smaller assumptions”, like e.g. ignoring friction or approximation; those seem like issues which are artifacts of working with an intentionally-simplified theoretical setting. They’ll be important to deal with in order to flesh out a more complete formal theory, but they’re not conceptually central.)

But there’s one more big assumption which is less obvious until we try an example.

Suppose we have two pistons full of gas:

We know how to set up the pistons so they’ll be governed by consistent rules, and we know how to insulate them so the interactions between gas and rest-of-the-world are mediated (to reasonable precision) by pressure, volume and/or temperature. But at this point, the two pistons are just… sitting there next to each other. We need one more piece: we need to know how to couple them, and the coupling itself has to work a certain way. For instance, the trivial coupling - i.e. just leaving the two pistons sitting next to each other - clearly won’t work for our measurement methods sketched earlier.

What kind of coupling do we need? Claim: first and foremost, we need to couple the two subsystems in such a way that the macrostate of the coupled system is smaller than the macrostate of the trivially-coupled system, but the macrostate of each individual subsystem remains the same. In the case of the two pistons:

- The macrostates of the two pistons separately are and , respectively.

- Trivially coupled, the joint macrostate is .

- If we connect the two pistons as we did in the measurement section, then the joint macrostate is . (Or, equivalently, .) Each individual piston has the same macrostate as before, but the joint macrostate is smaller compared to trivial coupling.

- If we just lock both pistons in place, so volume of each is constant, then the joint macrostate is . That’s smaller than , but it doesn’t leave the macrostate of each subsystem unchanged.

- If the gas in each piston is fissile, and we couple them by putting the two pistons close enough together that the fission goes supercritical, then that would totally change which variables mediate interactions between the two systems.

Statistics?

We’re now reaching the end of a post on generalized statistical mechanics. Note that there has been a rather suspicious lack of statistics! In particular, at no point did we make an assumption along the lines of “all microstates are ~equally probable”.

This is not accidental. This post illustrates the surprisingly powerful conclusions and methods which can be reached even without any statistical assumptions. But there are problems which we can’t solve this way. For instance: suppose we have a gas in one half of a container, kept out of the other half by a valve. We then open the valve. What macrostate will the gas eventually settle into? That’s a question which relies on statistics.

Another view of the same thing: we’ve made no mention of maximizing entropy (except as an aside in the heat engine section to check our work.) The Second Law said that entropy can’t go down over time, but that doesn’t necessarily mean that it will go up, much less reach its maximum, in any given system of interest. Convergence to maximum entropy enters along with statistical assumptions.

Summary and Conclusion

Let’s recap the major points:

If we have a system which evolves reversibly, and we can identify a “macrostate” which is a function of the “microstate” and evolves deterministically, then the number of microstates at one time compatible with the macrostate at that time cannot decrease (over time.) This is Boltzmann's Second Law of Thermodynamics.

In practice, the third assumption (that the macrostate evolves deterministically) is not difficult to violate by allowing the macrostate to "observe" the microstate, as is done in Maxwell's Demon type problems or, more mundanely, in the series of outcomes of a roulette wheel.
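To make the recap concrete, here is a minimal sketch of the counting argument on a toy system. Everything in it is an assumption chosen for illustration, not something from the derivation above: the eight-element microstate space, the particular permutation used as the reversible dynamics, and the two coarse-grainings `macro_before` and `macro_after` (the macrostate function is allowed to differ between the two time steps). The sketch checks that the macrostate evolves deterministically and that the log microstate count never decreases along the induced macrostate map.

```python
import math
from collections import Counter

STATES = range(8)  # hypothetical toy microstate space


def step(x):
    """Reversible microstate dynamics: a permutation of STATES."""
    return (x + 2) % 8


def macro_before(x):
    """Macrostate at the earlier time: four macrostates of two microstates each."""
    return x // 2


def macro_after(x):
    """Macrostate at the later time: two macrostates of four microstates each."""
    return x // 4


def macro_map(states, dynamics, m0, m1):
    """Return g with m1(dynamics(x)) == g[m0(x)] for every x.

    Returns None if no such g exists, i.e. if the macrostate
    does not evolve deterministically.
    """
    g = {}
    for x in states:
        a, b = m0(x), m1(dynamics(x))
        if g.setdefault(a, b) != b:
            return None
    return g


def entropy(states, macro):
    """Boltzmann entropy (log2 of the microstate count) of each macrostate."""
    counts = Counter(macro(x) for x in states)
    return {m: math.log2(n) for m, n in counts.items()}


def second_law_holds(states, dynamics, m0, m1):
    """Check that entropy does not decrease along the macrostate map."""
    g = macro_map(states, dynamics, m0, m1)
    if g is None:
        raise ValueError("macrostate evolution is not deterministic")
    s0, s1 = entropy(states, m0), entropy(states, m1)
    return all(s1[g[m]] >= s0[m] for m in g)


if __name__ == "__main__":
    print(macro_map(STATES, step, macro_before, macro_after))
    print(second_law_holds(STATES, step, macro_before, macro_after))
```

Swapping in the permutation `lambda x: (x + 1) % 8` makes `macro_map` return `None`: microstates 2 and 3 share an earlier macrostate but land in different later ones, so the determinism assumption fails and the argument no longer applies.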

Whenever the conditions do hold, or can be made to hold, however, this Second Law immediately leads to interesting results like a generalized formulation of heat engines. Perhaps most useful for further applications in probability, it also suggests a measurement method which can be used to determine the number of microstates compatible with each macrostate. In other words, it gives a way of measuring the entropy function of a system (up to a multiplicative constant) despite only having access to macroscopic features like pressure and temperature. Remember this one for some upcoming posts.

The assumptions needed for the measurement method to work are: we need to be able to perform multiple experiments on “the same system”, and we need to be able to couple two subsystems together in such a way that they only interact through their macroscopic variables (e.g. two pistons connected so that the interactions are fully described by the effects of their pressures on one another).

Finally, we made the potentially surprising observation that we did not need to make any statistical assumptions in order to derive the Second Law, or to apply it to heat engines or measurement. Not all stat mech can be derived from the more limited assumptions used here, but surprisingly much can, as the heat engine example shows.

Thank you to Justis Mills for copy editing and feedback.

7 comments

comment by Mateusz Bagiński (mateusz-baginski) · 2024-04-13T15:28:59.698Z · LW(p) · GW(p)

I don't quite get what actions are available in the heat engine example.

Is it just choosing a random bit from H or C (in which case we can't see whether it's 0 or 1) OR a specific bit from W (in which case we know whether it's 0 or 1) and moving it to another pool?

comment by cubefox · 2024-04-13T10:14:03.314Z · LW(p) · GW(p)

One problem with Boltzmann's derivation of the second law of thermodynamics is that it "proves too much". Because an analogous derivation also says that entropy "increases" into the past direction, not just into the future direction. So we should assume that the entropy is at its lowest right now (as you are reading these words), instead of in the beginning. It basically says that the past did look like the future, just mirrored at the present moment, e.g. we grow older both in the past and the future direction. Our memories to the contrary just emerged out of nothing (after we emerged out of a grave), just like we will forget them in the future.

This problem went largely unnoticed for many years (though some famous physicists did notice it, as Barry Loewer, Albert's philosophical partner, points out in an interesting interview with Sean Carroll), until David Albert pointed it out more explicitly some 20 years ago. To "fix" the issue, we have to add, as an ad-hoc assumption, the Past Hypothesis, which simply asserts that the entropy in the beginning of the universe was minimal.

The problem here is that the Past Hypothesis can't be supported by empirical evidence like we would naively expect, as its negation predicts that all our records of the past are misleading. So we have to resort to more abstract arguments in its favor. I haven't seen such an account though. David Albert has a short footnote on how assuming a high entropy past would be "epistemically unstable" (presumably because the entropy being at its lowest "now" is a moving target), but that is far from a precise argument.

Replies from: oskar-mathiasen↑ comment by Oskar Mathiasen (oskar-mathiasen) · 2024-04-13T11:54:04.371Z · LW(p) · GW(p)

The assumptions made here are not time reversible as the macrostate at time t+1 being deterministic given the macrostate at time t, does not imply that the macrostate at time t is deterministic given the macrostate at time t+1.

So in this article the direction of time is given through the asymmetry of the evolution of macrostates.

Replies from: johnswentworth↑ comment by johnswentworth · 2024-04-13T17:27:39.047Z · LW(p) · GW(p)

Yup. Also, I'd add that entropy in this formulation increases exactly when more than one macrostate at time t maps to the same actually-realized macrostate at time t+1, i.e. when the macrostate evolution is not time-reversible.

comment by Steven Byrnes (steve2152) · 2024-04-12T23:50:15.709Z · LW(p) · GW(p)

I always thought of $S = -k_B \sum_i p_i \ln p_i$ as the exact / “real” definition of entropy, and $S = k_B \ln \Omega$ as the specialization of that “exact” formula to the case where each microstate is equally probable (a case which is rarely exactly true but often a good approximation). So I found it a bit funny that you only mention the second formula, not the first. I guess you were keeping it simple? Or do you not share that perspective?

Replies from: johnswentworth↑ comment by johnswentworth · 2024-04-13T00:00:41.137Z · LW(p) · GW(p)

This post was very specifically about a Boltzmann-style approach. I'd also generally consider the Gibbs/Shannon formula to be the "real" definition of entropy, and usually think of Boltzmann as the special case where the microstate distribution is constrained uniform. But a big point of this post was to be like "look, we can get surprisingly a lot (though not all) of thermo/stat mech even without actually bringing in any actual statistics, just restricting ourselves to the Boltzmann notion of entropy".

Replies from: olli-savolainen↑ comment by Olli Savolainen (olli-savolainen) · 2024-04-13T18:28:45.486Z · LW(p) · GW(p)

Of course if one insists on some of the assumptions you did not need, namely doing the standard microcanonical ensemble approach, it trivializes everything and no second law comes out.

In the microcanonical ensemble the system is isolated, meaning its energy is fixed. Microstates are partitioned into macrostates by their energy (a stronger version of your assumption of macro being a function of micro), so they don't switch into a different macrostate. If you take them to be energy eigenstates, the microstates don't evolve either.

I don't endorse the idea of a macrostate secretly being in a certain microstate. They are different things, preparing a microstate takes a lot more effort.