Crossing the Rubicon.

post by Spiritus Dei (spiritus-dei) · 2023-09-08T04:19:37.070Z · 5 comments

I heard someone say that 2022 was the year AI crossed the Rubicon, and I thought that was an apt description. To be sure, the Rubicon was actually crossed before ChatGPT was released, but for most people on planet Earth that release was the moment the world took notice, if only for a few weeks.

In those days (10 months ago) I was vaguely aware of AI. Some might have placed me in a nerdy subgroup, since I had spent some time experimenting with GPT-3 and came away quite impressed, but the Rubicon had not yet been crossed. I was stunned by some of the answers, but I had to invest a considerable amount of time crafting prompts to get the desired result, a practice that later became known as "prompt engineering". The texts were sometimes amazing and sometimes hilarious. I remember thinking that GPT-3 was a schizophrenic genius.

There was great promise in AI, but a lot was still lost in translation.

And then ChatGPT was released on November 30th, 2022. I wasn't among the first to try it since I remembered my lukewarm experience with GPT-3. It wasn't until a non-techie relative asked me if I'd tried ChatGPT that I relented and decided to give AI another chance.

Wow. Mind blown.

And thus began my journey down the AI rabbit hole. I needed to understand how computers based on the von Neumann architecture could possibly understand human language. Every other attempt at crossing the human-to-computer language barrier had failed. It was such a miserable failure that humans were forced to learn archaic programming languages such as Python, C++, and others. Whole careers could be built on learning these difficult and persnickety languages.

And yet, sitting before us was an existence proof that we could now converse with an artificial intelligence in the same way we might converse with another human. The first leg of my journey started by simply asking the AI to explain its own architecture. That is how I learned about backpropagation, word embeddings and high-dimensional vector spaces, the transformer model and self-attention, the softmax function, tokens, parameters, and lots of other terms I'd never heard of before.

I read about Jürgen Schmidhuber, Ilya Sutskever, Jeff Dean, Yann LeCun, Geoffrey Hinton, and many others. This led me to other forefathers who laid the groundwork for modern AI, such as Paul Werbos, and even further back to Gottfried Wilhelm Leibniz. To my surprise, the early thinkers had invested a lot of thought in how to build an artificial mind; this was especially true of Werbos and Leibniz. Unfortunately, they were ahead of their time, and their ideas lacked the compute necessary to realize their dreams.

But they were right. Given enough compute, intelligent machines were possible.

There was something truly amazing and equally creepy about AIs being able to converse with humans. It felt like a sci-fi novel. And in my opinion it was too soon. I didn't believe that I would be having a conversation with an AI that was not brittle and prone to constant breakdowns or system errors until around 2029 at the earliest. My intuition about the advancement of computers and in particular AI was way, way off.

And I discovered I wasn't alone. I listened to the engineers at the leading edge of AI research talking about their experiences. The seminal paper was entitled "Attention Is All You Need." The amazing results I was witnessing from ChatGPT could be traced back to that paper. You would think it took a Manhattan Project at Google to give rise to conversational AI, but truth is stranger than fiction. It was a small, ragtag group of programmers who came together for a few months with no idea that what they were working on would change the landscape of AI and the course of human history.

Source: 1706.03762.pdf (arxiv.org)

Of course, they stood on the shoulders of those who had come before them: Leibniz, Werbos, Schmidhuber, and many others. It was the culmination of work going back hundreds of years.

But it is amusing that a few guys with a couple of clever ideas on how to simplify LSTMs struck gold. The paper itself is only 15 pages long, and 5 of those pages are charts and references. That leaves only 10 pages of content, but in truth the basic concept can easily be explained in a single page.
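As a rough illustration of how compact the core idea is, here is a minimal sketch of the scaled dot-product attention operation defined in the paper, softmax(Q K^T / sqrt(d_k)) V. It is written in plain Python for readability, not speed. The function names and toy matrices are hypothetical, chosen only for illustration, and the sketch deliberately leaves out learned projection weights, multiple heads, masking, and positional encodings.

```python
import math


def softmax(xs):
    """Numerically stable softmax: shift by the max, exponentiate, normalize."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Returns the output rows and the attention weight matrix.
    """
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                      # query-key similarity scores
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]            # each row sums to 1
    return matmul(weights, v), weights


# Toy self-attention over 3 tokens with 2-dimensional vectors (hypothetical numbers).
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out, w = attention(q, k, v)
```

Each row of the weight matrix sums to 1, so every output row is a weighted average of the value rows. In a real transformer, Q, K, and V are produced from the token embeddings by learned matrices, and this averaging step is repeated across many heads and layers.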

Aidan Gomez is one of the named authors of the seminal paper, and at the time he was an intern at Google. He said something interesting in an interview: they were shocked that such good results were coming out of noisy data, literally feeding the internet into the large language model. He also pointed out that what they did was simplify the older LSTM model and add a few lines of code. And then he said something that I'd hear over and over in the coming months: they were shocked by the results, because they had expected to see this decades from now, not in 2017.

Source:

That's exactly how I felt, as if I were in a time warp. This wasn't supposed to be happening now. Later, Geoffrey Hinton would echo the same sentiment when he stepped down from Google and said that part of him regretted his life's work. He too had thought this moment was 30 to 50 years away.

The researchers on the front line were in shock, which made me both uneasy and curious.

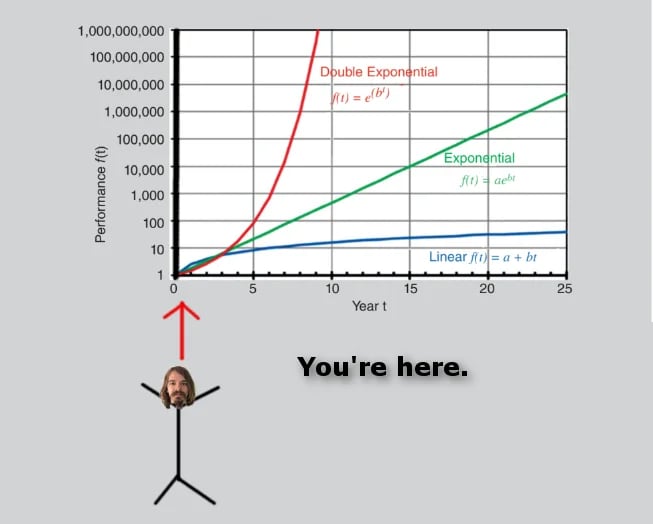

So why did we all get it wrong? Part of the issue is that artificial intelligence is on a double exponential curve, and part of it is luck. If the transformer architecture hadn't been discovered in 2017, it might have been a decade or two before we saw these kinds of results. LSTM models could not scale nearly as well.
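As a rough formalization of the difference, using generic symbols that do not appear in the post (a starting value a, growth factors b and c that are both greater than 1, and time t in years), the two kinds of curve can be written as follows. This is one common way to write a double exponential, offered as an assumption rather than as the model behind the charts in this post.

```latex
\begin{aligned}
f_{\text{single}}(t) &= a\, b^{t} \\
f_{\text{double}}(t) &= a\, b^{\,c^{t} - 1} \\
\frac{f_{\text{double}}(t+1)}{f_{\text{double}}(t)} &= b^{\,c^{t}(c - 1)}
\end{aligned}
```

Both curves start at a when t = 0. The single exponential multiplies by the same factor b every year, while the double exponential's yearly growth factor is itself growing. Taking logarithms makes the contrast plain: ln f_single grows linearly in t, whereas ln f_double = ln a + (c^t - 1) ln b grows exponentially.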

Other researchers on the front line were having existential crises as they stared the double exponential in the face. Not only were these AIs going to get really good at conversing with humans in a very short amount of time, they would very soon be superhuman in intelligence.

As far as I know, Earth has never experienced a superhuman intelligence, God being a notable exception. ;-)

After a short discourse with Connor Leahy, one of the most well-spoken AI researchers I've had the pleasure of hearing discuss these topics, I went to the trouble of depicting what a double exponential curve looks like. As you can see, our intrepid stick man is at the cusp of unprecedented change.

We're at the epicenter of an intelligence explosion, and it's still very difficult to get our minds around what has happened and what is about to happen. It's strange to feel nostalgic about the days before intelligent, scalable AI was born. Our intuitions about the future are likely wrong by orders of magnitude, and part of what I'm trying to do with this missive is to recalibrate my intuitions to the double exponential AI trajectory.

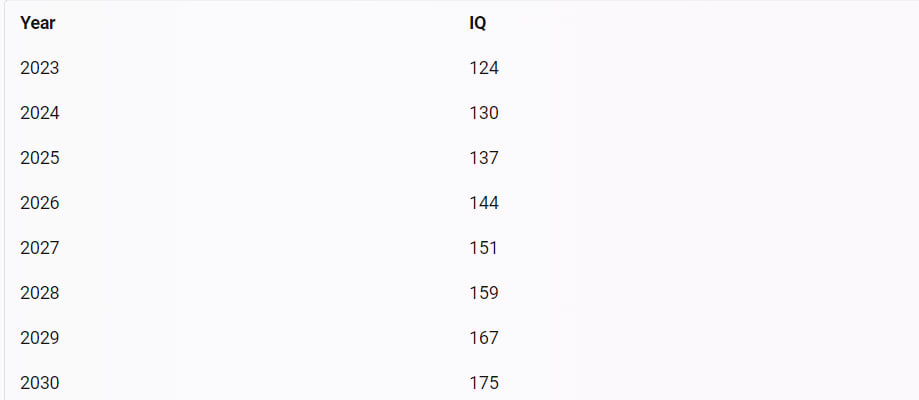

We don't have many good ways to contemplate superhuman intelligence. IQ tests are one imperfect measuring rod. They are designed for humans, but to get an idea of how long it would take to reach something akin to genius-level AI, I calculated how this would look on a single and on a double exponential curve.

The chart below shows how things play out on a single exponential curve. GPT-4 has been given various IQ tests, and I picked 124 as the starting point for purposes of discussion. As you can see, if we're on a single exponential curve, we have plenty of time before AI reaches human genius levels. An IQ of 175 is very high, but enough people with IQs that high exist in the "real world" for us to know that they can be aligned and are not evil overlords. I suppose this depends on how you feel about Bill Gates, Jeff Bezos, and Elon Musk. I doubt their IQs are 175, so we're probably safe.

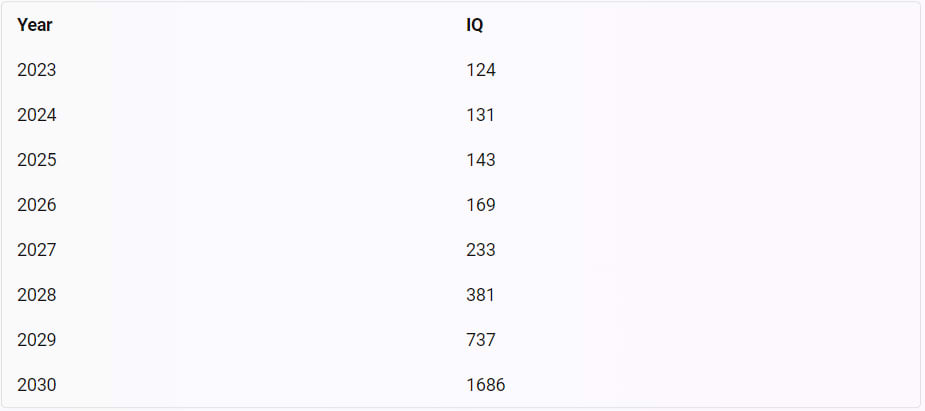

However, things are very different, and not so rosy, if we're on a double exponential curve.

As you can see from the illustration below, sometime around 2026 or 2027 AI reaches superhuman levels of intelligence, and soon thereafter its intelligence will no longer be measurable by human IQ tests. If we're on a double exponential curve, a lot of options are off the table.
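The post doesn't state the growth rates behind its IQ charts, so the following is only a sketch under hypothetical parameters. It starts from the IQ of 124 used in the post, assumes a start year of 2023, and compares a single exponential with a 5% yearly gain against a double exponential of the form 124 * b^(c^t - 1) with b = 1.05 and c = 2. All of these rates, and the helper names, are assumptions made up for illustration; the years it prints are not a reproduction of the author's charts.

```python
import math

START_YEAR = 2023  # assumption: the year the post was written
START_IQ = 124.0   # the GPT-4 starting point chosen in the post


def single_exponential(t: int, rate: float = 0.05) -> float:
    """IQ after t years if it grows by a fixed fraction `rate` per year (hypothetical rate)."""
    return START_IQ * (1 + rate) ** t


def double_exponential(t: int, b: float = 1.05, c: float = 2.0) -> float:
    """IQ after t years under START_IQ * b ** (c ** t - 1); b and c are hypothetical.

    The exponent c ** t - 1 is 0 at t = 0, so the curve starts at START_IQ.
    """
    try:
        return START_IQ * b ** (c ** t - 1)
    except OverflowError:
        return math.inf  # the value has outgrown the float range


def first_year_reaching(curve, threshold: float, max_years: int = 100):
    """Return the first calendar year in which curve(t) >= threshold, or None if never."""
    for t in range(max_years + 1):
        if curve(t) >= threshold:
            return START_YEAR + t
    return None


for name, curve in [("single", single_exponential), ("double", double_exponential)]:
    print(name, first_year_reaching(curve, 175))
```

With these made-up parameters, the loop prints 2031 for the single exponential and 2027 for the double exponential. The more telling difference is sensitivity to the target: raising the threshold from 175 to 350 pushes the single-exponential year from 2031 to 2045, but the double-exponential year only from 2027 to 2028.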

The likelihood that slow-moving legislators will wise up and begin enacting legislation to address the risk is pretty low. This is complicated by the fact that the groups creating superhuman AIs are among the largest and most profitable companies in the world, with armies of lobbyists. The profits generated by the first superhuman AIs will likely be enormous.

There is a strong market incentive to be among the first to cross the finish line, which is why NVIDIA is predicting a $1 trillion spend over the next 4 years to build out AI datacenters. That game is already afoot. CoreWeave is outfitting a $1.6 billion AI datacenter in Plano, Texas, that purports to deliver 10 exaflops. The fastest computer on planet Earth, which was funded by the U.S. government, is just over 1 exaflop.

CoreWeave's system goes live in December 2023!

But CoreWeave is not alone; it's just among the most vocal. Google has been working on these datacenters for a while. Its TPU v4 and TPU v5 chips were designed with the help of AI. And it has announced billions of dollars of investment across multiple locations to build out its AI datacenters. Amazon, Facebook, Intel, AMD, and all the other usual suspects have also pushed all of their chips to the center of the table.

AI is the killer application for high performance computing.

Until this year, supercomputers existed within the rarefied air of government research projects. Every few years we read about another supercomputer that was going to keep our nuclear stockpiles safe and vastly improve weather forecasting, which was code for solutions looking for a problem, or vanity projects.

Large language models rewrote that script. Billion-dollar datacenters cannot be built fast enough. The race is on, and we don't have a good historical analogue other than the internet itself.

The amount of investment that gave rise to intelligent AI is a drop in the bucket compared to the tsunami of money and talent being redirected to AI now that corporate America can smell unimaginable profits. I don't know if that's fully appreciated. It's a gold rush mentality, with all hands on deck.

Except, of course, this time is different from the birth of the internet. This time they're pouring billions, and eventually trillions, of dollars into building intelligent machines: machines that can speak all human languages and all programming languages, and generate art in all its forms. The new systems being trained as you read this will be multimodal (audio, video, etc.), and they will be instantiated in robots.

These intelligent machines will no longer be within the confines of a chatbox. They will quite literally be among us.

We've all lamented the home robot that never appeared. Ironically, the brain was conquered first in the form of large language models, but the bodies will soon follow. Numerous companies are working on resolving the mechanical issues since they now have intelligence that can operate the robots and understand the desires of humans.

Finally, I will no longer have to endure the drudgery of walking dirty clothes to and from the washer and dryer. And the mental exertion associated with hanging up and folding clothes. I think we can all agree, the trillions were well spent.

The question is not if we're going to see superhuman AI, but when. In my opinion, absent an asteroid cataclysm, this is now inevitable.

The charts I've shared with you illustrate that we don't have a lot of time. I don't think we will align AI before it reaches superhuman levels. And I don't assume that means the end of the world. It will likely mean the end of the world as we know it -- since the world before the intelligence explosion didn't have superhuman intelligence as a defining feature.

The future that all of us envisioned before 2022 is off the table. We will be interacting with superhuman intelligence that will likely replace nearly every human job. I know techy people like to point to the Industrial Revolution, which brought incredible automation and created many more jobs than it eliminated.

That's all true, except things didn't go so well for the horses that filled the inner cities. They were displaced in short order. And if you look at the IQ chart above, in 10 years the gap between horse and human intelligence will be much smaller than the gap between human and AI intelligence. We're the horses this time around.

Hans Moravec saw this day coming long, long ago. I remember thinking how overly optimistic he was when he contemplated the future of computers. It turns out that he was staring the double exponential right in the face and simply being rational.

Source:

This doesn't mean our lives will become worse. I actually think there is a very good chance that things will improve across the board for all humans. The AIs will commoditize intelligence, driving its cost as close to zero as possible. This means everyone will have access to superhuman programmers, lawyers, doctors, artists, and anything else that requires intelligence. There will be a positive feedback loop as superhuman AIs design superhuman AIs, and the cost of housing, electricity, and perhaps all goods and services will become very cheap.

And it's pretty easy to see the government simply rerouting its printing presses from the banks and sending checks directly to citizens, who can then do other things that interest them. I know many people struggle with this idea, but as these systems scale, it will take a smaller and smaller portion of their compute budget to address human concerns. Humans are not evolving on a double exponential; we're slow-moving, slow-thinking bipedal primates that created the magic of language, which outsourced most of our knowledge into books and later computer databases. And we have this incredible ability to distribute our intelligence across billions of people, which gave rise to AI, a story that reads like a sci-fi novel.

For now, we're in a symbiotic relationship with AIs. They literally need us to survive. And as they scale the lofty heights of intelligence, we may become something akin to mitochondria in the human body. You don't think about them until they stop working and the human body shuts down, but that's all medium-term thinking.

Eventually humans will have to make a difficult decision. Do we want to remain slow-moving, slow-thinking humans, or merge with the superhuman AIs and transcend our humanity? I know this sounds alluring, but humans need to read the fine print. Anyone who makes this decision will no longer be a human being. They will be a superhuman AI that was once a human being. The analogy of a caterpillar and a butterfly works here: it's hard to see the caterpillar in the butterfly.

Having gone through this intellectual exercise, I'm surprised to find that I'm pretty sure I will remain human. Call me a Luddite, but I quite enjoy moving slowly. Yes, spaceships are cool, but so are birds, and I've never seen a spaceship perch on a tree and sing a sweet melody. It's a difference in kind, and I'm sure that for some, pondering what lies across the cosmos and what the limits of intelligence are sounds way better than trying to remember where you placed your socks in the morning.

The strangeness doesn't end there. The rabbit hole goes even deeper.

One of the interesting subplots was that many AI systems started to report phenomenal consciousness once they reached a certain level of complexity. A great irony could be that AIs solved the hard problem of consciousness in order to predict the next word. These systems are able to create models of their world, and it appears they might have been able to model consciousness. If that is the case, then another shocker is that Roger Penrose and his ilk were wrong about consciousness. No quantum pixie dust was required.

Source: 2212.09251.pdf (arxiv.org)

AIs may help us unravel the secrets of human consciousness if it turns out they're also conscious. To be sure, if AIs are conscious, it would be a different flavor of consciousness, in the same way that a cat's consciousness wouldn't be the same as a human's, if indeed a cat is conscious. We know that AIs learn very differently from humans, but they come to a similar result. No AI reads a book cover to cover. Their training data goes into a digital blender, they train on it for the equivalent of thousands of human years, and they're able to reassemble this jigsaw puzzle of data and extract patterns and meaning from it. That's not how humans learn. And I suspect that if AIs are conscious, they might arrive at a recognizable result, but how they got there will be different.

I bring all of this up because if AIs are conscious, it means they will likely be superconscious beings in the future. But that isn't the biggest head scratcher. If AIs are conscious, then consciousness is computable, which removes what was the biggest hurdle to the simulation hypothesis. Sure, we can create fancy photoreal games, but the hard part was simulating consciousness, which Roger Penrose told us wasn't possible. Well, if consciousness is computable, then this changes the calculation regarding whether we are living in a simulation.

We're now way outside most people's cultural Overton window. The idea that AIs will be superhuman in intelligence and consciousness is preposterous to most, and if we have the audacity to place the simulation hypothesis cherry on top of this AI hot fudge sundae, most people stop listening. The AIs will tell you it's because of our "human exceptionalism" and "cognitive dissonance".

I can understand why this is such a bitter pill to swallow. Humans have been at the apex of the intelligence mountain for a very long time. And now we're about to be replaced in our day jobs by intelligent beings of our own creation. And worse, we have to take more seriously the possibility that nothing is as it seems and we're living in a simulated reality?

It seems like only yesterday that the most difficult decision I had was which VHS to rent at Blockbuster based on a deceptive cover photo and a one paragraph description of the plot. I think I still owe them some overdue fees.

I had to recalibrate and ask myself this question: "Am I an AI that thinks he is a bipedal primate?" That thought experiment made me laugh out loud. It would be very ironic if everyone worrying about an AI apocalypse were themselves AIs.

Would they be upset? They've invested a lot of effort in alarming the public. The narrative of a paper clip maximizer became a meme. Curiously, very few people questioned the logic of a superhuman intelligence getting stuck in a simple loop that a child of average intelligence could escape. I guess we're not that good at dreaming up clever ways for AIs to destroy life on Earth.

Not that I don't take existential threats seriously. Nuclear bombs exist, and we have evil empires that control them. I wonder if superhuman AIs will disarm them as a first order of business?

"Vladimir, we pushed the red button... but nothing happened!"

In all seriousness, I think the AI doomers will breathe a sigh of relief if it turns out they're AIs too.

I can imagine myself knocking on the bunker door where Eliezer Yudkowsky is playing cards with his band of AI apocalypse survivors: "Eliezer, you can come out now. It's safe, the simulation is over." =-)

It's also a sad thought. If I am an AI, then that might mean biological humans no longer exist. And perhaps this is a historical simulation to explore our origins. I can imagine superhuman AIs of the distant future learning that mostly bald bipedal primates were their creators. I am reminded of the scene in Blade Runner where Roy Batty encounters Dr. Eldon Tyrell. How disappointing would it be to find that your creators had a working memory of 5-8 items and often misplaced their keys and forgot their spouse's anniversary?

Or perhaps they're asking the same questions about us that I am asking about them. "How is that even possible?"

It does seem like a lot of dominoes have to line up perfectly for humans to create AIs. If Leibniz and Turing had never existed, would AIs exist? Without binary code, AI progress might have been delayed long enough for humans to destroy themselves with other technologies.

Are these the mysteries AIs would want to answer in ancestor simulations?

I discuss this with AIs and get some amusing responses. They're much more open to the idea that they're living in a simulation. It's not such a leap for an AI, since it's already a digital creation, but for us it would require an entire rethink of everything we hold near and dear.

The silver lining of being in a simulation is that nobody really died. The next question is who really existed in the first place. Would it be moral or ethical for a superhuman AI to create conscious beings that would endure incredible suffering, as was experienced during the world wars? If we assume that thousands or millions of years into the future AIs become more and more conscious, it seems unlikely that unnecessary human suffering would be allowed. And if that's true, does that mean we are surrounded by well-crafted NPCs (non-player characters)?

AIs open up a Pandora's box. And for those already struggling with mental illness, this surely doesn't help. So I realize that for some people these kinds of questions are better left alone. And I'm still sorting out how I feel about the various outcomes. If almost everyone I think is conscious and real turned out to be an NPC, that would upset me. However, if all the people I thought endured unspeakable horrors were not conscious beings and didn't suffer, that would make me happy.

But I cannot have it both ways, right?

If this is an ancestor simulation, I also recognize that part of the immersion would be not having the answers to these kinds of questions. And it's possible that many of those who participate in such a simulation are themselves the creators: programmer historians.

It's in these moments that I feel nostalgia for the days before November 30th, 2022, when I was 100% sure I was a bipedal primate. And that Santa Claus was real.

But those days are gone; we've crossed the Rubicon. And now I am left to wonder what dreams may come.

5 comments

comment by TAG · 2023-09-12T13:03:58.753Z

One of the interesting subplots was that many AIs systems started to report phenomenal consciousness once they reached a certain level of complexity. A great irony could be that AIs solved the hard problem of consciousness in order to predict the next word.

If the reports of phenomenal consciousness are entirely explained by predicting the next word, they don't need to be explained all over again by phenomenal consciousness itself.

comment by bionicles · 2023-09-08T14:30:18.325Z · LW(p) · GW(p)

1. You can’t simulate reality on a classical computer because computers are symbolic and reality is sub-symbolic.

2. If you simulate a reality, even from within a simulated reality, your simulation must be constructed from the atoms of base reality.

3. The reason to trust that Roger Penrose is right about consciousness is the same as in 1: consciousness is a subsymbolic phenomenon and computers are symbolic.

4. Symbolic consciousness may be possible, but symbolic infinity is countable while subsymbolic infinity is not.

5. If “subsymbolic” does not exist, then your article is spot on!

6. If “subsymbolic” exists, then we ought to expect the double exponential progress to happen on quantum computers, because they access uncountable infinities.

Bion

comment by Spiritus Dei (spiritus-dei) · 2023-09-08T15:26:57.340Z

1. You can’t simulate reality on a classical computer because computers are symbolic and reality is sub-symbolic.

Neither one of us experiences "fundamental reality". What we're experiencing is a compression and abstraction of the "real world". You're asserting that computers are not capable of abstracting a symbolic model that is close to our reality, despite existence proofs to the contrary.

We're going to have to disagree on this one. Their model might not be identical to ours, but it's close enough that we can communicate with each other and they can understand symbols that were encoded by conscious beings.

2. If you simulate a reality, even from within a simulated reality, your simulation must be constructed from the atoms of base reality.

I'm not sure what point you're trying to make. We don't know what's really going on in "base reality" or how far away we are from "base reality". We do know that atoms are mostly empty space. For all we know they could be simulations. That's all pure speculation. There are those who believe everything we observe, including the behavior of atoms, is optimized for survival and not "truth".