Somewhat against "just update all the way"

post by tailcalled · 2023-02-19T10:49:20.604Z · LW · GW · 10 comments

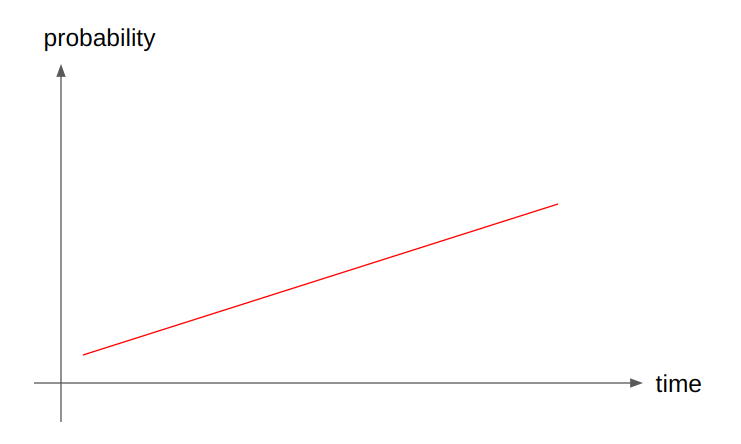

Sometimes, a person's probability in a proposition over a long timespan follows a trajectory like this:

I.e. the person gradually finds the proposition more plausible over time, never really finding evidence against it, but at the same time they update very gradually, rather than having big jumps. For instance, someone might gradually be increasing their credence in AI risk being serious.

In such cases, I have sometimes seen rationalists complain that the updates are happening too slowly, and claim that they should notice the trend in updates, and "just update all the way".

I suspect this sentiment is inspired by the principle of Conservation of expected evidence, which states that your current belief should equal the expectation of your future beliefs. And it's an understandable mistake to make, because this principle surely sounds like you should just extrapolate a trend in your beliefs and update to match its endpoint.
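For reference, the principle can be written as a formula. The notation is introduced here and is not from the post: H stands for the proposition, and e ranges over the possible pieces of evidence you might observe next.

```latex
P(H) \;=\; \mathbb{E}_{e}\bigl[P(H \mid e)\bigr] \;=\; \sum_{e} P(e)\, P(H \mid e)
```

The equality only constrains the probability-weighted average of your future beliefs. It says nothing about how that average is split between likely small moves and unlikely large ones.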

Reasons to not update all the way

Suppose you start with a belief that either an AI apocalypse will happen, or someone at some random point in time will figure out a solution to alignment.

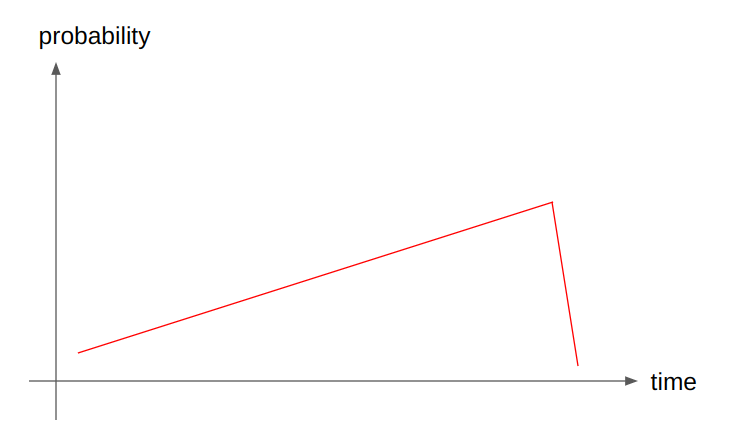

In that case, for each time interval that passes without a solution to alignment, you have some slight evidence against the possibility that a solution will be found (because the time span it can be solved in has narrowed), and some slight evidence in favor of an AI apocalypse. This makes your follow a pattern somewhat like the previous graph.

However, if someone comes up with a credible and well-proven solution to AI alignment, then that would (under your model) disprove the apocalypse, and your would go rapidly down:

So the continuous upwards trajectory in probability satisfies the conservation of expected evidence because the probable slight upwards movement is counterbalanced by an improbable strong downwards movement.
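The balance described above can be checked numerically. The following is a minimal sketch under assumptions that are not in the post: time is split into `HORIZON` discrete steps, a solution (if one is ever found) is equally likely to arrive in any step, and the prior probability of doom is `PRIOR`. All function and variable names are hypothetical.

```python
from fractions import Fraction


def survival(prior_doom, horizon, t):
    """P(no solution has been found during the first t steps)."""
    # Either doom is true (no solution ever), or the solution lands in a later step.
    return prior_doom + (1 - prior_doom) * Fraction(horizon - t, horizon)


def credence_doom(prior_doom, horizon, t):
    """P(doom | no solution found during the first t steps), by Bayes' rule."""
    return prior_doom / survival(prior_doom, horizon, t)


def expected_next_credence(prior_doom, horizon, t):
    """Expected credence after one more step, given no solution so far."""
    p_quiet = survival(prior_doom, horizon, t + 1) / survival(prior_doom, horizon, t)
    # Quiet step: credence creeps up. Solution found: credence drops to 0.
    return p_quiet * credence_doom(prior_doom, horizon, t + 1) + (1 - p_quiet) * 0


PRIOR = Fraction(1, 5)   # assumed prior probability of doom
HORIZON = 10             # assumed number of time steps

trajectory = [credence_doom(PRIOR, HORIZON, t) for t in range(HORIZON + 1)]

for t, credence in enumerate(trajectory):
    print(f"step {t:2d}: P(doom | no solution yet) = {float(credence):.3f}")

for t in range(HORIZON):
    # Conservation of expected evidence: today's credence equals tomorrow's expectation.
    assert expected_next_credence(PRIOR, HORIZON, t) == credence_doom(PRIOR, HORIZON, t)
```

Exact fractions are used so that the conservation check is an equality rather than a floating-point approximation. In this toy model the credence rises at every quiet step, yet at no point would jumping ahead to the endpoint be justified.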

Reasons to update all the way

There may be a lot of other models for your beliefs, and some of those other models give reasons to update all the way. For instance, in the case of AI doom, you might have something specific that you believe is a blocker for dangerous AIs, and if that specific belief gets disproven, you ought to update all the way. There are good reasons that the Sequences warn against "Fighting a rearguard action against the truth".

I just want to warn people not to force this perspective in cases where it doesn't belong. I think it can be hard to tell from the outside whether others ought to update all the way, because it is rare for people to share their full models and derivations, and when they do share the models and derivations, it is rare for others to read them in full.

Thanks to Justis Mills for providing proofreading and feedback.

10 comments

comment by gjm · 2023-02-19T12:35:43.765Z · LW(p) · GW(p)

A different sort of reason for not updating all the way:

When there isn't a time-dependence of the sort exhibited by the OP's example, or a steady flow of genuine new information that just happens all to be pointing the same way, a steady trend probably is an indication that you're deviating from perfect rationality. But the direction you're deviating in could be "repeatedly updating on actually-not-independent information" rather than "failing to notice a trend", and in that case the correct thing to do is to roll back some of your updating rather than guessing where it's leading to and jumping there.

For instance, suppose there's some amount of evidence for X, and different people discover it at different times, and you keep hearing people one after another announce that they've started believing X. You don't hear what the original evidence is, and maybe it's in some highly technical field you aren't expert in so you couldn't evaluate it anyway; all you know is that a bunch of people are starting to believe it. In this scenario, it's correct for each new conversion to push you a little way in the direction towards believing X yourself, but the chances are that your updates will not be perfectly calibrated to converge on what your Pr(X) would be if you understood the actual structure of what's going on. They might well overshoot, and in that case extrapolating your belief-trajectory and jumping to the apparent endpoint would move you the wrong way.
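A toy calculation illustrates the overshoot. It is a sketch under a deliberately extreme assumption that goes beyond the scenario above: every announcement traces back to the same single piece of evidence, so after the first one the later announcements carry no further information. The prior, the likelihood ratio, and all names are made up for illustration.

```python
from fractions import Fraction


def update(prior, likelihood_ratio, times=1):
    """Bayes' rule in odds form, applied `times` times with the same ratio."""
    odds = prior / (1 - prior) * likelihood_ratio ** times
    return odds / (1 + odds)


PRIOR = Fraction(1, 5)     # assumed prior Pr(X)
LIKELIHOOD_RATIO = 3       # assumed strength of the one shared piece of evidence
ANNOUNCEMENTS = 5          # people who announce that they now believe X

# Correct handling under the assumption: the shared evidence counts once.
calibrated = update(PRIOR, LIKELIHOOD_RATIO, times=1)

# Naive handling: every announcement is treated as fresh, independent evidence.
naive_trajectory = [
    update(PRIOR, LIKELIHOOD_RATIO, times=k) for k in range(ANNOUNCEMENTS + 1)
]

print(f"calibrated Pr(X): {float(calibrated):.3f}")
for k, credence in enumerate(naive_trajectory):
    print(f"after {k} announcements, naive Pr(X) = {float(credence):.3f}")
```

The naive trajectory rises steadily and ends well above the calibrated value, so extrapolating it further would move away from the right answer; rolling back to a single update would move toward it.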

↑ comment by tailcalled · 2023-02-19T13:10:25.923Z · LW(p) · GW(p)

Yep, that's another excellent thing to beware of.

comment by Pablo (Pablo_Stafforini) · 2023-02-20T15:17:08.364Z · LW(p) · GW(p)

comment by Dagon · 2023-02-19T21:32:25.589Z · LW(p) · GW(p)

I would like to explore some examples outside of random chatter about p(doom) here. Mostly, I think there's no way to know what "all the way" even means until the updates have stopped. I also suspect you're using "update" in a non-rigorous way, including human-level sentiment and changes in prediction based on modeling and re-weighting existing evidence, rather than the more numeric Bayesian use of "update" on new evidence. It's unclear what "conservation of expected evidence" means when "evidence" isn't well-defined.

I don't think (though it would only take a few rigorous examples to convince me) that we're going to find a generality that applies to how humans change their social opinions over time, with and without points of reinforcement among their contacts and reading.

↑ comment by tailcalled · 2023-02-19T21:43:21.884Z · LW(p) · GW(p)

> I would like to explore some examples outside of random chatter about p(doom) here.

I think I've seen discussion about it in the case of prediction markets on whether figures like Vladimir Putin would be assassinated by a given date. In the case of that market, there was a constant downwards trend.

> I also suspect you're using "update" in a non-rigorous way, including human-level sentiment and changes in prediction based on modeling and re-weighting existing evidence, rather than the more numeric Bayesian use of "update" on new evidence. It's unclear what "conservation of expected evidence" means when "evidence" isn't well-defined.

I don't agree with this. E.g. radical probabilism does away with Bayesian updates, but it still has conservation of expected evidence.

comment by Garrett Baker (D0TheMath) · 2023-02-19T19:11:22.193Z · LW(p) · GW(p)

I don’t think many with monotonically increasing doom pay attention to current or any alignment research when they make their updates. I don’t think they’re using any model to base their estimates on. When you go and survey people, and pose the same timelines question phrased in two different ways, they give radically different answers[1].

I also think people usually feel the surprise at the fact we can get machines to do what they do at all at this stage in AI research, and not at the minorly unexpected deficiencies of alignment work.

[1] Tangentially, it is also useful to remember this fact when trying to defer to experts. They gain no special powers in future prediction by virtue of their subject expertise, as has always been the case (“Progress is driven by peak knowledge, not average knowledge.”).

↑ comment by tailcalled · 2023-02-19T19:22:52.829Z · LW(p) · GW(p)

> I don’t think many with monotonically increasing doom pay attention to current or any alignment research when they make their updates. I don’t think they’re using any model to base their estimates on. When you go and survey people, and pose the same timelines question phrased in two different ways, they give radically different answers.

I don't think this survey is a good indicator, since it is very narrow in the questions.

If I were designing a survey to understand people's predictions on AI progress, I would first ask a bunch of qualitative questions about things like what models they are using, what they are paying attention to, what they expect to happen with AI, etc. (And probably also other questions, such as whose analysis they know of and respect.) This would help give a comprehensive list of perspectives that may inform people's opinions.

Then I would take the qualitative perspectives and turn them into questions that more properly assess people's perspectives. Only then can one really see whether the inconsistent answers people give really are so implausible, or if one is missing the bigger picture.

↑ comment by Garrett Baker (D0TheMath) · 2023-02-19T19:52:45.853Z · LW(p) · GW(p)

Ok, I went and looked at the survey (instead of just going based on memories of an 80k podcast that Ajeya was on, where she stressed the existence of framing effects), and it is indeed very narrow. Having looked at the size of the framing effects, I'm now less confident (or not confident at all) that this would convince anyone who needs to be convinced that experts aren't really using a model. I still think that most of them likely don't have a model, though, because people in general don't usually have one.

↑ comment by Morpheus · 2023-02-20T10:36:19.114Z · LW(p) · GW(p)

> I don’t think many with monotonically increasing doom pay attention to current or any alignment research when they make their updates

Maybe I am just one of the “not many”. But I think this depends on how closely you track your timelines. Personally, my timelines are uncertain enough that most of my substantial updates have been in the earlier direction (like from a median of ~2050 to a median of 2030-2035). This probably happens to a lot of people who newly enter the field, because they naturally first put more emphasis on surveys like the one you mentioned. I think my biggest updates were:

- going from "taking the takes from capabilities researchers at face value, not having my own model and going with Metaculus" to "having my own views".

- GPT-2 (…and the log loss still goes down) and then the same with GPT-3. In the beginning, I still had substantial probability mass (30%) on this trend just not continuing.

- Minerva (apparently getting language models to do math is not that hard (which was basically my last “trip wire” going off)).

I do think my P(doom) has slightly decreased from seeing everyone else finally freaking out.

↑ comment by Morpheus · 2023-02-24T11:58:01.251Z · LW(p) · GW(p)

Past me is trying to give himself too much credit here. Most of it was epistemic luck/high curiosity that led him to join Søren Elverlin's reading group in 2019, and then I just got exposed to the takes from the community.