A summary of every "Highlights from the Sequences" post

post by Orpheus16 (akash-wasil) · 2022-07-15T23:01:04.392Z · LW · GW · 7 comments

1

I recently finished reading Highlights from the Sequences [? · GW], 49 essays from The Sequences [? · GW] that were compiled by the LessWrong team.

Since moving to Berkeley several months ago, I’ve heard many people talking about posts from The Sequences. A lot of my friends and colleagues commonly reference biases, have a respect for Bayes Rule, and say things like “absence of evidence is evidence of absence!”

So, I was impressed that the Highlights were not merely a refresher of things I had already absorbed through the social waters. There was plenty of new material, and there were also plenty of moments when a concept became much crisper in my head. It’s one thing to know that dissent is hard, and it’s another thing to internalize that lonely dissent doesn’t feel like going to school dressed in black— it feels like going to school wearing a clown suit [? · GW].

2

As I read, I wrote a few sentences summarizing each post. I mostly did this to improve my own comprehension/memory.

You should treat the summaries as "here's what Akash took away from this post" as opposed to "here's an actual summary of what Eliezer said."

Note that the summaries are not meant to replace the posts. Read them here [? · GW].

3

Here are my notes on each post, in order. I also plan to post a reflection on some of my favorite posts.

Thinking Better on Purpose

The lens that sees its flaws [? · GW]

- One difference between humans and mice is that humans are able to think about thinking. Mice brains and human brains both have flaws. But a human brain is a lens that can understand its own flaws. This is powerful and this is extremely rare in the animal kingdom.

What do we mean by “Rationality”? [? · GW]

- Epistemic rationality is about building beliefs that correspond to reality (accuracy). Instrumental rationality is about steering the future toward outcomes we desire (winning). Sometimes, people have debates about whether things are “rational.” These often seem like debates over the definitions of words. We should not debate over whether something is “rational”— we should debate over whether something leads us to more accurate beliefs or leads us to steer the world more successfully. If it helps, every time Eliezer says “rationality”, you should replace it with “foozal” and then strive for what is foozal.

Humans are not automatically strategic [? · GW]

- An 8-year-old will fail a calculus test because there are a lot of ways to get the wrong answer and very few ways to get the right answer. When humans pursue goals, there are often many ways of pursuing goals ineffectively and only a few ways of pursuing them effectively. We rarely do seemingly-obvious things, like:

- Ask what we are trying to achieve

- Track progress

- Reflect on which strategies have or haven’t worked for us in the past

- Seek out strategies that have worked or haven’t worked for others

- Test several different hypotheses for how to achieve goals

- Ask for help

- Experiment with alternative ways of studying, writing, working, or socializing

Use the try harder, Luke [? · GW]

- Luke Skywalker tried to lift a spaceship once. Then, he gave up. Instantly. He told Yoda it was impossible. And then he walked away. And the audience nodded along, because this is typical for humans. “People wouldn’t try for more than five minutes before giving up if the fate of humanity were at stake.”

Your Strength as a Rationalist [? · GW]

- If a model can explain any possible outcome, it is not a useful model. Sometimes, we encounter a situation, and we’re like “huh… something doesn’t seem right here”. But then we push this away and explain the situation in terms of our existing models. Instead, we should pay attention to this feeling. We should resist the impulse to go through mental gymnastics to explain a surprising situation with our existing models. Either our model is wrong, or the story isn’t true.

The meditation on curiosity [? · GW]

- Sometimes, we investigate our beliefs because we are “supposed to.” A good rationalist critiques their views, after all. But this often leads us to investigate enough to justify ourselves. We investigate in a way that conveniently lets us keep our original belief, but now we can tell ourselves and our cool rationalist friends that we examined the belief! Instead of doing this, we should seek to find moments of genuine intrigue and curiosity. True uncertainty is being equally excited to update up or update down. Find this uncertainty and channel it into a feeling of curiosity.

The importance of saying “Oops” [? · GW]

- When we make a mistake, it is natural to minimize the degree to which we were wrong. We might say things like “I was mostly right” or “I was right in principle” or “I can keep everything the same and just change this one small thing.” It is important to consider if we have instead made a fundamental mistake which justifies a fundamental change. We want to be able to update as quickly as possible, with the minimum possible amount of evidence.

The martial art of rationality [? · GW]

- We can think of rationality like a martial art. You do not need to be strong to learn martial arts: you just need hands. As long as you have a brain, you can participate in the process of learning how to use it better.

The twelve virtues of rationality [? · GW]

- Eliezer describes 12 virtues of rationality.

Pitfalls of Human Cognition

The Bottom Line [? · GW]

- Some people are really good at arguing for conclusions. They might first write “Therefore, X is true” and then find a compelling list of reasons why X is true. One strength as a rationalist is to make sure we don’t fall into the “Therefore, X is true” traps. We evaluate the evidence for X being true and the evidence for X being false, and that leads us to a conclusion.

Rationalization [? · GW]

- We often praise the scientist who comes up with a pet theory and then sets out to find experiments to prove it. This is how Science often moves forward. But we should be even more enthusiastic about the scientist who approaches experiments with genuine curiosity. Rationality is being curious about the conclusion; rationalization is starting with the conclusion and finding evidence for it.

You can Face Reality [? · GW]

- People can stand what is true, for they are already enduring it.

Is that your true rejection? [? · GW]

- Sometimes, when people disagree with us, there isn’t a clear or easy-to-articulate reason for why they do. It might have to do with some hard-to-verbalize intuitions, or patterns that they’ve been exposed to, or a fear of embarrassment for being wrong, or emotional attachments to certain beliefs. When they tell us why they disagree with us, we should ask ourselves “is that their true reason for disagreeing?” before we spend a lot of effort trying to address the disagreement. This also happens when we disagree with others. We should ask ourselves “wait a second, is this [stated reason] the [actual reason why I disagree with this person]?”

Avoiding your beliefs’ real weak points [? · GW]

- Why don’t we instinctively target the weakest parts of our beliefs? Because it’s painful, like touching a hot stove is painful. To target the weakest parts of our beliefs, we need to be emotionally prepared. Close your eyes, grit your teeth, and deliberately think about whatever hurts most. Do not attack targets that feel easy and painless to attack. Strike at the parts that feel painful to attack and difficult to defend.

Belief as Attire [? · GW]

- When someone belongs to a tribe, they will go to great lengths to believe what the tribe believes. But even more than that— they will get themselves to wear those beliefs passionately.

Dark side epistemology [? · GW]

- When we tell a lie, that lie is often going to be connected to other things about the world. So we set ourselves up to start lying about other things as well. It is not uncommon for one Innocent Lie to get entangled in a series of Connected Lies, and sometimes those Connected Lies even result in lying about the rules of how we should believe things (e.g., “everyone has a right to their own opinion”). Remember that the Dark Side exists. There are people who once told themselves an innocent lie and now conform to Dark Side epistemology. Can you think of any examples of memes that were generated by The Dark Side?

Cached Thoughts [? · GW]

- Our brains are really good at looking up stored answers. We often fill in patterns and repeat phrases we’ve heard in the past. Phrases like “Death gives meaning to life” and “Love isn’t rational” pop into our heads. Notice when you are looking up stored answers and thinking cached thoughts. When you are filling in a pattern, stop. And think. And examine if you actually believe the cached thing that your brain is producing.

The Fallacy of Gray [? · GW]

- The world isn’t black or white; it’s gray. However, some shades of gray are lighter than others. We can never be certain about things. But some things are more likely to be true than others. We will never be perfect. But the fact that we cannot achieve perfection should not deter us from seeking to be better.

Lonely Dissent [? · GW]

- It is extremely difficult to be the first person to dissent. It does not feel like wearing black to school; it’s like wearing a clown suit to school. To be a productive revolutionary, you need to be very smart and pursue the correct answer no matter what. But you also need to have an extra step— you need to have the courage to be the first one to wear a clown suit to school. And most people do not do this. We should not idolize being a free thinker— indeed, it is simply a bias in an unusual direction. But we should recognize that visionaries will not only need to be extremely intelligent— they will also have to do something that would’ve got them killed in our ancestral environment. They need to be the first person to wear a clown suit to school.

Positive Bias: Look into the Dark [? · GW]

- We often look to see what our theories can explain. It is unnatural to look to see what our theories do not explain. It is also unnatural to look to see what other theories can explain the same evidence.

Knowing about biases can hurt people [? · GW]

- It is much easier to see biases in others than biases in ourselves. Knowing about biases can make it easy for us to dismiss people we disagree with. We should strive to identify our own biases just as well as we can identify others’. This likely means we will need to put more effort into looking for our own biases.

The Laws Governing Belief

Making beliefs pay (in anticipated experiences) [? · GW]

- Some “beliefs” of ours do not yield any predictions about the world or about what will happen to us. Discard these. Focus on beliefs that “pay rent”— beliefs that let you make predictions about what will and will not happen. Sometimes, these beliefs will require us to have beliefs about abstract ideas and concepts (for example, predicting when a ball will reach the ground requires an understanding of “gravity” and “height”). But the focus should be on how these beliefs help us make predictions about the physical world or predictions about our experiences. If you believe X, what do you expect to see? If you believe Y, what experience do you expect must befall you? If you believe Z, what observations and experiences are ruled out as impossible?

What is evidence? [? · GW]

- For something to be evidence, it should not be able to happen in all possible worlds. That is, a piece of evidence should not be able to explain both A and ~A. If your belief does not let you predict reality, it is not evidence, and you should discard it.

Scientific Evidence, Legal Evidence, Rational Evidence [? · GW]

- Legal evidence is evidence that can be admissible in court. We agree to exclude certain types of evidence for the sake of having a legal system with properties that we desire. Scientific evidence is evidence that can be publicly reproduced. There are some kinds of evidence that should be sufficient to have us update our beliefs even if they do not count as legal evidence or scientific evidence. For example, a police commissioner telling me “I think Alice is a crimelord” should make me fear Alice, even if the statement is not admissible in court and not publicly reproducible/verifiable.

How much evidence does it take? [? · GW]

- The amount of evidence you need varies based on:

- How large is the space of possibilities in which the hypothesis lives? (Wider space—> more evidence required)

- How unlikely does the hypothesis seem a priori? (More likely—> less evidence required)

- How confident do you want to be? (More confident—> More evidence required)

- Evidence can be thought of in terms of bits. If you tell me that A happened, and A had a 1/16 chance of happening, you have communicated 4 bits.
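The bits framing above can be checked with a few lines of Python. This is a minimal sketch that assumes "bits communicated" means the base-2 surprisal of the event, i.e. -log2 of its probability; the function name `bits_of_evidence` is made up for illustration.

```python
import math


def bits_of_evidence(probability: float) -> float:
    """Bits conveyed by learning that an event with this prior probability occurred.

    Assumes "bits" means surprisal: -log2(probability).
    """
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(probability)


# An event with a 1/16 chance carries 4 bits; a coin flip carries 1 bit.
print(bits_of_evidence(1 / 16))  # 4.0
print(bits_of_evidence(1 / 2))   # 1.0
```

Each halving of the probability adds one bit, which is one way to see why a hypothesis living in a larger space of possibilities needs more evidence to single out.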

Absence of Evidence is Evidence of Absence [? · GW]

- If observing A would cause you to be more confident that B is true, then not observing A should cause you to be less confident that B is true. Stated more mathematically:

- If E is a binary event and P(H | E) > P(H), i.e., seeing E increases the probability of H, then P(H | ¬ E) < P(H), i.e., failure to observe E decreases the probability of H . The probability P(H) is a weighted mix of P(H | E) and P(H | ¬ E), and necessarily lies between the two.
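The "weighted mix" claim above is the law of total probability. A short derivation, assuming E is genuinely uncertain (0 < P(E) < 1), shows why the two updates must point in opposite directions:

```latex
\begin{align*}
P(H) &= P(H \mid E)\,P(E) + P(H \mid \neg E)\,P(\neg E),
      \qquad P(E) + P(\neg E) = 1 \\
P(\neg E)\,\bigl[P(H) - P(H \mid \neg E)\bigr]
     &= P(E)\,\bigl[P(H \mid E) - P(H)\bigr]
\end{align*}
```

The second line follows from writing P(H) as P(H)P(E) + P(H)P(¬E) and rearranging. If P(H | E) > P(H), the right-hand side is positive, so the left-hand side must be too, which gives P(H | ¬E) < P(H).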

Conservation of Expected Evidence [? · GW]

- Before you see evidence, the expected amount that you may update in favor of your hypothesis must equal the expected amount that you may update against your hypothesis. If A and ~A are equally likely, and observing A would make you 5% more confident in your hypothesis, then observing ~A should make you 5% less confident in your hypothesis. If you expect a strong probability of seeing weak evidence in one direction, it must be balanced by a weak expectation of seeing strong evidence in the other direction. Put more precisely:

- The expectation of the posterior probability, after viewing the evidence, must equal the prior probability.
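The statement above can be checked numerically. The sketch below is only an illustration: the prior of 0.3 and the likelihoods 0.9 and 0.2 are made-up numbers, and the function names are hypothetical.

```python
import math


def posterior(prior: float, p_e_given_h: float, p_e_given_not_h: float,
              observed: bool) -> float:
    """P(H | E) if observed is True, otherwise P(H | not E), via Bayes' theorem."""
    like_h = p_e_given_h if observed else 1.0 - p_e_given_h
    like_not_h = p_e_given_not_h if observed else 1.0 - p_e_given_not_h
    return prior * like_h / (prior * like_h + (1.0 - prior) * like_not_h)


def expected_posterior(prior: float, p_e_given_h: float,
                       p_e_given_not_h: float) -> float:
    """Average the two possible posteriors, weighted by how likely each outcome is."""
    p_e = prior * p_e_given_h + (1.0 - prior) * p_e_given_not_h
    return (p_e * posterior(prior, p_e_given_h, p_e_given_not_h, True)
            + (1.0 - p_e) * posterior(prior, p_e_given_h, p_e_given_not_h, False))


# Illustrative numbers (assumptions, not from the post).
prior = 0.3
up = posterior(prior, 0.9, 0.2, observed=True)     # belief after seeing E
down = posterior(prior, 0.9, 0.2, observed=False)  # belief after not seeing E
print(up > prior > down)
print(math.isclose(expected_posterior(prior, 0.9, 0.2), prior))
```

With these numbers, seeing E is the less likely outcome and produces the larger shift, while not seeing E is more likely and produces a smaller shift in the other direction; weighted by their probabilities, the two shifts cancel.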

Argument Screens Off Authority [? · GW]

- Consider two sources of evidence: The authority of a speaker (e.g., a distinguished geologist vs. a middle school student) and the strength of the argument. Both of these are worth paying attention to. But if we have more information about the argument, we can rely less on the authority of the speaker. In the extreme case, if we have all the information about why a person believes X, we no longer need to rely on their authority at all. If we know the authority, we are still interested in hearing the arguments; but if we know the arguments fully, we have very little left to learn from authority.

Bayes Rule Guide (in place of An Intuitive Explanation of Bayes’s Theorem)

- Eliezer now considers the original post obsolete and instead directs readers to the Bayes Rule Guide.

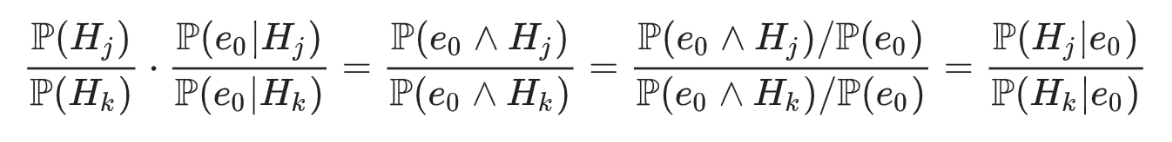

- Bayes Rule is a specific procedure that can be used to describe the relationship between prior odds, likelihood ratios, and posterior odds. [The posterior odds of A|evidence] is equal to the [prior odds of A] times [the likelihood of evidence if A is true divided by the likelihood of evidence if A is not true].

- Understanding Bayes Rule helps us think about the world using a general Bayesian framework: we have prior belief, evidence, and a posterior belief. There is some evidence that Bayesian Reasoning helps us improve our reasoning and forecasting abilities (even when we are not actually “doing the math” and applying Bayes Rule).

The Second Law of Thermodynamics, and Engines of Cognition [? · GW]

- Note: This post is wild, and I don’t think my summary captures it well. It is very weird and nerd-snipey.

- Simple takeaway: To form accurate beliefs about something, you have to observe it.

- Wilder takeaways:

- There is a relationship between information-processing (your certainty about the state of a system) and thermodynamic entropy (the movement of particles). Entropy must be preserved: If information-theoretic entropy decreases by X, then thermodynamic entropy must increase by X.

- If you knew a cup of water was 72 degrees, and then you learned the positions and velocities of the particles, that would decrease the thermodynamic entropy of the water. So the fact that we learned about the water makes the water colder. What???

- Also, if we could do this, we could make different types of refrigerators and convert warm water into ice cubes by extracting electricity. Huh?

Toolbox-thinking and Law-thinking [? · GW]

- Toolbox Thinking focuses on the practical. It focuses on finding the best tools that we can, given our limited resources. It emphasizes that different techniques will work in different contexts. Law Thinking focuses on the ideal. It focuses on finding ideal principles that apply in all contexts (e.g., Bayes Rule, laws of thermodynamics). Both frames are useful. Some Toolbox Thinkers are missing out (and not fully embracing truth) by refusing to engage with the existence of laws. Eliezer’s advice is to see laws as descriptive rather than normative. Some laws describe ideals, even if they don’t tell us what we should do.

Local validity as a key to sanity and civilization [? · GW]

- You can have good arguments for an incorrect conclusion (which is rare) and bad arguments for a correct conclusion (which is common). We should try to evaluate arguments like impartial judges. If not, we may get into situations in which we think it’s OK to be “fair to one side and not to the other.” This leads to situations in which we apply laws unevenly, which ultimately undermines the game-theoretic function of laws.

Science Isn't Enough

Hindsight devalues science [? · GW]

- When we learn something new, we tend to think it would have been easy to know it in advance. If someone tells us that WWII soldiers were more likely to want to return home during the war than after the war, we say “oh, of course! They were in mortal danger during the war, so obviously they desperately wanted to go back. That’s so obvious. It fits with my existing model of the world.” But if the opposite were true (which it is), we would just as easily fit that into our model. We aren’t surprised enough by new information. This causes us to undervalue scientific discoveries, because we end up thinking “why did we need an experiment to tell us that? I would have predicted that all along!”

Science doesn’t trust your rationality [? · GW]

- Both Libertarianism and Science accept that people are flawed and try to build systems that accommodate these flaws. In libertarianism, we can’t trust people in power to come up with good theories or implement them well. In Science, we can’t trust individual scientists to abandon pet theories. Science accommodates this stubbornness by demanding experiments— if you can do an experiment to prove that you’re right, you win. Science is not the ideal— it is a system we create to be somewhat better than the individual humans within it. If you use rational methods to come up with the correct conclusion, Science does not care. Science demands an experiment (even if you don’t actually need one in order to reach the right answer).

When science can’t help [? · GW]

- Science is good when we can run experiments to test something. Sometimes, there are important theories that can’t be tested. For instance, we can’t (currently) test cryonics. If it’s easy to do experiments to prove X, but there have been no experiments proving X, we should be skeptical that X is true. But if it’s impossible to do experiments to prove X, and there have been no experiments proving X, we shouldn’t update. Science fails us when we have predictions that can’t be experimentally evaluated (with today’s technology and resources).

No safe defense, not even science [? · GW]

- Some people encounter new arguments and think "Can this really be true, when it seems so obvious now, and yet none of the people around me believe it?" Yes. And sometimes people who have had experiences which broke their trust in others— which broke their trust in the sanity of humanity— are able to see these truths more clearly. They are able to say “I understand why none of the people around me believe it— they have already failed me. I no longer look toward them to see what is true. I no longer look toward them for safety.” There are no people, tools, or principles that can keep you safe. “No one begins to truly search for the Way until their parents have failed them, their gods are dead, and their tools have shattered in their hand.”

Connecting Words to Reality

Taboo your words [? · GW]

- Alice thinks a falling tree makes a sound, and Bob doesn’t. This looks like a disagreement. But if Alice and Bob stop using the word sound, and instead talk about their expectations about the world, they realize that they agree. Carol thinks a singularity is coming and Dave also thinks a singularity is coming. But if they unpack the word singularity, they realize that they disagree deeply about what they expect to happen. When discussing ideas, be on the lookout for terms that can have multiple interpretations. Instead of trying to define the term (standard strategy), try to have the discussion without using the term at all (Eliezer’s recommendation). This will make it easier to figure out what you actually expect to see in the world.

Dissolving the question [? · GW]

- Do humans have free will? A rationalist might feel like they are “done” after they have figured out “no, humans do not have free will” or “this question is too poorly specified to have a clear answer” or “the answer to this question doesn’t generate any different expectations or predictions about the world.” But there are other questions here: As a question of cognitive science, why do people disagree about whether or not we have free will? Why do our minds feel confused about free will? What kind of cognitive algorithm, as felt from the inside, would generate the observed debate about "free will"? Rationalists often stop too early. They stop after they have answered the question. But sometimes it is valuable not only to answer the question but also to dissolve it: to search for a model that explains how the question emerges in the first place.

Say not “complexity” [? · GW]

- It is extremely easy to explain things that we don’t understand using words that make it seem like we understand. As an example, when explaining how an AI will perform a complicated task, Alice might say that AI requires “complexity”. But complexity is essentially a placeholder for “magic”: “complexity” allows us to feel like we have an explanation even when we don’t actually understand how something works. The key is to avoid skipping over the mysterious part. When Eliezer worked with Alice, they would use the word “magic” when describing something they didn’t understand. Instead of “X does Y because of complexity”, they would say “X magically does Y.” The word complexity creates an illusion of understanding. The word magic reminds us that we do not understand, and there is more work that needs to be done.

Mind projection fallacy [? · GW]

- We sometimes see properties as inherent characteristics as opposed to things that are true from our point-of-view. We have a hard time distinguishing between “this is something that my mind produced” and “this is a true property of the universe.”

How an algorithm works from the inside [? · GW]

- Is Pluto a planet? Even if we knew every physical property of Pluto (e.g., its mass and orbit), we would still feel the unanswered question: but is it a planet? This is because of the way our neural networks work. We have fast, cheap, scalable networks. They use concepts (like “planet”) to quickly and cheaply provide information about other properties. And because of this, we feel the need to have answers to questions like “is this a planet?” or “does the falling tree make a sound?” If we reasoned in a network that didn’t store these concepts, and merely stored information about physical properties, we wouldn’t say “the question of whether or not Pluto is a planet is simply an argument about the definition of words.” Instead, we would think “huh, given that we know Pluto’s mass and orbit, there is no question left to answer.” We wouldn’t feel like there was another question left to answer.

37 ways that words can be wrong [? · GW]

- There are many ways that words can be used in ways that are imprecise and misleading. Eliezer lists 37. Don’t pull out dictionaries, don’t try to say things are X “by definition”, and don’t pretend that words can mean whatever you want them to mean.

Expecting short inferential distances [? · GW]

- In the ancestral environment, people almost never had to explain concepts. People were nearly always 1-2 inferential steps away from each other. There were no books, and most knowledge was common knowledge. This explains why we systematically underestimate the amount of inferential distance when we are explaining things to others. If the listener is confused, the explainer often goes back one step, but they needed to go back 10 steps.

Illusion of transparency: Why no one understands you [? · GW]

- We always know what we mean by our own words, so it’s hard for us to realize how difficult it is for other people to understand us.

Why We Fight

Something to protect [? · GW]

- We cannot merely practice rationality for rationality’s sake. In western fiction, heroes acquire powers and then find something to care about. In Japanese fiction, heroes acquire something to care about, and then this motivates them to develop their powers. We should strive to do this too. We will not learn about rationality if we are always trying to learn The Way or follow the guidance of The Great Teacher— we will learn about rationality if we are trying to protect something we care about, and we are desperate to succeed. People do not resort to math until their own daughter’s life is on the line.

The gift we give to tomorrow [? · GW]

- Natural selection is a cruel process that revolves around organisms fighting each other (or outcompeting each other) to the death. It leaves starving elephants and limp gazelles in torture. And yet, natural selection produced a species capable of love and beauty— isn’t that amazing? Well, of course not: it only looks beautiful to us, and all of that can be explained by evolution. …But still. If you really think about it, it’s kind of fascinating that a process so awful created something capable of beauty.

On Caring

- We don’t feel the size of large numbers. When one life is in danger, we might feel an urge to protect it. But if a billion lives were in danger, we wouldn’t feel a billion times worse. Sometimes, people assume that moral saints (people like Gandhi and Mother Teresa) are people who just care more. But this is an error: prominent altruists aren't the people who have a larger care-o-meter, they're the people who have learned not to trust their care-o-meters. Courage isn’t about being fearless; it’s about being afraid but doing the right thing anyways. Improving the world isn’t about feeling a strong compulsion to help a billion people; it’s about doing the right thing even when we lack the ability to feel the importance of the problem.

Tsuyoku Naritai! (I Want To Become Stronger) [? · GW]

- In Orthodox Judaism, people recite a litany confessing their sins. They recite the same litany, regardless of how much they have actually sinned, and the litany does not contain anything about how to sin less the following year. In rationality, it is tempting to recite our flaws without thinking about how to become less flawed. Avoid doing this. If we glorify the act of confessing our flaws, we will forget that the real purpose is to have less to confess. Also, we may become dismissive of people who are trying to come up with strategies that help us become less biased. Focus on how to correct bias and how to become stronger.

A sense that more is possible [? · GW]

- Many people who identify as rationalists treat it as a hobby— it’s a nice thing to do on the side. There is not a strong sense that rationality should be systematically trained. Long ago, people who wanted to get better at hitting other people formed the idea that there is a systematic art of hitting that turns you into a formidable fighter. They formed schools to teach this systematic art. They pushed themselves in the pursuit of awesomeness. Rationalists have not yet done this. People do not yet have a sense that rationality should be systematized and trained like a martial art, with a work ethic and training regime similar to that of chess grandmasters & a body of knowledge similar to that of nuclear engineering. We do not look at this lack of formidability and say “something is wrong.” This is a failure.

7 comments

Comments sorted by top scores.

comment by gjm · 2022-07-17T18:23:09.880Z · LW(p) · GW(p)

On the Second Law of Thermodynamics one: Eliezer does something a bit naughty in this one which has the effect of making it look weirder than it is. He says: suppose you have a glass of water, and suppose somehow you magically know the positions and velocities of all the molecules in it. And then he says: "Does that make its thermodynamic entropy zero? Is the water colder, because we know more about it?", and answers yes. But those are two questions, not one question; and while the answer to the first question may be yes (I am not an expert on thermodynamics and I haven't given sufficient thought to what happens to the thermodynamic definition of entropy in this exotic situation), the answer to the second question is not.

The water contains the same amount of heat energy as if you didn't know all that information. It is not colder.

What is different from if you didn't know all that information is that you have, in some sense, the ability to turn the water into ice plus electricity. You can make it cold and get the extracted energy in usable form, whereas without the information you couldn't do that and in fact would have to supply energy on net to make the water cold.

("In some sense" because of course if you gave me a glass of water and a big printout specifying the states of all its molecules I couldn't in fact use that to turn the water into ice plus electrical energy. But in principle, given fancy enough machinery, perhaps I could.)

Another way of looking at it: if I have a glass of room-temperature water and complete information about how its molecules are moving, it's rather like having a mixture of ice and steam containing the same amount of thermal energy, just as if those molecules were physically separated into fast and slow rather than just by giving me a list of where they all are and how they're moving.

Replies from: JBlack↑ comment by JBlack · 2022-07-18T10:59:46.841Z · LW(p) · GW(p)

Another difference from separated hot & cold reservoirs is that the time horizon for being able to make use of the information is on the order of nanoseconds before the information is useless. Even without quantum messiness and prescribing perfect billiard-ball molecules, just a few stray thermal photons from outside and a few collisions will scramble the speeds and angles hopelessly.

As far as temperature goes it is really undefined, since the energy in the water is not thermal from the point of view of the extremely well informed observer. It has essentially zero entropy, like the kinetic energy of a car or that of a static magnetic field. If you go ahead and try to define it using statistical mechanics anyway, you get a division by zero error: temperature is the marginal ratio of energy to entropy, and the entropy is an unchanging zero regardless of energy.

Replies from: gjm↑ comment by gjm · 2022-07-18T11:37:28.716Z · LW(p) · GW(p)

I think that last bit only applies if we suppose that you are equipped not only with a complete specification of the state of the molecules but with a constantly instantly updating such specification. Otherwise, if you put more energy in then the entropy will increase too and you can say T = dE/dS just fine even though the initial entropy is zero. (But you make a good point about the energy being not-thermal from our near-omniscient viewpoint.)

Replies from: JBlack↑ comment by JBlack · 2022-07-20T09:09:17.333Z · LW(p) · GW(p)

If you just have a snapshot state (even with an ideal model of internal interactions from that state) then any thermal contact with the outside will almost instantly raise entropy to near maximum regardless of whether energy is added or removed or on balance unchanged. I don't think it makes sense to talk about temperature there either, since the entropy is not a function of energy and does not co-vary with it in any smooth way.

comment by qazzquimby (torendarby@gmail.com) · 2022-08-09T00:02:22.596Z · LW(p) · GW(p)

Wow I wish I had searched before beginning my own summary project. [LW · GW]

The projects aren't quite interchangeable though. Mine are significantly longer than these, but are intended to be acceptable replacements for the full text, for less patient readers.

comment by Henry Prowbell · 2022-07-18T13:26:14.597Z · LW(p) · GW(p)

Does anybody know if the Highlights From The Sequences are compiled in ebook format anywhere?

Something that takes 7 hours to read, I want to send to my Kindle and read in a comfy chair.

And maybe even have audio versions on a single podcast feed to listen to on my commute.

(Yes, I can print out the list of highlighted posts and skip to those chapters of the full ebook manually but I'm thinking about user experience, the impact of trivial inconveniences, what would make Lesswrong even more awesome.)

comment by Charbel-Raphaël (charbel-raphael-segerie) · 2022-07-17T10:54:09.770Z · LW(p) · GW(p)

Great summary. I read the full Sequences 4 years ago, but this was a nice refresher.

I also recommend from time to time to go to the concept lists and to focus randomly on 4-5 tags, and to try to generate/remember some thoughts about them.