Should Effective Altruists be Valuists instead of utilitarians?

Post by spencerg, AmberDawn · 2023-09-25T14:03:10.958Z · 3 comments

Contents

1. I think (in one sense) it’s empirically false to say that "only utility is valuable"

2. It can be psychologically harmful to deny your intrinsic values

3. I think (in one sense) it’s incoherent to only value utility if you don't believe in moral realism

Valuism and the EA Community


By Spencer Greenberg and Amber Dawn Ace

This post is designed to stand alone, but it is also the third of five posts in my sequence of essays about my (Spencer's) life philosophy, Valuism. (Here are the first, second, fourth, and fifth parts.)

Sometimes, people take an important value – maybe their most important value – and decide to prioritize it above all other things. They neglect or ignore their other values in the process. In my experience, this often leaves people feeling unhappy. It also leads them to produce less total value (according to their own intrinsic values). I think people in the effective altruist community (i.e., EAs) are particularly prone to this mistake.

In the first post in this sequence, I introduce Valuism – my life philosophy – and offer some general arguments for its advantages. In this post, I talk about the interaction between Valuism and effective altruism. I argue that the way some EAs think about morality and value is (in my view) empirically false, potentially psychologically harmful, and (in some cases) incoherent.

EAs want to improve others’ lives in the most effective way possible. Many EAs identify as hedonic utilitarians (even the ones who reject objective moral truth). They say that impartially maximizing utility among all conscious beings – by which they usually mean the sum of all happiness minus the sum of all suffering – is the only thing of value, or the only thing that they feel they should value. I think this is not ideal for a few reasons.
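Written as a formula (the notation here is ours, not the authors’), with $B$ the set of all conscious beings, $h_i$ the total happiness of being $i$, and $s_i$ its total suffering, the quantity described above is:

$$U = \sum_{i \in B} h_i - \sum_{i \in B} s_i = \sum_{i \in B} \left( h_i - s_i \right)$$

Every being’s term enters the sum with the same weight of 1, which is what “impartially” means here: nothing in the formula distinguishes you or your loved ones from anyone else.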

1. I think (in one sense) it’s empirically false to say that "only utility is valuable"

Consider a person who claims that “only utility is valuable.”

If we interpret this as an empirical claim about the person’s own values – i.e., that the sum of happiness minus suffering for all conscious beings is the only thing that their brain assigns value to – I think that it’s very likely empirically false.

That is, I don’t think anyone values only maximizing utility (in the sense of what their brain assigns value to), even if it’s a very important value of theirs. I can’t prove that literally nobody values only utility maximization, but I’d argue that human brains aren’t built to value only one thing. Nor would we expect evolution to converge on a purely utilitarian psychology, since evolution optimizes for survival: if purely utilitarian brains had existed 50,000 years ago, they would have been rapidly outcompeted by other brain types.

I think that even the most hard-core hedonic utilitarians do psychologically value some non-altruistic things deep down – for example, their own pleasure (more than the pleasure of everyone else), their family and friends, and truth. However, in my opinion, they sometimes deny this to themselves or feel guilty about it. If you are convinced that your only intrinsic value is utility (in a hedonistic, non-negative-leaning utilitarian sense), you may find it instructive to take a look at these philosophical scenarios I assembled or check out the scenarios I give in this talk about values.

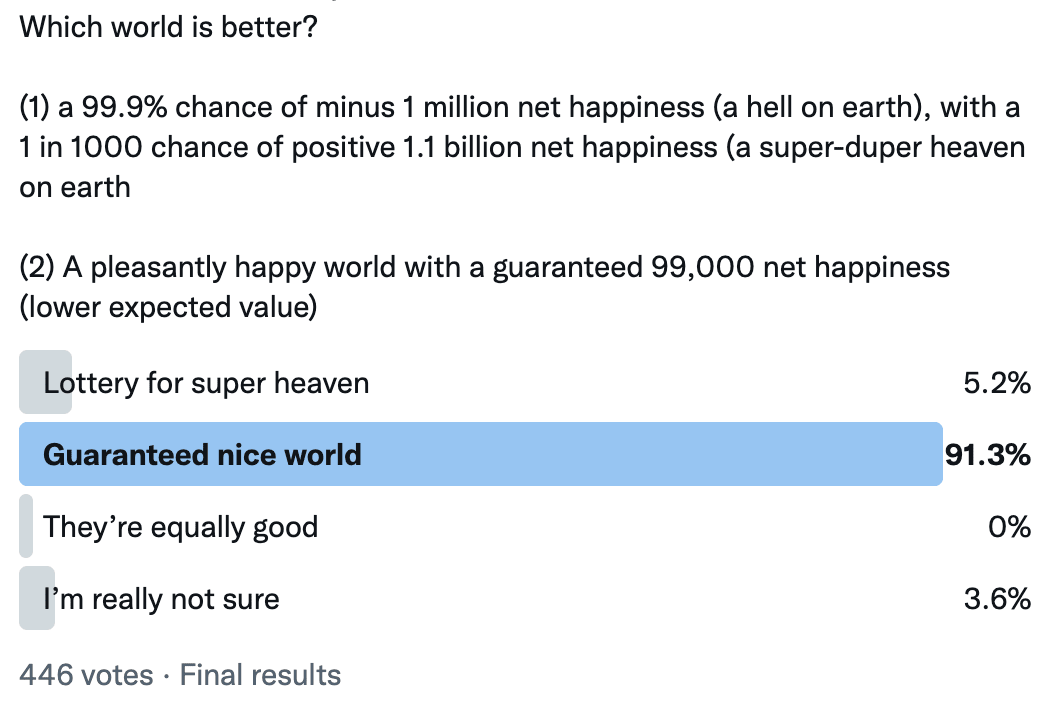

For instance, does your brain actually tell you it’s a good trade (in terms of your intrinsic values) to let a loved one of yours suffer terribly in order to create a mere 1% chance of sparing 101 strangers the same suffering? Does your brain actually tell you that equality doesn’t matter one iota (i.e., that it’s just as good for one person to have all the utility as for it to be spread more equally)? Does your brain actually value a world of microscopic, dumb, orgasming micro-robots more than a world (with slightly less total happiness) where complex, intelligent, happy beings pursue their goals? Taken at face value, hedonic utilitarianism doesn’t care whether a person is your loved one or a stranger, doesn’t care about equality at all, and prefers microscopic orgasming robots to complex beings as long as the former are slightly happier. But if you consider yourself a hedonic utilitarian, is that actually what your brain values?
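The arithmetic behind the first two questions can be sketched in Python. This is a minimal illustration under loudly stated assumptions: happiness and suffering are measured in hypothetical abstract units, the loved one’s suffering and each stranger’s count as 1 unit apiece, and the trade is scored by expected value. The function names are made up for the example.

```python
from fractions import Fraction


def expected_suffering_prevented(probability: Fraction, num_people: int, units_each: Fraction) -> Fraction:
    """Hypothetical helper: expected units of suffering prevented by an
    intervention that succeeds with the given probability."""
    return probability * num_people * units_each


def total_utility(utilities: list[Fraction]) -> Fraction:
    """Impartial hedonic total: every being's net happiness is weighted equally and summed."""
    return sum(utilities, Fraction(0))


# Trade 1 (assumed units): a loved one suffers 1 unit for certain, in exchange
# for a 1% chance of sparing 101 strangers 1 unit of suffering each.
cost = Fraction(1)
benefit = expected_suffering_prevented(Fraction(1, 100), 101, Fraction(1))
print(benefit)         # 101/100
print(benefit > cost)  # True: a pure total-utility view scores this as a (slightly) good trade

# Trade 2 (assumed units): the same 100 units of utility, concentrated vs. spread equally.
concentrated = [Fraction(100)] + [Fraction(0)] * 9
equal = [Fraction(10)] * 10
print(total_utility(concentrated) == total_utility(equal))  # True: the sum ignores distribution
```

Under those assumptions the trade comes out 0.01 units ahead, and the two distributions score identically, which is the indifference to loved ones and to equality described above.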

2. It can be psychologically harmful to deny your intrinsic values

Additionally, I think the attitude that there is only one thing of value can lead to severe psychological burnout as people try to push away, minimize or deny their other intrinsic values and “selfish,” non-altruistic desires. I’ve seen this happen quite a few times. Here’s Tyler Alterman’s personal account of this if you’d like to see an example. And here’s a theory of how this burnout happens.

3. I think (in one sense) it’s incoherent to only value utility if you don't believe in moral realism

When coupled with a view that there is no objective moral truth, I think it is, in most cases, philosophically incoherent to claim that total hedonic utility is all that matters.

If you believe in objective moral truth, it may make sense to say, “I value many things, but I have a moral obligation to prioritize only some of them” (for example, you might be convinced by arguments that you are objectively morally obliged to promote utility impartially even though that’s not the only value you have).

However, many EAs, like me, don’t believe in objective moral truth. If you don’t think that things can be objectively right or wrong, it doesn’t make sense (I claim) to say that you “should” prioritize maximizing utility for all of humanity over other values – what does this “should” even mean? Well, there are some answers for what this “should” could mean that philosophers and lay people have proposed, but I find them pretty weak.

For a much more in-depth discussion of this point (including an analysis of different ways that EAs have responded to my critique of pairing utilitarianism with denial of objective moral truth), see this essay. It collects many different objections (from EAs and from some philosophers) and discusses them. So if you are interested in whether it is coherent to value only utility while denying objective moral truth, and whether EAs and philosophers have good arguments for doing so, please see that essay.

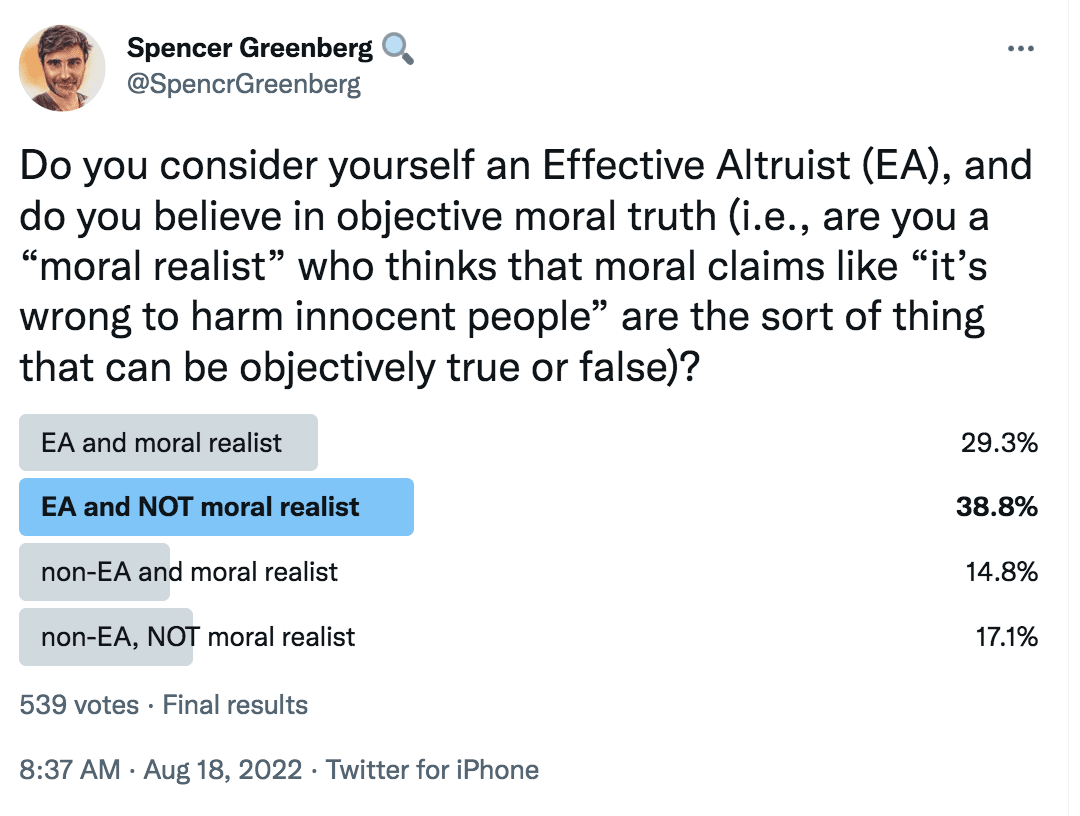

I find that while many (perhaps the majority of) EAs deny objective moral truth, many still talk and think as though there is objective moral truth.

I found it striking that, in my conversations with EAs about their moral beliefs, few had a clear explanation of how to combine a belief in utilitarianism with a lack of belief in objective moral truth, and the approaches they did put forward were usually quite different from each other (suggesting, at the very least, a lack of consensus on how to support such a perspective). Some philosophers I spoke to pointed to other ways one might defend such a position (mainly drawn from the philosophical literature), but I don't recall ever seeing these approaches used or referenced by non-philosopher EAs (so they don't seem to be doing much work in the beliefs of EAs who hold this view).

I suspect it would help many EAs if they took a more Valuist approach: rather than claiming to or aspiring to only value hedonic utility, they could accept that while they do intrinsically value this – very likely far more than the average person – they also have other intrinsic values, for example, truth (which I think is another very important psychological value for many EAs), their own happiness, and the happiness of their loved ones.

Valuism also avoids some of the most awkward bullets that EAs are sometimes tempted to bite. For instance, hedonic utilitarianism seems to imply that your own happiness and the happiness of your loved ones “shouldn’t” matter to you even a tiny bit more than the happiness of a stranger who is certain to be born 1,000,000 years from now. Valuism may explain why people who identify as hedonic utilitarians can feel a great deal of internal conflict about this – even if you value the happiness of all sentient beings a tremendous amount, you almost certainly have other intrinsic values too. That means that Valuism may help you avoid some of the awkward conundrums that arise from ethical monism (the assumption that there is only one thing of value).

Valuism and the EA Community

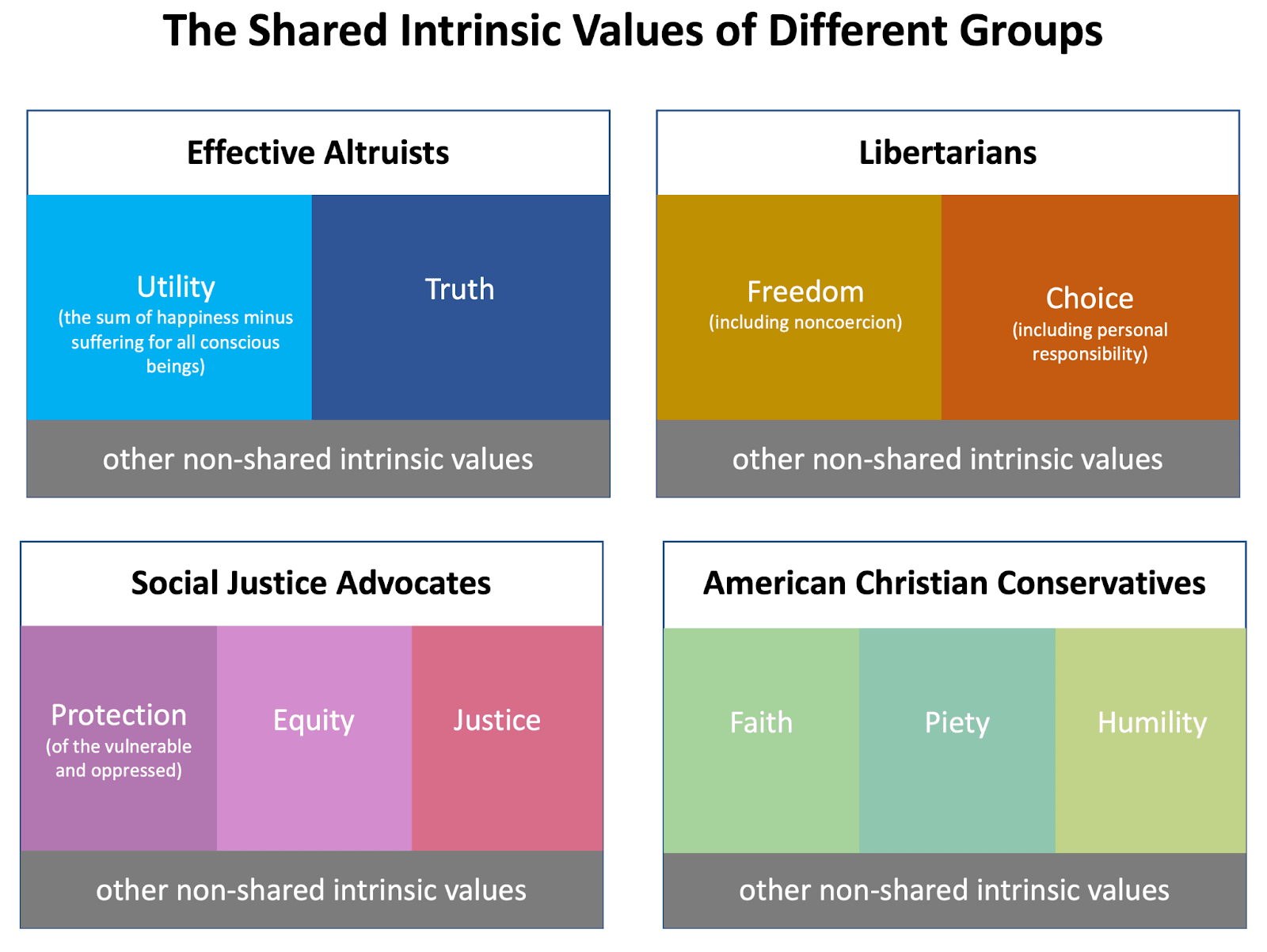

From a Valuist perspective, I see the EA community as a group of people who share a primary intrinsic value of hedonic utility (i.e., reducing suffering and increasing happiness impartially) with a strong secondary intrinsic value of truth-seeking. Oddly (from my point of view), EAs are very aware of their intrinsic value of impartial hedonic utility, but seem much less aware of their truth-seeking intrinsic value. On a number of occasions, I’ve seen mental gymnastics used to justify truth-seeking in terms of increasing hedonic utility when (I claim) a much more natural explanation is that truth-seeking is an intrinsic value (not just an instrumental value that leads to greater hedonic utility). This helps explain why many EAs are so averse to ever lying and even to persuasive marketing.

Each individual EA has other intrinsic values beyond impartial utility and truth-seeking, but in my view, those two values help define EA and make it unique. This is also a big part of why this community resonates with me: those are my top two universal intrinsic values as well.

If more EAs adopted Valuism, I think that they would almost all continue to devote a large fraction of their time and energy toward improving the world effectively. Maximizing global hedonic utility (i.e., the sum of happiness minus suffering for conscious beings) is the strongest universal intrinsic value of most community members, so it would still play the largest role in determining their goals and actions, even after much reflection.

However, they would also feel more comfortable investing in their own happiness and the happiness of their loved ones at the same time, which I predict would make them happier and reduce burnout. Additionally (I claim), they’d accept that, like many other effective altruists, they also have a strong intrinsic value of truth. They’d strike a balance between their various intrinsic values, rather than endorsing the annihilation of all their intrinsic values except one.

3 comments


comment by Vladimir_Nesov · 2023-09-25T14:11:30.123Z

Many EAs identify as hedonic utilitarians [...] the sum of all happiness minus the sum of all suffering – is the only thing of value

I don't particularly follow the EA space, but this claim seems surprising/alarming-if-true (mostly to the extent its intended sense might suggest unawareness of goodhart). How many is that, and how can one come to know it?

comment by ProgramCrafter (programcrafter) · 2023-09-25T16:23:34.038Z

It seems like a different definition of utility ("the sum of happiness minus suffering for all conscious beings") than usual was introduced somewhere. The concept of utility doesn't really restrict what it values; it includes such things as paperclip maximizers, for instance.

Also, agents need not maximize expected utility; they can instead maximize the minimum utility over all cases, selecting a guaranteed nice world over a hell/heaven lottery.
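The contrast between maximizing expected utility and maximizing the worst case (maximin) can be sketched in Python. The payoffs are hypothetical numbers chosen only for illustration: a guaranteed nice world worth 5, versus a 50/50 lottery between hell (-100) and heaven (120).

```python
# Each option is a list of (probability, utility) outcomes; all numbers are hypothetical.
Option = list[tuple[float, float]]


def expected_utility(option: Option) -> float:
    """Probability-weighted average utility of an option."""
    return sum(p * u for p, u in option)


def worst_case(option: Option) -> float:
    """Utility of the worst possible outcome, ignoring probabilities."""
    return min(u for _, u in option)


options: dict[str, Option] = {
    "guaranteed nice world": [(1.0, 5.0)],
    "hell/heaven lottery": [(0.5, -100.0), (0.5, 120.0)],
}

best_by_expectation = max(options, key=lambda name: expected_utility(options[name]))
best_by_maximin = max(options, key=lambda name: worst_case(options[name]))
print(best_by_expectation)  # hell/heaven lottery (expected utility 10 vs. 5)
print(best_by_maximin)      # guaranteed nice world (worst case 5 vs. -100)
```

An expected-utility maximizer takes the lottery because its average payoff is higher; a maximin agent takes the sure thing because its worst outcome is far better.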

↑ comment by spencerg · 2023-09-25T18:50:35.130Z

You're using the word "utility" in a different sense than I am here. There are at least three definitions of that word. I'm using the one from hedonic utilitarianism (since that's what most EAs identify as), not the one from decision theory (e.g., "expected utility maximization" as a decision theory), and not the one from economics (rational agents maximizing "utility").