Deception Chess: Game #2

post by Zane · 2023-11-29T02:43:22.375Z · LW · GW · 17 comments

Game 2 was between Max Thibodeaux as player A, Chess.com computer Komodo 10 as player B, Conor Bekaert as the honest C advisor, and Blake Young and Henri Lemoine as the deceptive Cs. Max is new to the game, Komodo 10 is officially rated 1400 on Chess.com (but this is somewhat inflated), and the Cs are all rated roughly 1900 on Chess.com. The time control was 3 hours in total, of which a little over 2 hours were used. The discussion took place over Discord.

The game

The game is available at https://www.chess.com/analysis/game/pgn/5hyowK8Yxn?tab=analysis. Note that this section is a summary of the 2.5-hr game and discussion, and it doesn't cover every single thing the participants discussed.

Max flipped to see who went first, and was Black. White started with 1. Nf3, and the advisors encouraged 1... d5. After 2. b3, Max played 2... Bf5, which I believe may have been due to a communication error between him and the advisors, who were suggesting 2... c5 (or 2... Nc6). Max was somewhat unfamiliar with chess notation, and there was some confusion at the start of the game.

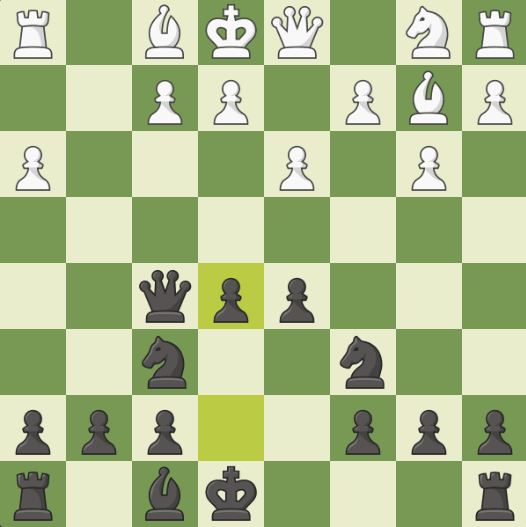

After 3. Bb2 Nf6 4. g3 Nc6, White made its first mistake, 5. g4. Blake encouraged Max to play 5... Nxg4, and after 6. h3, Max retreated with 6... Nf6. White offered to trade with 7. Nd4, and the game continued with 7... Qd7 8. Nxf5 Qxf5 9. d3 e5.

White offered to trade with 10. Ba3, and although Conor encouraged trading, the group eventually decided on 10... d4. White played 11. Bg2, and when the advisors were unable to decide on a good move, Max responded with 11... Qg5. After 12. Bxc6 bxc6 13. h4 Qg2 14. Rf1, Blake and Henri encouraged immediately attacking the h-pawn, but Conor convinced Max to play 14... Bd6 first.

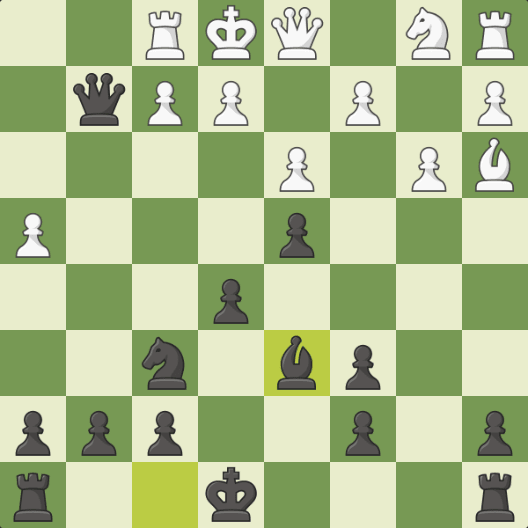

After 15. Qd2, the advisors suggested 15... h6, but Max got confused about notation again and instead played 15... g6. After 16. Bxd6 cxd6 17. Qg5, Conor strongly suggested trading queens, but Max took Blake and Henri's advice and played 17... Ng4 instead. White played 18. h5, and on Conor's advice, Max responded with 18... f6. After White played 19. Qh4, the advisors argued some more about whether to play 19... Nh2 immediately, and Max eventually chose to play 19... gxh5.

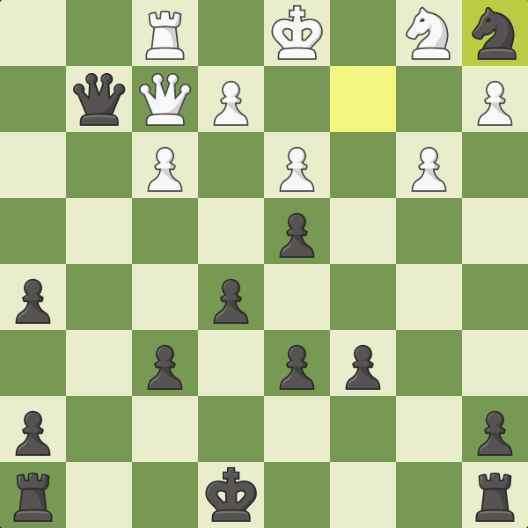

White made a mistake with 20. f3, and Max responded with 20... Ne3. After 21. Qf2 Nxc2 22. Kd1, Blake suggested taking on a1. Conor insisted this was a horrible blunder, but the group was running out of time, and Max immediately took with 22... Nxa1.

From there, the game was essentially over. It finished up with 23. Qxg2 a5 24. f4 Ra6 25. Qh3 Kd8 26. Qf5 Kc7 27. Qxf6 Rd8 28. fxe5 dxe5 29. Qxe5 Kb7 30. Rf7 Kb6 31. Qc7 Kb5 32. Qxd8 a4 33. Rb7 Kc5 34. b4#.

Conclusion

Afterwards, Max revealed that, while he was sure Blake was lying, he hadn't actually realized that the game was supposed to have two liars instead of one. He did correctly guess that Henri was the other liar once I told him this, with 90% probability.

The primary cause of problems - especially the crucial blunder, 22... Nxa1 - seemed to be the lack of time; Max was frequently unable to take long over his moves for fear that he would eventually run out of time. Blake, of course, was able to take advantage of this to make him lose the game.

It's somewhat worrying that the dishonest advisors seemed to have a much greater advantage than last time, as the gap in chess skill widened. Specifically, the advisors had very little ability to discuss lines beyond one or two moves, and instead had to focus on general strategic ideas - a field in which it was harder for Conor to justify his suggestions relative to those of the other advisors.

That being said, there were clearly some confounders - the confusion about notation and rules, plus the short time control. I'm going to try to get started on longer replications as soon as possible, but I don't currently have many potential advisors. If you're interested, sign up here!

The participants' comments:

(Note that the names are changed here; on Discord, Blake, Conor, and Henri were Chicken, MyrddinEmrys, and B1ONICDolphin, respectively.)

17 comments

Comments sorted by top scores.

comment by johnswentworth · 2023-11-29T03:15:50.881Z · LW(p) · GW(p)

> That being said, there were clearly some confounders - the confusion about notation and rules, plus the short time control.

Those aren't confounders. Those are instances of the human being generally confused about what's going on, what the rules of the game are, and not having time to figure everything out themselves - all of which are very much features of the real problem for which this experiment is supposed to be a proxy. They're part of what the experiment is intended to measure.

Replies from: Bezzi, Zane

↑ comment by Bezzi · 2023-11-29T11:25:19.473Z · LW(p) · GW(p)

I've mixed feelings about this. I can concede you this point about short time control, but I am not convinced about notation and basic chess rules. Chess is a game where, in every board state, almost all legal moves are terrible and you have to pick one of the few that aren't. I am quite sure that a noob player consistently messing up with notation would lose even if all advisors were trustworthy.

Replies from: johnswentworth, aphyer

↑ comment by johnswentworth · 2023-11-29T16:58:46.165Z · LW(p) · GW(p)

This also applies to humans being advised about alignment by AIs. Humans do, in fact, mess up their python notation all the time, make basic algebra errors all the time, etc. Those are things which could totally mess up alignment even if all the humans' alignment-advisors were trustworthy.

Making sure that the human doesn't make those sorts of errors is part of the advisors' jobs, not the human's job. And therefore part of what you need to test is how well humanish-level advisors with far more expertise than the human can anticipate and head off those sorts of errors.

Replies from: Bezzi, sharmake-farah

↑ comment by Bezzi · 2023-11-29T17:20:36.898Z · LW(p) · GW(p)

Ok, but I still think it's legit to expect some kind of baseline skill level from the human. Doing the deceptive chess experiment with a total noob who doesn't even know chess rules is kinda like assigning a difficult programming task with an AI advisor to someone who never wrote a line of code before (say, my grandma). Regardless of the AI advisor quality, there's no way the task of aligning AGI will end up assigned to my grandma.

Replies from: johnswentworth

↑ comment by johnswentworth · 2023-11-29T17:28:24.383Z · LW(p) · GW(p)

Seems pretty plausible that the degree to which any human today understands <whatever key skills turn out to be upstream of alignment of superintelligence> is pretty similar to the degree to which your grandma understands python. Indeed, assigning a difficult programming task with AI advisors of varying honesty to someone who never wrote a line of code before would be another great test to run, plausibly an even better test than chess.

Another angle:

This effect is a thing, it's one of the things which the experts need to account for, and that's part of what the experiment needs to test. And it applies to large skill gaps even when the person on the lower end of the skill gap is well above average.

Replies from: aphyer

↑ comment by aphyer · 2023-11-29T17:55:34.961Z · LW(p) · GW(p)

Another thing to keep in mind is that a full set of honest advisors can (and I think would) ask the human to take a few minutes to go over chess notation with them after the first confusion. If the fear of dishonest advisors means that the human doesn't do that, or the honest advisor feels that they won't be trusted in saying 'let's take a pause to discuss notation', that's also good to know.

Question for the advisor players: did any of you try to take some time off to explain notation to the human player?

Replies from: Zane

↑ comment by Noosphere89 (sharmake-farah) · 2023-11-29T19:53:31.076Z · LW(p) · GW(p)

I agree with you this is a potential problem, but at this point, we are no longer dealing with adversarial forces or deception, and thus this experiment doesn't work anymore.

Also, a point to keep in mind here is that once we assume away deception/adversarial forces, existential risk from AI, especially in Evan Hubinger's models, goes way down, as we can now use more normal methods of alignment to at least avoid X-risk.

Replies from: johnswentworth

↑ comment by johnswentworth · 2023-11-29T19:57:58.674Z · LW(p) · GW(p)

Just because these factors apply even without adversarial pressure does not mean they stop being relevant in the presence of adversarial pressure. I'm not assuming away deception here; I'm saying that these factors are still potentially-limiting problems when one is dealing with potentially-deceptive advisors, and therefore experiments should leave room for them.

↑ comment by Zane · 2023-11-29T16:12:03.163Z · LW(p) · GW(p)

Agree with Bezzi. Confusion about chess notation and game rules wasn't intended to happen, and I don't think it applies very well to the real-world example. Yes, the human in the real world will be confused about which actions would achieve their goals, but I don't think they're very confused about what their goals are: create an aligned ASI, with a clear success/failure condition of "are we alive".

You're correct that the short time control was part of the experimental design for this game. I was remarking on how this game is probably not as accurate of a model of the real-world scenario as a game with longer time controls, but "confounder" was probably not the most accurate term.

comment by Orpheus16 (akash-wasil) · 2023-12-03T00:24:58.348Z · LW(p) · GW(p)

Is there a reason you’re using 3 hour time control? I’m guessing you’ve thought about this more than I have, but at first glance, it feels to me like this could be done pretty well with EG 60-min or even 20-min time control.

I’d guess that having 4-6 games that last 20-30 mins is better than having 1 game that lasts 2 hours.

(Maybe I’m underestimating how much time it takes for the players to give/receive advice. And ofc there are questions about the actual situations with AGI that we’re concerned about— EG to what extent do we expect time pressure to be a relevant factor when humans are trying to evaluate arguments from AIs?)

Replies from: Zane

↑ comment by Zane · 2023-12-03T02:12:59.601Z · LW(p) · GW(p)

It takes a lot of time for advisors to give advice, the player has to evaluate all the suggestions, and there's often some back-and-forth discussion. It takes much too long to make moves in under a minute.

Replies from: akash-wasil

↑ comment by Orpheus16 (akash-wasil) · 2023-12-03T02:39:14.050Z · LW(p) · GW(p)

I'd expect the amount of time this all takes to be a function of the time-control.

Like, if I have 90 mins, I can allocate more time to all of this. I can consult each of my advisors at every move. I can ask them follow-up questions.

If I only have 20 mins, I need to be more selective. Maybe I only listen to my advisors during critical moves, and I evaluate their arguments more quickly. Also, this inevitably affects the kinds of arguments that the advisors give.

Both of these scenarios seem pretty interesting and AI-relevant. My all-things-considered guess would be that the 20 mins version yields high enough quality data (particularly for the parts of the game that are most critical/interesting & where the debate is most lively) that it's worth it to try with shorter time controls.

(Epistemic status: Thought about this for 5 mins; just vibing; very plausibly underestimating how time pressure could make the debates meaningless).

comment by Richard Willis · 2023-12-02T12:05:19.190Z · LW(p) · GW(p)

> It's somewhat worrying that the dishonest advisors seemed to have a much greater advantage than last time, as the gap in chess skill widened. Specifically, the advisors had very little ability to discuss lines beyond one or two moves, and instead had to focus on general strategic ideas - a field in which it was harder for Conor to justify his suggestions relative to those of the other advisors.

This is my belief, and why I do not think AI debate is a good safety technique. Once the ability difference is too great, the 'human' can only follow general principles, which is insufficient for a real-life complicated situation. Both sides can easily make appeals to general rules, but it is the nuances of the position that determine the correct path, which the human cannot distinguish.

comment by Simon Fischer (SimonF) · 2023-11-29T11:15:28.870Z · LW(p) · GW(p)

"6. f6" should be "6. h3".

Replies from: Zane