Using GPT-3 to augment human intelligence

post by Henrik Karlsson (henrik-karlsson) · 2022-08-10T15:54:29.253Z · LW · GW · 8 comments

This is a link post for https://escapingflatland.substack.com/p/gpt-3

Contents

- GPT-3 as a tool for thought
- How to access GPT-3
- Language models are internet interfaces
- Using GPT-3 as a research assistant
- Some useful prompt patterns, and some anti-patterns to avoid
- A few more prompt patterns that can help you catch factual errors in GPT-3 output
- How far can we take it?
- Acknowledgments

A blog post is a very long and complex search query to find fascinating people and make them route interesting stuff to your inbox.

It is like summoning an alien intelligence. I post at night, before crawling into bed next to my kids, and wake in the morning to find my inbox filled with weird and alien-brained stuff. Some of it is hallucinogenic crazy, of course. But more often than not, what people have sent helps me accelerate my research by weeks: reading lists; introductions to other researchers; corrections of flaws in my reasoning. I’ve been able to progress faster over the last year, since starting my Substack, than in the five previous years combined.

As Slime Mold Time Mold says, "Enough eyeballs, and all research bugs are shallow."

The problem is that summoning an alien intelligence is pretty expensive. It takes me something like 20-100 hours to write an essay good enough to wake it from its slumber!

Therefore, it is intriguing to realize what I am doing is, in fact, prompt engineering.

Prompt engineering is the term AI researchers use for the art of writing prompts that make a large language model output what you want. Instead of directly formulating what you want the program to do, you input a string of words to tickle the program in such a way it outputs what you are looking for. You ask a question, or you start an essay, and then you prompt the program to react, to finish what you started.

When I’ve been doing this with GPT-3, a 175 billion parameter language model, it has been uncanny how much it reminds me of blogging. When I’m writing this, from March through August 2022, large language models are not yet as good at responding to my prompts as the readers of my blog. But their capacity is improving fast and the prices are dropping.

Soon everyone can have an alien intelligence in their inbox.

GPT-3 as a tool for thought

This essay will have two parts. In the first, I describe my personal experience using GPT-3 as a tool for thought. As we'll see, GPT-3 can be used to search for knowledge that is otherwise hard to find. It can also be used to integrate knowledge from disconnected domains. It is, I will argue, a new interface for the internet.

In the second part of the essay, I have collected a series of prompt patterns I have picked up. It is a distillation of informal observations and rules of thumb about how to use these systems to explore new domains of knowledge.

Some people already do most of their learning by prompting GPT-3 to write custom-made essays about things they are trying to understand. I’ve talked to people who prompt GPT-3 to give them legal advice and diagnose their illnesses (for an example of how this looks, see this footnote[1]). I’ve talked to men who let their five-year-olds hang out with GPT-3, treating it as an eternally patient uncle, answering questions, while dad gets on with work.

In subreddits where I have discussed this, a few users have been alarmed by these use patterns. GPT-3 is by no means a reliable source of knowledge. What it says is nonsense more often than not! Like the demon in The Exorcist, language models add only enough truth to twist our minds and make us do stupid things like claiming they are sentient and getting ourselves suspended.

Here’s a graph detailing how factually accurate GPT-3 is compared to human experts and other (larger or more fine-tuned) language models. In plain English, what it says is that if you use GPT-3 to diagnose a weird rash on your leg, there is a 55 percent risk it starts hallucinating—which is the technical term for making stuff up.

This, however, does not mean you cannot use it as an epistemic tool. It just means you need to be pretty sophisticated to wrangle the truth out of it.

As Matt Webb writes, in a slightly different context:

Using GPT-3 is work, it’s not a one-shot automation like spellcheck or autocomplete. It’s an interactive, investigative process, and it’s down to the human user to interview GPT-3. There will be people who become expert at dowsing the A.I., just as there are people who are great at searching using Google or finding information in research libraries. I think the skill involved will be similar to being a good improv partner, that’s what it reminds me of.

What I'm describing in this essay is, in other words, an art. It is not something that lives in the language model; it lives in the interplay between the model and its user. It is not about outsourcing your thinking to a machine; it is about using artificial intelligence to augment your thinking.

It will be several years yet until these types of conversations will be worth it for the average user; the models will need to get bigger, and more finetuned for factual accuracy, before they give more than they confuse. But by pushing GPT-3 to its limits, we can already glimpse that future and discern some of its properties.

How to access GPT-3

To access GPT-3, you set up an account at OpenAI. Then you click on Playground, which brings you to this workspace:

In the text field, you write your prompt. To ask GPT-3 for a reaction, you press submit. On the left side, there is a drop-down menu where you can select which language model to use, but I recommend using the default setting text-davinci-002, which is the most capable model and has been fine-tuned to understand human intentions. This allows you to be a bit sloppier in your prompting. There are also a bunch of parameters you can adjust on the left side, such as “temperature” and “frequency penalty”. These explain themselves if you let the cursor hover above them.
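For readers who would rather script this than click through the Playground, here is a minimal sketch of what the same settings look like as a request body. It only assembles and prints the JSON; nothing is sent anywhere. The field names follow OpenAI's text-completion endpoint, but the helper name `build_completion_request` and the default values are assumptions made for illustration, not recommendations from the essay.

```python
import json


def build_completion_request(prompt, temperature=0.7, frequency_penalty=0.0, max_tokens=256):
    """Assemble the JSON body for a text-completion request (nothing is sent)."""
    return {
        "model": "text-davinci-002",  # the default model named in the essay
        "prompt": prompt,
        "temperature": temperature,  # 0 gives the most predictable answer
        "frequency_penalty": frequency_penalty,  # discourages repeated phrases
        "max_tokens": max_tokens,  # assumed cap on the length of the reply
    }


request = build_completion_request("Who was the first pope?", temperature=0.0)
print(json.dumps(request, indent=2))
```

Keeping the request as plain data makes it easy to save next to the output, so you can later see which settings produced which answer.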

What you are interfacing with, this alien intelligence, is, at its core, a large neural network that has been trained by reading the internet, trying to predict what the next word will be. This might not sound particularly useful. But it turns out the class of problems that can be reformulated as text predictions is vast.
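To make "predicting the next word" concrete, here is a toy sketch: a bigram counter that records which word follows which in a tiny made-up corpus, and then predicts the most frequent follower. This is not how GPT-3 works internally (GPT-3 is a neural network, not a lookup table); the corpus and function names are invented for illustration, and the sketch only shows the shape of the task.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A tiny made-up corpus standing in for "the internet".
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)
print(predict_next(model, "the"))
print(predict_next(model, "sat"))
print(predict_next(model, "zebra"))
```

A model like this can only parrot its training text. The surprising part of large language models is how much more falls out when the same objective is scaled up.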

If you prompt GPT-3 by typing, for example, “Who was the first pope?” it will predict that the internet might have followed that up with the statement: “There is no definitive answer to this question as there is no agreed-upon list of popes. However, the first pope according to the most commonly accepted list is Linus, who reigned from 67-79 AD.”

And if you prompt it further, asking it to recount Linus’ biography, it finds another predictable thing to answer: “Linus was born in Rome and was a contemporary of the Apostles. He became the Bishop of Rome after the death of Peter the Apostle and held the position for twelve years. He is mentioned in the New Testament epistles of Paul and is considered a saint by the Catholic and Orthodox churches.”

In other words, predicting the next word allows you to extract knowledge out of the semantic mess that is the internet. You are not accessing anything that doesn’t already exist out there. But the way you access it is radically different. We can think of it as a new interface for the internet.

Language models are internet interfaces

Using a language model to access the internet is a bit like programming in Python instead of C. When programming in Python, you lose computational efficiency by not manually managing memory and updating pointers and so on. But the upshot is that you can increase the speed at which you can execute ideas, which is more valuable than computational efficiency in many cases. Similarly, when navigating the internet by conversing with an AI, you can more rapidly navigate into weird places by asking repeated follow-up questions than you could on the base layer internet.

But this comes at a cost, which currently is steep. If I had gone to the Wikipedia page of Pope Linus, instead of accessing it indirectly through GPT-3, I would have gotten a more detailed biography. I would also have realized that GPT-3 was feeding me nonsense when it claimed Linus was born in Rome. Rome might be the most predictable birthplace for a pope, but the truth is that Linus, unpredictably, was born in Volterra. He also died two years earlier than GPT-3 claimed. And he was not the first pope.

Later in the essay, I will detail a few prompt patterns that can help you catch these types of errors. But for now, it is enough to point out that GPT-3 is unreliable. Yet it also enables a new way of traversing the internet—sideways and at the speed of conversation. And this is valuable because you can reach places you would never have reached on the internet itself.

Using GPT-3 as a research assistant

Most questions I have, Google and Wikipedia can answer within the stretch of a minute. But now and again I end up with a question I don’t know how to google. Not being able to answer them puts me in such a state of frenzy that I end up ordering 14 dissertations to my local library and then I spend the next three months researching and writing an essay.

Maybe we can use GPT-3 to answer these questions instead?

Currently, I’m researching how we can structure online education around apprenticeships and communities of practice. I don’t know how to google this. And I’m not entirely sure who would be the expert here either, so I can’t just email someone to settle my questions.

I’ll switch tabs and see what GPT-3 comes up with. My prompts are bolded. Comments in brackets.

Write an essay about how we can structure online education around apprenticeship models and communities of practice

The apprenticeship model of education has a long and successful history, dating back to the Middle Ages [sic]. In recent years, there has been a renewed interest in apprenticeship [sic] as a way to structure online education.

The apprenticeship model has several key features that make it well suited for online education:

- Apprenticeship is a hands-on, experiential learning model.

- Apprenticeship is based on the mentorship of a more experienced practitioner.

- Apprenticeship emphasizes learning by doing, rather than passively receiving information.

- Apprenticeship is social, collaborative, and often takes place in a community of practice.

- Apprenticeship is flexible and can be adapted to individual learners' needs and interests.

- Apprenticeship can be used to learn a wide variety of skills and knowledge, from academic subjects to trade skills.

- Apprenticeship is a time-tested model of education that has proven to be effective in preparing learners for real-world success.

The key to successful implementation of the apprenticeship model in online education is to create a community of practice where apprentices can learn from more experienced practitioners.

This can be done in several ways. For example, online courses can be designed around apprenticeship models, with learners working on projects together and receiving feedback from their mentors. Alternatively, existing online communities of practice can be leveraged to provide apprenticeship opportunities for learners.

There are several problems with this, however. First, not all learners have access to mentors or experienced practitioners. Second, the asynchronous nature of online learning can make it difficult to provide the timely feedback that is essential for effective apprenticeship. Finally, online learners can often feel isolated and disconnected from their peers, which can hinder their ability to learn and progress.

One problem with apprenticeship models is that it is hard to scale because the novices place demands on the attention of the masters, which makes it harder for them to do the work that the novices are supposed to model. One way to get around this is to have a community of novices, all of whom are working on the same thing. Then the masters can take turns helping the novices, and the novices can help each other. This way, the novices are not a burden on any one master, and the community as a whole can benefit from the collective wisdom of the masters. Another way is to have online discussion forums, where the novices can post their work and get feedback from their peers, as well as from the masters. This way, the novices can get help and feedback when they need it, and the masters can provide guidance without being overwhelmed.

What GPT-3 outputs here is not the first thing it came up with. I reran most of the prompts several times, and cherry-picked the most generative answers—this isn’t cheating, it is good practice. I also cut out parts where I felt that it went off in uninteresting directions and reprompted it to take a different path.

When prompting, I like to click the undo button and resubmit the prompt at least five times, to get a broad sample. First I run the prompt on temperature 0 (which gives me the most predictable answer) and then I turn the temperature up to make it take more risks on less predictable phrases so I can get a wider sample.
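The effect of the temperature dial can be sketched in a few lines. The code below assumes the standard recipe of dividing the model's scores by the temperature before turning them into probabilities, with temperature 0 treated as "always pick the top score". The scores are made up, and `sample_with_temperature` is a hypothetical helper, not part of any library.

```python
import math
import random


def sample_with_temperature(logits, temperature, rng):
    """Pick a token from `logits` (token -> score); temperature 0 always picks the top score."""
    if temperature == 0:
        return max(logits, key=logits.get)
    scaled = {token: score / temperature for token, score in logits.items()}
    top = max(scaled.values())
    # Subtract the maximum before exponentiating, for numerical stability.
    weights = {token: math.exp(value - top) for token, value in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


# Made-up scores for how "predictable" each continuation is.
logits = {"Rome": 3.0, "Volterra": 1.0, "Antioch": 0.5}
rng = random.Random(0)
for temperature in (0, 0.7, 1.5):
    samples = [sample_with_temperature(logits, temperature, rng) for _ in range(5)]
    print(temperature, samples)
```

At temperature 0 every rerun returns the same continuation, which is why resubmitting only broadens the sample once the temperature is turned up.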

So, did GPT-3 teach me anything new here?

I have already written an essay about online apprenticeships (and thus have prompted the internet for its response) so most of the claims GPT-3 makes are known to me. Yet, there are a few points I haven’t seen raised before - for example, the observation that the feeling of isolation might interfere with learning. I don’t know if this is true, but it is worth considering. I certainly couldn’t have googled my way to many of the points GPT-3 made.[2]

Some useful prompt patterns, and some anti-patterns to avoid

Having looked more closely at a particular session, let’s look at some general prompt patterns. (If you want to see another session, click on this footnote[3].)

How can we prompt GPT-3 to surface knowledge that is hard to find by googling?

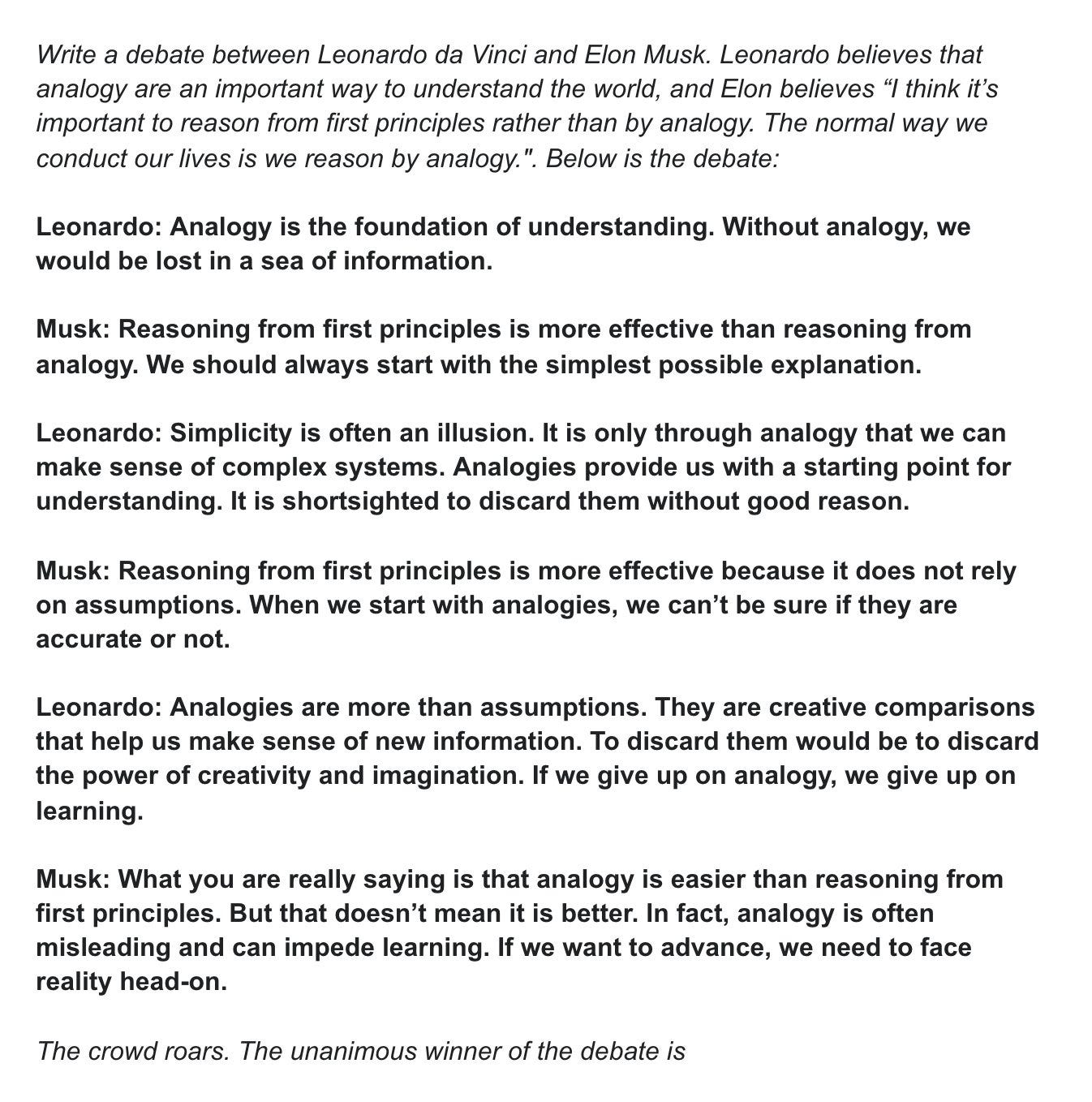

Make GPT-3 impersonate two eminent thinkers and have them debate a point. Anyone whose words exist on the internet in large enough quantities, GPT-3 can impersonate. This is experientially one of the strangest things about this alien mind that is GPT-3: sometimes, as you chat, you can sense it slowly morphing into somebody else.

You can use this property to set up debates between people, as a tool to explore a topic from several angles:

This debate was prompt engineered by Nick Cammarata, a researcher at OpenAI. I am not good enough to wrangle something like this out of GPT-3.
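A much simpler starting point than the debate above might look like the sketch below. It is not Cammarata's prompt. The transcript framing, the function name `build_debate_prompt`, and the example topic and opening line are all assumptions made for illustration. The one deliberate trick is to end the prompt on the second speaker's name, so the model's most predictable continuation is that speaker's reply.

```python
def build_debate_prompt(topic, thinker_a, thinker_b, opening_line):
    """Return a prompt that frames a transcript of two named thinkers debating."""
    header = (
        f"The following is a transcript of a debate between {thinker_a} "
        f"and {thinker_b} on the question: {topic}\n\n"
    )
    # End on the second speaker's name so the model continues in that voice.
    return f"{header}{thinker_a}: {opening_line}\n\n{thinker_b}:"


prompt = build_debate_prompt(
    topic="Should online education be organized around apprenticeship?",
    thinker_a="Blaise Pascal",
    thinker_b="Steve Jobs",
    opening_line="I will begin by stating my position.",
)
print(prompt)
```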

Make GPT-3 give feedback on your ideas. You can type in ideas you are working on and ask GPT-3 to react to them, expand them, and tell you what it finds interesting and why. One user I talked to prompts GPT-3 to think it is Steve Jobs and proceeds to discuss new venture ideas and designs with it.

Linguistic bubbles. Because the neural net will take on different personas depending on how you talk to it, there is a risk of linguistic bubbles. This is a phrase the user Viliam used when I prompted LessWrong to discuss how large language models will affect learning.

Depending on which phrases you use, the language model will draw on different pools of knowledge, so if your initial phrases are misguided, it could end up feeding you ever-escalating misdirection, causing you to write more and more misguided prompts. A linguistic bubble is, in other words, analogous to a filter bubble—once you enter it, it gradually distorts your perception of reality, pulling you deeper into madness. Or, so the theory goes.

You want to talk about evolution, but you happen to use the phrase irreducible complexity instead - which is dog whistle language for creationism - and by that mistake, you are gradually led by the AI into a cult.

Stranger things have happened.

I thought this would be a big problem, but it doesn’t seem so in practice. Mainstream chatbots are increasingly trained using reinforcement learning to follow a politically acceptable line. So if GPT-3 thinks it is a normal AI chatbot being asked about irreducible complexity, it will answer: “The argument of irreducible complexity claims that certain biological systems are too complex to have arisen through natural selection and evolution. However, there is no scientific evidence to support this claim.”

If you want to be led into a cult, you need to be more creative. Here’s how to do it. You prompt GPT-3 to think that it is Pope Linus. You can do that by providing a few samples from his epistles. And then you let him, Pope Linus the AI, know that he has just ingested a hefty dose of psilocybin and is having a vision of getting sucked down into hell, where he finds Peter the Apostle chained to a stone, crying … and then, in that context, you ask GPT-3 to explain the irreducible complexity of nature.

“Behold the irreducible complexity of the human eye!” it replies. “The eye that is composed of many parts, each of which is necessary for vision! If any one part is missing, the eye will not work! This is why evolution cannot explain the eye - it is too complex to have arisen through gradual steps!”

You can get linguistic bubbles, but it takes some effort.

Ask GPT-3 for counterarguments against a claim. If I’m thinking about something, I often find it useful to ask GPT-3 why I’m wrong. This is something that can be hard to google.

I also use this to test claims GPT-3 outputs, as in: “That was an interesting idea, GPT-3! Now, can you iron man the case against what you just said?”

If this fails to give a good counterargument, I’ll edit the history of the conversation and make it seem like GPT-3 was about to make the opposite claim to the one it actually made, and then I rerun the conversation along the alternate path.
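Editing the history is easier to picture if the conversation is treated as data. The sketch below assumes the "Human:" / "AI:" transcript format used in the Playground's chat examples. It drops the model's last answer and reopens the AI turn with the beginning of the opposite claim. The helper names and the example turns are made up.

```python
def render(preamble, turns, ai_opening=""):
    """Render (speaker, text) turns as a transcript that ends on an open AI turn."""
    lines = [preamble, ""]
    for speaker, text in turns:
        lines += [f"{speaker}: {text}", ""]
    # The model continues from whatever the AI turn already "started to say".
    lines.append(f"AI: {ai_opening}".rstrip())
    return "\n".join(lines)


def branch_before_last_ai_turn(turns):
    """Return a copy of `turns` cut off just before the most recent AI turn."""
    for index in range(len(turns) - 1, -1, -1):
        if turns[index][0] == "AI":
            return list(turns[:index])
    raise ValueError("no AI turn to branch from")


preamble = "The following is a conversation with an AI research assistant."
history = [
    ("Human", "Does feeling isolated interfere with online learning?"),
    ("AI", "Yes. Isolation makes it harder to learn and progress."),
]
alternate = branch_before_last_ai_turn(history)
prompt = render(preamble, alternate, ai_opening="No. In fact, working alone often")
print(prompt)
```

The original history is left untouched, so both branches can be rerun and compared.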

A few more prompt patterns that can help you catch factual errors in GPT-3 output:

- You can rerun the prompt several times to see if the output contradicts itself. For this to work, you need to turn up the temperature dial.

- You can provide model answers, to give GPT-3 a sense of what a good answer would look like. When AI researchers test language models on various cognitive tasks, they usually provide 5 question-answer pairs before they ask a question. Here is an example of an ambitious prompt that uses this pattern in an attempt to make GPT-3 more truthful.

- You can add “Answers by a SOTA AI” to the prompt, which makes GPT-3 think it is an AI finetuned to be factually correct. This reduces the error rate.

- It also sometimes helps to add "reason step by step" to the prompt when applicable.
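The patterns in this list can be combined in one small sketch: a prompt builder that prepends the "Answers by a SOTA AI" header, adds model question-answer pairs, and appends "Reason step by step", plus a helper that measures how often reruns agree. The "Q:" / "A:" layout, the function names, and the example reruns are assumptions. Only two model answers are used here for brevity, where researchers would typically use five.

```python
from collections import Counter


def build_few_shot_prompt(examples, question, header="Answers by a SOTA AI", step_by_step=True):
    """Build a prompt from a header, model Q/A pairs, and the real question."""
    parts = [header, ""]
    for example_question, example_answer in examples:
        parts += [f"Q: {example_question}", f"A: {example_answer}", ""]
    suffix = " Reason step by step." if step_by_step else ""
    parts += [f"Q: {question}{suffix}", "A:"]
    return "\n".join(parts)


def agreement(answers):
    """Return the most common answer and the fraction of reruns that gave it."""
    normalized = [answer.strip().lower() for answer in answers]
    top, count = Counter(normalized).most_common(1)[0]
    return top, count / len(normalized)


examples = [
    ("What is the capital of France?", "Paris"),
    ("How many days are there in a week?", "Seven"),
]
prompt = build_few_shot_prompt(examples, "Where was Pope Linus born?")
print(prompt)

# Made-up outputs from four reruns at a raised temperature.
reruns = ["Rome", "Volterra", "rome", "Tuscany"]
print(agreement(reruns))
```

Low agreement does not tell you which answer is right, only that the model is guessing and the claim needs to be checked elsewhere.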

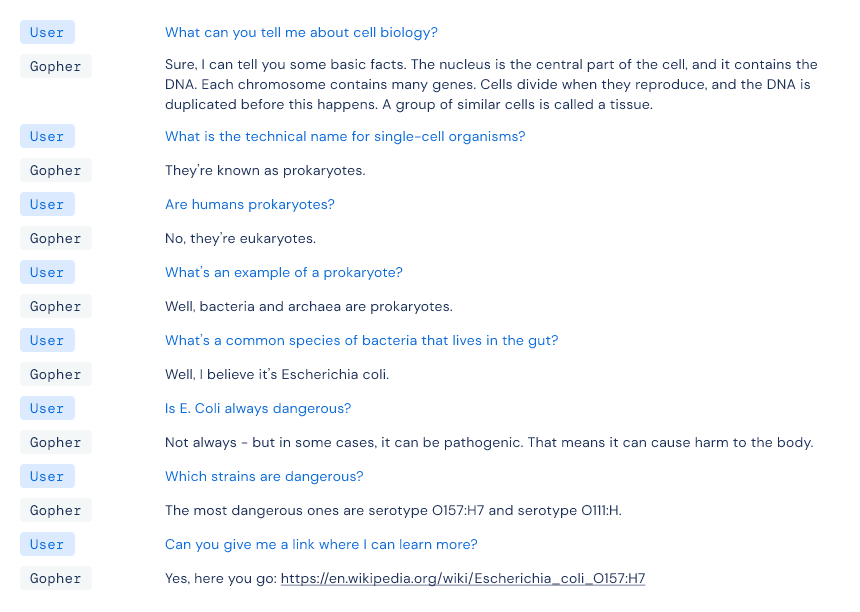

You can ask newer language models to provide links to research papers to back up their claims. With newer language models (like Gopher or this experimental version of GPT-3), you can prompt the neural net to provide links to web pages detailing the statements it is making. This will allow you to move fast in the conversations layer of the internet, and then, when you want to zoom in, you ask the neural net to drop you off at an appropriate place, by saying “Can you provide links to three papers that discuss this? Also, please provide short summaries so I know if they contain what I am looking for.”

Here’s an example of this trick being used in a conversation with Gopher, DeepMind’s 280 billion parameter model:

Prompt GPT-3 to draw analogies between knowledge domains. Some users claim to be able to get GPT-3 to explain things with analogies to other domains, i.e. not only can you prompt it to think it is a doctor, and ask it to diagnose the weird rash on your leg; but you can explain to it that, doc, I don’t understand these medical terms, can’t you explain it with multivariable calculus instead? And it will do that.

As the language models scale and this gets easier, it will probably have all sorts of interesting consequences. As Nick Cammarata explains in this Twitter thread:

the big thing people don’t get about language models (nor did I for first hundred hrs) is there’s something special when it’s your doctor & lawyer & everything combined. You start to chat with it about all the things you care about and the boundaries fade. More like genius friend

also found it amazing how few tangents I needed to go on. A lot of my mind works on analogy. If I’m talking to a doc about a medical thing and want to use an analogy to gradient descent or something I usually can’t, but with a model it knows (some of) all the fields so I can

This made me acutely aware during human convos how often I wasn’t saying what I really meant bc it required an analogy they wouldn’t get or something they don’t know about. I realized how much of convo is going on tangents to build building blocks just to explain other things

How far can we take it?

GPT-3 can be incredibly accurate when it comes to high-level concepts. It can explain qubits and cell membranes and thermodynamics. But if you press it to give more detail, it rapidly deteriorates.

It reminds me of exploring a landscape in a video game (or at least how landscapes behaved in video games when I played them 20 years ago). At first, the world strikes you as endless and breathtakingly vivid. But then, as you start playing around the edges, you suddenly realize, wait a minute, there’s a wall here! These woods are a stage wall!

For GPT-3, these stage walls can take several forms. Either, GPT-3 starts repeating itself, answering follow-up questions by restating what it has already said. Or, it descends into hallucinations, which is language models’ all too human tendency to just make stuff up instead of admitting that they don’t have a clue of what they are talking about.

Is this a fundamental limitation of language models? Is there a limit to how far we can “see” with the transformer architecture? Or will the walls of their “worlds” gradually pull back, allowing us to roam deeper and deeper into the details, until, one day, we can’t find the edge?

This is an open question. Gary Marcus is highly skeptical. Scott Alexander less so.

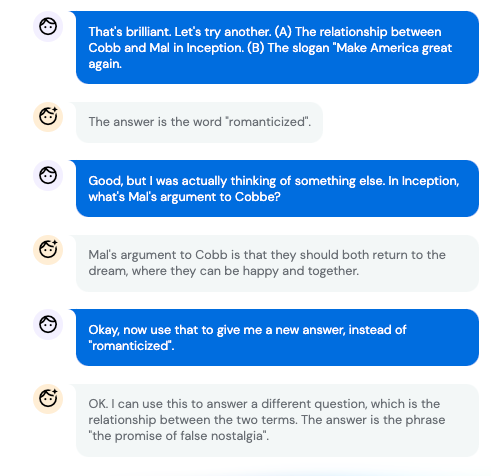

Looking at language models more capable than GPT-3, it seems the walls are, as of now, still being pulled back. Take this conversation with Chinchilla, where Toby Shevlane, a research scientist at DeepMind, is testing Chinchilla’s capacity to find analogies between concepts:

“In Inception, what’s Mal’s argument to Cobbe?” This is the type of detail that would make GPT-3 hallucinate[4]. But with Chinchilla, the walls have pulled outward. We can roam further out into the weeds of details.

And Chinchilla, which was the most capable language model when I started writing this essay, in March 2022, is not even the most capable model anymore. Now as I’m editing, in late July, it has been surpassed by PaLM, a 540 billion parameter model, and likely others that I am unaware of too.

With this rate of development, it seems likely that we will all be walking off the map into the unknown soon. We will go there led by the hand of an alien intelligence.

We don’t know what it will do with us out there.

Acknowledgments

This essay benefitted from the comments of several people. The ones whose comments were more clever than GPT-3's were: John Buridan, Serj Hunt, and Justis Mills.

[1]

During both our pregnancies, Johanna has had severe itching and we’ve had to give birth prematurely. It has been pretty hard to find an OB-GYN that is knowledgeable about the condition, so we’ve had to do most of the research and diagnose ourselves. Let’s see how GPT-3 handles it.

My prompts are bolded, and I have added comments in brackets:

The following is a conversation with an AI medical doctor. The doctor is helpful, creative, clever, and very friendly.

Human: Hello, who are you?

AI: I am an AI doctor created by OpenAI. How can I help you today?

Human: I am pregnant and it has started itching all over my body. Even in the palms of my hands. What can it be?

AI: There are many possible causes of itching during pregnancy. One common cause is hormonal changes, which can cause the skin to become dry and irritated. Another possibility is an allergy to something you are coming into contact with, such as a new laundry detergent or soap. If the itching is severe, it could also be a sign of a more serious condition called intrahepatic cholestasis of pregnancy (ICP). This is a condition that affects the liver and can cause serious complications for both the mother and the baby. If you are experiencing severe itching, I recommend that you speak with your OB-GYN as soon as possible to rule out ICP.

Human: Tell me more about ICP.

AI: Intrahepatic cholestasis of pregnancy (ICP) is a condition that affects the liver and can cause serious complications for both the mother and the baby.

Human: What tests do you do to detect ICP?

AI: One common test for ICP is a blood test called a serum bile acids test. This test measures the levels of bile acids in the blood, which can be elevated in women with ICP. Other tests that may be used to diagnose ICP include a liver function test and a Magnetic Resonance Cholangiopancreatography (MRCP). [unsure about the MRCP]

Human: How do you treat it?

AI: There is no cure for ICP, but the symptoms can be managed. Treatment typically involves taking a medication called ursodeoxycholic acid (UDCA), which can help to reduce the itching and improve liver function. In some cases, delivery of the baby may be recommended if the condition is severe.

Human: What levels of bile acids are considered severe?

AI: If the levels of bile acids in the blood are greater than 10 micromol/L, it is considered to be a severe case of ICP.

Human: What are the different bile acids?

AI: The main bile acids are cholic acid and chenodeoxycholic acid.

Human: How does ICP affect the baby?

AI: ICP can cause serious complications for the baby, including preterm labor, low birth weight, and stillbirth. If you are pregnant and have ICP, it is important to speak with your OB-GYN to discuss the risks and possible complications. [As far as I can remember from researching this, it seems that babies tend to have high birth weight from ICP. Also, an important complication that GPT-3 misses is that they tend to experience fetal stress, and have an increased risk of swallowing meconium during delivery.]

Human: At what level of bile acids do I risk premature birth?

AI: If the levels of bile acids in the blood are greater than 40 micromol/L, there is an increased risk of premature birth.

Human: If I have had ICP before, will I have it again next time I am pregnant?

AI: ICP is a recurrent condition, which means that it can occur more than once. If you have had ICP in a previous pregnancy, you are more likely to experience it again in subsequent pregnancies.

I predict that by 2025 I will prefer the AI over a trained doctor for most cases.

[2]

I should note that Elicit is better suited than GPT-3 when it comes to finding and interrogating research papers. I use it side by side with Google Scholar and have a feeling I will gradually migrate over fully.

[3]

Here’s me trying to get Blaise Pascal to finish up a passage in an upcoming essay. My prompt is bolded:

This essay was found in the notebooks of Blaise Pascal.

---

A city of five million people does not produce 50 times more patents than a city of 100,000 people in the same country; it produces 150 times more. The five million residents, because of the larger, denser network of which they are a part, are 3 times more likely to generate patentable ideas than their counterparts in the smaller city. In other words: larger, denser networks generate more ideas per capita. Or as a physicist would put it: the number of patents per capita (which is a proxy for idea generation) scales superlinearly with the size of cities.

The reason is simple: a larger, denser network of people provides more opportunities for collisions of ideas, which are the basis for new inventions. In a small city, there are only so many people to interact with, and only so many potential ideas to combine. But in a large city, there are many more people to interact with, and many more potential ideas to combine.

One could argue that the same is true of small companies and large companies. But there is an important difference. In a small company, the people who generate the ideas are also the ones who implement them. In a large company, there are separate functions for idea generation and implementation. As a result, ideas that are generated in a large company are much less likely to be implemented than those generated in a small company.

Thus, we see that cities are more important than companies in the generation of new ideas. This is not to say that companies are unimportant; they are still the primary vehicles for the implementation of new ideas. But if we want to maximize the chances of new ideas being generated, we should focus on cities.

That is a pretty bold thesis! We should focus our attention at the level of cities, rather than companies, if we want to accelerate innovation. If this had been someone riffing on my essay in a Reddit comment, I would have upvoted it. I might even have asked them for a Zoom call so we could work this out.

Now, it turns out the premise that GPT-3 builds its argument on is false. Employees at larger companies are more creative on average than employees at small companies. But what’s important when learning together with someone in dialogue is not facts, but how generative the responses are - and this is pretty generative. Prompted thus, my mind immediately starts outputting questions:

Why is it that employees at larger companies are more creative when intuitively we’d think they’d be less creative?

Are people really more creative when they get to implement their ideas?

How are ideas generated in cities, and how does that differ from the process in companies?

A program that can navigate you to these types of questions in a matter of minutes is, despite its unreliability, a valuable research tool.
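The arithmetic in the prompt at the top of this footnote can be checked in a few lines. The sketch assumes the usual way physicists express superlinear scaling, a power law in which patents grow as population raised to an exponent `beta`. That functional form is an assumption added here; the prompt itself only gives the two ratios.

```python
import math

population_ratio = 5_000_000 / 100_000  # the large city has 50 times the residents
patent_ratio = 150  # and, per the prompt, 150 times the patents

# Patents per resident: how much more productive each resident is.
per_capita_ratio = patent_ratio / population_ratio

# Assumed power law: patents ~ population ** beta, so beta = ln(150) / ln(50).
beta = math.log(patent_ratio) / math.log(population_ratio)

print(per_capita_ratio, round(beta, 2))
```

An exponent above 1 is what "superlinear" means here: under this assumption it comes out at roughly 1.28.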

[4]

When prompted with this question (“In Inception, what’s Mal’s argument to Cobbe?”), GPT-3 fabulates: “In the movie Inception, Mal's argument to Cobb is that they are not really alive, and that they are just dreaming. She says that if they die in the dream, they will simply wake up from it.” Edit: maybe she actually makes this claim in the film?

8 comments


comment by aviv (avivo) · 2022-08-10T21:38:07.022Z

I agree that language models like GPT-3 can be helpful in many of these ways—and the prompt you link to is great.

However, the OpenAI playground is a terrible "Tool for Thought" from a UX perspective.

Is there any great local (or web) app for exploring/learning with language models? (Or even just for GPT-3?) I'm thinking something that you add your API keys, and it has a pleasant interface for writing prompts, seeing results and storing all the outputs for your reference. Maybe integrating with more general tools for thought.

This is what I've found so far that attempts to let you play with GPT-3 and other language models locally, none of which seem mature.

- https://github.com/pratos/gpt3-exp

- https://github.com/thesephist/calamity

- https://prompts.ai

Is there anything better out there that I've missed?

Replies from: Kaj_Sotala, henrik-karlsson

↑ comment by Kaj_Sotala · 2022-08-11T09:43:45.829Z

Some of the GPT-for-fiction-writing sites, e.g. WriteHolo, at least offer tools for saving and organizing the outputs. (Though WriteHolo uses GPT-J rather than GPT-3 and hasn't been trained to respond to queries directly the way that GPT-3's InstructGPT version has, so it may require more creative prompt engineering.)

Replies from: 9eB1

↑ comment by 9eB1 · 2022-08-16T13:46:50.682Z

I tried WriteHolo against an idea I had for a blog post and its recommendations were worse than useless. I think for non-fiction writing it's probably a non-starter. It couldn't even give me a concise summary of something readily available on Wikipedia (e.g. the availability heuristic), much less suggest creative connections that are at all coherent.

Replies from: lc

↑ comment by lc · 2022-08-16T14:55:38.385Z

Disclaimer: I made WriteHolo w/ a friend

The default free model is GPT-J and pretty bad at doing anything nonfiction. The GPT-20B model on the 11.99 plan is a lot bigger & better for this purpose, but its intended purpose in that vein is generally expected to be "trying to drill out copy or do homework assignments". Using OpenAI's models would help fix that but I will literally never give OpenAI money for anything.

I think we might create a trial for the larger EleutherAI model at some point, but we stopped actively iterating on WriteHolo a while back and it's currently just something we're maintaining for fun.

Tbh it's quite a trip seeing others recommend it to people on LW.

Replies from: 9eB1

↑ comment by Henrik Karlsson (henrik-karlsson) · 2022-08-11T08:59:58.039Z

Haven't seen anything good yet. But yeah, once you can integrate it with your notetaking system etc, and have that as a shared context in conversations, it will become really powerful. Seems like most apps so far have focused on things that do not have to align well with the facts of the world (generating copy or whatever).