Believing vs understanding

Post by Adam Zerner (adamzerner) · 2021-07-24 · 2 comments


Every year before the NBA draft, I like to watch film on all of the prospects and predict how good everyone will be. And every year, there end up being a few guys I am way higher on than everyone else. Never fails. In 2020 it was Precious Achiuwa. In 2019 it was Bol Bol. In 2018 it was Lonnie Walker. In 2017 it was Dennis Smith. In 2016 it was Skal Labissiere. And this year, in 2021, it is Ziaire Williams.

I have Ziaire Williams as my 6th overall prospect, whereas the consensus opinion is more in the 15-25 range. If I'm being honest, I think I probably have him too high. There's probably something that I'm not seeing. Or maybe something I'm overvaluing. NBA draft analysis isn't a perfectly efficient market, but it is somewhat efficient, and I trust the wisdom of the crowd more than I trust my own perspective. So if I happened to be in charge of drafting for an NBA team (wouldn't that be fun...), I would basically adopt the beliefs of the crowd.

But at the same time, the beliefs of the crowd don't make sense to me.

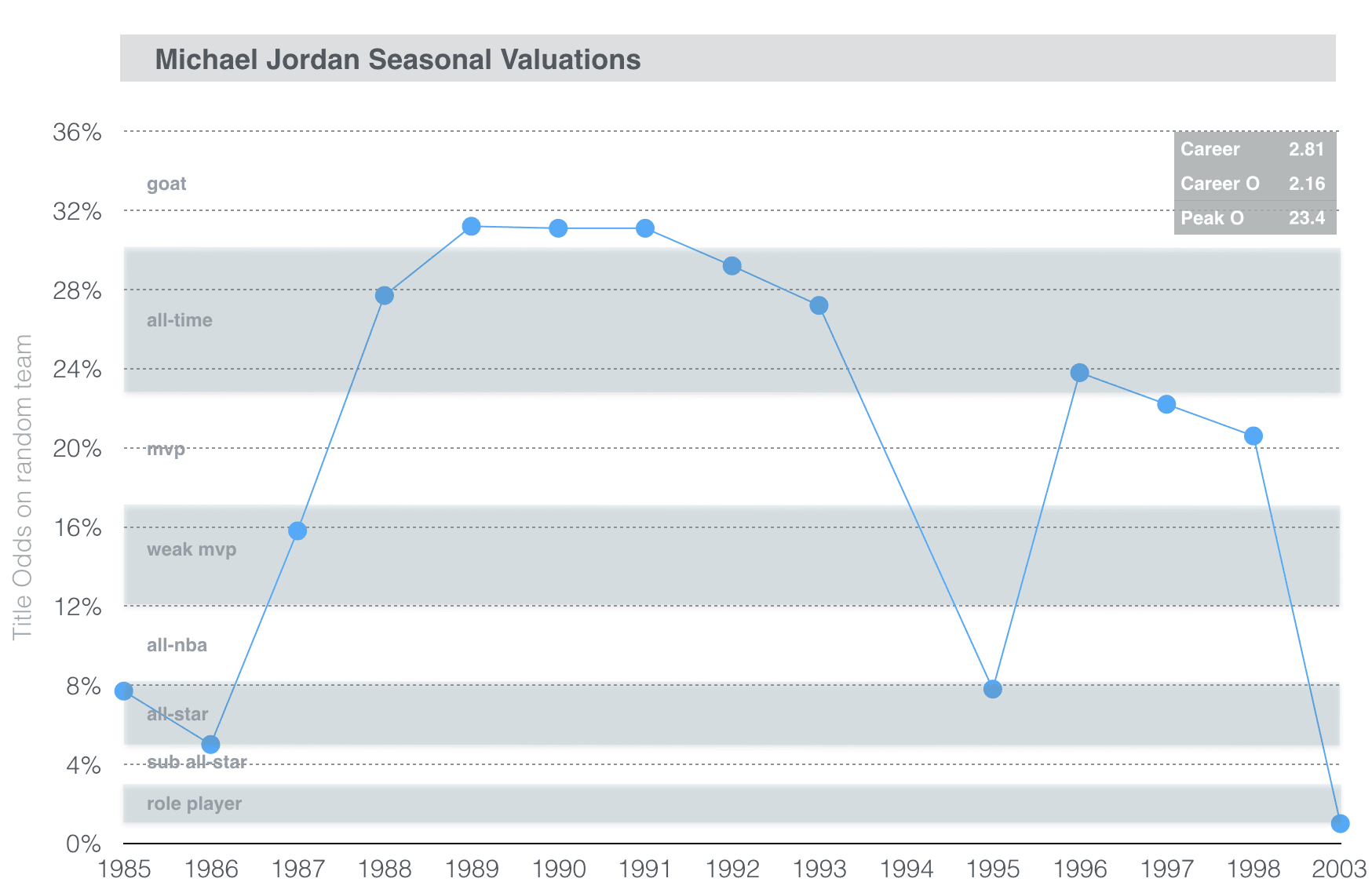

Upside is really important for NBA players. Check this out:

It shows the Championship Odds over Replacement Player (CORP) for Michael Jordan. Role players and sub-All-Stars have a CORP of something like 2-4%, whereas All-NBA and MVP types are more like 10-20%. And I don't even think that fully captures the value these unicorn-type players have. For one thing, I believe it's easier to build a roster around a unicorn. So if you can hit on a unicorn, it's huge.

Ziaire Williams is one of the few guys in this draft who I think has that unicorn potential. He's 6'9 with a 6'11 wingspan: great size for a wing. He's got the shake as a ball handler to break his man down off the dribble and create. His shooting isn't there yet, but his fluidity, FT%, and tough shot-making ability all make me think he can develop into a really good shooter. He doesn't have the ability to get to the rim yet, but his size, explosiveness, fluidity, and shake as a ball handler all make me think that he can totally get there one day. Once he develops physically and becomes a little more savvy, I could see it happening. And as a passer and defender, I think he has the knack and can develop into a moderate plus in both areas. Overall, I think his ceiling is All-NBA, but he also isn't one of those complete boom-or-bust guys. I could see him just developing into a solid contributor.

Anyway, there is a discrepancy between what I believe and what my intuition tells me. If you put me in charge of drafting for an NBA team, I would adopt the belief of the crowd and punt on drafting him this highly. But at the same time, I wouldn't understand why. It wouldn't make sense to me. My intuition would still be telling me that he's a prospect worthy of the 6th overall pick. I would just be trusting the judgement of others.

Let me provide a few more examples.

In programming, there is a difference between static typing and dynamic typing. My intuition says that the benefits of static typing wouldn't actually be that large: neither catching type errors at compile time, nor being able to hover over things in your IDE and see, e.g., function signatures. On the other hand, my intuition says that the extra code you have to write for static typing is "crufty" and makes code take longer to read. So it seems like dynamic typing wins out. However, programmers I trust tell me otherwise. And I personally have very little experience with static typing, so it's hard to put much weight on my own intuition. So if I were in charge of choosing a tech stack for a company (where I expect the codebase to be sufficiently large), I would choose a statically typed language. But again, there is a conflict between what I believe and what I intuit. Or what I understand.

I'm moving in 8 days. I have a decent amount of packing to do. It seems like it's pretty manageable, like I can get it done in a day or two. But that's just what my intuition outputs. As for what I believe, I believe that this intuition is a cognitive illusion. With a visual illusion, my eyes deceive me. With a cognitive illusion, my brain does. In this case, I expect that there is actually something like 2x more packing to do than what I'd intuitively think. Let's hope my willpower is strong enough to act on this belief.

I'm a programmer. At work we have these deadlines for our projects. I guess the sales and marketing people want to be able to talk to customers about new features that are coming up. I don't understand this though. We've been building the app we have for seven years. A new feature is maybe four weeks of work. So it's like an iceberg: the bulk of the work is what we've already done, the part that is underwater, whereas the new feature is the itsy bitsy part of the iceberg that is above water. Under what conditions is that itsy bitsy part above the water ever going to bring a customer from a "no" to a "yes"? It doesn't make sense to me. Then again, I'm not a salesperson. This practice seems common in the world of sales and marketing, so there's probably something that I'm missing.

I run into this situation a lot, where I believe something but don't understand it. And I feel like I always fail to communicate it properly. I end up coming across as if my belief matches my understanding. As if I actually believe that release dates are useless to salespeople.

One failure mode I run into is that if you have a long back-and-forth discussion where you keep arguing for why your understanding makes sense, people will think that your belief matches your understanding. I guess that is logical. I don't blame the other person for making that assumption. I suppose I need to be explicit when my belief doesn't match my understanding.

Another failure mode I run into is, I feel like I don't have the right language to express belief vs understanding, and so I don't attempt to explain it. It feels awkward to describe the difference.

In a perfect world, I'd talk about how beliefs are basically predictions about the world. They are about anticipated experiences. And then I'd talk about mental models. And gears-level models.

I'd also talk about the post "A Sketch of Good Communication", in particular the part about integrating the evidence someone shares with you, updating your model of the world, and then updating your beliefs.

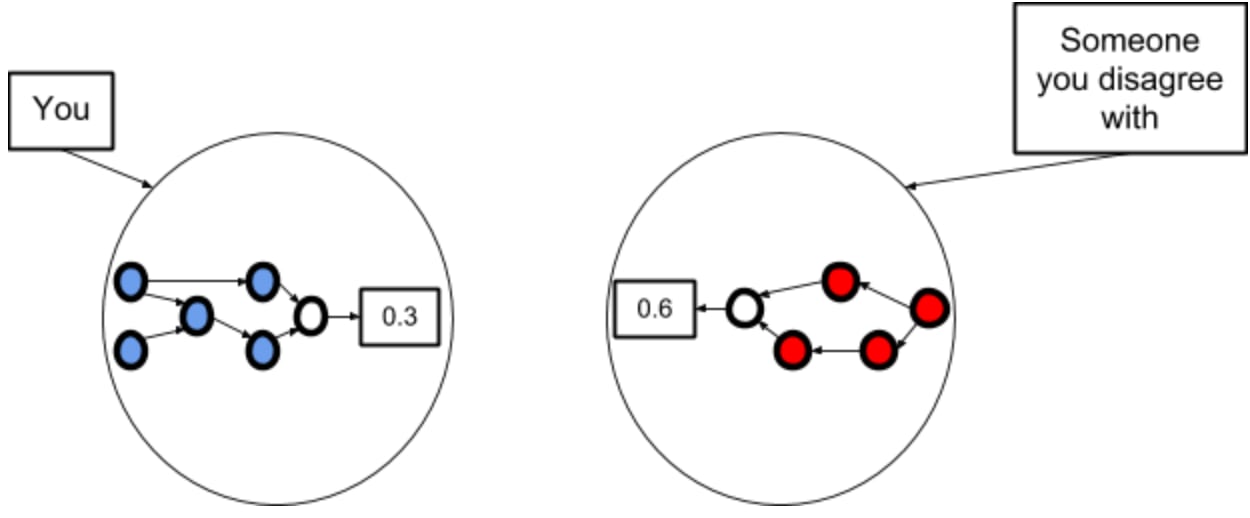

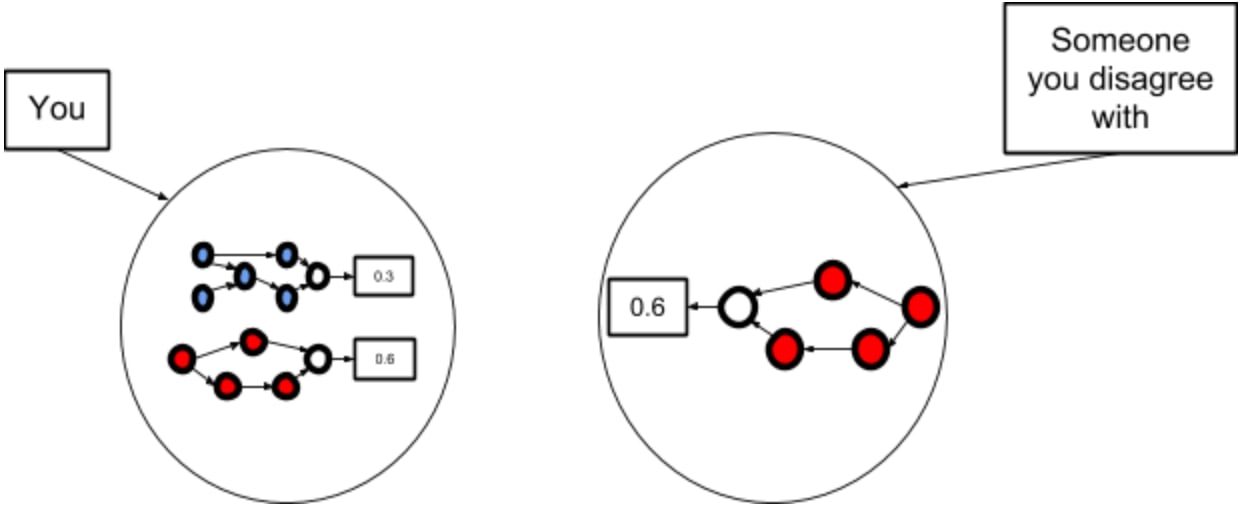

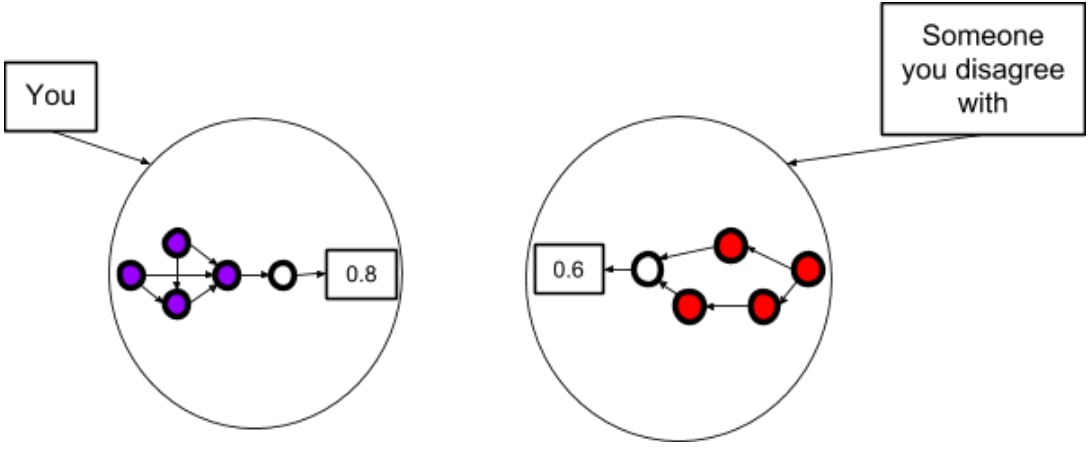

Step 1: You each have a different model that predicts a different probability for a certain event.

Step 2: You talk until you have understood how they see the world.

Step 3: You do some cognitive work to integrate the evidences and ontologies of you and them, and this implies a new probability.

But all of this is impractical to do in most situations, hence the failure mode.

I'm not sure if there is a good solution to this. If there is, I don't think I'm going to figure it out in this blog post. In this blog post my goal is more to make some progress in understanding the problem, and also maybe to have something to link people to when I want to talk about belief vs understanding.

One last point I want to make in this post is about curiosity. When you are forced to make decisions, it often makes sense to adopt the beliefs of others and act on them without understanding them. Furthermore, sometimes it makes sense to move on without even trying to understand the thing. For example, I trust that it makes sense to get vaccinated for COVID, and I don't think it's particularly important for me to understand why the pros outweigh the cons. But I fear that this type of thinking can be a slippery slope.

It can be tempting to keep thinking to yourself:

I don't actually need to understand this.

I don't actually need to understand this.

I don't actually need to understand this.

And maybe that's true. I feel like we live in a world where adopting the beliefs of experts you trust and/or the crowd can get you quite far. But there are also benefits to, y'know, actually understanding stuff.

I'm not sure how to articulate why I think this. It just seems like there are a lot of more mundane situations where it would be impractical to try to adopt the beliefs of others. You kinda have to think about it yourself. And then there is also creativity and innovation. For those things, I believe that building up a good mental model of the world is quite useful.

Here is where the first virtue of rationality comes in: curiosity.

> The first virtue is curiosity. A burning itch to know is higher than a solemn vow to pursue truth. To feel the burning itch of curiosity requires both that you be ignorant, and that you desire to relinquish your ignorance. If in your heart you believe you already know, or if in your heart you do not wish to know, then your questioning will be purposeless and your skills without direction. Curiosity seeks to annihilate itself; there is no curiosity that does not want an answer. The glory of glorious mystery is to be solved, after which it ceases to be mystery. Be wary of those who speak of being open-minded and modestly confess their ignorance. There is a time to confess your ignorance and a time to relinquish your ignorance.

I suspect that curiosity is necessary. That in order to develop strong mental models, it's not enough to just be motivated for more practical and instrumental reasons, as a means to some end. You have to enjoy the process of building those models. When your models are messy and tangled up, it has to bother you. Otherwise, I don't think you would spend enough time developing an understanding of how stuff works.

When I think of the word "curiosity", I picture a toddler crawling in some nursery school playing with blocks.

And when I think of serious, adult virtues, what comes to mind is an important business executive at their desk late at night, thinking hard about how to navigate some business partnership. Someone who is applying a lot of willpower and effort.

And I don't think I'm alone in having these stereotypes. That is a problem. I fear that these stereotypes make us prone to dismissing curiosity as more of a "nice to have" virtue.

How can we fix this? Well, maybe by developing a stronger gears-level understanding of why curiosity is so important. How's that for a chicken-and-egg problem?

2 comments


comment by [deleted] · 2021-07-24

> Under what conditions is that itsy bitsy part above the water ever going to bring a customer from a "no" to a "yes"?

This has happened to me several times. For example, I use a specific markdown editor because it has a single killer feature (single-pane editing) that none of the others do. Or a few days ago, I looked into a comparison of vector editing software because the one I was using didn't have a specific feature (user-friendly perspective transforms). I've picked apps over something as simple as a dark theme, or being able to store my notes as a tree instead of a flat list. Sometimes, a single feature can be exactly what someone wants.

Reply by Adam Zerner (adamzerner) · 2021-07-24

Yeah that does make sense. I guess it depends on the feature in question and how close the competition is.