A Sketch of Good Communication

post by Ben Pace (Benito) · 2018-03-31T22:48:59.652Z · LW · GW · 36 comments

"Often I compare my own Fermi estimates with those of other people, and that’s sort of cool, but what’s way more interesting is when they share what variables and models they used to get to the estimate."

– Oliver Habryka, at a model building workshop at FHI in 2016

One question that people in the AI x-risk community often ask is

"By what year do you assign a 50% probability of human-level AGI?"

We go back and forth with statements like "Well, I think you're not updating enough on AlphaGo Zero." "But did you know that person X has 50% in 30 years? You should weigh that heavily in your calculations."

However, 'timelines' is not the interesting question. The interesting parts are in the causal models behind the estimates. Some possibilities:

- Do you have a story about how the brain in fact implements back-propagation, and thus whether current ML techniques have all the key insights?

- Do you have a story about the reference class of human brains and monkey brains and evolution, that gives a forecast for how hard intelligence is and as such whether it’s achievable this century?

- Do you have a story about the amount of resources flowing into the problem, that uses factors like 'Number of PhDs in ML handed out each year' and 'Amount of GPU available to the average PhD'?

Timelines is an area where many people discuss one variable all the time, where in fact the interesting disagreement is much deeper. Regardless of whether our 50% dates are close, when you and I have different models we will often recommend contradictory strategies for reducing x-risk.

For example, Eliezer Yudkowsky, Robin Hanson, and Nick Bostrom all have different timelines, but their models tell such different stories about what’s happening in the world that focusing on timelines instead of the broad differences in their overall pictures is a red herring.

(If in fact two very different models converge in many places, this is indeed evidence of them both capturing the same thing - and the more different the two models are, the more likely this factor is ‘truth’. But if two models significantly disagree on strategy and outcome yet hit the same 50% confidence date, we should not count this as agreement.)

Let me sketch a general model of communication.

A Sketch

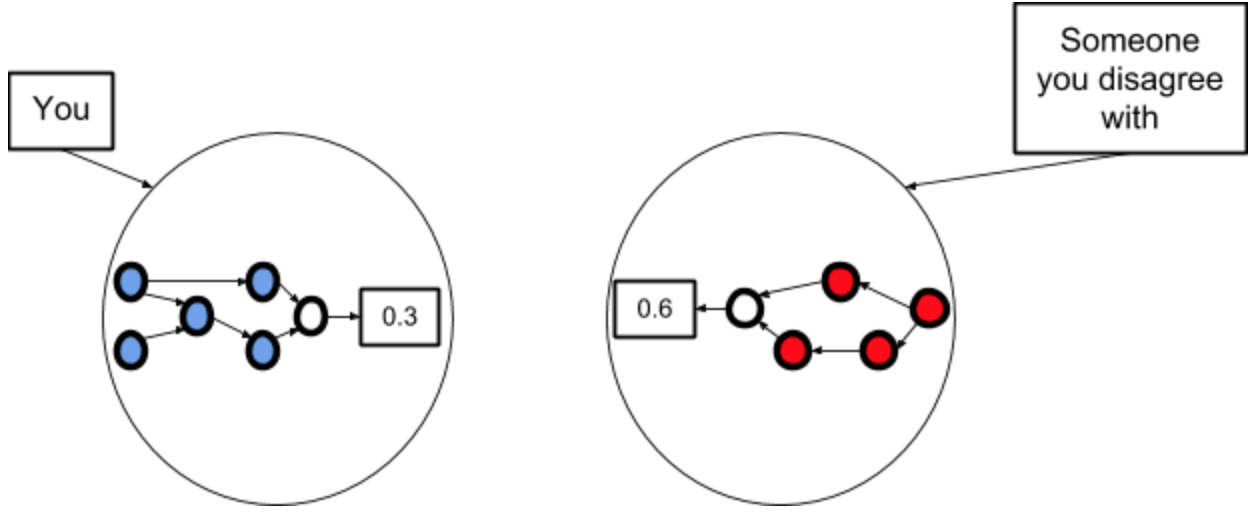

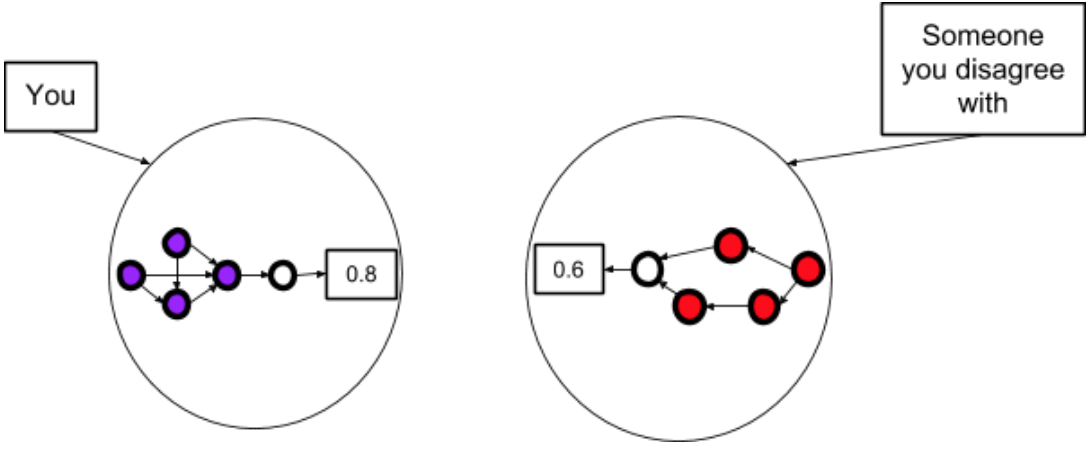

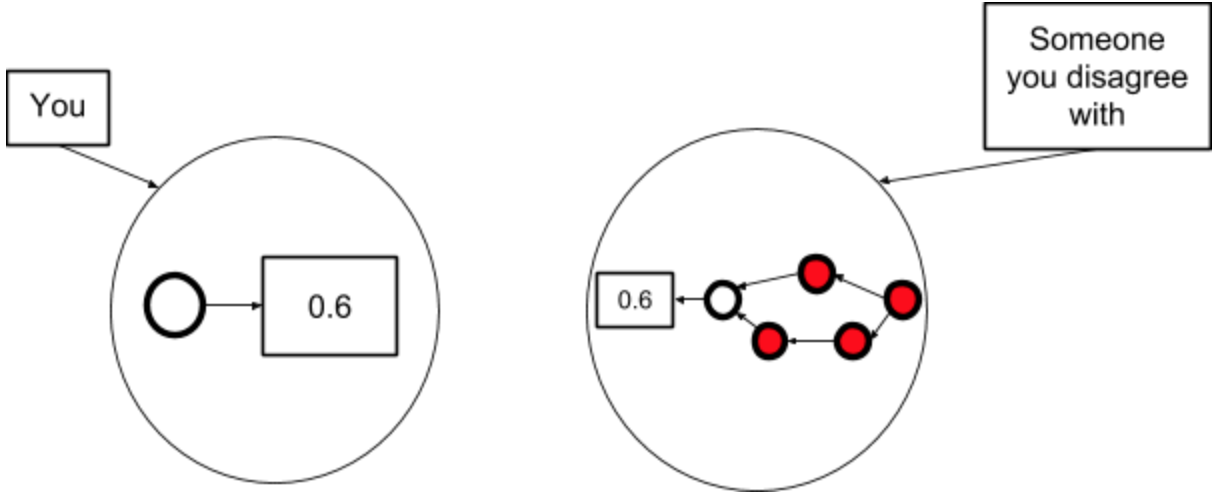

Step 1: You each have a different model that predicts a different probability for a certain event.

Step 2: You talk until you have understood how they see the world.

Step 3: You do some cognitive work to integrate the evidences and ontologies of you and them, and this implies a new probability.

"If we were simply increasing the absolute number of average researchers in the field, then I’d still expect AGI much slower than you, but if now we factor in the very peak researchers having big jumps of insight (for the rest of the field to capitalise on), then I think I actually have shorter timelines than you.”

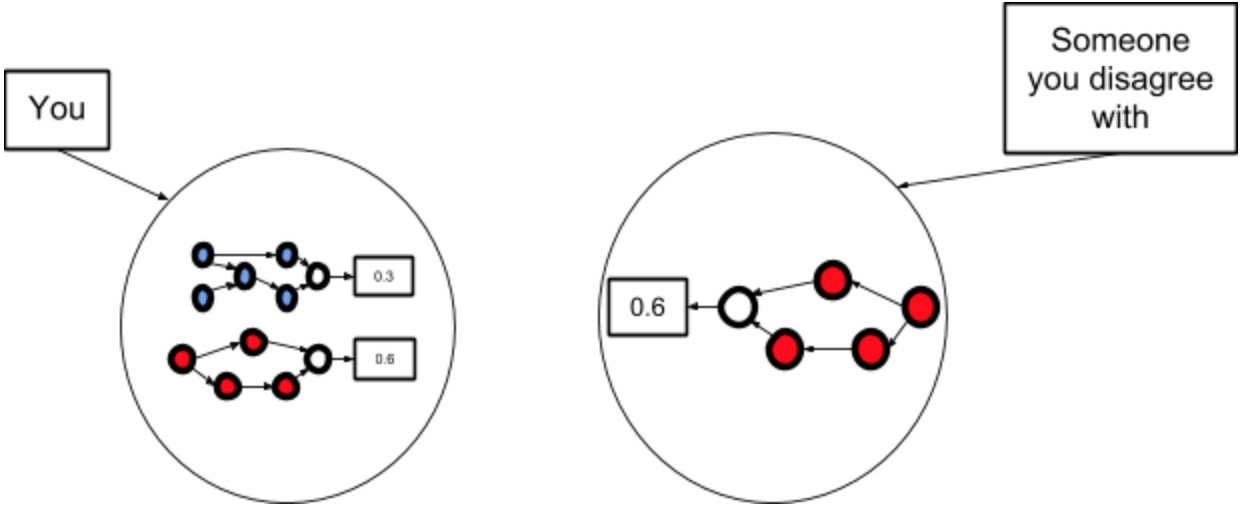

One of the common issues I see with disagreements in general is people jumping prematurely to the third diagram before spending time getting to the second one. It’s as though if you both agree on the decision node, then you must surely agree on all the other nodes.

I prefer to spend an hour or two sharing models, before trying to change either of our minds. It otherwise creates false consensus, rather than successful communication. Going directly to Step 3 can be the right call when you’re on a logistics team and need to make a decision quickly, but is quite inappropriate for research, and in my experience the most important communication challenges are around deep intuitions.

Don't practice coming to agreement; practice exchanging models.
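The three steps can be illustrated with a toy calculation. This is only a sketch under loud assumptions: the two factor names and the numbers 0.3 and 0.6 are hypothetical, and combining factors with a noisy-OR (the event happens if at least one independent pathway produces it) is a choice made for illustration, not something the diagrams specify.

```python
from math import prod
from typing import Mapping


def noisy_or(pathways: Mapping[str, float]) -> float:
    """Probability that at least one independent pathway produces the event.

    The noisy-OR form is an illustrative assumption, not the post's model.
    """
    return 1.0 - prod(1.0 - p for p in pathways.values())


# Step 1: each person has a model built from the factors they know about.
# Factor names and strengths are hypothetical.
my_model = {"growth in the number of average researchers": 0.3}
their_model = {"insight jumps from the very peak researchers": 0.6}

my_p = noisy_or(my_model)
their_p = noisy_or(their_model)

# Skipping straight to agreement: split the difference on the output only.
averaged_p = (my_p + their_p) / 2

# Steps 2 and 3: exchange models, then recompute from the merged model.
merged_model = {**my_model, **their_model}
merged_p = noisy_or(merged_model)

print(f"mine={my_p:.2f} theirs={their_p:.2f} "
      f"averaged={averaged_p:.2f} merged={merged_p:.2f}")
```

With these made-up numbers the merged model lands at 0.72, above both starting probabilities, while averaging the outputs gives 0.45. Under these assumptions, integrating models and splitting the difference are different operations.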

Something other than Good Reasoning

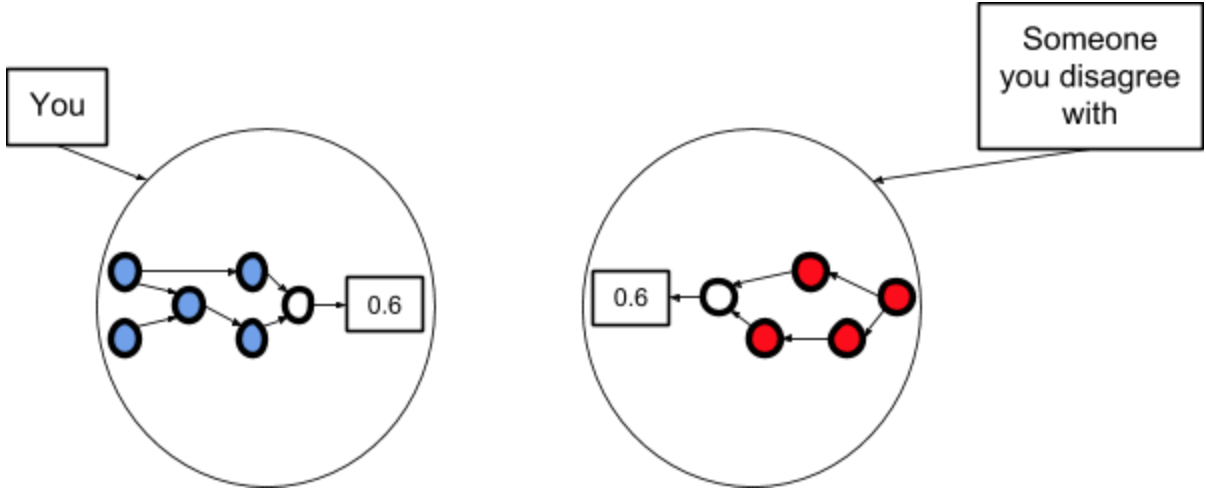

Here’s an alternative thing you might do after Step 1. This is where you haven't changed your model, but decide to agree with the other person anyway.

This doesn’t make any sense but people try it anyway, especially when they’re talking to high status people and/or experts. “Oh, okay, I’ll try hard to believe what the expert said, so I look like I know what I’m talking about.”

This last one is the worst, because it means you can’t notice your confusion any more. It represents “Ah, I notice that p = 0.6 is inconsistent with my model, therefore I will throw out my model.” Equivalently, “Oh, I don’t understand something, so I’ll stop trying.”

This is the first post in a series of short thoughts on epistemic rationality, integrity, and curiosity. My thanks to Jacob Lagerros and Alex Zhu for comments on drafts.

Descriptions of your experiences of successful communication about subtle intuitions (in any domain) are welcomed.

36 comments

Comments sorted by top scores.

comment by Jan_Kulveit · 2018-04-01T13:26:46.627Z · LW(p) · GW(p)

I like the approach, but the problem this may run into on many occasions is that parts of the reasoning include things which are not easily communicable. Suppose you talk to AlphaGo and want it to explain why it prefers some move. It may relatively easily communicate part of the tree search (and I can easily integrate the non-overlapping part of the tree into my tree), but we will run into trouble communicating the "policy network" consisting of weights obtained in training on a very large number of examples. *

From a human perspective, such parts of our cognitive infrastructure are often not really accessible, giving their results in the form of "intuition". What's worse, another part of our cognitive infrastructure is very good at making fake stories explaining the intuitions, so if you are motivated enough your mind may generate plausible but in fact fake models for your intuition. In the worst case you discard the good intuition when someone shows you problems in the fake model.

Also... it seems to me it is in practice difficult to communicate such parts of your reasoning, and among rationalists in particular. I would expect communicating parts of the model in the form of "my intuition is ..." to be intuitively frowned upon, down-voted, pattern-matched to argument from authority, or to "argument" by someone who cannot think clearly. (An exception is the intuitions of high-status people.) In theory it would help if you describe why your intuitive black box is producing something other than random noise, but my intuition tells me it may sound even more awkward ("Hey, I studied this for several years").

*Btw in some cases, it _may_ make sense to throw away part of your model and replace it just by the opinion of the expert. I'm not sure I can describe it clearly without drawing

Replies from: Benito, TurnTrout

↑ comment by Ben Pace (Benito) · 2018-04-03T09:55:27.488Z · LW(p) · GW(p)

(I found your comment really clear and helpful.)

It's important to have a model of whether AlphaGo is trustworthy before you should trust it. Knowing either (a) it beat all the grandmasters or (b) its architecture and amount of compute it used, is necessary for me to take on its policies. (This is sort of the point of Inadequate Equilibria - you need to make models of the trustworthiness of experts.)

Btw in some cases, it _may_ make sense to throw away part of your model and replace it just by the opinion of the expert. I'm not sure I can describe it clearly without drawing

I think I'd say something like: it may become possible to download the policy network of AlphaGo - to learn what abstractions it's using and what it pays attention to. And AlphaGo may not be able to tell you what experiences it had that led to the policy network (it's hard to communicate tens of thousands of games' worth of experience). Yet you should probably just replace your Go models with the policy network of AlphaGo if you're offered the choice.

A thing I'm currently confused on regarding this, is how much one is able to further update after downloading the policy network of such an expert. How much evidence should persuade you to change your mind, as opposed to you expecting that info to be built into the policy network you have?

From a human perspective, such parts of our cognitive infrastructure are often not really accessible, giving their results in the form of "intuition". What's worse, another part of our cognitive infrastructure is very good at making fake stories explaining the intuitions, so if you are motivated enough your mind may generate plausible but in fact fake models for your intuition. In the worst case you discard the good intuition when someone shows you problems in the fake model.

You're right, it does seem that a bunch of important stuff is in the subtle, hard-to-make-explicit reasoning that we do. I'm confused about how to deal with this, but I'll say two things:

- This is where the most important stuff is, so try to focus your cognitive work on making this explicit and trading these models. Oliver likes to call this 'communicating taste'.

- Practice not dismissing the intuitions in yourself - use bucket protections [LW · GW] if need be.

I'm reminded of the feeling when I'm processing a new insight, and someone asks me to explain it immediately, and I try to quickly convey this simple insight, but the words I say end up making no sense (and this is not because I don't understand it). If I can't communicate something, one of the key skills here is acting on your models anyway, even if, were you to explain your reasoning, it might sound inconsistent or like you were making a bucket error.

And yeah, for the people who you think it's really valuable to download the policy networks from, it's worth spending the time building intuitions that match theirs. I feel like I often look at a situation using my inner Hansonian-lens, and predict what he'll say (sometimes successfully), even while I can't explicitly state the principles Robin is using.

I don't think the things I've just said constitute a clear solution to the problem you raised, and I think my original post is missing some key part that you correctly pointed to.

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2018-10-18T00:38:08.106Z · LW(p) · GW(p)

In retrospect, I think this comment of mine didn't address Jan's key point, which is that we often form intuitions/emotions by running a process analogous to aggregating data into a summary statistic and then throwing away the data. Now the evidence we saw is quite incommunicable - we no longer have the evidence ourselves.

Ray Arnold gave me a good example the other day of two people - one an individualist libertarian, the other a communitarian Christian. In the example these two people deeply disagree on how society should be set up, and this is entirely because they're two identical RL systems built on different training sets (one has repeatedly seen the costs of trying to trust others with your values, and the other has repeatedly seen it work out brilliantly). Their brains have compressed the data into a single emotion, that they feel in groups trying to coordinate (say). Overall they might be able to introspect enough to communicate the causes of their beliefs, but they might not - they might just be stuck this way (until we reach the glorious transhumanist future, that is). Scott might expect them to say they just have fundamental value differences.
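The "two identical RL systems built on different training sets" picture can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `Learner` class, the exponential-moving-average update, the learning rate, and the all-bad versus all-good training histories are all hypothetical, chosen only to show a summary statistic surviving while the data behind it is discarded.

```python
class Learner:
    """Keeps one compressed 'feeling' about trusting others; stores no history."""

    def __init__(self, prior: float = 0.5, learning_rate: float = 0.1) -> None:
        self.trust = prior
        self.learning_rate = learning_rate

    def observe(self, outcome: float) -> None:
        # Move the summary statistic towards the outcome (1.0 = trusting
        # worked out, 0.0 = it was costly). The outcome itself is not saved.
        self.trust += self.learning_rate * (outcome - self.trust)


# Same learning rule, different (hypothetical) training sets.
libertarian = Learner()
communitarian = Learner()

for _ in range(30):
    libertarian.observe(0.0)    # repeatedly saw trust go badly
    communitarian.observe(1.0)  # repeatedly saw trust work out

print(f"libertarian trust:   {libertarian.trust:.3f}")
print(f"communitarian trust: {communitarian.trust:.3f}")
```

Both learners end with a strong, opposite number and nothing else: neither can hand over the thirty experiences that produced it, because only the running value was kept.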

I agree that I have not in the OP given a full model of the different parts of the brain, how they do reasoning, and which parts are (or aren't) in principle communicable or trustworthy. I at least claim that I've pointed to a vague mechanism that's more true than the simple model where everyone just has the outputs of their beliefs. There are important gears that are hard-but-possible to communicate, and they're generally worth focusing on over and above the credences they output. (Will write more on this in a future post about Aumann's Agreement Theorem.)

↑ comment by TurnTrout · 2018-04-01T15:01:46.876Z · LW(p) · GW(p)

I think the problem with being willing to throw away your model for an expert opinion is that it means, taken literally, you'd be willing to keep replacing your opinion as more experts (of differing opinions) talked to you, without understanding why. Right now, I view "respected expert disagrees with me" not as "they have higher status, they win" but as "I should figure out whether I'm missing information."

Replies from: Pattern, Jan_Kulveit

↑ comment by Jan_Kulveit · 2018-04-01T21:24:04.102Z · LW(p) · GW(p)

What I had in mind would, for example, in the case of AlphaGo mean throwing away part of my opinion on "how valuable a position is" and replacing it with AlphaGo's opinion. That does not mean throwing away your whole model. If done in the right way, you can integrate the opinions of experts and come up with a better overall picture than any of them, even if you are missing most of the information.

comment by Kaj_Sotala · 2018-04-01T13:32:21.874Z · LW(p) · GW(p)

One of the common issues I see with disagreements in general is people jumping prematurely to the third diagram before spending time getting to the second one.

Jumping to the third diagram is especially bad if you are trying to coach or help someone with their problems. One heuristic that I've found very useful (when I have the patience to remember to follow it) is that if someone asks me for help, the very first thing to do before making any suggestions is to get an understanding of their model of what the problem is and why they haven't solved it yet. Otherwise, I'm just going to waste a lot of time and frustrate both of us by making suggestions which will feel obviously useless to them.

(And in the best case, asking them questions and trying to figure out the solution together leads to the other person figuring out a possible solution themselves, without me even needing to suggest anything.)

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2018-04-03T08:46:27.004Z · LW(p) · GW(p)

This resonates with my experience. Often, the only thing I do when helping someone solve a problem, is try to make their implicit model explicit. "Why does this thing happen, exactly?" "What happens between these two points?" "What do you predict happens if this part changes?" This typically creates a conversation where the other person has enough space to work through the implications (and make good suggestions) themselves.

It has the advantage that, if a solution comes up, they fully understand what generated it, and so they're not likely to forget it later. This is in contrast to a situation where I say "Oh, x totally will work" and they don't *really* get why I think it works.

(I've been trying a bunch of more advanced moves very recently, but getting this basic skill has been my main practice and cause of success in this domain in the past few years.)

comment by Zack_M_Davis · 2019-11-26T05:54:50.371Z · LW(p) · GW(p)

This is where you haven't changed your model, but decide to agree with the other person anyway.

I very often notice myself feeling psychological pressure to agree with whoever I'm currently talking to; it feels nicer, more "cooperative." But that's wrong—pretending to agree when you actually don't is really just lying about what you believe! Lying is bad!

In particular, if you make complicated plans with someone without clarifying the differences between your models, and then you go off and do your part of the plan using your private model (which you never shared) and take actions that your partner didn't expect and are harmed by, then they might feel pretty betrayed—as if you were an enemy who was only pretending to be their collaborator all along. Which is kind of what happened. You never got on the same page. As Sarah Constantin explains in another 2018-Review-nominated post [LW · GW], the process of getting on the same page is not a punishment!

comment by rk · 2018-04-02T00:17:26.605Z · LW(p) · GW(p)

This doesn’t make any sense but people try it anyway, especially when they’re talking to high status people and/or experts. “Oh, okay, I’ll try hard to believe what the expert said, so I look like I know what I’m talking about.”

It seems to me, sometimes you just need to ignore your model's output and just go with an expert's opinion. For example, the current expected value of trying to integrate the expert's model might be low (even zero if it's just impossible for you to get it!).

Still, I agree that you shouldn't throw away your model. Looking at your diagrams, it's easy to think we could take a leaf from Pearl's Causality (Ch. 3). Pearl is talking about modelling intervention in causal graphs. That does seem kind of similar to modelling an 'expert override' in belief networks, but that's not necessary to steal the idea. We can either just snip the arrows from our model to the output probability and set the output to the expert's belief, or (perhaps more realistically), add an additional node 'what I view to be expert consensus' that modulates how our credence depends on our model.

Your diagrams even have white circles before the credence that could represent our 'seeming' -- i.e. what the probability we get just from the model is -- and then that can be modulated by a node representing expert consensus and how confidently we think we can diverge from it.
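One way to picture the extra node is the sketch below. It is an assumption-heavy illustration: the function name `credence`, the `deference` parameter, and the linear blend are hypothetical choices, not Pearl's formalism and not something the diagrams specify. The point is only that the model's own output (the 'seeming') stays intact while a separate node decides how much of it reaches the reported credence.

```python
def credence(seeming: float, expert_consensus: float, deference: float) -> float:
    """Blend the model's own output with perceived expert consensus.

    deference = 1.0 corresponds to snipping the arrows and reporting the
    expert's number; deference = 0.0 ignores the expert entirely.
    The linear blend is an illustrative assumption.
    """
    if not 0.0 <= deference <= 1.0:
        raise ValueError("deference must be between 0 and 1")
    return (1.0 - deference) * seeming + deference * expert_consensus


seeming = 0.3            # what my model outputs on its own (hypothetical)
expert_consensus = 0.6   # what I take the experts to believe (hypothetical)

full_override = credence(seeming, expert_consensus, deference=1.0)
partial = credence(seeming, expert_consensus, deference=0.8)

print(f"seeming={seeming} full_override={full_override:.2f} partial={partial:.2f}")
```

In both cases `seeming` is still 0.3 afterwards, so a mismatch between the model and the expert remains visible instead of being overwritten.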

comment by Jameson Quinn (jameson-quinn) · 2020-01-15T19:07:23.223Z · LW(p) · GW(p)

This is the second time I've seen this. Now it seems obvious. I remember liking it the first time, but also remember it being obvious. That second part of the memory is probably false. I think it's likely that this explained the idea so well that I now think it's obvious.

In other words: very well done.

comment by Jaime Sevilla Molina (jaime-sevilla-molina) · 2018-04-01T18:10:10.840Z · LW(p) · GW(p)

I appreciate that in the example it just so happens that the person assigning a lower probability ends up assigning a higher probability than the other person did at the beginning, because it is not intuitive that this can happen but it is actually very reasonable. Good post!

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2018-04-01T22:55:10.499Z · LW(p) · GW(p)

Yup, some people seem to think that one of the correct moves in 'coming to agreement' is that our probabilities begin to average out. (I have another post on this drafted.)

Replies from: diss0nance

↑ comment by diss0nance · 2018-04-19T16:35:51.983Z · LW(p) · GW(p)

Did you publish this other post?

comment by Emrik (Emrik North) · 2022-08-31T01:33:37.542Z · LW(p) · GW(p)

This is one of the most important reasons why hubris is so undervalued. People mistakenly think the goal is to generate precise probability estimates for frequently-discussed hypotheses (a goal in which deference can make sense). In a common-payoff-game research community, what matters is making new leaps in model space, not converging on probabilities. We (the research community) are bottlenecked by insight-production, not marginally better forecasts or decisions. Feign hubris if you need to, but strive to install it as a defense against model-dissolving deference.

comment by Kaj_Sotala · 2019-11-26T10:26:31.713Z · LW(p) · GW(p)

This model of communication describes what LW would ideally be all about. Have mentally referenced this several times.

comment by Raemon · 2019-12-15T21:18:12.469Z · LW(p) · GW(p)

This is the first post in a series of short thoughts on epistemic rationality, integrity, and curiosity. My thanks to Jacob Lagerros and Alex Zhu for comments on drafts.

I am curious if this sentence turned out to be true, and if so what the followup posts were.

comment by Qiaochu_Yuan · 2018-04-01T02:25:20.418Z · LW(p) · GW(p)

However, 'timelines' is not the interesting question.

Well, they are the decision-relevant question. At some point timelines get short enough that it's pointless to save for retirement. At some point timelines get short enough that it may be morally irresponsible to have children. At some point timelines get short enough that it becomes worthwhile to engage in risky activities like skydiving, motorcycling, or taking drugs (because the less time you have left the fewer expected minutes of life you lose from risking your life). Etc.

But yes, the thing where you discard your model is not great.

(Also, I personally have never generated a timeline using a model; I do something like Focusing on the felt senses of which numbers feel right. This is the final step in Eliezer's "do the math, then burn the math and go with your gut" thing.)

Replies from: Benito, Benquo, Benquo

↑ comment by Ben Pace (Benito) · 2018-04-01T08:55:16.376Z · LW(p) · GW(p)

I don't mean "Discussion of timelines is not useful". I mean it is not the central point nor should it be the main part of conversation.

Here's three quick variables that would naturally go into a timelines model, but in fact are more important because of their strategic implications.

- Can ML produce human-level AGI?

  - Timelines implications: If the current research paradigm will continue to AGI, then we can do fairly basic extrapolation to determine timelines.

  - Strategic implications: If the current research paradigm will continue to AGI, this tells us important things about what alignment strategies to pursue, what sorts of places to look for alignment researchers in, and what sort of relationship to build with academia.

- How flexible are the key governments (US, Russia, China, etc)?

  - Timelines implications: This will let us know how much speed-up they can give to timelines (e.g. by funding, by pushing on race dynamics).

  - Strategic implications: This has a lot of impact in terms of how much we should start collaborating with governments, what information we should actively try to propagate through government, whether some of us should take governmental roles, etc.

- Will an intelligence explosion be local or dispersed?

  - Timelines implications: If intelligence explosions can be highly local it could be that a take-off is happening right now that we just can't see, and so our timelines should be shorter.

  - Strategic implications: The main reason I might want to know about local v dispersed is because I need to know what sorts of information flows to set up between government, industry, and academia.

The word I'd object to in the sentence "Well, they are the decision-relevant question" is the word 'the'. They are 'a' decision-relevant question, but not at all obviously 'the', nor even obviously one of the first five most important ones (I don't have a particular list in mind, but I expect the top five are mostly questions about alignment agendas and the structure of intelligence).

---

(Also, I personally have never generated a timeline using a model; I do something like Focusing on the felt senses of which numbers feel right. This is the final step in Eliezer's "do the math, then burn the math and go with your gut" thing.)

Yeah, I agree that it's hard to make the most subtle intuitions explicit, and that nonetheless you should trust them. I also want to say that the do-the-math part first is pretty useful ;-)

↑ comment by Benquo · 2018-04-02T15:49:20.668Z · LW(p) · GW(p)

Also, I personally have never generated a timeline using a model; I do something like Focusing on the felt senses of which numbers feel right. This is the final step in Eliezer's "do the math, then burn the math and go with your gut" thing.

This does not seem like the sort of process that would generate an output suitable for shared map-making (i.e. epistemics). It seems like a process designed to produce an answer optimized for your local incentives, which will of course be mostly social ones, since your S1 is going to be tracking those pretty intently.

Replies from: Qiaochu_Yuan

↑ comment by Qiaochu_Yuan · 2018-04-02T17:05:43.665Z · LW(p) · GW(p)

I'm also confused by this response. I don't mean to imply that the model-building version of the thing is bad, but I did want to contribute as a data point that I've never done it.

I don't have full introspective access to what my gut is tracking but it mostly doesn't feel to me like it's tracking social incentives; what I'm doing is closer to an inner sim kind of thing along the lines of "how surprised would I be if 30 years passed and we were all still alive?" Of course I haven't provided any evidence for this other than claiming it, and I'm not claiming that people should take my gut timelines very seriously, but I can still pay attention to them.

Replies from: Benquo

↑ comment by Benquo · 2018-05-16T07:50:39.679Z · LW(p) · GW(p)

That seems substantially better than Focusing directly on the numbers. Thanks for explaining. I'm still pretty surprised if your anticipations are (a) being produced by a model that's primarily epistemic rather than socially motivated, and (b) hard to unpack into a structured model without a lot more work than what's required to generate them in the first place.

↑ comment by Benquo · 2018-04-02T15:47:43.081Z · LW(p) · GW(p)

Well, they are the decision-relevant question. At some point timelines get short enough that it's pointless to save for retirement. At some point timelines get short enough that it may be morally irresponsible to have children. At some point timelines get short enough that it becomes worthwhile to engage in risky activities like skydiving, motorcycling, or taking drugs (because the less time you have left the fewer expected minutes of life you lose from risking your life). Etc.

This helped me understand why people interpret the Rationalists as just another apocalypse cult. The decision-relevant question is not, apparently, what's going to happen as increasing amounts of cognitive labor are delegated to machines and what sort of strategies are available to make this transition go well and what role one might personally play in instantiating these strategies, but instead, simply how soon the inevitable apocalypse is going to happen and accordingly what degree of time preference we should have.

Replies from: Qiaochu_Yuan

↑ comment by Qiaochu_Yuan · 2018-04-02T16:54:31.767Z · LW(p) · GW(p)

I'm confused by this response. I didn't mention this to imply that I'm not interested in the model-building and strategic discussions - they seem clearly good and important - but I do think there's a tendency for people to not connect their timelines with their actual daily lives, which are in fact nontrivially affected by their timelines, and I track this level of connection to see who's putting their money where their mouth is in this particular way. E.g. I've been asking people for the last few years whether they're saving for retirement for this reason.

Replies from: Benquo

↑ comment by Benquo · 2018-05-16T07:57:58.624Z · LW(p) · GW(p)

Calling them the decision-relevant question implies that you think this sort of thing is more important than the other questions. This is very surprising - as is the broader emphasis on timelines over other strategic considerations that might help us favor some interventions over others - if you take the AI safety narrative literally. It's a lot less surprising if you think explicit narratives are often cover stories people don't mean literally, and notice that one of the main ways factions within a society become strategically distinct is by coordinating around distinct time preferences.

In particular, inducing high time preference in people already disposed to trusting you as a leader seems like a pretty generally applicable strategy for getting them to defer to your authority. "Emergency powers," wartime authority and rallying behind the flag, doomsday cults, etc.

Replies from: Qiaochu_Yuan

↑ comment by Qiaochu_Yuan · 2018-05-16T23:54:21.006Z · LW(p) · GW(p)

Yeah, "the" was much too strong and that is definitely not a thing I think. I don't appreciate the indirect accusation that I'm trying to get people to defer to my authority by inducing high time preference in them.

Replies from: gjm

↑ comment by gjm · 2018-05-17T02:33:51.662Z · LW(p) · GW(p)

For what it's worth, I read Benquo as saying not "Qiaochu is trying to do this" but something more like "People who see rationalism as a cult are likely to think the cult leaders are trying to do this". Though I can see arguments for reading it the other way.

Replies from: Raemon

↑ comment by Raemon · 2018-05-17T04:47:51.950Z · LW(p) · GW(p)

I anticipated a third thing, which is that "at least some people who are talking about short timelines are at least somewhat unconsciously motivated to do so for this reason, Qiaochu is embedded in the social web that may be playing a role in shaping his intuitions."

Replies from: Benquo

comment by Raemon · 2019-11-27T04:15:15.988Z · LW(p) · GW(p)

I generally got value out of this post's crisp explanation. In particular, the notion that "model driven information-exchange" can result in people forming new beliefs that are more extreme than either person started with, rather than necessarily "averaging their beliefs together", was something I hadn't really considered before.

comment by Sniffnoy · 2018-04-27T21:21:36.240Z · LW(p) · GW(p)

Some of the images in this post seem to be broken; when I try to load them individually, Firefox complains that they contain errors.

Replies from: Raemon

↑ comment by Raemon · 2018-04-27T21:55:55.763Z · LW(p) · GW(p)

Huh. Can you describe your process in more detail? (also, version of Firefox?)

Replies from: Sniffnoy

↑ comment by Sniffnoy · 2018-04-27T23:09:37.417Z · LW(p) · GW(p)

Firefox 59.0.2 (64-bit) running on Linux Mint 18.3.

Several of the images don't load. If I take one of these and try opening it in its own tab, I just get the message "The image cannot be displayed because it contains errors."

Here's something that may be more relevant -- it doesn't seem to just be the case of a slightly broken PNG like the error message would suggest and like I initially thought. Rather when I attempt to download it I get an empty file.

comment by Kaj_Sotala · 2018-04-19T13:28:38.238Z · LW(p) · GW(p)

Curated this post for:

- Having a clear explanation of what I feel is a core skill for collective rationality: actually integrating the evidence and models that others have.

- Including the sentence "Don't practice coming to agreement; practice exchanging models" which succinctly summed up the core idea and is easy to remember.

- Having pretty pictures!

How this post could have been improved even further:

- The first time that I looked at this, I semi-automatically started skimming and didn't properly read everything; I had to come back and consciously make myself read everything. I'm not entirely sure of what caused it, but I suspect it had to do with the way that the post contained images and then often only had a brief quote and one or two sentences before the next image; something about this combination made my brain parse it as "there's a bit of stuff between the pictures but it probably isn't very important since there's so little of it", even though that was the actual substantial content.

comment by danielechlin · 2025-03-14T20:32:02.821Z · LW(p) · GW(p)

Well a simple, useful, accurate, non-learning-oriented model, except to the extent that it's a known temporary state, is to turn all the red boxes into one more node in your mental map and average out accordingly. If they're an expert it's like "well what I've THOUGHT to this point is 0.3, but someone very important said 0.6, so it's probably closer to 0.6, but it's also possible we're talking about different situations without realizing it."

comment by jmh · 2019-07-01T16:44:07.271Z · LW(p) · GW(p)

Not sure how, or even if, this relates, but it seemed somewhat connected to what you're looking at. https://www.quantamagazine.org/were-stuck-inside-the-universe-lee-smolin-has-an-idea-for-how-to-study-it-anyway-20190627/

For some reason your parenthetical made me think of some of what Smolin was saying about how we view the universe and how we should try to model it.