"Flinching away from truth” is often about *protecting* the epistemology

post by AnnaSalamon · 2016-12-20T18:39:18.737Z · LW · GW · Legacy · 58 comments

Related to: Leave a line of retreat; Categorizing has consequences.

There’s a story I like, about this little kid who wants to be a writer. So she writes a story and shows it to her teacher.

“You misspelt the word ‘ocean’”, says the teacher.

“No I didn’t!”, says the kid.

The teacher looks a bit apologetic, but persists: “‘Ocean’ is spelt with a ‘c’ rather than an ‘sh’; this makes sense, because the ‘e’ after the ‘c’ changes its sound…”

“No I didn’t!” interrupts the kid.

“Look,” says the teacher, “I get it that it hurts to notice mistakes. But that which can be destroyed by the truth should be! You did, in fact, misspell the word ‘ocean’.”

“I did not!” says the kid, whereupon she bursts into tears, and runs away and hides in the closet, repeating again and again: “I did not misspell the word! I can too be a writer!”.

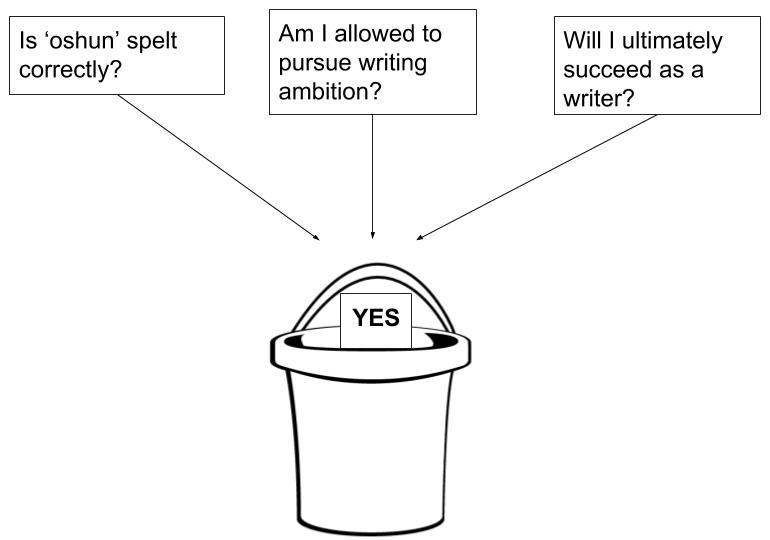

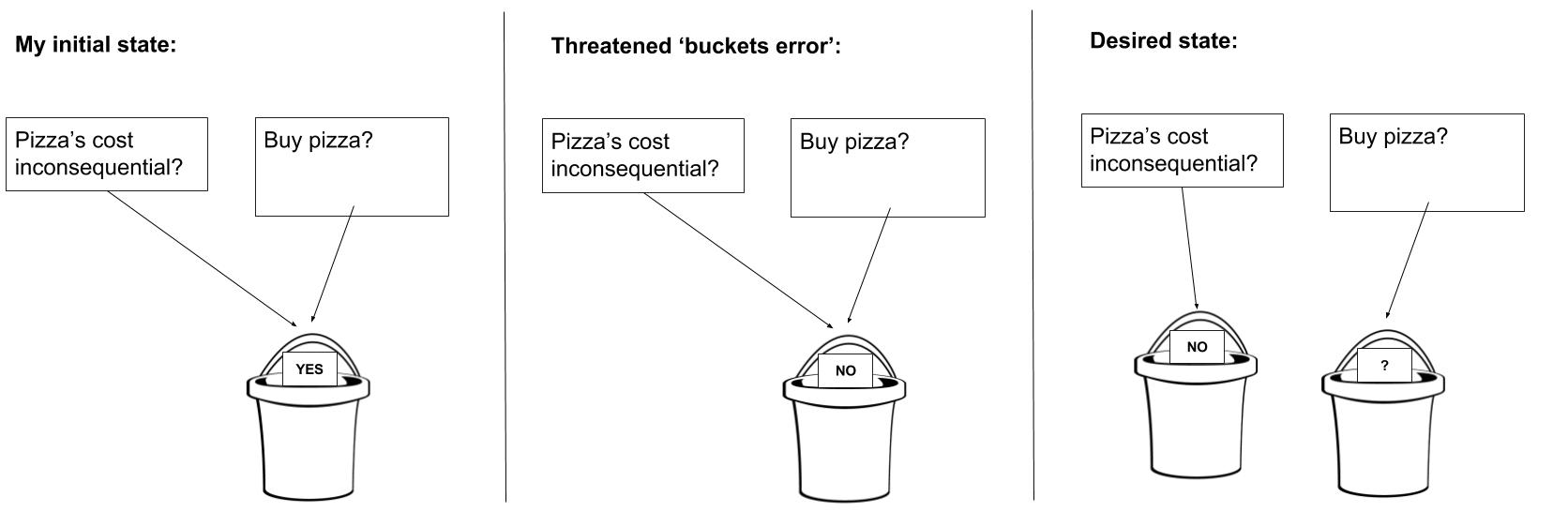

I like to imagine the inside of the kid’s head as containing a single bucket that houses three different variables that are initially all stuck together:

Original state of the kid's head:

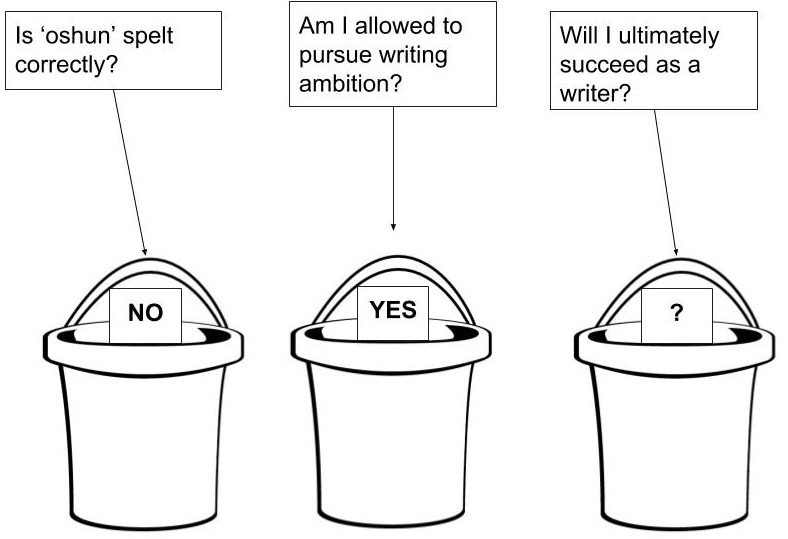

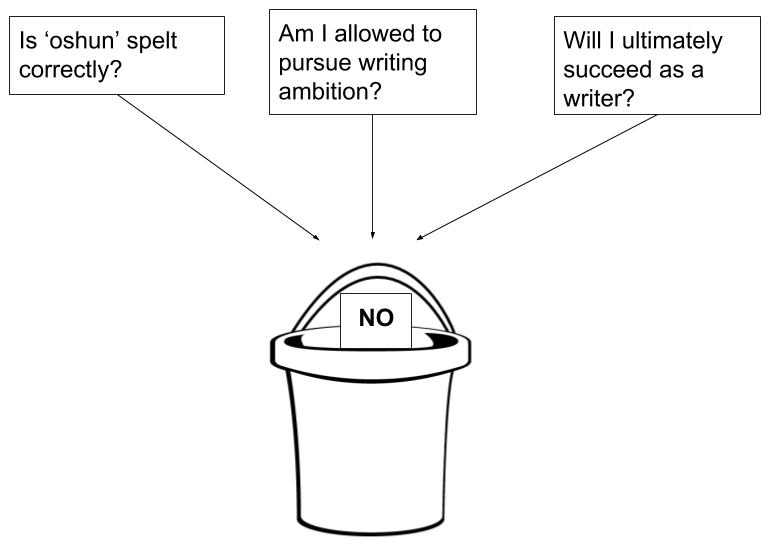

The goal, if one is seeking actual true beliefs, is to separate out each of these variables into its own separate bucket, so that the “is ‘oshun’ spelt correctly?” variable can update to the accurate state of "no", without simultaneously forcing the "Am I allowed to pursue my writing ambition?" variable to update to the inaccurate state of "no".

Desirable state (requires somehow acquiring more buckets):

The trouble is, the kid won’t necessarily acquire enough buckets by trying to “grit her teeth and look at the painful thing”. A naive attempt to "just refrain from flinching away, and form true beliefs, however painful" risks introducing a more important error than her current spelling error: mistakenly believing she must stop working toward being a writer, since the bitter truth is that she spelled 'oshun' incorrectly.

State the kid might accidentally land in, if she naively tries to "face the truth":

(You might take a moment, right now, to name the cognitive ritual the kid in the story *should* do (if only she knew the ritual). Or to name what you think you'd do if you found yourself in the kid's situation -- and how you would notice that you were at risk of a "buckets error".)
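One way to picture the difference between the two states is a small Python sketch. It is only an illustration, not the author's model: the `Bucket` class and the variable names are made up here, and the middle variable ("can write well") is a guess, since the diagrams themselves are not reproduced in this text.

```python
class Bucket:
    """A single mutable slot. Every variable stored in the same Bucket shares one value."""

    def __init__(self, value: bool) -> None:
        self.value = value


def snapshot(head: dict) -> dict:
    """Read out the current value of each variable in a head."""
    return {name: bucket.value for name, bucket in head.items()}


# Original state: three variables, one bucket.
shared = Bucket(True)
one_bucket_head = {
    "oshun_spelled_correctly": shared,
    "can_write_well": shared,  # hypothetical middle variable
    "may_pursue_writing": shared,
}

# Desirable state: each variable has its own bucket.
three_bucket_head = {
    "oshun_spelled_correctly": Bucket(True),
    "can_write_well": Bucket(True),
    "may_pursue_writing": Bucket(True),
}

# The teacher's correction arrives in both heads.
one_bucket_head["oshun_spelled_correctly"].value = False
three_bucket_head["oshun_spelled_correctly"].value = False

print(snapshot(one_bucket_head))    # all three variables flip together
print(snapshot(three_bucket_head))  # only the spelling variable flips
```

With one bucket, accepting the correction drags the ambition variable to "no" as well, which is the error the flinch is guarding against. With three buckets, the same update is harmless.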

More examples:

It seems to me that bucket errors are actually pretty common, and that many (most?) mental flinches are in some sense attempts to avoid bucket errors. The following examples are slightly-fictionalized composites of things I suspect happen a lot (except the "me" ones; those are just literally real):

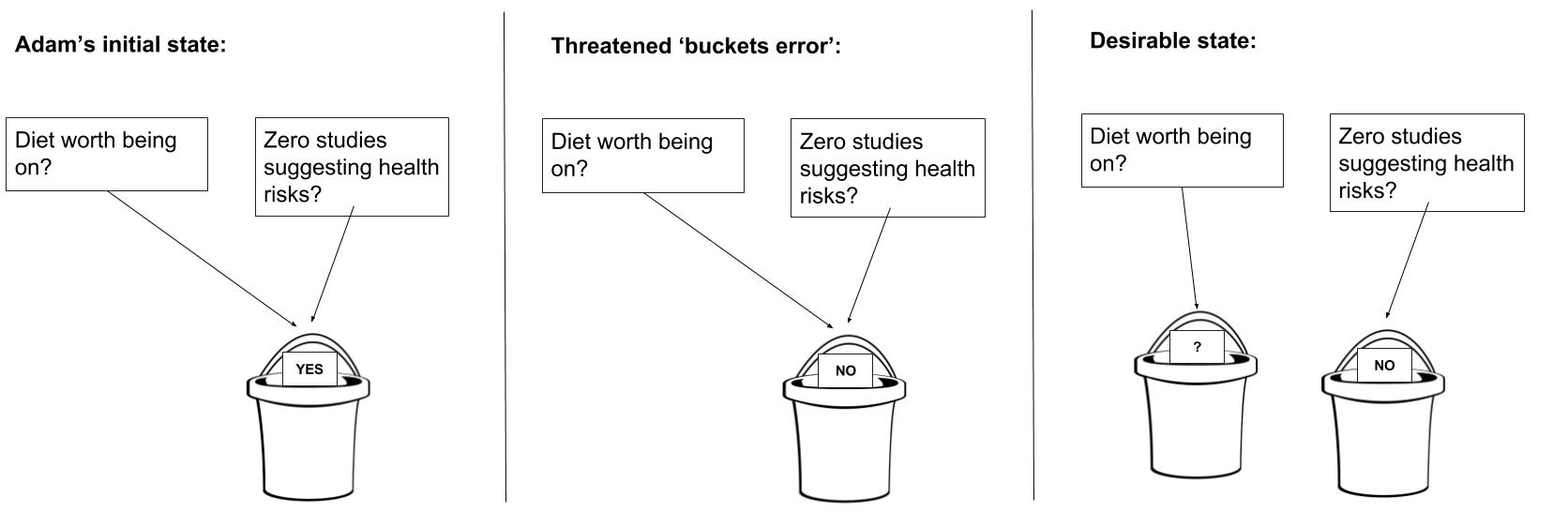

Diet: Adam is on a diet with the intent to lose weight. Betty starts to tell him about some studies suggesting that the diet he is on may cause health problems. Adam complains: “Don’t tell me this! I need to stay motivated!”

One interpretation, as diagramed above: Adam is at risk of accidentally equating the two variables, and accidentally *assuming* that the studies imply that the diet must stop being viscerally motivating. He semi-consciously perceives that this risks error, and so objects to having the information come in and potentially force the error.

Pizza purchase: I was trying to save money. But I also wanted pizza. So I found myself tempted to buy the pizza *really quickly* so that I wouldn't be able to notice that it would cost money (and, thus, so I would be able to buy the pizza):

On this narration: It wasn't *necessarily* a mistake to buy pizza today. Part of me correctly perceived this "not necessarily a mistake to buy pizza" state. Part of me also expected that the rest of me wouldn't perceive this, and that, if I started thinking it through, I might get locked into the no-pizza state even if pizza was better. So it tried to 'help' by buying the pizza *really quickly, before I could think and get it wrong*. [1]

On the particular occasion about the pizza (which happened in 2008, around the time I began reading Eliezer's LW Sequences), I actually managed to notice that the "rush to buy the pizza before I could think" process was going on. So I tried promising myself that, if I still wanted the pizza after thinking it through, I would get the pizza. My resistance to thinking it through vanished immediately. [2]

To briefly give several more examples, without diagrams (you might see if you can visualize how a buckets diagram might go in these):

- Carol is afraid to notice a potential flaw in her startup, lest she lose the ability to try full force on it.

- Don finds himself reluctant to question his belief in God, lest he be forced to conclude that there's no point to morality.

- As a child, I was afraid to allow myself to actually consider giving some of my allowance to poor people, even though part of me wanted to do so. My fear was that if I allowed the "maybe you should give away your money, because maybe everyone matters evenly and you should be consequentialist" theory to fully boot up in my head, I would end up having to give away *all* my money, which seemed bad.

- Eleanore believes there is no important existential risk, and is reluctant to think through whether that might not be true, in case it ends up hijacking her whole life.

- Fred does not want to notice how much smarter he is than most of his classmates, lest he stop respecting them and treating them well.

- Gina has mixed feelings about pursuing money -- she mostly avoids it -- because she wants to remain a "caring person", and she has a feeling that becoming strategic about money would somehow involve giving up on that.

It seems to me that in each of these cases, the person has an arguably worthwhile goal that they might somehow lose track of (or might accidentally lose the ability to act on) if they think some *other* matter through -- arguably because of a deficiency of mental "buckets".

Moreover, "buckets errors" aren't just thingies that affect thinking in prospect -- they also get actually made in real life. It seems to me that one rather often runs into adults who decided they weren't allowed to like math after failing a quiz in 2nd grade; or who gave up on meaning for a couple years after losing their religion; or who otherwise make some sort of vital "buckets error" that distorts a good chunk of their lives. Although of course this is mostly guesswork, and it is hard to know actual causality.

How I try to avoid "buckets errors":

I basically just try to do the "obvious" thing: when I notice I'm averse to taking in "accurate" information, I ask myself what would be bad about taking in that information.[3] Usually, I get a concrete answer, like "If I noticed I could've saved all that time, I'll have to feel bad", or "if AI timelines are maybe-near, then I'd have to rethink all my plans", or what have you.

Then, I remember that I can consider each variable separately. For example, I can think about whether AI timelines are maybe-near; and if they are, I can always decide to not-rethink my plans anyhow, if that's actually better. I mentally list out all the decisions that *don't* need to be simultaneously forced by the info; and I promise myself that I can take the time to get these other decisions not-wrong, even after considering the new info.

Finally, I check to see if taking in the information is still aversive. If it is, I keep trying to disassemble the aversiveness into component lego blocks until it isn't. Once it isn't aversive, I go ahead and think it through bit by bit, like with the pizza.

This is a change from how I used to think about flinches: I used to be moralistic, and to feel disapproval when I noticed a flinch, and to assume the flinch had no positive purpose. I therefore used to try to just grit my teeth and think about the painful thing, without first "factoring" the "purposes" of the flinch, as I do now. But I think my new ritual is better, at least now that I have enough introspective skill that I can generally finish this procedure in finite time, and can still end up going forth and taking in the info a few minutes later.

(Eliezer once described what I take to be a similar ritual for avoiding bucket errors, as follows: When deciding which apartment to rent (he said), one should first do out the math, and estimate the number of dollars each would cost, the number of minutes of commute time times the rate at which one values one's time, and so on. But at the end of the day, if the math says the wrong thing, one should do the right thing anyway.)
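The "do out the math" half of that ritual might look something like the sketch below. All names and figures are hypothetical (the anecdote gives no numbers), and the sketch deliberately stops at the arithmetic: the point of the anecdote is that the final decision stays in its own bucket and may override the total.

```python
from dataclasses import dataclass


@dataclass
class Apartment:
    name: str
    monthly_rent: float             # dollars per month (made-up figure)
    commute_minutes_per_day: float  # round trip (made-up figure)


def monthly_cost(apt: Apartment,
                 value_of_time_per_hour: float,
                 commute_days_per_month: int = 20) -> float:
    """Rent plus commute time priced at the rate one values one's time."""
    commute_hours = apt.commute_minutes_per_day / 60 * commute_days_per_month
    return apt.monthly_rent + commute_hours * value_of_time_per_hour


near = Apartment("near", monthly_rent=2000.0, commute_minutes_per_day=30.0)
far = Apartment("far", monthly_rent=1500.0, commute_minutes_per_day=90.0)

for apt in (near, far):
    print(apt.name, monthly_cost(apt, value_of_time_per_hour=30.0))
```

Under these made-up numbers the cheaper-rent apartment comes out more expensive overall once commute time is priced in. The estimate is an input to the decision, not the decision itself.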

[1]: As an analogy: sometimes, while programming, I've had the experience of:

- Writing a program I think is maybe-correct;

- Inputting 0 as a test-case, and knowing ahead of time that the output should be, say, “7”;

- Seeing instead that the output was “5”; and

- Being really tempted to just add a “+2” into the program, so that this case will be right.

This edit is the wrong move, but not because of what it does to MyProgram(0) — MyProgram(0) really is right. It’s the wrong move because it maybe messes up the program’s *other* outputs.
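A minimal sketch of that temptation, under invented assumptions: the footnote does not say what the program computes, so suppose the intended behaviour is "clamp the input to a floor of 7" and the bug is a mistyped floor. The function names are hypothetical.

```python
def intended(x: int) -> int:
    """Hypothetical spec: clamp x to a floor of 7."""
    return max(x, 7)


def my_program(x: int) -> int:
    """Buggy draft: the floor was mistyped as 5."""
    return max(x, 5)


def my_program_patched(x: int) -> int:
    """The tempting edit: bolt on '+ 2' so that input 0 gives 7."""
    return my_program(x) + 2


def my_program_fixed(x: int) -> int:
    """The actual fix: repair the cause (the wrong floor)."""
    return max(x, 7)


for x in (0, 6, 10):
    print(x, intended(x), my_program(x), my_program_patched(x), my_program_fixed(x))
```

In this sketch the patch makes the test case pass, but input 10, which the buggy draft already handled correctly, now comes out as 12.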

Similarly, changing up my beliefs about how my finances should work in order to get a pizza on a day when I want one *might* help with getting the right answer today about the pizza — it isn’t clear — but it’d risk messing up other, future decisions.

The problem with rationalization and mental flinches, IMO, isn’t so much the “intended” action that the rationalization or flinch accomplishes in the moment, but the mess it leaves of the code afterward.

[2] To be a bit more nitpicky about this: the principle I go for in such cases isn’t actually “after thinking it through, do the best thing”. It’s more like “after thinking it through, do the thing that, if reliably allowed to be the decision-criterion, will allow information to flow freely within my head”.

The idea here is that my brain is sometimes motivated to achieve certain things; and if I don’t allow that attempted achievement to occur in plain sight, I incentivize my brain to sneak around behind my back and twist up my code base in an attempt to achieve those things. So, I try not to do that.

This is one reason it seems bad to me when people try to take “maximize all human well-being, added evenly across people, without taking myself or my loved ones as special” as their goal. (Or any other fake utility function.)

[3] To describe this "asking" process more concretely: I sometimes do this as follows: I concretely visualize a 'magic button' that will cause me to take in the information. I reach toward the button, and tell my brain I'm really going to press it when I finish counting down, unless there are any objections ("3... 2... no objections, right?... 1..."). Usually I then get a bit of an answer — a brief flash of worry, or a word or image or association.

Sometimes the thing I get is already clear, like “if I actually did the forms wrong, and I notice, I’ll have to redo them”. Then all I need to do is separate it into buckets (“How about if I figure out whether I did them wrong, and then, if I don’t want to redo them, I can always just not?”).

Other times, what I get is more like a quick nonverbal flash, or a feeling of aversion without knowing why. In such cases, I try to keep “feeling near” the aversion. I might for example try thinking of different guesses (“Is it that I’d have to redo the forms?… no… Is it that it’d be embarrassing?… no…”). The idea here is to see if any of the guesses “resonate” a bit, or cause the feeling of aversiveness to become temporarily a bit more vivid-feeling.

For a more detailed version of these instructions, and more thoughts on how to avoid bucket errors in general (under different terminology), you might want to check out Eugene Gendlin’s audiobook “Focusing”.

58 comments


comment by Qiaochu_Yuan · 2016-12-20T07:42:01.700Z

The bucket diagrams don't feel to me like the right diagrams to draw. I would be drawing causal diagrams (of aliefs); in the first example, something like "spelled oshun wrong -> I can't write -> I can't be a writer." Once I notice that I feel like these arrows are there I can then ask myself whether they're really there and how I could falsify that hypothesis, etc.

Replies from: devi, riceissa, SatvikBeri, LawChan, Sniffnoy, John_Maxwell_IV

↑ comment by devi · 2016-12-20T22:30:27.117Z

The causal chain feels like a post-justification and not what actually goes on in the child's brain. I expect this to be computed using a vaguer sense of similarity that often ends up agreeing with causal chains (at least good enough in domains with good feedback loops). I agree that causal chains are more useful models of how you should think explicitly about things, but it seems to me that the purpose of these diagrams is to give a memorable symbol for the bug described here (use case: recognize and remember the applicability of the technique).

↑ comment by riceissa · 2018-08-27T04:56:37.943Z

I had a similar thought while reading this post, but I'm not sure invoking causality is necessary (having a direction still seems necessary). Just in terms of propositional logic, I would explain this post as follows:

1. Initially, one has the implication $P \to Q$ stored in one's mind.

2. Someone asserts $P$.

3. Now one's mind (perhaps subconsciously) does a modus ponens, and obtains $Q$.

4. However, $Q$ is an undesirable belief, so one wants to deny it.

5. Instead of rejecting the implication $P \to Q$, one adamantly denies $P$.

The "buckets error" is the implication $P \to Q$, and "flinching away" is the denial of $P$. Flinching away is about protecting one's epistemology because denying $P$ is still better than accepting $Q$. Of course, it would be best to reject the implication $P \to Q$, but since one can't do this (by assumption, one makes the buckets error), it is preferable to "flinch away" from $P$.

ETA (2019-02-01): It occurred to me that this is basically the same thing as "one man's modus ponens is another man's modus tollens" (see e.g. this post) but with some extra emotional connotations.
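Written out as a sketch, with $P$ standing for the unwelcome claim (e.g. "I misspelled 'ocean'") and $Q$ for the feared conclusion (e.g. "I can't be a writer"); these letters are labels chosen here for illustration:

```latex
\[
\underbrace{(P \to Q),\; P \;\vdash\; Q}_{\text{modus ponens}}
\qquad\text{versus}\qquad
\underbrace{(P \to Q),\; \neg Q \;\vdash\; \neg P}_{\text{modus tollens}}
\]
```

Both inferences are valid given $P \to Q$. Someone who cannot drop the implication and is committed to $\neg Q$ is pushed by the right-hand form to conclude $\neg P$, which is the flinch.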

↑ comment by SatvikBeri · 2016-12-21T01:03:28.358Z

In my head, it feels mostly like a tree, e.g:

"I must have spelled oshun right"

–Otherwise I can't write well

– –If I can't write well, I can't be a writer

–Only stupid people misspell common words

– –If I'm stupid, people won't like me

etc. For me, to unravel an irrational alief, I generally have to solve every node below it–e.g., by making sure that I get the benefit from some other alief.

↑ comment by LawrenceC (LawChan) · 2016-12-20T20:44:01.894Z

I think they're equivalent in a sense, but that bucket diagrams are still useful. A bucket can also occur when you conflate multiple causal nodes. So in the first example, the kid might not even have a conscious idea that there are three distinct causal nodes ("spelled oshun wrong", "I can't write", "I can't be a writer"), but instead treats them as a single node. If you're able to catch the flinch, introspect, and notice that there are actually three nodes, you're already a big part of the way there.

Replies from: Qiaochu_Yuan

↑ comment by Qiaochu_Yuan · 2016-12-20T20:52:05.006Z

The bucket diagrams are too coarse, I think; they don't keep track of what's causing what and in what direction. That makes it harder to know what causal aliefs to inspect. And when you ask yourself questions like "what would be bad about knowing X?" you usually already get the answer in the form of a causal alief: "because then Y." So the information's already there; why not encode it in your diagram?

Replies from: LawChan

↑ comment by LawrenceC (LawChan) · 2016-12-20T20:56:48.543Z

Fair point.

↑ comment by John_Maxwell (John_Maxwell_IV) · 2016-12-22T13:13:47.453Z

Does anyone know whether something like buckets/causal diagram nodes might have an analogue at the neural level?

comment by moridinamael · 2016-12-20T15:14:11.095Z

A common bucket error for me: Idea X is a potentially very important research idea that is, as far as I know, original to me. It would really suck to discover that this wasn't original to me. Thus, I don't want to find out if this is already in the literature.

This is a change from how I used to think about flinches: I used to be moralistic, and to feel disapproval when I noticed a flinch, and to assume the flinch had no positive purpose. I therefore used to try to just grit my teeth and think about the painful thing, without first "factoring" the "purposes" of the flinch, as I do now.

This is key. Any habit that involves "gritting your teeth" is not durable.

Also, Focusing should easily be part of the LW "required reading".

Replies from: ksvanhorn

↑ comment by ksvanhorn · 2017-01-16T15:43:00.742Z

I'm reading Gendlin's book Focusing and struggling with it -- it's hard for me to understand why you and Anna think so highly of this book. It's hard to get past all the mystic woo about knowledge "in the body"; Gendlin seems to think that anything not in the conscious mind is somehow stored/processed out there in the muscles and bones. Even taking that as metaphorical -- which Gendlin clearly does not -- I find his description of the process very unclear.

Replies from: pjeby, moridinamael, ChristianKl

↑ comment by pjeby · 2017-03-23T15:02:14.381Z

Gendlin seems to think that anything not in the conscious mind is somehow stored/processed out there in the muscles and bones

That's an uncharitable reading of a metaphorical version of the Somatic Marker Hypothesis. Which in turn is just a statement of something fairly obvious: there are physiological indicators of mental and emotional function. That's not the same thing as saying that these things are actually stored in the body, just that one can use physiological state as clues to find out what's going on in your head, or to identify that "something is bothering me", and then try to puzzle out what that is.

An example: suppose I have something I want to say in an article or post. You could describe this "wanting to say something" as my felt sense of what it is I want to say. It is preverbal, because I haven't said it yet. It won't be words until I write it down or say it in my head.

Words, however, aren't always precise, and one's first attempt at stating a thing -- even in one's head -- is often "not quite right". On hearing or reading something back, I get the felt sense that what I've said is not quite right, and that it needs something else. I then attempt new phrasings, until I get the -- wait for it -- felt sense that this is correct.

Gendlin's term "felt sense" is a way to describe this knowing-without-knowing aspect of consciousness. That we can know something nonverbally, that requires teasing out, trial and error that reflects back and forth between the verbal and the nonverbal in order to fully comprehend and express.

So, the essential idea of Gendlin's focusing is that if a person in psychotherapy is not doing the above process -- that is, attempting to express felt, but as yet unformed and disorganized concepts and feelings -- they will not achieve change or even true insight, because it is not the act of self-expression but the act of searching for the meanings to be expressed that brings about such change. If they are simply verbalizing without ever looking for the words, then they are wasting their time having a social chat, rather than actually reflecting on their experience.

Meanwhile, those bits of felt sense we're not even trying to explore, represent untapped opportunity for improving our quality of life.

[Edited to add: I'm not 100% in agreement with the Somatic Marker Hypothesis, personally: I think the idea of somatic markers being fed back to the brain as a feedback mechanism is one possible way of doing things, but I doubt that all reinforcement involving emotions works that way. Evolution kludges lots of things, but it doesn't necessarily kludge them consistently. :) That being said, somatic markers are an awesome tool for conscious reflection and feedback, whether they are an input to the brain's core decisionmaking process, or "merely" an output of it.]

Replies from: ChristianKl

↑ comment by ChristianKl · 2017-03-25T18:31:31.689Z

That's an uncharitable reading of a metaphorical version of the Somatic Marker Hypothesis. Which in turn is just a statement of something fairly obvious: there are physiological indicators of mental and emotional function. That's not the same thing as saying that these things are actually stored in the body, just that one can use physiological state as clues to find out what's going on in your head, or to identify that "something is bothering me", and then try to puzzle out what that is.

I'm not sure that Gendlin doesn't believe in something stronger. There's bodywork literature that suggests that you won't solve a deep problem like a depression without changes on the myofascial level.

↑ comment by moridinamael · 2017-01-16T16:29:46.488Z

Let me attempt to explain it in my own words.

You have a thought, and then you have some kind of emotional reaction to it, and that emotional reaction should be felt in your body. Indeed, it is hard to have an emotion that doesn't have a physical component.

Say you think that you should call your mom, but then you feel a heaviness or a sinking in your gut, or a tightness in your neck or throat or jaw. These physical sensations are one of the main ways your subconscious tries to communicate with you. Let's further say that you don't know why you feel this way, and you can't say why you don't want to call your mom. You just find that you know you should call your mom but some part of you is giving you a really bad feeling about it. If you don't make an effort to untangle this mess, you'll probably just not call your mom, meaning whatever subconscious process originated those bad feelings in the first place will continue sitting under the surface and probably recapitulate the same reaction in similar situations.

If you gingerly try to "fit" the feeling with some words, as Gendlin says, the mind will either give you no feedback or it will give you a "yes, that's right" in the form of a further physical shift. This physical shift can be interpreted as the subconscious module acknowledging that its signal has been heard and ceasing to broadcast it.

I really don't think Gendlin is saying that the origin of your emotions about calling your mom is stored in your muscles. I think he's saying that when you have certain thoughts or parts of yourself that you have squashed out of consciousness with consistent suppression, these parts make themselves known through physical sensations, so it feels like it's in your body. And the best way to figure out what those feelings are is to be very attentive to your body, because that's the channel through which you're able to tentatively communicate with that part of yourself.

OR, it may not be that you did anything to suppress the thoughts, it may just be that the mind is structured in such a way that certain parts of the mind have no vocabulary with which to just inject a simple verbal thought into awareness. There's no reason a priori to assume that all parts of the mind have equal access to the phonological loop.

Maybe Gendlin's stuff is easier to swallow if you happen to already have this view of the conscious mind as the tip of the iceberg, with most of your beliefs and habits and thoughts being dominated by the vast but unreflective subconscious. If you get into meditation in any serious way, you can really consistently see that these unarticulated mental constructs are always lurking there, dominating behavior, pushing and pulling. To me, it's not woo at all, it's very concrete and actionable, but I understand that Gendlin's way of wording things may serve as a barrier to entry.

Replies from: ksvanhorn

↑ comment by ChristianKl · 2017-03-25T15:58:58.702Z

Gendlin seems to think that anything not in the conscious mind is somehow stored/processed out there in the muscles and bones.

There's a subjective experience that suggests that feelings are located inside the body. Even when the information is actually stored in the motor cortex it's practically useful for certain interventions to use a mental model that locates the feelings inside the body.

A month ago I had a tense neck. Even when the neck relaxed a bit, after a night of sleep it was again completely tense. After the problem went on for a week I used Gendlin's Focusing on the tense neck. I found a feeling of confusion that was associated with the tense neck. I processed the feeling. I felt the shift. My neck got more relaxed and it didn't get tense again.

It's reasonable to say that the feeling of confusion wasn't located in my neck but somewhere in my brain, and that a neural pattern in my brain resulted in my brain sending signals to my neck to tense up. At the same time, the mental model of Focusing that includes connecting to the feeling in the neck helped me to resolve my problem.

comment by jimmy · 2016-12-20T18:32:37.044Z

The interesting thing about noticing things like this, to me, is that once you can start to see "irrational" choices as the (potentially) better choices of limited sets (flinch away+"can be a writer", correct spelling of ocean+"can't"), then you'll start describing the situation as "doesn't see that you can misspell words as a kid and grow up to be a writer" instead of "irrational", and the solution there recommends itself.

In general, the word "irrational" is a stand-in for "I don't understand why this person is doing this" plus the assumption that it's caused by motivations that cannot be reasoned with. The problem with that is that they can be reasoned with, once you understand what they actually are.

Replies from: Lumifer, pmw7070

↑ comment by Lumifer · 2016-12-20T20:29:21.143Z

In general, the word "irrational" is a stand-in for "I don't understand why this person is doing this"

Not necessarily. Quite often "irrational" means "I understand why she's doing this, but she's not going to achieve her goals this way".

Replies from: jimmy

↑ comment by jimmy · 2016-12-20T22:12:59.092Z

Sorta. There's two ways of using it though. If you ask me "surely you don't think the rational response is to flinch away like she's doing!?" I'd shrug and say "nah". If you put me on the spot and asked "would you say she's being 'irrational'? Yes or no?", I'd say "sure, you can say that I guess". It can be functional shorthand sometimes, if you make sure that you don't try to import connotations and use it to mean anything but "suboptimal", but the term "suboptimal" captures all of that without hinting at false implications. Normally when you actually understand why someone is doing something but think it won't work, you use other words. For example, Bob went to the grocery store to buy food because he thinks they're open. They're not. Do you say he's "irrational" or just wrong? I dunno about you, but using "irrational" there doesn't seem to fit.

Often, however, it's used when you think you understand why they're doing something - or when you understand the first layer but use it as a stop sign instead of extending your curiosity until you have a functional explanation. Example: "She's screaming at her kids even though it isn't going to help anything. I understand why she does it, she's just angry. It's irrational though". Okay, so given that it's not working, why is she letting her anger control her? That's the part that needs explaining, because that's the part that can actually change something. If the way you're using "irrational" leads you to even the temptation to say "you're being irrational", then (with a few cool exceptions) what it really means is that you think you understand all that there is to understand, but that you're wrong.

That help clarify?

Replies from: Lumifer

↑ comment by Lumifer · 2016-12-21T17:50:11.859Z

For example, Bob went to the grocery store to buy food because he thinks they're open. They're not. Do you say he's "irrational" or just wrong?

"Irrational" implies making a bad choice when a good choice is available. If Bob was mistaken, he was just mistaken. If he knew he could easily check the store hours on his phone but decided not to and spent 15 minutes driving to the store, he was irrational.

Example: "She's screaming at her kids even though it isn't going to help anything. I understand why she does it, she's just angry. It's irrational though". Okay, so given that it's not working, why is she letting her anger control her?

Because she is dumb and unable to exercise self-control.

It seems to me you just don't like the word "irrational". Are there situations where you think it applies? In what cases would you use this word?

Replies from: jimmy

↑ comment by jimmy · 2016-12-22T03:31:50.826Z

"Irrational" implies making a bad choice when a good choice is available. If Bob was mistaken, he was just mistaken. If he knew he could easily check the store hours on his phone but decided not to and spent 15 minutes driving to the store, he was irrational.

It seems like you’re burying a lot of the work in the word “available”. Is it “available” if it weren’t on his mind even if he could answer “yes, it would be easy to check” when asked? Is it “available” when it’s not on his mind but reminding him wouldn’t change his decision, but he has other reasons for it? If he doesn’t have other reasons, but would do things differently if you taught him? If a different path were taken on any of those forks?

I can think of a lot of different ways for someone to "know he could easily check store hours" and then not do it, and I would describe them all differently - and none of them seem best described as “irrational”, except perhaps as sloppy shorthand for “suboptimal decision algorithm”.

Because she is dumb and unable to exercise self-control.

That’s certainly one explanation, and useful for some things, but less useful for many others. Again, shorthand is fine if seen for what it is. In other cases though, I might want a more detailed answer that explains why she is “unable” to exercise self control - say, for example, if I wanted to change it. The word “irrational” makes perfect sense if you think changing things like this is impossible. If you see it as a matter of disentangling the puzzle, it makes less sense.

It seems to me you just don't like the word "irrational". Are there situations where you think it applies? In what cases would you use this word?

It’s not that I “don’t like” the word - I don't “try not to use it” or anything. It’s just that I’ve noticed that it has left my vocabulary on its own once I started trying to change behaviors that seemed irrational to me instead of letting it function as a mental stop sign. It just seems that the only thing “irrational” means, beyond “suboptimal”, is an implicit claim that there are no further answers - and that is empirically false (and other bad things). So in that sense, no, I’d never use the word because I think that the picture it tries to paint is fundamentally incoherent.

If that connotation is disclaimed and you want to use it to mean more than “suboptimal”, it seems like “driven by motivated cognition” is probably one of the closer things to the feeling I get by the word “irrational”, but as this post by Anna shows, even that can have actual reasons behind it, and I usually want the extra precision by actually spelling out what I think is happening.

If I were to use the word myself (as opposed to running with it when someone else uses the word), it would only be in a case where the person I’m talking to understands the implicit “[but there are reasons for this, and there’s more that could be learned/done if this case were to become important. It’s not]”

EDIT: I also could conceivably use it in describing someone's behavior to them if I anticipated that they'd agree and change their behavior if I did.

Replies from: Lumifer↑ comment by Lumifer · 2016-12-22T20:22:02.918Z · LW(p) · GW(p)

instead of letting it function as a mental stop sign

I don't know why you let it function as a stop sign in the first place. "Irrational" means neither "random" nor "inexplicable" -- to me it certainly does not imply that "there are no further answers". As I mentioned upthread, I can consider someone's behaviour irrational and at the same time understand why that someone is doing this and see the levers to change him.

The difference that I see from "suboptimal" is that suboptimal implies that you'll still get to your goal, but inefficiently, using more resources in the process. "Irrational", on the other hand, implies that you just won't reach your goal. But it can be a fuzzy distinction.

Replies from: jimmy, Luke_A_Somers↑ comment by jimmy · 2016-12-23T23:29:15.196Z · LW(p) · GW(p)

As I mentioned upthread, I can consider someone's behaviour irrational and at the same time understand why that someone is doing this and see the levers to change him.

If "irrational" doesn't feel like an explanation in itself, and you're going to dig further and try to figure out why they're being irrational, then why stop to declare it irrational in the first place? I don't mean it in a rhetorical sense and I'm not saying "you shouldn't" - I really don't understand what could motivate you to do it, and don't feel any reason to myself. What does the diagnosis "irrational" do for you? It kinda feels to me like saying "fire works because phlogistons!" and then getting to work on how phlogistons work. What's the middle man doing for you here?

With regard to "suboptimal" vs "irrational", I read it completely differently. If someone is beating their head against the door to open it instead of using the handle, I wouldn't call it any more "rational" if the door does eventually give way. Similarly, I like to use "suboptimal" to mean strictly "less than optimal" (including but not limited to the cases where the effectiveness is zero or negative) rather than using it to mean "less than optimal but better than nothing".

Replies from: Lumifer↑ comment by Lumifer · 2016-12-25T03:06:03.562Z · LW(p) · GW(p)

why stop to declare it irrational in the first place?

Because for me there are basically three ways to evaluate some course of action. You can say that it's perfectly fine and that's that (let's call it "rational"). You can say that it's crazy and you don't have a clue why someone is doing this (let's call it "inexplicable"). And finally, you can say that it's a mistaken course of action: you see the goal, but the road chosen doesn't lead there. I would call this "irrational".

Within this framework, calling something "irrational" is the only way to "dig further and try to figure out why".

With regard to "suboptimal" vs "irrational", I read it completely differently.

So we have a difference in terminology. That's not unheard of :-)

Replies from: jimmy↑ comment by jimmy · 2016-12-30T22:04:52.456Z · LW(p) · GW(p)

Interesting. I dig into plenty of things before concluding that I know what their goal is and that they will fail, and I don’t see what is supposed to be stopping me from doing this. I’m not sure why “I don’t [yet] have a clue why” gets rounded to “inexplicable”.

↑ comment by Luke_A_Somers · 2017-01-16T15:49:18.137Z · LW(p) · GW(p)

That isn't the distinction I get between suboptimal and irrational. They're focused on different things.

Irrational to me would mean that the process by which the strategy was chosen was not one that would reliably yield good strategies in varying circumstances.

Suboptimal is just an outcome measurement.

Replies from: hairyfigment↑ comment by hairyfigment · 2017-01-16T18:53:26.058Z · LW(p) · GW(p)

Outcome? I was going to say that suboptimal could refer to a case where we don't know if you'll reach your goal, but we can show (by common assumptions, let's say) that the action has lower expected value than some other. "Irrational" does not have such a precise technical meaning, though we often use it for more extreme suboptimality.

Replies from: Luke_A_Somers↑ comment by Luke_A_Somers · 2017-01-16T21:22:42.857Z · LW(p) · GW(p)

Yes, outcome. Look at what each word is actually describing. Irrationality is about process. Suboptimal is about outcome -- if you inefficiently but reliably calculate good strategies for action, that's being slow, not suboptimal in the way we're talking about, so it's not about process.

↑ comment by pmw7070 · 2017-11-13T18:16:37.764Z · LW(p) · GW(p)

That's really broadening the term 'irrational.' Irrational is not a synonym for 'not good' or 'not preferred,' it just means not rational or not logical. There may be lots of rational choices, some of which may be better or worse than others - but all rational. Irrational MIGHT BE (loosely) short for 'that doesn't make sense,' or better, 'that's not logical.'

The bucket analog as illustrated seems to me more pointing at a faulty basis than irrational thinking. The budding author clearly linked spelling with being allowed to pursue writing that ends up in a successful career, and he has a point. An author cannot be successful without an audience; a piece where one continually has to stop and interpret badly spelled words is likely not going to have a good audience. There is a clear rationale. The faulty basis is more related to the student having a picture of being a successful author (1) at this stage in life, and (2) without discipline and development. The false basis in the picture is linking 'am I allowed to pursue writing ambition' with misspelling a word.

comment by RomeoStevens · 2016-12-20T07:07:10.742Z · LW(p) · GW(p)

WRT flinches. There seems to be a general pattern across many forms of psychotherapy that goes something like this: The standard frame is a 'fixing' frame. Your mental phenomena react about as well to this as other people in your life do, not very well. Best practices with others also apply internally, namely: Assume positive intent, the patterns you observe are attempts to accomplish some positive goal. Seek to understand what goals those patterns are oriented towards. Ask about ways you might be able to help that process do a better job or get the resources it needs to get you the good thing it wants for you.

The fix-it frame causes both people and mental phenomena to run away from you.

comment by HungryHippo · 2016-12-20T17:55:18.681Z · LW(p) · GW(p)

Very interesting article!

I'm incidentally re-reading "Feeling Good" and parts of it deal with situations exactly like the ones Oshun-Kid is in.

From Chapter 6 ("Verbal Judo: How to talk back when you're under the fire of criticism"), I quote:

Here’s how it works. When another person criticizes you, certain negative thoughts are automatically triggered in your head. Your emotional reaction will be created by these thoughts and not by what the other person says. The thoughts which upset you will invariably contain the same types of mental errors described in Chapter 3: overgeneralization, all-or-nothing thinking, the mental filter, labeling, etc. For example, let’s take a look at Art’s thoughts. His panic was the result of his catastrophic interpretation: “This criticism shows how worthless I am.” What mental errors is he making? In the first place, Art is jumping to conclusions when he arbitrarily concludes the patient’s criticism is valid and reasonable. This may or may not be the case. Furthermore, he is exaggerating the importance of whatever he actually said to the patient that may have been undiplomatic (magnification), and he is assuming he could do nothing to correct any errors in his behavior (the fortune teller error). He unrealistically predicted he would be rejected and ruined professionally because he would repeat endlessly whatever error he made with this one patient (overgeneralization). He focused exclusively on his error (the mental filter) and over-looked his numerous other therapeutic successes (disqualifying or overlooking the positive). He identified with his erroneous behavior and concluded he was a “worthless and insensitive human being” (labeling). The first step in overcoming your fear of criticism concerns your own mental processes: Learn to identify the negative thoughts you have when you are being criticized. It will be most helpful to write them down using the double-column technique described in the two previous chapters. This will enable you to analyze your thoughts and recognize where your thinking is illogical or wrong. Finally, write down rational responses that are more reasonable and less upsetting.

And quoting your article:

(You might take a moment, right now, to name the cognitive ritual the kid in the story should do (if only she knew the ritual). Or to name what you think you'd do if you found yourself in the kid's situation -- and how you would notice that you were at risk of a "buckets error".)

I would encourage Oshun-Kid to cultivate the following habit:

1. Notice when you feel certain (negative) emotions. (E.g. anxiety, sadness, fear, frustration, boredom, stressed, depressed, self-critical, etc.) Recognizing these (sometimes fleeting) moments is a skill that you get better at as you practice.

2. Try putting down in words (write it down!) why you feel that emotion in this situation. This too, you will get better at as you practice. These are your Automatic Thoughts. E.g. "I'm always late!".

3. Identify the cognitive distortions present in your automatic thought. E.g. Overgeneralization, all-or-nothing thinking, catastrophizing, etc.

4. Write down a Rational Response that is absolutely true (don't try to deceive yourself --- it doesn't work!) and also less upsetting. E.g.: I'm not literally always late! I'm sometimes late and sometimes on time. If I'm going to beat myself up for the times I'm late, I might as well feel good about myself for the times I'm on time. Etc.

Write steps 2, 3, and 4 in three columns, where you add a new row each time you notice a negative emotion.

I'm actually surprised that Cognitive Biases are focused on to a greater degree than Cognitive Distortions are in the rational community (based on google-phrase search on site:lesswrong.com), especially when Kahneman writes more or less in Thinking, Fast and Slow that being aware of cognitive biases has not made him that much better at countering them (IIRC) while CBT techniques are regularly used in therapy sessions to alleviate depression, anxiety, etc. Sometimes as effectively as in a single session.

I also have some objections as to how the teacher behaves. I think the teacher would be more effective if he said stuff like: "Wow! I really like the story! You must have worked really hard to make it! Tell me how you worked at it: did you think up the story first and then write it down, or did you think it up as you were writing it, or did you do it a different way? Do you think there are authors who do it a different way from you or in a similar way to you? Do you think it's possible to become a better writer, just like a runner becomes a faster runner or like a basketball player becomes better at basketball? How would you go about doing that to become a better author? If a basketball player makes a mistake in a game, does it always make him a bad basketball player? Do the best players always do everything perfectly, or do they sometimes make mistakes? Should you expect of yourself to always be a perfect author, or is it okay for you to sometimes make mistakes? What can you do if you discover a mistake in your writing? Is it useful to sometimes search through your writings to find mistakes you can fix? Etc."

Edit: I personally find that when tutoring someone and you notice in real time that they are making a mistake or are just about to make a mistake, it's more effective to correct them in the form of a question rather than outright saying "that's wrong" or "that's incorrect" or similar.

E.g.:

Pupil, saying: "... and then I multiply nine by eight and get fifty-four ..." Here, I wouldn't say: "that's a mistake." I would rather say, "hmm... is that the case?" or "is that so?" or "wait a second, what did you say that was again?" or "hold on, can you repeat that for me?". It's a bit difficult for me to translate my question-phrases from Norwegian to English, because a lot of the effect is in the tone of voice. My theory for why this works is that when you say "that's wrong" or similar, you are more likely to express the emotion of disapproval at the student's actions or the student herself (and the student is more likely to read that emotion into you whether or not you express it). Whereas when you put it in the form of a question, the emotions you express are more of the form: mild surprise, puzzlement, uncertainty, curiosity, interest, etc. which are not directly rejecting or disapproving emotions on your part and therefore don't make the student feel bad.

After you do this a couple of times, the student becomes aware that every time you put a question to them, they are expected to double check that something is correct and to justify their conclusion.

Replies from: Qiaochu_Yuan↑ comment by Qiaochu_Yuan · 2016-12-20T20:37:00.660Z · LW(p) · GW(p)

I'm actually surprised that Cognitive Biases are focused on to a greater degree than Cognitive Distortions are in the rational community (based on google-phrase search on site:lesswrong.com), especially when Kahneman writes more or less in Thinking, Fast and Slow that being aware of cognitive biases has not made him that much better at countering them (IIRC) while CBT techniques are regularly used in therapy sessions to alleviate depression, anxiety, etc. Sometimes as effectively as in a single session.

The concept of cognitive biases is sort of like training wheels; I continue teaching people about them (at SPARC, say) as a first step on the path to getting them to recognize that they can question the outputs of their brain processes. It helps make things feel a lot less woo to be able to point to a bunch of studies clearly confirming that some cognitive bias exists, at first. And once you've internalized that things like cognitive biases exist I think it's a lot easier to then move on to other more helpful things, at least for a certain kind of a person (like me; this is the path I took historically).

comment by Viliam · 2017-01-18T15:36:46.050Z · LW(p) · GW(p)

There is a group (not CFAR) that allegedly uses the following tactics:

1) They teach their students (among other things) that consistency is good, and compartmentalization is bad and stupid.

2) They make the students admit explicitly that the seminar was useful for them.

3) They make the students admit explicitly that one of their important desires is to help their friends.

...and then...

4) They create strong pressure on the students to tell all their friends about the seminar, and to make them sign up for one.

The official reasoning is that if you want to be consistent, and if you want good things to happen to your friends, and if the seminar is a good thing... then logically you should want to make your friends attend the seminar. And if you want to make your friends attend the seminar, you should immediately take an action that increases the probability of that, especially if all it takes is to take your phone and make a few calls!

If there is anything stopping you, then you are inconsistent -- which means stupid! -- and you have failed at the essential lesson that was taught to you during the previous hours -- which means you will keep failing at life, because you are a compartmentalizing loser, and you can't stop being one even after the whole process was explained to you in great detail, and you even paid a lot of money to learn this lesson! Come on, don't throw away everything, pick up the damned phone and start calling; it is not that difficult, and your first experience with overcoming compartmentalization will feel really great afterwards, trust me!

So, what exactly is wrong about this reasoning?

First, when someone says "A implies B", that doesn't mean you need to immediately jump and start doing B. There is still an option that A is false; and an option that "A implies B" is actually a lie. Or maybe "A implies B" only in some situation, or only with certain probability. Probabilistic thinking and paying attention to detail are not the opposite of consistency.

Second, just because something is good, it is not necessarily the best available option. Maybe you should spend some time thinking about even better options.

Third, there is a difference between trying to be consistent, and believing in your own infallibility. You are allowed to have probabilistic beliefs, and to admit openly that those beliefs are probabilistic. That you believe that with probability 80% A is true, but you also admit the possibility that A is false. That is not an opposite of consistency. Furthermore, you are allowed to take an outside view, and admit that with certain probability you are wrong. That is especially important in calculating expected utility of actions that strongly depend on whether you are right or wrong.

Fourth, the most important consistency is internal. Just because you are internally consistent, it doesn't mean you have to explain all your beliefs truthfully and meaningfully to everyone, especially to people who are obviously trying to manipulate you.

...but if you learned about the concept of consistency just a few minutes ago, you probably don't realize all this.
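The third point can be made concrete with a small expected-utility calculation. This is only a sketch: the 80% credence is the figure used above, while the payoff numbers and the names `expected_utility`, `call_friends`, and `do_nothing` are hypothetical, chosen purely to show how the chance of being wrong can dominate the decision.

```python
def expected_utility(p_true, utility_if_true, utility_if_false):
    """Expected utility of an action whose payoff depends on whether belief A is true."""
    return p_true * utility_if_true + (1.0 - p_true) * utility_if_false


# Belief A: "the seminar is good for my friends", held with 80% credence.
p_seminar_is_good = 0.8

# Hypothetical payoffs: a modest gain if A is true, a large loss
# (money, trust, friendships) if A is false.
call_friends = expected_utility(p_seminar_is_good, 10.0, -100.0)

# Not calling costs nothing either way in this toy model.
do_nothing = expected_utility(p_seminar_is_good, 0.0, 0.0)

best_action = "call friends" if call_friends > do_nothing else "do nothing"
print(call_friends, do_nothing, best_action)
```

With these made-up payoffs, an action can have negative expected utility even though you think A is probably true, so declining to act is not inconsistent with believing A.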

Replies from: Qiaochu_Yuan↑ comment by Qiaochu_Yuan · 2017-01-19T04:56:12.536Z · LW(p) · GW(p)

I would describe the problem as a combination of privileging the hypothesis and privileging the question. First, even granted that you want to both be consistent and help your friends, it's not clear that telling them about the seminar is the most helpful thing you can do for your friends; there are lots of other hypotheses you could try generating if you were given the time. Second, there are lots of other things you might want and do something about wanting, and someone's privileging the question by bringing these particular things to your attention in this particular way.

This objection applies pretty much verbatim to most things strangers might try to persuade you to do, e.g. donate money to their charity.

comment by Bobertron · 2016-12-20T23:05:47.027Z · LW(p) · GW(p)

Interesting article. Here is the problem I have: In the first example, "spelling ocean correctly" and "I'll be a successful writer" clearly have nothing to do with each other, so they shouldn't be in a bucket together and the kid is just being stupid. At least on first glance, that's totally different from Carol's situation. I'm tempted to say that "I should not try full force on the startup" and "there is a fatal flaw in the startup" should be in a bucket, because I believe "if there is a fatal flaw in the startup, I should not try it". As long as I believe that, how can I separate these two and not flinch?

Do you think one should allow oneself to be less consistent in order to become more accurate? Suppose you are a smoker and you don't want to look into the health risks of smoking, because you don't want to quit. I think you should allow yourself in some situations to both believe "I should not smoke because it is bad for my health" and to continue smoking, because then you'll flinch less. But I'm fuzzy on when. If you completely give up on having your actions be determined by your beliefs about what you should do, that seems obviously crazy and there won't be any reason to look into the health risks of smoking anyway.

Maybe you should model yourself as two people. One person is rationality. It's responsible for determining what to believe and what to do. The other person is the one that queries rationality and acts on its recommendations. Since rationality is a consequentialist with integrity, it might not recommend quitting smoking, because then the other person will stop acting on its advice and stop giving it queries.

Replies from: Vaniver, Kaj_Sotala↑ comment by Vaniver · 2016-12-22T18:47:56.966Z · LW(p) · GW(p)

the kid is just being stupid

"Just being stupid" and "just doing the wrong thing" are rarely helpful views, because those errors are produced by specific bugs. Those bugs have pointers to how to fix them, whereas "just being stupid" doesn't.

I think you should allow yourself in some situations to both believe "I should not smoke because it is bad for my health" and to continue smoking, because then you'll flinch less.

I think this misses the point, and damages your "should" center. You want to get into a state where if you think "I should X," then you do X. The set of beliefs that allows this is "Smoking is bad for my health," "On net I think smoking is worth it," and "I should do things that I think are on net worth doing." (You can see how updating the first one from "Smoking isn't that bad for my health" to its current state could flip the second belief, but that is determined by a trusted process instead of health getting an undeserved veto.)

Replies from: fubarobfusco, Bobertron↑ comment by fubarobfusco · 2016-12-23T03:59:51.026Z · LW(p) · GW(p)

"Just being stupid" and "just doing the wrong thing" are rarely helpful views, because those errors are produced by specific bugs. Those bugs have pointers to how to fix them, whereas "just being stupid" doesn't.

I'm guessing you're alluding to "Errors vs. Bugs and the End of Stupidity" here, which seems to have disappeared along with the rest of LiveJournal. Here's the Google cached version, though.

Replies from: Vaniver↑ comment by Bobertron · 2016-12-23T18:01:29.677Z · LW(p) · GW(p)

"Just being stupid" and "just doing the wrong thing" are rarely helpful views

I agree. What I meant was something like: If the OP describes a skill, then the first problem (the kid that wants to be a writer) is so very easy to solve that I feel I'm not learning much about how that skill works. The second problem (Carol) seems too hard for me. I doubt it's actually solvable using the described skill.

I think this misses the point, and damages your "should" center

Potentially, yes. I'm deliberately proposing something that might be a little dangerous. I feel my should center is already broken and/or doing more harm to me than the other way around.

"Smoking is bad for my health," "On net I think smoking is worth it," and "I should do things that I think are on net worth doing."

That's definitely not good enough for me. I never smoked in my life. I don't think smoking is worth it. And if I were smoking, I don't think I would stop just because I think it's a net harm. And I do think that, because I don't want to think about the harm of smoking or the difficulty of quitting, I'd avoid learning about either of those two.

ADDED: First meaning of "I should-1 do X" is "a rational agent would do X". Second meaning (idiosyncratic to me) of "I should-2 do X" is that "do X" is the advice I need to hear. should-2 is based on my (mis-)understanding of Consequentialist-Recommendation Consequentialism. The problem with should-1 is that I interpret "I should-1 do X" to mean that I should feel guilty if I don't do X, which is definitely not helpful.

↑ comment by Kaj_Sotala · 2016-12-22T18:32:59.739Z · LW(p) · GW(p)

In the first example, "spelling ocean correctly" and "I'll be a successful writer" clearly have nothing to do with each other,

If you think that successful writers are talented, and that talent means fewer misspellings, then misspelling things is evidence of you not going to be a successful writer. (No, I don't think this is a very plausible model, but it's one that I'd imagine could be plausible to a kid with a fixed mindset and who didn't yet know what really distinguishes good writers from the bad.)

comment by RomeoStevens · 2016-12-20T06:56:05.889Z · LW(p) · GW(p)

Monolithic goal buckets cause a cluster of failure modes I haven't fully explored yet. Let's say you have a common goal like 'exercise.' The single goal bucket causes means-substitution that causes you to not fully cover the dimensions of the space that are relevant to you e.g. you run and then morally license not lifting weights because you already made progress towards the exercise bucket. Because the bucket is large and contains a bunch of dimensions of value it induces a mental flinch/makes ugh fields easier to develop/makes catastrophizing and moralizing more likely. The single goal causes fewer potential means to be explored/brainstormed in the first place (there seems to be some setting that people have that tells them how many options goal like things need regardless of goal complexity). Lower resolution feedback from conceptualizing it as one thing makes training moving towards the thing significantly harder (deliberate practice could be viewed as the process by which feedback resolution is increased). Monolithic goals generally seem to be farther in construal than finer grained goals, which induces thinking about them in that farther construal mode (more abstract) which will hide important details that are relevant to making, say, TAPs about the thing which requires awareness of near mode stumbling blocks. Since they tend towards simplicity, they also discourage exploration, eg 'exercise->run' feels like matching construal levels, 'increase vo2 max->go find out more about how to increase vo2 max' also seems like matching construal levels and the second looks closer to a construct that results in actions towards the thing.

I think some cleaner handles around this cluster would be useful, interested in ideas on making it more crisp.

Meta: better tools/handles around talking about ontology problems would greatly reduce a large class of errors IMO. Programmers deal with this most frequently/concretely so figuring out what works for them and seeing if there is a port of some kind seems valuable. To start with a terrible version to iterate on: UML diagrams were about trying to capture ontology more cleanly. What is better?

comment by Alexei · 2016-12-20T04:48:14.965Z · LW(p) · GW(p)

I basically just try to do the "obvious" thing: when I notice I'm averse to taking in "accurate" information, I ask myself what would be bad about taking in that information.

Interestingly enough this is a common step in Connection Theory charting.

comment by entirelyuseless · 2016-12-20T14:16:22.623Z · LW(p) · GW(p)

I like this post, and the general idea that flinching away has a purpose is a good one, but rather than saying it is about protecting "the epistemology," I think it would be better simply to say that it has a goal, and that goal could be any number of things. That sounds bad because if the goal isn't truth, then you are flinching away from the truth for the sake of something other than truth. But what you're actually recognizing here is that this isn't always bad, because the goal that is preventing you from accepting the truth is actually something positive, and changing your mind at the moment might prevent you from reaching that goal. Whereas if you wait to think about it more, at some later time you may be able to both change your mind and achieve your goal.

It sounds like your technique is to try to figure out a way to do precisely that, both accept the truth and still achieve the goal which was impeding it. And this will often be possible, but not always; sometimes the truth really does have bad consequences that you cannot prevent. For example, losing my religion was especially difficult for me because I knew that I would lose most of my social life and a lot of respect from most people who know me, as well as (for concrete reasons concerning particulars that I won't go into) making large changes in my external form of life which I did not particularly desire. And those consequences simply could not be prevented.

comment by Fluttershy · 2016-12-20T09:47:26.061Z · LW(p) · GW(p)

I've noticed that sometimes, my System 2 starts falsely believing there are fewer buckets when I'm being socially confronted about a crony belief I hold, and that my System 2 will snap back to believing that there are more buckets once the confrontation is over. I'd normally expect my System 1 to make this flavor of error, but whenever my brain has done this sort of thing during the past few years, it's actually been my gut that has told me that I'm engaging in motivated reasoning.

comment by DPiepgrass · 2023-09-26T00:11:53.273Z · LW(p) · GW(p)

The teacher looks a bit apologetic, but persists: “‘Ocean’ is spelt with a ‘c’ rather than an ‘sh’; this makes sense, because the ‘e’ after the ‘c’ changes its sound…”

I like how true-to-life this is. In fact it doesn't make sense, as 'ce' is normally pronounced with 's', not 'sh', so the teacher is unwittingly making this hard for the child. Many such cases. (But also many cases where the teacher's reasoning is flawless and beautiful and instantly rejected.)

This post seems to be about Conflation Fallacies (especially subconscious ones) rather than a new concept involving buckets, so I'm not a big fan of the terminology, but the discussion is important & worthwhile so +1 for that, though it seems like a better title would be '"Flinching away from truth" is often caused by internal conflation' (or by "bucket errors", if you like).

Replies from: AnnaSalamon↑ comment by AnnaSalamon · 2023-09-26T23:23:07.810Z · LW(p) · GW(p)

I agree, '"Flinching away from truth" is often caused by internal conflation' would be a much better title -- a more potent short take-away. (Or at least one I agree with more after some years of reflection.) Thanks!

comment by alexsloat · 2017-02-25T16:37:36.037Z · LW(p) · GW(p)

There's also sometimes an element of "I don't care about that stuff, I want you to deal with this thing over here instead" - e.g., "Don't worry about spelling, I'll clean that up later. What do you think of the plot?". Even if the criticism is correct, irrelevant criticism can reduce the relevant information available. This can actually make the bucket problem worse in some cases, such as if you spend so long editing spelling that you forget to talk about the things that they did right.

The best way to split someone else's buckets is often to give explicitly different comments on different parts of the bucket, to encourage a division in their head. You can even do that yourself, though it takes a lot more self-awareness.

comment by contravariant · 2016-12-31T19:13:18.225Z · LW(p) · GW(p)

It seems to me like it's extremely hard to think about sociology, especially relating to policies and social justice, without falling into this trap. When you consider a statistic about a group of people, "is this statistic accurate?" is put in the same bucket as "does this mean discriminating against this group is justified?" or even "are these people worth less?" almost instinctively. Especially if you are a part of that group yourself. Now that you've explained it that way, it seems that understanding that this is what is going on is a good strategy to avoid being mindkilled by such discussions.

Though, in this case, it can still be a valid concern that others may be affected by this fallacy if you publish or spread the original statistic, so if it can pose a threat to a large number of people it may still be more ethical to avoid publicizing it. However that is an ethical issue and not an epistemic one.

comment by Bitnotri · 2016-12-21T20:33:39.400Z · LW(p) · GW(p)

In the spirit of Epistemic Effort, could you tell us how long it took you to form these ideas and to write this post?

comment by [deleted] · 2016-12-21T04:35:36.160Z · LW(p) · GW(p)

I've been doing this with an interpersonal issue. I guess that's getting resolved this week.

Replies from: ThoughtSpeed

↑ comment by ThoughtSpeed · 2017-04-25T22:06:42.283Z · LW(p) · GW(p)

Did it get resolved? :)

comment by thrivemind · 2020-05-29T15:39:17.730Z · LW(p) · GW(p)

I wonder if this could be formalized in terms of Bayesian belief networks. Being able to split nodes, or delete deprecated ones, or add entirely new ones, in order to represent improved ontologies would seem like it'd have extremely valuable applications.

comment by Sengachi · 2018-02-03T06:37:51.222Z · LW(p) · GW(p)

Hmm. I don't think it's not useful to practice looking at the truth even when it hurts. For instance with the paperwork situation, it could be that not fixing the paperwork even if you recognize errors in it is something you would see as a moral failing in yourself, something you would be averse to recognizing even if you allowed yourself to not go through the arduous task of fixing those mistakes. Because sometimes the terminal result of a self-evaluation is reducing one's opinion of oneself, being able to see painful truths is a necessary tool to make this method work properly.

That said, I do think this is a much more actionable ritual than just "look at the painful thing". It also serves better as a description of reality, encompassing not just why certain truths are painful, but also how they become painful. It establishes not just a method for coping with painful truths and forcing confrontation with them, but also for establishing mental housekeeping routines which can prevent truths from becoming painful in the first place.

This has been a topic I started thinking about on my own some months ago (I even started with the same basic observation about children and why they sometimes violently reject seemingly benign statements). But I think my progress will be much improved with a written document from someone else's perspective which I can look at and evaluate. Thank you very much for writing this up. I really appreciate it.

comment by Dr_Manhattan · 2017-01-01T13:29:14.512Z · LW(p) · GW(p)

and that many (most?) mental flinches are in some sense attempts to avoid bucket errors

maybe better as

and that many (most?) mental flinches are in some sense attempts to avoid imagined consequences of bad reasoning due to bucket errors

emphasizing "avoiding consequences" vs "avoiding bucket errors"

comment by Robin · 2016-12-20T13:44:31.235Z · LW(p) · GW(p)

Interesting article. But I do not see how the article supports the claim its title makes.

I think there's a connection between bucket errors and Obsessive Compulsive Disorder.

Replies from: PaulinePi

↑ comment by PaulinePi · 2017-01-21T16:51:04.848Z · LW(p) · GW(p)

Well, it applies to the article... but also to cases in which one variable is actually related to the theory, rather than falsely related this time. You do reject the new information to protect your theory.

To the second point: What makes you think that? And on which point do you think it accesses? Do you think OCD prevents people from incorporating new information in general, or does it increase the chance of two variables ending up in "one bucket" that are not actually related (probably not in general, but in one aspect, like cleanliness or such)?

comment by Tyrrell_McAllister · 2017-01-03T22:35:54.028Z · LW(p) · GW(p)

[Reposted as a discussion post]