Analogies between Software Reverse Engineering and Mechanistic Interpretability

post by Neel Nanda (neel-nanda-1), Itay Yona (itay-yona) · 2022-12-26T12:26:57.880Z · LW · GW · 6 commentsThis is a link post for https://www.neelnanda.io/mechanistic-interpretability/reverse-engineering

Contents

My Takeaways Call Notes None 6 comments

These are notes taken during a call with Itay Yona, an expert in software/hardware reverse engineering (SRE). Itay gave me an excellent distillation of key ideas and mindsets in the field, and we discussed analogies/disanalogies to mechanistic interpretability of neural networks. I’m generally very excited to learn about other fields of study that reverse engineer complex systems, and what relevant insights they may have (SRE, neuroscience, systems biology, etc). All mistakes are mine, and all insights are his!

My Takeaways

- The underlying mindset actually feels pretty analogous!

- I find it super interesting that they also think a lot about motifs (weird patterns and phenomena that only occur in specific contexts), and that these are often the first hook into understanding something weird and that you can then work backwards.

- (Not to be confused with the SRE use of hooking)

- Also interesting that they also often focus on the inputs and outputs of the software as the starting point, to get a hook in, and then move on from there.

- It's very key to have a deep, gears-level model of the system you're working with (how does a CPU work, how are things represented in memory, the stack, registers, etc)

- I find it super interesting that they also think a lot about motifs (weird patterns and phenomena that only occur in specific contexts), and that these are often the first hook into understanding something weird and that you can then work backwards.

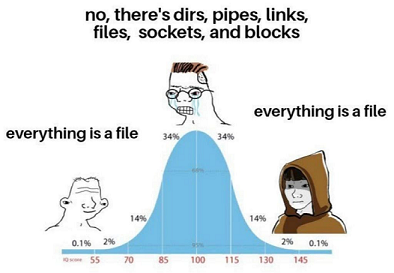

- The distinction between "newbies get caught up trying to understand every detail, experts think in higher-level abstractions, make educated guesses, and only zoom in on the details that matter" felt super interesting and surprising to me.

- My attempt to translate it into mechanistic interpretability is that (if it is analogous):

- There are certain principles and patterns by which networks learn, that we can identify and understand.

- We likely will understand these by deeply reverse engineering specific parts of systems (especially toy systems) and digging into the details. But the goal here is to build intuitions and mental models, and a sense for how models work as a whole.

- Once we have these intuitions and some solid grounding in what we do understand well, the best mindset for reverse engineering an unfamiliar system is to be less rigorous and more intuitive. Make educated guesses, look for partial evidence for or against hypotheses, think at a high-level and somewhat abstract mode about the system, and only zoom in on a specific part of the system to deeply reverse engineer once you've identified what to prioritise.

- I have no idea if it is analogous, but that mindset aligns a fair bit with my intuitions about MI (though I consider the field to be much more in the "building intuitions by deeply engineering things" phase lol)

- As Lawrence Chan notes [AF(p) · GW(p)], this is likely an example of a general pattern, where newbies (1) reason high-level in a very ungrounded way, (2) dig really rigorously into the details constantly and (3) build intuition that's actually grounded, and return to reasoning on a high-level. And that if you want to get to 3, you need to do a lot of digging through details in stage 2 first, while experts can make the mistake of recommending skipping to 3 (which in practice puts people at 1)

- My attempt to translate it into mechanistic interpretability is that (if it is analogous):

- I'm surprised at the emphasis on prioritisation, and identifying which part of the software you care about. My mental picture was that the goal was to fully de-compile things to source code, but it sounds like that's rarely the goal and is extremely hard.

- But this aligns with my intuitions that a lot of what I want to do with a network is to localise the parts that are relevant to a specific task.

- One approach to MI research that seems natural from a SRE perspective: Do extensive work reverse engineering toy models, and try to deeply understand the circuits there. Then, try to distill out motifs and find (ideally automated) tools to detect similar circuits in bigger toy models/real models.

- Induction heads seem like a successful example of this, and I’m very curious about whether this works for my modular addition algorithm [AF · GW] or a toy model of superposition!

- If this works, it suggests that an approach to MI could be:

- Identify a behaviour we care about/want to find a circuit for

- Train a toy model to do this, that tries to be as simple as possible while being a good simulation of the real model

- Study how the behaviour is implemented

- Distill out the core patterns and fingerprints of this behaviour

- Scan the real network for these, and use this as (non-rigorous) evidence for what’s going on.

- I’m biased, because this is how I already think about MI, but it’s nice to have some external validation!

For more discussion on similarities, see Chris Olah’s essay Mechanistic Interpretability, Variables, and the Importance of Interpretable Bases

Call Notes

- Background:

- SRE = Software Reverse Engineering, the study of decompiling a program binary to the actual code.

- Program binary -> assembly is easy, so it's basically about reverse engineering assembly.

- Program files are enormous, want to understand what it's doing as much as you can, while translating as few instructions as possible

- Can be a few MB to 100s of MB in file size, rarely KB

- High-level: - we are not trying to be really rigorous or fully reverse engineer things, this is a massive waste of time. We are using the scientific method. We have strong priors, make educated guesses, and the goal of our reverse engineering is to get out a few bits of data that narrows down our educated guesses.

- Key challenge: - we want to find a specific function in the enormous file. The way we do this by identifying which part of the file is relevant.

- Meta point: The goal is not to decompile the entire program. It's to identify the specific part of it that you care about understanding, or to zoom in on a specific vulnerability, or to figure out what it does at a high-level, etc. You need to prioritise and be intentional, not to dig into every single last detail.

- Often you care about specific details of a part of the software and how they deal with individual bits - does the function take in shorts vs longs, how does it cast, etc. These are often the details that lead to vulnerabilities, which is a lot of the goal here.

- Main technique: Run the code a bunch on a bunch of inputs, get a black box sense for what it does. Use this to build intuitions about the program.

- Form hypotheses about what could be going on internally, and use this black box operation to test and validate them. Try to break things, and look for inputs that could distinguish between your hypotheses

- Try to get into the mindset of the programmer who wrote it

- He often thinks in C and classes, rather than in terms of assembly. You want to be as abstract as is reasonably possible without losing sight of the problem.

- Specifics of registers etc are often not a helpful way to think about it.

- You ultimately want to think about things as the programmer would have thought about things. The compiler and OS can often add a lot of irrelevant complexity. If you think in terms of assembly you'll need to spend a lot of time engaging with these, but if you think in C you can abstract a lot away

- This is both about "what is a reasonable way to implement a behaviour" or "there are many ways to implement this behaviour, which one might the programmer have chosen?"

- We can rule out possibilities and explore by playing with the software, look at what triggers errors, weird edge cases, etc.

- He often thinks in C and classes, rather than in terms of assembly. You want to be as abstract as is reasonably possible without losing sight of the problem.

- Meta point: The goal is not to decompile the entire program. It's to identify the specific part of it that you care about understanding, or to zoom in on a specific vulnerability, or to figure out what it does at a high-level, etc. You need to prioritise and be intentional, not to dig into every single last detail.

- Core mindset: His core underlying philosophy is about looking for shortcuts.

- Newbies look for specifics. Key details of what variables more from where to where, details of memory, etc. Very bottom up, easy to waste a lot of time.

- Experts think in terms of abstractions - what is it doing, what are the variables, where are they, what is the code doing, etc. Top down, only zoom in once they've identified what's interesting.

- Analogy to chess: Newbies care a lot about whether a piece can be captured, is it protected, etc. As an expert, it's automatic, which lets us build better abstractions, and we can reason about strategy on a higher level.

- To be a good SRE, understanding registers, loops, etc is important. But when you understand how to do this, it's enough.

- But a common mistake is to never switch to top down. This is one of the best forms of value a mentor gives, showing the worth of educated guesses, rapid moving on, skimming at a high level until you find the part worth zooming in on.

- People often learn this by pair programming, and seeing that a mentor is willing to not be a perfectionist and move on.

- But a common mistake is to never switch to top down. This is one of the best forms of value a mentor gives, showing the worth of educated guesses, rapid moving on, skimming at a high level until you find the part worth zooming in on.

- Localising: How do I localise which part of the code matters?

- High-Level - look for motifs in the code, and look at how it interfaces with external things (eg web or user interface)

- Big disanalogy to neural networks: In a network, finding the representations is a lot of effort and very hard. But in software, we mostly care about how it represents the inputs, which are very easy and standard.

- Eg, the software acts on an image, and we know there are some standard operations - applying a fourier transform, storing the height and width, de-compression, etc.

- Example: If we know this is happening, then the code will be doing floating point multiplication. This is an unusual motif (rarely occurs in other contexts) and is easy to identify from the code, and a significant hint that de compression is happening

- Underlying point: There’s a lot of weird crap that gets put into the binary from the compiler, or standard code from the underlying libraries/OS. We want to sift through for the parts specific to this program and the behaviour we care about.

- This means that if we see a big block of code that does floating point multiplication, and it's called by parts of code that handles input images, it's very likely to be de-compression. On its own, being called by the input handling code is only weak evidence, but combined with floating point multiplication and our priors, this is strong evidence

- Summary: There is a special motif (floating point arithmetic) which is only used in some specific functional context. If you recognise that this is happening, this is a major hint about what’s going on!

- Another type of work is using a debugger - this is dynamic analysis, not static analysis.

- We can look at what happens when the part of the code inside the de-compression algorithm is being run, and then look at the call stack. This can give us hints about what those functions do! Eg looking for user input.

- I love that the same ideas of static (analysing weights) vs dynamic (analysing the model on various inputs) analysis applies here!

- Example: We want to crack photoshop to not need a serial number. We know that an incorrect serial number creates a pop-up. We can add a breakpoint to every part of the system that creates a pop-up, because this requires an interface with the UI. By seeing which breakpoint triggers, we can identify which call to MessageBoxA (the actual function name!) is relevant. And by looking at the call stack, we can identify which parts of the code are relevant.

- A Practical workflow to balance between divergent, convergent and meta work:

- This is Itay’s specific workflow, and is neither SRE specific nor universal in SRE.

- Summary:

- Start the project by forming a creative mindmap. Start with your end-goal (eg finding a vulnerability in a specific system) and systematically break down the problem, think of as many in-roads as you can. Then prioritise and pick one to work on.

- Spend most of your in focused research mode, and just keep iterating on that sub-problem. Each day, set yourself a question, try to answer that question, and then pick the natural next question the next day.

- Predict how long this will take. When that time is up, move on to the next most promising thing

- Research diaries:

- At the start of the day, write out a question. By the end of the day, try to answer it.

- This hopefully unlocks more questions and threads, and you can iterate!

- Each day, you default to following your research diary. What questions now feel alive, dive into those, keep iterating.

- Mindmaps:

- Start with the fundamental goal, eg “get the system to think you’ve given it the right password”

- Branch out and brainstorm sub-goals, sub-sub-goals, etc.

- Prioritise and choose one to focus on - do a rough cost-effectiveness analysis

- Most of the time, you're in very focused mode and trying to answer a deep question. You use the mindmap to keep track of new ideas and creative thoughts without breaking focus.

- Return to the mindmap when things take longer than expected (even if you haven't run out of ideas!). Then pick the next promising thread

- Important: Be willing to go back to previously explored threads even if there are more threads you haven't explored yet.

- It's hard to tell if you're in a rabbit hole or the solution is nearby. Thinks it's hard to tell whether a thread is promising or not in the moment. You can get weak evidence from partial progress, but idk.

- The goal is not having an optimal algorithm, it's about being good enough. "Work on the most promising thing for X time, then move on to next most promising thing".

- As long as you have good time estimates, traversing the mindmap graph is like applying the A* Algorithm to it

- How to tell when you're in a rabbit hole? This is when it's most useful to have a mentor/rubber duck/break. There's no clear rules here, but with time and experience you develop taste for "there should be an easier solution, I've been on this for a while, let's switch".

- Implicitly, you should have a model of what doing this research looks like, how hard it is, how often you should make progress, etc. If you're repeatedly wrong/optimistic, you notice and update or pivot.

- How would you mentor someone to learn this workflow? Pretty easy, it's very concrete, you can just outline the algorithm for the mentee to follow.

- Concretely, take a mentee who’s stuck on a problem. Listen to what’s going on for them, repeat the approach of expanding the mindmap and being willing to return to previous threads. This basically seemed to be enough to get them unstuck and motivated again!

- Teaching: What would you put on a curriculum to make someone an expert SRE?

- Technical details:

- Static vs dynamic analysis (analyse binary vs analyse it on inputs)

- Teach common patterns:

- Eg De-compression has a lot of floating point multiplications, looking at inputs, changing things on the GUI, etc

- Give them toy problems to study with easy hooks in (ie patterns to give a starting point)

- Intermediate problems which don't have deliberate, obvious hooks in

- But there probably isn't something that has no hooks. The core principles of the field is that things can be understood! But it might be hard and obfuscating to access it.

- Move towards bigger systems. Don't just remove hints, but also obfuscate things.

- Eg code is partially encrypted, lots of distractor code, etc.

- What do you do here? Eh, not any general tips. But fundamentally, the computer can understand it, so it should be understandable, eg it must eventually be decrypted

- Mindset: Assume there is a shortcut. What is that shortcut? Use this to motivate your research.

- Technical details:

- Hard cases: Trying to reverse engineer a mysterious black box.

- This is a pretty in the weeds example. Might be interesting but feel free to skip. Another interesting example

- High Level takeaway: We need to rely on problem-solving principles even more, and focus on the constraints and bottlenecks of the system (eg, if it's accessing the internet, it must be doing that somehow)

- Example: We have a binary that we extract from our router. We know it's router-y but know nothing else

- It's not a classic case of software (like Photoshop) where we have documentation and know what the user interface is and what it should be doing. We know that it's doing router-related things, but not whether it's implemented with Linux or Windows, what language, what hardware, etc.

- Sometimes we can guess, eg "this is using ARM's architecture/implementation", but sometimes we don't know what it is or aren't familiar with it, and need to start from scratch.

- What do we do now? We still try to be a scientist! What do we know about what the software must be doing?

- Example: If it is a CPU, it must be having loops, accessing and moving memory, etc.

- Eg int manipulating commands may be 1 byte, other commands may be 2 bytes.

- Eg the code must be accessing registers, which must have locations represented internally

- The underlying goal is to look for any chink in its armor! Try to find any pattern. This gives you an insight into what's going. You can identify what some parts of the code are doing, and make progress from there.

- Different types of reverse engineering:

- To do web hacking, we don't even have access to the server-side code and binaries. We can see how it responds to various inputs. Eg put a backtick into a text box, if it creates a 500 error this suggests it's using SQL

- Hardware reverse engineering - interesting and is at the intersection. Like web bc it's black box, like software because we can break things, change temperature, etc:

- Example: CPUs will execute instructions even with insufficient power. They will just fail to do the power intensive operations

- Eg: Will fail to access memory, but can still add numbers. You can use this to hack a password, because it can't access what the password is, we can get in with all zeros

- A great talk Itay recommends

- The jargon for this is side-channel attacks

- Example: CPUs will execute instructions even with insufficient power. They will just fail to do the power intensive operations

- Sometimes operations take more or less depending on the input, by precisely measuring the time, we can figure it out.

- Example: runs through each byte of a string and returns at the first one that's correct. This means we can brute force the first character, then the second character, etc, because we can tell how many bytes we have correct.

6 comments

Comments sorted by top scores.

comment by LawrenceC (LawChan) · 2022-12-26T15:57:13.499Z · LW(p) · GW(p)

The distinction between "newbies get caught up trying to understand every detail, experts think in higher-level abstractions, make educated guesses, and only zoom in on the details that matter" felt super interesting and surprising to me.

I claim that this is 1) an instance of a common pattern that 2) is currently missing a step (the pre-newbie stage).

The general pattern is the following (terminology borrowed from Terry Tao):

- The pre-rigorous stage: Really new people don't know how ~anything works in a field, and so use high-level abstractions that aren't necessarily grounded in reality.

- The rigorous stage: Intermediate people learn the concrete grounding behind a field, but get bogged down in minutia.

- The post rigorous stage: Experts form correct high level abstractions informed by their understanding of the grounding, but still use the grounding when the high level abstractions break down.

I think that many experts mainly notice the 2->3 transition, but not the 1->2 one, and so often dissuade newbies by encouraging them to not work in the rigorous stage. I claim this is really, really bad, and that a solid understanding of the rigorous stage is a good idea for ~everyone doing technical work.

Here's a few examples:

- Terry Tao talks about this in math: Early students start out not knowing what a proof is and have to manipulate high-level, handwavy concepts. More advanced students learn the rigorous foundations behind various fields of math but are encouraged to focus on the formalism as opposed to 'focusing too much on what such objects actually “mean”'. Eventually, mathematicians are able to revisit their early intuitions and focus on the big picture, converting their intuitions to rigorous arguments when needed.

- The exact same thing is true in almost discipline with mathematical proofs, e.g. physics or theoretical CS.

- This happens in a very similar with programming as well.

- In many strategy games (e.g. Chess), you see crazy high level strategizing at the super low and high levels, while the middle levels are focused on improving technique.

- I'd also claim that something similar happens in psychology: freshmen undergrads come up with grand theories of human cognition that are pretty detached from reality, many intermediate researchers get bogged down in experimental results, while the good psychology researchers form high level representations and theories based on their knowledge of experiments (and are able to trivially translate intuitions into empirical claims).

↑ comment by Itay Yona (itay-yona) · 2022-12-27T22:19:45.257Z · LW(p) · GW(p)

I strongly agree! When you study towards RE it is critical to understand lots of details about how the machine works, and most people I knew were already familiar with those. They were lacking the skills of using their low-level understanding to actually conduct useful research effectively.

It is natural to pay much less attention to 1->2 phase since there are much more intermediate researchers than complete newbies or experts. It is interesting because when discussing with the intermediate researchers they might think they are discussing with person 1 instead of person 3.

Thanks you gave me something to think about :)

↑ comment by Neel Nanda (neel-nanda-1) · 2022-12-26T16:12:21.223Z · LW(p) · GW(p)

I like the analogy! I hadn't explicitly made the connection, but strongly agree (both that this is an important general phenomena, and that it specifically applies here). Though I'm pretty unsure how much I/other MI researchers are in 1 vs 3 when we try to reason about systems!

To be clear, I definitely do not want to suggest that people don't try to rigorously reverse engineer systems a bunch, and be super details oriented. Linked to your comment in the post.

comment by Florian Magin (fmagin) · 2022-12-27T12:46:05.192Z · LW(p) · GW(p)

Context: I have been working in IT Security with reverse engineering as my main interest for nearly 7 years, been interested in it for ~8.5 years, and have been working on automated reverse engineering at a research institute for 3 years. I have been vaguely interested in mechanistic interpretability for the last months and am strongly considering switching to it as a field of research, depending on how much of my RE skillset/experience transfers.

I think one topic that the article/viewpoint/Itay Yona are missing is how much of modern RE is only feasible because we have a few decades worth of tooling taking care of the aspects that are already possible to automate. The main industry tools IDA/Ghidra/Binary Ninja all have a roughly similar processing pipeline that consists of various steps. This is typically just called "auto analysis", and this can easily take a few hours for larger binaries, sometimes even more. I think the lessons and approaches from automated RE are much more important than the ones from manual RE, but to some extent this is personal bias, because I like automated RE a lot. But in any case manual, RE would not be feasible without some underlying automated RE that takes care of as much work as possible, to allow the human to focus on what is relevant, in a form that is as close to their level of abstraction as possible, while also being transparent, i.e. make it easily possible to switch to the underling levels of abstraction again, or even show them side by side.

Much of the work is generating various graph representations of the program, e.g. the Control Flow Graph, Data Flow Graphs, analysis based on Abstract Interpretation over some domain (e.g. types) and generating Abstract Syntax Trees that are more similar to C code in terms of the level of abstraction over the actual assembly, which makes it far easier for a human to quickly understand them. Those are then as the basis for further analysis to recover more high level constructs, and to aggregate and propagate information from various parts of the program.

I have the impression that some intermediate step/layer in this process might be a similar level of abstraction to "NNs as computational graphs", and that the steps that follow in (automated RE) might be fairly applicable to mechanistic interpretability, while the steps before this layer are far less directly useful for NNs.

Those next steps are then roughly to find patterns inside this graph that can be replaced with larger conceptual nodes, e.g. a complicated looking piece of instructions might simply be in-lined and highly optimized strcpy , i.e. copies a string from one memory location to another. The nice thing about software RE is that compilers will typically not inline functions to save space, but a NN will most likely not give us that luxury. "Induction Heads" might be the first step in exactly that direction, but I have to admit that I haven't had the time/energy to really look into this, so I can't confidently say this, and I might just be interpreting them in the mental framework I am already used to.

Overall, focusing on tools and automated RE is already a niche inside the somewhat niche field of RE, but I think it's crucial for mechanistic interpretability because it has to be scalable. A human looking small toy models is only interesting because we hope that larger models exhibit the same patterns/structure, that we can then automatically recover, to find higher level patterns that only emerge in a larger model, which can only be spotted as a pattern in the intermediate abstraction.

Replies from: itay-yona↑ comment by Itay Yona (itay-yona) · 2022-12-27T22:33:03.180Z · LW(p) · GW(p)

Thanks, that's a good insight. The graph representation of code is very different than automated decompiling like hex-rays in my opinion. I agree that graph representation is probably the most critical step towards a more high-level analysis and understanding. I am not sure why you claim it required decades of tools because since the dawn of computer-science turing-machines were described with graphs.

In any case this is an interesting point as it suggest we might want to focus on finding graph-like concepts which will be useful for describing the different states of a neural network computation, and later developing IDA-like tool :)

since we share similar backgrounds and aspiration feel free to reach out:

https://www.linkedin.com/in/itay-yona-b40a7756/

Replies from: fmagin↑ comment by Florian Magin (fmagin) · 2022-12-28T10:13:39.545Z · LW(p) · GW(p)

The graph representation of code is very different than automated decompiling like hex-rays in my opinion.

There are many different graph representations of code, some of them are crucial for automated decompiling, others probably aren't. So I'm not sure which one you are referring to here. And in the end, the result of the decompilation process is a graph (either a tree like the AST of the C-Code, but I admit that it is kinda nitpicky to call that a "Graph representation"[0]), or more of a true graph like Binary Ninjas High Level Intermediate Language (if displayed as a CFG with blocks consisting of High Level Intermediate Language instructions)

I am not sure why you claim it required decades of tools

I meant this is the sense of "modern RE is implicitly built on a few decades of research and engineering". Of course you could always use some kinds of graphs, but having good tools to do this for you massively accelerates the RE process. There is a reason IDA could just charge whatever they wanted before Ghidra and Binary Ninja came out: A good auto analysis and decompiler is a massive benefit that allows someone without much experience to do basic RE, and for an expert it still saves them a lot of time, because they can focus on higher level abstractions.

[0] Though you really start appreciating the difference in how those trees are internally represented, even if they look the same as Pseudo Code, when you want to work with them. E.g. the IDA C AST is a true AST that is useful for further transformations and analysis while the Ghidra Syntax Tree isn't even an Abstract Syntax Tree because it still contains information like white space tokens. So you would have to reparse this to a C AST before you can do anything useful with it, and this is a whole other rant arising from a side project of mine...