Open-Category Classification

post by TurnTrout · 2018-03-28T14:49:23.665Z · LW · GW · 6 commentsContents

Introduction Prior Work Penalizing Volume Formalization Tractable Approximations Black Box Analytic White Box Blessing of Dimensionality Future Directions Conclusion Bibliography None 6 comments

Introduction

If I present you with five examples of burritos, I don’t want you to pursue the simplest way of classifying burritos versus non-burritos. I want you to come up with a way of classifying the five burritos and none of the non-burritos that covers as little area as possible in the positive examples, while still having enough space around the positive examples that the AI can make a new burrito that’s not molecularly identical to the previous ones.

- AI Alignment: Why It's Hard and Where to Start

Consider the problem of designing classifiers that are able to reliably say "I don't know" for inputs well outside of their training set. In the literature, this problem is known as open-category classification [7]; a closely-related problem in AI safety is inductive ambiguity detection [4].

Solution of generalized inductive ambiguity detection could allow for agents who robustly know when to ask for clarification; in this post, we discuss the problem in the context of classification. Although narrower in scope, the solution of the classification subproblem would herald the arrival of robust classifiers which extrapolate conservatively and intuitively from their training data.

Obviously, we can't just train classifiers to "recognize" the class. One, this class isn't compact, and two, it doesn't make sense - it's a map/territory confusion (the label is a feature of the world model, not a meaningful ontological class). Let's decompose the concept a bit further.

Weight regularization helps us find a mathematically-simple volume in the input space which encapsulates the data we have seen so far. In fact, a binary classifier enforces a hyperplane which cleaves the entire space into two volumes in a way which happens to nearly-optimally classify the training data. This hyperplane may be relatively simple to describe mathematically, but the problem is that the two volumes created are far too expansive.

In short, we'd like our machine learning algorithms to learn explanations which fit the facts seen to date, are simple, and don't generalize too far afield. This is an example of conservatism:

Conservatism and conservative planning seems like it might directly tackle some standard concerns [in alignment] head-on and in a sufficiently-basic way to avoid loopholes, and might also be subject to those concerns. E.g.:

• Edge instantiation - if in full generality we don't go to the edge of the graph but try to stay in the center of what's already been positively classified, maybe we can avoid this.

• Unforeseen maximum - if we stick to things very similar to already-positively-classified instances, we won't automatically go into the unimagined parts of the graph.

• Context disaster - a sufficiently conservative optimizer might go on using options similar to previously-whitelisted ones even if large new sections of planning space opened up.

Prior Work

Taylor et al. provide an overview of relevant research [4]. Taylor also introduced an approach for rejecting examples from distributions too far from the training distribution [5].

Yu et al. employed adversarial sample generation to train classifiers to distinguish seen from unseen data, achieving moderate success [7]. Li and Li trained a classifier to sniff out adversarial examples by detecting whether the input is from a different distribution [3]. Once detected, the example had a local average filter applied to it to recover the non-adversarial original.

The Knows What It Knows framework allows a learner to avoid making mistakes by deferring judgment to a human some polynomial number of times [2]. However, this framework makes the unrealistically-strong assumption that the data are i.i.d.; furthermore, efficient KWIK algorithms are not known for complex hypothesis classes (such as those explored in neural network-based approaches).

Penalizing Volume

If we want a robust / classifier, we should indeed cleave the space in two, but with the vast majority of the space being allocated to . In other words, we're searching for the smallest, simplest volume which encapsulates the cat training data.

The smallest encapsulation of the training data is a strange, contorted volume only encapsulating the training set. However, we still penalize model complexity, so that shouldn't happen. As new examples are added to the training set, the classifier would have to find a way to expand or contract its class volumes appropriately. This is conceptually similar to version space learning.

This may be advantageous compared to current approaches in that we aren't training an inherently-incorrect classifier to prune itself or to sometimes abstain. Instead, we structure the optimization pressure in such a way that conservative generalization is the norm.

Formalization

Let be a function returning the proportion of input space volume occupied by non- classes: . We need some function which translates this to a reasonable penalty (as the proportion alone would be a rounding error in the overall loss function). For now, assume this function is .

We observe a datum whose ground truth label is . Given loss function , weights , classifier , complexity penalty function , and , the total loss is

Depending on the formulation of , may elect to misclassify a small amount of data to avoid generalizing too far. If more similar examples are added, exerts an increasing amount of pressure on , eventually forcing expansion of the class boundary to the data points in question. This optimization pressure seems desirable.

We then try to minimize expected total loss over the true distribution :

Tractable Approximations

Exhaustively enumerating the input space to calculate is intractable - the space of RGB images is of size . How do we measure or approximate the volume taken up by the non- classes?

Black Box

- Random image sampling wouldn't be very informative (beyond answering "is this class extending indefinitely along arbitrary dimensions?").

- Yu et al.'s results weakly suggest that adversarial approaches can approximate the hypersurface of the class boundaries [7].

- Bayesian optimization could deduce the hypersurface of the class boundaries, giving a reasonable estimate of volume.

- The continuous latent space of a variational autoencoder [1] could afford new approximation approaches for (more on this later).

Analytic

We do know the details of - how can we exploit this?

Decision trees offer a particularly simple solution. Consider a decision tree on a one grayscale pixel input space; the tree outputs if pixel value and otherwise. Then without having to run a single input through the tree, we find the proportion of the space taken up by non- classes to be .

This result generalizes to any decision tree over discrete variables; simply calculate in closed form how many possible inputs conform to the conditions for each leaf, and add them up. For an input space classified by a decision tree with nodes, we reduce the time complexity from to ; this is great, as it is almost always the case that .

Neural networks are a bit trickier. Work has been done on extracting decision trees from neural networks [6], but this requires training a new network to do so and the resulting trees may not have sufficient fidelity.

Since the output of a one hidden-layer neural network can be represented as the linear equation , we can, perhaps, simply fix () and (the layer's output values) to solve for (the input). Given such a solution, we might, in theory, be able to calculate the volume by integrating over the possible values for each classification. The universal approximation theorem shows that a one hidden-layer neural network (with perhaps an exponential number of nodes) can approximate any function.

All this is getting a bit silly. After all, what we really care about is the proportion of the input space taken up by non- classes; we don't necessarily need to know exactly which inputs correspond to each output.

White Box

Let's treat this as a prediction problem. Take randomly-generated images and run them through the first layer of the network, recording the values for the activation of node in the first layer (); assume we incur some approximation error due to having insufficiently sampled the true distribution. Derive a PDF over the activation values for each node.

Repeat this for each of the network layers, sampling input values from the PDFs for the previous layer's nodes. At the end of the network, we have a probabilistic estimate of the output class proportions.

However, this may only yield results comparable to random image sampling, as many node activations may not occur often for arbitrary data. While this would be descriptive of the input space as a whole, it would be unlikely to sample any images, for example.

Blessing of Dimensionality

In my opinion, the most promising approach involves abusing the properties of high-dimensional volumes; in particular, the well-known Curse of Dimensionality.

To illustrate, the volume of a ball in dimensions is

where is the radius of the ball and is the Gamma function.

For high-dimensional volumes, the overwhelming majority of points in the -ball are located in the very outer shell. How do we know this? For points uniformly distributed in a -dimensional unit ball (a ball with , the median distance from the closest data point to the origin is defined as

If we distribute points in the -dimensional unit ball, the closest point to the center has a median distance of . The vast, vast majority of the points we uniformly distributed find themselves at the outermost reaches of the ball. This is fantastic news (for mathematical and visual intuitions as to why, read this).

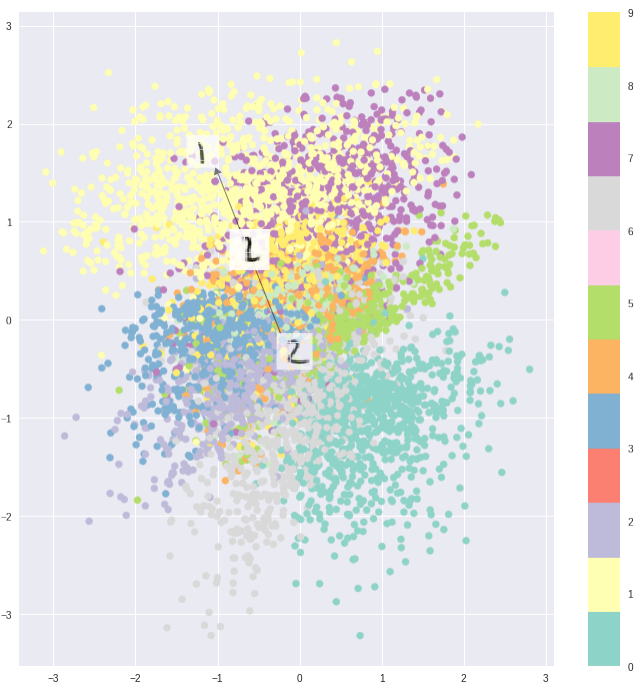

Train a variational autoencoder with a -dimensional latent space on the dataset . Then train a multilayer perceptron to classify using the latent representation of each image. This is the network to which we will apply the volume penalty. New images will be translated to the latent space by the trained encoder and then fed to for classification.

Variationally-encoded MNIST digits, with clustering pressure provided by reconstruction loss and density encouraged by KL loss.

Image credit: towardsdatascience.com

We're basically going to do high-dimensional lidar in the latent space to image the outer shell of 's non- classification volumes. Due to the blessing of dimensionality, we don't need to worry whether the inside of the volume is classified as or not - for high-dimensional latent spaces, it's a rounding error. Therefore, we need only search until we reach a non- classification shell; we could then start from the other direction to estimate the other side. After doing this for all dimensions, we have a set of points approximating the hypersurface of the non- classification volume.

The complexity of this seems to be , where is latent space dimensionality, is how many sampling operations are needed on average to reach the outer shell, and is the per-dimension sampling density (for example, points along an axis in a 3-dimensional space would entail pinging a grid of points). Although exponential in , this is already orders of magnitude more tractable than sampling the original high-dimensional input space. By sacrificing some accuracy, we may be able to improve the exponential term further (perhaps to or even a constant).

We should be able to provably bound our error as a function of the latent space dimensionality and the sampling density; due to the blessing of dimensionality, these bounds are likely to be sufficiently tight. Although increasing the dimensionality tightens the bound on the volume approximation error, it also increases compute time exponentially (as of this initial formulation).

This approach requires that the latent space have enough dimensions to allow for inputs to not be encoded to areas occupied by known classes. If this is not feasible, there are other architectural choices available. Note that we need not compute the exact proportion of -labeled inputs, but rather an approximation; therefore, as long as the latent space has a reasonable number of features, it likely doesn't need to encode all relevant features.

Future Directions

Extremely small input spaces allow for enumerative calculation of volume. By pitting a volume-penalizing classifier against its vanilla counterpart in such a space, one could quickly gauge the promise of this idea.

If the experimental results support the hypothesis, the obvious next step is to formulate tractable approximations of (ideally with provably-bounded error).

Conclusion

This proposal may be an intractable robust solution to the open-category classification problem, with several promising tractable approaches already apparent.

One of whom was my excellent deep learning professor.

This approach is inspired by the AI safety mindset in that the classifier strives to be conservative in extrapolating from known data.

Set underflow aside for the moment.

I've updated from my previous position that the i.i.d. assumption about future data implied by a latent space is disqualifying. Although the latent space's structure would indeed make assumptions, the space would still approximate the volumetric properties of the /non- portions of the input space for the current classifier. Ultimately, this is what we care about!

I'm sure there's a formal name for this technique, but I don't know it.

Due to the statistical properties of variational autoencoders, it's possible that this could be done very quickly using bounded binary search.

Unlike in the -ball example, the encoded points are distributed normally (as opposed to uniformly). This isn't necessarily directly relevant, as we're measuring with respect to the multilayer perceptron's input space - the latent space (which, after being discretized and bounded, has uniformly "distributed" points).

Bibliography

[1] D. P. Kingma and M. Welling. Auto-encoding variational bayes. 2016.

[2] L. Li, M.L. Littman, and T.J. Walsh. Knows what it knows: a framework for self-aware learning. 2008.

[3] X. Li and F. Li. Adversarial examples detection in deep networks with convolutional filter statistics. 2016.

[4] J. Taylor et al. Alignment for advanced machine learning systems. 2016.

[5] J. Taylor. Conservative classifiers. 2016.

[6] R. Krishnan, G. Sivakumar, and P. Bhattacharya. Extracting decision trees from trained neural networks. 1999.

[7] Y. Yu et al. Open-category classification by adversarial sample generation. 2017.

6 comments

Comments sorted by top scores.

comment by TurnTrout · 2018-03-28T16:32:00.642Z · LW(p) · GW(p)

I'd like to reassure everyone that this is a rather brief read (and not 100 minutes). Something strange is afoot with and the estimated read times.

Edit: no longer that brief of a read. Still not 100 minutes (although the read time seems to be fixed now).

Replies from: habryka4↑ comment by habryka (habryka4) · 2018-03-28T16:55:17.382Z · LW(p) · GW(p)

I jokingly proposed when we were coming up with the read times, that every equation should add 30 minutes to your expected reading time. And somehow we introduced a bug that did exactly that.

Replies from: TurnTrout↑ comment by TurnTrout · 2018-03-28T17:15:42.120Z · LW(p) · GW(p)

[Stephen Hawking] notes in [A Brief History of Time]'s acknowledgements that he was warned that for every equation in the book, the readership would be halved, hence it includes only a single equation: .Replies from: lolbifrons

↑ comment by lolbifrons · 2018-03-29T01:41:27.314Z · LW(p) · GW(p)

To think he could have had twice as many readers.

comment by romeostevensit · 2018-03-29T15:17:56.456Z · LW(p) · GW(p)

I really like the idea of a volume minimization function in dynamic tension with a complexity of shape minimization function and wonder what sorts of functions might mediate the tradeoff.

Replies from: TurnTrout↑ comment by TurnTrout · 2018-03-31T00:18:37.928Z · LW(p) · GW(p)

One thought I had was , but this has the problem of vanishing when the volumes are compact. I'm still not sure whether the relationship between and should be multiplicative or additive. I am fairly sure that shouldn't directly affect , as that would have lots of nasty failure modes (such as high complexities being justified by tiny, weird volumes).

edit: It should be additive, otherwise 0 total loss is achieved by classifying everything as .