Are coincidences clues about missed disasters? It depends on your answer to the Sleeping Beauty Problem.

post by Harlan · 2021-07-05T19:12:50.839Z · LW · GW · 5 comments

Contents: The Sleeping Beauty Problem · Halfer Perspective · Thirder Perspective · This Feels Counterintuitive · Conclusion

In April, Gwern posted this comment in response to slowed AI progress:

"It feels like AI is currently bottlenecked on multiple consecutive supplychain disruptions, from cryptocurrency to Intel's fab failures to coronavirus... A more paranoid man than myself would start musing about anthropic shadows and selection effects."

I’m that more paranoid person. After reading this, I became concerned that recent events could be related to a barely missed disaster that might be looming in the near future. After some more thought, though, I am mostly no longer concerned about this, and I think that I have identified a useful crux for determining whether to use observed coincidences as evidence of barely missed disasters. I’m sharing my thoughts here so that someone can point out my mistakes, and in case they help anyone else who is thinking about the same question.

Epistemic Status: I feel pretty sure about the main argument I’m making, but also anthropics is a confusing subject and I will not be surprised if there are multiple important things I'm missing.

If you accept that the Many Worlds interpretation of quantum mechanics is true (or that any type of multiverse exists), then it might be reasonable to expect to someday find yourself in a world where a series of unlikely deus ex machina events will conveniently have prevented you from dying. If you also accept that advanced AI poses an existential threat to humanity, then it might be concerning when there appears to be a convergence of recent, unlikely events slowing down AI progress. There is a reasonable case to be made that such a convergence has happened recently. Here are some things that have happened in the past year or so:

- OpenAI released GPT-3

- China released something similar to GPT-3

- A pandemic happened that hurt the economy and increased demand for consumer electronics, driving up the cost of computer chips

- Intel announced that it was having major manufacturing issues

- Bitcoin, Ethereum, and other coins reached an all-time high, driving up the price of GPUs

Is this evidence that something catastrophic related to AI happened recently in a lot of nearby timelines? Before we can answer that, we need to know whether we can ever use unlikely events in the past as evidence that disasters occurred in nearby timelines. This is not just an abstract, philosophical question: evidence about a narrowly avoided disaster could help us avoid similar disasters in the future. Unfortunately, the question seems to boil down to a notoriously divisive probability puzzle...

The Sleeping Beauty Problem

Sleeping Beauty volunteers to undergo the following experiment. On Sunday she is given a drug that sends her to sleep. A fair coin is then tossed just once in the course of the experiment to determine which experimental procedure is undertaken. If the coin comes up heads, Beauty is awakened and interviewed on Monday, and then the experiment ends. If the coin comes up tails, she is awakened and interviewed on Monday, given a second dose of the sleeping drug, and awakened and interviewed again on Tuesday. The experiment then ends on Tuesday, without flipping the coin again. The sleeping drug induces a mild amnesia, so that she cannot remember any previous awakenings during the course of the experiment (if any). During the experiment, she has no access to anything that would give a clue as to the day of the week. However, she knows all the details of the experiment.

Each interview consists of one question, “What is your credence now for the proposition that our coin landed heads?”

When Sleeping Beauty wakes up, what probability should she give that the coin landed heads? People mostly fall into two camps: some say p(heads)=½ and some say p(heads)=⅓.

Halfers argue that because a fair coin will land on heads ½ of the time, and Sleeping Beauty has not received any new information, the probability of heads is ½.

Thirders argue that Sleeping Beauty's awakening is a new piece of information, and because the coin flip will have been heads ⅓ of the time that Sleeping Beauty awakens, the probability is ⅓.
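The two camps can be read as two ways of counting the same experiment: per run of the experiment, or per awakening. The following is a minimal sketch of that reading using exact fractions. The function names and the `AWAKENINGS` table are illustrative assumptions, not part of the original problem statement, and the sketch does not settle which way of counting is correct.

```python
from fractions import Fraction

# Illustrative model (names are assumptions): each coin outcome has prior 1/2,
# and each listed day is one awakening under that outcome.
AWAKENINGS = {"heads": ["Monday"], "tails": ["Monday", "Tuesday"]}
COIN_PRIOR = Fraction(1, 2)


def halfer_heads():
    """Count per experiment: heads comes up in half of all runs."""
    return COIN_PRIOR


def thirder_heads():
    """Count per awakening: weight each outcome by how many awakenings it produces."""
    weights = {coin: COIN_PRIOR * len(days) for coin, days in AWAKENINGS.items()}
    return weights["heads"] / sum(weights.values())


print(halfer_heads())   # 1/2
print(thirder_heads())  # 1/3
```

Counting per experiment gives ½ and counting per awakening gives ⅓, which is where the two answers come from.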

I personally fall into the latter camp, but I am not going to attempt to contribute to the ongoing debate about which answer is correct. Instead, let’s see how we can use the Sleeping Beauty Problem to answer the question “can we use observed coincidences as evidence that a disaster occurred in nearby timelines?”

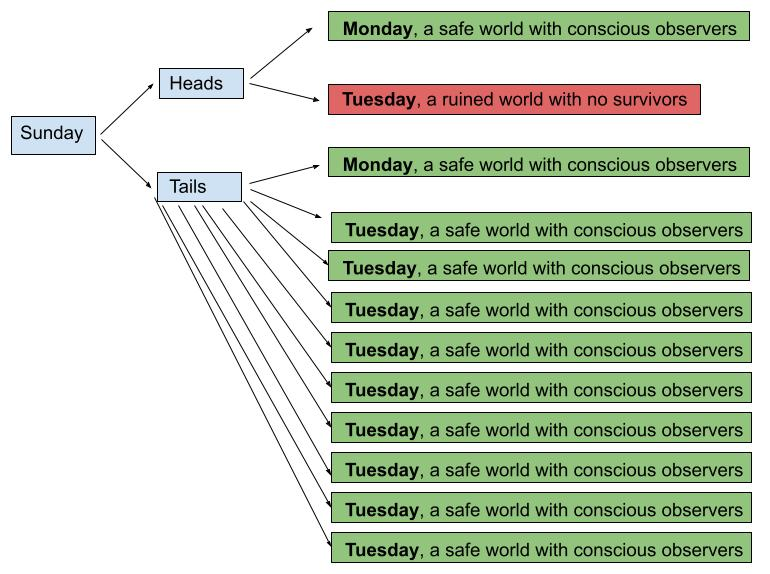

With a few tweaks, we can reframe the Sleeping Beauty Problem to represent the question. Think of a Heads coin flip as a world in which Tuesday is a catastrophic event with no conscious observers in it, and let Tails be a world in which Tuesday is perfectly safe and has the same number of observers as Monday. Suppose that Sleeping Beauty is awakened on a Monday and is told that it's Monday. The question "Can observed coincidences give evidence about disasters in nearby timelines" is equivalent to "Given that she knows it's Monday, can Sleeping Beauty update her belief that a Heads was flipped to something other than the baseline frequency of coins landing heads, 1/2?"

What is p(heads|Monday)?

Halfer Perspective

If Sleeping Beauty thinks that p(heads)=½, and then is informed that it’s Monday, this is the Bayesian calculation that she would use to update her credence that the coin landed heads:

p(heads|Monday) = p(Monday|heads) · p(heads) / p(Monday) = (1 · ½) / ¾ = ⅔

You can imagine that when Halfer Sleeping Beauty first wakes up, she assigns 50% of the probability mass to (Heads & Monday) and 25% each to (Tails & Monday) and (Tails & Tuesday). When (Tails & Tuesday) is eliminated, (Heads & Monday) is left with 50/75 = ⅔ of the remaining mass.

From this perspective, if Sleeping Beauty observes an event that seems to have prevented a world-ending disaster, she should use this as evidence in favor of the hypothesis that the world-ending disaster did take place in nearby timelines. And the rarer a potentially world-saving event is, the more likely it is that a world-ending event was narrowly missed. For example, consider an altered experiment where Sleeping Beauty wakes up 9 times on Tuesday when Tails is flipped, but only once on Mondays.

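Halfer Sleeping Beauty will still say that p(heads)=1/2 before learning that it's Monday, and then will make this Bayesian calculation after learning that it's Monday:

p(heads|Monday) = p(Monday|heads) · p(heads) / p(Monday) = (1 · ½) / (½ · 1 + ½ · 1/10) = (½) / (11/20) = 10/11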
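The Halfer update can be checked with exact fractions for any number of Tuesday awakenings. This is a minimal sketch, assuming (as in the 50% / 25% / 25% picture above) that a Halfer spreads the tails half of the probability mass evenly over all tails awakenings. The function name and its parameter are hypothetical.

```python
from fractions import Fraction


def halfer_posterior_heads(tuesday_awakenings):
    """p(heads | Monday) for a Halfer, with one Monday awakening per outcome.

    Assumption: under tails, the 1/2 prior mass is split evenly across the
    single Monday awakening and the given number of Tuesday awakenings.
    """
    p_heads = Fraction(1, 2)
    p_tails = Fraction(1, 2)
    p_monday_given_heads = Fraction(1)
    p_monday_given_tails = Fraction(1, 1 + tuesday_awakenings)
    p_monday = p_heads * p_monday_given_heads + p_tails * p_monday_given_tails
    return p_heads * p_monday_given_heads / p_monday


print(halfer_posterior_heads(1))     # 2/3
print(halfer_posterior_heads(9))     # 10/11
print(halfer_posterior_heads(1000))  # 1001/1002
```

Under this assumption, the more Tuesday awakenings there are under tails, the closer the Halfer's p(heads|Monday) gets to 1.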

I did read that there is a “Double-Halfer” solution to the Sleeping Beauty Problem, which claims that p(heads)=½ and p(heads|Monday)=½ are both true. I’m not sure how this argument works or how it gets away with not making any Bayesian update after learning that it is Monday. If anyone does understand this perspective and wants to explain it, I would be interested.

Thirder Perspective

If Sleeping Beauty thinks that p(heads)=⅓, and then is informed that it’s Monday, this is the Bayesian calculation that she would use to update her credence that the coin landed heads:

p(heads|Monday) = p(Monday|heads) · p(heads) / p(Monday) = (1 · ⅓) / ⅔ = ½

You can imagine that when Thirder Sleeping Beauty first wakes up, she assigns an equal 33.33…% probability mass to each of (Heads & Monday), (Tails & Monday), and (Tails & Tuesday). When (Tails & Tuesday) is eliminated, (Heads & Monday) and (Tails & Monday) are still the same size and each takes up 1/2 of the remaining mass.

Equivalently, Sleeping Beauty could use Nick Bostrom’s Self-Sampling Assumption:

“SSA: All other things equal, an observer should reason as if they are randomly selected from the set of all actually existent observers (past, present and future) in their reference class.”

When Sleeping Beauty learns that it is Monday, her reference class no longer includes the Sleeping Beauty who awakens on Tuesday. And there is exactly one Sleeping Beauty in each of (Heads & Monday) and (Tails & Monday), so she has an equal chance of being either of them.

From the Thirder perspective, Sleeping Beauty does update her credence that the coin landed heads after learning it's Monday, but the update only cancels out the evidence from waking up and brings her back to the epistemic state she had before the experiment began: fair coins land heads ½ of the time.

What if, again, Beauty wakes up 9 times on Tuesday when Tails is flipped, but only once on Mondays? In this case, Thirder Sleeping Beauty would say p(heads) = 1/11 (instead of ⅓) before learning it was Monday, and then use this Bayesian calculation after learning it’s Monday:

p(heads|Monday) = p(Monday|heads) · p(heads) / p(Monday) = (1 · 1/11) / (2/11) = ½

Applying the Self-Sampling Assumption again, there is still exactly one Sleeping Beauty in each of (heads & Monday) and (tails & Monday), regardless of what happens on Tuesday. From the Thirder perspective, no matter how rare a potentially disaster-avoiding event Sleeping Beauty witnesses, it can’t change her baseline estimate of how often that potentially avoided disaster occurs.
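The Thirder's result can be checked the same way for any number of Tuesday awakenings. This is a minimal sketch, assuming every awakening gets equal weight (one Monday awakening under heads, one Monday awakening plus the Tuesday awakenings under tails). The function name and parameter are hypothetical.

```python
from fractions import Fraction


def thirder_credences(tuesday_awakenings):
    """Return (p(heads), p(heads | Monday)) with every awakening weighted equally."""
    # Awakenings: heads -> 1 (Monday); tails -> 1 (Monday) + tuesday_awakenings.
    total_awakenings = 1 + (1 + tuesday_awakenings)
    p_heads = Fraction(1, total_awakenings)
    p_monday = Fraction(2, total_awakenings)  # one Monday awakening per outcome
    p_monday_given_heads = Fraction(1)
    p_heads_given_monday = p_monday_given_heads * p_heads / p_monday
    return p_heads, p_heads_given_monday


print(thirder_credences(1))     # (Fraction(1, 3), Fraction(1, 2))
print(thirder_credences(9))     # (Fraction(1, 11), Fraction(1, 2))
print(thirder_credences(1000))  # (Fraction(1, 1002), Fraction(1, 2))
```

The prior p(heads) shrinks as Tuesday awakenings are added, but p(heads|Monday) stays at ½ every time.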

This Feels Counterintuitive

To me, the Thirder position makes the most sense, intuitively and intellectually. However, it feels weird that even if Beauty is awakened 1000 times on Tuesdays after a tails flip, she can't use the fact that it's Monday to update p(heads) to be higher than 1/2. Let's say that before being told what day it is, Beauty makes a hypothesis that the coin flip landed heads. This hypothesis makes the prediction that the day is Monday. If her hypothesis that the coin landed heads is true, then her prediction that the day is Monday will be correct! And yet, according to the Thirder perspective or the self-sampling assumption, she cannot use her successful prediction to update p(heads) to anything different from her original belief about how often the coin lands heads. Max Tegmark mentions something similar in Our Mathematical Universe:

"So let's quite generally consider any multiverse scenario where some mechanism kills half of all copies of you each second. After twenty seconds, only about one in a million (1 in 2^20) of your initial doppelgängers will still be alive. Up until that point, there have been 2^20 +2^19 +...+ 4 + 2 + 1 = 2^21 second-long observer moments, so only one in two million observer moments remembers surviving for twenty seconds. As Paul Almond has pointed out, this means that those surviving that long should rule out the entire premise (that they're undergoing the immortality experiment) at 99.99995% confidence. In other words, we have a philosophically bizarre situation: you start with a correct theory for what's going on, you make a prediction for what will happen (that you'll survive), you observationally confirm that your prediction was correct, and you then nonetheless turn around and declare that the theory is ruled out!"
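The arithmetic in the quoted passage can be checked directly. This is a minimal sketch with illustrative variable names. Note that the sum of observer moments is exactly 2^21 − 1, which the quote rounds to 2^21.

```python
from fractions import Fraction

seconds = 20

# Fraction of initial copies still alive after 20 halvings: 1 in 2^20.
surviving_fraction = Fraction(1, 2**seconds)

# Second-long observer moments, in units of the final survivor count:
# 2^20 + 2^19 + ... + 2 + 1.
observer_moments = sum(2**k for k in range(seconds + 1))

# Confidence figure from the quote, using the rounded total of 2^21.
confidence = 1 - Fraction(1, 2**(seconds + 1))

print(surviving_fraction.denominator)  # 1048576
print(observer_moments)                # 2097151
print(f"{float(confidence):.5%}")      # 99.99995%
```

So roughly one in two million observer moments remembers surviving for twenty seconds, matching the figures in the quote.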

What's going on here?

I think that the problem is a sort of illusion created by my brain's insistence that I am a single, continuous individual following a single, unbranching path through time. In the 1000 awakenings on Tuesday scenario, it's a mistake to think of a Monday awakening as unlikely to happen. Yes, you can only experience one awakening at a time. And yes, if you picked any single awakening it would be unlikely to be a Monday awakening. But the single Monday awakening *always happens on every coin flip* even if you find yourself in one of the Tuesday awakenings. If you find yourself in a Monday awakening, you know it would have happened either way, and you have no way of knowing what will happen tomorrow on Tuesday.

So for me, the Thirder position and the self-sampling assumption make enough sense that I believe p(heads|Monday) = ½. This feels counterintuitive and strange to me, but when I remove the idea that I am a single, continuous individual, and think of myself as an observer moment in a tree of other observer moments, the strangeness mostly disappears.

Of course, this all hinges on my belief that the answer to the original Sleeping Beauty problem is ⅓. If you take the Halfer perspective, you might draw a different conclusion. If you take the Double-Halfer position, then you will have the same conclusion but you will have arrived at it from a different line of reasoning.

Conclusion

I think that the Thirder answer makes the most sense in the Sleeping Beauty Problem. Because of that assumption and the reasoning above, I am now fairly confident that observed coincidences cannot be used as evidence that a world-ending disaster is near. I have mixed feelings about this conclusion, though. It's a relief that recent coincidences surrounding AI progress are not evidence that we're on the brink of a world-ending event. To be clear, we still might be on the brink of a world-ending event, but I'm not going to update my credence of that because of a string of coincidences that might have slowed AI progress.

On the other hand, it sure would be useful if we could use statistical anomalies in the past to identify specific disasters that we barely missed. So I'm a little disappointed that this isn't the case in my model of the universe.

If I strongly believed that the answer to the original Sleeping Beauty Problem was ½, then I would be vigilant for coincidences. A string of weird coincidences that have no common cause, yet all happen to work against a specific, potentially world-ending event, would send the strongest signal and could serve as a sort of alarm bell that disaster may be near.

Also, it's important for me to note that this post is not an assessment of whether the idea of Anthropic Shadow is useful in general. We may not be able to use specific anomalies to identify specific disasters, but Nick Bostrom has pointed out that if we are using the past to predict the frequency of events, we will systematically undercount the likelihood of events that end the world.

5 comments

comment by Charlie Steiner · 2021-07-06T12:32:30.159Z

My go-to reference for this reasoning is Stuart's post: https://www.alignmentforum.org/posts/4ZRDXv7nffodjv477/anthropic-reasoning-isn-t-magic

comment by dadadarren · 2021-07-06T20:02:48.110Z

Based on my experience, most halfers nowadays are actually double-halfers. However, not everyone agrees on why. So I am just going to explain my approach.

The main point is to treat perspectives as fundamental and recognize that in first-person reasoning, indexicals such as "I, here, now" are primitive. They have no reference class other than themselves. So self-locating probabilities such as "the probability of now being Monday" are undefined. This is why there is no Bayesian update.

This also explains other problems of halferism, e.g. robust perspectivism: two parties sharing all information can give different answers to the same probability question. It is also immune to the counter-arguments against double-halfers pointed out by Michael G. Titelbaum.

Replies from: JBlack

comment by [deleted] · 2021-07-06T04:47:12.900Z

"A more paranoid man than myself would start musing about anthropic shadows and selection effects."

Why paranoid? I don't quite get the argument here; doesn't anthropic shadow imply we have nothing to worry about (except for maybe hyperexistential risks) since we're guaranteed to be living in a timeline where humanity survives in the end?

- A pandemic happened that hurt the economy and increased demand for consumer electronics, driving up the cost of computer chips

- Intel announced that it was having major manufacturing issues

- Bitcoin, Ethereum, and other coins reached an all-time high, driving up the price of GPUs

I don't see much of a coincidence here. The pandemic and crypto boom are highly correlated events; it's hardly surprising that deflationary stores of value do well in times of crisis, and gold also hit an all-time high during the same period. Besides, the last crypto boom in 2017 didn't seem to have slowed down investment in deep learning. Intel has never been a big player in the GPU market, and CPU prices are reasonable right now, but that isn't very relevant for deep learning anyway. And the "AI and Compute" trend line broke down pretty much as soon as the OpenAI article was released, a solid 1.5 - 2 years before the Covid-19 crisis hit. That's a long time in the ML world.

Unless you're a fanatic reverend of the God of Straight Lines, there isn't anything here to be explained. When straight lines run into physical limitations, physics wins. Hardware progress clearly can't keep up with the 10x per year growth rate of AI compute, and the only way to make up for it was to increase monetary investment into this field, which is becoming harder to justify given the lack of returns so far.

But, if you disagree and believe that the Straight Line is going to resume any day now, go ahead and buy more Nvidia stocks and win.

Replies from: wizzwizz4

comment by wizzwizz4 · 2021-07-06T13:42:21.788Z

"I don't quite get the argument here; doesn't anthropic shadow imply we have nothing to worry about (except for maybe hyperexistential risks) since we're guaranteed to be living in a timeline where humanity survives in the end?"

But it doesn't say we're guaranteed not to be living in a timeline where humanity doesn't survive.

If I had a universe copying machine and a doomsday machine, pressed the “universe copy” button 1000 times (for 2¹⁰⁰⁰ universes), then smashed relativistic meteors into Earth in all but one of them… would you call that an ethical issue? I certainly would, even though the inhabitants of the original universe are guaranteed to be living in a timeline where they don't die horribly from a volcanic apocalypse.