Form Your Own Opinions

post by Ben Pace (Benito) · 2018-04-28T19:50:18.321Z


Follow-up to: A Sketch of Good Communication

Question:

Why should you integrate an expert's model with your model at all? Haven’t you heard that people weigh their own ideas too heavily, and that you should just defer to the experts?

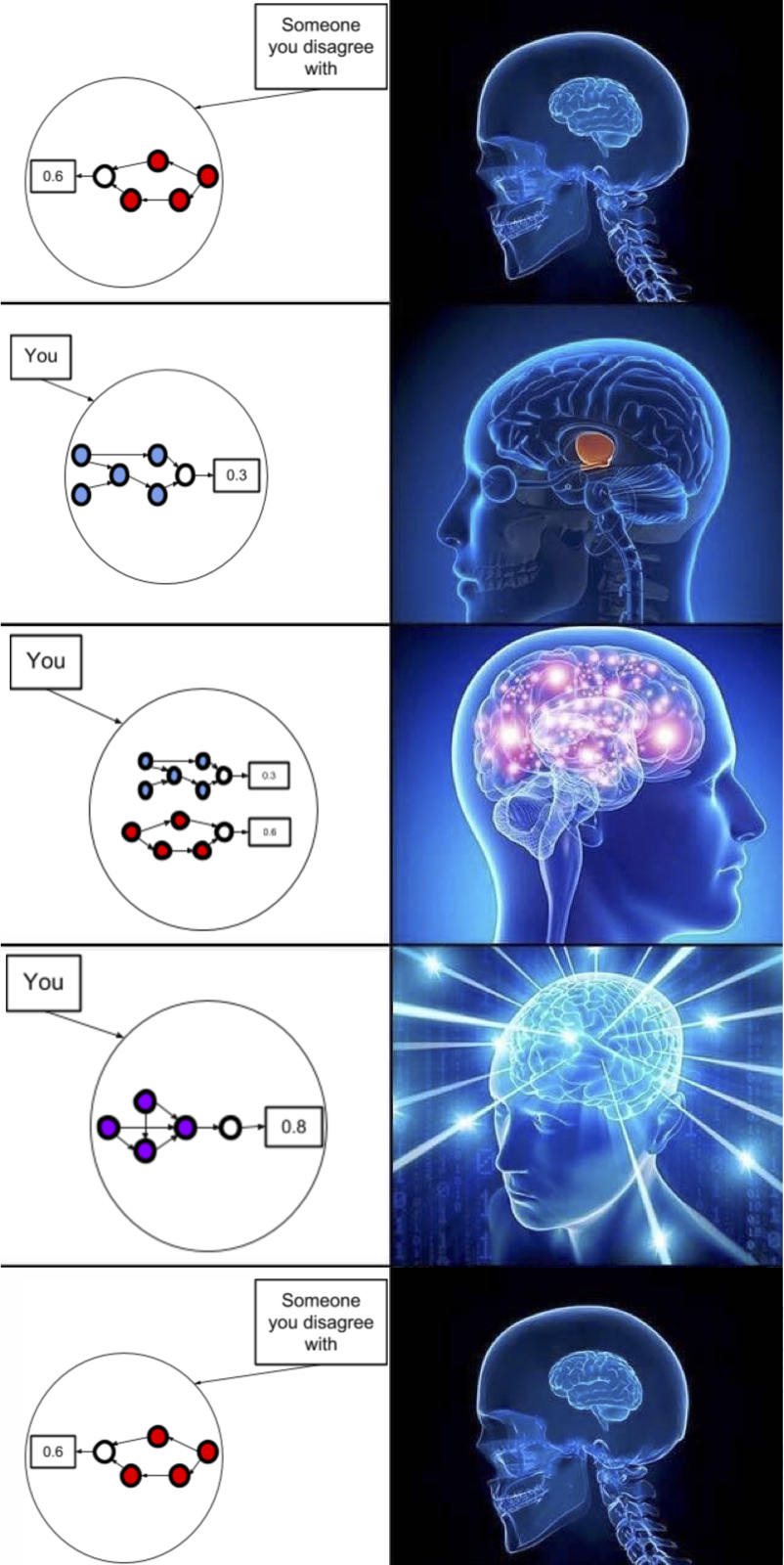

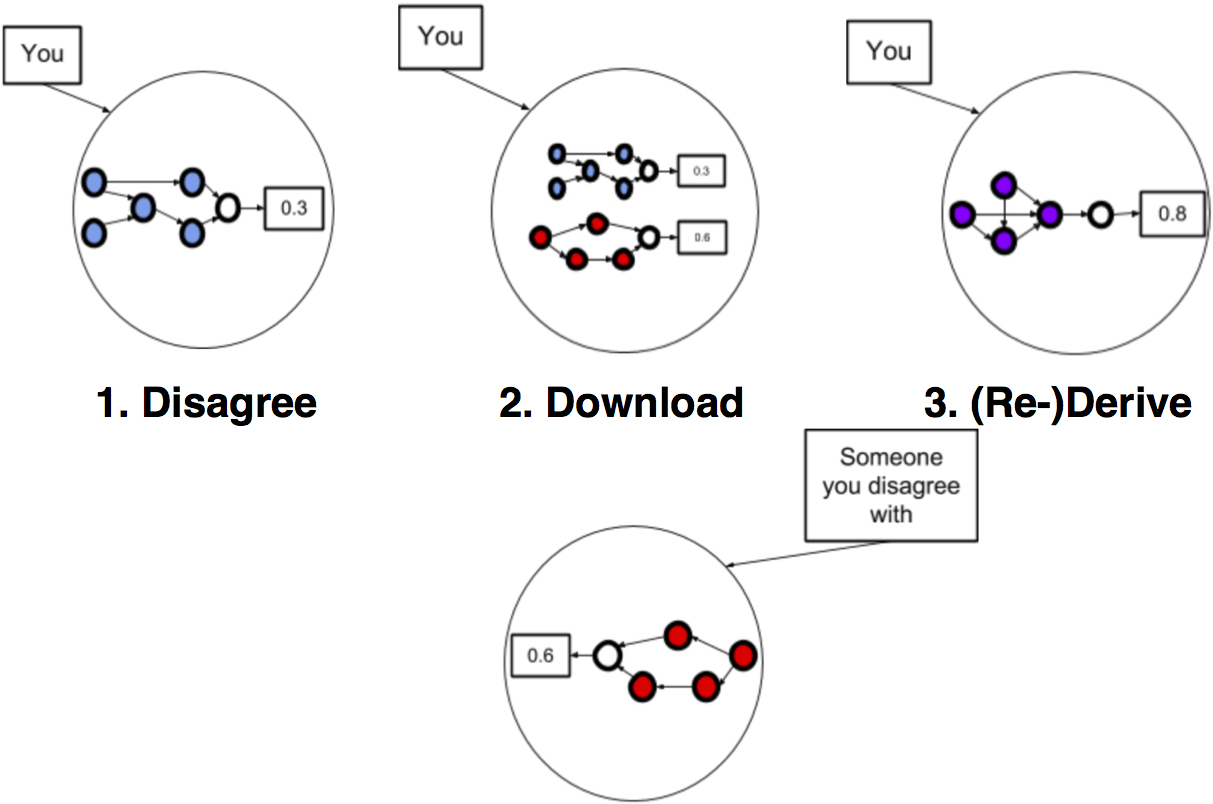

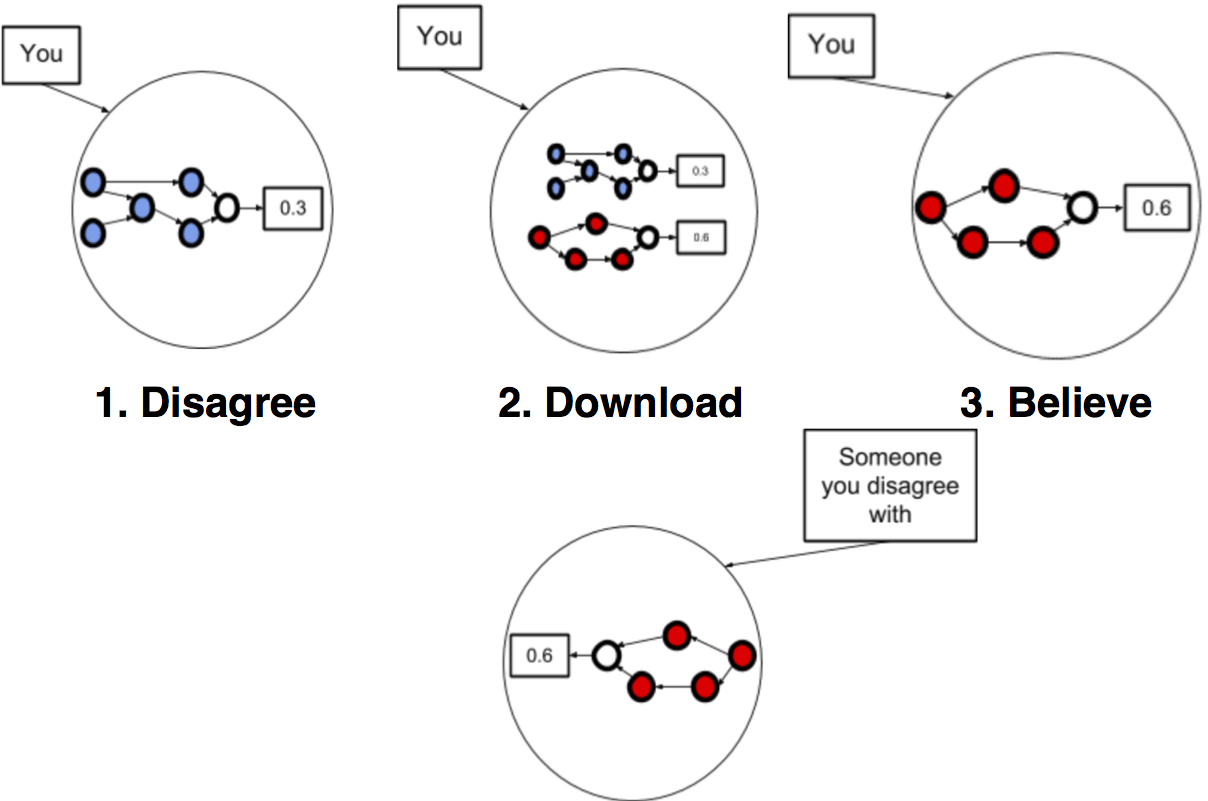

Here’s a quick reminder of the three step process [LW · GW]:

And this new proposal, I think, suggests changing what you do after step 2.

The people with the best ideas, it seems to me, often change their plans as a result of their debates in all sorts of other fields. Here are three examples of people doing this with AI timelines.

- [Person 1] Oh, AI timelines? Well, I recall reading that it took evolution years to go from eukaryotes to human brains. I’d guess that human developers are about times more efficient than evolution, so I expect it to take 1000 years to get there from the point where we built computers. Which puts my date at 2956.

- If I’m wrong, I’ll likely have to learn something new about developers’ competence relative to evolution, or about how humans get to build a type of intelligence that evolution, for some reason, wasn’t allowed to.

- [Person 2] Oh, AI timelines? Well, given my experience working on coding projects, it seems to me that projects take 50% longer than you'd expect once you've got the theory down. So I'll take the date by which we should have enough hardware to build a human brain, estimate the coding work required for the necessary project, multiply that estimate by 1.5, and add it to the hardware date.

- And if you change my mind on this, it will help make my models of project time more accurate, and change how I do my job.

- [Person 3] Oh, AI timelines? Well, given my basic knowledge of GDP growth rates, I’d guess that being able to automate this percentage of the workforce would cause GDP to double every X units of time, and at current rates I expect us to be able to do that only after K years.

- If you show me I’m wrong, it’ll be either because GDP is not as reliable a measure as I think, or because I’ve made a mistake extrapolating the trend as it stands.

Can you see how a conversation between any two of these people would lead them both to learn not only about AI, but also about models of evolution, large-scale coding projects, and macroeconomics?
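To make Person 2's arithmetic concrete: a 50% overrun means multiplying the naive coding estimate by 1.5 before adding it to the hardware date. Below is a minimal Python sketch of that toy model. The function name `person2_estimate` and the example years are hypothetical, chosen only for illustration; they are not forecasts from this post.

```python
def person2_estimate(hardware_year: float, coding_years: float, overrun: float = 0.5) -> float:
    """Toy version of Person 2's reasoning (hypothetical helper, for illustration only).

    hardware_year: assumed year by which enough hardware exists to build a human brain.
    coding_years:  assumed naive estimate of the coding work once the theory is down.
    overrun:       fractional overrun from experience; 0.5 means projects take 50% longer.
    """
    # A 50% overrun scales the naive estimate by (1 + 0.5) = 1.5.
    adjusted_coding_years = coding_years * (1 + overrun)
    # The coding work is assumed to start once the hardware is available.
    return hardware_year + adjusted_coding_years


# Purely illustrative inputs, not predictions.
print(person2_estimate(2040, 20))  # prints 2070.0
```

Writing the model down this way makes explicit which parameter a disagreement would update: if someone convinced Person 2 that `overrun` should be smaller, that would change both the AI forecast and how they plan their own projects.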

Recently, Jacob Lagerros and I were organising a paper-reading session on a recent Distill.pub paper, and Jacob was arguing for a highly structured and detailed read-through of the paper. I wanted to focus more on understanding people’s current confusions about the subfield and how these connected to the paper, rather than focusing solely on the details.

Jacob said: “Sometimes, though, Ben, you just need to learn the details. When the AlphaGo paper came out, it’s all well and good to try to resolve your general confusions about machine learning, but sometimes you just need to learn how AlphaGo worked.”

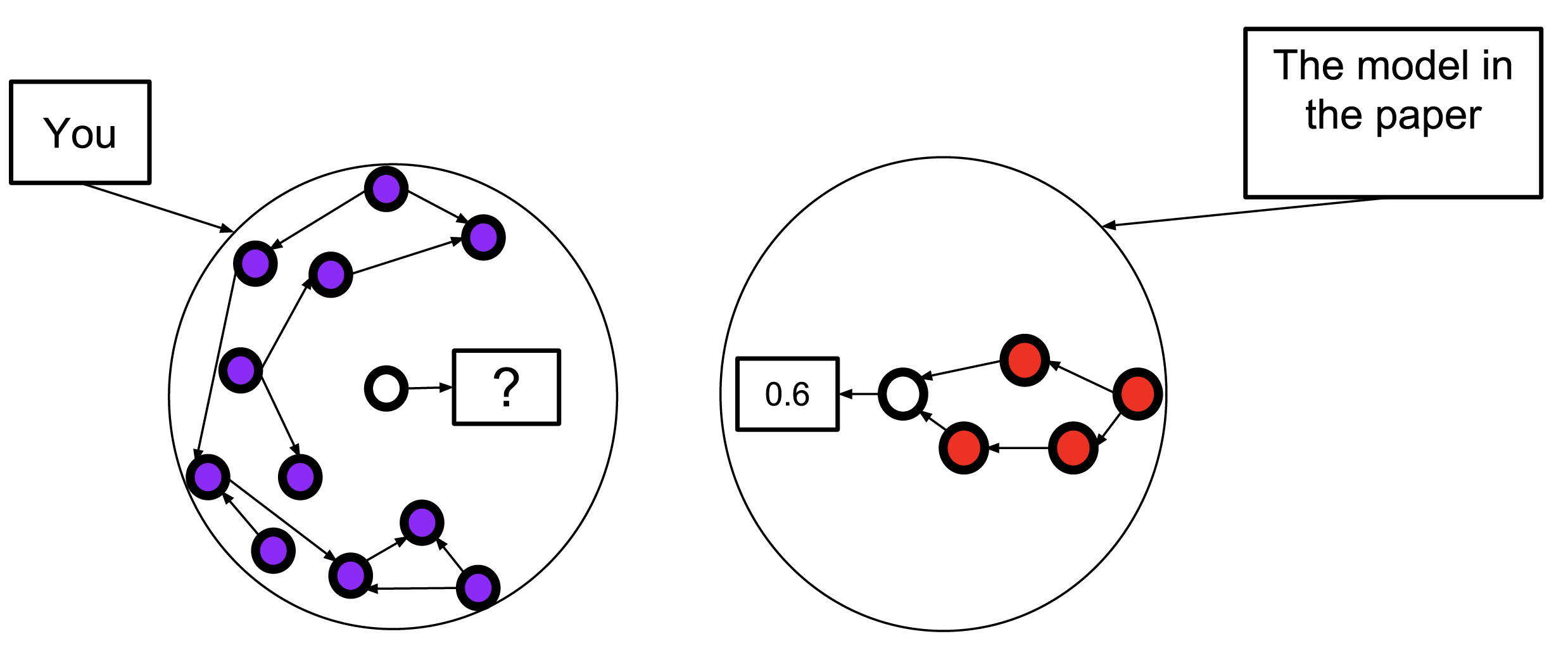

I responded: “Quite to the contrary. When reading an important paper, this is an especially important time to ask high-level questions like ‘is research direction X ever going to be fruitful’ and ‘is this a falsification of my current model of this subfield’, because you rarely get evidence of that sort. We need people to load up their existing models, notice what they’re confused about, and make predictions first.”

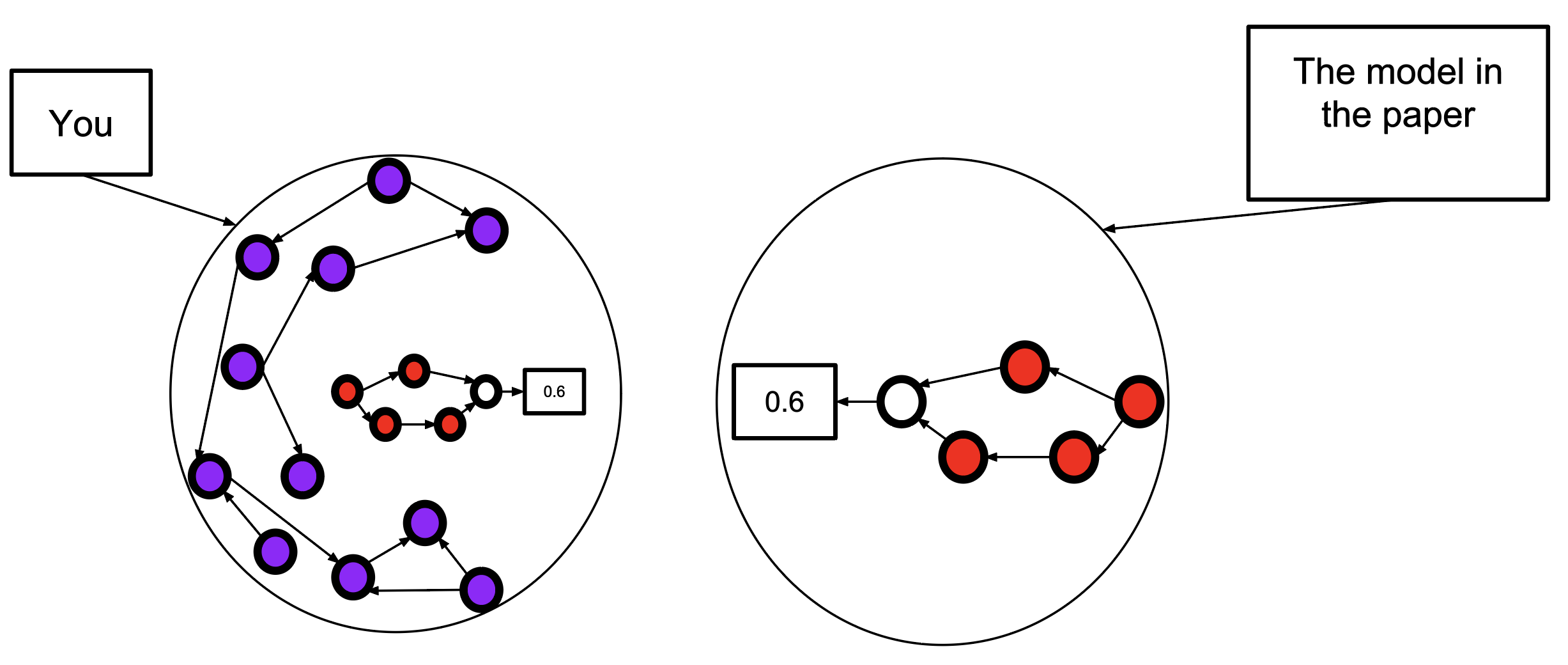

I wanted to draw diagrams with background models, where Jacob was arguing for:

Followed by:

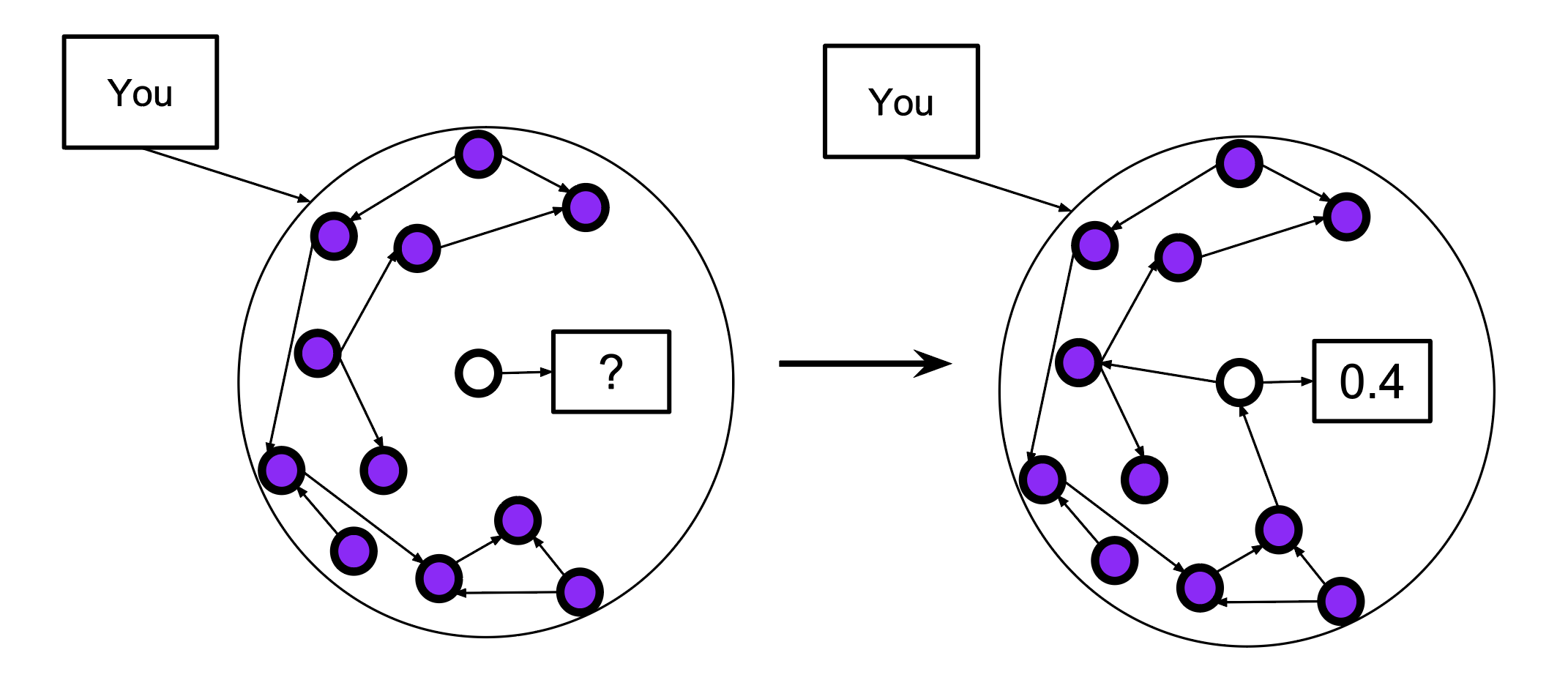

But I was trying to say:

This is my best guess at what it means to have an opinion - to have an integrated causal model of the world that makes predictions about whatever phenomena you're discussing. It is basically steps and before the 3-step process outlined at the top.

The truth is somewhere in between, as it’s not possible to have a model that connects to action without it in some way connecting to your other models. But it’s always important to ask “What other parts of my models have implications here that this can give data on?”

Another way of saying this would be ‘practice introspecting on your existing models and then building models of new domains’.

And I think one way to do this is to first form your own opinion, then download the other person’s model, and finally integrate the two. Don’t just download someone else’s model first: empirically, I find people have a really hard time imagining the world to be a different way once they’ve heard what you think, especially if you’re likely to be right. Tell your friend you need a few minutes’ silence to think, build an opinion, then forge toward the truth together.

(One way to check whether you’re doing this in conversation is to ask whether you’re regularly repeating things you’ve said before, or often giving detailed explanations for the first time; that is, whether you’re thinking thoughts you’ve not thought before. The latter is a sign you’re connecting models of diverse domains.)

I want to distinguish this idea from “Always give a snappy answer when someone asks you a question”. It’s often counterproductive to do that, as building models takes time and thought. But regularly do things like “Okay, let me think for a minute” *minute-long silence* “So I predict that the key variables here are…”

(Another way of saying that is ‘Don’t be a button-wall’. If you ever notice that someone is asking you a question and all you have are stock answers and explanations, try instead to think a new thought. It’s much harder, but it far more often leads to very interesting conversations where you actually learn something.)

Another way of saying ‘form lots of opinions’ is ‘think for yourself’.

Summary

The opening question:

Why is forming your own opinions better than simply downloading someone else’s model (like an expert’s)? Why should you even integrate it with your model at all?

Surely what you want is to get to the truth - the right way of describing things. And the expert is likely closer to that than you are.

If you could just import Einstein’s insights about physics into your mind… surely you should do that?

Integrating new ideas with your current models is really valuable for several reasons:

- It lets your models of different domains share data (this can be really helpful for domains where data is scarce).

- It gives you more and faster feedback loops about new domains:

  - More interconnection → More predictions → More feedback

- This still involves downloading the expert's model; integrating just adds extra sources of information to your understanding.

- It’s the only way to further entangle yourself with reality. Memorising the expert’s words doesn’t give you feedback, and it isn’t self-correcting if you got one bit wrong.

Footnotes

1. I’ve picked examples that require varying amounts of expertise. It’s great to integrate your highly detailed and tested models, but you don’t require the protection of ‘expertise’ in order to build a model that integrates with what you already know: I made these up, and I know very little about how GDP or large-scale coding projects work. As long as you’re building and integrating, you’re moving forwards.

2. I like to think of Tetlock’s Fox/Hedgehog distinction through this lens. A fox is someone who tries to connect models from all different domains, and is happy if their model captures a significant chunk (e.g. 80%) of the variance in that domain. A hedgehog is someone who wants to download the correct model of a domain, and will refine it with details until it captures as much of the variance as possible.

Thanks to Jacob Lagerros (jacobjacob) for comments on drafts.


comment by Ben Pace (Benito) · 2018-04-28T19:55:30.842Z

oops i was supposed to be working and now i have this