The Dilemma’s Dilemma

post by James Stephen Brown (james-brown) · 2025-02-19T23:50:47.485Z · LW · GW · 11 comments

This is a link post for https://nonzerosum.games/dilemmasdilemma.html

Contents

- How We Frame Negotiations Matters

- Constraints

- Iteration

- Rationality

- Confidence

- Outsourcing Trust

- Stories Are Important

- Where To From Here?

- And That’s Not All

- So…

How We Frame Negotiations Matters

This is a follow up to a primer for the Prisoner's Dilemma that questions its application in real world scenarios and raises some potentially negative implications. I invite feedback and criticisms.

We’ve considered how the Prisoner’s Dilemma reveals a number of key concepts in Game Theory. Yet, I have some reservations that it is the field’s best advocate for applying Game Theory in the real world. I first expressed this concern on a Reddit thread—receiving helpful feedback which has informed this series of posts.

Game Theory aims to enhance cooperation, and yet the first scenario we’re presented with is a peculiar situation where the Nash Equilibrium is mutual defection. This is due to the fact that it’s a one-shot negotiation, in the dark, with no communication, trust or loyalty, no future consequences and no external factors, being decided by two “rational” (purely self-interested) criminals!
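As a minimal sketch of why mutual defection is the equilibrium here, the snippet below checks every pair of choices in a one-shot Prisoner's Dilemma and keeps the pairs where neither player can do better by changing only their own choice. The payoff numbers (negative years in prison) and the function name `pure_nash_equilibria` are illustrative assumptions, not figures from this post.

```python
from itertools import product

# Hypothetical payoffs as negative years in prison (assumed for illustration).
# Action 0 = cooperate (stay silent), action 1 = defect (betray).
# Each entry maps (choice_a, choice_b) -> (payoff_a, payoff_b).
PAYOFFS = {
    (0, 0): (-1, -1),  # both stay silent
    (0, 1): (-5, 0),   # A stays silent, B betrays
    (1, 0): (0, -5),   # A betrays, B stays silent
    (1, 1): (-3, -3),  # both betray
}


def pure_nash_equilibria(payoffs):
    """Return the action pairs where neither player gains by deviating alone."""
    equilibria = []
    for a, b in product((0, 1), repeat=2):
        payoff_a, payoff_b = payoffs[(a, b)]
        # A's choice is a best response if no alternative beats it, holding B fixed.
        a_is_best = all(payoffs[(alt, b)][0] <= payoff_a for alt in (0, 1))
        # B's choice is a best response if no alternative beats it, holding A fixed.
        b_is_best = all(payoffs[(a, alt)][1] <= payoff_b for alt in (0, 1))
        if a_is_best and b_is_best:
            equilibria.append((a, b))
    return equilibria


print(pure_nash_equilibria(PAYOFFS))  # [(1, 1)]
```

With these assumed numbers the only stable pair is mutual betrayal, even though both players would serve less time if they both stayed silent.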

Is this an ideal starting point for fruitful negotiations?

Well, as Kaomet points out…

… there is more drama to it than just a win-win situation

So, it’s provocative, but the potential lesson from such an introduction—rationality equals defensive selfishness, while cooperation is irrational and futile—seems at odds with healthy social norms and moral intuitions. Then again, it can be argued that the fact that the Prisoner’s Dilemma conflicts with pro-social behaviour, makes it a good “dilemma”.

As commented by Forgot_the_Jacobian

The one shot Prisoners Dilemma is often used in Econ teaching… since it is a paradigmatic example of how individuals acting in their own self interest can lead to a situation that is not socially optimal.

… and as MarioVX clarifies

If you claimed this is possible without an example, most people wouldn’t believe you… it’s an excellent hook to get people into game theory

So, it is a good example of how dilemmas arise. But there is a fine line between explaining and excusing behaviour. By framing a situation in such self-serving terms and calling it “rational”, do we run the risk of justifying selfish behaviour? Well, not if you think about it in a nuanced way and see the paradoxical nature of the dilemma and the conflict with our moral intuitions!

To understand why the Prisoner’s Dilemma is at odds with our moral intuitions, we’ll explore some key points of difference between game theory and reality.

- Constraints: Reality doesn’t unfold in a game-theoretical vacuum

- Iteration: There are no one-shot games in life

- Rationality: Pure self-interest is not “rational” in the real world

We’ll then look at potential consequences for ourselves and society.

- Confidence: Our confidence in others influences our own behaviour

- Outsourcing Trust: When society does our cooperating for us, it’s easy to forget how important trust is between individuals

Constraints

As a field of mathematics, Game Theory requires confined variables to reach deductive outcomes. However, real life takes place amidst myriad social forces, from a disapproving glare to the entire justice system, which have evolved to curtail cheating. This is rooted in reciprocity—a key feature of life, because life is a series of interactions which extend beyond the present situation.

If we revisit the Prisoner’s Dilemma but posit a close personal history between the prisoners, trust functions as a contract, shifting the likely outcome from a Nash Equilibrium of mutual defection to a Pareto Efficient mutual cooperation.

And even without such a soft value as trust, there’s always revenge!

If you betray your partner, do you really believe that during their five years in the clink, surrounded by nefarious influences, and with only time on their hands, they won’t hatch a plan to make you pay?

Iteration

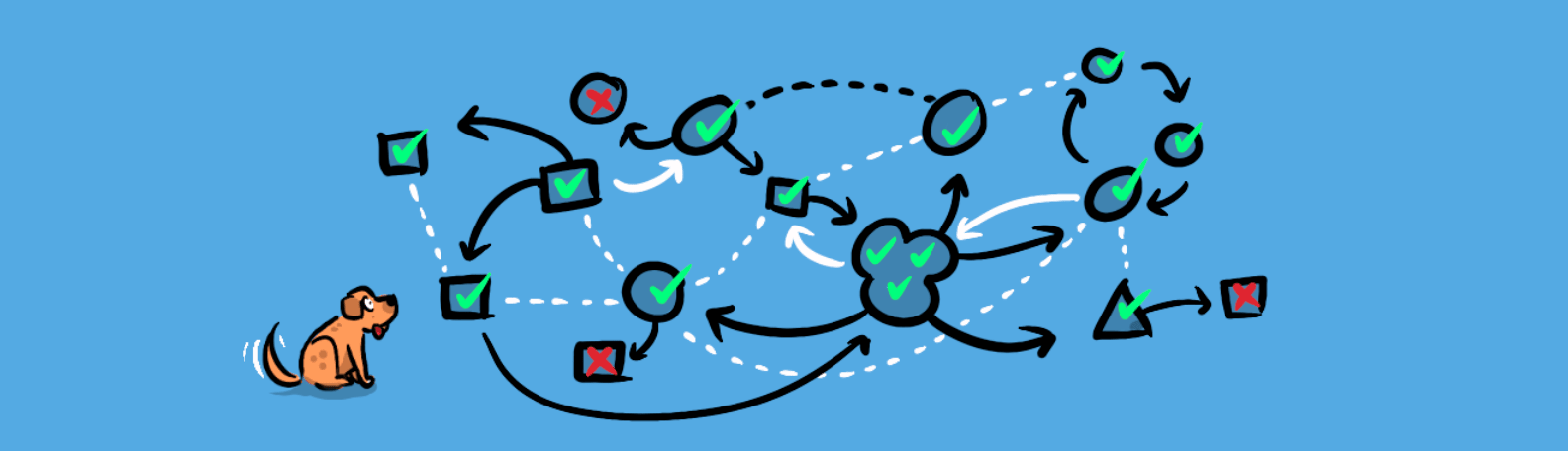

The promise of future reward and the risk of future punishment are ever-present in reality. Game theorists represent this through iterated games. In the iterated version of the Prisoner’s Dilemma, players not only have to account for the current round, but also for how their behaviour will affect the other player’s behaviour in future rounds, and what the cumulative benefits of different strategies will be.

“I think at least the iterated prisoners dilemma is very commonplace, and the original dilemma is a stepping stone to the iterated version”—SmackieT

Jaiveer Singh, in his brilliant Repeated Games, explores the nuances of how strategies change in iterated Prisoner’s Dilemmas, finding that in an infinitely or indefinitely iterated game (like choices in life) mutual cooperation can become the stable outcome, provided the players care enough about future rounds.
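One common way to make "care enough about future rounds" concrete is a discount factor: each future round counts for a fraction `delta` of the one before it (or, equivalently, the game continues with probability `delta`). The sketch below is an assumption-laden illustration, not a summary of Singh's analysis. It assumes both players use a "grim trigger" strategy (cooperate until the other defects, then defect forever) and the commonly used example payoffs T=5, R=3, P=1.

```python
def cooperation_is_sustainable(T, R, P, delta):
    """Check whether cooperating forever pays at least as much as defecting once.

    T: temptation payoff (defect against a cooperator)
    R: reward payoff (mutual cooperation)
    P: punishment payoff (mutual defection)
    delta: weight on each successive future round, with 0 <= delta < 1
    Assumes the other player plays grim trigger (a hypothetical setup).
    """
    # R now and in every later round: R + delta*R + delta^2*R + ...
    cooperate_forever = R / (1 - delta)
    # T once, then mutual defection (P) in every later round.
    defect_once = T + delta * P / (1 - delta)
    return cooperate_forever >= defect_once


def critical_discount(T, R, P):
    """Smallest delta at which cooperation is sustainable under grim trigger."""
    return (T - R) / (T - P)


print(critical_discount(5, 3, 1))                # 0.5
print(cooperation_is_sustainable(5, 3, 1, 0.9))  # True
print(cooperation_is_sustainable(5, 3, 1, 0.2))  # False
```

Under these assumptions, cooperation holds up only when the future matters at least half as much as the present; a player who expects the relationship to end soon is back in the one-shot game.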

This approach was explored in simulation right back in, what my daughter calls, the medieval days of the 1980s. Robert Axelrod pitted various agents, with different strategies, against each other in a tournament. He found that positive tit-for-tat (cooperate first, then mirror your opponent’s previous move) was the most successful strategy. Axelrod’s work, published as The Evolution of Cooperation, has been entertainingly illustrated in the interactive The Evolution of Trust by Nicky Case, while in Capitalising on Trust we explored how trust can be used to choose better cooperative partners.
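A toy version of such a tournament can be sketched in a few lines. This is not Axelrod's actual code or field of entrants; the three strategies, the ten-round match length, and the payoffs (T=5, R=3, P=1, S=0) are assumptions chosen for illustration.

```python
# Illustrative payoffs: (my move, their move) -> (my payoff, their payoff).
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def tit_for_tat(own_history, other_history):
    """Cooperate first, then copy the opponent's previous move."""
    return "C" if not other_history else other_history[-1]


def always_defect(own_history, other_history):
    return "D"


def always_cooperate(own_history, other_history):
    return "C"


def play_match(strategy_a, strategy_b, rounds=10):
    """Play a fixed number of rounds and return both total scores."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(history_a, history_b)
        move_b = strategy_b(history_b, history_a)
        payoff_a, payoff_b = PAYOFF[(move_a, move_b)]
        score_a += payoff_a
        score_b += payoff_b
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


def round_robin(strategies, rounds=10):
    """Every strategy meets every strategy, including a copy of itself."""
    totals = {name: 0 for name in strategies}
    names = list(strategies)
    for i, name_a in enumerate(names):
        for name_b in names[i:]:
            score_a, score_b = play_match(strategies[name_a], strategies[name_b], rounds)
            totals[name_a] += score_a
            if name_b != name_a:  # count self-play only once
                totals[name_b] += score_b
    return totals


field = {
    "tit_for_tat": tit_for_tat,
    "always_defect": always_defect,
    "always_cooperate": always_cooperate,
}
print(play_match(tit_for_tat, always_defect))  # (9, 14)
print(round_robin(field))
```

In this tiny field, always-defect actually finishes ahead because it can exploit the unconditional cooperator, which shows how much the result depends on who else is playing. Tit-for-tat never beats its direct opponent in a single match; its strength in larger, more varied fields comes from doing well alongside other reciprocators while limiting its losses to defectors.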

Matt Ball even speculates that…

“… self-awareness … evolved to keep track of repeated games. I’m pretty willing to bet that is why some of ‘morality’ [sic] evolved.”

The iterated Prisoner’s Dilemma can help us appreciate that cooperative trust-based actions can determine rational behaviour.

Rationality

Game theory defines ‘rationality’ as pure self-interest within the constraints of the game. This tends to suggest that moral intuitions that contradict the Prisoner’s Dilemma are necessarily irrational. But as we have seen, accounting for repetition can make mutual cooperation a Nash Equilibrium of the Prisoner’s Dilemma. It turns out the conflict with real world social norms is not due to a failure of rationality, but rather a failure of the one-shot Prisoner’s Dilemma to account for all the variables at play in real life.

Confidence

We have seen that there are many reasons why the iterated Prisoner’s Dilemma can help us develop ways to counteract distrust, when played out fully in relation to our moral intuitions. But moral intuitions vary. So, what if we don’t have a strong repulsion to a situation that is not mutually beneficial? What happens when lay-people, looking to justify selfishness, take the one-shot Prisoner’s Dilemma at face value?

The problem is that assuming selfishness and distrust is not a neutral position. In fact, one of the lessons we learn from Game Theory is that assuming distrust incentivises us to be less trustworthy. As we explored in “What is Confidence?—in game theory & life”, our propensity to cooperate is strongly influenced by our trust in the cooperation of others. Assuming bad faith can create self-fulfilling cycles of defection and cynicism.

Outsourcing Trust

Because of the clear benefits of cooperation across the board, society has developed many mechanisms that facilitate and protect cooperation, meaning we very rarely run into one-shot prisoner’s dilemmas in the real world, as this comment from MarioVX explains:

Think about moneylending for instance. If there weren’t laws obligating you to do so (by threatening punishment if you deviate), why would you ever pay a credit back? Conversely, knowing this reasoning, why would a bank ever agree to grant you a credit in the first place?

In Yuval Noah Harari’s Sapiens, he points to the credit system as one of society’s key signifiers of progress, a hard-won result of civilisation locking in systematic trust.

Over the last 500 years the idea of progress convinced people to put more and more trust in the future. This trust created credit; credit brought real economic growth; and growth strengthened the trust in the future and opened the way for even more credit.

Society runs on trust, from trusting a barista to bring you a coffee after you’ve handed over your money to getting a mortgage for a home. Systems of democracy, government, the courts and the market, are intended to mitigate self-interest in a way that aligns with collective well-being.

This makes much of this trust invisible—behind the scenes. So, it can be easy to discount our capacity for trust and trustworthiness, and rely on the rules of the system or the invisible hand of the market to take care of everything for us, allowing our own capacity for trust and trustworthiness to atrophy.

Taking trust for granted makes us vulnerable to stories that tell us we are, at base, selfish, untrusting, “rational” agents. You can see that this might be corrosive to social cohesion. This is an idea Rutger Bregman explores in Humankind where he seeks to unweave some of our foundational stories about human nature and selfishness.

Stories Are Important

It could be argued that my agonising over the consequences for society might be overblown, as gmweinberg claims

I think pretty much no one is significantly influenced in their personal actions by game theory, it’s just something people find interesting.

This might be absolutely right, but as thinkers like Harari and Bregman suggest, stories that emerge in philosophical circles end up in the mainstream over time.

“Stories are never just stories… stories can change who we are as a species… we can become our stories… So changing the world often starts with telling a different story”—Rutger Bregman

Where To From Here?

Because the Prisoner’s Dilemma is so popular, we can end up shoe-horning every negotiation into it.

“it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.”—Maslow’s hammer (the law of the instrument)

But what if the situation has important differences? Perhaps it’s asymmetrical like the Ultimatum Game, or the payoff for defection turns out to be the same as the payoff for cooperation, making cooperation more likely, or perhaps there is actually no incentive for one party to defect, meaning the other doesn’t need to worry about defection like in the Toastmasters Payoff?

It might turn out we’re creating a dilemma where there isn’t one. In this way, Game Theory can be one of those “a little knowledge is a dangerous thing” applications.
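One rough way to check whether a situation really is a Prisoner's Dilemma is to look at how its four payoffs are ordered. The sketch below covers only symmetric two-player, two-choice games and only three well-known orderings; the function name and example numbers are hypothetical.

```python
def classify_symmetric_game(T, R, P, S):
    """Classify a symmetric 2x2 game by the ordering of its payoffs.

    T: payoff for defecting against a cooperator (temptation)
    R: payoff when both cooperate (reward)
    P: payoff when both defect (punishment)
    S: payoff for cooperating against a defector (sucker's payoff)
    """
    if T > R > P > S:
        return "Prisoner's Dilemma"   # defecting is always individually better
    if R > T >= P > S:
        return "Stag Hunt"            # mutual cooperation is best, but risky
    if T > R > S > P:
        return "Hawk-Dove (Chicken)"  # mutual defection is the worst outcome
    return "other"


print(classify_symmetric_game(T=5, R=3, P=1, S=0))  # Prisoner's Dilemma
print(classify_symmetric_game(T=3, R=4, P=3, S=0))  # Stag Hunt
print(classify_symmetric_game(T=5, R=3, P=0, S=1))  # Hawk-Dove (Chicken)
```

If the payoffs in a real negotiation don't satisfy the first ordering, treating it as a Prisoner's Dilemma may manufacture a conflict that the incentives don't actually contain.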

We can avoid this trap by understanding other Game Theory scenarios, which is where we’re going next in the series. We’ll be looking at:

- Stag Hunt

- Public Goods game

- Battle of the Sexes

- Hawk Dove game

- Ultimatum game

- Coordination game

And perhaps we’ll find a better Game Theory poster-child. The series will then deconstruct payoff matrices themselves, enabling us to represent our own novel scenarios.

And That’s Not All

Robert Axelrod’s tit-for-tat strategy helps us make sense of how cooperation can emerge in a competitive environment. But when you think about it, it still falls short of our moral intuitions—ideas of forgiveness and doing the right thing even when no one is looking. To try to understand these moral frameworks in game-theoretical terms we’ll be taking on more complex games that better reflect the iterative and evolving nature of systems with…

- Evolutionary Game Theory

- Evolutionarily Stable Strategy

These will help us to understand why we have some of the social norms we do.

So…

I’d like to acknowledge and thank those who provided feedback on the blog and Reddit for helping shape my views on the Prisoner’s Dilemma. I’ve come to appreciate the value of the numerous Game Theory concepts it illuminates and the value of having a provocative hook which immediately engages us with a puzzle.

This process has clarified, for me, the importance of the iterated Prisoner’s Dilemma, not as a supplement or extension of the Prisoner’s Dilemma, but as the actual point of (and solution to) the dilemma. Leaving a student or lay-person aware of only the one-shot version risks reinforcing a story that human nature is inherently selfish, untrusting and untrustworthy, when the opposite is true: cooperative relationships and the development of sophisticated networks of trust are defining features of human civilisation.

The issue I have had with the Prisoner’s Dilemma primarily has to do with the constraints of game-theoretical scenarios in general. By going through this process of analysis, I hope to inoculate myself and others against some of the potentially misleading limitations of Game Theory when applied to real-world situations. It’s an important reminder that we can utilise Game Theory without jettisoning the social norms and moral intuitions that have evolved in (and for) our more complex and complicated real world. Those values can point us to more nuanced and interesting explanations, making us better game theorists.

11 comments


comment by Noosphere89 (sharmake-farah) · 2025-02-20T14:14:24.068Z · LW(p) · GW(p)

IMO, the most important ideas from game theory are these:

- Cooperation doesn't happen automatically, and you need to remember that any plan that assumes coordination must figure out ways either to make the participants' values prefer coordination or to change their incentives.

More generally, one of the most powerful things in game theory/economics is that looking at the incentives/games can give you a lot of information on why a system has evolved the way it has.

- Except when it isn't that informative, due to the folk theorems of game theory. For the Iterated Prisoner's Dilemma, which is actually quite common (as are stag hunt/Schelling problems), it's known that under a number of conditions that could plausibly happen, and at any rate are likely to be more probable in the future, any outcome that is possible and individually rational can happen, which means that game theory imposes no constraints on its own:

https://en.wikipedia.org/wiki/Folk_theorem_(game_theory)

https://www.lesswrong.com/posts/FE4R38FQtnTNzeGoz/explaining-hell-is-game-theory-folk-theorems [LW · GW]

https://www.lesswrong.com/posts/d2HvpKWQ2XGNsHr8s/hell-is-game-theory-folk-theorems#M6fYXniR4LoJshQr3 [LW(p) · GW(p)]

As a special case, all of Elinor Ostrom's observations around cooperation IRL are mathematically provable to exist, so it can happen, and indeed it did.

- If you want cooperation, peace or a lot of other flourishing goals, it is very helpful to shift from zero-sum games to positive sum games, and there's a good argument to be made that the broad reason things are more peaceful today is the fundamental shift from a broadly zero-sum world to a broadly positive sum world, primarily because of economic growth. But due to the laws of physics, it's probable that within the next several centuries zero sum games will come back quite a bit, and while I expect more positive sum games than in the era from 200,000 BC-1500 AD, I do expect a lot more zero sum games than in the 1500 AD-2050 AD era.

↑ comment by James Stephen Brown (james-brown) · 2025-02-20T18:28:39.429Z · LW(p) · GW(p)

Thanks for your recommendations, I look forward to reading them all.

I'm aligned with your thinking about the growth of positive-sum games (it's the premise of the site where my posts originate). I was interested that you believe that zero-sum games will return "due to the laws of physics". What do you think is going to change about physics to reverse the trend towards positive-sum games? We live on a planet with surplus free energy (from the sun, which makes positive-sum systems from life to civilisation possible), so I'm not sure why we would expect (while that fuel source exists) the positive-sum results of that surplus to change.

Perhaps you're making a Malthusian prediction based on limited resources here on earth?

↑ comment by Noosphere89 (sharmake-farah) · 2025-02-20T18:48:33.338Z · LW(p) · GW(p)

Perhaps you're making a Malthusian prediction based on limited resources?

Kind of.

More specifically, assuming we can't cheat and circumvent the problem of the laws of thermodynamics/speed of light (which I put 50% probability on at this point; I have been convinced by Adam Brown that this might be cheatable in the far future), then at a global scale, energy, which is the foundation of all utility, is conserved globally, meaning that at a global scale, everything must be a 0-sum game, because if it weren't, you could use this as a way to break the first law of thermodynamics.

This also means that the engine of progress which made basically everything positive sum vanishes, and while I expect more positive sum games than the pre-industrial times, due to being able to cooperate better, the universally positive-sum era of the last few centuries would have to end.

Adam Brown on changing the laws of physics below:

https://www.dwarkeshpatel.com/p/adam-brown

↑ comment by James Stephen Brown (james-brown) · 2025-03-07T18:21:20.850Z · LW(p) · GW(p)

... at a global scale, energy, which is the foundation of all utility, is conserved globally, meaning that at a global scale, everything must be a 0-sum game.

If this were the case, there would be no life on earth. The "engine of progress which made basically everything positive sum" is the sun. The sun provides a constant stream of energy and will continue to do so for billions of years. So, "at a global scale" the system is positive-sum, not zero-sum, no breaking of the first law of thermodynamics required. While the total energy on earth remains constant that is because we dissipate heat through entropy. The fact that we take in energy (order) and dissipate heat (disorder) is a byproduct of global "work" which can continually take place as long as the sun survives.

It seems very strange to make arguments referencing the laws of thermodynamics to explain the specifics of civilisation without recognising the role of the sun. Sorry to seem argumentative, I really think you're mistaken on this point.

↑ comment by Noosphere89 (sharmake-farah) · 2025-03-07T18:40:16.848Z · LW(p) · GW(p)

I was implicitly assuming a closed system here, to be clear.

The trick that makes the game locally positive sum is that the earth isn't a closed system relative to the sun, and when I said globally I was referring to the entire accessible universe.

Thinking about that though, I now think this is way less relevant except on extremely long timescales, but the future may be dominated by very long-term people, so this does matter again.

comment by Jonas Hallgren · 2025-02-20T04:34:15.084Z · LW(p) · GW(p)

Just wanted to drop these two books here if you're interested in the cultural evolution side more:

https://www.goodreads.com/book/show/17707599-moral-tribes

https://www.goodreads.com/book/show/25761655-the-secret-of-our-success

↑ comment by Noosphere89 (sharmake-farah) · 2025-02-20T17:08:07.603Z · LW(p) · GW(p)

I want to flag that I've become much less convinced that the secret of our success/cultural learning is nearly as broad or powerful an explanation as I used to believe, and I now believe that most of the success of humans comes down more to the human body being very well optimized for tool use, combined with the bitter lesson for biological brains, and a way to actually cool them down.

This is because Henrich mostly faked his evidence:

https://www.lesswrong.com/posts/m8ZLeiAFrSAGLEvX8/#MFyWDjh4FomyLnXDk [LW · GW]

https://www.lesswrong.com/posts/m8ZLeiAFrSAGLEvX8/#q7bXpZb8JzHkXjLm7 [LW · GW]

↑ comment by Jonas Hallgren · 2025-02-20T23:09:14.603Z · LW(p) · GW(p)

Do you believe it affects most of it or just individual instances? The example you're pointing at there isn't load-bearing, and there are other people who have written similar things on cultural evolution but with more nuance, such as Cecilia Heyes with Cognitive Gadgets.

Like I'm not sure how much to throw out based on that?

↑ comment by Noosphere89 (sharmake-farah) · 2025-02-20T23:15:50.171Z · LW(p) · GW(p)

I'd argue quite a lot, though independent evidence could cause me to update here, and a key reason for that is there is a plausible argument that a lot of the evidence for cultural learning/cultural practices written in the 1940s-1960s was fundamentally laundered to hide evidence of secret practices.

More generally, I was worried that such an obviously false claim implied a lot more wrong claims, hidden from me, that I couldn't test, so after spot-checking I didn't want to invest more time in an expensive search process.

comment by James Camacho (james-camacho) · 2025-02-20T03:14:56.132Z · LW(p) · GW(p)

If you're going to be talking about trust in society, you should definitely take a look at Gossner's Simple Bounds on the Value of a Reputation.

↑ comment by James Stephen Brown (james-brown) · 2025-03-07T18:22:03.722Z · LW(p) · GW(p)

Thanks, I'll check it out.