Against Expected Utility

post by Houshalter · 2015-09-23T21:21:59.960Z · LW · GW · Legacy · 28 comments

Expected utility is optimal as the number of bets you take approaches infinity. You will lose bets on some days, and win bets on other days. But as you take more and more bets, the day to day randomness cancels out.

Say you want to save as many lives as possible. You can plug "number of lives saved" into an expected utility maximizer. And as the number of bets it takes increases, it will start to save more lives than any other method.

But the real world obviously doesn't have an infinite number of bets. And following this algorithm in practice will get you worse results. It is not optimal.
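As a rough illustration of the finite case, the sketch below computes the exact probability of coming out ahead after a fixed number of identical bets. The bet itself (win 1000 with probability 1%, otherwise lose 1) and the function name `prob_ahead` are assumptions chosen for the example, not something taken from the post.

```python
from math import comb

def prob_ahead(n_bets: int, p_win: float, win: int, loss: int) -> float:
    """Exact probability that total winnings are strictly positive after n_bets."""
    total = 0.0
    for k in range(n_bets + 1):  # k = number of bets won
        winnings = k * win - (n_bets - k) * loss
        if winnings > 0:
            total += comb(n_bets, k) * p_win**k * (1 - p_win)**(n_bets - k)
    return total

# Hypothetical long-shot bet: win 1000 with probability 1%, otherwise lose 1.
# Expected value per bet is 0.01*1000 - 0.99*1 = 9.01, so an EU maximizer accepts.
for n in (1, 10, 100, 1000):
    print(n, prob_ahead(n, 0.01, 1000, 1))
```

With few bets the agent ends up behind in most possible worlds even though every bet has positive expected value. Only as the number of bets grows does the probability of being ahead approach 1.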

In fact, as Pascal's Mugging shows, this could get arbitrarily terrible. An agent following expected utility would just continuously make bets with muggers and worship various religions, until it runs out of resources. Or worse, the expected utility calculations don't even converge, and the agent doesn't make any decisions.

So how do we fix it? Well, we could just go back to the original line of reasoning that led us to expected utility, and fix it for finite cases. Instead of caring which method does best over infinitely many bets, we might say we want the one that does best most of the time in finite cases. That would get you median utility.

For most things, median utility will approximate expected utility. But for very very small risks, it will ignore them. It only cares that it does the best in most possible worlds. It won't ever trade away utility from the majority of your possible worlds to very very unlikely ones.
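A minimal sketch of the difference, assuming a lottery is represented as a list of (probability, utility) pairs. The mugging numbers here are hypothetical and far smaller than anything in the actual thought experiment.

```python
def expected_utility(lottery):
    """lottery: list of (probability, utility) pairs whose probabilities sum to 1."""
    return sum(p * u for p, u in lottery)

def median_utility(lottery):
    """Smallest utility u such that P(utility <= u) >= 0.5."""
    cumulative = 0.0
    for p, u in sorted(lottery, key=lambda pu: pu[1]):
        cumulative += p
        if cumulative >= 0.5:
            return u
    raise ValueError("probabilities must sum to at least 0.5")

# Hypothetical mugging: pay 5 units for a one-in-a-billion chance of 10**12 units.
pay_mugger = [(1 - 1e-9, -5.0), (1e-9, 1e12)]
refuse = [(1.0, 0.0)]

print(expected_utility(pay_mugger) > expected_utility(refuse))  # EU says pay
print(median_utility(pay_mugger) < median_utility(refuse))      # median says refuse
```

The tiny-probability branch dominates the mean but never reaches the median, which is the sense in which median utility ignores very small risks.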

A naive implementation of median utility isn't actually viable, because at different points in time, the agent might make inconsistent decisions. To fix this, it needs to decide on policies instead of individual decisions. It will pick a decision policy which it believes will lead to the highest median outcome.
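One way to see why the unit of evaluation matters is to compare the median of a single bet with the median of a policy that accepts the same bet many times. The bet below (win 3 with probability 0.4, otherwise lose 1) is an assumed example, and `median_total` is a hypothetical helper.

```python
from math import comb

def median_total(n_bets: int, p_win: float, win: int, loss: int) -> int:
    """Median of total winnings when the same bet is accepted n_bets times."""
    cumulative = 0.0
    # Total winnings increase with the number of wins k (assuming win + loss > 0),
    # so scanning k upward visits the totals in sorted order.
    for k in range(n_bets + 1):
        cumulative += comb(n_bets, k) * p_win**k * (1 - p_win)**(n_bets - k)
        if cumulative >= 0.5:
            return k * win - (n_bets - k) * loss
    return n_bets * win  # only reached through floating-point rounding

print(median_total(1, 0.4, 3, 1))    # a single bet judged in isolation
print(median_total(100, 0.4, 3, 1))  # the policy "accept all 100 bets"
```

Judged one at a time, each bet has a negative median and would be declined. Judged as a policy over 100 bets, the median total is positive, so a policy-level median maximizer would accept.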

This does complicate making a real implementation of this procedure. But that's what you get when you generalize results and try to make things work in the messy real world, instead of idealized infinite worlds. The same issue occurs in the multi-armed bandit problem, where the optimal infinite solution is simple, but finite solutions are incredibly complicated (or simple but require brute force).

But if you do this, you don't need the independence axiom. You can be consistent and avoid money pumping without it, by not making decisions in isolation, but considering the entire probability space of decisions you will ever make, and choosing the best policies to navigate them.

It's interesting to note this actually solves some other problems. Such an agent would pick a policy that one-boxes on Newcomb's problems, simply because that is the optimal policy. Whereas a straightforward implementation of expected utility doesn't care.

But what if you really like the other mathematical properties of expected utility? What if we can just keep it and change something else? Like the probability function or the utility function?

Well the probability function is sacred IMO. Events should have the same probability of happening (given your prior knowledge), regardless of what utility function you have, or what you are trying to optimize. And modifying it is probably inconsistent too. An agent could exploit you by offering you bets in the areas where your beliefs are forced to be different from reality.

The utility function is not necessarily sacred though. It is inherently subjective, with the goal of just producing the behavior we want. Maybe there is some modification to it that could fix these problems.

It seems really inelegant to do this. We had a nice beautiful system where you could just count the number of lives saved, and maximize that. But assume we give up on that. How can we change the utility function to make it work?

Well you could bound utility to get out of mugging situations. After a certain level, your utility function just stops. It can't get any higher.

But then you are stuck with a bound. If you ever reach it, then you suddenly stop caring about saving any more lives. Now it's possible that your true utility function really is bounded. But it's not a fully general solution for all utility functions. And I don't believe that human utility is actually bounded, but that will have to be a different post.

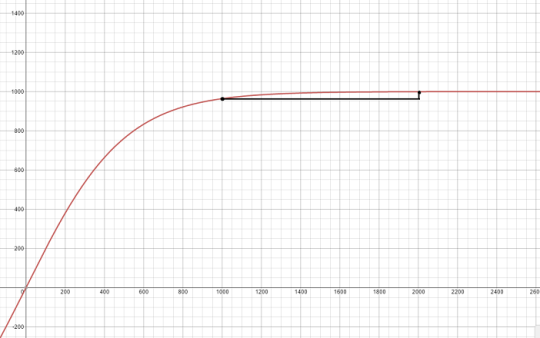

You could transform the utility function so it is asymptotic. But this is just a continuous bound. It doesn't solve much. It still makes you care less and less about obtaining more utility, the closer you get to it.

Say you set your asymptote around 1,000. It can be much larger, but I need an example that is manageable. Now, what happens if you find yourself to exist in a world where all utilities are multiplied by a large number? Say 1,000. E.g. you save 1,000 lives in situations where before, you would have saved only 1.

An example asymptoting function that is capped at 1,000. Notice how 2,000 is only slightly higher than 1,000, and everything after that is basically flat.

Now the utility of each additional life is diminishing very quickly. Saving 2,000 lives might have only 0.001% more utility than 1,000 lives.

This means that you would not take a 1% risk of losing 1,000 people, for a 99% chance at saving 2,000.

This is the exact opposite situation of Pascal's mugging! The probability of the reward is very high. Why are we refusing such an obviously good trade?

What we wanted to do was make it ignore really low probability bets. What we actually did was just make it stop caring about big rewards, regardless of the probability.
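The refusal described above can be checked numerically. This is only a sketch: the post does not give the exact asymptotic function, so the exponential form and the `SCALE` constant are assumptions, as is the reading that the 1% branch means saving nobody.

```python
from math import exp

CAP = 1000.0    # asymptote from the example above
SCALE = 100.0   # assumed steepness; not specified in the post

def bounded_utility(lives: float) -> float:
    """Saturating utility that approaches CAP as the number of lives grows."""
    return CAP * (1.0 - exp(-lives / SCALE))

def linear_utility(lives: float) -> float:
    """Utility that simply counts lives saved."""
    return float(lives)

def expected(lottery, utility):
    """Expected utility of a list of (probability, lives_saved) pairs."""
    return sum(p * utility(x) for p, x in lottery)

keep_1000 = [(1.0, 1000)]
gamble = [(0.99, 2000), (0.01, 0)]  # assumed reading: 1% chance of saving nobody

print(expected(gamble, linear_utility) > expected(keep_1000, linear_utility))
print(expected(gamble, bounded_utility) > expected(keep_1000, bounded_utility))
```

Under the linear utility the gamble is clearly better. Under the saturating one, 2,000 lives are worth almost exactly the same as 1,000, so the 1% downside is enough to make the agent refuse.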

No modification to it can fix that. Because the utility function is totally indifferent to probability. That's what the decision procedure is for. That's where the real problem is.

In researching this topic I've seen all kinds of crazy resolutions to Pascal's Mugging. Some try to attack the exact thought experiment of an actual mugger. And miss the general problem of low probability events with large rewards. Others try to come up with clever arguments why you shouldn't pay the mugger. But not any general solution to the problem. And not one that works under the stated premises, where you care about saving human lives equally, and where you assign the mugger less than 1/3↑↑↑3 probability.

In fact Pascal's Mugger was originally written just to be a formalization of Pascal's original wager. Pascal's wager was dismissed for reasons like involving infinite utilities, and the possibility of an "anti-god" that exactly cancels the benefits out. Or that God wouldn't reward fake worshippers. People mostly missed the whole point about whether or not you should take low probability, high reward bets.

Pascal's Mugger showed that, no, it works fine in finite cases, and the probabilities do not have to exactly cancel each other out.

Some people tried to fix the problem by adding hacks on top of the probability or utility functions. I argued against these solutions above. The problem is fundamentally with the decision procedure of expected utility.

I've spoken to someone who decided to just bite the bullet. He accepted that our intuition about big numbers is probably wrong, and we should just do what the math tells us.

But even that doesn't work. One of the points made in the original Pascal's Mugging post is that EU doesn't even converge. There is a hypothesis which has even less probability than the mugger, but promises 3↑↑↑↑3 utility. And a hypothesis even less probable than that which promises 3↑↑↑↑↑3 utility, and so on. Expected utility is utterly dominated by increasingly improbable hypotheses. The expected utility of all actions approaches positive or negative infinity.
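A toy version of the non-convergence, using numbers far tamer than up-arrow notation. It assumes a family of hypotheses where the k-th has probability 1/2^k and promises 3^k utility; both choices are stand-ins for "utility grows faster than probability shrinks".

```python
from fractions import Fraction

def partial_expected_utility(n_hypotheses: int) -> Fraction:
    """Sum of probability * utility over the first n_hypotheses hypotheses."""
    total = Fraction(0)
    for k in range(1, n_hypotheses + 1):
        probability = Fraction(1, 2**k)  # shrinks geometrically
        utility = 3**k                   # grows faster than the probability shrinks
        total += probability * utility
    return total

for n in (1, 10, 20, 40):
    print(n, float(partial_expected_utility(n)))
```

Each extra, less probable hypothesis adds more to the sum than the one before it, so the partial sums grow without bound instead of settling on a value.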

Expected utility is at the heart of the problem. We don't really want the average of our utility function over all possible worlds. No matter how big the numbers are or improbable they may be. We don't really want to trade away utility from the majority of our probability mass to infinitesimal slices of it.

The whole justification for EU being optimal in the infinite case, doesn't apply to the finite real world. The axioms that imply you need it to be consistent aren't true if you don't assume independence. So it's not sacred, and we can look at alternatives.

Median utility is just a first attempt at an alternative. We probably don't really want to maximize median utility either. Stuart Armstrong suggests using the mean of quantiles. There are probably better methods too. In fact there is an entire field of summary statistics and robust statistics, that I've barely looked at yet.

We can generalize and think of agents as having two utility functions. The regular utility function, which just gives a numerical value representing how preferable an outcome is. And a probability preference function, which gives a numerical value to each probability distribution of utilities.

Imagine we want to create an AI which acts the same as the agent would, given the same knowledge. Then we would need to know both of these functions. Not just the utility function. And they are both subjective, with no universally correct answer. Any function, so long as it converges (unlike expected utility), should produce perfectly consistent behavior.
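The two-function picture can be sketched as a decision rule that takes both functions as parameters. Everything named here is hypothetical. In particular, `mean_of_quantiles` is one plausible reading of a quantile-based summary and is not claimed to match Stuart Armstrong's exact construction.

```python
from statistics import mean

def quantile(lottery, q):
    """Smallest utility u with P(utility <= u) >= q, for 0 < q <= 1."""
    cumulative = 0.0
    ordered = sorted(lottery, key=lambda pu: pu[1])
    for p, u in ordered:
        cumulative += p
        if cumulative >= q:
            return u
    return ordered[-1][1]  # guard against floating-point rounding

def expected_value(lottery):
    return sum(p * u for p, u in lottery)

def median(lottery):
    return quantile(lottery, 0.5)

def mean_of_quantiles(lottery, n=10):
    """Average of the utilities at quantiles 1/(n+1), ..., n/(n+1) (assumed definition)."""
    return mean(quantile(lottery, i / (n + 1)) for i in range(1, n + 1))

def choose(actions, utility, preference):
    """Pick the action whose distribution of utilities the preference function rates highest."""
    def score(name):
        return preference([(p, utility(outcome)) for p, outcome in actions[name]])
    return max(actions, key=score)

# Hypothetical mugging expressed as outcomes; utility is just the outcome as a float.
actions = {"pay": [(1 - 1e-9, -5), (1e-9, 10**12)], "refuse": [(1.0, 0)]}
for preference in (expected_value, median, mean_of_quantiles):
    print(preference.__name__, choose(actions, float, preference))
```

Swapping the preference function changes the decision while the utility function stays fixed, which is the separation the two-function view is meant to capture.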

28 comments

Comments sorted by top scores.

comment by VincentYu · 2015-09-24T03:00:00.775Z · LW(p) · GW(p)

Downvoted. I'm sorry to be so critical, but this is the prototypical LW mischaracterization of utility functions. I'm not sure where this comes from, when the VNM theorem gets so many mentions on LW.

A utility function is, by definition, that which the corresponding rational agent maximizes the expectation of, by choosing among its possible actions. It is not "optimal as the number of bets you take approaches infinity": first, it is not 'optimal' in any reasonable sense of the word, as it is simply an encoding of the actions which a rational agent would take in hypothetical scenarios; and second, it has nothing to do with repeated actions or bets.

Humans do not have utility functions. We do not exhibit the level of counterfactual self-consistency that is required by a utility function.

The term "utility" used in discussions of utilitarianism is generally vaguely-defined and is almost never equivalent to the "utility" used in game theory and related fields. I suspect that is the source of this never-ending misconception about the nature of utility functions.

Yes, it is common, especially on LW and in discussions of utilitarianism, to use the term "utility" loosely, but don't conflate that with utility functions by creating a chimera with properties from each. If the "utility" that you want to talk about is vaguely-defined (e.g., if it depends on some account of subjective preferences, rather than on definite actions under counterfactual scenarios), then it probably lacks all of the useful mathematical properties of utility functions, and its expectation is no longer meaningful.

Replies from: Houshalter↑ comment by Houshalter · 2015-09-24T05:56:41.996Z · LW(p) · GW(p)

I'm not sure where this comes from, when the VNM theorem gets so many mentions on LW.

I understand the VNM theorem. I'm objecting to it.

A utility function is, by definition, that which the corresponding rational agent maximizes the expectation of

If you want to argue "by definition", then yes, according to your definition utility functions can't be used in anything other than expected utility. I'm saying that's silly.

simply an encoding of the actions which a rational agent would take in hypothetical scenarios

Not all rational agents, as my post demonstrates. An agent following median maximizing would not be describable by any utility function maximized with expected utility. I showed how to generalize this to describe more kinds of rational agents. Regular expected utility becomes a special case of this system. I think generalizing existing ideas and mathematics is a desirable thing sometimes.

It is not "optimal as the number of bets you take approaches infinity"

Yes, it is. If you assign some subjective "value" to different outcomes, and to different things, then maximizing expected u̶t̶i̶l̶i̶t̶y̶ value, will maximize it, as the number of decisions approaches infinity. For every bet I lose at certain odds, I will gain more from others some predictable percent of the time. On average it cancels out.

This might not be the standard way of explaining expected utility, but it's very simple and intuitive, and shows exactly where the problem is. It's certainly sufficient for the explanation in my post.

Humans do not have utility functions. We do not exhibit the level of counterfactual self-consistency that is required by a utility function.

That's quite irrelevant. Sure humans are irrational and make inconsistencies and errors in counterfactual situations. We should strive to be more consistent though. We should strive to figure out the utility function that most represents what we want. And if we program an AI, we certainly want it to behave consistently.

Yes, it is common, especially on LW and in discussions of utilitarianism, to use the term "utility" loosely, but don't conflate that with utility functions by creating a chimera with properties from each. If the "utility" that you want to talk about is vaguely-defined (e.g., if it depends on some account of subjective preferences, rather than on definite actions under counterfactual scenarios), then it probably lacks all of the useful mathematical properties of utility functions, and its expectation is no longer meaningful.

Again, back to arguing by definition. I don't care what the definition of "utility" is. If it would please you to use a different word, then we can do so. Maybe "value function" or something. I'm trying to come up with a system that will tell us what decisions we should make, or program an AI to make. One that fits our behavior and preferences the best. One that is consistent and converges to some answer given a reasonable prior.

You haven't made any arguments against my idea or my criticisms of expected utility. It's just pedantry about the definition of a word, when its meaning in this context is pretty clear.

Replies from: AlexMennen, Lumifer, redding↑ comment by AlexMennen · 2015-09-24T18:40:37.571Z · LW(p) · GW(p)

You're missing VincentYu's point, which is also a point I have made to you earlier: the utility function in the conclusion of the VNM theorem is not the same as a utility function that you came up with a completely different way, like by declaring linearity with respect to number of lives.

If you assign some subjective "value" to different outcomes, and to different things, then maximizing expected u̶t̶i̶l̶i̶t̶y̶ value, will maximize it, as the number of decisions approaches infinity. For every bet I lose at certain odds, I will gain more from others some predictable percent of the time. On average it cancels out.

This might not be the standard way of explaining expected utility, but it's very simple and intuitive, and shows exactly where the problem is. It's certainly sufficient for the explanation in my post.

This is an absurd strawman that has absolutely nothing to do with the motivation for EU maximization.

Replies from: Houshalter↑ comment by Houshalter · 2015-09-25T03:55:12.765Z · LW(p) · GW(p)

You're missing VincentYu's point, which is also a point I have made to you earlier: the utility function in the conclusion of the VNM theorem is not the same as a utility function that you came up with a completely different way, like by declaring linearity with respect to number of lives.

I discussed this in my post. I know VNM is indifferent to what utility function you use. I know the utility function doesn't have to be linear. But I showed that no transformation of it fixes the problems or produces the behavior we want.

This is an absurd strawman that has absolutely nothing to do with the motivation for EU maximization.

It's not a strawman! I know there are multiple ways of deriving EU. If you derive it a different way, that's fine. It doesn't affect any of my arguments whatsoever.

Replies from: AlexMennen↑ comment by AlexMennen · 2015-09-25T05:37:59.539Z · LW(p) · GW(p)

But I showed that no transformation of it fixes the problems or produces the behavior we want.

No, you only tried two: linearity, and a bound that's way too low.

It's not a strawman! I know there are multiple ways of deriving EU. If you derive it a different way, that's fine. It doesn't affect any of my arguments whatsoever.

You picked a possible defense of EU maximization that no one ever uses to defend EU maximization, because it is stupid and therefore easy for you to criticize. That's what a strawman is. You use your argument against this strawman to criticize EU maximization without addressing the real motivations behind it, so it absolutely does affect your arguments.

↑ comment by Lumifer · 2015-09-24T14:38:08.705Z · LW(p) · GW(p)

I don't care what the definition of "utility" is.

It's a word you use with wild abandon. If you want to communicate (as opposed to just spill a mind dump onto a page), you should care because otherwise people will not understand what you are trying to say.

I'm trying to come up with a system that will tell us what decisions we should make

There are a lot of those, starting with WWJD and ending with emulating nature that is red in tooth and claw. The question is on which basis will you prefer a system over another one.

Replies from: Houshalter↑ comment by Houshalter · 2015-09-25T03:55:15.362Z · LW(p) · GW(p)

It's a word you use with wild abandon. If you want to communicate (as opposed to just spill a mind dump onto a page), you should care because otherwise people will not understand what you are trying to say.

Everyone except VincentYu seems to understand what I'm saying. I do not understand where people are getting confused. The word "utility" has more meanings than "that thing which is produced by the VNM axioms".

The question is on which basis will you prefer a system over another one.

The preference should be to what extent it would make the same decisions you would. This post was to argue that expected utility doesn't and cannot do that. And to show some alternatives which might.

Replies from: Lumifer↑ comment by Lumifer · 2015-09-25T14:44:20.076Z · LW(p) · GW(p)

I do not understand where people are getting confused.

I just told you.

If you want to understand where people are getting confused, perhaps you should listen to them.

The preference should be to what extent it would make the same decisions you would.

Huh? First, why would I need a system to make the same decisions I'm going to make by default? Second, who is that "you"? For particular values of "you", building a system that replicates the preferences of that specific individual is going to be a really bad idea.

Replies from: gjm↑ comment by redding · 2015-09-24T16:29:34.772Z · LW(p) · GW(p)

You say you are rejecting Von Neumann utility theory. Which axiom are you rejecting?

https://en.wikipedia.org/wiki/Von_Neumann–Morgenstern_utility_theorem#The_axioms

Replies from: Vaniver, Houshalter↑ comment by Vaniver · 2015-09-24T16:59:22.498Z · LW(p) · GW(p)

The last time this came up, the answer was:

That for any bet with an infinitesimally small value of p, there is a value of u high enough that I would take it.

This is, as pointed out there, not one of the axioms.

↑ comment by Houshalter · 2015-09-25T03:55:43.895Z · LW(p) · GW(p)

The axiom of independence. I did mention this in the post.

comment by gwern · 2016-01-10T19:14:42.639Z · LW(p) · GW(p)

I like the Kelly criterion response, which brings in gambler's ruin and treats a more realistic setup (limited portfolio of resources). You don't bet on extremely unlikely payoffs, because the increased risk of going bankrupt & being unable to make any bets ever again reduces your expected utility growth.

For example, to use the KC, if someone offers me a 100,000x payoff at 1/90,000 for \$1 and I have \$10, then the KC says that the fraction of my money to wager is (p * (b + 1) - 1) / b:

b is the net odds received on the wager ("b to 1"); that is, you could win \$b (on top of getting back your \$1 wagered) for a \$1 bet

p is the probability of winning

- then:

((1/90000) * ((10*100000) + 1) - 1) / (10*100000) = 1.011112222e-05

10 * 1.011112222e-05 = \$0.0001011112222

So I would bet extraordinarily small amounts on each such opportunity and maybe, since 1/100th of a penny is smaller than the offered bet of \$1, I would wind up not betting at all on this opportunity despite being +EV. But as my portfolio gets bigger, or the probability of payoff gets bigger and less exotic (lowering the probability of a long enough run of bad luck to ruin a gambler), I think the KC asymptotically turns into just expected-value maximization as you become more able to approximate an ensemble which can shrug off losses; somewhat like a very large Wall Street company which has enough reserves to play the odds almost indefinitely.
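The arithmetic above can be reproduced with a few lines. This sketch plugs in the same figures used in the calculation (including `10*100000` for b, taken as given) and introduces a hypothetical helper, `kelly_fraction`, for the formula.

```python
def kelly_fraction(p: float, b: float) -> float:
    """Kelly fraction (p*(b+1) - 1) / b; a value <= 0 means do not bet."""
    return (p * (b + 1) - 1) / b

p = 1 / 90000          # probability of winning
b = 10 * 100000        # the figure plugged in for b in the calculation above
bankroll = 10.0        # dollars available

fraction = kelly_fraction(p, b)
stake = bankroll * fraction
print(fraction, stake)
print(stake < 1.0)     # the suggested stake is below the $1 minimum bet
```

Because the suggested stake is far below the \$1 minimum, the rule amounts to declining the bet at this bankroll, even though the bet is +EV.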

Using KC, which incorporates one's mortality, as well as a reasonable utility function on money like a log curve (since the marginal value of money clearly does diminish), seems like it resolves a lot of problems with expected value.

Not sure who first made this objection but one paper is http://rsta.royalsocietypublishing.org/content/369/1956/4913

comment by Dagon · 2015-09-24T00:56:09.798Z · LW(p) · GW(p)

A lot of your bounding concerns go away if you stop talking about utility without reference to resources. Mugging and decisions don't change your utility directly, they change your situation, which affects your utility. You don't care less and less about getting utility, you get less and less utility from more stuff. I think that's a bullet you need to bite: in a universe with 2000 sentients, you probably do prefer to save 1000 over creating 2000.

I think you're simply wrong when you say things like

The whole justification for EU being optimal in the infinite case, doesn't apply to the finite real world

Classical Decision Theory (what I think you mean by "EU" ; CDT is a process which maximizes EU) applies for each and every finite decision - there is no such thing as the infinite case. Reading the comments of the various "problems" posts you link to (thank you for that, by the way - it's way easier to follow than if you only restate them without linking) is informative - none of them are actually compelling arguments against CDT, they're arguments about assigning ludicrously high probabilities to nigh-impossible experiences.

Replies from: Houshalter↑ comment by Houshalter · 2015-09-24T03:23:49.702Z · LW(p) · GW(p)

Classical Decision Theory (what I think you mean by "EU" ; CDT is a process which maximizes EU)

Well it's not really specific to causal decision theory. I believe it applies to timeless decision theory and anything else that uses EU.

applies for each and every finite decision - there is no such thing as the infinite case.

Well yes you can run it in the finite case of course. But it's only guaranteed to be optimal as the number of bets approaches infinity. That's the justification for it that I am disputing.

Reading the comments of the various "problems" posts you link to (thank you for that, by the way - it's way easier to follow than if you only restate them without linking)

I'm not sure if you're being sarcastic, but there's not much to link to. A lot of this discussion I am referring to happened over IRC or is scattered in deeply nested comment threads. My post isn't intended to be a reply to any one specific thing, but a general argument against expected utility.

none of them are actually compelling arguments against CDT, they're arguments about assigning ludicrously high probabilities to nigh-impossible experiences.

I've seen that argument before. I dismissed it in the post because I don't think post hoc modifying the probability function is a very elegant or general solution to this problem. There is no dispute that muggers being correct are incredibly low probability. The problem is that utility can grow much faster under any reasonable prior probability distribution.

Replies from: Dagon↑ comment by Dagon · 2015-09-24T05:53:19.033Z · LW(p) · GW(p)

What exactly do you mean "guaranteed to be optimal"? If you assign probabilities correctly and you understand your situation->utility mapping, your optimal choice is that which maximizes the utility in the probability-weighted futures you will experience. That's as optimal as it gets, the word "guarantee" is confusing. It's kind of implied by the name - if you make any other choice, you expect to get less utility.

I don't think post-hoc modification of the probability is what I'm saying. I'm recommending sane priors that give you consistent beliefs that don't include non-infinitesimal chances of insane-magnitude events.

Replies from: Houshalter↑ comment by Houshalter · 2015-09-24T06:29:10.935Z · LW(p) · GW(p)

In any finite case, probability is involved. You could, just by chance, lose every bet you take. So no method is guaranteed to be optimal. (Unless you of course specify what criterion to decide what probability distribution of utilities is optimal. Like the one with the highest mean or median, or some other estimator.)

However, in the infinite case (which I admit is kind of hand wavy, but this is just the simplest intuitive explanation), the probabilities cancel out. You will win exactly 50% of bets you take at 50% odds. So you will win more than you lose on average, on any bet you take with positive expected utility. And lose more than you win on any with negative expected utility. Therefore taking any bet with positive expected utility is optimal (not counting the complexities involved with losing money that could be invested at other bets at a future time.)

I'm recommending sane priors that give you consistent beliefs that don't include non-infinitesimal chances of insane-magnitude events.

Solomonoff induction is a perfectly sane prior. It will produce perfectly reasonable predictions, and I'd expect it to be right far more than any other method. But it doesn't arbitrarily discount hypotheses just because they contain more magnitude.

But it's not specific to Solomonoff induction. I don't believe that high magnitude events are impossible. An example stolen from EY, what if we discover the laws of physics allow enormous amounts of computation? Or that the universe is infinite? Or endless? And/or that entropy can be reversed, or energy created? Or any number of other possibilities?

If so, then we humans, alive today, have the possibility of creating and influencing an infinite number of other beings. Every decision you make today would be a Pascal's wager situation, with a possibility of affecting an infinite number of beings for an endless amount of time.

We can't argue about priors of course. If you really believe that, as a prior, that situation is impossible, no argument can convince you otherwise. But I don't think you really do believe it's impossible, or that it's a good model of reality. It seems more like a convenient way of avoiding the mugging.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2015-09-24T11:32:23.610Z · LW(p) · GW(p)

We can't argue about priors of course.

Why not? In any practical statistical problem, a Bayesian must choose a prior, therefore must think about what prior would be appropriate. To think is to have an argument with oneself, and when two statisticians discuss the matter, they are arguing with each other.

So people manifestly do argue about priors; which you say is impossible.

Replies from: Houshalter↑ comment by Houshalter · 2015-09-24T20:11:01.817Z · LW(p) · GW(p)

If two agents have different priors, then they will always come to different conclusions, even if they have the same evidence and arguments available to them. It's the lowest level of debate. You can't go any further.

Choosing a good prior for a statistical model is a very different thing than actually talking about your own prior. If parent comment really believes, a priori, that something has 1/3^^^3 probability, then no argument or evidence could convince him otherwise.

Replies from: Richard_Kennaway, Lumifer↑ comment by Richard_Kennaway · 2015-09-24T21:53:41.995Z · LW(p) · GW(p)

Choosing a good prior for a statistical model is a very different thing than actually talking about your own prior.

Can you clarify what you mean by "your own prior", contrasting it with "choosing a good prior for a statistical model"?

This is what I think you mean. A prior for a statistical model, in the practice of Bayesian statistics on practical problems, is a distribution over a class of hypotheses ℋ (often, a distribution for the parameters of a statistical model), which one confronts with the data D to compute a posterior distribution over ℋ by Bayes' theorem. A good prior is a compromise between summarising existing knowledge on the subject and being open to substantial update away from that knowledge, so that the data have a chance to be heard, even if they contradict it. The data may show the original choice of prior to have been wrong (e.g. the prior specified parameters for a certain family of distributions, while the data clearly do not belong to that family for any value of the parameters). In that case, the prior must be changed. This is called model checking. It is a process that in a broad and intuitive sense can be considered to be in the spirit of Bayesian reasoning, but mathematically is not an application of Bayes' theorem.

The broad and intuitive sense is that the process of model checking can be imagined as involving a prior that is prior to the one expressed as a distribution over ℋ. The activity of updating a prior over ℋ by the data D was all carried out conditional upon the hypothesis that the truth lay within ℋ, but when that hypothesis proves untenable, one must enlarge ℋ to some larger class. And then, if the data remain obstinately poorly modelled, to a larger class still. But there cannot be an infinite regress: ultimately (according to the view I am extrapolating from your remarks so far) you must have some prior over all possible hypotheses, a universal prior, beyond which you cannot go. This is what you are referring to as "your own prior". It is an unchangeable part of you: even if you can get to see what it is, you are powerless to change it, for to change it you would have to have some prior over an even larger class, but there is no larger class.

Is this what you mean?

ETA: See also Robin Hanson's paper putting limitations on the possibility of rational agents having different priors. Irrational agents, of course, need not have priors at all, nor need they perform Bayesian reasoning.

↑ comment by Lumifer · 2015-09-24T20:14:22.260Z · LW(p) · GW(p)

If two agents have different priors, then they will always come to different conclusions

That's just plain false, and obviously so.

Replies from: Houshalter↑ comment by Houshalter · 2015-09-24T21:58:25.737Z · LW(p) · GW(p)

No it is not. If you put different numbers into the prior, then the probability produced by a Bayesian update will always be different. If the evidence is strong enough, it might not matter too much. But if one of the priors differs by many orders of magnitude, then it matters quite a lot.

Replies from: entirelyuseless↑ comment by entirelyuseless · 2015-09-25T14:43:18.233Z · LW(p) · GW(p)

If people have different priors both for the hypothesis and for the evidence, it is obvious, as Lumifer said, that those can combine to give the same posterior for the hypothesis, given the evidence, since I can make the posterior any value I like by setting the prior for the evidence appropriately.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2015-09-29T09:54:35.046Z · LW(p) · GW(p)

You don't get to set the prior for the evidence. Your prior distribution over the evidence is determined by your prior over the hypotheses by P(E) = the sum of P(E|H)P(H) over all hypotheses H. For each H, the distribution P(E|H) over all E is what H is.

But the point holds, that the same evidence can update different priors to identical posteriors. For example, one of the four aces from a deck is selected, not necessarily from a uniform distribution. Person A's prior distribution for which ace it is: spades 0.4, clubs 0.4, hearts 0.1, diamonds 0.1. B's prior is 0.2, 0.2, 0.3, 0.3. Evidence is given to them: the card is red. They reach the same posterior: spades 0, clubs 0, hearts 0.5, diamonds 0.5.
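The ace example can be checked mechanically. Below is a minimal sketch in Python, using exact fractions; the function name `posterior` and the 0/1 likelihood table for "the card is red" are illustrative choices rather than anything from the comment itself. The normalising term is P(E), computed as the sum of P(E|H)P(H) over the four hypotheses.

```python
from fractions import Fraction

SUITS = ("spades", "clubs", "hearts", "diamonds")

def posterior(prior, likelihood):
    """Bayes' rule over a finite hypothesis set: P(H|E) = P(E|H)P(H) / P(E)."""
    joint = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(joint.values())  # P(E) = sum over H of P(E|H)P(H)
    return {h: joint[h] / evidence for h in joint}

# Priors from the example, written as exact fractions.
prior_a = dict(zip(SUITS, map(Fraction, ("0.4", "0.4", "0.1", "0.1"))))
prior_b = dict(zip(SUITS, map(Fraction, ("0.2", "0.2", "0.3", "0.3"))))

# Assumed likelihood of observing "the card is red" under each hypothesis.
is_red = {"spades": 0, "clubs": 0, "hearts": 1, "diamonds": 1}

post_a = posterior(prior_a, is_red)
post_b = posterior(prior_b, is_red)
print(post_a == post_b)
```

The two agents assign different values to P(E) (0.2 for A, 0.6 for B), but after normalisation the posteriors coincide.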

Some might quibble over the use of probabilities of zero, and there may well be a theorem to say that if all the distributions involved are everywhere nonzero the mapping of priors to posteriors is 1-1, but the underlying point will remain in a different form: for any separation between posteriors, however small, and any separation between the priors, however large, some observation is strong enough evidence to transform those priors into posteriors that close. (I have not actually proved this as a theorem, but something along those lines should be true.)
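This is not a proof of the conjectured theorem, only a sketch of the simplest case: a single binary hypothesis, updated in odds form (posterior odds = prior odds × likelihood ratio). The specific priors and likelihood ratio are made-up numbers chosen for illustration.

```python
def update(prior, likelihood_ratio):
    """Posterior probability of H after evidence with the given likelihood ratio."""
    odds = prior / (1 - prior) * likelihood_ratio
    return odds / (1 + odds)

# Two agents whose priors differ by roughly twelve orders of magnitude in odds.
prior_a = 1e-6
prior_b = 1 - 1e-6

weak = 1.0      # uninformative evidence: posteriors equal the priors
strong = 1e12   # hypothetical very strong evidence for H

gap_weak = abs(update(prior_a, weak) - update(prior_b, weak))
gap_strong = abs(update(prior_a, strong) - update(prior_b, strong))
print(gap_weak, gap_strong)
```

With the uninformative observation the gap between the agents stays near 1; with the strong one it shrinks to about one in a million, and a larger likelihood ratio would shrink it further.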

comment by AlexMennen · 2015-09-24T19:04:05.522Z · LW(p) · GW(p)

But then you are stuck with a bound. If you ever reach it, then you suddenly stop caring about saving any more lives.

Just because there's a bound doesn't mean there's a reachable bound. The range of the utility function could be bounded but open. In fact, it probably is.

But it's not a fully general solution for all utility functions.

Pascal's mugger isn't a problem for agents with unbounded utility functions either: they just go ahead and pay the mugger. The fact that this seems irrational to you shows that agents with unbounded utility functions seem so alien that you can't empathize with them. (A caveat to this is that there can be agents with unbounded utility functions, but such that it takes an extremely large amount of evidence to convince them that the probability of getting extremely large utilities is non-negligible, and it is thus extremely difficult to get them to pay Pascal's mugger.)

This is the exact opposite situation of Pascal's mugging! The probability of the reward is very high. Why are we refusing such an obviously good trade?

Because you set the bound too low. The behavior you describe is the desirable behavior when large enough numbers are involved. For example, which do you prefer? (A) 100% chance of a flourishing civilization with 3↑↑↑3 happy lives, or (B) a 99% chance of a flourishing civilization with 3↑↑↑↑3 happy lives and a 1% chance of extinction.
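A toy version of this comparison, as a sketch only: 3↑↑↑3 and 3↑↑↑↑3 cannot be represented, so `SMALL` and `HUGE` below are stand-in numbers, and the bounded utility function `lives / (lives + scale)` is just one hypothetical shape with an open (never reached) bound of 1.

```python
def bounded_utility(lives, scale=10**6):
    """Hypothetical utility with range [0, 1): increasing, but never reaches 1."""
    return lives / (lives + scale)

def linear_utility(lives):
    """Unbounded utility: every extra life counts the same."""
    return lives

def expected(lottery, utility):
    """Expected utility of a lottery given as (probability, lives) pairs."""
    return sum(p * utility(lives) for p, lives in lottery)

SMALL, HUGE = 10**12, 10**100  # stand-ins for 3^^^3 and 3^^^^3

option_a = [(1.0, SMALL)]             # certain flourishing civilization
option_b = [(0.99, HUGE), (0.01, 0)]  # bigger civilization, 1% extinction

bounded_prefers_a = expected(option_a, bounded_utility) > expected(option_b, bounded_utility)
linear_prefers_b = expected(option_b, linear_utility) > expected(option_a, linear_utility)
print(bounded_prefers_a, linear_prefers_b)
```

Under these assumptions the bounded agent takes (A), because `SMALL` already puts it within a millionth of the bound, while the linear agent takes (B).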

But not any general solution to the problem.

Bounded utility functions are a perfectly good general solution.

Replies from: Houshalter↑ comment by Houshalter · 2015-09-25T04:12:00.916Z · LW(p) · GW(p)

But then you are stuck with a bound. If you ever reach it, then you suddenly stop caring about saving any more lives.

Just because there's a bound doesn't mean there's a reachable bound. The range of the utility function could be bounded but open. In fact, it probably is.

I think you are suggesting an asymptotic bound. I literally discuss this just a sentence after what you are quoting.

Pascal's mugger isn't a problem for agents with unbounded utility functions either: they just go ahead and pay the mugger. The fact that this seems irrational to you shows that agents with unbounded utility functions seem so alien that you can't empathize with them.

I also discussed biting the bullet on Pascal's Mugging in the post. The problem isn't just that you will be mugged of all of your money very quickly. It's that expected utility doesn't even converge. All actions have positive or negative expected utility, or are simply undefined. Increasingly improbable hypotheses utterly dominate the calculation.
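To make the non-convergence concrete, here is a toy series, not the actual hypothesis space being discussed: hypothesis k is assumed to have probability 2^-k and utility 4^k, so utilities grow faster than probabilities shrink. The function name and the specific growth rates are illustrative assumptions.

```python
from fractions import Fraction

def partial_sums(n_terms):
    """Running expected-utility totals over hypotheses k = 1..n_terms."""
    total = Fraction(0)
    sums = []
    for k in range(1, n_terms + 1):
        probability = Fraction(1, 2**k)  # probabilities sum to 1 in the limit
        utility = 4**k                   # assumed payoff under hypothesis k
        total += probability * utility
        sums.append(total)
    return sums

sums = partial_sums(20)
last_term = sums[-1] - sums[-2]
print(sums[-1], last_term / sums[-1])
```

The partial sums never settle, and at every cutoff the single least probable hypothesis considered contributes more than half of the total.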

I do think that agents can have unbounded preferences, without wanting to pay the mugger. Or spend resources on low probability bets in general. Median utility and some other alternatives allow this.

Because you set the bound too low. The behavior you describe is the desirable behavior when large enough numbers are involved. For example, which do you prefer? (A) 100% chance of a flourishing civilization with 3↑↑↑3 happy lives, or (B) a 99% chance of a flourishing civilization with 3↑↑↑↑3 happy lives and a 1% chance of extinction.

I currently think that 3↑↑↑↑3 happy lives with 1% chance of extinction is the correct choice. Though I'm not certain. It vastly increases the probability that a given human will find themselves living in this happy civilization, vs somewhere else.

And in this sense, human preferences can't be bounded, because we should always want to make the trade-offs that help a Big Number of humans, no matter how Big.

Replies from: AlexMennen↑ comment by AlexMennen · 2015-09-25T06:30:28.233Z · LW(p) · GW(p)

I think you are suggesting an asymptotic bound. I literally discuss this just a sentence after what you are quoting.

Yes, but you still criticized bounded utility functions in a way that does not apply to asymptotic bounds.

The problem isn't just that you will be mugged of all of your money very quickly. It's that expected utility doesn't even converge.

Ok, that's a good point; non-convergence is a problem for EU maximizers with unbounded utility functions. There are exactly 2 ways out of this that are consistent with the VNM assumptions:

(1) You can omit the gambles on which your utility function does not converge from the domain of your preference relation (the completeness axiom says that the preference relation is defined on all pairs of lotteries, but it doesn't actually say what a "lottery" is, and only relies on it being possible to mix finite numbers of lotteries). If your utility function has sufficiently sharply diminishing returns, then it is possible for it to still converge on all lotteries it could possibly encounter in real life, even while not being bounded. That kind of agent will have the behavior I described in my parenthetical remark in my original comment.

(2) You can just pick some utility for the lottery, without worrying about the fact that it isn't the expected utility of the outcome. The VNM theorem actually gives you a utility function defined directly on lotteries, rather than outcomes, in a way so that it is linear with respect to probability. Whenever you're just looking at lotteries over finitely many outcomes, this means that you can just define the utility function on the outcomes and use expected utility, but the theorem doesn't say anything about the utility function being continuous, and thus doesn't say that the utility of a lottery involving infinitely many outcomes is what you think it should be from the utilities of the outcomes. I've never heard anyone seriously suggest this resolution though, and you can probably rule it out with a stronger continuity axiom on the preference relation.

I don't like either of these approaches, but since realistic human utility functions are bounded anyway, it doesn't really matter.

I do think that agents can have unbounded preferences, without wanting to pay the mugger.

Being willing to pay some Pascal's mugger for any non-zero probability of payoff is basically what it means to have an unbounded utility function in the VNM sense.
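The arithmetic behind that claim, as a rough sketch: cost and payoff are measured in the same utility units, and both helper names are hypothetical. For an unbounded agent, any non-zero probability can be offset by a large enough promised payoff; for a bounded agent, no promise helps once the probability times the bound falls below the cost.

```python
def payoff_needed(probability, cost):
    """Promised utility above which paying has positive expected utility."""
    return cost / probability

def bounded_agent_can_be_mugged(probability, cost, bound):
    """True if some payoff below the bound makes paying worthwhile."""
    return probability * bound > cost

p, cost = 1e-9, 5.0
threshold = payoff_needed(p, cost)

# An unbounded agent pays as soon as the mugger promises more than `threshold`.
unbounded_pays = p * (2 * threshold) > cost

# A bounded agent with (assumed) bound 1e6 cannot be tempted at this probability.
bounded_pays = bounded_agent_can_be_mugged(p, cost, bound=1e6)
print(threshold, unbounded_pays, bounded_pays)
```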

Median utility and some other alternatives allow this.

The question of whether a given median utility maximizer has unbounded preferences doesn't even make sense, because the utility functions that they maximize are invariant under positive monotonic transformations, so any given median-maximizing agent has preferences that can be represented both with an unbounded utility function and with a bounded one.
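A small sketch of that invariance, with made-up lotteries of three equally likely outcomes each. `squash` is one arbitrary strictly increasing map onto (-1, 1); `median_low` is used so the median is always an actual outcome rather than an average of two.

```python
from statistics import mean, median_low

# Hypothetical lotteries: each lists the utilities of equally likely outcomes.
lotteries = {"a": [0, 5, 1000], "b": [1, 4, 6], "c": [-10, 7, 8]}

def squash(u):
    """Strictly increasing map from the reals onto the bounded interval (-1, 1)."""
    return u / (1 + abs(u))

def best(score, transform=lambda u: u):
    """Name of the lottery with the highest score after transforming utilities."""
    return max(lotteries, key=lambda name: score([transform(u) for u in lotteries[name]]))

median_choices = (best(median_low), best(median_low, squash))
mean_choices = (best(mean), best(mean, squash))
print(median_choices, mean_choices)
```

The median maximizer picks the same lottery whether utilities are expressed on the unbounded or the bounded scale, so "bounded" is not a property of its preferences. The expected-value maximizer changes its choice under the same transformation.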

I currently think that 3↑↑↑↑3 happy lives with 1% chance of extinction is the correct choice. Though I'm not certain.

I'm confident that you're wrong about your preferences under reflection, but my defense of that assertion would rely on the independence axiom, which I think I've already argued with you about before, and Benja also defends in a section here.

It vastly increases the probability that a given human will find themselves living in this happy civilization, vs somewhere else.

I was assuming that the humans mentioned in the problem accounted for all (or almost all) of the humans. Sorry if that wasn't clear.