Minor interpretability exploration #2: Extending superposition to different activation functions

post by Rareș Baron · 2025-03-06T11:22:53.528Z


Epistemic status: small exploration without previous predictions, results low-stakes and likely correct.

Introduction

As a personal exercise in building research taste and experience in AI safety, and specifically interpretability, I have done four minor projects, all building upon previously written code. They were done without pre-formulated hypotheses or expectations, merely to check some low-hanging fruit for anything interesting. In the end, they have not yielded major insights, but I hope they will be of some use and interest to people working in these domains.

This is the second project: extending Anthropic's interpretability team's toy model of superposition notebook to 26 more activation functions.

The toy model of superposition results have been redone using the original code, while changing the activation function where appropriate.

TL;DR results

Broadly, there were three main, general observations, all somewhat confusing and suggesting that additional research is needed:

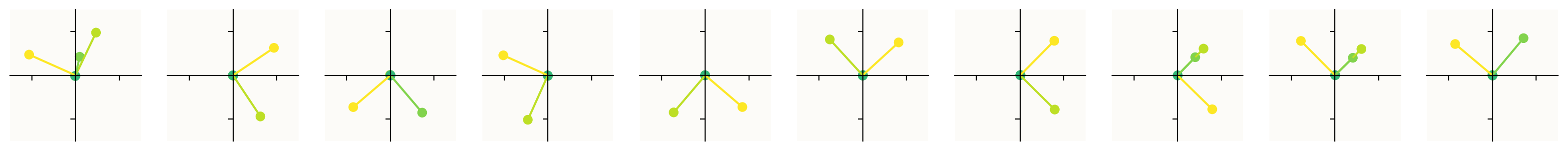

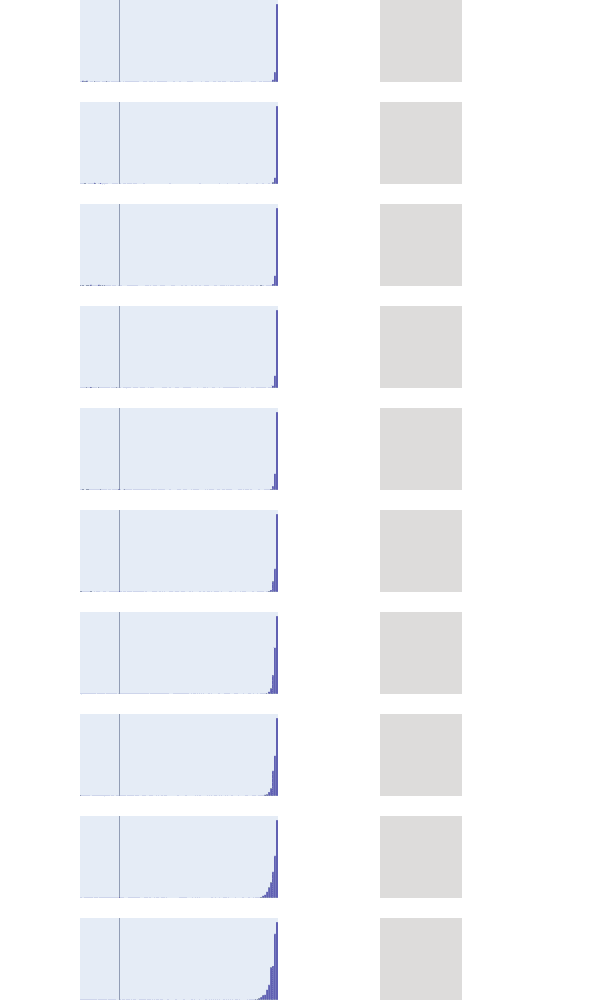

- Losses were in the 10^-4 to 10^-3 range. Loss differed only marginally between the functions.

- The degree of superposition did not seem to be correlated with loss beyond a certain threshold. More superposition does seem to reduce loss, but by at most one order of magnitude.

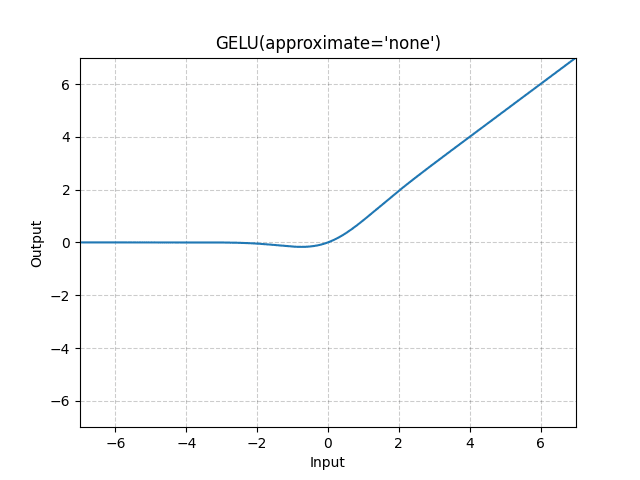

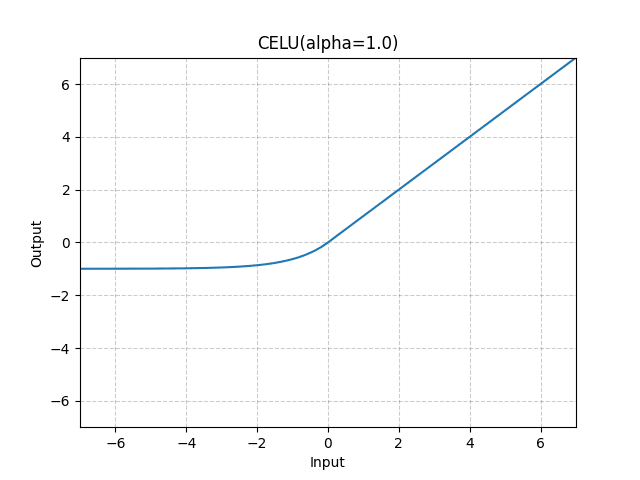

- Small variations in activation functions lead to massive differences in superposition (see CELU vs. GELU), which, however, do not translate into differences in loss.
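To make "degree of superposition" concrete, here is a minimal PyTorch sketch of two measures from Anthropic's toy-models paper: the per-feature interference Σ_{j≠i} (Ŵ_i · W_j)², and the dimensions-per-feature ratio D* = m / ‖W‖_F². It assumes W has shape (m hidden dimensions, n features). The function names are hypothetical, and this is not necessarily the exact quantity behind the plots in this post.

```python
import torch

def feature_superposition(W: torch.Tensor) -> torch.Tensor:
    """Per-feature interference: sum over j != i of (W_hat_i . W_j)^2.

    W has shape (m, n): m hidden dimensions, n features; column i embeds feature i.
    """
    norms = W.norm(dim=0, keepdim=True).clamp_min(1e-8)  # avoid dividing by zero for dead features
    W_hat = W / norms                                     # unit-norm feature directions
    dots = W_hat.T @ W                                    # dots[i, j] = W_hat_i . W_j
    off_diag = 1.0 - torch.eye(W.shape[1], dtype=W.dtype, device=W.device)  # drop the j == i term
    return ((dots * off_diag) ** 2).sum(dim=1)

def dimensions_per_feature(W: torch.Tensor) -> float:
    """D* = m / ||W||_F^2; roughly, values below 1 indicate superposition."""
    m = W.shape[0]
    return m / W.pow(2).sum().item()

# Two features forced into one hidden dimension as an antipodal pair.
W_antipodal = torch.tensor([[1.0, -1.0]])
print(feature_superposition(W_antipodal))   # tensor([1., 1.])
print(dimensions_per_feature(W_antipodal))  # 0.5
```

An orthogonal embedding (e.g. the identity) gives zero interference and D* = 1, so both numbers move in the expected direction as features start sharing dimensions.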

Previous work

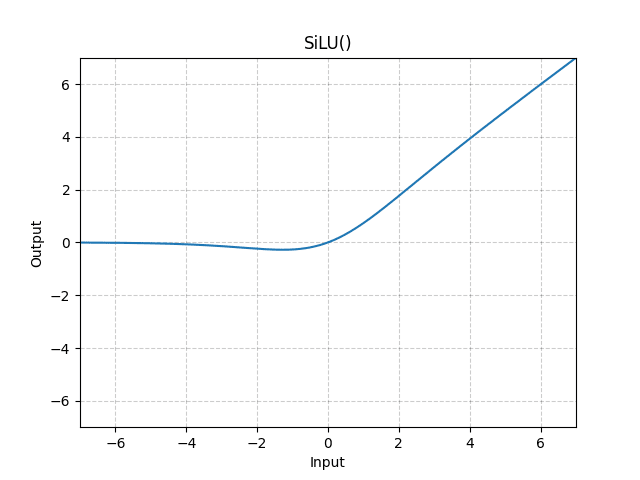

Lad and Kostolansky (2023) extended superposition results to GELU, SiLU, Sigmoid, Tanh, and SoLU. This project extends them to more than 20 additional activation functions, while confirming their findings: GELU/SiLU reduce superposition and polysemanticity while smoothing out ReLU, Sigmoid extends superposition to many more neurons, Tanh eliminates it, and SoLU enforces sparsity.

Methods

The basis for these findings is Anthropic’s toy model of superposition notebook, together with Zephaniah Roe's reproduction and extension ('demonstrating superposition' and 'introduction'). All modifications are straightforward replacements of ReLU, plus extensions of the sparsity regimes.
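A minimal sketch of the kind of model these notebooks train, assuming the standard toy-model setup x̂ = act(Wᵀ W x + b) with an importance-weighted reconstruction loss. The class and function names, the Xavier initialisation, and averaging the loss over batch and features are assumptions for illustration, not a copy of the notebook code. The point is that `act` is the only component swapped between runs.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class ToyModel(nn.Module):
    """Sketch of a toy model of superposition: x_hat = act(W^T W x + b)."""

    def __init__(self, n_features: int, n_hidden: int, act=F.relu):
        super().__init__()
        self.W = nn.Parameter(torch.empty(n_hidden, n_features))
        nn.init.xavier_normal_(self.W)
        self.b = nn.Parameter(torch.zeros(n_features))
        self.act = act  # the only component changed between experiments

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h = x @ self.W.T                      # (batch, n_hidden): compress features
        return self.act(h @ self.W + self.b)  # (batch, n_features): reconstruct

def importance_weighted_mse(x, x_hat, importance):
    """Reconstruction error weighted per feature, averaged over batch and features."""
    return (importance * (x - x_hat) ** 2).mean()

# Usage: identical setup, different activation.
relu_model = ToyModel(n_features=5, n_hidden=2)              # baseline
gelu_model = ToyModel(n_features=5, n_hidden=2, act=F.gelu)  # swapped activation
```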

All resulting notebooks, extracted graphs, tables, and Word files with clean tabular comparisons can be found here.

Results

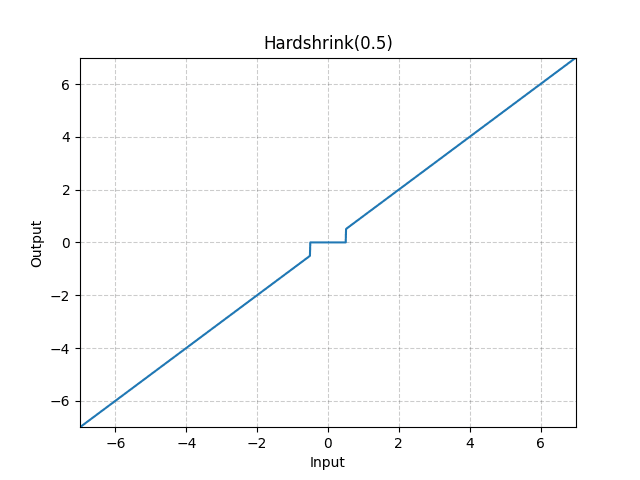

Besides the original ReLU, the 26 activation functions surveyed are the following: LeakyReLU, SELU, CELU, GELU, Sigmoid, Hardsigmoid, SiLU/Swish, Hardswish, Mish, Softplus, Tanh, Hardtanh, ELU, Hardshrink, Softmax, SoLU/Softmax Linear, Logsoftmax, Logsigmoid, Exp, Exp linear, Tanhshrink, ReLU6, ReLU^2, Softshrink, Softsign, and Softmin. Implementations were PyTorch or PyTorch-derived.
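One way to organise such a sweep is a name-to-function registry built from `torch.nn.functional`. This is a hypothetical sketch. SoLU is written as x · softmax(x) following its original definition, ReLU^2 as relu(x)², and the softmax-family functions are applied across the feature dimension (an assumption about how they were used here). "Exp linear" is left out because its exact definition is not given in this post.

```python
import torch
import torch.nn.functional as F

# Hypothetical registry: ReLU plus 25 of the 26 listed functions ("Exp linear" omitted).
# Softmax-family entries normalise over the last (feature) dimension.
ACTIVATIONS = {
    "ReLU": F.relu,
    "LeakyReLU": F.leaky_relu,
    "SELU": F.selu,
    "CELU": F.celu,
    "GELU": F.gelu,
    "Sigmoid": torch.sigmoid,
    "Hardsigmoid": F.hardsigmoid,
    "SiLU": F.silu,
    "Hardswish": F.hardswish,
    "Mish": F.mish,
    "Softplus": F.softplus,
    "Tanh": torch.tanh,
    "Hardtanh": F.hardtanh,
    "ELU": F.elu,
    "Hardshrink": F.hardshrink,
    "Softmax": lambda x: F.softmax(x, dim=-1),
    "SoLU": lambda x: x * F.softmax(x, dim=-1),
    "LogSoftmax": lambda x: F.log_softmax(x, dim=-1),
    "Logsigmoid": F.logsigmoid,
    "Exp": torch.exp,
    "Tanhshrink": F.tanhshrink,
    "ReLU6": F.relu6,
    "ReLU^2": lambda x: F.relu(x) ** 2,
    "Softshrink": F.softshrink,
    "Softsign": F.softsign,
    "Softmin": lambda x: F.softmin(x, dim=-1),
}

x = torch.linspace(-3, 3, 7).reshape(1, 7)
for name, fn in ACTIVATIONS.items():
    print(f"{name:<12} {fn(x).squeeze(0).tolist()}")
```

Keeping each entry a plain callable means it can be passed straight into a model as its activation without further changes.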

General

General observations have been given above.

Specific

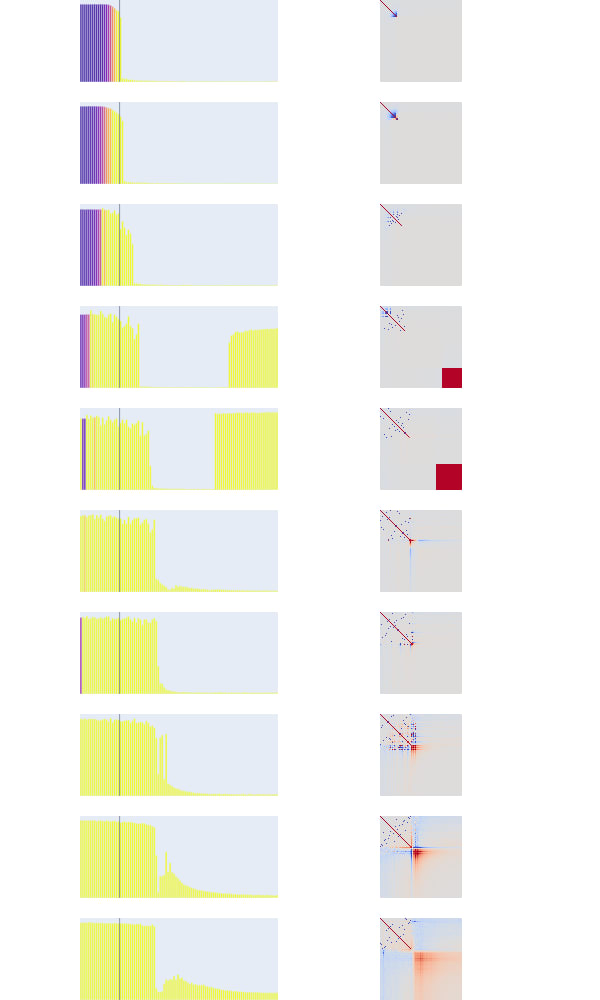

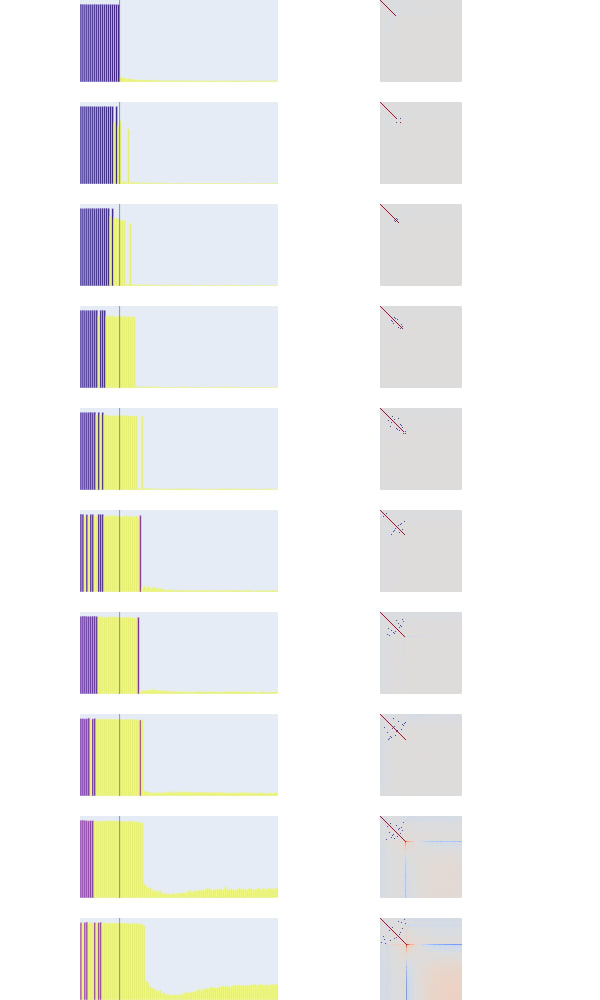

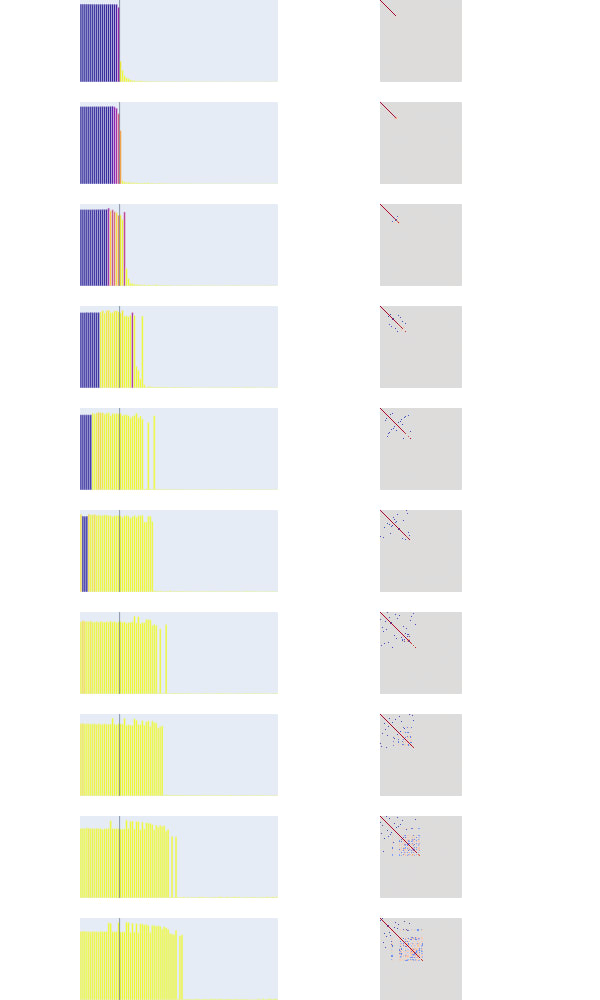

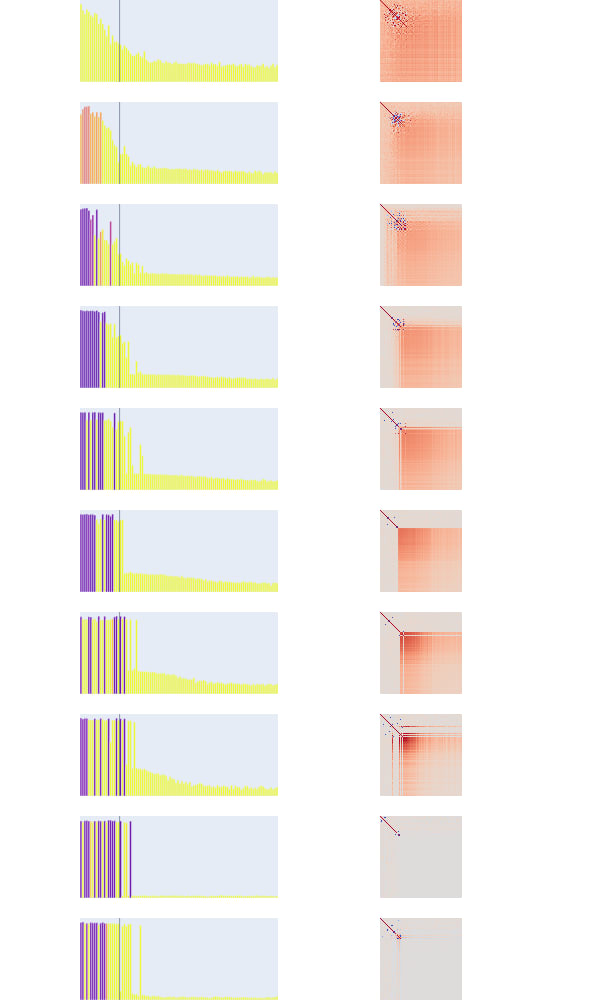

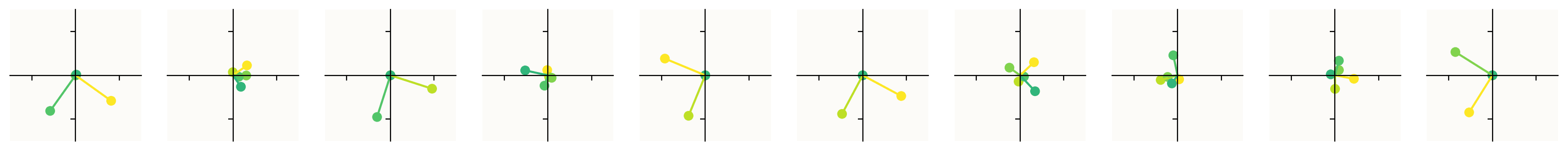

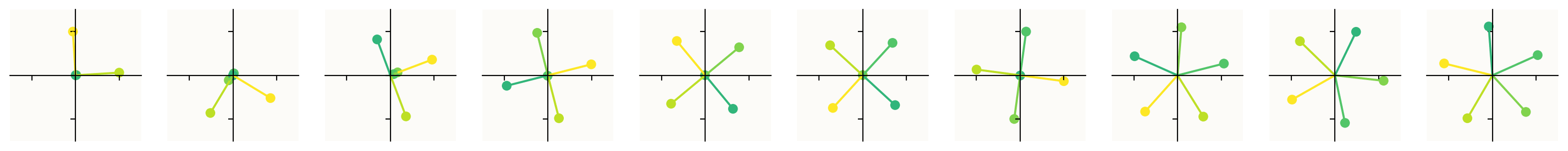

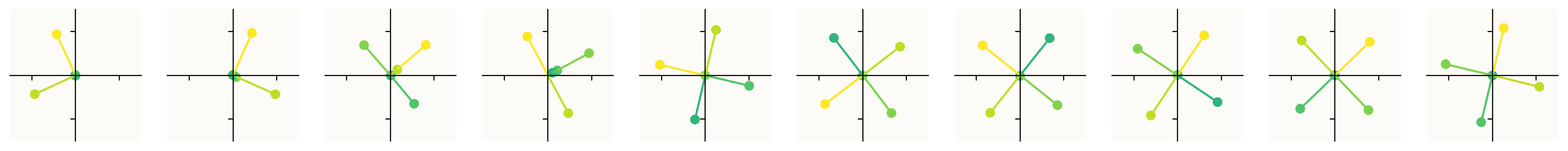

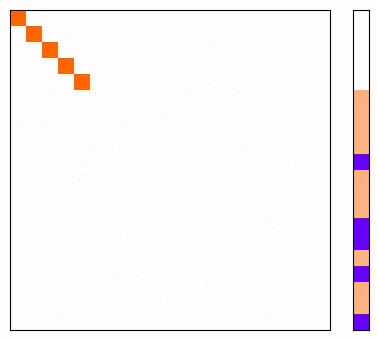

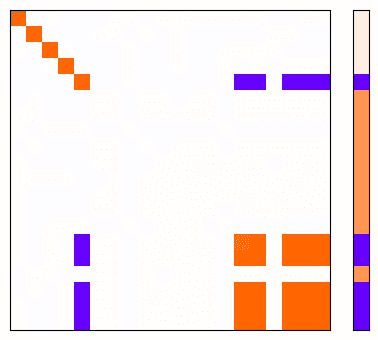

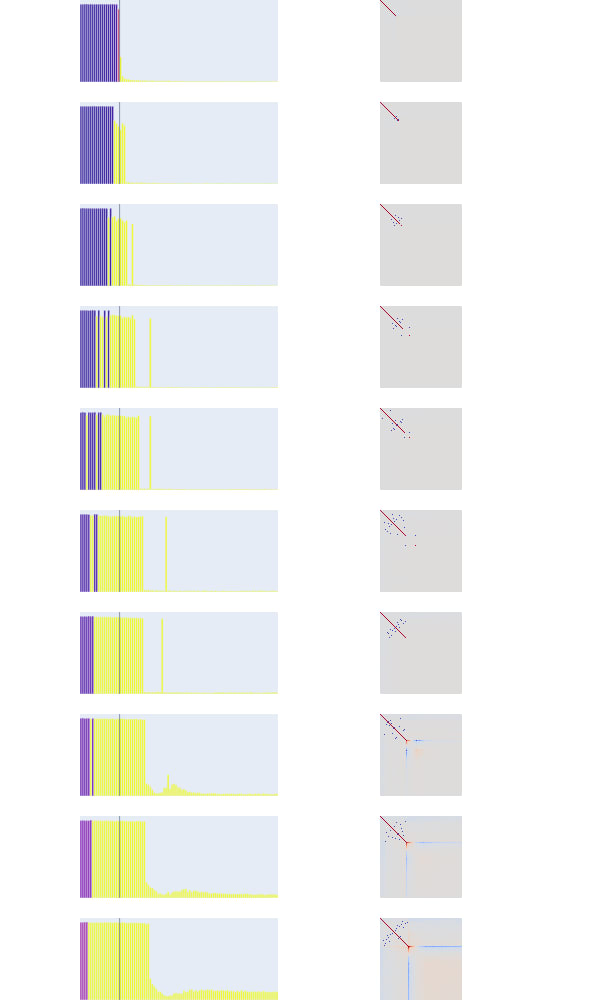

Successive graphs correspond to increasing sparsity (from 0 to 0.999).
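The sparsity regimes can be sketched as follows. This assumes the usual toy-model data distribution in which each feature is independently zero with probability S (the sparsity) and otherwise uniform on [0, 1). The helper name and the intermediate sparsity values are illustrative. Only the 0 and 0.999 endpoints come from the text.

```python
import torch

def sparse_batch(batch_size: int, n_features: int, sparsity: float, generator=None) -> torch.Tensor:
    """Each feature is 0 with probability `sparsity`, otherwise uniform on [0, 1)."""
    values = torch.rand(batch_size, n_features, generator=generator)
    active = torch.rand(batch_size, n_features, generator=generator) >= sparsity
    return values * active  # the bool mask promotes to float

# Illustrative sweep; only the endpoints 0 and 0.999 come from the post.
SPARSITIES = [0.0, 0.5, 0.9, 0.99, 0.999]

g = torch.Generator().manual_seed(0)
for s in SPARSITIES:
    x = sparse_batch(10_000, 20, s, generator=g)
    print(f"sparsity={s:<6} fraction of active features={(x > 0).float().mean().item():.4f}")
```

At S = 0.999 only about one feature in a thousand is active per example, which is the regime where the toy model has the most room to pack features into superposition.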

Specific observations for the functions:

(Hard)sigmoid shows a very strange pattern: enormous, rapid superposition among unimportant features. This may be a bug or a floating-point error. It somewhat contradicts other research (see project #3), which found sigmoid incapable of developing superposition in other models. More research is needed.

- Softsign and Logsigmoid are even more extreme in this regard, with the same caveats.

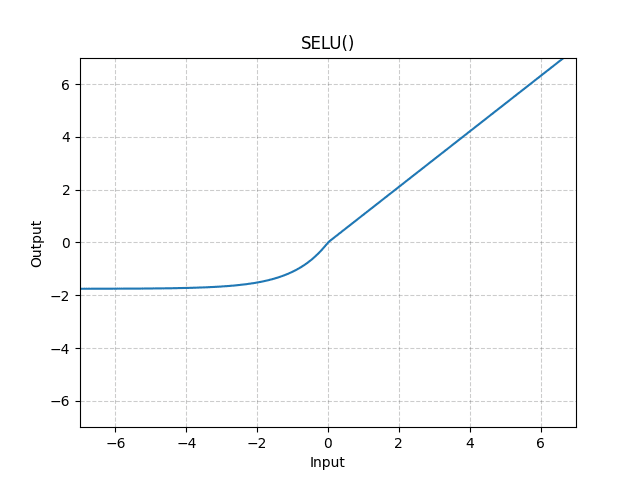

(Hard)tanh and SELU show very limited superposition, likely due to their continuity, symmetry, and limited output.

Softmin and LogSoftmax performed poorly. The former showed an importance-reversing effect, and the latter failed to converge, likely due to instability.
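One possible (unconfirmed) reading of Softmin's importance-reversing effect is that softmin(x) = softmax(−x), so it gives the largest output to the smallest input, the opposite ordering to Softmax. The pure-Python sketch below only illustrates that reversal. It does not show that this is what happened inside the trained model, and the pre-activation values are made up.

```python
import math

def softmax(xs):
    m = max(xs)                          # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def softmin(xs):
    # softmin(x) = softmax(-x): smaller inputs receive larger outputs
    return softmax([-x for x in xs])

pre_acts = [2.0, 1.0, 0.1]               # hypothetical pre-activations, largest first
softmax_out = softmax(pre_acts)
softmin_out = softmin(pre_acts)
print(max(range(3), key=softmax_out.__getitem__))  # 0
print(max(range(3), key=softmin_out.__getitem__))  # 2
```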

Exp(Linear) showed very strange behaviour (and failed to converge at low sparsities). More research is needed.

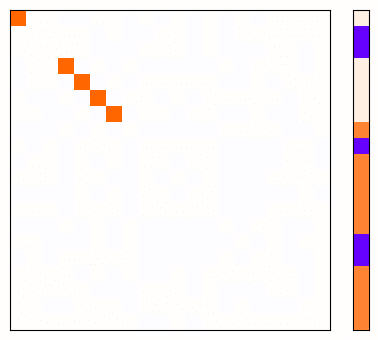

SoLU (bottom) behaves oddly: at first it showed superposition comparable to Softmax's and larger than ReLU's (top), but as sparsity increased it showed less overall (though much more low-level superposition). SoLU was designed to reduce superposition, and that appears to pan out here.
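SoLU is defined as SoLU(x) = x · softmax(x) over a layer. A small pure-Python sketch with made-up pre-activations shows why it tends to push a layer towards one dominant unit. The largest input keeps most of its value while smaller inputs are heavily damped, whereas ReLU passes every positive input through unchanged.

```python
import math

def solu(xs):
    """SoLU(x) = x * softmax(x), computed across one layer's pre-activations."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [x * e / total for x, e in zip(xs, exps)]

def relu(xs):
    return [max(0.0, x) for x in xs]

pre_acts = [3.0, 1.0, 0.5]  # hypothetical pre-activations
print(relu(pre_acts))       # [3.0, 1.0, 0.5]: every positive unit passes through
print([round(v, 3) for v in solu(pre_acts)])
```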

- The shrink functions show a lot of superposition, though of small amplitude. This is likely an artifact of their near-linearity. Still, they produced some superposition, indicating that it is a feature networks will exploit as much as they can.

(200 interp problems, superposition: A-B* 4.10, C* 4.11) GELU (bottom) is mostly just a smoother ReLU (top), showing less superposition over time. However, it showed greater superposition in the 0-sparsity regime, where its non-monotonic “dip” and quadratic behaviour near zero could exploit a bit of superposition among the least important features, while otherwise hindering clearly delineated antipodal pairs. SiLU behaves similarly.
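The "dip" and the quadratic behaviour can be checked numerically. Exact GELU is x·Φ(x), with Φ the standard normal CDF, so near zero GELU(x) ≈ x/2 + x²/√(2π). The sketch below is a plain grid search under those standard definitions (SiLU taken as x·sigmoid(x)) that locates the minima of GELU and SiLU on the negative axis. The helper name is hypothetical.

```python
import math

def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), Phi = standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def silu(x: float) -> float:
    """SiLU / Swish with beta = 1: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))

def grid_min(f, lo=-5.0, hi=0.0, steps=50_001):
    """Return (min value, argmin) of f on an evenly spaced grid over [lo, hi]."""
    xs = (lo + (hi - lo) * i / (steps - 1) for i in range(steps))
    return min((f(x), x) for x in xs)

gelu_min, gelu_at = grid_min(gelu)   # roughly -0.17 near x = -0.75
silu_min, silu_at = grid_min(silu)   # roughly -0.28 near x = -1.28

# Quadratic term near zero: (GELU(h) - h/2) / h^2 approaches 1/sqrt(2*pi), about 0.399
h = 1e-3
print((gelu(h) - h / 2) / h**2)
```

SiLU's dip is both deeper and wider than GELU's, which is one way to see why the two behave similarly but not identically.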

- ReLU6 and ReLU^2 did not significantly change ReLU’s superposition.

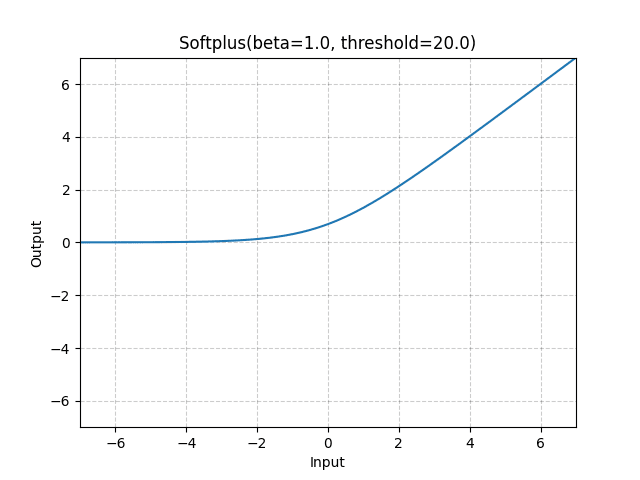

- (LeakyReLU), GELU and Softplus showed the most superposition. Possible causes:

- A sharper gradient/elbow, which more effectively caps certain input intervals (most pronounced with ReLU). However, this would imply a larger difference between Swish and Hardswish, which is not observed.

- Some activation functions let through negative values, even if only down to -1 or -2, which hinders the masking effect necessary for superposition. This might explain the prominent superposition of GELU and Softplus ((softly) capped at 0) versus the smaller superposition of CELU and ELU. Further supporting evidence: the only function that (quickly) approaches -2 is SELU (its lower bound is about -1.76), which shows the lowest level of superposition in its group.
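To put numbers on "let through negative values", the sketch below estimates how far below zero each function can go. It uses the standard definitions: ELU and CELU with α = 1, and SELU with PyTorch's fixed α ≈ 1.6733 and scale ≈ 1.0507. It is a simple grid search over [−20, 0], so the values approximate each function's infimum. The helper `most_negative` is hypothetical.

```python
import math

SELU_ALPHA = 1.6732632423543772   # PyTorch's fixed SELU constants
SELU_SCALE = 1.0507009873554805

def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

def celu(x, alpha=1.0):
    return max(0.0, x) + min(0.0, alpha * (math.exp(x / alpha) - 1.0))

def selu(x):
    return SELU_SCALE * elu(x, SELU_ALPHA)

def gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def softplus(x):
    return math.log1p(math.exp(x))

def most_negative(f, lo=-20.0, hi=0.0, steps=20_001):
    """Approximate inf f(x) on [lo, hi] by grid search."""
    return min(f(lo + (hi - lo) * i / (steps - 1)) for i in range(steps))

for name, f in [("Softplus", softplus), ("GELU", gelu), ("ELU", elu), ("CELU", celu), ("SELU", selu)]:
    print(f"{name:<8} most negative output ~ {most_negative(f):+.3f}")
```

The ordering this produces (Softplus above zero, GELU slightly below, ELU/CELU near −1, SELU lowest) matches the ordering of superposition the post reports for these functions.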

Softplus’s prolific superposition managed to touch every single feature, regardless of importance. More research is needed.

(Hard)swish showed very early superposition: their “quirky”, broad shapes affected even the 0-sparsity regime, leading to a preference for less important features.

- Hardness generally reduces (or slows the development of) superposition. This is puzzling.
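How different are a smooth function and its "hard" counterpart in practice? Assuming SiLU (Swish with β = 1) and Hardswish as documented for PyTorch, x·ReLU6(x + 3)/6, this sketch measures the largest pointwise gap between the two curves on [−8, 8]. The gap peaks at |x| = 3, where Hardswish switches regime, and stays around 0.14. This bears on the earlier remark that the Swish/Hardswish difference is smaller than a sharp-elbow explanation would predict. It does not by itself explain why hardness reduces superposition.

```python
import math

def silu(x: float) -> float:
    """Swish with beta = 1 (torch.nn.SiLU): x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))

def hardswish(x: float) -> float:
    """Piecewise form used by torch.nn.Hardswish: x * ReLU6(x + 3) / 6."""
    return x * min(max(x + 3.0, 0.0), 6.0) / 6.0

xs = [-8.0 + i / 1000 for i in range(16_001)]   # grid over [-8, 8]
gap, where = max((abs(silu(x) - hardswish(x)), x) for x in xs)
print(f"largest |SiLU - Hardswish| ~ {gap:.3f} at x ~ {where:+.1f}")
```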

Discussion

While ReLU is the most commonly used activation function in neural networks, it is not the only one, nor the only one of interest. I went through almost all of PyTorch's standard activation functions in order to be exhaustive, and I also included functions that have been discussed in the interpretability literature: GELU, SoLU, and the exponential activation function. No LayerNorm or other modifications were applied to the initial networks, so this exercise was done strictly to see the impact each function had on the development and degree of superposition.

ReLU is unlikely to be replaced as the standard activation function. It is, however, important to know which properties of these functions lead to superposition, and therefore to reduced interpretability. This experiment has produced preliminary results on which characteristics help or hinder superposition (non-negative, asymmetrical, smooth functions help it); efforts to develop a function that combats superposition while keeping performance high (as SoLU does) might benefit from them.

More research is needed, particularly on the odd behaviour of Sigmoid, the exponential functions, and above all SoLU, and on the strong superposition produced by Softplus.

Conclusion

The broad conclusion is that more superposition leads to marginally smaller losses; non-negative, asymmetrical activation functions gave the most superposition and the lowest losses. The fact that loss and superposition are only loosely tied together is encouraging, however, indicating that it is possible to remove a degree of superposition from a network without sacrificing much performance.

Acknowledgements

I would like to thank the original Anthropic interpretability team for starting this research direction, establishing its methods, and writing the relevant code, and Zephaniah Roe for the excellent reproduction and extension.
