If influence functions are not approximating leave-one-out, how are they supposed to help?

post by Fabien Roger (Fabien) · 2023-09-22T14:23:45.847Z · LW · GW · 5 comments

Thanks to Roger Grosse for helping me understand his intuitions and hopes for influence functions. This post combines highlights from some influence function papers, some of Roger Grosse’s intuitions (though he doesn’t agree with everything I’m writing here), and some takes of mine.

Influence functions are informally about some notion of the influence of a training data point on the model’s weights. But in practice, for neural networks, “influence functions” do not approximate “what would happen if a training data point was removed” well. What, then, are influence functions about, and what can they be used for?

From leave-one-out to influence functions

Ideas from Bae 2022 (If influence functions are the answer, what is the question?).

The leave-one-out function is the answer to “what would happen, in a network trained to its global minimum, if one point was omitted”.
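Written out in one common notation (the symbols are assumptions chosen for illustration and may differ from those in Bae 2022), with N training points z_i, a per-example loss L, and a removed point z_k, the converged weights, the leave-one-out weights, and the shift between them are:

```latex
\theta^\star = \arg\min_{\theta} \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}(z_i, \theta),
\qquad
\theta^\star_{-k} = \arg\min_{\theta} \frac{1}{N} \sum_{i \neq k} \mathcal{L}(z_i, \theta),
\qquad
\Delta_k = \theta^\star_{-k} - \theta^\star .
```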

Under some assumptions, such as a strongly convex loss landscape, influence functions are a cheap-to-compute approximation to the leave-one-out function, thanks to the Implicit Function Theorem, which tells us that under those assumptions:

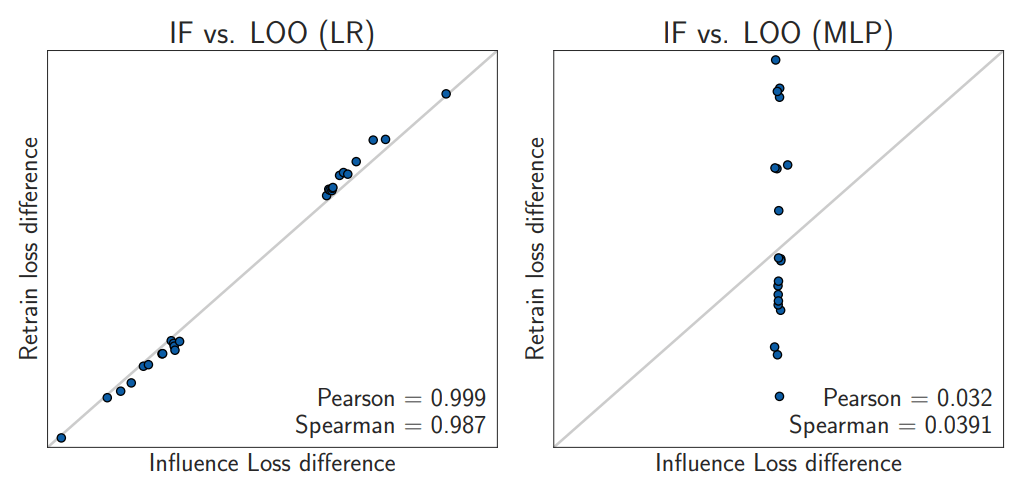
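To see what this looks like in a case where the assumptions do hold, here is a minimal sketch on a scalar ridge regression (a strongly convex toy problem of my own choosing, not an experiment from the papers). It compares the first-order influence estimate, (1/N) times the inverse Hessian times the gradient of the loss on the removed point, with the exact shift obtained by refitting without that point. The data, the names `fit`, `influence_estimate`, `leave_one_out_shift`, and the constants are all hypothetical.

```python
import random

random.seed(0)
N, LAM = 500, 0.1  # number of points and L2 penalty (illustrative values)
xs = [random.gauss(0.0, 1.0) for _ in range(N)]
ys = [2.0 * x + random.gauss(0.0, 0.5) for x in xs]

def fit(skip=None):
    """Closed-form minimizer of (1/N) * sum_i 0.5*(theta*x_i - y_i)^2 + 0.5*LAM*theta^2.

    If `skip` is given, that point is dropped from the sum (the 1/N factor is kept).
    """
    a = sum(x * x for i, x in enumerate(xs) if i != skip) / N + LAM
    b = sum(x * y for i, (x, y) in enumerate(zip(xs, ys)) if i != skip) / N
    return b / a

theta_star = fit()
hessian = sum(x * x for x in xs) / N + LAM  # second derivative of the full objective

def influence_estimate(k):
    """First-order estimate of the shift in theta when point k is removed."""
    grad_k = xs[k] * (theta_star * xs[k] - ys[k])  # gradient of the loss on point k
    return grad_k / (N * hessian)

def leave_one_out_shift(k):
    """Exact shift in theta when point k is removed and the model is refit."""
    return fit(skip=k) - theta_star

estimates = [influence_estimate(k) for k in range(N)]
exact_shifts = [leave_one_out_shift(k) for k in range(N)]

# Aggregate relative error of the influence estimate over all points.
rel_err = sum(abs(e - s) for e, s in zip(estimates, exact_shifts)) / sum(
    abs(s) for s in exact_shifts
)
print(f"aggregate relative error of the influence estimate: {rel_err:.4f}")
```

In this convex setting the two quantities agree up to a small relative error that shrinks as N grows, which is the regime the Implicit Function Theorem argument covers.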

But these assumptions don't hold for neural networks, and Basu 2020 shows that influence functions are a terrible approximation of leave-one-out in the context of neural networks, as shown in this figure from Bae 2022 (left is for linear regression, where the approximation holds; right is for a multilayer perceptron, where it doesn’t):

Moreover, even the leave-one-out function is about parameters at convergence, which is not the regime most deep learning training runs operate in. Therefore, influence functions are even less about answering the question “what would happen if this point had more/less weight in the (incomplete) training run?”.

So every time you see someone introducing influence functions as an approximation of the effect of up/down-weighting training data points (as in this LW post about interpretability), remember that this does not hold when they are applied to neural networks.

What are influence functions doing

Bae 2022 shows that influence functions (not leave-one-out!) can be well approximated by the minimization of another training objective, called PBRF (for proximal Bregman response function), which is the sum of 3 terms:

- The loss function with soft labels, as computed by the studied function with the weights obtained after training: the new weights should not change the output of the function much.

- The opposite of the loss function on the target point: the new weights should give a high loss on the considered data point.

- A penalization of weights very different from the final training weights. (Roger told me the specific value of the penalty coefficient didn’t have a huge influence on the result.)
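Under notation assumed here for illustration (f for the network, θ^s for the weights after training, D for a divergence between outputs, (x, t) for the target point and its label, ε and λ for the two coefficients), the three terms combine into an objective of roughly the following shape. The exact form in Bae 2022 may differ in its details.

```latex
\min_{\theta}\;
\underbrace{\frac{1}{N}\sum_{i=1}^{N} D\big(f(\theta, x_i),\, f(\theta^{s}, x_i)\big)}_{\text{keep outputs close}}
\;-\;
\underbrace{\epsilon\, \mathcal{L}\big(f(\theta, x),\, t\big)}_{\text{raise the loss on the target point}}
\;+\;
\underbrace{\frac{\lambda}{2}\, \lVert \theta - \theta^{s} \rVert^{2}}_{\text{stay near the trained weights}}
```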

This does not answer the often advertised question about leave-one-out, but this does answer something which looks related, and which happens to be much cheaper to compute than the leave-one-out function (which can only be computed by retraining the network and doesn’t have cheaper approximations).
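As a toy illustration of that relationship, here is a minimal sketch that minimizes a three-term objective of this kind by gradient descent and compares the resulting weight shift with a damped influence estimate. It assumes a scalar linear model with squared loss, for which the "keep the outputs close" term reduces to half the squared difference in outputs, and for which the Gauss-Newton Hessian and the Hessian coincide. The data, the names (`pbrf_shift`, `damped_influence`), and the constants are hypothetical; this is not the setup or code of Bae 2022.

```python
import random

random.seed(0)
N, LAM = 200, 0.1
EPS = 1.0 / N  # weight given to the target point
xs = [random.gauss(0.0, 1.0) for _ in range(N)]
ys = [2.0 * x + random.gauss(0.0, 0.5) for x in xs]

# Stand-in for "weights after training": deliberately not the optimum (near 2.0).
theta_s = 1.5

def objective_grad(theta, k):
    """Gradient of the three-term objective for the scalar model f(theta, x) = theta * x."""
    # 1) keep outputs close: (1/N) * sum_i 0.5 * (theta*x_i - theta_s*x_i)^2
    g_outputs = sum(x * (theta * x - theta_s * x) for x in xs) / N
    # 2) raise the loss on point k: -EPS * 0.5 * (theta*x_k - y_k)^2
    g_point = -EPS * xs[k] * (theta * xs[k] - ys[k])
    # 3) stay near the trained weights: 0.5 * LAM * (theta - theta_s)^2
    g_prox = LAM * (theta - theta_s)
    return g_outputs + g_point + g_prox

def pbrf_shift(k, steps=100, lr=0.5):
    """Minimize the objective by gradient descent from theta_s; return theta - theta_s."""
    theta = theta_s
    for _ in range(steps):
        theta -= lr * objective_grad(theta, k)
    return theta - theta_s

def damped_influence(k):
    """EPS * (G + LAM)^{-1} * gradient of the loss on point k, evaluated at theta_s."""
    gauss_newton = sum(x * x for x in xs) / N
    grad_k = xs[k] * (theta_s * xs[k] - ys[k])
    return EPS * grad_k / (gauss_newton + LAM)

points = range(5)  # a handful of target points is enough for the illustration
pbrf = [pbrf_shift(k) for k in points]
infl = [damped_influence(k) for k in points]
rel_gap = sum(abs(p - q) for p, q in zip(pbrf, infl)) / sum(abs(p) for p in pbrf)
print(f"relative gap between objective minimizer and influence estimate: {rel_gap:.4f}")
```

Note that nothing here requires `theta_s` to be a minimum of the training loss: the objective is defined around whatever weights training ended at, which is part of why it is a different question from leave-one-out.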

Influence functions are currently among the few options to say anything about the intuitive “influence” of individual data points in large neural networks, which justifies why they are used. (Alternatives have roughly the same kind of challenges as influence functions.)

Note: this explanation of what influence functions are doing is not the only way to describe their behavior, and other works may shed new light on what they are doing.

What are influence functions useful for

Current empirical evidence

To date, there has been almost no work externally validating that influence functions tell us anything about the influence of data points on neural network behavior beyond:

- “It looks reasonable” judgments when inspecting individual examples of approximate influence function results on large language models

- Influence functions are useful for identifying mislabeled examples: if 10% of MNIST labels are changed, and points are flagged as suspicious when they have a large influence on themselves, then about 60% of mislabeled points are in the top-10% of the most suspicious points. (See last appendix of Bae 2022)

(These two methods are common in the influence function literature and were not introduced by the two papers I cite here.)
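The self-influence heuristic from the second bullet can be sketched on a toy problem. The example below is an assumption-laden stand-in, not the MNIST experiment: it uses a scalar ridge regression, corrupts 10% of the labels by adding a large offset, and scores each point by the gradient of its own loss, times the inverse Hessian, times that same gradient. All names and constants are hypothetical, and this setting is far easier than MNIST, so the recovery rate here says nothing about the 60% figure above.

```python
import random

random.seed(0)
N, LAM = 200, 0.1
xs = [random.uniform(1.0, 2.0) for _ in range(N)]
ys = [2.0 * x + random.gauss(0.0, 0.1) for x in xs]

# Corrupt 10% of the labels (a regression stand-in for flipping class labels).
corrupted = set(random.sample(range(N), N // 10))
for k in corrupted:
    ys[k] += 5.0

# Fit a scalar ridge regression on the corrupted data (closed form).
hessian = sum(x * x for x in xs) / N + LAM
theta = (sum(x * y for x, y in zip(xs, ys)) / N) / hessian

def self_influence(k):
    """grad_k * H^{-1} * grad_k: the influence of point k on its own loss."""
    grad_k = xs[k] * (theta * xs[k] - ys[k])
    return grad_k * grad_k / hessian

# Flag the 10% of points with the largest self-influence.
ranked = sorted(range(N), key=self_influence, reverse=True)
flagged = set(ranked[: N // 10])
recall = len(flagged & corrupted) / len(corrupted)
print(f"fraction of corrupted points among the flagged points: {recall:.2f}")
```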

[Edit] Other empirical evidence since the initial post date: influence functions correctly predict that LLMs don't generalize from "A is B" to "B is A".

I would be excited about future work using influence functions to make specific predictions about the effect of removing some points from the training set and retraining the network to see if those predictions were accurate. I would be even more interested if those predictions were about generalization properties of LLMs outside their training distribution.

Speculations about what influence functions won't and will be useful for

The main practical use case I see is formulating hypotheses about what kind of dataset modification would lead to what changes in behavior. I say “formulating hypotheses” and not something stronger like “making suggestions” because I currently see no strong evidence that influence functions can make reliable predictions about effects of modifying training datasets.

If in the future, influence functions are shown to be a good way to suggest and predict effects of dataset modifications, how would that be differentially useful for AI safety? I see 3 paths forward:

- Influence functions might help generate hypotheses about what makes adversarial training work best, and thus improve its sample efficiency significantly (while capabilities of frontier models might benefit differentially more from scale alone);

- Localization suggestions from influence functions might help steer inductive biases of models by suggesting freezing some parts of the models during some crucial fine-tuning stages (e.g. by favoring changes to possible motives as opposed to superficial word choices);

- Influence functions might lead to better intuitions of how models' internals work: since influence functions describe the influence of points on specific weights, they could tell us which weights would be influenced as a result of dataset changes, which could help formulate hypotheses about the inner workings of neural networks.

5 comments

Comments sorted by top scores.

comment by Quintin Pope (quintin-pope) · 2023-09-23T03:08:21.071Z

Re empirical evidence for influence functions:

Didn't the Anthropic influence functions work pick up on LLMs not generalising across lexical ordering? E.g., training on "A is B" doesn't raise the model's credence in "Bs include A"?

Which is apparently true: https://x.com/owainevans_uk/status/1705285631520407821?s=46

reply by Fabien Roger (Fabien) · 2023-09-25T14:11:59.225Z

That's an exciting experimental confirmation! I'm looking forward to more predictions like those. (I'll edit the post to add it, as well as future external validation results.)

comment by Charlie Steiner · 2023-09-22T20:43:04.621Z

I feel like there's also a Bayesian NN perspective on the PBRF thing. It has some ingredients that look like a Gaussian prior (L2 regularization), and an update (which would have to be the combination of the first two ingredients - negative loss function on a single datapoint but small overall difference in loss).

Said like this, it's obvious to me that this is way different from leave-one-out. First learning to get low loss at a datapoint and then later learning to get high loss there is not equivalent to never learning anything directly about it.

comment by Fabien Roger (Fabien) · 2024-07-27T21:53:49.922Z

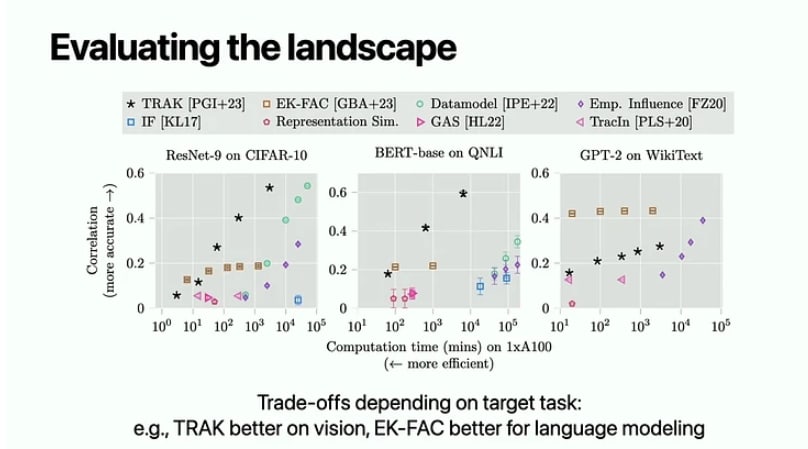

It looks like maybe there is evidence of some IF-based stuff (EK-FAC in particular) actually making LOO-like predictions?

From this ICML tutorial at 1:42:50; I wasn't able to track down the original source [Edit: the original source]. Here, the reported correlation is some correlation between predicted and observed behavior when training on a random 50% subset of the data.

comment by IlyaShpitser · 2023-09-22T15:51:38.946Z

Influence functions are for problems where you have a mismatch between the loss of the target parameter you care about and the loss of the nuisance function you must fit to get the target parameter.