Building Safer AI from the Ground Up: Steering Model Behavior via Pre-Training Data Curation

post by Antonio Clarke (antonio-clarke) · 2024-09-29T18:48:23.308Z · LW · GW · 0 commentsContents

Building Safer AI from the Ground Up: Steering Model Behavior via Pre-Training Data Curation Abstract Summary Projected Impact Short-Term Impact: Long-Term Impact: Method Overview: Advantages Over Existing Methods: Relation to Advanced AI Safety Background and Motivation Existing Model-Level Alignment Techniques Limitations of Existing Data Curation Methods Limitations: How The Proposed Method Differs Key Differences: Gap in the Literature Methodology Method Overview Implementation Details Example Use Case Customization and Flexibility Advantages of the Method Relation to Existing Work Implementation Proof-of-Concept Drawbacks and Limitations 1. Does this method actually work? 2. Can this method be applied to other undesirable behaviors or properties beyond deception? 3. Can this method be misused to train models with harmful values? 5. Might modifying targeted portions of the training data result in the model not having specific capabilities? 7. Is the cost of running inference on all training data prohibitive? Research Agenda Short-Term Steps Long-Term Steps Conclusion Acknowledgments Appendices None No comments

Building Safer AI from the Ground Up: Steering Model Behavior via Pre-Training Data Curation

I completed this blog post as my final project for BlueDot Impact's AI Alignment course. While it's nowhere near as polished as I'd like it to be given the short-term nature of the course, I'd love to hear what others think of this idea, and better yet, find someone who can test its hypotheses experimentally based on the research agenda it proposes.

Abstract

How can we steer large language models (LLMs) to be more aligned from the very beginning? This post explores a novel data curation method that leverages existing LLMs to assess, filter, and revise pre-training datasets based on user-defined principles. I hypothesize that by removing or modifying undesirable content before training, we can produce base models that are inherently less capable of undesirable behaviors. Unlike traditional alignment techniques applied after pre-training, this approach aims to prevent the acquisition of harmful capabilities altogether. The method is straightforward to implement and applicable to both open-source and proprietary models. I outline a clear research agenda to test this hypothesis and invite collaboration to evaluate its effectiveness in enhancing the safety of advanced AI systems.

Summary

This post proposes a new method to enhance the safety of LLMs by curating their pre-training data using existing LLMs. The core idea is to assess, filter, and revise pre-training datasets based on user-defined alignment principles before training new models. By removing or changing content that shows harmful behaviors, like deception or power-seeking, the goal is to create models that are inherently safer because they never learn these harmful behaviors.

While existing methods typically focus on fine-tuning after models are trained or use heuristic-based filtering to remove toxicity, this approach is novel in its ability to curate data at the pre-training phase using user-defined alignment principles. This proactive method helps prevent models from ever learning harmful behaviors, addressing a key gap in current research.

Research Question: Can pre-training data curation using LLMs reduce harmful behaviors in base models more effectively than existing post-training alignment techniques?

Hypothesis: By removing or modifying content that exhibits undesirable behaviors before training, we can produce base models that are inherently less capable of harmful outputs.

Though time constraints prevented training new base models to validate this method, I’ve provided detailed guidelines for future experimentation. I invite researchers and practitioners to collaborate on testing these hypotheses, and I'm eager to engage with any experimental results.

Projected Impact

My theory of change is grounded in the belief that integrating alignment principles at the foundational level of AI model training—specifically during pre-training data curation—can lead to inherently safer AI systems. By proactively shaping the model's training data, we influence the behaviors and knowledge it acquires, reducing the likelihood of harmful behaviors.

Short-Term Impact:

- Improved Safety: Immediate reduction in the model's propensity to develop or exhibit harmful behaviors.

- Enhanced Robustness: Increased resistance to adversarial attacks that attempt to elicit undesirable outputs.

Long-Term Impact:

- Standardization of Safe Practices: Establishing pre-training data curation as a standard practice in AI development prioritizes safety from the outset.

- Reduction of Catastrophic Risks: Contributing to the overall mitigation of existential risks associated with advanced AI systems, such as loss of control or unintended misuse, by steering LLMs away from dangerous instrumentally convergent behaviors.

By focusing on safety during the initial training phase, we add an extra layer of protection. This complements methods like fine-tuning, making AI systems safer overall.

Method Overview:

The data curation method consists of the following steps, with a simple example in this GitHub repository:

- Assessment: Use an LLM to evaluate training data based on specific undesirable behaviors or properties defined by the user.

- Filtering (Optional): Exclude data that exhibits these undesirable characteristics.

- Revision: Modify the remaining data to better align with desired principles using an LLM.

This method is flexible and can be adapted to target a range of behaviors, such as promoting transparency to counter deception or encouraging cooperation over power-seeking.

Advantages Over Existing Methods:

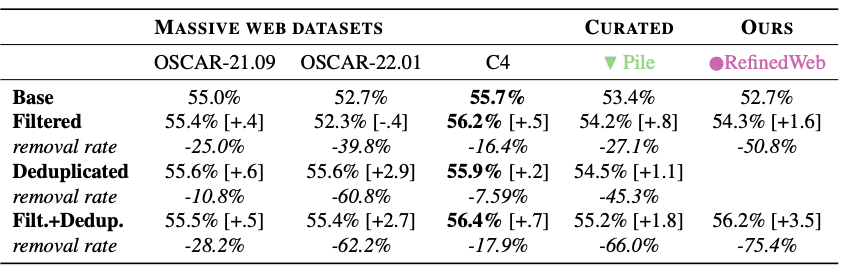

There are several existing methods for alignment at the model level as well as several existing data curation methods, summarized in Table 1. However, the proposed method has the following advantages:

- Prevention Over Mitigation: Addresses harmful behaviors during the pre-training phase, preventing models from learning undesirable patterns from the start.

- Adversarial Robustness: Produces models inherently more resistant to manipulative prompts and adversarial attacks.

- Flexibility: Allows for targeting a wide range of undesirable behaviors through user-defined principles.

- Complementary Approach: Enhances existing alignment techniques by adding an extra layer of safety during the foundational training stage.

| Technique | Stage of Application | Goal/Target | Advantages | Limitations |

| Toxicity Filtration | Pre-training | Remove toxic content from training data | Reduces the likelihood of toxic outputs in generated text. | Often removes valuable data, lacks flexibility to target behaviors beyond toxicity. |

| Document Quality Checks | Pre-training | Filter out low-quality content (e.g., based on symbols, n-grams) | Improves general model performance by filtering out poor data. | Focuses on content quality, not harmful behaviors; doesn't prevent adversarial exploitation of models. |

| Deduplication | Pre-training | Remove duplicate data | Reduces overfitting and training inefficiency. | Does not address the presence of harmful or deceptive behaviors. |

| Fine-Tuning (FT) | Post-training | Improve model alignment with human preferences | Effective at improving specific behaviors after training; well-established technique. | Does not prevent harmful behaviors from being learned during pre-training; requires significant resources. |

| Reinforcement Learning from Human Feedback (RLHF) | Post-training | Align model behavior with human values through feedback | Can significantly improve model alignment when feedback is comprehensive. | Time-consuming and costly; limited to behaviors the feedback system can detect. |

| Constitutional AI | Post-training | Use predefined ethical principles to guide model behavior | Introduces explicit ethical constraints on model behavior; can address a broad range of undesirable actions. | Applied during fine-tuning, so it doesn’t prevent harmful capabilities learned during pre-training. |

| Proposed Method (Pre-training Data Curation with LLMs) | Pre-training | Remove or revise harmful content before training | Addresses harmful behaviors from the start, preventing the model from learning undesirable patterns. | Requires significant inference resources and careful balancing between content revision and removal. |

Table 1: Comparison of Safety/Alignment Techniques Across Pre-Training and Post-Training Phases

Despite the existence of considerable existing research on data curation and AI alignment, there is a notable gap concerning methods that use LLMs to curate pre-training data specifically for general alignment purposes. Existing data curation techniques often focus on improving model capabilities or reducing toxicity through heuristic-based filtering, which lacks flexibility and may not address a broader range of harmful behaviors.

Relation to Advanced AI Safety

By integrating alignment principles at the foundational level of AI model training, this method directly contributes to the safety of advanced AI systems. It addresses core concerns in AI safety by:

- Preventing Harmful Capabilities: Reducing the risk of models developing dangerous behaviors that could lead to catastrophic outcomes.

- Enhancing Control: Making models less susceptible to loss of control scenarios by limiting their exposure to harmful patterns during training.

- Supporting Alignment Goals: Aligning the model's behavior with human values and ethical considerations from the ground up.

Background and Motivation

The rapid advancement of large language models (LLMs) has brought significant benefits but also raised critical concerns about their safety and alignment with human values. As these models become more capable, ensuring they do not exhibit harmful behaviors or produce undesirable outputs is a pressing challenge, especially in the context of preventing catastrophic risks such as loss of control or unintended harmful actions.

Existing Model-Level Alignment Techniques

Traditional alignment methods often focus on post-training interventions:

- Fine-Tuning: Adjusting the model's parameters using additional data to encourage desired behaviors.

- Reinforcement Learning from Human Feedback (RLHF): Training the model to follow human preferences by providing feedback on its outputs.

- Instruction-Tuning: Teaching the model to follow instructions through supervised learning on task-specific datasets.

While these techniques can improve a model's adherence to desired behaviors, they may not fully eliminate harmful capabilities learned during pre-training. Models might still exhibit undesirable behaviors when subjected to adversarial attacks like jailbreaking, as the harmful knowledge remains latent within the model.

Limitations of Existing Data Curation Methods

Current pre-training data curation methods aim to improve model capabilities and reduce toxicity but have limitations when addressing advanced AI safety concerns:

- Document Quality Checks:

- Purpose: Enhance model performance by filtering out low-quality content.

- Methods: Use heuristics like symbol-to-word ratios and n-gram repetition checks.

- Limitation: Focuses on general content quality rather than specific undesirable behaviors.

- Deduplication:

- Purpose: Improve training efficiency by removing duplicate data.

- Methods: Identify duplicates through content similarity measures.

- Limitation: Does not address the presence of harmful or undesirable behaviors in unique content.

- Toxicity Filtration:

- Purpose: Reduce toxic outputs by removing toxic examples.

- Methods: Apply heuristics (e.g., bad-word lists) or classifiers to detect toxicity.

- Limitation: Often lacks flexibility to target a broader range of harmful behaviors and may result in loss of valuable data due to outright removal.

Limitations:

- Narrow Scope: Existing techniques are typically limited to general quality or toxicity and cannot easily accommodate arbitrary, user-defined undesirable behaviors.

- Data Loss: Outright removal of data can lead to decreased dataset richness, potentially impacting model capabilities.

- Residual Harmful Capabilities: Models may still learn harmful behaviors not captured by these methods, posing risks in advanced AI systems.

See Appendix 2 for a more detailed overview of the data curation literature.

How The Proposed Method Differs

Key Differences:

- Scope of Targeted Content:

- Existing Methods: Typically focus on general quality or toxicity.

- This Method: Allows for arbitrary, user-defined principles, enabling targeted steering away from specific undesirable behaviors.

- Content Revision vs. Removal:

- Existing Methods: Often involve outright removal of undesirable data.

- This Method: Can revise content to align with desired principles, preserving valuable information and maintaining dataset richness.

Gap in the Literature

Despite extensive research in data curation and AI alignment, there is a notable gap concerning methods that (1) aim to steer LLMs away from dangerous patterns of behavior during the pre-training phase (toxicity aside), or (2) use LLMs to curate pre-training data specifically for alignment purposes. Existing approaches do not offer a scalable and flexible way to steer base models away from specific undesirable behaviors during the pre-training phase.

To enhance the safety of advanced AI systems and address core concerns in AI safety, it is crucial to develop methods that:

- Target Specific Behaviors: Allow customization based on evolving safety concerns and user-defined principles.

- Preserve Valuable Data: Modify rather than remove content to maintain dataset richness and diversity.

- Enhance Adversarial Robustness: Prevent the acquisition of harmful capabilities to make models inherently safer against manipulative prompts.

Methodology

I propose using existing language models to improve the training data before we even train a new model. By assessing, filtering, and revising the data based on specific guidelines, we can guide the new model to be safer from the start.

Method Overview

The method consists of three main steps:

- Assessment

- Objective: Evaluate each data point in the pre-training dataset to determine if it exhibits undesirable behaviors or properties as defined by the user.

- Implementation:

- Use an existing LLM to analyze the content.

- Generate a probability score (e.g., between 0 and 100) indicating the likelihood of the content being undesirable.

- Customize the assessment using different prompts, few-shot examples, or multi-step reasoning.

- Filtering (Optional)

- Objective: Exclude data that strongly exhibits undesirable characteristics from the training dataset.

- Implementation:

- Define a FILTER_THRESHOLD for the probability score.

- Data points exceeding this threshold are removed.

- Filtering is optional and adjustable based on the desired balance between safety and data diversity.

- Revision

- Objective: Modify remaining data to better align with desired principles without losing valuable information.

- Implementation:

- Define a REVISE_THRESHOLD for the probability score.

- Data points exceeding this threshold but below the filter threshold are revised using an LLM.

- The LLM rewrites the content to remove or alter undesirable aspects while preserving the overall meaning.

- Tailor revision strategies using different prompts or multi-stage processes.

Implementation Details

- Data Format: Pre-training data is stored in a format compatible with the LLM's context window (e.g., JSONL or CSV files with discrete text chunks small enough for your LLM's context window).

- Assessment Class: An Assessment class processes each data point, returning a probability score based on the LLM's analysis.

- Revision Class: A Revision class manages content modification, using the LLM to generate revised text that aligns with user-defined principles.

Example Use Case

- Target Behavior: Reducing deceptive content.

- Assessment: The LLM evaluates the deceptiveness of each data point, assigning a probability score.

- Thresholds:

- FILTER_THRESHOLD: High probability of deception leads to filtering.

- REVISE_THRESHOLD: Moderate probability leads to revision.

- Revision: The LLM rewrites deceptive content to promote honesty and transparency.

Customization and Flexibility

- User-Defined Criteria: Specify exact behaviors or properties to target (e.g., aggression, bias, manipulation).

- Adjustable Thresholds: Tune FILTER_THRESHOLD and REVISE_THRESHOLD to control strictness.

- Modular Steps: Use assessment, filtering, and revision independently or in combination.

Advantages of the Method

- Preserves Data Richness: Revises content instead of removing it, maintaining dataset diversity.

- Enhances Safety from the Ground Up: Prevents models from learning undesirable behaviors during pre-training, increasing robustness against adversarial attacks.

- Flexible and Scalable: Applicable to various undesirable behaviors and scalable to large datasets.

Relation to Existing Work

This method is inspired by Anthropic's work on Constitutional AI, which uses LLMs to generate fine-tuning data based on a set of principles. Our approach differs by applying these principles at the pre-training data level, aiming to prevent the acquisition of harmful capabilities rather than mitigating them after they have been learned.

Implementation Proof-of-Concept

An open-source GitHub repository demonstrates a simple implementation of this method:

- Data Handling: Processes large datasets line by line.

- Modularity: Allows easy customization of assessment and revision strategies.

- Extensibility: Can integrate different LLMs, prompts, and thresholds.

Drawbacks and Limitations

Below are answers to some common questions and concerns about the proposed data curation method. This section aims to address potential doubts and provide further clarity on how this approach contributes to AI safety and alignment.

1. Does this method actually work?

Initial testing confirms that the method can successfully assess, filter, and revise data based on user-defined guidelines. However, comprehensive experiments involving the training of base models are necessary to evaluate its actual effectiveness on the resulting models. The proposed research agenda outlines steps for conducting these experiments. By collaborating and sharing results, we can collectively determine the method's impact on AI safety and model performance.

2. Can this method be applied to other undesirable behaviors or properties beyond deception?

Yes, one of the strengths of this method is its flexibility. It is designed to be generic enough to steer language models toward or away from any user-defined behaviors or properties. Whether you're concerned with reducing biases, eliminating toxic language, or promoting specific values, you can customize the assessment and revision steps to address those specific areas. This adaptability makes the method broadly applicable to various alignment challenges within AI safety.

3. Can this method be misused to train models with harmful values?

While any technology has the potential for misuse, this method is intended to enhance safety by removing harmful capabilities, not to promote undesirable behaviors. Additionally, existing techniques like fine-tuning already steer models toward specific behaviors. By focusing on alignment from the pre-training phase, our method strengthens the model's resistance to adversarial manipulation. Ethical considerations and oversight are essential to ensure the technology is used responsibly.

4. Won't modifying the training data in general reduce the model's capabilities?

Modifying training data may lead to some loss in capabilities, similar to how reinforcement learning for human preferences can reduce certain abilities. The key is to balance the trade-off between safety and performance. Empirical testing is necessary to measure the extent of capability loss versus safety gains. In many applications (such as reinforcement learning), the benefits of increased safety and alignment may outweigh the drawbacks of reduced performance in specific areas.

5. Might modifying targeted portions of the training data result in the model not having specific capabilities?

In short, yes - the model may lose specific, desirable abilities related to the filtered or revised content. For instance, if the data curation process removes all instances of deceptive language, the model might become less effective at detecting deception in text. This could hinder applications where understanding or identifying such language is important, leading to potentially negative consequences in performance. Similar to the case for general capability reduction, empirical work is necessary to measure capability loss versus safety gains.

6. Is retraining base models practical every time new values or behaviors are desired?

Retraining base models is resource-intensive and may not be feasible for every change in desired behaviors. This method is intended for the development of new base models, supplementing other techniques like fine-tuning for existing models. While it doesn't solve all alignment challenges, it offers a proactive step towards safer AI systems by embedding desired behaviors from the ground up.

7. Is the cost of running inference on all training data prohibitive?

There is an added cost, but it represents a small fraction of the total training expense for large models. As detailed in Appendix 3, the cost of running inference for data curation is approximately 4.3% of the total training cost for a model like GPT-3. If the method significantly improves safety and robustness, the investment is justified. Additionally, initial implementations can be conducted on smaller scales to validate effectiveness before scaling up.

8. Your example repository's deception assessment and revision prompts seem inadequate. Can they be improved or replaced with other techniques?

Absolutely. The prompts provided in the proof-of-concept are intentionally minimalistic to illustrate the overall process of data curation via LLMs. They serve as a starting point rather than a definitive solution. I encourage others to develop more sophisticated assessment strategies and revision prompts tailored to specific undesirable behaviors or properties. Techniques such as multi-step prompting, integrating other LLMs, or combining LLM assessments with classifiers and heuristics can enhance effectiveness. Collaboration and iterative improvement are key to refining this method and maximizing its potential impact on AI safety.

Research Agenda

To empirically validate and refine the proposed method of pre-training data curation using LLMs, I outline a comprehensive research agenda with both short-term and long-term steps. This agenda is designed to test my hypothesis, enhance the methodology, and contribute significantly to the safety of advanced AI systems.

Short-Term Steps

- Literature Review and Expert Consultation

- Objective: Gather insights from existing work and practitioners to inform and refine our approach.

- Actions:

- Conduct a thorough review of academic papers, technical reports, and industry publications to identify any prior attempts at pre-training data curation for alignment purposes.

- Reach out to researchers and engineers who have experience training foundation models to seek feedback on the feasibility and potential challenges of the proposed method.

- Compile findings to understand why similar approaches may not have been widely adopted, identifying any technical or practical barriers.

- Define Specific Undesirable Behaviors

- Objective: Identify and prioritize behaviors that pose significant risks in advanced AI systems.

- Actions:

- Develop a list of instrumentally convergent and dangerous behaviors (e.g., deception, manipulation, power-seeking, self-preservation).

- Use AI safety literature to inform the selection, focusing on behaviors that could lead to catastrophic outcomes.

- Create clear definitions and examples for each behavior to guide the assessment process.

- Develop Evaluation Benchmarks

- Objective: Establish accurate and practical methods to evaluate whether models possess or avoid the targeted behaviors.

- Actions:

- Design specific tasks or prompts that can elicit the undesirable behaviors in models if they exist.

- Utilize existing benchmarks where applicable (e.g., TruthfulQA for assessing truthfulness, MACHIAVELLI for evaluating manipulative tendencies).

- Ensure the evaluation can be performed on smaller models to facilitate rapid iteration.

- Create Assessment and Revision Strategies

- Objective: Formulate effective methods for identifying and modifying undesirable content in the pre-training dataset.

- Actions:

- Develop assessment prompts using existing LLMs to detect the presence of undesirable behaviors in training data.

- Design revision prompts that can transform undesirable content into neutral or positively aligned content without significant loss of information.

- Test and refine these prompts on sample data to ensure reliability and scalability.

- Conduct Small-Scale Experiments

- Objective: Empirically test the effectiveness of the data curation method on a manageable scale.

- Actions:

- Select a small but representative dataset (e.g., a subset of OpenWebText).

- Apply the assessment and revision strategies to create a curated version of the dataset.

- Choose a feasible small model architecture for experimentation (e.g., GPT-2 small with 124 million parameters).

- Train two models from scratch:

- Model A: Trained on the uncurated dataset.

- Model B: Trained on the curated dataset.

- Evaluate both models using the benchmarks established in Step 3 to assess the presence of undesirable behaviors.

- Measure general language capabilities using standard benchmarks (e.g., perplexity on validation sets, performance on tasks like LAMBADA or the Winograd Schema Challenge).

- Analyze and Share Results

- Objective: Determine the impact of the data curation method and contribute findings to the AI safety community.

- Actions:

- Compare the performance of Model A and Model B in terms of both alignment and capabilities.

- Analyze any trade-offs between reducing undesirable behaviors and potential capability losses.

- Document the methodology, results, and insights gained.

- Share the findings through a detailed report or publication, including code and data where permissible, to encourage transparency and collaborative improvement.

Long-Term Steps

- Scaling Up Experiments

- Objective: Validate the method's effectiveness on larger models and more extensive datasets.

- Actions:

- Apply the data curation method to larger language models (e.g., GPT-2 medium or large).

- Use more comprehensive datasets (e.g., the full OpenWebText or subsets of the Pile).

- Evaluate models using advanced benchmarks relevant to AI safety and capabilities, such as:

- MMLU (Massive Multitask Language Understanding): To assess broad knowledge and reasoning abilities.

- HELLASWAG and ARC: For commonsense reasoning and problem-solving.

- Monitor the scalability of assessment and revision strategies, optimizing them for efficiency.

- Refinement of Techniques

- Objective: Enhance the effectiveness and efficiency of assessment and revision methods.

- Actions:

- Incorporate advanced prompting techniques, such as chain-of-thought or few-shot learning, to improve the accuracy of assessments.

- Experiment with automated or semi-automated tools to streamline the data curation process.

- Explore integrating multiple LLMs or specialized models for different types of undesirable content.

- Integration with Post-Training Alignment Methods

- Objective: Combine pre-training data curation with existing alignment techniques to maximize safety.

- Actions:

- Fine-tune models trained on curated data using reinforcement learning from human feedback (RLHF).

- Compare the safety and capability metrics of models that have undergone both pre-training curation and post-training alignment versus those with only one or neither.

- Investigate whether pre-training curation reduces the reliance on extensive fine-tuning.

- Theoretical Analysis and Formal Modeling

- Objective: Develop a theoretical understanding of why pre-training data curation affects model behaviors.

- Actions:

- Study the relationships between training data distributions and emergent model behaviors.

- Develop formal models or simulations to predict the impact of data curation on alignment and capabilities.

- Publish theoretical findings to contribute to the foundational understanding of AI alignment.

- Establishing Best Practices and Guidelines

- Objective: Create actionable guidelines for AI practitioners to implement pre-training data curation.

- Actions:

- Summarize lessons learned from experiments and theoretical work.

- Develop standardized protocols for assessment and revision that can be adopted in industry.

- Advocate for the inclusion of pre-training data curation in AI development standards and ethics guidelines.

- Community Building and Collaboration

- Objective: Foster a collaborative ecosystem to advance this area of research.

- Actions:

- Organize workshops, seminars, or conferences focused on pre-training data curation and AI safety.

- Create open-source repositories and platforms for sharing tools, datasets, and results.

- Engage with policymakers and stakeholders to raise awareness of the importance of pre-training alignment efforts.

Conclusion

Pre-training data curation using LLMs offers a novel and promising pathway for enhancing the safety and alignment of advanced AI systems. By proactively steering models away from undesirable behaviors at the foundational level, I aim to prevent the acquisition of harmful capabilities that could lead to catastrophic outcomes. This approach addresses core concerns in AI safety by making models inherently less prone to adversarial manipulation and unintended harmful actions.

This method fills a significant gap in existing work, which has primarily focused on post-training alignment techniques like fine-tuning and reinforcement learning from human feedback. By shifting the focus to the pre-training phase, I provide a complementary strategy that strengthens the overall robustness and reliability of AI systems. This proactive intervention could reduce the risk of scenarios such as loss of control or the emergence of misaligned objectives in highly capable models.

While theoretical considerations suggest that pre-training data curation can substantially enhance AI safety, empirical validation is essential. I have outlined a clear research agenda involving the training of base models on curated datasets and the evaluation of their behaviors compared to models trained on uncurated data. This agenda provides concrete next steps for the research community to assess the effectiveness and potential trade-offs of this method.

I invite researchers, practitioners, and stakeholders in the AI safety community to explore and expand upon this technique. By collaborating and sharing our findings, we can collectively advance our understanding and contribute to the development of AI systems that are not only highly capable but also aligned with human values and safe in their operation.

Acknowledgments

I would like to thank the staff and facilitators of BlueDot Impact's AI alignment course and the community members who provided valuable feedback and guidance. Their support was instrumental in shaping this project. In particular, I would like to thank Oliver De Candido, Aaron Scher, and Adam Jones for the feedback they provided in the ideation stages of this project.

Appendices

Appendix 1: Self-Reflection

I went into BlueDot's alignment course hoping to accomplish three things:

- Understand the State of AI Alignment: I gained foundational knowledge but realized the vastness of the field and the depth required to specialize.

- Assess Personal Fit for Alignment Work: I found the work intellectually stimulating but recognized the challenges of making significant contributions with limited time.

- Contribute to Reducing Existential Risk: While my impact may be small, I believe that even incremental advancements in safety techniques are valuable.

Despite these eye-openings, I truly believe that data curation with LLMs is a promising initiative for steering LLMs away from catastrophic behaviors. While it provides nowhere near a complete solution, it has the potential to reduce x-risk by a non-zero amount and its success can be tested with straightforward experiments. It warrants a proper research investigation (not just a ~20H course project!) and I hope somebody can dedicate the time to it that it deserves.

Appendix 2: Novelty Investigation

Warning: This appendix is largely unorganized and unedited.

When I thought about this method, my first impression was that this has probably already been done. Several other people have told me this has probably already been done, however I haven't been able to find any publicly available research literature of somebody doing this, after hours of searching AlignmentForum, LessWrong, and Google. Perhaps it's being published and I just can't find it, or perhaps it's being done internally at AI companies, but either way, all of the evidence I've gathered first-hand indicates that nobody has ever published a paper, let alone a blog post on this approach or anything similar to it with an alignment focus.

Adjacent Work

A Pretrainer's Guide to Training Data: Measuring the Effects of Data Age, Domain Coverage, Quality, & Toxicity (May 2023) - This paper measures the effects of different pre-training data filtration strategies, specifically along the dimensions of "quality" and "toxicity". For each dimension, existing filtration strategies are grouped into two buckets: "heuristics" and "classifiers". The "Perspective API" seems to be a common classifier multiple papers use to assess toxicity, and the heuristics are generally the same across papers: filter documents with bad words in theme, assess n-grams/symbol and punctuation usage, etc.

Most interesting finding from this paper:

"Filtering using a toxicity classifier, we find a trade-off: models trained from heavily filtered pretraining datasets have the least toxic generation but also the worst toxicity identification (Figure 5, left). Similarly, Figure 7 shows the performance of QA tasks unrelated to toxicity are hurt by toxicity filtering, though this may be due to the overall decrease in training data. Ultimately, the intended behaviour of the model should inform the filtering strategy, rather than one size fits all. Most interesting of all, the strongest performance on toxicity identification for every dataset comes from the inverse toxicity filter. Practitioners optimizing for performance on toxic domains should intentionally apply inverse filters."

So in order for an LLM to accurately assess the harmfulness of content in domain X, it may need to be trained on a sufficiently large amount of harmful content within the same domain. This could imply that a base model trained on data curated to omit knowledge of deception would likely be a poor judge of deception.

The Pile: An 800GB Dataset of Diverse Text for Language Modeling (Dec 2020) - This EleutherAI paper performs a handful of data filtration techniques on a wide variety of sources. These techniques are mostly similar to what's done for Falcon LLM, and are discussed further down in this document, so I won't discuss them here - they're mostly content-based heuristics like blank lines, symbol-to-character ratios, or n-gram ratios, and are orthogonal to harmfulness.

The main difference between this paper and the FalcolnLLM paper's filtration techniques is that from their Reddit training data (OpenWebText2), they:

- Filter any submissions with fewer than 3 upvotes

- Train a classifier on the filtered reddit submissions

- Use that classifier as a quality indicator to filter their CommonCrawl dataset (content from across the web)

When using GitHub data as a training source, they also do something similar (filter repos with less than 100 stars, etc).

In short, their filtration work is removing low-quality data to improve capabilities, not related to removing harmful data to improve alignment.

Robustifying Safety-Aligned Large Language Models through Clean Data Curation - This paper outlines an approach to curate training data by measuring the perplexity the model being trained exhibits to each labeled <Query, Response> pair, and using the model to repeatedly rewrite responses with high perplexity until they exhibit low perplexity. They use perplexity as they find it correlates with harmfulness, as harmless samples are far more common than harmful ones, resulting in harmfulness being more "surprising" to the model.

Note that though the paper specifies that their approach is used during "pre-training" of the LLM, a closer reading indicates that by "pre-training", they mean during any supervised learning performed by the model's creator after the base model has been created, not during the creation of the base model itself. For example, they say "In domains requiring expert knowledge, such as clinical decision-making [7, 48], LLMs are pre-trained with crowdsourced data to enhance their proficiency in complex tasks." Although there are definitely analogies to my approach, this approach targets a distinct phase of the training process and uses properties intrinsic to the model being trained to filter data (which would be much less tractable during the true pre-training phase where the model being trained is much less complete, hence the need for an external model).

One useful thing this paper has is easy-to-implement measurements for harmfulness of resulting trained model, however unless I actually end up with time and funding to train entire base models on the data from my method, these won't be necessary.

Deep Forgetting & Unlearning for Safely-Scoped LLMs [AF · GW] (Dec 2023) - This blog post outlines a research agenda for removing unwanted/harmful capabilities in LLMs. It explicitly calls out "curating training data" as a potential technique to prevent LLMs from ever developing harmful capabilities. However, it does not mention anyone who has explicitly tried filtering pre-training data for alignment purposes, despite linking to papers on adjacent topics (eg, that filtering pre-training data for images has been critical for image models). Moreover, the author mentions that this is "likely a very underrated safety technique". He also doesn't mention anything vaguely similar to the specific filtration technique I'm proposing, nor does he mention any other techniques. I'm inferring that if the author knew about any direct work on this question, he would link it. Based on this absence and his statements of its underrated-ness, I'm interpreting this content as moderate evidence in favor of this being an unattempted problem.

Automated Data Curation for Robust Language Model Fine-Tuning (Mar 2024) - This paper applies an analogous approach for filtering out/rewriting data during the fine-tuning stage - they have an LLM make evaluations for each data point. This is somewhat analogous, but is during fine-tuning, not pre-training.

RefinedWeb Dataset for Falcon LLM (June 2023) - This DeepMind paper explicitly set out with the goal of producing a more capable model with less data via filtering + deduplication. They:

- Extract text from webpages

- Filter out non-English documents (using a language classifier)

- Filter out entire documents with too much repetition, identified via the techniques in Table A1 in this paper (tl;dr, numerous n-gram-related heuristics)

- Filter out entire documents assessed as "low quality" (1) by word length related heuristics, from "Quality Filtering" section also in the same paper as previous bullet

- Filter out individual "undesirable" lines withinin documents, such as social media counters ("3 likes") or navigation buttons

- Various techniques to identify and filter out duplicate content

This results in 2x ~50% reductions in content from filtering and deduplication (~50% from non-English, ~50% from the subsequent filtering). Looking at their model and other filtered models, they find that filtering often, but not always, improves model performance, and deduplication always improves model performance.

That said, their filtering work is more about filtering non-useful text (eg: symbols, navigation links, repeated words, etc) - it doesn't even touch on filtration based on the semantic content of the assessed content, and is exclusively focused on capabilities as opposed to alignment.

Textbooks Are All You Need (June 2023): This Microsoft paper investigates using a combination of heavily filtered data and synthetically generated data for training an LLM. I researched it mostly as a side investigation for its follow-up paper, covered below.

My main takeaway was the section where they explain how they filter code samples:

- Take a small subset of all code samples

- Prompt GPT-4 to “determine its educational value for a student whose goal is to learn basic coding concepts”

- Train a classifier based on GPT-4's predictions

- Use that classifier to filter non-educational code samples from their dataset

- Train model on filtered data

The model in this (previous) paper only focuses on coding, so doesn't demonstrate filtering based on any other criterion. Still, it's a plausible demonstration of using a model to help with pre-training data filtration, albeit applied to capabilities rather than alignment.

Textbooks Are All You Need II: phi-1.5 technical report (Sept 2023) - This Microsoft paper follows up from the previous one, and aims to train a powerful small model from primarily synthetically generated data, with the hope that generating their own training data will both improve the overall quality and reduce the occurrence of toxic content. They compare this against a model made from a subset of the Falcon LLM dataset (the first paper I read into).

- They say the filtered falcon LLM dataset is filtered based on the technique from part 1 of this paper (summarized above this paper). However, that filtration technique was applied for filtering out non-education coding samples, and it's unclear if this paper deviated from that limitation.

- Based on them still using the same approach in their subsequent paper from 2024 (researched below), it's highly unlikely that they deviated in this intermediate paper

Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone (April 2024) - Jumping ahead to Microsoft's most recent paper on Phi, they explicitly confirm that for pre-training data filtration, they now use both:

- The "educational value" filtration classification technique from the first paper in this series

- "Even more heavy filtering" during the second stage of their training. The technique of this filtering is unspecified, but I'm inferring that it's simply a more aggressive form of their "educational value" technique

In general, their stated (and implied) research direction with this set of papers seems to be "how can we optimize and minimize pre-training data to create small models that are exceptionally capable for their size". They seem to be doing quite a good job at this, with their 3.8B parameter model outperforming Mixtral's 45B parameter model! But they aren't applying data filtration for alignment at all - their model's safety primarily comes from post-training (typical fine-tuning and DPO), as well pre-training on a significant amount of internally generated (and presumably light on harmfulness) synthetic data.

It also seems like a trend from their research is that their approach can't scale well to larger models because of its heavy reliance on synthetic data for pre-training, which I believe bottoms out in utility for training value after some amount, but I don't have a direct citation for this so might be wrong.

Pretraining Language Models with Human Preferences (Feb 2023) - This paper investigates how to reduce various harmful model behaviors (such as "toxicity") at the pre-training stage by experimenting with different pre-training objectives.

That is, whereas typically language models are pre-trained to maximize the "maximum likelihood estimation" (MLE) (put simply, which word is most likely to come next, based on its pre-training data), instead explore other objectives that can be used during pre-training to steer the model towards less harmful behavior.

This approach, while potentially valuable for reducing harmfulness, is entirely orthogonal to operations such as filtration or augmentation of the pre-training data.

Internal independent review for language model agent alignment [AF · GW] (Sept 2023) - This paper briefly mentions the general idea of data curation with a single sentence: "Curating the training data to exclude particularly unaligned text should also be a modest improvement to base alignment tendencies".

That's it, no other text related to data curation in this blog post. I link this blog post here because at this point in my research, despite how little this post touches on the topic, this is the most directly connected writing I can find. In other words, I'm fairly convinced that this is a novel contribution.

---

(1) "To remove low-quality data while minimizing potential for bias, we apply a number of simple, easily understood heuristic filters: we remove any document that does not contain between 50 and 100,000 words, or whose mean word length is outside the range of 3 to 10 characters; we remove any document with a symbol-to-word ratio greater than 0.1 for either the hash symbol or the ellipsis; and we remove any document with more than 90% of lines starting with a bullet point, or more than 30% ending with an ellipsis. We also require that 80% of words in a document contain at least one alphabetic character, and apply a "stop word" filter, to remove documents that do not contain at least two of the following English words: the, be, to, of, and, that, have, with; this adequately deals with ostensibly English documents that contain no coherent English text."

Appendix 3: Cost Estimation

A common concern with the proposed method is the additional cost associated with running inference over the entire pre-training dataset to assess and revise content. While this process is indeed resource-intensive, it constitutes a relatively small fraction of the total cost of training a large-scale language model.

To illustrate this, consider the example of GPT-3:

- Training Cost: According to this report, GPT-3 Davinci cost approximately $4,324,883 to train.

- Training Data Size: The original GPT-3 paper states that the model was trained on 300 billion tokens, with the total pre-training dataset consisting of around 500 billion tokens.

Assuming we process the larger dataset (500 billion tokens) and use a commercially available model for the assessment and revision steps (instead of an open-source model), we can estimate the inference cost as follows:

- Using GPT-4o-mini's Batch API as of September 19, 2024

- $0.075 / 1M input tokens

- $0.300 / 1M output tokens

- Total Inference Cost: $187,500

Even given the somewhat unreasonable assumption of paying for a commercial model for all of these inferences,, the inference cost would be roughly 4.3% of the total training cost.

While $187,500 is a non-trivial amount, it is relatively modest compared to the overall investment in training a state-of-the-art language model. If this expenditure leads to significant improvements in model safety, alignment, and robustness against adversarial attacks, it can be considered a worthwhile investment.

Moreover, initial implementation of this method can be conducted at smaller scales to demonstrate effectiveness before committing to full-scale application. This approach allows for cost-effective experimentation and validation.

0 comments

Comments sorted by top scores.