Mechanism Design: Constructing Algorithms for Strategic Agents

post by badger · 2014-04-30T18:37:20.875Z · LW · GW · Legacy · 14 commentsContents

Overly optimizing whether or not to paint an room Framework for institutional design Putting the mechanism in mechanism design Wrapping up None 14 comments

tl;dr Mechanism design studies how to design incentives for fun and profit. A puzzle about whether or not to paint a room is posed. A modeling framework is introduced, with lots of corresponding notation.

Mechanism design is a framework for constructing institutions for group interactions, giving us a language for the design of everything from voting systems to school admissions to auctions to crowdsourcing. Think of it as the engineering side of game theory, building algorithms for strategic agents. In game theory, the primary goal is to answer the question, “Given agents who can take some actions that will lead to some payoffs, what do we expect to happen when the agents strategically interact?” In other words, game theory describes the outcomes of fixed scenarios. In contrast, mechanism design flips the question around and asks, “Given some goals, what payoffs should agents be assigned for the right outcome to occur when agents strategically interact?” The rules of the game are ours to choose, and, within some design constraints, we want to find the best possible ones for a situation.

Although many people, even high-profile theorists, doubt the usefulness of game theory, its application in mechanism design is one of the major success stories of modern economics. Spectrum license auctions designed by economists paved the way for modern cell-phone networks and garnered billions in revenue for the US and European governments. Tech companies like Google and Microsoft employ theorists to improve advertising auctions. Economists like Al Roth and computer scientists like Tuomas Sandholm have been instrumental in establishing kidney exchanges to facilitate organ transplants, while others have been active in the redesign of public school admissions in Boston, Chicago, and New Orleans.

The objective of this post is to introduce all the pieces of a mechanism design problem, providing the setup for actual conclusions later on. I assume you have some basic familiarity with game theory, at the level of understanding the concept of a dominant strategies and Nash equilbria. Take a look at Yvain’s Game Theory Intro if you’d like to brush up.

Overly optimizing whether or not to paint an room

Let’s start with a concrete example of a group choice: Jack, an economics student, and Jill, a computer science student, are housemates. To procrastinate studying for finals, they are considering whether to repaint their living room. Conveniently, they agree on what color they would choose, but are unsure whether it’s worth doing at all. Neither Jack nor Jill would pay the known fixed cost of $300 entirely on their own. They’re not even sure the cost is less than their joint value1, so it’s not only a matter of bargaining to split the cost (a non-trivial question on its own). The decision to paint the room depends on information neither person fully knows, since each knows their own value, but not the value to the other person.

The lack of complete information is what makes the problem interesting. If the total value is obviously greater than $300, forcing them to paint the room and split the cost evenly would be utility-maximizing2. One person might be worse off, but the other would be correspondingly better off. This solution corresponds to funding a public good (in the technical sense of something non-excludable and non-rivalrous) through taxation. Alternatively, if the total value is obviously less than $300, then the project shouldn’t be done, and the question of how to split the cost becomes moot. With some overall uncertainty, we now have to worry that either housemate might misrepresent their value to get a better deal, causing the room to be painted when it shouldn’t be or vice versa.

Assuming the two want to repaint the room if and only if their total value is greater than $300, how would you advise they decide whether to do the project and how much each should contribute?

Pause a moment to ponder this puzzle…

.

.

.

Some naive solutions would be to:

- Vote on painting the room, and if both say yes, do the project with each contributing $150.

- Vote, and if either one says yes, do the project. If both say yes, both contribute $150. If only one says yes, that person contributes $225 and the other contributes $75.

- Both simultaneously write down a number. If the total is greater than $300, do the project. Each contributes a share of the $300 proportional their number.

- Flip a coin to decide who makes an initial offer of how to split the cost, i.e. Jack paying $50 and Jill paying $250. The other person can accept the proposal, in which case they do the project with those contributions. Otherwise, that person makes a new proposal. Alternating proposals continue until one is accepted. If 100 rounds pass without an accepted proposal, don’t paint the room.

None of these will guarantee the room is painted exactly when it’s worth the cost. The first procedure never paints the room when it shouldn’t be, but sometimes fails to paint when it would be worthwhile, like when Jack values the renovation at $120 and Jill values it at $200. Jack would vote “no”, even though their total value is $320. The second procedure can make mistakes of both kinds and can also result in someone contributing more than their value. The other two are more difficult to analyze, but still turn out to be non-optimal.

These protocols barely scratch the surface of all the possible institutions out there. Maybe we just need to be a little more creative to find something better. To definitively solve this problem, some formalism is in order.

Framework for institutional design

Trigger warning: Greek letters, subscripts, sets, and functions.

Getting a little more abstract, let’s specify all the relevant elements in an institutional design setting. First of all, the agents involved in our process need to be identified. Typically, this doesn’t need to go beyond labeling each agent. For instance, we might assign each a number from 1 to n, with i representing a generic agent. Above, we have two agents named Jack and Jill.

Notes on notation: A generic agent has the label i. A set or variable Zi belongs to agent i. Typically, variables are lowercase, and the set a variable lives in is uppercase. Without a subscript, Z refers to the vector Z = (Z1, …, Zi, …, ZN) with one element for each agent. It’s often useful to talk about the vector Z − i = (Z1, …, Zi − 1, Zi + 1, …, ZN) for all agents except agent i (think of deleting Zi from the vector Z). This enables us to write Z as (Zi, Z − i), highlighting agent i’s part in the profile.

Once we’ve established who’s involved, the next step is determining the relevant traits of agents that aren’t obviously known. This unknown data is summarized as that agent’s type. Types can describe an agent’s preferences, their capabilities, their beliefs about the state of the world, their beliefs about others’ types, their beliefs about others’ beliefs, etc. The set of possible types for each agent is their type space. A typical notation for the type of agent i is θi, an element of the type space Θ i. If an assumption about an agent’s preferences or beliefs seems unreasonable, that’s a sign we should enlarge the set of possible types, allowing for more variety in behavior. In our housemate scenario, the type of each agent needs to – at a bare minimum – specify the value each puts on having new paint. If knowing that value fully specifies the person’s preferences and beliefs, we’re done. Otherwise, we might need to stick more information inside the person’s type. Of course, there is a trade-off between realism and tractibility that guides the modeling choice of how to specify types.

Next, we need to consider all possible outcomes that might result from the group interaction. This could be an overarching outcome, like having one candidate elected to office, or a specification of the sub-outcomes for each agent, like the job each one is assigned. Let’s call the set of all outcomes X. In the housemate scenario, the outcomes consist of the binary choice of whether the room is painted and the payment each person makes. Throwing some notation on this, each outcome is a triple (q, PJack, PJill) ∈ X = {0, 1} × R × R.

To talk about the incentives of agents, their preferences over each outcome must be specified as a function of their type. In general, preferences could be any ranking of the outcomes, but we often assume a particular utility function. For instance, we might numerically represent the preferences of Jack or Jill as ui(q, Pi, θi) = qθi − Pi, meaning each gets benefit θi if and only if the room is painted, minus their payment, and with no direct preference over the payment of the other person.

After establishing what an agent wants, we need to describe what an agent believes, again conditional on their type. Usually, this is done by assuming agents are Bayesians with a common prior over the state of the world and the types of others, who then update their beliefs after learning their type3. For instance, Jack and Jill might both think the value of the other person is distributed uniformly between $0 and $300, independently of their own type.

Based on the agents’ preferences and beliefs, we now need to have some theory of how they choose actions. One standard assumption is that everyone is an expected utility maximizer who takes actions in Nash equilibrium with everyone else. Alternatively, we might consider agents who reason based on the worst case actions of everyone else, who minimize maximum regret, who are boundedly rational, who are willing to tell the truth as long as they only lose a small amount of utility, or who can only be trusted to play dominant strategies rather than Nash equilibrium strategies, etc. What’s impossible under one behavioral theory or solution concept can be possible under another.

In summary so far, a design setting consists of:

- The agents involved.

- The potential types of each agent, representing all relevant private information the agent has.

- The potential outcomes available.

- The agents’ preferences over each outcome for each type, possibly expressed as a utility function.

- The beliefs of each agent as a function of their type.

- A theory about the behavior of agents.

In our housemate scenario, these could be modeled as following:

- Agents involved: Two people, Jack and Jill.

- Potential types: The maximum dollar amounts, θJack and θJill, each would be willing to contribute, contained in the type spaces Θ Jack = Θ Jill = [0, 300].

- Potential outcomes: A binary decision variable q = 1 if the room is repainted and q = 0 if not, as well as the amounts paid pJack and pJill, contained in the outcome space X = {0, 1} × R × R.

- Preferences over each outcome for each type: A numerical representation of how much each agent likes each outcome ui(q, pi, θi) = qθi − pi.

- Beliefs: Independently of their own valuation, each thinks the valuation of the other is uniformly distributed between $0 and $300.

- Behavioral theory: Each housemate is an expected utility maximizer, and we expect them to play strategies in Nash equilibrium with each other.

Given a design setting, we presumably have some goals about what should happen, once again conditional on the types of each agent. In particular, we might want specific outcomes to occur for each profile of types. A goal like this is called a social choice function4, specifying a mapping from profiles of agent types to outcomes f: ∏ i Θ i → X. A social choice function f says, “If the types of agents are θ1 through θN, the outcome f(θ1, …, θN) ∈ X should happen”. A social choice fucntion could be defined indirectly as whatever outcome maximizes some objective subject to design constraints. For instance, we could find the social choice function that maximizes agents’ utility or the one that maximizes the total payments collected from the agents in an auction, conditional on no agent being worse off for participating.

Putting the mechanism in mechanism design

So far, this has been an exercise in precisely specifying the setting we’re working in. With all this in hand, we now want to create a protocol/game/institution where agents will interact to produce outcomes according to our favorite social choice function. We’ll formalize any possible institution as a mechanism (M, g) consisting of a set of messages Mi for each agent and an outcome function g: ∏ i Mi → X that assigns an outcome for each profile of messages received from agents. The messages could be very simple, like a binary vote, or very complex, like the source code of a program. We can force any set of rules into this formalism by having agents submit programs to act as a their proxy. If we wanted the housemates to bargain back and forth about how to split the cost, their messages could be parameters for a pre-written bargaining program or full programs that make initial and subsequent offers, depending on how much flexibility we allow the agents. Messages represent strategies we’re making available to the agent, which are then translated into outcomes by g.

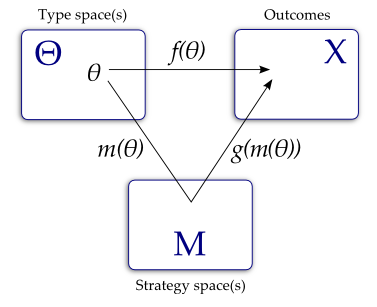

When agents interact together in the mechanism, each chooses the message mi(θi) they’ll send as a function of their type, which then results in the overall outcome g(m1(θ1), …, mn(θn)). The mechanism (M, g) implements a social choice function f if, for all profiles of types θ, the outcome we get under the mechanism equals the outcome we want, i.e.

g(m1(θ1), …, mn(θn)) = f(θ1, …, θn), for all profiles (θ1, …, θn) ∈ Θ = ∏ i Θ i

In other words, we want the strategies (determined by whatever behavioral theory we have for each agent) to compose with the outcome function (which we are free to choose, up to design constraints) to match up with our goal, as shown in the following diagram:

A social choice function f is implementable if some mechanism exists that implements it. Whether a social choice function is implementable depends on our behavioral theory. If we think agents choose strategies in Nash equilibrium with each other, we’ll have more flexiblity in finding a mechanism than if agents need the stronger incentive of a dominant strategy, since more Nash equilibria exist than dominant-strategy equilibria. Rather than assuming agents choose strategically based on their preferences, perhaps we think agents are naively honest (maybe because they are computer programs we’ve programmed ourselves). In this case, we can trivially implement a social choice rule by having each agent tell us their full type and simply choosing the corresponding goal by picking Mi = Θ i and g = f. Here the interesting question is instead which mechanism can implement f with the minimal amount of communication, either by minimizing the number of dimensions or bits in each message. It’s also worth asking whether social choice rules satisfying certain properties can exist at all (much less whether we can implement them), along the lines of Arrow’s Impossibility Theorem.

Wrapping up

In summary, agents have types that describe all their relevant information unknown to the mechanism designer. Once we have a social choice function describing a goal of which outcomes should occur for each realization of types, we can build a mechanism consisting of sets of messages or strategies for each agent and a function that assigns outcomes based on the messages received. The hope is that the outcome realized by the agents’ choice of messages based on their type matches up with the intended outcome. If so, that mechanism implements the social choice function.

How can we actually determine whether a social choice function is implementable though? If we can find a mechanism that implements it, we’ve answered our question. In the reverse though, how would we go about showing that no such mechanism exists? We’re back to the problem of searching over all possible ways the agents could interact and hoping we’re creative enough.

In the next post, I’ll discuss the Revelation Principle, which allows us to cut through all this complexity via incentive compatibility, along with a solution to the painting puzzle.

Next up: Incentive compatibility and the Revelation Principle

-

To clarify if necessary, Jack’s value for the project is the amount of money that he’d just barely be willing to spend on the project. If his value is $200, then he would be willing to pay $199 since that leaves a dollar of value left over as economic surplus, but he wouldn’t pay $201 dollars. If the cost was $200, identical to his value, then he’s indifferent between making the purchase and not. The joint value of Jack and Jill is just the sum of their individual valuations.↩

-

Assuming dollars map roughly equally onto utilities for each. In general, maximizing total willingness-to-pay is known as Kaldor-Hicks efficiency.↩

-

This idea is originally due to John Harsanyi. As long as the type spaces are big enough, this can be done without loss of generality as formalized by Mertens and Zamir (1985).↩

-

If multiple outcomes are acceptable for individual profiles, we have a social choice correspondence.↩

14 comments

Comments sorted by top scores.

comment by kpreid · 2014-05-01T17:16:09.343Z · LW(p) · GW(p)

As I reached the end of the introductory example, this article abruptly started using the term “institution” (I had forgotten the occurrence in the first paragraph). It is unclear to me in what way an “institution” is different from a “mechanism”.

If there is no significant difference then I would advise not using “institution” except in the context of pointing out other concepts which map to this formalism.

comment by Vaniver · 2014-05-03T20:59:53.830Z · LW(p) · GW(p)

I very much enjoyed this post, and am happy to see that you're writing a sequence on this.

Throwing some notation on this, each outcome is a triple (q, PJack, PJill) ∈ X = {0, 1} × R × R.

In the previous sentence, I would put q and P in parentheses after the things they refer to, to make this slightly clearer.

comment by Vulture · 2014-05-01T02:01:12.393Z · LW(p) · GW(p)

like when Jack values the renovation at $120 and Jill values it at $200. Jack would vote “no”, even though their total value is $310.

I think there's an error in the arithmetic here...

That said, great post! The math bits could probably do to be more gentle, mostly just cause you introduce a lot of concepts in a short space. Nevertheless, it seemed fundamentally approachable - I have relatively little math background (high school calculus and some dabbling in elementary game theory) but I feel like I'm I capable of understanding this fairly easily, even if I didn't actually understand all of it on the first or second go.

Thumbs up!

Replies from: badger, Gunnar_Zarncke↑ comment by badger · 2014-05-01T13:25:08.822Z · LW(p) · GW(p)

Thanks for catching that!

I did introduce a lot here. Now that I've thrown all the pieces of the model out on the table, I'll include refreshers as I go along so it can actually sink in.

Replies from: Vulture↑ comment by Vulture · 2014-05-01T13:37:43.918Z · LW(p) · GW(p)

Oh, and now that I'm going over it more carefully, another nitpick: You don't seem to actually define the notation Π_i before using it in the definition of a social choice function, and it isn't clear (to me) from context what it's supposed to mean.

Replies from: badger↑ comment by badger · 2014-05-01T14:35:43.913Z · LW(p) · GW(p)

That's an indexed Cartesian product, analogous to sigma notation for indexed summation, so is the set of all vectors of agent types.

↑ comment by Gunnar_Zarncke · 2014-05-01T08:22:45.221Z · LW(p) · GW(p)

I think there's an error in the arithmetic here...

I don't Jack will vote no because he has to pay 150 but values the renovation at 120 leading to a benefit of -30 for him if he assumes that same for Jill.

comment by Arkanj3l · 2014-05-02T21:44:16.361Z · LW(p) · GW(p)

Just found this lecture dump for a course on algorithmic game theory and mechanism design for computer scientists: https://www.cs.duke.edu/courses/fall06/cps296.2/

If you scan the domain with google (i.e. with the 'site:' operator) some important PDFs come up.

comment by farari7 · 2021-04-01T01:48:13.261Z · LW(p) · GW(p)

Thank you for this refreshing explanation about Mechanism Design. While reading this I was wondering if it could be used as a framework for the alignment of AGI agents? If there is any possibility to add several layers of mechanisms to achieve particular behaviors from an AGI a? My intuition tells me something that could be useful to know from an Intelligent Agent is its Source code. What do you think?

comment by dougclow · 2014-05-01T14:13:49.787Z · LW(p) · GW(p)

Interesting stuff, thanks; looking forward to the rest of the series.

As an aside, this makes the benefits of being able to rely on trust most of the time very apparent. Jack and Jill can coordinate very simply and quickly if they trust each other to honestly disclose their true value for the project. They don't even need to be able to trust 100%, just trust enough that on average they lose no more to dishonesty than the costs of more complex and sophisticated methods of bargaining. (Which require more calculating capacity than unaided humans have evolved.)

comment by 9eB1 · 2014-05-01T01:37:40.505Z · LW(p) · GW(p)

I'm very interested to see the solution to the painting puzzle. I tried out a bunch of different sealed-bid mechanisms and none seemed to work properly. All of them provided an advantage to shading from one player assuming the other played honestly. I was just trying them out in a table of outcomes, not trying to solve analytically as I don't think I understand the math well enough to do so at the moment.