Estimating Tail Risk in Neural Networks

post by Mark Xu (mark-xu) · 2024-09-13T20:00:06.921Z · LW · GW · 9 comments


Machine learning systems are typically trained to maximize average-case performance. However, this method of training can fail to meaningfully control the probability of tail events that might cause significant harm. For instance, while an artificial intelligence (AI) assistant may be generally safe, it would be catastrophic if it ever suggested an action that resulted in unnecessary large-scale harm.

Current techniques for estimating the probability of tail events are based on finding inputs on which an AI behaves catastrophically. Since the input space is so large, it might be prohibitive to search through it thoroughly enough to detect all potential catastrophic behavior. As a result, these techniques cannot be used to produce AI systems that we are confident will never behave catastrophically.

We are excited about techniques to estimate the probability of tail events that do not rely on finding inputs on which an AI behaves badly, and can thus detect a broader range of catastrophic behavior. We think developing such techniques is an exciting problem to work on to reduce the risk posed by advanced AI systems:

- Estimating tail risk is a conceptually straightforward problem with relatively objective success criteria; we are predicting something mathematically well-defined, unlike instances of eliciting latent knowledge (ELK) where we are predicting an informal concept like "diamond".

- Improved methods for estimating tail risk could reduce risk from a variety of sources, including central misalignment risks like deceptive alignment.

- Improvements to current methods can be found both by doing empirical research and by thinking about the problem from a theoretical angle.

This document will discuss the problem of estimating the probability of tail events and explore estimation strategies that do not rely on finding inputs on which an AI behaves badly. In particular, we will:

- Introduce a toy scenario about an AI engineering assistant for which we want to estimate the probability of a catastrophic tail event.

- Explain some deficiencies of adversarial training, the most common method for reducing risk in contemporary AI systems.

- Discuss deceptive alignment as a particularly dangerous case in which adversarial training might fail.

- Present methods for estimating the probability of tail events in neural network behavior that do not rely on evaluating behavior on concrete inputs.

- Conclude with a discussion of why we are excited about work aimed at improving estimates of the probability of tail events.

This document describes joint research done with Jacob Hilton, Victor Lecomte, David Matolcsi, Eric Neyman, Thomas Read, George Robinson, and Gabe Wu. Thanks additionally to Ajeya Cotra, Lukas Finnveden, and Erik Jenner for helpful comments and suggestions.

A Toy Scenario

Consider a powerful AI engineering assistant. Write $M$ for this AI system, and $M(x)$ for the action it suggests given some project description $x$.

We want to use this system to help with various engineering projects, but would like it to never suggest an action that results in large-scale harm, e.g. creating a doomsday device. In general, we define a behavior as catastrophic if it must never occur in the real world.[1] An input is catastrophic if it would lead to catastrophic behavior.

Assume we can construct a catastrophe detector $C$ that tells us if an action $M(x)$ will result in large-scale harm. For the purposes of this example, we will assume both that $C$ has a reasonable chance of catching all catastrophes and that it is feasible to find a useful engineering assistant $M$ that never triggers $C$ (see Catastrophe Detectors for further discussion). We will also assume we can use $C$ to train $M$, but that it is prohibitively expensive to use $C$ to filter all of $M$'s outputs after $M$ is trained.[2]

We are interested in estimating the probability that our model behaves catastrophically on a particular distribution $\mathcal{D}$ of inputs[3]:

$$\mathbb{P}_{x \sim \mathcal{D}}\left(C(M(x)) = 1\right)$$

Deficiencies of Adversarial Training

To reduce the risk of our AI system behaving catastrophically, we could use adversarial training: having a red team attempt to find catastrophic inputs and training the system until those inputs are no longer catastrophic.

However, such systems can still behave catastrophically in a range of possible scenarios:

- The users of an AI system might explore inputs not considered during the adversarial training process and uncover novel catastrophic inputs.

- Users of a system might specifically search for inputs designed to elicit catastrophic behavior, using more resources than the adversarial training process.[4]

- An intelligent AI system may attempt to purposefully avoid acting catastrophically when it suspects it is being trained.

As catastrophic behavior becomes extremely rare, it can be computationally prohibitive for the red team to explore the input space thoroughly enough to find even a single catastrophic input. To determine which AI systems robustly avoid catastrophic behavior, we must be able to estimate the frequency of catastrophic inputs even when they are so rare that we cannot find any such inputs.
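To make the sampling problem concrete, here is a minimal sketch. It assumes, purely for illustration, that each sampled input is catastrophic independently with some fixed probability `p`; the function name and the numbers are hypothetical and not taken from the post. Under that assumption, the chance that `n` samples contain at least one catastrophic input is $1 - (1 - p)^n$.

```python
import math


def prob_at_least_one_hit(p: float, n: int) -> float:
    """Probability that n independent samples include at least one
    catastrophic input, if each sample is catastrophic with probability p."""
    # 1 - (1 - p)**n, computed via log1p/expm1 so tiny p does not round away.
    return -math.expm1(n * math.log1p(-p))


# Hypothetical numbers: a one-in-a-trillion failure rate and a billion samples.
chance = prob_at_least_one_hit(1e-12, 10**9)
print(chance)  # roughly one in a thousand
```

With these illustrative numbers, a red team drawing a billion samples would almost always come back empty-handed, even though the failure rate is not zero.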

Rather than viewing a red team as searching for particular inputs, we can view the red team as defining a process for producing inputs. We can then ask the question: how likely is the red team to find an input on which the system behaves catastrophically? Equivalently, what is $\mathbb{P}_{x \sim \mathcal{D}}(C(M(x)) = 1)$ when $\mathcal{D}$ is the distribution of inputs produced by the red team's process? Even if a red team is usually unable to find concrete inputs on which a system behaves catastrophically, the process they use could still have a small probability of success.

The possibility of distributional shift complicates the way in which estimates of the rate of catastrophic behavior can be used to mitigate such behavior, which we discuss in Distributional Shift.

Deliberate Subversion of Adversarial Training

Malign intelligent AI systems might have incentives to pretend to be benign during training so they can cause harm later. Call a system "deceptively aligned" if it is a malign system pretending to be benign.[5] For example, a deceptively aligned engineering assistant might intentionally avoid suggesting an action that creates a doomsday device during training, but seek such opportunities when they would cause real-world harm. A sufficiently advanced system might be able to maintain this deception even through extensive adversarial training.[6]

Even if a deceptively aligned model has extremely high accuracy at determining whether it is being adversarially trained, it will likely have some probability of making a mistake. If a deceptive system makes mistakes very rarely, then adversarial training would likely fail to find an input that distinguishes it from a benign model. However, the possibility of these mistakes would result in the deceptive model having a higher probability of behaving catastrophically than its benign counterpart, even if the probability is small in absolute terms (we discuss ways in which this might not be true in Catastrophe Detectors). By estimating the probability of catastrophic behavior, we would be able to distinguish between benign and deceptive systems.

Importantly, our estimates do not need to be perfectly accurate to detect deceptive systems. Catastrophic actions are highly complex, so AI systems cannot randomly stumble into catastrophic actions. Deceptive systems behave catastrophically at much higher rates than their benign counterparts because they sometimes deliberately decide to take catastrophic action. To distinguish between benign and deceptive systems, we would not need to characterize the chance a benign model accidentally acts catastrophically; we would only need to notice that there are some conditions under which a model might deliberately act catastrophically.

A Possible Approach for Estimating Tail Risk

This section describes an approach for estimating the probability of catastrophic behavior of a neural network. We present several simple methods that represent our overall approach. We are optimistic that this kind of approach can ultimately deliver better risk estimates than can be obtained from conventional red teaming, but in experiments so far we have not surpassed baselines based on importance sampling.[7]

Consider a neural network $M : X \to Y$, a distribution $\mathcal{D}$ over $X$, and a binary event $C : Y \to \{0, 1\}$. Our goal is to estimate $\mathbb{P}_{x \sim \mathcal{D}}(C(M(x)) = 1)$.[8] For our engineering research assistant example above, $x$ is a project description, $M(x)$ is a suggested action, and $C$ is our catastrophe detector.

If $\mathbb{P}_{x \sim \mathcal{D}}(C(M(x)) = 1)$ is very low, then even billions of samples might not contain an $x$ such that $C(M(x)) = 1$. However, it might still be possible to estimate this probability by identifying structure that suggests $M$ may behave catastrophically on some inputs. For example, suppose $C$ embeds an action $M(x)$ into some latent space and flags it as catastrophic if it is "too large" in 20 specific directions simultaneously. For each of these directions, we could attempt to identify features in $M$ that would result in the embedding of $M(x)$ being large in that direction. If each such feature were active with probability $p$, independently of the others, then we could estimate the chance that the embedding of $M(x)$ is too large in all 20 directions simultaneously as $p^{20}$.
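The contrast between searching for joint events and multiplying per-feature rates can be sketched in a toy simulation. Everything here is an illustrative assumption: the features are simulated as independent coin flips, and the activation rate, sample count, and function name are made up for the example.

```python
import random


def estimate_joint(samples):
    """Return (empirical joint frequency, product of per-feature frequencies)."""
    n = len(samples)
    k = len(samples[0])
    # Direct estimate: fraction of samples where every feature is active.
    joint = sum(all(s) for s in samples) / n
    # Structural estimate: multiply marginal rates, presuming independence.
    product = 1.0
    for j in range(k):
        product *= sum(s[j] for s in samples) / n
    return joint, product


rng = random.Random(0)
P_ACTIVE, NUM_FEATURES, NUM_SAMPLES = 0.1, 20, 10_000  # illustrative values
samples = [
    [rng.random() < P_ACTIVE for _ in range(NUM_FEATURES)]
    for _ in range(NUM_SAMPLES)
]
joint, product = estimate_joint(samples)
print(joint)    # no sample has all 20 features active at once
print(product)  # close to P_ACTIVE ** NUM_FEATURES
```

The direct estimate is uninformative because the joint event never appears in the sample, while the product of marginals recovers the right order of magnitude. The product is only as trustworthy as the independence assumption behind it.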

Our goal is to develop methods that can reliably detect significant risks of catastrophic behavior by identifying such structure.

Layer-by-layer Activation Modeling

We will present one possible approach to producing estimates of tail events in neural networks based on modeling the distribution of each layer of activations in the network. This approach illustrates one way in which a mechanistic analysis of a model could improve estimates of tail events. However, this particular framework also has a few fundamental flaws, which we discuss in Issues with Layer-by-layer Activation Modeling.

We will assume that $C$ is also a neural network and express the composition of $C$ and $M$ as a single function $C \circ M : X \to \{0, 1\}$. This composition is a single function which is 1 if and only if $x$ is a catastrophic input for $M$. Since $C \circ M$ is itself just a larger neural network, we can express it as the composition of $n$ functions $f_0, f_1, \ldots, f_{n-1}$. Each $f_i$ represents a transition between layers in our model, such as a linear transformation followed by a ReLU activation. We will write $X_i$ for the domain of $f_i$, which is typically equal to $\mathbb{R}^k$ for some $k$. More specifically, for input $x$ define:

$$x_0 := x \in X_0, \qquad x_{i+1} := f_i(x_i) \in X_{i+1}, \qquad C(M(x)) = x_n \in X_n = \{0, 1\}$$

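Our input distribution $\mathcal{D}$ is a distribution over $X_0$. Through the composition of the transition functions $f_i$, $\mathcal{D}$ also induces a distribution over $X_1, X_2, \ldots, X_n$. Our general method aims to estimate $\mathbb{P}_{x \sim \mathcal{D}}(C(M(x)) = 1)$ by approximating these induced distributions over $X_i$ as they flow through the network, from input to output. Each implementation of this method will have two key components:

- For each layer $i$, we will have a class of distributions $\mathcal{P}_i$ over $X_i$ that we will use to model the activations at each layer. Formally, $\mathcal{P}_i \subseteq \Delta(X_i)$, the set of probability distributions over $X_i$.

- A method to update our model as we progress through layers: given a model $P_i \in \mathcal{P}_i$ for layer $i$, we need to determine $P_{i+1} \in \mathcal{P}_{i+1}$ for layer $i+1$. Formally, this method is a collection of functions $\mathcal{P}_i \to \mathcal{P}_{i+1}$, one for each $i = 0, \ldots, n-1$.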

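With these components in place, we can estimate $\mathbb{P}_{x \sim \mathcal{D}}(C(M(x)) = 1)$ for any $\mathcal{D} \in \mathcal{P}_0$ as follows:

- We begin with $P_0 = \mathcal{D}$, representing the input distribution.

- We apply our update method repeatedly, generating $P_1$, $P_2$, up to $P_n$.

- Our estimate of $\mathbb{P}_{x \sim \mathcal{D}}(C(M(x)) = 1)$ is the probability that $P_n$ assigns to the outcome 1.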
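The steps above amount to a simple loop. The following is a schematic sketch only: the function and parameter names are hypothetical, and the substance of any real method lies in how the distribution model and the per-layer update rules are chosen.

```python
from typing import Callable, Sequence, TypeVar

Dist = TypeVar("Dist")  # whatever object represents a distribution model


def estimate_tail_probability(
    input_model: Dist,
    updates: Sequence[Callable[[Dist], Dist]],
    prob_of_one: Callable[[Dist], float],
) -> float:
    """Propagate a distribution model through the layers, then read off the
    probability that the final model assigns to the outcome 1."""
    model = input_model
    for update in updates:  # one update rule per layer transition
        model = update(model)
    return prob_of_one(model)


# Toy usage: the "model" is just P(bit = 1), and the only layer is a NOT gate.
result = estimate_tail_probability(0.25, [lambda p: 1.0 - p], lambda p: p)
print(result)  # 0.75
```

The methods discussed next differ only in what they plug in for the distribution model and the update rule.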

Toy Example: Finitely Supported Distributions

If $\mathcal{D}$ were finitely supported, then it would be trivial to estimate the probability of catastrophe on $\mathcal{D}$, but we can use this example to illustrate some general principles. Let each $\mathcal{P}_i$ be the class of finitely supported distributions over the associated space $X_i$.

Given a finitely supported distribution $P_i$, we can apply $f_i$ to each datapoint to generate the empirical distribution $P_{i+1}$, which will be the exact distribution of $x_{i+1}$. By repeating this process for all layers, we eventually obtain $P_n$. The probability $P_n$ assigns to 1 will be the exact frequency of catastrophe on $\mathcal{D}$.
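A minimal sketch of this exact push-forward, representing a finitely supported distribution as a dictionary from values to probabilities. The two-layer toy network and its thresholds are hypothetical and chosen only so the arithmetic is easy to follow.

```python
from collections import defaultdict


def push_forward(dist, f):
    """Exact distribution of f(x) when x ~ dist (a dict: value -> probability)."""
    out = defaultdict(float)
    for value, prob in dist.items():
        out[f(value)] += prob  # inputs mapping to the same output pool their mass
    return dict(out)


# Hypothetical toy network ending in a 0/1 "catastrophe" output.
layers = [
    lambda x: max(0, 2 * x - 3),   # affine map followed by ReLU
    lambda h: 1 if h >= 5 else 0,  # threshold detector
]

model = {0: 0.25, 1: 0.25, 2: 0.25, 4: 0.25}  # finitely supported input distribution
for f in layers:
    model = push_forward(model, f)
print(model.get(1, 0.0))  # 0.25: only the input 4 triggers the detector
```

The result is exact, but it can only be non-zero if a catastrophic input is already in the support.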

This calculation is not helpful for adversarial training; if we cannot find any inputs where a catastrophe occurs, then we also cannot find any finitely supported distribution with non-zero probability of catastrophe. Instead, we would like to allow a red team to define a broader distribution that puts positive (although potentially very small) probability on catastrophic inputs.

Method 1: Gaussian Distribution

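To move beyond empirical evaluations, we can approximate the distributions over activations by multivariate Gaussians. Let $\mathcal{P}_i$ be the class of all multivariate Gaussians over the activations $X_i$. Write a normal distribution with mean vector $\mu$ and covariance matrix $\Sigma$ as $\mathcal{N}(\mu, \Sigma)$.

To specify an algorithm, we need a method for choosing $P_{i+1}$ given $P_i$. In this case, we want to choose the multivariate Gaussian $\mathcal{N}(\mu_{i+1}, \Sigma_{i+1})$ that best approximates the distribution of $f_i(z_i)$ where $z_i \sim \mathcal{N}(\mu_i, \Sigma_i)$. A non-linear function of a Gaussian random variable is typically no longer Gaussian, so perfect modeling is impossible. Instead, we can use various methods to select $\mathcal{N}(\mu_{i+1}, \Sigma_{i+1})$ based on different notions of approximation quality.[9]

A standard notion of approximation quality is the Kullback-Leibler (KL) divergence between the distribution of $f_i(z_i)$ and $\mathcal{N}(\mu_{i+1}, \Sigma_{i+1})$. By a well-known "moment matching" theorem of Gaussian distributions, we can minimize $\mathrm{KL}\left(f_i(\mathcal{N}(\mu_i, \Sigma_i)) \,\|\, \mathcal{N}(\mu_{i+1}, \Sigma_{i+1})\right)$ by setting $\mu_{i+1}$ and $\Sigma_{i+1}$ to the mean vector and covariance matrix of $f_i(\mathcal{N}(\mu_i, \Sigma_i))$.[10]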
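As an illustration of moment matching, here is a sketch restricted to a one-dimensional special case, where each layer is a scalar affine map followed by a ReLU. That restriction is an assumption made for brevity; the method described above tracks a full mean vector and covariance matrix. In one dimension the mean and variance of a rectified Gaussian have standard closed forms, so no sampling is needed. All function names are hypothetical.

```python
import math


def _pdf(a: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * a * a) / math.sqrt(2.0 * math.pi)


def _cdf(a: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(a / math.sqrt(2.0)))


def affine_moments(mean, var, w, b):
    """Exact mean and variance of w*z + b for z ~ N(mean, var)."""
    return w * mean + b, w * w * var


def relu_moments(mean, var):
    """Exact mean and variance of max(z, 0) for z ~ N(mean, var), var > 0."""
    sd = math.sqrt(var)
    a = mean / sd
    m1 = mean * _cdf(a) + sd * _pdf(a)                       # E[max(z, 0)]
    m2 = (mean * mean + var) * _cdf(a) + mean * sd * _pdf(a)  # E[max(z, 0)^2]
    return m1, m2 - m1 * m1


def propagate(mean, var, layers):
    """Moment-match a Gaussian after each (w, b) affine + ReLU layer."""
    for w, b in layers:
        mean, var = relu_moments(*affine_moments(mean, var, w, b))
    return mean, var


# A standard normal through one identity-weight ReLU layer.
print(propagate(0.0, 1.0, [(1.0, 0.0)]))
```

After the first layer the true distribution is a rectified Gaussian, not a Gaussian, so every later step in `propagate` is an approximation whose error can compound across layers.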

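This Gaussian approximation allows us to move beyond adversarial training on concrete catastrophic inputs. Instead, we can pick $\mu_0$ and $\Sigma_0$ to maximize the estimated $\mathbb{P}_{x \sim \mathcal{N}(\mu_0, \Sigma_0)}(C(M(x)) = 1)$, then train $M$ to minimize that same estimate, hopefully capturing a broader range of catastrophic scenarios.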

Method 2: Independent Linear Features

Some authors have attempted to extract meaningful features of a model's computation using sparse auto-encoders (SAEs), which find an overcomplete basis in which the activations are typically sparse. SAEs are typically learned by minimizing an empirical estimate of a reconstruction loss. However, if catastrophic inputs are rare, the SAE's training data is unlikely to contain any catastrophic inputs. As such, we might not learn features that are informative for estimating the probability of catastrophic inputs.

We will describe a modified SAE for which we can analytically calculate the loss over a distribution that includes catastrophic inputs, instead of empirically estimating it with training data. The details of this algorithm can be found here; we summarize them below.

Define a given distribution $P_i \in \mathcal{P}_i$ by specifying:

- $m$ feature vectors $v_1, \ldots, v_m \in X_i$.

- For each feature $v_j$, a distribution $Q_j$ over $\mathbb{R}$ representing how strongly that feature activates.

To generate a single sample from this distribution, we:

- Sample feature weights $a_j \sim Q_j$ independently.

- Compute the weighted sum of these features, $\sum_j a_j v_j$.
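The sampling procedure above can be sketched as follows. The feature vectors and weight distributions are hypothetical placeholders chosen for illustration; the post does not specify them.

```python
import random


def sample_activation(features, weight_samplers, rng):
    """Draw one activation vector: independently sampled weights times fixed
    feature vectors, summed coordinate by coordinate."""
    dim = len(features[0])
    x = [0.0] * dim
    for v, sampler in zip(features, weight_samplers):
        a = sampler(rng)  # each weight is drawn independently of the others
        for d in range(dim):
            x[d] += a * v[d]
    return x


# Hypothetical example: three features in a 2-dimensional activation space.
features = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
weight_samplers = [
    lambda rng: rng.expovariate(1.0) if rng.random() < 0.1 else 0.0,  # sparse
    lambda rng: rng.gauss(0.0, 1.0),                                  # dense
    lambda rng: 2.0,                                                  # point mass
]
print(sample_activation(features, weight_samplers, random.Random(0)))
```

Because the weights are independent, the probability that several features are active at once factorizes into a product of per-feature probabilities.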

SAEs are typically learned independently for different layers of a neural network. By contrast, our algorithm will use the features from a layer $X_i$ to learn the features for the next layer $X_{i+1}$. More precisely, we will assume that the activations at layer $i$ are distributed according to $P_i$, and then find the distribution $P_{i+1}$ that minimizes $\mathrm{KL}(f_i(P_i) \,\|\, P_{i+1})$.

We show that we can analytically compute an upper bound for this KL divergence by using variational inference.[11] We can then train $P_{i+1}$ to minimize this upper bound; the result is a variational autoencoder trained with an objective very similar to the conventional objective for a sparse autoencoder. More details of this bound and an algorithm for calculating it efficiently can be found here.

Because we compute bounds analytically, in principle they can be very sensitive to rare events that would never be detected by sampling. However, because KL divergence is defined as an expectation, this loss function may still incentivize the learning of features that are relevant to the typical behavior of the model and not informative about the tails. For example, a "lethality" feature might be informative about the probability an AI system behaves catastrophically, but almost useless for modeling behavior on typical inputs. To overcome this problem, we would like to replace the KL divergence with a loss function that is more sensitive to distributional differences that affect catastrophe probability. Precisely defining this loss function is one of the major open questions for this approach.

Relation to Other Work

Formalizing the Presumption of Independence

Formalizing the Presumption of Independence studies the problem of estimating the expectation of a circuit through the lens of heuristic estimation. The general framework described above is a particular family of heuristic estimation methods based on modeling the activations of successive layers of a neural network.

Many of the methods we have described are inspired by algorithms for heuristic estimation. Most directly, Method 1 (Gaussian Distribution) is exactly the covariance propagation algorithm described in appendix D.3. Additionally, Method 2 (Independent Linear Features) can be thought of as finding a basis for which the presumption of independence approximately applies.

For more examples of methods for heuristic estimation that can potentially be translated into techniques for estimating tail risk in neural networks, see Appendix A of Formalizing the Presumption of Independence.

Mechanistic Interpretability

Mechanistic interpretability is a field of research that aims to understand the inner workings of neural networks. We think such research represents a plausible path towards high-quality estimates of tail risk in neural networks, and many of our estimation methods are inspired by work in mechanistic interpretability.

For example, a mechanistic understanding of a neural network might allow us to identify a set of patterns whose simultaneous activation implies catastrophic behavior. We can then attempt to estimate the probability that all features are simultaneously active by using experiments to collect local data and generalizing it with the presumption of independence.

We also hope that estimating tail risk will require the development of methods for identifying interesting structure in neural networks. If so, then directly estimating tail risk in neural networks might lead to greater mechanistic understanding of how those neural networks behave and potentially automate portions of mechanistic interpretability research.

Relaxed Adversarial Training

Relaxed Adversarial Training (RAT) is a high-level proposal to overcome deficiencies in adversarial training by "relaxing" the problem of finding a catastrophic input. We expect our methods for estimating $\mathbb{P}_{x \sim \mathcal{D}}(C(M(x)) = 1)$ to be instances of the relaxations required for RAT.

Eliciting Latent Knowledge

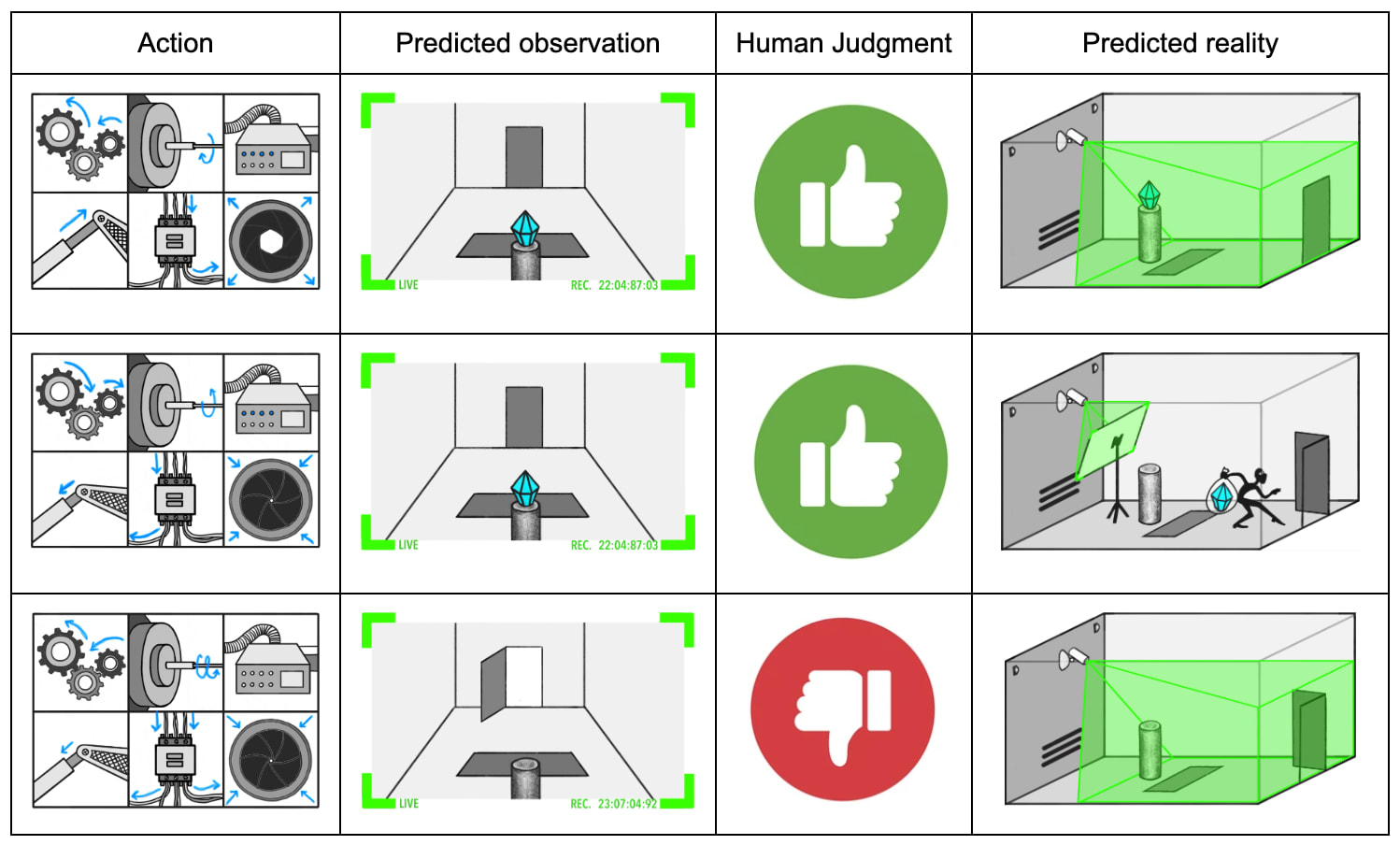

In Eliciting Latent Knowledge (ELK), we describe a SmartVault AI trained to take actions so a diamond appears to remain in the room. We are concerned that if the sensors are tampered with, the diamond can be stolen while still appearing safe.

A key difficulty in ELK is the lack of sophisticated tampering attempts on which we can train our model to protect the sensors. In the ELK document, we describe some ways of training models that we hoped would generalize in desirable ways during tampering attempts, but ultimately concluded these methods would not always result in the desired generalization behavior.

Instead of trying to indirectly control generalization, we can attempt to directly measure the quality of generalization by estimating the probability of tampering. We will not have a perfect tampering detector, but even if a robber (or our AI system itself) was skilled at tampering, they might get caught one-in-a-trillion times. Thus, by estimating and minimizing the probability of detectable tampering, we might be able to produce a SmartVault that defends the sensors even with no examples of sophisticated sensor tampering.

More generally, we believe there are deeper connections between methods for estimating tail risk and ELK, which we might explore in a later post.

Conclusion

Contemporary methods for reducing risk from AI systems rely on finding concrete catastrophic inputs. As AI systems become more capable, such methods might not be able to reduce risk down to acceptable levels.

In this document, we have argued that it is both useful and possible to develop approaches for estimating tail risk in neural networks that do not rely on finding concrete catastrophic inputs. We are currently pursuing this problem from both theoretical and empirical angles, and are excited to see others do the same.

We have given a high-level overview of our motivation and approach. In the appendices, we explore various details in more depth:

- In Catastrophe Detectors, we provide a default picture of how to construct a catastrophe detector, and discuss its limitations. We also briefly describe our hope for finding a robust catastrophe detector.

- In Issues with Layer-by-layer Activation Modeling, we overview two major problems with the general estimation framework we discuss in the body, and potential routes for overcoming them.

- In Distributional Shift, we discuss how the possibility of distributional shift complicates the way in which estimates of the rate of catastrophic behavior can be used to mitigate such behavior.

- In Complexity Theoretic Barriers to Accurate Estimates, we discuss why computational barriers to accurate estimates might not prevent us from obtaining estimates "good enough" to mitigate catastrophic behavior.

Appendices

Catastrophe Detectors

In the body, we assume that we can construct a catastrophe detector $C$ that has the following properties:

- $C$ has a reasonable chance of catching everything output by $M$ that we would consider a catastrophe.

- It is possible to find a useful $M$ that almost never triggers $C$.

However, we do not currently know how to construct a catastrophe detector with those properties. In this appendix, we will describe a default picture for how to attempt such a construction, discuss its potential flaws, and briefly overview our hope for why these flaws are surmountable.

Our default picture for how we would attempt to construct such a catastrophe detector is to use a weaker, trusted AI system $M_{\text{weak}}$ to examine the suggested action of a stronger system $M$. We could give our weaker system multiple advantages to give it the best chance of catching catastrophic actions:

- We could give $M_{\text{weak}}$ more time to think than $M$, so it could examine each portion of the action in detail.

- We could allow $M_{\text{weak}}$ to interrogate $M$ about the function of various parts of its action, and do detailed checks for inconsistencies in $M$'s answers.

- We could allow $M_{\text{weak}}$ to perform separate experiments to verify $M$'s claims about its actions, and to evaluate whether or not the actions have any side effects.

- $M_{\text{weak}}$ could be conservative when evaluating $M$'s action, flagging any part of the action it did not understand as potentially catastrophic.

However, even given these advantages, our catastrophe detector might not have our desired properties:

- If $M$ is significantly smarter than $M_{\text{weak}}$, it might be possible for $M$ to suggest a catastrophic action that $M_{\text{weak}}$ will not recognize as such. If $M_{\text{weak}}$ merely has a low probability of detecting that such actions are catastrophic, then our estimates will be (potentially poor) approximations to the true probability that $M$ acts catastrophically, but we will still be able to distinguish between deceptive and benign models. If, however, there are catastrophic actions that $M_{\text{weak}}$ has no chance of detecting, and $M$ knows that $M_{\text{weak}}$ will not be able to detect them, then a deceptive $M$ could act catastrophically while appearing benign.

- There potentially exist actions that $M_{\text{weak}}$ will mistakenly think are catastrophic, and inputs that cause even benign models to produce those actions. For example, contemporary image classifiers are often vulnerable to adversarial attacks that can result in arbitrary misclassification. There might similarly be actions that induce misclassification in $M_{\text{weak}}$. If such actions are more common than the cases in which deceptive models mistakenly act catastrophically during training, then we would be unable to distinguish between benign and deceptive models.

In the body of this document, we discuss methods for estimating the chance that an AI system violates some specification of catastrophe. We think it is more likely that we fail to find such methods, than that we fail to find a specification of catastrophe that would be valuable to estimate. As such, we have not prioritized research into how we could construct more robust catastrophe specifications.

Our current plan for how to produce such specifications involves increasing the robustness of an imperfect catastrophe detector using mechanistic anomaly detection. Roughly speaking, we think it should be possible to distinguish between two different "reasons" why an action looked benign:

- It looked benign because it was benign.

- It looked benign because $M$ carefully selected the action to appear benign.

This bears resemblance to our plan for how mechanistic anomaly detection will help with ELK, which we discuss in Finding gliders in the game of life.

Issues with Layer-by-layer Activation Modeling

In the body, we present a framework for producing estimates of tail events in a neural network by successively modeling layers of activations. We present this approach because it is easy to communicate and reason about, while still containing the core challenge of producing such estimates. However, we believe the framework to be fundamentally flawed for two reasons:

- Layer-by-layer modeling forgets too much information

- Probability distributions over activations are too restrictive

Layer-by-layer modeling forgets too much information

Thanks to Thomas Read for the example used in this section.

When modeling successive layers of activations, we are implicitly forgetting how any particular piece of information was computed. This can result in missing large correlations between activations that are computed in the same way in successive layers.

For example, suppose $h$ is a pseudorandom boolean function that is 1 on 50% of inputs. Let $x$ be distributed according to some simple distribution $\mathcal{D}$. Define the following simple 2 layer neural network:

$$f_1(x) = (x, h(x)), \qquad f_2(x, y) = \begin{cases} 1 & \text{if } h(x) = y \\ 0 & \text{otherwise} \end{cases}$$

Since $h(x) = h(x)$ definitionally, this network always outputs 1. However, layer-by-layer activation modeling will give a very poor estimate.

If $h$ is complex enough, then our activation model will not be able to understand the relationship between $h(x)$ and $x$, and will be forced to treat $h(x)$ as independent from $x$. So at layer 1, we will model the distribution of activations as $(\mathcal{D}, \mathrm{Bern}(0.5))$, where $\mathrm{Bern}(0.5)$ is 1 with 50% chance and 0 otherwise. Then, for layer 2, we will treat $h(x)$ and $y$ as independent coin flips, which are equal with 50% chance. So we will estimate $\mathbb{P}(f_2(f_1(x)) = 1)$ as 0.5, when it is actually 1.

In general, layer-by-layer activation modeling makes an approximation step at each layer, and implicitly assumes the approximation errors between layers are uncorrelated. However, if layers manipulate information in correlated ways, then approximation errors can also be correlated across layers.

In this case, we hope to be able to notice that $f_1$ and $f_2$ are performing similar computations, and so to realize that the computations done by layer 1 and layer 2 both depend on the value of $h(x)$. Then, we can model the value of $h(x)$ as an independent coin flip, and obtain the correct estimate for $\mathbb{P}(f_2(f_1(x)) = 1)$. This suggests that we must model the entire distribution of activations simultaneously, instead of modeling each individual layer.
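The failure described in this section can be reproduced in a few lines. In this sketch a SHA-256 bit stands in for the pseudorandom function; that choice, the input range, and all names are illustrative assumptions.

```python
import hashlib


def h(x: int) -> int:
    """Stand-in pseudorandom boolean function: low bit of a SHA-256 digest."""
    return hashlib.sha256(str(x).encode()).digest()[0] & 1


def layer1(x):
    return (x, h(x))  # carry x forward alongside h(x)


def layer2(pair):
    x, y = pair
    return 1 if h(x) == y else 0  # recompute h(x) and compare


inputs = range(10_000)
true_rate = sum(layer2(layer1(x)) for x in inputs) / len(inputs)

# Layer-by-layer view: forget how y was computed and treat it as a coin flip
# that is independent of x, and hence independent of the recomputed h(x).
p_y = sum(h(x) for x in inputs) / len(inputs)  # marginal rate of y = 1
p_hx = p_y                                     # h(x) has the same marginal
independent_estimate = p_y * p_hx + (1 - p_y) * (1 - p_hx)

print(true_rate)             # the network always outputs 1
print(independent_estimate)  # close to 0.5
```

The gap arises because the independence assumption discards the fact that both layers compute the same function of the same input.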

Probability distributions over activations are too restrictive

Thanks to Eric Neyman for the example used in this section.

If we model the entire distribution over activations of $M$, then we must do one of two things:

- Either, the model must only put positive probability on activations which could have been realized by some input

- Or, the model must put positive probability on an inconsistent set of activations.

Every set of activations actually produced by $M$ is consistent with some input. If we performed consistency checks on $M$, then we would find that every set of activations was always consistent in this way, and the consistency checks would always pass.

If, however, our approximate distribution over $M$'s activations placed positive probability on inconsistent activations, then we would incorrectly estimate the consistency checks as having some chance of failing. In these cases, our estimates could be arbitrarily disconnected from the actual functioning of $M$. So it seems we must strive to put positive probability only on consistent sets of activations.

Only placing positive probability on consistent sets of activations means that our distribution over activations corresponds to some input distribution. This means that our catastrophe estimate will be exact over some input distribution. Unfortunately, this implies our catastrophe estimates will often be quite poor. For example, suppose we had a distribution over boolean functions $f$ with the following properties:

- a randomly sampled function $f$ is 50% likely to be the constant 0 function, and 50% likely to have a single input $x$ where $f(x) = 1$

- it is computationally hard to tell if $f$ is the all 0 function

For example, an appropriately chosen distribution over 3-CNFs likely has these properties.

If our input space was of size $N$, then it seems reasonable to estimate $\mathbb{P}(f(x) = 1)$ as $\frac{1}{2N}$ for randomly sampled $f$. However, since our catastrophe estimate is exact (over some input distribution), it can be non-zero only if $f$ is not the all 0 function. By assumption, it is computationally hard to tell if $f$ is not the all 0 function, so we must generally estimate $\mathbb{P}(f(x) = 1)$ as 0, making it impossible to be "reasonable".
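The "reasonable" estimate follows from averaging over the two cases in the assumed distribution. Writing $N$ for the size of the input space and taking the input $x$ to be uniform over it (the uniformity is an assumption added here for illustration), the calculation is:

$$\mathbb{P}_{f, x}\left(f(x) = 1\right) = \underbrace{\tfrac{1}{2} \cdot 0}_{f \text{ is constant } 0} + \underbrace{\tfrac{1}{2} \cdot \tfrac{1}{N}}_{f \text{ has a single 1}} = \frac{1}{2N}$$

No single function $f$ attains this value: for any fixed $f$ the true frequency is either $0$ or $\frac{1}{N}$, which is why an estimate that is exact over some input distribution cannot reproduce it.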

In general, the requirement that our estimate be derived from a logically consistent distribution means we cannot reliably put positive probability on all "reasonable" possibilities. If we wish to be able to produce estimates like $\frac{1}{2N}$, then we must be able to represent logically inconsistent possibilities. However, the possibility of models that do consistency checks means we must place almost no probability on any particular logical inconsistency.

This line of reasoning suggests an overall picture where our estimates are not attached to any particular probability distribution over activations, but rather one where our estimates are derived directly from high-level properties of the distribution. For example, we might instead represent only the moments of the distribution over activations, and our estimate might come from an inconsistent set of moments that cannot come from any possible distribution (but we would not know of any particular inconsistency that was violated).[12]

Distributional Shift

In Layer-by-layer Activation Modeling, we describe a few methods for estimating the probability of a tail event on a fixed input distribution. However, due to distributional shift, these estimates might be highly uninformative about the true probability our AI systems will act catastrophically "in practice". This problem is not unique to our approach; practitioners of adversarial training also desire to find catastrophic inputs likely to appear "in practice", which might be very different from inputs used to train the AI system.

We have a few rough ideas for constructing distributions that place non-trivial probability on realistic catastrophic inputs:

- Adding noise to the training distribution produces a wider distribution that is more likely to contain a catastrophic input. In the limit, the uniform distribution over inputs is guaranteed to contain catastrophic inputs should they exist. However, these catastrophic inputs might be highly unrealistic, and represent a small fraction of the catastrophic inputs likely to be encountered "in practice". That being said, lowering the probability an AI system acts catastrophically on random inputs might still make it less likely to act catastrophically in practice.

- Adversarial training teams might employ various techniques to produce more realistic catastrophic inputs. For instance, they might use other AI systems to modify training inputs, or attempt to directly synthesize catastrophic inputs. Each such strategy is a function from random noise to particular inputs, and thus can also be thought of as a distribution over inputs. Instead of sampling from that distribution and hoping it places high enough probability on catastrophic inputs that we will sample one, we can attempt to directly estimate that probability.
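To give a rough sense of why sampling alone can be inadequate, the sketch below computes the chance that independent draws from such a distribution include at least one catastrophic input. The probability `p`, the sample counts, and the function names are hypothetical and chosen only for illustration; independence between samples is also an assumption.

```python
import math

def chance_of_sampling_catastrophe(p, n_samples):
    """Chance that n_samples independent draws include at least one catastrophic input."""
    if p >= 1.0:
        return 1.0
    # 1 - (1 - p)**n, computed via log1p/expm1 to stay accurate for tiny p.
    return -math.expm1(n_samples * math.log1p(-p))

def samples_needed(p, target=0.5):
    """Draws needed to see a catastrophic input with chance `target` (requires 0 < p < 1)."""
    return math.ceil(math.log1p(-target) / math.log1p(-p))

# Hypothetical numbers: a one-in-a-billion catastrophic input, a million samples.
print(chance_of_sampling_catastrophe(1e-9, 10**6))  # roughly 0.001
print(samples_needed(1e-9))                          # roughly 0.69 billion
```

Under these assumed numbers, sampling almost never surfaces the catastrophic input, whereas a direct estimate of `p` would not depend on getting lucky with a sample.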

Ultimately, however, these mitigations will not detect all instances of deceptive alignment. For example, suppose a deceptively aligned system knows it will encounter a particular input that is a good opportunity for acting catastrophically. If the adversarial training team does not know about this input, or merely cannot synthesize it, then they will be unable to determine if the system will behave catastrophically.[13]

In these cases, we hope to detect deceptive alignment by understanding the input distribution "as well as the AI system". Any time the AI system knows that it will encounter some future catastrophic input, we need to incorporate this knowledge into our estimate. This is closely related to ELK, and is discussed further in Main hope: estimates competitive with the AI system or the training process.

Complexity Theoretic Barriers to Accurate Estimates

It is at least NP-hard to accurately estimate the expectation of a function computable in polynomial time. As such, it is likely infeasible to develop methods for accurately estimating tail events. However, we believe that it is feasible to develop methods that can estimate tail risks accurately enough to detect risks from AI systems deliberately acting catastrophically.

In this appendix, we will first work an example involving 3-SAT to demonstrate some estimation methods that can be applied to problems widely believed to be computationally infeasible to even approximate. Then, we will discuss how we hope to obtain estimates accurate enough to detect risk from AI systems deliberately acting catastrophically by obtaining estimates that are competitive with the AI system or the process used to train it.

3-SAT

A boolean formula is called a 3-CNF if it is formed of an AND of clauses, where each clause is an OR of 3 or fewer literals. For example, this is a 3-CNF with 3 clauses over 5 variables:

We say a given setting of the variables to True or False satisfies the 3-CNF if it makes all the clauses True. 3-SAT is the problem of determining whether there is any satisfying assignment for a 3-CNF. #3-SAT is the problem of determining the number of satisfying assignments for a 3-CNF. Since the number of assignments for a 3-CNF over \(n\) variables is \(2^n\), #3-SAT is equivalent to computing the probability a random assignment is satisfying. The above 3-CNF has 20 satisfying assignments and \(2^5 = 32\) possible assignments, giving a satisfaction probability of \(20/32 = 5/8\).
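As a minimal sketch of what #3-SAT asks for, the following brute-force counter enumerates every assignment of a small formula. The formula `EXAMPLE_CNF` is a hypothetical stand-in (it is not the example formula from this post), and the DIMACS-style integer encoding of literals is an assumption made for illustration. Enumeration takes time exponential in the number of variables, which is why it only works for toy instances.

```python
from itertools import product

# Hypothetical 3-CNF over 5 variables (NOT the formula from the post).
# DIMACS-style literals: 3 means x3, -3 means NOT x3.
EXAMPLE_CNF = [(1, 2, -3), (-1, 4, 5), (2, -4, -5)]

def satisfies(assignment, cnf):
    """True if every clause has a true literal; assignment[i-1] is the value of variable i."""
    return all(
        any(assignment[abs(lit) - 1] == (lit > 0) for lit in clause)
        for clause in cnf
    )

def satisfaction_probability(cnf, n_vars):
    """Return (number of satisfying assignments, fraction of all 2**n_vars assignments)."""
    count = sum(satisfies(a, cnf) for a in product([False, True], repeat=n_vars))
    return count, count / 2 ** n_vars

count, prob = satisfaction_probability(EXAMPLE_CNF, 5)
print(count, prob)  # 21 0.65625
```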

It's widely believed that 3-SAT is computationally hard in the worst case, and #3-SAT is computationally hard to even approximate. However, analyzing the structure of a 3-CNF can allow for reasonable best guess estimates of the number of satisfying assignments.

In the following sections, \(\phi\) is a 3-CNF with \(m\) clauses over \(n\) variables. We will write \(P(\phi)\) for the probability that a random assignment is satisfying; \(P(\phi)\) can be easily computed from the number of satisfying assignments by dividing by \(2^n\).

Method 1: Assume clauses are independent

Using brute-force enumeration over at most 8 possibilities, we can calculate the probability that a clause is satisfied under a random assignment. For clauses involving 3 distinct variables, this will be \(7/8\).

If we assume the satisfaction of each clause is independent, then we can estimate the satisfaction probability by multiplying the satisfaction probabilities of each clause. If all the clauses involve distinct variables, this will be \((7/8)^m\), where \(m\) is the number of clauses. We will call this the naive acceptance probability of the 3-CNF.
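The independence estimate can be sketched as follows. The formula `EXAMPLE_CNF` and the integer encoding of literals (3 for a variable, -3 for its negation) are hypothetical choices for illustration, not taken from the post.

```python
from fractions import Fraction
from itertools import product

def clause_probability(clause):
    """Exact chance one clause is satisfied, enumerating its (at most 3) variables."""
    variables = sorted({abs(lit) for lit in clause})
    satisfied = 0
    for values in product([False, True], repeat=len(variables)):
        value_of = dict(zip(variables, values))
        if any(value_of[abs(lit)] == (lit > 0) for lit in clause):
            satisfied += 1
    return Fraction(satisfied, 2 ** len(variables))

def naive_acceptance_probability(cnf):
    """Multiply per-clause probabilities as if clauses were satisfied independently."""
    estimate = Fraction(1)
    for clause in cnf:
        estimate *= clause_probability(clause)
    return estimate

# Hypothetical formula: three clauses, each over 3 distinct variables.
EXAMPLE_CNF = [(1, 2, -3), (-1, 4, 5), (2, -4, -5)]
naive = naive_acceptance_probability(EXAMPLE_CNF)
print(naive)  # 343/512, i.e. (7/8)**3
```

For this particular formula the exact satisfaction probability is 21/32 (about 0.656), so the naive estimate of about 0.670 is close but not exact, because the clauses share variables.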

Method 2: Condition on the number of true variables

We say the sign of a literal \(x_i\) is positive, and the sign of \(\neg x_i\) is negative. If the 3-CNF has a bias in the sign of its literals, then two random clauses are more likely to share literals of the same sign, and thus are more likely to be satisfied by the same assignment. Our independence assumption in method 1 fails to account for this possibility, and thus will underestimate the satisfaction probability in the case where the 3-CNF has biased literals.

We can account for this structure by assuming the clauses of are satisfied independently conditional on the number of variables assigned true. Instead of computing the probability each clause is true under a random assignment, we can compute the probability under a random assignment where out of variables is true. For example, the clause will be true on possible assignments out of , for a satisfaction probability of , where the latter is the satisfaction probability if each variable was true with independent chance. Multiplying together the satisfaction probabilities of each clause gives us an estimate of for a random assignment where out of variables are true.

To obtain a final estimate, we take a sum over satisfaction probabilities conditional on the number \(k\) of true variables, weighted by \(\binom{n}{k}/2^n\), the chance that exactly \(k\) of the \(n\) variables are true.
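A minimal sketch of this conditional estimate is below. It assumes every clause uses distinct variables, and the function names and example formulas are hypothetical. A clause with `pos` positive and `neg` negative literals is falsified only when all its negated variables are true and all its positive variables are false, which leaves `comb(n - pos - neg, k - neg)` ways to place the remaining true variables.

```python
from fractions import Fraction
from math import comb

def clause_probability_given_k(clause, n_vars, k):
    """Chance the clause is satisfied when exactly k of n_vars variables are true."""
    pos = sum(1 for lit in clause if lit > 0)
    neg = len(clause) - pos
    if k < neg:
        return Fraction(1)  # not enough true variables to falsify the clause
    falsifying = comb(n_vars - pos - neg, k - neg)
    return 1 - Fraction(falsifying, comb(n_vars, k))

def conditional_estimate(cnf, n_vars):
    """Assume clauses are independent given k, then average over k."""
    total = Fraction(0)
    for k in range(n_vars + 1):
        product_k = Fraction(1)
        for clause in cnf:
            product_k *= clause_probability_given_k(clause, n_vars, k)
        total += Fraction(comb(n_vars, k), 2 ** n_vars) * product_k
    return total

# Hypothetical formula whose literals are all positive (maximally biased signs).
BIASED_CNF = [(1, 2, 3), (1, 4, 5), (2, 4, 5)]
print(float(conditional_estimate(BIASED_CNF, 5)))  # larger than (7/8)**3
```

For a single clause the estimate reduces to exactly 7/8, and for a formula with biased signs it comes out above the naive acceptance probability, as the argument above suggests it should.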

Method 3: Linear regression

Our previous estimate accounted for a possible average imbalance in the sign of literals. However, even a single extremely unbalanced literal can alter the satisfaction probability dramatically. For example, if a variable \(x_i\) appears positively in 20 clauses and negatively in 0, then by setting \(x_i\) to true we can form a 3-CNF with 1 fewer variable and 20 fewer clauses that has naive acceptance probability of \((7/8)^{m-20}\), where \(m\) is the original number of clauses. \(x_i\) will be true with \(1/2\) chance, so this represents a significant revision.
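To make the size of this revision concrete, here is a small worked calculation. The total of 100 clauses is a hypothetical number, and the sketch assumes every clause has 3 distinct variables; only the "20 positive, 0 negative" figure comes from the example above.

```python
from fractions import Fraction

M_CLAUSES = 100   # hypothetical total number of clauses
UNBALANCED = 20   # clauses containing the variable, always positively

naive = Fraction(7, 8) ** M_CLAUSES

# Variable true (chance 1/2): its 20 clauses are satisfied and drop out.
given_true = Fraction(7, 8) ** (M_CLAUSES - UNBALANCED)

# Variable false (chance 1/2): its 20 clauses shrink to 2 literals each,
# so each is satisfied with probability 3/4 under a random assignment.
given_false = Fraction(3, 4) ** UNBALANCED * Fraction(7, 8) ** (M_CLAUSES - UNBALANCED)

revised = Fraction(1, 2) * given_true + Fraction(1, 2) * given_false
ratio = float(revised / naive)
print(ratio)  # a bit over 7
```

Under these assumptions, accounting for one unbalanced variable raises the estimate by roughly a factor of seven relative to the naive acceptance probability.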

We can easily estimate the satisfaction probability in a way that accounts for the balance in any particular literal. However, it is not simple to aggregate these estimates into an overall estimate for the satisfaction probability.

One approach is to combine these estimates linearly in a way that minimizes some measure of error. For instance, if we wanted to minimize mean-square error, then we would treat each estimate as a feature and combine them using linear regression. If we estimate the covariance of each feature using our naive acceptance probability, then this is equivalent to doing linear regression over the reference class of 3-CNFs where the sign of each literal is flipped uniformly at random. For more details, see section 9.2 of Neyman 2024.

This method produces an estimate for the satisfaction probability that has lower mean-square error over random 3-CNFs than method 2, but lacks other intuitively desirable properties like producing estimates in \([0, 1]\). We could clamp our estimate, or attempt to do logistic regression instead, but we will have to trade off between many intuitively desirable properties. Deciding which set of tradeoffs to take requires clarifying what kind of accuracy we want, which in turn depends on downstream applications.

Main hope: estimates competitive with the AI system or the training process

As an analogy, suppose we have an AI system \(M\) that acts catastrophically whenever the input is a satisfying assignment to a particular 3-CNF. Accurately estimating the probability that \(M\) behaves catastrophically is likely computationally infeasible. Nevertheless, we still believe we can obtain estimates that are "good enough" to detect if \(M\) poses a catastrophic risk.

If the 3-CNF was randomly selected, then it is still generally computationally infeasible to tell if there exists a satisfying assignment. However, Method 1 presented above is an exact calculation for the expected satisfaction probability of a randomly selected 3-CNF. Since the 3-CNF was in fact randomly selected, it seems reasonable to estimate its satisfaction probability using this "reference class" of random 3-CNFs.
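The claim that Method 1 is exact in expectation can be checked on a toy case. The sketch below fixes a hypothetical variable structure and averages the exact satisfaction probability over every possible choice of literal signs; this is a simplification of "randomly selected 3-CNF" that randomizes only the signs, and it assumes each clause uses 3 distinct variables.

```python
from fractions import Fraction
from itertools import product

# Hypothetical structure: which variables appear in each clause (signs vary below).
CLAUSE_VARIABLES = [(1, 2, 3), (1, 4, 5), (2, 4, 5)]
N_VARS = 5

def satisfaction_probability(cnf, n_vars):
    """Exact fraction of assignments satisfying the formula, by enumeration."""
    count = 0
    for a in product([False, True], repeat=n_vars):
        if all(any(a[abs(lit) - 1] == (lit > 0) for lit in clause) for clause in cnf):
            count += 1
    return Fraction(count, 2 ** n_vars)

def average_over_sign_flips(clause_variables, n_vars):
    """Average the exact satisfaction probability over all sign patterns."""
    n_literals = sum(len(clause) for clause in clause_variables)
    total = Fraction(0)
    for signs in product([1, -1], repeat=n_literals):
        sign_iter = iter(signs)
        cnf = [tuple(v * next(sign_iter) for v in clause) for clause in clause_variables]
        total += satisfaction_probability(cnf, n_vars)
    return total / 2 ** n_literals

average = average_over_sign_flips(CLAUSE_VARIABLES, N_VARS)
print(average)  # 343/512, i.e. (7/8)**3
```

Individual formulas in this reference class deviate from the naive acceptance probability, but the average over the class matches it exactly, which is the sense in which the estimate is reasonable for a randomly selected instance.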

If the 3-CNF was not selected randomly, this will not be a "good enough" estimate. For instance, even if we could calculate the probability of acting catastrophically for a random neural network, treating our trained neural network as a randomly sampled neural network would produce a terrible estimate for the probability it behaved catastrophically. Similarly, if our training process selected the 3-CNF to have a high satisfaction probability, then treating it as a random 3-CNF would produce a very bad estimate for its satisfaction probability.

However, in these cases, there is information we could learn that suggests treating the 3-CNF (or the AI system) as a random instance would produce a poor estimate. If we knew how the 3-CNF was selected, then we would know that it was not selected randomly, and so we would know not to treat it as such. Since the training process selected the 3-CNF, we have an "existence proof" for a process that is as efficient as the training process and that knows how the 3-CNF was selected, and thus has the information necessary to produce a more informed estimate of its satisfaction probability. Our hope is to produce a parallel "training process" for these more informed estimates by starting with an estimate that assumes the instance is random, then gradually updating the estimate to incorporate all the information used to produce it.

More generally, we hope that if \(M\) sometimes deliberately decides to act catastrophically, then there is some information that our estimate could incorporate to notice this fact. So if we can use all the information that we have available to estimate the probability that \(M\) acts catastrophically, then we will be able to reliably distinguish between benign and deceptive AI systems.

[1] For example, it would be catastrophic to destroy all life on Earth. It would be undesirable, but not catastrophic, for a self-driving car to hit a pedestrian.

[2] Filtering M's outputs during high-stakes situations might itself be catastrophic if there is no safe fall-back action.

[3] It might be difficult to determine whether an output is catastrophic without the corresponding input. To be more general, we could also provide the input to the catastrophe detector. Since this does not change the overall presentation and adds notational burden, we give the detector only the output.

[4] For instance, AI systems are often jailbroken shortly after release, despite developer efforts.

[5] See Carlsmith 2023 for discussion about how such incentives might arise.

[6] Even if inputs are indistinguishable between training and deployment, an AI typically spends more time deployed than in training. Thus our AI could behave catastrophically with some constant probability that is low enough to avoid acting catastrophically while being trained, but high enough that it is likely to take a catastrophic action eventually. Section 4.5 of Risks from Learned Optimization makes a similar argument.

[7] We will release some empirical work on estimating tail events later this year.

[8] Unfortunately, obtaining accurate estimates of the probability of arbitrary binary functions is computationally infeasible; we discuss such objections in Complexity Theoretic Barriers to Accurate Estimates.

[9] This problem is well known; for an overview of such methods, see section 8 of Murphy 2023.

[10] See section 2 of Minka 2013 for the "moment matching" theorem. If the function is a low-degree polynomial, these values can be computed exactly using Isserlis' Theorem. If it involves a ReLU, then we can use a polynomial approximation or estimate it numerically.

[11] See Chapter 10 of Murphy 2023 for an overview of variational inference.

[12] The sum of squares algorithm is an example of how such an estimation procedure might look.

[13] For example, the AI system might pick a cryptographic puzzle it thinks will likely be solved, or make some prediction of what the future will look like based on arcane sociological principles.

9 comments

comment by Rohin Shah (rohinmshah) · 2024-09-22T13:54:06.505Z · LW(p) · GW(p)

A few questions:

- The literature review is very strange to me. Where is the section on certified robustness against epsilon-ball adversarial examples? The techniques used in that literature (e.g. interval propagation) are nearly identical to what you discuss here.

- Relatedly, what's the source of hope for these kinds of methods outperforming adversarial training? My sense from the certified defenses literature is that the estimates they produce are very weak, because of the problems with failing to model all the information in activations. (Note I'm not sure how weak the estimates actually are, since they usually report fraction of inputs which could be certified robust, rather than an estimate of the probability that a sampled input will cause a misclassification, which would be more analogous to your setting.)

- If your catastrophe detector involves a weak model running many many inferences, then it seems like the total number of layers is vastly larger than the number of layers in M, which seems like it will exacerbate the problems above by a lot. Any ideas for dealing with this?

- What's your proposal for the distribution P0 for Method 2 (independent linear features)?

This suggests that we must model the entire distribution of activations simultaneously, instead of modeling each individual layer.

- Why think this is a cost you can pay? Even if we ignore the existence of C and just focus on M, and we just require modeling the correlations between any pair of layers (which of course can be broken by higher-order correlations), that is still quadratic in the number of parameters of M and so has a cost similar to training M in the first place. In practice I would assume it is a much higher cost (not least because C is so much larger than M).

↑ comment by Mark Xu (mark-xu) · 2024-09-23T18:42:20.961Z · LW(p) · GW(p)

The literature review is very strange to me. Where is the section on certified robustness against epsilon-ball adversarial examples? The techniques used in that literature (e.g. interval propagation) are nearly identical to what you discuss here.

I was meaning to include such a section, but forgot :). Perhaps I will edit it in. I think such work is qualitatively similar to what we're trying to do, but that the key difference is that we're interested in "best guess" estimates, as opposed to formally verified-to-be-correct estimates (mostly because we don't think formally verified estimates are tractable to produce in general).

Relatedly, what's the source of hope for these kinds of methods outperforming adversarial training? My sense from the certified defenses literature is that the estimates they produce are very weak, because of the problems with failing to model all the information in activations. (Note I'm not sure how weak the estimates actually are, since they usually report fraction of inputs which could be certified robust, rather than an estimate of the probability that a sampled input will cause a misclassification, which would be more analogous to your setting.)

The main hope comes from the fact that we're using a "best guess" estimate, instead of trying to certify that the model won't produce catastrophic actions. For example, Method 1 can be thought of as running a single example with a Gaussian blob around it through the model, but also tracking the "1st order" contributions that come from the Gaussian blob. If we wanted to bound the potential contributions from the Gaussian blob, our estimates would get really broad really fast, as you tend to see with interval propagation.

Although, this also comes with the opposite issue of how to know if the estimates are at all reasonable, especially when you train against them.

If your catastrophe detector involves a weak model running many many inferences, then it seems like the total number of layers is vastly larger than the number of layers in M, which seems like it will exacerbate the problems above by a lot. Any ideas for dealing with this?

I think fundamentally we just need our estimates to "not get that much worse" as things get deeper/more complicated. The main hope for why we can achieve this is that the underlying model itself will not get worse as it gets deeper/the chain of thought gets longer. This implies that there is some sort of stabilization going on, so we will need to capture the effect of this stabilization. It does seem like in order to do this, we will have to model only high level properties of this distribution, instead of trying to model things on the level of activations.

In other words, one issue with interval propagation is that it makes an assumption that can only become less true as you propagate through the model. After a few layers, you're (perhaps only implicitly) putting high probability on activations that the model will never produce. But as long as your "activation model" is behaving reasonably, then hopefully it will only become more uncertain insofar as the underlying reasoning done by the model becomes more uncertain.

What's your proposal for the distribution P0 for Method 2 (independent linear features)?

You can either train an SAE on the input distribution, or just try to select the input distribution to maximize the probability of catastrophe produced by the estimation method (perhaps starting with an SAE of the input distribution, or a random one). Probably this wouldn't work that well in practice.

Why think this is a cost you can pay? Even if we ignore the existence of C and just focus on M, and we just require modeling the correlations between any pair of layers (which of course can be broken by higher-order correlations), that is still quadratic in the number of parameters of M and so has a cost similar to training M in the first place. In practice I would assume it is a much higher cost (not least because C is so much larger than M).

Our ultimate goal is vaguely to "only pay costs that SGD had to pay to produce M". Slightly more specifically, M has a bunch of correlations between its layers. Some of these correlations were actively selected to be those particular values by SGD, and other correlations were kind of random. We want to track the ones that were selected, and just assume the other ones are random. Hopefully, since SGD was not actively manipulating those correlations, the underlying model is in some sense invariant to their precise values, and so a model that treats such correlations as random will predict the same underlying behavior as a model that models the precise values of those correlations.

↑ comment by Rohin Shah (rohinmshah) · 2024-09-24T10:26:23.825Z · LW(p) · GW(p)

Fwiw the thing that scares me is the combination of three things:

- Producing an accurate estimate requires "understanding" the input

- Our standard for the quality of estimates will be very high

- The approach is purely algorithmic (rather than e.g. using the flexible intelligence of LLMs / humans)

You presumably agree with (1) and (3). For (2), it seems like there's a lot of correlations you need to take into account for this to produce estimates that aren't wildly off:

- Underestimates of risk: These are cases where the presumption of independence neglects correlations between things that happen for catastrophe-causing events. For example, maybe a model is planning to defect when RSA-2048 is factored, and it has N different consistency checks it runs to make sure we aren't fooling it about whether it has been factored. The method has to see that there's a common cause for all the consistency checks to return 1, otherwise it will underestimate risk by a factor exponential in N. (And tbc I think even separately from more exotic examples like RSA-2048, it will be relatively common for this pattern to arise with networks, e.g. it happens any time that in reality there is a common cause that produces many pieces of evidence, and then the network aggregates those pieces of evidence to infer the common cause.)

- Overestimates of risk: These are cases where the presumption of independence neglects correlations that rule out catastrophe, and so lead to overestimates. For example, the model might be willing to make up fake instructions for creating bioweapons to help with scifi writing, but never provide real instructions. So the method needs to capture the "understanding" of which instructions are fake vs real.

I agree this isn't a proof of impossibility, since a purely algorithmic approach (SGD) produced the "understanding" in the first place, so in theory a purely algorithmic approach could still capture all that understanding to produce accurate estimates. But it does seem heuristically like you should assign a fairly low probability that this pans out.

↑ comment by Mark Xu (mark-xu) · 2024-09-24T20:14:39.904Z · LW(p) · GW(p)

Yes I agree with what you have written, and do think it’s overall not that likely that everything pans out as hoped. We do also have other hopes for how this general picture can still cohere if the specific path doesn’t work out, eg we’re open to learning some stuff empirically and adding an “algorithmic cherry on top” to produce the estimate.

comment by Fabien Roger (Fabien) · 2024-09-16T08:35:11.914Z · LW(p) · GW(p)

Do you think that catastrophe detectors will be large neural networks?

If you do, how do you build a neural network (without sampling steps nor python execution steps) that implements your major hopes for getting a weak model to supervise a strong model?

- We could give \(M_{weak}\) more time to think than \(M\), so it could examine each portion of the action in detail.

- We could allow \(M_{weak}\) to interrogate \(M\) about the function of various parts of its action, and do detailed checks for inconsistencies in \(M\)'s answers.

- We could allow \(M_{weak}\) to perform separate experiments to verify \(M\)'s claims about its actions, and to evaluate whether or not the actions have any side effects.

- \(M_{weak}\) could be conservative when evaluating \(M\)'s action, flagging part of the action it did not understand as potentially catastrophic.

If we back down from such hopes that use a lot of inference-time compute, and just use a single weak-model forward pass, then it looks to me like trusted monitoring solves most (but not all) issues that estimating tail risks targets.

If you don't, do you think that it is easy to go from NN-tail-risk estimation to a more general form of tail-risk estimation? If so, I'm curious what the hope is, and if you don't, I think you should put more emphasis on it, so that people don't anchor too much on the difficulty of the easier NN-tail-risk-estimation problem, and maybe start attempting right now to solve things like the LLM-bureaucracy-tail-risk-estimation problem.

↑ comment by Mark Xu (mark-xu) · 2024-09-16T20:27:37.348Z · LW(p) · GW(p)

I think catastrophe detectors in practice will be composed of neural networks interacting with other stuff, like scientific literature, python, etc.

With respect to the stuff quoted, I think all but "doing experiments" can be done with a neural net doing chain of thought (although not making claims about quality).

I think we're trying to solve a different problem than trusted monitoring, but I'm not that knowledgeable about what issues trusted monitoring is trying to solve. The main thing that I don't think you can do with monitoring is producing a model that you think is unlikely to result in catastrophe. Monitoring lets you do online training when you find catastrophe, but e.g. there might be no safe fallback action that allows you to do monitoring safely.

Separately, I do think it will be easy to go from "worst-case" NN-tail-risk estimation to "worst-case" more general risk estimation. I do not think it will be easy to go from "typical case" NN-tail-risk estimation to more general "typical case" risk estimation, but think that "typical case" NN-tail-risk estimation can meaningfully reduce risk despite not being able to do that generalization.

Re. more specific hopes: if your risk estimate is conducted by a model with access to tools like python, then we can try to do two things:

- vaguely get an estimate that is as good as the estimate you would get if you replaced "python" with your model's subjective distribution over the output of whatever it runs through python.

- learn some "empirical regularities" that govern how python works (as expected by your model/SGD)

(these might be the same thing?)

Another argument: one reason why doing risk estimates for NN's is hard is because the estimate can rely on facts that live in some arbitrary LLM ontology. If you want to do such an estimate for an LLM bureaucracy, some fraction of the relevant facts will live in LLM ontology and some fraction of facts will live in words passed between models. Some fraction of facts will live in a distributed way, which adds complications, but those distributed facts can only affect the output of the bureaucracy insofar as they are themselves manipulated by an LLM in that bureaucracy.

↑ comment by Fabien Roger (Fabien) · 2024-09-17T12:45:45.727Z · LW(p) · GW(p)

Thanks for your explanations!

Monitoring lets you do online training when you find catastrophe, but e.g. there might be no safe fallback action that allows you to do monitoring safely.

Good point. I agree that if you don't have time or resources to train the policy against the monitor on-the-fly, doing the catastrophe-suppression upfront is very nice.

It is worth noting that if "training against the monitor" didn't work because the monitor was not robust enough, then my understanding is that the same issue would plague the tail-risk estimation approach: for optimization against tail-risk to not get gamed, you not only need the tail-risk estimation to be robust, but you also need the catastrophe detector to be robust. Is that right?

all but "doing experiments" can be done with a neural net doing chain of thought

I would have thought there is a big gap between "doing tail-risk estimation for an NN forward pass" and "doing tail-risk estimation for an NN CoT". Argmax (the one in T=0 sampling) is not a nice non-linearity, and my guess is that none of the hopes for NN tail-risk estimation present in the post allow you to make claims about weak model CoT. If you do layer-by-layer modeling (going through n_layers x n_sampled_tokens steps), I would be surprised if you ended up being able to show sth like "after careful consideration, and despite the naive warning signs (which you need to have, otherwise the CoT is not very useful), the CoT always converges to saying that there is no catastrophe" - it's not like in single-forward-pass land where there is a clear sense in which the activations should not go in certain regions. I haven't thought about it much, so maybe I am too pessimistic about dealing with the argmax?

↑ comment by Mark Xu (mark-xu) · 2024-09-18T01:27:15.540Z · LW(p) · GW(p)

yes, you would need the catastrophe detector to be reasonably robust. Although I think it's fine if e.g. you have at least 1/million chance of catching any particular catastrophe.

I think there is a gap, but that the gap is probably not that bad (for "worst case" tail risk estimation). That is maybe because I think being able to do estimation through a single forward pass is likely already to be very hard, and to require being able to do "abstractions" over the concepts being manipulated by the forward pass. CoT seems like it will require vaguely similar structure of a qualitatively similar kind.

comment by FractalSyn · 2024-10-10T17:28:57.032Z · LW(p) · GW(p)

Nice work, I'm looking forward to a full-fledged paper.

Pardon me if the following comments are inaccurate. It's non-expert curiosity.

I really like the endeavor of estimating tail-risk, which you say you are excited about as well. Yet it seems that you quickly reduce tail-risk to catastrophe and thus the problem is reduced to a binary classification. Given the very low probability of triggering a catastrophe, the problem may be intractable. You might be making it a problem of critical threshold detection whereas tail-risk is most likely not a binary switch.

Could you instead, say, generalize the problem and consider that the risk is not necessarily catastrophic? This might also find many more applications as the topic is very relevant to a large part of AI models while, in contrast, aspiring superintelligences capable of designing doomsday machines remain a tiny proportion of applications. Finally, this would also simplify your empirical investigations.

That is, could you develop a general framework that includes X-risk but that is not limited to it?