Explaining a Math Magic Trick

post by Robert_AIZI · 2024-05-05T19:41:52.048Z · LW · GW · 10 commentsContents

Introduction Functional Analysis Summary None 10 comments

Introduction

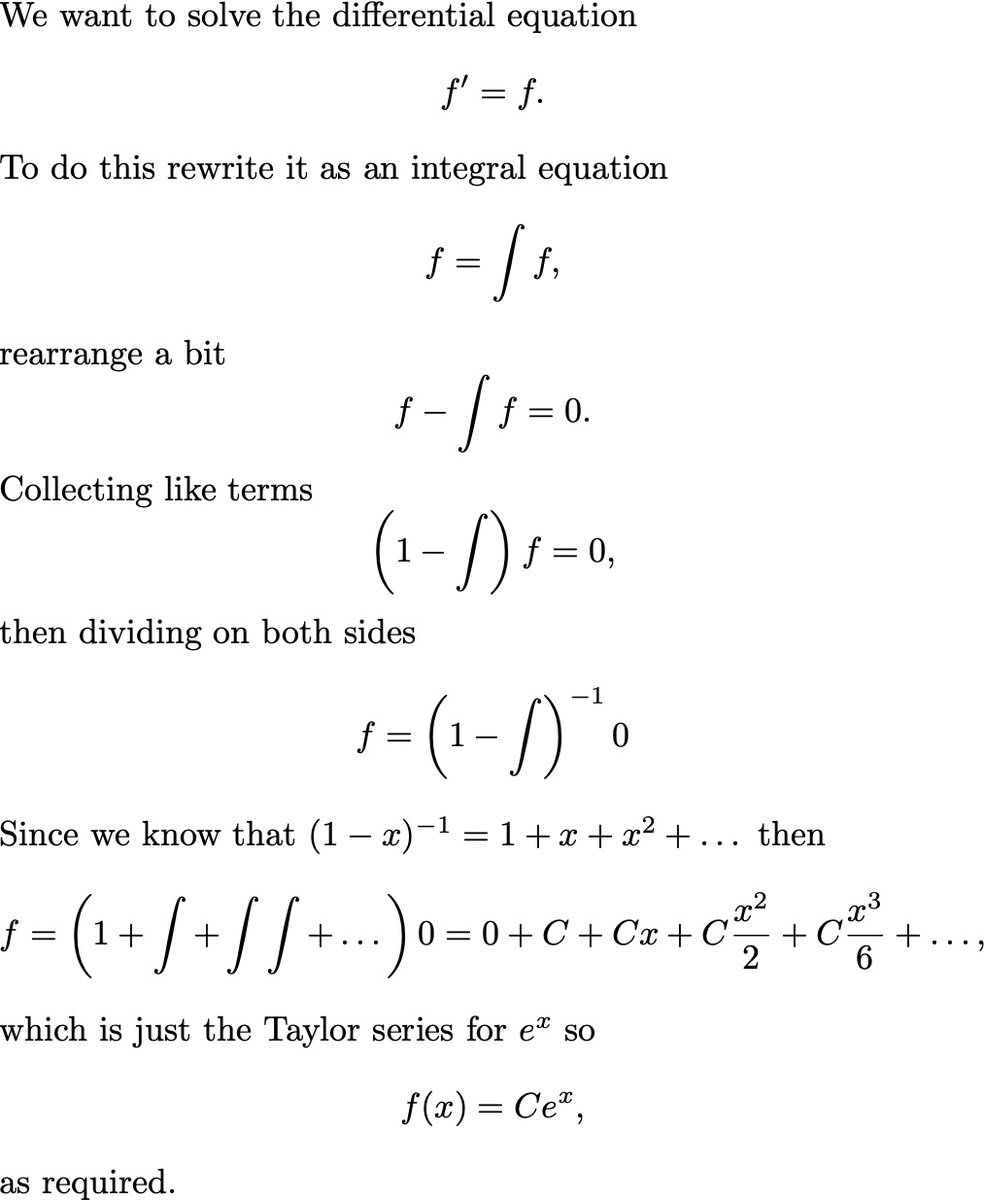

A recent popular tweet did a "math magic trick", and I want to explain why it works and use that as an excuse to talk about cool math (functional analysis). The tweet in question:

This is a cute magic trick, and like any good trick they nonchalantly gloss over the most important step. Did you spot it? Did you notice your confusion?

Here's the key question: Why did they switch from a differential equation to an integral equation? If you can use when , why not use it when ?

Well, lets try it, writing for the derivative:

So now you may be disappointed, but relieved: yes, this version fails, but at least it fails-safe, giving you the trivial solution, right?

But no, actually can fail catastrophically, which we can see if we try a nonhomogeneous equation like (which you may recall has solution ):

However, the integral version still works. To formalize the original approach: we define the function (for integral) to take in a function and produce the function defined by . This rigorizes the original trick, elegantly incorporates the initial conditions of the differential equation, and fully generalizes to solving nonhomogeneous versions like (left as an exercise to the reader, of course).

So why does fail, but works robustly? The answer is functional analysis!

Functional Analysis

Savvy readers may already be screaming that the trick for numbers only holds true for , and this is indeed the key to explaining what happens with and ! But how can we define the "absolute value" of "the derivative function" or "the integral function"?

What we're looking for is a norm, a function that generalizes absolute values. A norm is a function satisfying these properties:

- for all (positivity), and if and only if (positive-definite)

- for all and (triangle inequality)

- for all and real numbers , where denotes the usual absolute value (absolute homogeneity)

Here's an important example of a norm: fix some compact subset of , say , and for a continuous function define , which would commonly be called the -norm of . (We may use a maximum here due to the Extreme Value Theorem. In general you would use a supremum instead.) Again I shall leave it to the reader to check that this is a norm.

This example takes us halfway to our goal: we can now talk about the "absolute value" of a continuous function that takes in a real number and spits out a real number, but and take in functions and spit out functions (what we usually call an operator, so what we need is an operator norm).

Put another way, the -norm is "the largest output of the function", and this will serve as the inspiration for our operator norm. Doing the minimal changes possible, we might try to define . There are two problems with this:

- First, since is linear, you can make arbitrarily large by scaling by 10x, or 100x, etc. We can fix this by restricting the set of valid f for these purposes, just like how for the example restricted the inputs of to the compact set . Unsurprisingly nice choice of set to restrict to is the "unit ball" of functions, the set of functions with .

- Second, we must bid tearful farewell to the innocent childhood of maxima, and enter the liberating adulthood of suprema. This is necessary since ranges over the infinite-dimensional vector space of continuous functions, so the Heine-Borel theorem no longer guarantees the unit ball is compact, and therefore the extreme value theorem no longer guarantees that we will attain a maximum.

So the proper definition of the norm of and are:

(and you can define similar norms for any linear operator, including , etc.) A good exercise is to show these equivalent definitions of the operator norm for any linear function L:

So another way of thinking of the operator norm is the maximum stretching factor of the linear operator. The third definition also motivates the terminology of bounded linear operators: each such is a bound on the operator , and the least such bound is the norm. Fun exercise: show that a linear operator is bounded if and only if it is continuous (with respect to the correct topologies). Hint: you'll need to work in infinite dimensional spaces here, because any finite-dimensional linear operator must be bounded.

Now let's actually compute these norms! For , remember that our -norm is defined over the interval . First observe that for the constant function , , so . Thus . To show that this is indeed the maximum we use the triangle inequality for integrals:

So we have shown ! Put a pin in that while we check .

For , we have a problem: for any positive number , . In other words, can stretch functions by any amount, so it has no norm, or we'd write (and I promise this is a failure of , not of our definitions). Put another way, is not bounded as a linear operator, since it can stretch functions by an arbitrary amount.

But now let's return to . We said that (if we're defining it relative to the -norm on ), but isn't only true when ? For real numbers, yes, but for operators, something magical happens: ! (Its like there's a whole algebra of these operators...)

In fact, you can show that assumes its maximum value when applied to the constant function , and hence have . Since grows faster than exponential functions, is converging to 0 quickly, so is a Cauchy sum, and it is then straightforward to show that the limit is the multiplicative inverse of . Thus, is a valid expression that you can apply to any continuous (or bounded) function on any compact set . This convergence happens regardless of the choice of the compact set, though it will happen at different rates, analogous to uniform convergence on compact sets.

Summary

- Writing for derivative and for integral, we showed that can fail, even though is always true.

- To explain this, we have to show that is fundamentally better behaved than , in a way analogous to .

- We built this up in two steps. First, we defined the -norm for real-valued functions, which lets you say how "large" those functions are. Then, we extended this to function-valued functions (operators), having to make two slight modifications along the way.

- With this machinery in place, we could show that , or we can say that is bounded. The resulting norm depends on the domain of the functions under consideration, but any compact domain is allowable. Also, since , the exact value doesn't matter since the norm of each term goes to 0.

- Since sufficiently quickly, we can say that is Cauchy as a sequence of operators. In other words, if you apply the partial sums as operators to any function , the functions will converge with respect to the -norm. Writing for the function they converge to, it follows that , so we may write as a statement about linear operators.

- In contrast, is unbounded as an operator, meaning . Thus algebra tricks like will break down if you put in the wrong function .

10 comments

Comments sorted by top scores.

comment by Dacyn · 2024-05-06T21:11:35.471Z · LW(p) · GW(p)

This doesn't completely explain the trick, though. In the step where you write f=(1-I)^{-1} 0, if you interpret I as an operator then you get f=0 as the result. To get f=Ce^x you need to have f=(1-I)^{-1} C in that step instead. You can get this by replacing \int f by If+C at the beginning.

Replies from: Robert_AIZI↑ comment by Robert_AIZI · 2024-05-07T03:33:15.599Z · LW(p) · GW(p)

Ah sorry, I skipped over that derivation! Here's how we'd approach this from first principals: to solve f=Df, we know we want to use the (1-x)=1+x+x^2+... trick, but now know that we need x=I instead of x=D. So that's why we want to switch to an integral equation, and we get

f=Df

If=IDf = f-f(0)

where the final equality is the fundamental theorem of calculus. Then we rearrange:

f-If=f(0)

(1-I)f=f(0)

and solve from there using the (1-I)=1+I+I^2+... trick! What's nice about this is it shows exactly how the initial condition of the DE shows up.

comment by notfnofn · 2024-05-06T17:50:57.237Z · LW(p) · GW(p)

Here's a puzzle I came up with in undergrad, based on this idea:

Let be a function with nice derivatives and anti-derivatives (like exponentials, sine, or cosine) and be a polynomial. Express the th anti-derivative of in terms of derivatives and anti-derivatives of and .

Can provide link to a post on r/mathriddles with the answer in the comments upon request

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2024-05-07T03:25:05.238Z · LW(p) · GW(p)

Use integration by parts:

Then is another polynomial (of smaller degree), and is another "nice" function, so we recurse.

↑ comment by notfnofn · 2024-05-07T03:29:38.913Z · LW(p) · GW(p)

This is true, but I'm looking for an explicit, non-recursive formula that needs to handle the general case of the kth anti-derivative (instead of just the first).

The solution involves doing something funny with formal power series, like in this post.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2024-05-07T04:58:23.060Z · LW(p) · GW(p)

Heh, sure.

Promote from a function to a linear operator on the space of functions, . The action of this operator is just "multiply by ". We'll similarly define meaning to multiply by the first, second integral of , etc.

Observe:

Now we can calculate what we get when applying times. The calculation simplifies when we note that all terms are of the form . Result:

Now we apply the above operator to :

The sum terminates because a polynomial can only have finitely many derivatives.

↑ comment by notfnofn · 2024-05-07T13:12:04.374Z · LW(p) · GW(p)

Very nice! Notice that if you write as , and play around with binomial coefficients a bit, we can rewrite this as:

which holds for as well, in which case it becomes the derivative product rule. This also matches the formal power series expansion of , which one can motivate directly

(By the way, how do you spoiler tag?)

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2024-05-07T16:07:05.757Z · LW(p) · GW(p)

Oh, very cool, thanks! Spoiler tag in markdown is:

:::spoiler

text here

:::

comment by Donald Hobson (donald-hobson) · 2024-05-13T17:27:48.159Z · LW(p) · GW(p)

You can make work out, if you are prepared to make your mathematics even more deranged.

So lets look at

Think of the not as but as some infinitesimal times some unknown function .

If that function is then we get which is finite, so multiplied by it becomes infinitesimal.

If then we get and as we know because

So this case is the same as before.

But for we get which doesn't converge. The infinite largeness of this sum cancels with the infinitesimally small size of (Up to an arbitrary finite constant).

So

Great. Now lets apply the same reasoning to

. First note that this is infinite, it's , so undefined. Can we make this finite. Well think of as actually being and in this case, take

For the final term, the smallness of epsilon counteracts having to sum to infinity. For the first and middle term, the sum is

Which is

Now

So we have

The first term is negligible. So

Note that the can be ignored, because we have for arbitrary (finite) C as before.

Now is big, but it's probably less infinite than somehow. Let's just group it into the and hope for the best.

comment by quiet_NaN · 2024-05-10T16:32:09.536Z · LW(p) · GW(p)

Edit: looks like was already raised by Dacyn and answered to my satisfaction by Robert_AIZI. Correctly applying the fundamental theorem of calculus will indeed prevent that troublesome zero from appearing in the RHS in the first place, which seems much preferable to dealing with it later.

My real analysis might be a bit rusty, but I think defining I as the definite integral breaks the magic trick.

I mean, in the last line of the 'proof', gets applied to the zero function.

Any definitive integral of the zero function is zero, so you end up with f(x)=0, which is much less impressive.

More generally, asking the question Op(f)=0 for any invertable linear operator Op is likely to set yourself up for disappointment. Since the trick relies on inverting an operator, we might want to use a non-linear operator.

where C is some global constant might be better. (This might affect the radius of convergence of that Taylor series, do not use for production yet!)

This should result in... uhm... ?

Which is a lot more work to reorder than the original convention used in the 'proof' where all the indefinite integrals of the zero function are conveniently assumed to be the same constant, and all other indefinite integrals conveniently have integration constants of zero.

Even if we sed s/C// and proclaim that should be small (e.g. compared to x) and we are only interested in the leading order terms, this would not work. What one would have to motivate is throwing everything but the leading power of x out for every evaluation, then later meticulously track these lower order terms in the sum to arrive at the Taylor series of the exponential.