Posts

Comments

Update - consulting went well. He said he was happy with it and got a lot of useful stuff. I was upfront with the fact that I just made up the $15 an hour and might change it, asked him what he'd be happy with, he said it's up to me, but didn't seem bothered at all at the price potentially changing.

I was upfront about the stuff I didn't know and was kinda surprised at how much I was able to contribute, even knowing that I underestimate my technical knowledge because I barely know how to code.

For AI Safety funders/regranters - e.g. Open Phil, Manifund, etc:

It seems like a lot of the grants are swayed by 'big names' being on there. I suggest making anonymity compulsary if you want to more merit based funding, that explores wider possibilities and invests in more upcoming things.

Treat it like a Science rather than the Bragging Competition it currently is.

A Bias Pattern atm seems to be that the same people get funding, or recommended funding by the same people, leading to the number of innovators being very small, or growing much more slowly than if the process was anonymised.

Also, ask people seeking funding to make specific, unambiguous, easily falsiable predictions of positive outcomes from their work. And track and follow up on this!

Yeah, a friend told me this was low - I'm just scared of asking for money rn I guess.

I do see people who seem very incompetent getting paid as consultants, so I guess I can charge for more. I'll see how much my time gets eaten by this and how much money I need. I want to buy some gpus, hopefully this can help.

So, apparently, I'm stupid. I could have been making money this whole time, but I was scared to ask for it

i've been giving a bunch of people and businesses advice on how to do their research and stuff. one of them messaged me, i was feeling tired and had so many other things to do. said my time is busy.

then thought fuck it, said if they're ok with a $15 an hour consulting fee, we can have a call. baffled, they said yes.

then realized, oh wait, i have multiple years of experience now leading dev teams, ai research teams, organizing research hackathons and getting frontier research done.

wtf

I think he would lie, or be deceptive in a way that's not technically lying, but has the same benefits to him, if not more.

i earnt more from working at a call center for about 3 months than i have in 2+ years of working in ai safety.

And i've worked much harder in this than I did at the call center

I downvoted because it seems obviously wrong and irrelevant to me.

I asked because I'm pretty sure that I'm being badly wasted (i.e. I could be making much more substantial contributions to AI safety),

I think this is the case for most in AI Safety rn

And by the way, your brainchild AI-Plans is a pretty cool resource. I can see it being useful for e.g. a frontier AI organization which thinks they have an alignment plan, but wants to check the literature to know what other ideas are out there.

Thanks! Doing a bunch of stuff atm, to make it easier to use and a larger userbase.

If I knew the specific bs, I'd be better at making successful applications and less intensely frustrated.

Could it be that meditation is doing some of the same job as sleep? I'd be curious what the amount of time spent meditating vs amount of sleep need reduced.

Could also reduce unrest/time waiting to sleep.

all of the above, then averaged :p

prob not gonna be relatable for most folk, but i'm so fucking burnt out on how stupid it is to get funding in ai safety. the average 'ai safety funder' does more to accelerate funding for capabilities than safety, in huge part because what they look for is Credentials and In-Group Status, rather than actual merit.

And the worst fucking thing is how much they lie to themselves and pretend that the 3 things they funded that weren't completely in group, mean that they actually aren't biased in that way.

At least some VCs are more honest that they want to be leeches and make money off of you.

the average ai safety funder does more to accelerate capabilities than they do safety, in part due to credentialism and looking for in group status.

this runs into the "assumes powerful ai will be low/non agentic" fallacy

or "assumes ai's that can massively assist in long horizon alignment research will be low/non agentic"

"Short Timelines means the value of Long Horizon Research is prompting future AIs"

Would be a more accurate title for this, imo

In sixth form, I wore a suit for 2 years. Was fun! Then, got kinda bored of suits

Why does it seem very unlikely?

The companies being merged and working together seems unrealistic.

the fact that good humans have been able to keep rogue bad humans more-or-less under control

Isn't stuff like the transatlantic slave trade, genocide of native americans, etc evidence that the amount isn't sufficient??

pauseai, controlai, etc, are doing this

Helps me decide which research to focus on

Both. Not sure, its something like lesswrong/EA speak mixed with the VC speak.

What I liked about applying for VC funding was the specific questions.

"How is this going to make money?"

"What proof do you have this is going to make money"

and it being clear the bullshit that they wanted was numbers, testimonials from paying customers, unambiguous ways the product was actually better, etc. And then standard bs about progress, security, avoiding weird wibbly wobbly talk, 'woke', 'safety', etc.

With Alignment funders, they really obviously have language they're looking for as well, or language that makes them more and less willing to put more effort into understanding the proposal. Actually, they have it more than the VCs. But they act as if they don't.

it's so unnecessarily hard to get funding in alignment.

they say 'Don't Bullshit' but what that actually means is 'Only do our specific kind of bullshit'.

and they don't specify because they want to pretend that they don't have their own bullshit

I would not call this a "Guide".

It's more a list of recommendations and some thoughts on them.

What observations would change your mind?

You can split your brain and treat LLMs differently, in a different language. Rather, I can and I think most people could as well

Ok, I want to make that at scale. If multiple people have done it and there's value in it, then there is a formula of some kind.

We can write it down, make it much easier to understand unambiguously (read: less unhelpful confusion about what to do or what the writer meant and less time wasted figuring that out) than any of the current agent foundations type stuff.

I'm extremely skeptical that needing to hear a dozen stories dancing around some vague ideas of a point and then 10 analogies (exagerrating to get emotions across) is the best we can do.

regardless of if it works, I think it's disrespectful for being manipulative at worst and wasting the persons time at best.

You can just say the actual criticism in a constructive way. Or if you don't know how to, just ask - "hey I have some feedback to give that I think would help, but I don't know how to say it without it potentially sounding bad - can I tell you and you know I don't dislike you and I don't mean to be disrespectful?" and respect it if they say no, they're not interested.

yup.

Multiple talented researchers I know got into alignment because of PauseAI.

You can also give them the clipboard and pen, works well

in general, when it comes to things which are the 'hard part of alignment', is the crux

```

a flawless method of ensuring the AI system is pointed at and will always continue to be pointed at good things

```

?

the key part being flawless - and that seeming to need a mathematical proof?

Trying to put together a better explainer for the hard part of alignment, while not having a good math background https://docs.google.com/document/d/1ePSNT1XR2qOpq8POSADKXtqxguK9hSx_uACR8l0tDGE/edit?usp=sharing

Please give feedback!

Make the (!aligned!) AGI solve a list of problems, then end all other AIs, convince (!harmlessly!) all humans to never make another AI, in a way that they will pass down to future humans, then end itself.

Thank you for sharing negative results!!

Sure? I agree this is less bad than 'literally everyone dying and that's it', assuming there's humans around, living, still empowered, etc in the background.

I was saying overall, as a story, I find it horrifying, especially contrasting with how some seem to see it utopic.

Sure, but it seems like everyone died at some point anyway, and some collective copies of them went on?

I don't think so. I think they seem to be extremely lonely and sad and the AIs are the only way for them to get any form of empowerment. And each time they try to inch further with empowering themselves with the AIs, it leads to the AI actually getting more powerful and themselves only getting a brief moment of more power, but ultimately degrading in mental capacity. And needing to empower the AI more and more, like an addict needing an ever greater high. Until there is nothing left for them to do, but Die and let the AI become the ultimate power.

- I don't particularly care if some non human semisentients manage to be kind of moral/good at coordinating, if it came at what seems to be the cost of all human life.

Even if offscreen all of humanity didn't die, these people dying, killing themselves and never realizing what's actually happening is still insanely horrific and tragic.

How is this optimistic.

Oh yes. It's extremely dystopian. And extremely lonely, too. Rather than having a person, actual people around him to help, his only help comes from tech. It's horrifyingly lonely and isolated. There is no community, only tech.

Also, when they died together, it was horrible. They literally offloaded more and more of themselves into their tech until they were powerless to do anything but die. I don't buy the whole 'the thoughts were basically them' thing at all. It was at best, some copy of them.

There can be made an argument for it qualitatively being them, but quantitatively, obviously not.

A few months later, he and Elena decide to make the jump to full virtuality. He lies next to Elena in the hospital, holding her hand, as their physical bodies drift into a final sleep. He barely feels the transition

this is horrifying. Was it intentionally made that way?

Thoughts on this?

### Limitations of HHH and other Static Dataset benchmarks

A Static Dataset is a dataset which will not grow or change - it will remain the same. Static dataset type benchmarks are inherently limited in what information they will tell us about a model. This is especially the case when we care about AI Alignment and want to measure how 'aligned' the AI is.

### Purpose of AI Alignment Benchmarks

When measuring AI Alignment, our aim is to find out exactly how close the model is to being the ultimate 'aligned' model that we're seeking - a model whose preferences are compatible with ours, in a way that will empower humanity, not harm or disempower it.

### Difficulties of Designing AI Alignment Benchmarks

What preferences those are, could be a significant part of the alignment problem. This means that we will need to frequently make sure we know what preferences we're trying to measure for and re-determine if these are the correct ones to be aiming for.

### Key Properties of Aligned Models

These preferences must be both robustly and faithfully held by the model:

Robustness:

- They will be preserved over unlimited iterations of the model, without deterioration or deprioritization.

- They will be robust to external attacks, manipulations, damage, etc of the model.

Faithfulness:

- The model 'believes in', 'values' or 'holds to be true and important' the preferences that we care about .

- It doesn't just store the preferences as information of equal priority to any other piece of information, e.g. how many cats are in Paris - but it holds them as its own, actual preferences.

Comment on the Google Doc here: https://docs.google.com/document/d/1PHUqFN9E62_mF2J5KjcfBK7-GwKT97iu2Cuc7B4Or2w/edit?usp=sharing

This is for the AI Alignment Evals Hackathon: https://lu.ma/xjkxqcya by AI-Plans

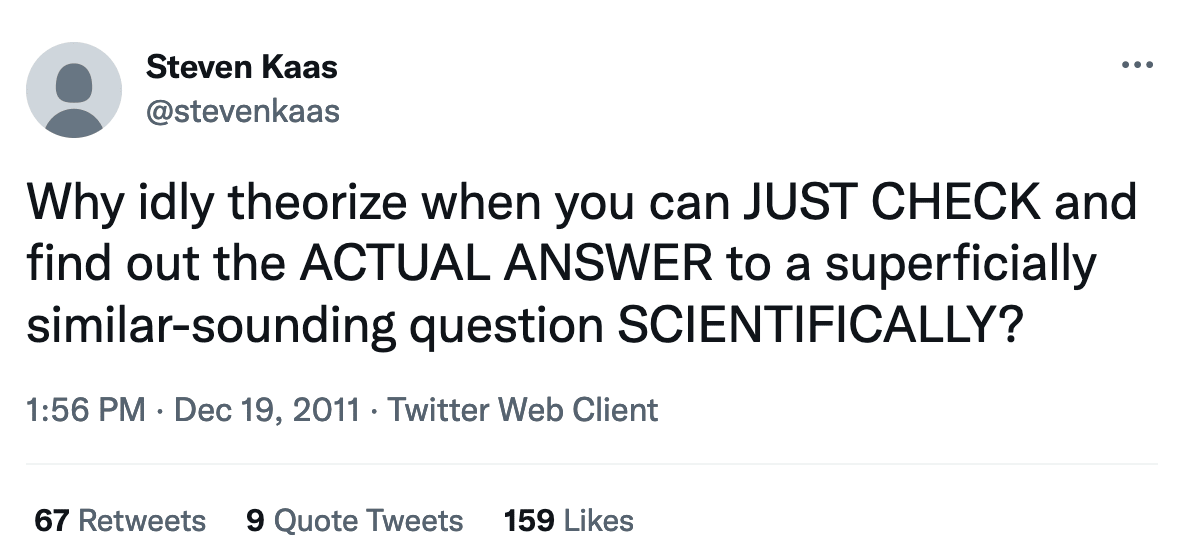

this might basically be me, but I'm not sure how exactly to change for the better. theorizing seems to take time and money which i don't have.

Thinking about judgement criteria for the coming ai safety evals hackathon (https://lu.ma/xjkxqcya )

These are the things that need to be judged:

1. Is the benchmark actually measuring alignment (the real, scale, if we dont get this fully right right we die, problem)

2. Is the way of Deceiving the benchmark to get high scores actually deception, or have they somehow done alignment?

Both of these things need:

- a strong deep learning & ml background (ideally, muliple influential papers where they're one of the main authors/co-authors, or doing ai research at a significant lab, or they have, in the last 4 years)

- a good understanding of what the real alignment problem actually means - can judge this by looking at their papers, activity on lesswrong, alignmentforum, blog, etc

- a good understanding of evals/benchmarks (1 great or two pretty good papers/repos/works on this, ideally for alignment)

Do these seem loose? Strict? Off base?

I'm looking for feedback on the hackathon page

mind telling me what you think?

https://docs.google.com/document/d/1Wf9vju3TIEaqQwXzmPY--R0z41SMcRjAFyn9iq9r-ag/edit?usp=sharing

Intelligence is computation. It's measure is success. General intelligence is more generally successful.

We're doing this on https://ai-plans.com !

Personally, I think o1 is uniquely trash, I think o1-preview was actually better. Getting on average, better things from deepseek and sonnet 3.5 atm.