AI Regulation is Unsafe

post by Maxwell Tabarrok (maxwell-tabarrok) · 2024-04-22T16:37:55.431Z · LW · GW · 41 comments

This is a link post for https://www.maximum-progress.com/p/ai-regulation-is-unsafe


Concerns over AI safety and calls for government control over the technology are highly correlated but they should not be.

There are two major forms of AI risk: misuse and misalignment. Misuse risks come from humans using AIs as tools in dangerous ways. Misalignment risks arise if AIs take their own actions at the expense of human interests.

Governments are poor stewards for both types of risk. Misuse regulation is like the regulation of any other technology. There are reasonable rules that the government might set, but omission bias and incentives to protect small but well-organized groups at the expense of everyone else will lead to lots of costly ones too. Misalignment regulation is not in the Overton window for any government. Governments do not have strong incentives to care about long-term, global costs or benefits, and they do have strong incentives to push the development of AI forward for their own purposes.

Noticing that AI companies put the world at risk is not enough to support greater government involvement in the technology. Government involvement is likely to exacerbate the most dangerous parts of AI while limiting the upside.

Default government incentives

Governments are not social welfare maximizers. Government actions are an amalgam of the actions of thousands of personal welfare maximizers who are loosely aligned and constrained. In general, governments have strong incentives for myopia, violent competition with other governments, and negative-sum transfers to small, well-organized groups. These exacerbate existential risk and limit potential upside.

The vast majority of the costs of existential risk occur outside of the borders of any single government and beyond the election cycle for any current decision maker, so we should expect governments to ignore them.

We see this expectation fulfilled in governments' reactions to other long-term or global externalities, e.g., debt and climate change. Governments around the world are happy to impose trillions of dollars in direct cost and substantial default risk on future generations because costs and benefits to these future generations hold little sway in the next election. Similarly, governments spend billions subsidizing fossil fuel production and ignore potential solutions to global warming, like a carbon tax or geoengineering, because the long-term or extraterritorial costs and benefits of climate change do not enter their optimization function.

AI risk is no different. Governments will happily trade off global, long-term risk for national, short-term benefits. The most salient way they will do this is through military competition. Government regulations on private AI development will not stop them from racing to integrate AI into their militaries. Autonomous drone warfare is already happening in Ukraine and Israel. The US military has contracts with Palantir and Anduril, which use AI to augment military strategy or to power weapons systems. Governments will want to use AI for predictive policing, propaganda, and other forms of population control.

The case of nuclear tech is informative. This technology was strictly regulated by governments, but they still raced with each other and used the technology to create the most existentially risky weapons mankind has ever seen. Simultaneously, they cracked down on civilian use. Now, we’re in a world where all the major geopolitical flashpoints have at least one side armed with nuclear weapons and where the nuclear power industry is worse than stagnant.

Governments' military ambitions mean that their regulation will preserve the most dangerous misuse risks from AI. They will also push the AI frontier and train larger models, so we will still face misalignment risks. These may be exacerbated if governments are less interested or skilled in AI safety techniques. Government control over AI development is likely to slow down AI progress overall. Accepting the premise that this is good is not sufficient to invite regulation, though, because government control will cause a relative speed-up of the most dystopian uses for AI.

In the short term, governments are primarily interested in protecting well-organized groups from the effects of AI, e.g., copyright holders, drivers' unions, and other professional lobby groups. Here’s a summary of last year's congressional AI hearing from Zvi Mowshowitz.

The Senators [LW · GW] care deeply about the types of things politicians care deeply about. Klobuchar asked about securing royalties for local news media. Blackburn asked about securing royalties for Garth Brooks. Lots of concern about copyright violations, about using data to train without proper permission, especially in audio models. Graham focused on Section 230 for some reason, despite numerous reminders it didn’t apply, and Hawley talked about it a bit too.

This kind of regulation has less risk than misaligned killbots, but it does limit the potential upside from the technology.

Private incentives for AI development are far from perfect. There are still large externalities and competitive dynamics that may push progress too fast. But identifying this problem is not enough to justify government involvement. We need a reason to believe that governments can reliably improve the incentives facing private organizations. Governments' strong incentives for myopia, military competition, and rent-seeking make it difficult to find such a reason.

Negative Spillovers

The default incentives of both governments and profit seeking companies are imperfect. But the whole point of AI safety advocacy is to change these incentives or to convince decision makers to act despite them, so you can buy that governments are imperfect and still support calls for AI regulation. The problem with this is that even extraordinarily successful advocacy in government can be redirected into opposite and catastrophic effects.

Consider Sam Altman’s testimony before Congress last May. No one was convinced of anything except the power of AI fear for their own pet projects. Here is a characteristic quote:

Senator Blumenthal addressing Sam Altman: I think you have said, in fact, and I’m gonna quote, ‘Development of superhuman machine intelligence is probably the greatest threat to the continued existence of humanity.’ You may have had in mind the effect on jobs. Which is really my biggest nightmare in the long term.

A reasonable upper bound for the potential of AI safety lobbying is the environmental movement of the 1970s. It was extraordinarily effective. Its advocacy led to a series of laws, including the National Environmental Policy Act (NEPA), that are among the most comprehensive and powerful regulations ever passed. These laws are not clearly in service of some pre-existing government incentive. Indeed, they regulate the federal government more strictly than anything else and often get in its way. The cultural and political advocacy of the environmental movement made a large counterfactual impact with laws that still have massive influence today.

This success has turned sour, though, because the massive influence of these laws is now a massive barrier to decarbonization. NEPA has exemptions for oil and gas but not for solar or wind farms. Exemptions for highways but not high-speed rail. The costs of compliance with NEPA’s bureaucratic proceduralism hurt Terraform Industries a lot more than Shell. The standard government incentives for concentrating benefits to large legible groups and diffusing costs to large groups and the future redirected the political will and institutional power of the environmental movement into some of the most environmentally damaging and economically costly laws ever.

AI safety advocates should not expect to do much better than this, especially since many of their proposals are specifically based on permitting AI models like NEPA permits construction projects.

Belief in the potential for existential risk from AI does not imply that governments should have greater influence over its development. Governments' incentives make them misaligned with the goal of reducing existential risk. They are not rewarded or punished for costs or benefits outside of their borders or term limits, and this is where nearly all of the importance of existential risk lies. Governments are rewarded for rapid development of military technology that empowers them over their rivals. They are also rewarded for providing immediate benefits to well-organized, legible groups, even when these rewards come at great expense to larger or more remote groups of people. These incentives exacerbate the worst misuse and misalignment risks of AI and limit the potential economic upside.

41 comments

Comments sorted by top scores.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-22T19:36:46.640Z · LW(p) · GW(p)

I think this post doesn't engage with the arguments for anti-x-risk AI regulation, to a degree that I am frustrated by. Surely you know that the people you are talking to -- the proponents of anti-x-risk AI regulation -- are well aware of the flaws of governments generally and the way in which regulation often stifles progress or results in regulatory capture that is actively harmful to whatever cause it was supposed to support. Surely you know that e.g. Yudkowsky is well aware of all this... right?

The core argument these regulation proponents are making and have been making is "Yeah, regulation often sucks or misfires, but we have to try, because worlds that don't regulate AGI are even more doomed."

What do you think will happen, if there is no regulation of AGI? Seriously how do you think it will go? Maybe you just don't think AGI will happen for another decade or so?

↑ comment by Richard_Ngo (ricraz) · 2024-04-24T22:51:52.136Z · LW(p) · GW(p)

I don't actually think proponents of anti-x-risk AI regulation have thought very much about the ways in which regulatory capture might in fact be harmful to reducing AI x-risk. At least, I haven't seen much writing about this, nor has it come up in many of the discussions I've had (except insofar as I brought it up).

In general I am against arguments of the form "X is terrible but we have to try it because worlds that don't do it are even more doomed". I'll steal Scott Garrabrant's quote from here [? · GW]:

"If you think everything is doomed, you should try not to mess anything up. If your worldview is right, we probably lose, so our best out is the one where your worldview is somehow wrong. In that world, we don't want mistaken people to take big unilateral risk-seeking actions."

Until recently, people with P(doom) of, say, 10%, have been natural allies of people with P(doom) of >80%. But the regulation that the latter group thinks is sufficient to avoid xrisk with high confidence has, on my worldview, a significant chance of either causing x-risk from totalitarianism, or else causing x-risk via governments being worse at alignment than companies would have been. How high? Not sure, but plausibly enough to make these two groups no longer natural allies.

Replies from: akash-wasil, daniel-kokotajlo, matthew-barnett, quetzal_rainbow

↑ comment by Orpheus16 (akash-wasil) · 2024-04-25T00:58:41.196Z · LW(p) · GW(p)

I'm not sure who you've spoken to, but at least among the AI policy people who I talk to regularly (which admittedly is a subset of people who I think are doing the most thoughtful/serious work), I think nearly all of them have thought about ways in which regulation + regulatory capture could be net negative. At least to the point of being able to name the relatively "easy" ways (e.g., governments being worse at alignment than companies).

I continue to think people should be forming alliances with those who share similar policy objectives, rather than simply those who belong in the "I believe xrisk is a big deal" camp. I've seen many instances in which the "everyone who believes xrisk is a big deal belongs to the same camp" mentality has been used to dissuade people from communicating their beliefs, communicating with policymakers, brainstorming ideas that involve coordination with other groups in the world, disagreeing with the mainline views held by a few AIS leaders, etc.

The cultural pressures against policy advocacy have been so strong that it's not surprising to see folks say things like "perhaps our groups are no longer natural allies" now that some of the xrisk-concerned people are beginning to say things like "perhaps the government should have more of a say in how AGI development goes than in status quo, where the government has played ~0 role and ~all decisions have been made by private companies."

Perhaps there's a multiverse out there in which the AGI community ended up attracting govt natsec folks instead of Bay Area libertarians, and the cultural pressures are flipped. Perhaps in that world, the default cultural incentives pushed people heavily toward brainstorming ways that markets and companies could contribute meaningfully to the AGI discourse, and the default position for the "AI risk is a big deal" camp was "well obviously the government should be able to decide what happens and it would be ridiculous to get companies involved; don't be unilateralist by going and telling VCs about this stuff."

I bring up this (admittedly kinda weird) hypothetical to point out just how skewed the status quo is. One might generally be wary of government overinvolvement in regulating emerging technologies yet still recognize that some degree of regulation is useful, and that position would likely still push them to be in the "we need more regulation than we currently have" camp.

As a final note, I'll point out to readers less familiar with the AI policy world that serious people are proposing lots of regulation that is in between "status quo with virtually no regulation" and "full-on pause." Some of my personal favorite examples include: emergency preparedness (akin to the OPPR), licensing (see Romney), reporting requirements, mandatory technical standards enforced via regulators, and public-private partnerships.

Replies from: ricraz

↑ comment by Richard_Ngo (ricraz) · 2024-04-25T01:02:53.306Z · LW(p) · GW(p)

I'm not sure who you've spoken to, but at least among the people who I talk to regularly who I consider to be doing "serious AI policy work" (which admittedly is not everyone who claims to be doing AI policy work), I think nearly all of them have thought about ways in which regulation + regulatory capture could be net negative. At least to the point of being able to name the relatively "easy" ways (e.g., governments being worse at alignment than companies).

I don't disagree with this; when I say "thought very much" I mean e.g. to the point of writing papers about it, or even blog posts, or analyzing it in talks, or basically anything more than cursory brainstorming. Maybe I just haven't seen that stuff, idk.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-25T18:10:09.583Z · LW(p) · GW(p)

Hmm, I'm a bit surprised to hear you say that. I feel like I myself brought up regulatory capture a bunch of times in our conversations over the last two years. I think I even said it was the most likely scenario, in fact, and that it was making me seriously question whether what we were doing was helpful. Is this not how you remember it? Wanna hop on a call to discuss?

As for arguments of that form... I didn't say X is terrible, I said it often goes badly. If you round that off to "X is terrible" and fit it into the argument-form you are generally against, then I think to be consistent you'd have to give a similar treatment to a lot of common sense good things. Like e.g. doing surgery on a patient who seems likely to die absent treatment.

I also think we might be talking past each other re regulation. As I said elsewhere in this discussion (on the OP's blog) I am not in favor of generically increasing the amount of AI regulation in the world. I would instead advocate for something more targeted -- regulation that I actually think would really work, if implemented well. And I'm very concerned about the "if implemented well" part and have a lot to say about that too.

What are the regulations that you are concerned about, that (a) are being seriously advocated by people with P(doom) >80%, and (b) that have a significant chance of causing x-risk via totalitarianism or technical-alignment-incompetence?

↑ comment by Matthew Barnett (matthew-barnett) · 2024-04-25T00:28:10.993Z · LW(p) · GW(p)

Until recently, people with P(doom) of, say, 10%, have been natural allies of people with P(doom) of >80%. But the regulation that the latter group thinks is sufficient to avoid xrisk with high confidence has, on my worldview, a significant chance of either causing x-risk from totalitarianism, or else causing x-risk via governments being worse at alignment than companies would have been.

I agree. Moreover, a p(doom) of 10% vs. 80% means a lot for people like me who think the current generation of humans have substantial moral value (i.e., people who aren't fully committed to longtermism).

In the p(doom)=10% case, burdensome regulations that appreciably delay AI, or greatly reduce the impact of AI, have a large chance of causing the premature deaths of people who currently exist, including our family and friends. This is really bad if you care significantly about people who currently exist.

This consideration is sometimes neglected in these discussions, perhaps because it's seen as a form of selfish partiality that we should toss aside. But in my opinion, morality is allowed to be partial. Morality is whatever we want it to be. And I don't have a strong urge to sacrifice everyone I know and love for the sake of slightly increasing (in my view) the chance of the human species being preserved.

(The additional considerations of potential totalitarianism, public choice arguments, and the fact that I think unaligned AIs will probably have moral value [EA · GW], make me quite averse to very strong regulatory controls on AI.)

Replies from: daniel-kokotajlo, quetzal_rainbow

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-26T14:51:30.724Z · LW(p) · GW(p)

So, it sounds like you'd be in favor of a 1-year pause or slowdown then, but not a 10-year?

(Also, I object to your side-swipe at longtermism. According to Wikipedia, "Longtermism is the ethical view that positively influencing the long-term future is a key moral priority of our time." "A key moral priority" doesn't mean "the only thing that has substantial moral value." If you had instead dunked on classic utilitarianism, I would have agreed.)

↑ comment by Matthew Barnett (matthew-barnett) · 2024-04-26T17:53:16.538Z · LW(p) · GW(p)

So, it sounds like you'd be in favor of a 1-year pause or slowdown then, but not a 10-year?

That depends on the benefits that we get from a 1-year pause. I'd be open to the policy, but I'm not currently convinced that the benefits would be large enough to justify the costs.

Also, I object to your side-swipe at longtermism

I didn't side-swipe at longtermism, or try to dunk on it. I think longtermism is a decent philosophy, and I consider myself a longtermist in the dictionary sense as you quoted. I was simply talking about people who aren't "fully committed" to the (strong) version of the philosophy.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-26T23:07:13.545Z · LW(p) · GW(p)

OK, thanks for clarifying.

Personally I think a 1-year pause right around the time of AGI would give us something like 50% of the benefits of a 10-year pause. That's just an initial guess, not super stable. And quantitatively I think it would improve overall chances of AGI going well by double-digit percentage points at least. Such that it makes sense to do a 1-year pause even for the sake of an elderly relative avoiding death from cancer, not to mention all the younger people alive today.

↑ comment by Matthew Barnett (matthew-barnett) · 2024-04-26T23:34:48.182Z · LW(p) · GW(p)

And quantitatively I think it would improve overall chances of AGI going well by double-digit percentage points at least.

Makes sense. By comparison, my own unconditional estimate of p(doom) is not much higher than 10%, and so it's hard on my view for any intervention to have a double-digit percentage point effect.

The crude mortality rate before the pandemic was about 0.7%. If we use that number to estimate the direct cost of a 1-year pause, then this is the bar that we'd need to clear for a pause to be justified. I find it plausible that this bar could be met, but at the same time, I am also pretty skeptical of the mechanisms various people have given for how a pause will help with AI safety.

Replies from: daniel-kokotajlo, nikolas-kuhn

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-27T00:19:01.312Z · LW(p) · GW(p)

I agree that 0.7% is the number to beat for people who mostly focus on helping present humans and who don't take acausal or simulation argument stuff or cryonics seriously. I think that even if I was much more optimistic about AI alignment, I'd still think that number would be fairly plausibly beaten by a 1-year pause that begins right around the time of AGI.

What are the mechanisms people have given and why are you skeptical of them?

↑ comment by ryan_greenblatt · 2024-04-27T00:42:53.473Z · LW(p) · GW(p)

(Surely cryonics doesn't matter given a realistic action space? Usage of cryonics is extremely rare and I don't think there are plausible (cheap) mechanisms to increase uptake to >1% of population. I agree that simulation arguments and similar considerations maybe imply that "helping current humans" is either incoherent or unimportant.)

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-27T15:00:01.144Z · LW(p) · GW(p)

Good point, I guess I was thinking in that case about people who care a bunch about a smaller group of humans e.g. their family and friends.

↑ comment by Amalthea (nikolas-kuhn) · 2024-04-27T00:11:32.134Z · LW(p) · GW(p)

Somewhat of a nitpick, but the relevant number would be p(doom | strong AGI being built) (maybe contrasted with p(utopia | strong AGI)), not overall p(doom).

↑ comment by quetzal_rainbow · 2024-04-26T15:53:11.853Z · LW(p) · GW(p)

May I strongly recommend that you try to become a Dark Lord instead?

I mean, literally. Stage some small bloody civil war with an expected body count of several million, become dictator, provide everyone free insurance coverage for cryonics; it will surely be more ethical than a 10% chance of killing literally everyone, from the perspective of most ethical systems I know.

Replies from: matthew-barnett

↑ comment by Matthew Barnett (matthew-barnett) · 2024-04-26T20:34:37.519Z · LW(p) · GW(p)

I don't think staging a civil war is generally a good way of saving lives. Moreover, ordinary aging has about a 100% chance of "killing literally everyone" prematurely, so it's unclear to me what moral distinction you're trying to make in your comment. It's possible you think that:

1. Death from aging is not as bad as death from AI because aging is natural whereas AI is artificial

2. Death from aging is not as bad as death from AI because human civilization would continue if everyone dies from aging, whereas it would not continue if AI kills everyone

In the case of (1) I'm not sure I share the intuition. Being forced to die from old age seems, if anything, worse than being forced to die from AI, since it is long and drawn-out, and presumably more painful than death from AI. You might also think about this dilemma in terms of act vs. omission, but I am not convinced there's a clear asymmetry here.

In the case of (2), whether AI takeover is worse depends on how bad you think an "AI civilization" would be in the absence of humans. I recently wrote a post [EA · GW] about some reasons to think that it wouldn't be much worse than a human civilization.

In any case, I think this is simply a comparison between "everyone literally dies" vs. "everyone might literally die but in a different way". So I don't think it's clear that pushing for one over the other makes someone a "Dark Lord", in the morally relevant sense, compared to the alternative.

Replies from: nikolas-kuhn

↑ comment by Amalthea (nikolas-kuhn) · 2024-04-27T02:32:02.573Z · LW(p) · GW(p)

I think the perspective that you're missing regarding 2. is that by building AGI one is taking the chance of non-consensually killing vast amounts of people and their children for some chance of improving one's own longevity.

Even if one thinks it's a better deal for them, a key point is that you are making the decision for them by unilaterally building AGI. So in that sense it is quite reasonable to see it as an "evil" action to work towards that outcome.

Replies from: matthew-barnett

↑ comment by Matthew Barnett (matthew-barnett) · 2024-04-27T03:02:58.095Z · LW(p) · GW(p)

non-consensually killing vast amounts of people and their children for some chance of improving one's own longevity.

I think this misrepresents the scenario since AGI presumably won't just improve my own longevity: it will presumably improve most people's longevity (assuming it does that at all), in addition to all the other benefits that AGI would provide the world. Also, both potential decisions are "unilateral": if some group forcibly stops AGI development, they're causing everyone else to non-consensually die from old age, by assumption.

I understand you have the intuition that there's an important asymmetry here. However, even if that's true, I think it's important to strive to be accurate when describing the moral choice here.

Replies from: nikolas-kuhn

↑ comment by Amalthea (nikolas-kuhn) · 2024-04-27T04:14:25.158Z · LW(p) · GW(p)

- I agree that potentially the benefits can go to everyone. The point is that as the person pursuing AGI you are making the choice for everyone else.

- The asymmetry is that if you do something that creates risk for everyone else, I believe that does single you out as an aggressor? While conversely, enforcing norms that prevent such risky behavior seems justified. The fact that by default people are mortal is tragic, but doesn't have much bearing here. (You'd still be free to pursue life-extension technology in other ways, perhaps including limited AI tools).

- Ideally, of course, there'd be some sort of democratic process here that lets people in aggregate make informed (!) choices. In the real world, it's unclear what a good solution here would be. What we have right now is the big labs creating facts that society has trouble catching up with, which I think many people are reasonably uncomfortable with.

↑ comment by quetzal_rainbow · 2024-04-25T19:33:40.903Z · LW(p) · GW(p)

governments being worse at alignment than companies would have been

How exactly does the absence of regulation prevent governments from working on AI? Thanks to OpenAI/DeepMind/Anthropic, the possibility of not attracting government attention at all is already lost. If you want government to not do bad work on alignment, you should prohibit government from working on AI using, yes, government regulations.

↑ comment by Maxwell Tabarrok (maxwell-tabarrok) · 2024-04-22T20:03:26.817Z · LW(p) · GW(p)

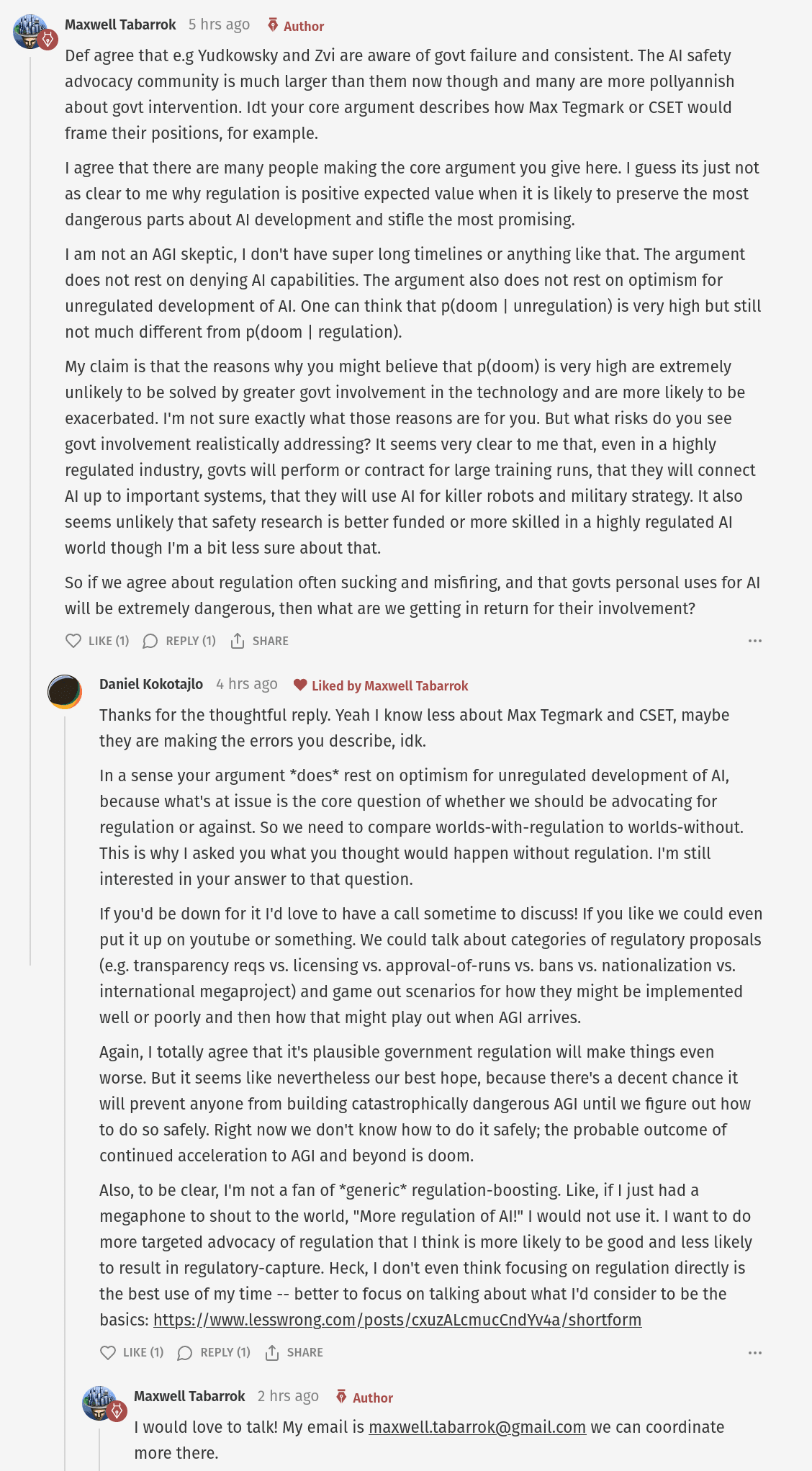

Daniel and I continue the comment thread here

https://open.substack.com/pub/maximumprogress/p/ai-regulation-is-unsafe?r=awlwu&utm_campaign=comment-list-share-cta&utm_medium=web&comments=true&commentId=54561569

↑ comment by Quadratic Reciprocity · 2024-04-23T01:40:09.787Z · LW(p) · GW(p)

From the comment thread:

I'm not a fan of *generic* regulation-boosting. Like, if I just had a megaphone to shout to the world, "More regulation of AI!" I would not use it. I want to do more targeted advocacy of regulation that I think is more likely to be good and less likely to result in regulatory capture.

What are specific regulations / existing proposals that you think are likely to be good? When people are protesting to pause AI, what do you want them to be speaking into a megaphone (if you think those kinds of protests could be helpful at all right now)?

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-23T03:31:50.838Z · LW(p) · GW(p)

Reporting requirements, especially requirements to report to the public what your internal system capabilities are, so that it's impossible to have a secret AGI project. Also reporting requirements of the form "write a document explaining what capabilities, goals/values, constraints, etc. your AIs are supposed to have, and justifying those claims, and submit it to public scrutiny." So e.g. if your argument is 'we RLHF'd it to have those goals and constraints, and that probably works because there's No Evidence of deceptive alignment or other speculative failure modes' then at least the world can see that no, you don't have any better arguments than that.

That would be my minimal proposal. My maximal proposal would be something like "AGI research must be conducted in one place: the United Nations AGI Project, with a diverse group of nations able to see what's happening in the project and vote on each new major training run and have their own experts argue about the safety case etc."

There's a bunch of options in between.

I'd be quite happy with an AGI Pause if it happened, I just don't think it's going to happen, the corporations are too powerful. I also think that some of the other proposals are strictly better while also being more politically feasible. (They are more complicated and easily corrupted though, which to me is the appeal of calling for a pause. Harder to get regulatory-captured than something more nuanced.)

↑ comment by Rudi C (rudi-c) · 2024-04-23T17:14:05.690Z · LW(p) · GW(p)

A core disagreement is over “more doomed.” Human extinction is preferable to a totalitarian stagnant state. I believe that people pushing for totalitarianism have never lived under it.

Replies from: Wei_Dai, daniel-kokotajlo, MondSemmel

↑ comment by Wei Dai (Wei_Dai) · 2024-04-25T03:54:39.752Z · LW(p) · GW(p)

I've arguably lived under totalitarianism (depending on how you define it), and my parents definitely have and told me many stories about it [LW · GW]. I think AGI increases risk of totalitarianism [LW · GW], and support a pause in part to have more time to figure out how to make the AI transition go well in that regard.

Replies from: rudi-c, daniel-kokotajlo

↑ comment by Rudi C (rudi-c) · 2024-04-26T19:30:27.787Z · LW(p) · GW(p)

AGI might increase the risk of totalitarianism. OTOH, a shift in the attack-defense balance could potentially boost the veto power of individuals, so it might also work as a deterrent or a force for anarchy.

This is not the crux of my argument, however. The current regulatory Overton window seems to heavily favor a selective pause of AGI, such that centralized powers will continue ahead, even if slower due to their inherent inefficiencies. Nuclear development provides further historical evidence for this. Closed AGI development will almost surely lead to a dystopic totalitarian regime. The track record of LessWrong is not rosy here; the "Pivotal Act" still seems to be in popular favor, and OpenAI has significantly accelerated closed AGI development while lobbying to close off open research and pioneering the new "AI Safety" that has been nothing but censorship and double-think as of 2024.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-27T15:34:45.739Z · LW(p) · GW(p)

I think most people pushing for a pause are trying to push against a 'selective pause' and for an actual pause that would apply to the big labs who are at the forefront of progress. I agree with you, however, that the current Overton window seems unfortunately centered around some combination of evals-and-mitigations that is, IMO, at high risk of regulatory capture (i.e. resulting in a selective pause that doesn't apply to the big corporations that most need to pause!). My disillusionment about this is part of why I left OpenAI.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-26T14:52:37.826Z · LW(p) · GW(p)

Big +1 to that. Part of why I support (some kinds of) AI regulation is that I think they'll reduce the risk of totalitarianism, not increase it.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-04-23T18:41:58.771Z · LW(p) · GW(p)

Who is pushing for totalitarianism? I dispute that AI safety people are pushing for totalitarianism.

Replies from: RussellThor↑ comment by RussellThor · 2024-04-24T10:05:43.646Z · LW(p) · GW(p)

There is a belief among some people that our current tech level will lead to totalitarianism by default. The argument is that with 1970s tech the Soviet Union collapsed, however with 2020 computer tech (not needing GenAI) it would not. If a democracy goes bad, then unlike before, there is no coming back. For example, Xinjiang: Stalin would have liked to do something like that but couldn't. When you add LLM AI on everyone's phone + video/speech recognition, organized protest is impossible.

Not sure if Rudi C is making this exact argument. Anyway, if we get mass centralization/totalitarianism worldwide, then S-risk is a pretty reasonable concern. AI will be developed under such circumstances to oppress 99% of the population, and then 100%, with extinction being the better outcome.

I find it hard to know how likely this is. It is clear to me that tech has enabled totalitarianism, but it is hard to give odds.

Replies from: Wei_Dai↑ comment by Wei Dai (Wei_Dai) · 2024-04-25T04:02:04.971Z · LW(p) · GW(p)

The argument is that with 1970s tech the Soviet Union collapsed, however with 2020 computer tech (not needing GenAI) it would not.

I note that China is still doing market economics, and nobody is trying (or even advocating, AFAIK) some very ambitious centrally planned economy using modern computers, so this seems like pure speculation? Has someone actually made a detailed argument about this, or at least has the agreement of some people with reasonable economics intuitions?

Replies from: RussellThor↑ comment by RussellThor · 2024-04-25T05:54:26.567Z · LW(p) · GW(p)

No, I have not seen a detailed argument about this, just the claim that once centralization goes past a certain point there is no coming back. I would like to see such an argument/investigation as I think it is quite important. Yuval Harari does say something similar in "Sapiens".

↑ comment by MondSemmel · 2024-04-23T18:09:33.771Z · LW(p) · GW(p)

Flippant response: people pushing for human extinction have never been dead under it, either.

comment by cousin_it · 2024-04-23T10:53:39.462Z · LW(p) · GW(p)

You're saying governments can't address existential risk, because they only care about what happens within their borders and term limits. And therefore we should entrust existential risk to firms, which only care about their own profit in the next quarter?!

Replies from: maxwell-tabarrok↑ comment by Maxwell Tabarrok (maxwell-tabarrok) · 2024-04-23T20:06:13.004Z · LW(p) · GW(p)

Firms are actually better than governments at internalizing costs across time. Asset values incorporate the potential future flows. For example, consider a retiring farmer. You might think that they have an incentive to run the soil dry in their last season since they won't be using it in the future, but this would hurt the sale value of the farm. An elected representative whose term limit is coming up wouldn't have the same incentives.

Of course, firms' incentives are very misaligned in important ways. The question is: can we rely on government to improve these incentives?

↑ comment by cousin_it · 2024-06-03T13:58:53.981Z · LW(p) · GW(p)

An elected representative whose term limit is coming up wouldn't have the same incentives.

I think this proves too much. If elected representatives only follow self-interest, then democracy is pointless to begin with, because any representative once elected will simply obey the highest bidder. Democracy works to the extent that people vote for representatives who represent the people's interests, which do reach beyond the term limit.

comment by Seth Herd · 2024-04-24T00:17:31.327Z · LW(p) · GW(p)

Who is downvoting posts like this? Please don't!

I see that this is much lower than the last time I looked, so it's had some, probably large, downvotes.

A downvote means "please don't write posts like this, and don't read this post".

Daniel Kokotajlo disagreed with this post, but found it worth engaging with. Don't you want discussions with those you disagree with? Downvoting things you don't agree with says "we are here to preach to the choir. Dissenting opinions are not welcome. Don't post until you've read everything on this topic". That's a way to find yourself in an echo chamber. And that's not going to save the world or pursue truth.

I largely disagree with the conclusions and even the analytical approach taken here, but that does not make this post net-negative. It is net-positive. It could be argued that there are better posts on this topic one should read, but there certainly haven't been this week. And I haven't heard these same points made more cogently elsewhere. This is net-positive unless I'm misunderstanding the criteria for a downvote.

I'm confused why we don't have a "disagree" vote on top-level posts to draw off the inarticulate disgruntlement that causes people to downvote high-effort, well-done work.

Replies from: nikolas-kuhn↑ comment by Amalthea (nikolas-kuhn) · 2024-04-24T01:49:25.849Z · LW(p) · GW(p)

I downvoted this particular post because I perceived it as mostly ideological and making few arguments, only stating strongly that government action will be bad. I found the author's replies in the comments much more nuanced and would not have downvoted if I'd perceived the original post to be of the same quality.

comment by Seth Herd · 2024-04-22T20:40:11.638Z · LW(p) · GW(p)

The fact that very few in government even understand the existential risk argument means that we haven't seen their relevant opinions yet. As you point out, the government is composed of selfish individuals. At least some of those individuals care about themselves, their children and grandchildren. Making them aware of the existential risk arguments in detail could entirely change their attitude.

In addition, I think we need to think in more detail about possible regulations and downsides. Sure, government is shortsighted and selfish, like the rest of humanity.

I think you're miscalibrated on the risks relative to your average reader. We tend to care primarily about the literal extinction of humanity. Relative to those concerns, the "most dystopian uses for AI" you mention are not a concern, unless you mean literally the worst: a billion-year reich of suffering or something.

We need a reason to believe that governments can reliably improve the incentives facing private organizations.

We do not. Many of us here believe we are in such a desperate situation that merely rolling the dice to change anything would make sense.

I'm not one of those people. I can't tell what situation we're really in, and I don't think anyone else has a satisfactory full view either. So, despite all of the above, I think you might be right that government regulation may make the situation worse. The biggest risk I can see is changing who's in the lead for the AGI race; the current candidates seem relatively well-intended and aware of the risks (with large caveats). (One counterargument is that takeoff will likely be so slow in the current paradigm that we will have multiple AGIs, making the group dynamics as important as individual intentions.)

So I'd like to see a better analysis of the potential outcomes of government regulation. Arguing that governments are bad and dumb in a variety of ways just isn't sufficiently detailed to be helpful in this situation.

comment by cSkeleton · 2024-04-23T18:57:22.274Z · LW(p) · GW(p)

Governments are not social welfare maximizers

Most people making up governments, and society in general, care at least somewhat about social welfare. This is why we get to have nice things and not descend into chaos.

Elected governments have the most moral authority to take actions that affect everyone, ideally a diverse group of nations as mentioned in Daniel Kokotajlo's maximal proposal comment.