Scanless Whole Brain Emulation

post by Knight Lee (Max Lee) · 2025-01-27T10:00:08.036Z · LW · GW · 5 comments

Warning: this is very speculative and I have no expertise.

If we can build superintelligence by starting with simulated humans, it should be safer.

Scanless Whole Brain Emulation attempts to simulate the development of the nervous system from the very start, thus not requiring a brain scan.

Hard, but necessary anyways?

For a Whole Brain Emulation to be useful, the simulated humans need to be able to learn. They need to understand new things and build on top of their new understandings, otherwise they would be intellectually disabled.

They need some level of neuroplasticity. The system needs to simulate how neurons use past information, as well as how neurons form and prune connections. It needs to simulate the part of brain development that continues in adulthood.

Given that it must simulate the part of brain development in adulthood, simulating brain development from the very start might not be that much harder.

But how would you even start?

The idea is to create protein models using chemistry simulations, and then create organelle models from protein simulations, and then create cell models from organelle simulations, and finally create "Scanless Whole Brain Emulation" from cell simulations.

Each model is created by running simulations of lower level mechanics, and fitting the simulation to empirical data. Once the simulation matches empirical data well enough, a model is then trained to match the simulation's behaviour.[1]
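The fit-then-distill loop described above can be sketched with a toy example. Everything here is an assumption made for illustration: the "low-level simulation" is just Euler integration of dx/dt = -k*x, the "empirical data" is synthetic, and the "model" is a one-parameter map. It only shows the shape of the workflow, not how a real protein or cell model would be built.

```python
import math

def simulate(k, x0, t_end, dt=1e-3):
    """'Expensive' low-level simulation: many tiny Euler steps of dx/dt = -k*x."""
    x = x0
    for _ in range(round(t_end / dt)):
        x += dt * (-k * x)
    return x

# Hypothetical "empirical" measurements (time, value); synthetic, generated with k = 0.5.
empirical = [(t, math.exp(-0.5 * t)) for t in (1.0, 2.0, 3.0)]

def misfit(k):
    """Squared error between the simulation and the empirical measurements."""
    return sum((simulate(k, 1.0, t) - y) ** 2 for t, y in empirical)

# Step 1: fit the simulation's free parameter to the empirical data (coarse grid search).
k_fit = min((i / 100 for i in range(1, 101)), key=misfit)

# Step 2: train a cheap model to match the fitted simulation.
# The model is a one-parameter map x(t + 1) = a * x(t), fitted by least squares.
starts = [0.2, 0.5, 1.0, 2.0]
ends = [simulate(k_fit, x0, 1.0) for x0 in starts]
a = sum(s * e for s, e in zip(starts, ends)) / sum(s * s for s in starts)

def surrogate(x0, n_steps):
    """Cheap model: one multiplication per unit of time instead of 1000 Euler steps."""
    return x0 * a ** n_steps

print(k_fit, surrogate(1.0, 3), simulate(k_fit, 1.0, 3.0))
```

The cheap model can then stand in for the simulation when building the next level up, which is the role the protein, organelle and cell models play in the idea above.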

This differs from traditional Whole Brain Emulation, where the neuron models come from purely empirical data. Gaining empirical data about which neuron connects to which other neuron requires a brain scan, but brain scans are very hard to do.

By using lower level simulations, we can make a far finer model of the neuron, which captures all the important behaviours and computations that a neuron does.[2]

A human cell such as a neuron has 750MB of data in its DNA or "source code", and may store a smaller amount of data in its epigenetics or "memory." It may store a much smaller amount of data in the concentrations of various molecules and ions, which is the very short term memory or "CPU buffer."
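As a rough check on the 750MB figure, here is the back-of-the-envelope arithmetic, assuming about 3 billion base pairs in one copy of the genome and 2 bits per base (four possible bases). Both numbers are round approximations, and the estimate ignores compression and the second, mostly redundant copy in a diploid cell.

```python
BASE_PAIRS = 3_000_000_000  # approximate length of one copy of the human genome
BITS_PER_BASE = 2           # four possible bases (A, C, G, T) -> log2(4) = 2 bits

genome_bytes = BASE_PAIRS * BITS_PER_BASE // 8
genome_megabytes = genome_bytes / 1_000_000
print(f"{genome_megabytes:.0f} MB")  # prints "750 MB"
```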

Its behaviour is very complex in the sense it is hard to understand. But it isn't very "computationally complex" in the sense the nonrandom and important computations are fewer than the computations in a cheap computer.

If we can model human neurons and cells accurately enough to capture most of their nonrandom and important computations, then we can not only simulate how they send signals, but simulate how they grow, move, and use past information (in their epigenetics).

Such a model would be useful for studying the mysterious mechanisms of many neurological diseases, and how interventions would influence the neurological disease, and this may be a better investment pitch than Whole Brain Emulation.

But if the neurons and cells can be modelled both accurately and cheaply, it may be possible to simulate brain development from the very start.

Details

- The FHI's article on Whole Brain Emulation lists the Simulation Scales at which a simulation could occur. It does not discuss this idea of Scanless Whole Brain Emulation.

- Simulations may occur on a mix of scales, e.g. organelles unimportant for cellular decisions may be simplified into "black boxes" with inputs and outputs, whereas proteins which cannot be boxed into an organelle are still simulated at the scale of individual proteins.

- In order to fit simulations to empirical data, or at least verify that simulations fit empirical data, it may be best to start with small animals like roundworms. Roundworms have already been simulated. It's important to start with smaller animals and gradually move up to humans, to make sure the simulated beings are not suffering in unnatural ways. Fit the embryo simulations to empirical data before simulating later development.[3] An unhappy simulated human may be as bad as a misaligned AI.

- I do not expect a human effort on this project to succeed faster than a human effort to build superintelligence (without Whole Brain Emulation). The only way it'll work is if superintelligence efforts are paused for a long time.

- Or if superintelligence is paused for a short time, and human-level AGI (which is good at engineering and thinks many times faster) can build it within that short time. But this sounds like a long shot.

- If the system is built, converting the simulated human into a human-like superintelligence should be relatively easier than building it in the first place.

AI can simulate physics and other systems far more cheaply than traditional physics simulators

An example of this is AI simulating the exact behaviour of potassium atoms, and correctly predicting the existence of a new state of matter.

"Why didn't they use traditional physics simulators without AI?" Well, to match the behaviour of real atoms accurately enough, they had to take quantum mechanics into account. Simulating atoms in a way which matches quantum mechanics gets exponentially harder with more atoms (on a non-quantum computer).
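To make "exponentially harder" concrete, here is a small sketch of the memory needed to store the full quantum state of n particles by brute force. It assumes the simplest case, where each particle has only two states and every basis state gets one complex amplitude of 16 bytes; real atoms are more complicated, and approximate methods avoid storing the full state, so this only illustrates the scaling.

```python
def state_vector_bytes(n_particles, levels=2, bytes_per_amplitude=16):
    """Memory for a full quantum state of n particles with `levels` states each.

    One complex amplitude (two 64-bit floats = 16 bytes) per basis state,
    and there are levels ** n_particles basis states.
    """
    return levels ** n_particles * bytes_per_amplitude

for n in (10, 30, 50):
    print(n, state_vector_bytes(n))
```

Under these assumptions, 30 two-state particles already need 16 GiB and 50 need 16 PiB, while the cost of a classical (non-quantum) particle simulation grows only polynomially with the number of particles.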

AI, on the other hand, learns a bunch of heuristics which approximate how quantum mechanics behaves, without doing the really hard quantum computations. How exactly it succeeds is mysterious; it just does.

Here is the beautiful video that I learned this from:

The mechanics of a bunch of potassium atoms are relatively simple, but even more complex phenomena like protein folding are being predicted better and better by Google DeepMind's AlphaFold.

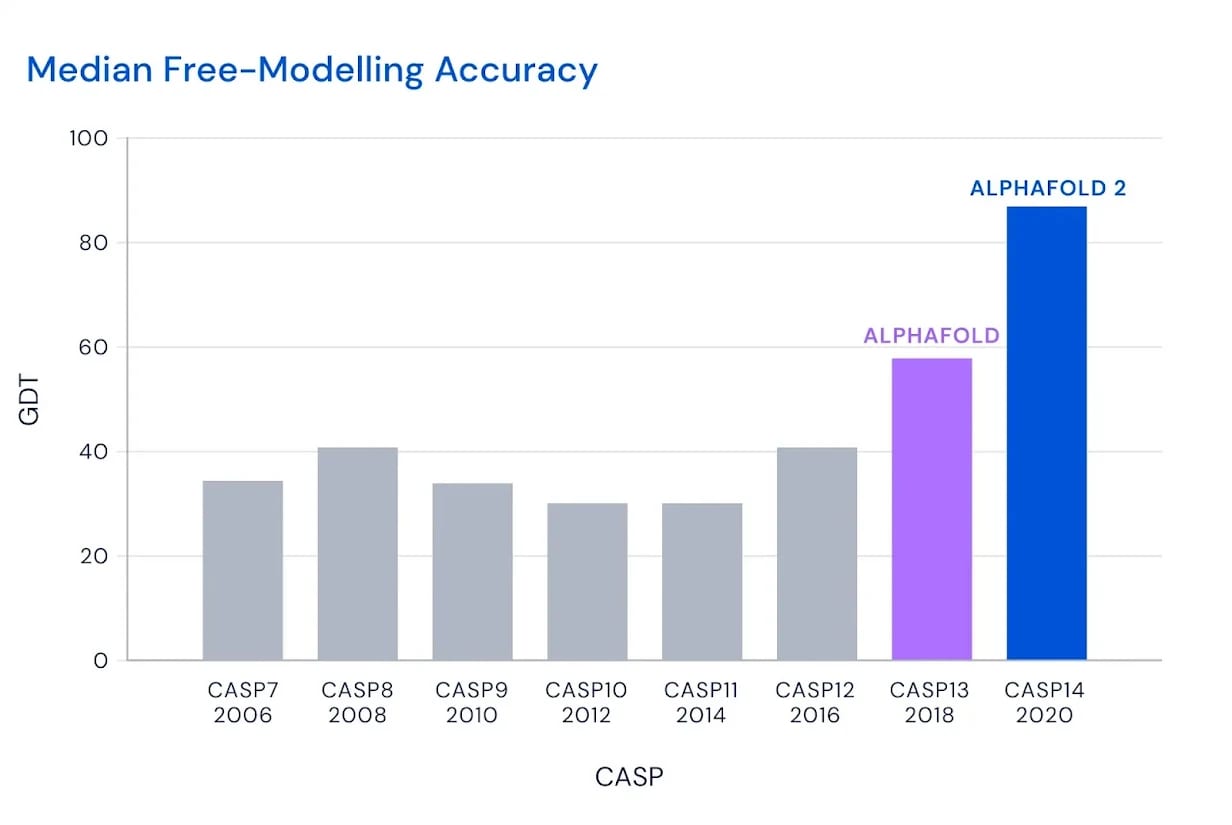

What's amazing about AlphaFold is that people had tried many machine learning approaches on the protein folding problem for a long time. There was this competition called the Critical Assessment of Structure Prediction, where most attempts didn't get very close to accurate results. AlphaFold scored thrillingly better than the other methods in a way no one thought possible.

It's an open question what other breakthroughs can be made in the field of predicting biochemistry with AI.

I admit, the rate of progress from AlphaFold to Scanless Whole Brain Emulation looks a bit slower than the rate of progress from Gemini and o3 to superintelligence. This requires something like an AI pause to work.

[1] I see analogies to Iterated Amplification.

[2] Simulations can be fitted to empirical data much more easily than models fitted directly to empirical data, because a simulation may be made out of millions of "identical" objects, each with the same parameters. This means the simulation may have a millionfold fewer independent parameters than a model with the same number of moving parts. It therefore needs far less empirical data to fit, assuming the real world has the same shape as the simulation, and is also made up of many moving parts "with the same parameters."

[3] We can get empirical data about fetus brains because it's feasible to do a brain scan on a few cubic millimeters of the brain at enough resolution to see neuron connections.
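The parameter-sharing argument in footnote 2 can be illustrated with a toy fit. The setup is entirely hypothetical: a million "identical" objects that each respond to an input x with TRUE_GAIN * x plus noise, of which only 20 are ever measured. Because all objects share one parameter, those 20 noisy measurements are enough to pin it down for all of them; a model with one free parameter per object would need data on every object.

```python
import random

random.seed(0)
TRUE_GAIN = 1.7        # hypothetical parameter shared by every "identical" object
N_OBJECTS = 1_000_000  # number of objects in the simulated system

def observe(x):
    """Noisy measurement of one object's response to input x (synthetic data)."""
    return TRUE_GAIN * x + random.gauss(0, 0.05)

# Measure just 20 of the million objects.
inputs = [random.uniform(1, 2) for _ in range(20)]
samples = [(x, observe(x)) for x in inputs]

# Least-squares estimate of the single shared parameter.
gain = sum(x * y for x, y in samples) / sum(x * x for x, _ in samples)

shared_params = 1            # one parameter describes all objects
unshared_params = N_OBJECTS  # one free parameter per object if nothing is shared

print(f"estimated gain: {gain:.3f}")
print(f"parameters to fit: {shared_params} shared vs {unshared_params} unshared")
```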

5 comments

Comments sorted by top scores.

comment by avturchin · 2025-03-24T07:26:36.430Z · LW(p) · GW(p)

Nesov suggested in a comment that we can solve uploading without scanning via predicting important decisions of a possible person:

> They might persistently exist outside concrete instantiation in the world, only communicating with it through reasoning about their behavior, which might be a more resource efficient way to implement a person than a mere concrete upload.

comment by ChristianKl · 2025-01-29T15:22:38.322Z · LW(p) · GW(p)

If you would create a general AI pause, you would also pause the development of AlphaFold successors. There are problems like developing highly targeted cancer vaccines based on AI models, that are harder to solve than what AlphaFold can currently solve but easier to solve than simulating a whole organ system.

It makes sense to focus biological emulation at this point to those problems that are valuable for medical purposes as you can get a lot of capital deployed if you provide medical value.

In general, it's not clear why superintelligence that comes from "Scanless Whole Brain Emulation" would help with AI safety. Any entity that you create this way can copy itself and self-modify to change its cognition in a way that makes it substantially different from humans.

comment by Knight Lee (Max Lee) · 2025-01-29T23:32:28.422Z · LW(p) · GW(p)

Thank you, these are very thoughtful points and concerns.

You're right that a general AI pause on everything AI wouldn't be wise. My view is that most (but not all) people talking about an AI pause are only referring to pausing general-purpose LLMs above a certain level of capability, e.g. o1 or o3. I should have clarified what I meant by "AI pause."

I agree that companies which want to be profitable should focus on medical products rather than such a moonshot. The idea I wrote here is definitely not an investor pitch; it's more of an idea for discussion, similar to the FHI's discussion on Whole Brain Emulation.

AI safety implications

Yes, building any superintelligence is inherently dangerous. But not all superintelligences are equally dangerous!

No self modifications

In the beginning, the simulated humans should not do any self modifications at all, and just work like a bunch of normal human researchers (e.g. on AI alignment, or aligning the smarter versions of themselves). The benefit is that the smartest researchers can be cloned many times, and they might think many times faster.

Gradual self modifications

The simulated humans can modify a single volunteer to become slightly smarter, while other individuals monitor her. The single modified volunteer might describe her ideal world in detail, and may be subject to a lie detector which actually works.

Why modified humans are still safer than LLMs

The main source of danger is not a superintelligence which kills or harms people out of "hatred" or "disgust" or any human-like emotion. Instead, the main source of extinction is a superintelligence which assigns absolutely zero weight to everything humans cherish, and converts the entire universe into paperclips or whatever its goal is. It does not even spare a tiny fraction for humans to live in.

The only reason to expect LLMs to be safe in any way, is that they model human thinking, and are somewhat human-like. But they are obviously far less human-like than actual simulated humans.

A group of simulated humans who modify themselves to become smarter can definitely screw up at some stage, and end up as a bad superintelligence which assigns exactly zero weight to everything humans cherish. The path may be long and treacherous, and success is by no means guaranteed.

However, it is still much more hopeful than having a bunch of o3-like AIs which undergo progressively more and more reinforcement learning towards rewards such as "solve this programming challenge" or "prove this math equation," until there is so much reinforcement learning that their thoughts no longer resemble the pretrained LLMs they started off as (which were at least trying to model human thinking).

"You can tell the RL is done properly when the models cease to speak English in their chain of thought"

-Andrej Karpathy

Since the pretrained LLMs can never truly exceed human level intelligence, the only way for reinforcement learning to create an AI with far higher intelligence, may be to steer them far from how humans think.

These strange beings are optimized to solve these problems and occasionally optimized to give lip service to human values. But it is extremely unknown what their resulting end goals will be. It could easily be very far from human values.

Reinforcement learning (e.g. for solving math problems or giving lip service to human values) only controls their behavior/thoughts, not their goals. Their goals, for all we know, are essentially random lotteries, with a mere tendency to resemble human goals (since they started off as LLMs). The tendency gets weaker and weaker as more reinforcement learning is done, and you can only blindly guess if the tendency will remain strong enough to save us.

comment by ChristianKl · 2025-01-30T01:28:37.719Z · LW(p) · GW(p)

> I agree that companies which want to be profitable should focus on medical products rather than such a moonshot. The idea I wrote here is definitely not an investor pitch; it's more of an idea for discussion, similar to the FHI's discussion on Whole Brain Emulation.

You might also want to read Truthseeking is the ground in which other principles grow. Solving actual problems on the way to building up capabilities is a way that keeps everyone honest.

> In the beginning, the simulated humans should not do any self modifications at all, and just work like a bunch of normal human researchers (e.g. on AI alignment, or aligning the smarter versions of themselves). The benefit is that the smartest researchers can be cloned many times, and they might think many times faster.

That's like the people who advocated AI boxing. Connecting AIs to the internet is so economically valuable that it's done automatically.

> The main source of danger is not a superintelligence which kills or harms people out of "hatred" or "disgust" or any human-like emotion. Instead, the main source of extinction is a superintelligence which assigns absolutely zero weight to everything humans cherish

Humans consider avoiding death to have a pretty high weight. Entities that spin up and kill copies on a regular basis are likely going to evolve quite different norms about the value of life than humans. A lot of what humans value comes out of how we interact with the world in an embodied way.

comment by Knight Lee (Max Lee) · 2025-01-30T01:54:47.693Z · LW(p) · GW(p)

I completely agree with solving actual problems instead of only working on Scanless Whole Brain Emulation :). I also agree that just working on science and seeing what comes up is valuable.

Both simulated humans and other paths to superintelligence will be subject to AI race pressures. I want to say that given the same level of race pressure, simulated humans are safer. Current AI labs are willing to wait months before releasing their AI; the question is whether this is enough.

I didn't think of that; that is a very good point! They should avoid killing copies, and maybe save them to be revived in the future. I highly suspect that compute is more of a bottleneck than storage space. (You can store the largest AI models on a typical computer hard drive, but you won't have enough compute to run them.)