We’re not as 3-Dimensional as We Think

post by silentbob · 2024-08-04T14:39:16.799Z · LW · GW · 17 commentsContents

Vision Screens Video Games Volumes Volume Habitat Conclusion None 17 comments

While thinking about [LW(p) · GW(p)] high-dimensional spaces and their less intuitive properties, I came to the realization that even three spatial dimensions possess the potential to overwhelm our basic human intuitions. This post is an exploration of the gap between actual 3D space, and our human capabilities to fathom it. I come to the conclusion that this gap is actually quite large, and we, or at least most of us, are not well equipped to perceive or even imagine “true 3D”.

What do I mean by “true 3D”? The most straightforward example would be some ℝ³ → ℝ function, such as the density of a cloud, or the full (physical) inner structure of a human brain (which too would be a ℝ³ → whatever function). The closest example I’ve found is this visualization of a ℝ³ → ℝ³ function (jump to 1:14):

(It is of course a bit ironic to watch a video of that 3D display on a 2D screen, but I think it gets the point across.)

Vision

It is true that having two eyes allows us to have depth perception. It is not true that having two eyes allows us to “see in 3D”. If we ignore colors for simplicity and assume we all saw only in grayscale, then seeing with one eye is something like ℝ² → ℝ as far as our internal information processing is concerned – we get one grayscale value for each point on the perspective projection from the 3D physical world onto our 2D retina. Seeing with two eyes then is ℝ² → ℝ² (same as before, but we get one extra piece of information for each point of the projection, namely depth[1]), but it's definitely not ℝ³ → (...). So the information we receive still has only two spatial dimensions, just with a bit more information attached.

Also note that people who lost an eye, or for other reasons don’t have depth perception, are not all that limited in their capabilities. In fact, other people may barely notice there’s anything unusual about them. The difference between “seeing in 2D” and “seeing with depth perception” is much smaller than the difference to not seeing at all, which arguably hints at the fact that seeing with depth perception is suspiciously close to pure 2D vision.

Screens

For decades now, humans have surrounded themselves with screens, whether it’s TVs, computer screens, phones or any other kind of display. The vast majority of screens are two-dimensional. You may have noticed that, for most matters and purposes, this is not much of a limitation. Video games work well on 2D screens. Movies work well on 2D screens. Math lectures work well on 2D screens. Even renderings of 3D objects, such as cubes and spheres and cylinders and such, work well in 2D. This is because 99.9% of the things we as humans interact with don’t actually require the true power of three dimensions.

There are some exceptions, such as brain scans – what is done there usually is to use time as a substitute for the third dimension, and show an animated slice through the brain. In principle it may be better to view brain scans with some ~holographic 3D display, but even then, the fact remains that our vision apparatus is not capable of perceiving 3D in its entirety, but only the projection onto our retinas, which even makes true 3D displays less useful than they theoretically could be.

Video Games

The vast majority of 3D video games are based on polygons: 2D surfaces placed in 3D space. Practically every 3D object in almost any video game is hollow. They’re just an elaborate surface folded and oriented in space. You can see this when the camera clips into some rock, or car, or even player character: they’re nothing but a hull. As 3D as the game looks, it’s all a bit of an illusion, as the real geometry in video games is almost completely two-dimensional.

Here’s one example of camera clipping:

The only common exception I’m aware of is volumetric smoke – but this is primarily a visual gimmick.

You might now say that there’s something I’m overlooking, namely voxel games, such as Minecraft or Teardown. Voxel engines are inherently 3-dimensional! But even then, while this is true, Minecraft is in its standard form still using 2D polygons to render its voxels.

Volumes

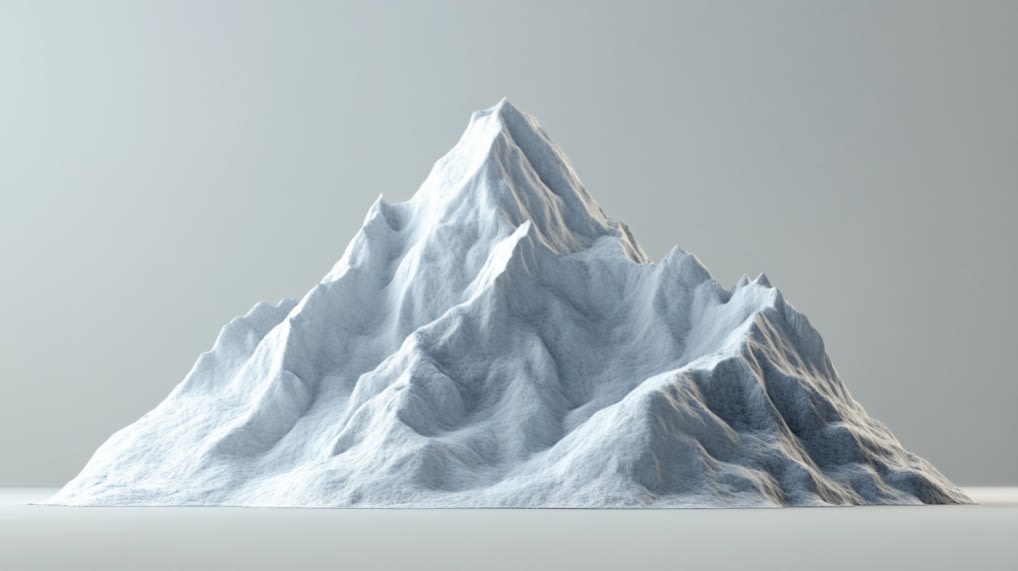

Stop reading for a second and try to imagine a mountain in as much detail as possible.

The mountain in your head may at first glance seem quite 3-dimensional. But when you think of a mountain, you most likely think primarily of the surface of a mountain. Sure, you are aware there’s a bunch of matter below the surface, but is the nature of that matter below the surface truly an active part of your imagination while you’re picturing a mountain? In contrast, imagine a function from ℝ³ → ℝ. Something like a volumetric cloud with different densities at each point in 3D space. We can kind of imagine this in principle, but I think it becomes apparent quickly that our hardware is not as well equipped for this, particularly once the structures become more detailed than the smooth blobbyness of a cloud. And even if you’re able to think about complex volumes, it becomes much more difficult once you try to discuss them with others, let alone create some accurate visualization that preserves all information.

Let’s forget about mountains and clouds, and do something as seemingly simple as visualizing the inner complexity of an orange. Can you truly do that? In high detail? Do you really have an idea what shape an orange would have from the inside, in 3D? Where are the seeds placed? What do the orange’s cells look like in 3D and how are they arranged? How and where are the individual pieces separated by skin, and so on?

Most people are just fine picturing a slice of an orange, or an orange cut in half – but that would once again reduce it to surfaces. Imagining an orange in true 3D is difficult, no less because we simply have never actually seen one (and wouldn’t really be able to, because, once again, we can’t truly see in 3D, but only 2D projections with depth perception).

Volume

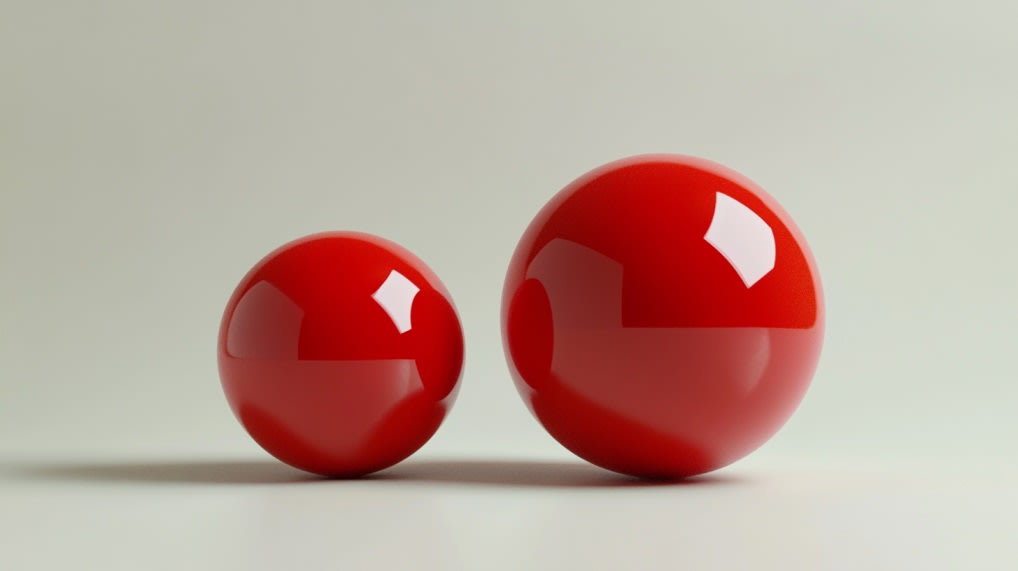

For most people it’s rather unintuitive how similar in size a sphere of double the volume of another sphere looks. Maybe you know on System 2 level that the third root of 2 is about 1.26, and hence a ball of radius 1.26 would have double the volume of a ball of radius 1. Still, if you put these two balls in front of random people and let them estimate the ratio of the volumes, the average answer you’ll get will very likely be much smaller than 2.

Habitat

Lastly, an evolutionary reason for why it makes sense that our brains don’t truly grasp three dimensions: for most of history, humans cared most about surfaces and very little about volumes. We live on the ground. Most of the things we meaningfully interact with are solid, and hence the shared interface is their and our surface.

There are some animals that live in true 3D. Most notably fish. Birds? Probably much more than us, but birds are already pretty surface-bound for much of their lives. Theoretically animals that dig tunnels might also benefit from proper 3D processing capabilities, but most of them are small and probably don’t have the mental complexity required to really grasp three dimensions. What about apes? Well, anything that’s climbing a lot certainly has more need for verticality than us, but still, I’d argue it’s very similar in nature to what humans are up to, when it comes to spatial perception. It’s all about surfaces, and very often even about 1-dimensional properties such as the distance between some prey or predator and yourself. Your brain is trained to care about distances. It’s less well equipped to think about areas. Even less to think about complex volumes.

Conclusion

We tend to think of ourselves as “3D natives”, but it turns out that 3D can go quite far beyond what our brains are good at processing. “True” 3D geometry can quickly overwhelm us, and it’s easy to underestimate what it actually entails. Whether this realization is useful or not, I certainly find it interesting to think about. And if you have indeed read this whole post up to this point, then I hope you do too[2].

- ^

Depth of course is not what we get directly, but the interpretation our brain ends up with based on the two input channels that are our two eyes; but what ends up in our awareness then is not two separate images from the eyes, but instead this mix of brightness (or color) and depth.

- ^

I suspect some readers will rather disagree with the post and (probably rightly) insist that they are indeed able to intuitively think about complex 3D structures without major issues. I certainly don't think it's impossible to do so. But I still think that there's quite a gap between "the kind of 3D we need to deal with to get through life", and "the full extent of what 3D actually means", and that it's easy to overlook that difference.

- ^

The larger sphere has roughly 2.3x the volume of the smaller sphere (hard to say exactly, as the spheres are in fact not perfectly spherical)

17 comments

Comments sorted by top scores.

comment by ChrisHibbert · 2024-08-04T16:40:02.858Z · LW(p) · GW(p)

I think there's a lot of research that shows we're fairly bad at predicting how other people see the world, and how much detail there is in their heads. I've read quite a few books that talk about how some people presume that other people are speaking metaphorically when they talk about imagining scenes in color or in 3-d, or how they can "hear" a musical piece in their mind. Those who can assume that others just aren't trying. Face-blindness wasn't recognized for quite a while.

Some people are much better at doing mental rotations of 3-d objects than others. I can do a decent mental image of the inside of an orange, an apple, a persimmon, or a pear, but perhaps I've spent more time cutting up fruit than others. My mental image of the internal shape of the branches in our persimmon tree is pretty detailed, since I've been climbing inside to pick fruit and trim for 35 years.

google.com/images?q=mental+rotation+three-dimensional+objects

There was a time when I was working on n-dimensional data structures that I could cleanly think in 4 or 5 dimensional "images". They weren't quite visual, since vision is so 2-d, but I could independently manipulate features of the various dimensions separately.

When looking at full-color stereograms, you have to have a mental model of the image depth to make sense of it, even if the rendering is all of surfaces.

Replies from: Mo Nastri↑ comment by Mo Putera (Mo Nastri) · 2024-08-05T09:28:42.147Z · LW(p) · GW(p)

There was a time when I was working on n-dimensional data structures that I could cleanly think in 4 or 5 dimensional "images". They weren't quite visual, since vision is so 2-d, but I could independently manipulate features of the various dimensions separately.

This remark is really interesting. It seems related to the brain rewiring that happens after, say, a subject has been blindfolded for a week, in that their hearing and tactile discrimination improves a lot to compensate.

Replies from: Errorcomment by Gunnar_Zarncke · 2024-08-06T21:46:25.584Z · LW(p) · GW(p)

The big brains of marine mammals may be needed for the 3D nature of their habitat [LW(p) · GW(p)].

For what it's worth, I don't have much visual imagination. It's not Aphantasia, I can "visualize" 3D things, but I have described it at times more like an exploded view drawing. I don't "see" the details of the surface, but the structures. When you mentioned the orange, I didn't "see" the orange surface or what a cut looks like, but I visualized the segmented structure with embedded seeds surrounded by two layers of white and orange skin.

I wonder whether that is partly because I played so much with Lego Technic bricks and built machines. Tesla said that he could animate whole machines in his mind, so I guess there are people who can think in 3D. Maybe even 4D. Though I'm sure resolution goes down quickly.

Replies from: silentbob↑ comment by silentbob · 2024-08-10T19:36:08.679Z · LW(p) · GW(p)

Thanks for sharing your thoughts and experience, and that first link indeed goes exactly in the direction I was thinking.

I think in hindsight I would adjust the tone of my post a bit away from "we're generally bad at thinking in 3D" and more towards "this is a particular skill that many people probably don't have as you can get through the vast majority of life without it", or something like that. I mostly find this distinction between "pseudo 3D" (as in us interacting mostly with surfaces that happen to be placed in a 3D environment, but very rarely, if ever, with actual volumes) and "real 3D" interesting, as it's probably rather easy to overlook.

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2024-08-11T05:13:16.285Z · LW(p) · GW(p)

I do agree that we cannot perceive 3D thru the senses and have to infer the 3D structure and build a mental model of it. And a model composed mostly of surfaces is probably much more common.

comment by ErioirE (erioire) · 2024-08-12T17:33:38.150Z · LW(p) · GW(p)

We might be able to package this up into a nice tidy term and call it "volume insensitivity". See also: The un-intuitiveness of the square-cube law in regards to scaling things up or down.

I find I'm much less adept at first person three dimensional video games than two dimensional ones. This may have more to do with how in e.g. platformers, everything that can effect the player is in your field of view. Not so in three dimensional games where you can get, say, stabbed in the back and never so much as glimpse what got you. Hollow Knight is a much easier game for me than Dark Souls 3, despite people on the internet characterizing Hollow Knight as "2-D Dark Souls".

In similar avenues, there seems to be a dichotomy between people who think in relative directions vs those who intuitively think in cardinal directions.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-08-04T22:51:00.641Z · LW(p) · GW(p)

Something I've noticed myself in studying and writing about neuroscience is that I can be quite good at visualizing a lot of specific details about a 3D object, but still terrible at describing it clearly. I think I'm pretty good at visualizing 3D objects, as far as human go, but I've spent a number of fruitless hours attempting to conceptualize 4D objects and remain totally lost. I can memorize facts about higher dimensional objects, but my intuitions fail me.

An article related to this that I like: http://www.penzba.co.uk/cgi-bin/PvsNP.py?SpikeySpheres#HN2

When you try to map the curved cortical sheet as a 2D surface it's suddenly harder for me to recognize the otherwise familiar shapes. But there are definitely advantages to a 2D projection.

https://www.cell.com/cms/attachment/6518ce2f-b8cd-48cd-b65d-e747bc739379/gr4.jpg

(source: https://www.cell.com/neuron/fulltext/S0896-6273(23)00338-0)

Similar data presented as a movie of a spinning object feels easier to process:

But going even simpler, to representing the data simply as a matrix, is even easier to interpret, but you then have to make a lot of choices about which aspects of the data to represent in this reduced simplified form:

https://dataportal.brainminds.jp/marmoset-tracer-injection/connection-matrix

comment by espoire · 2025-04-14T06:51:24.008Z · LW(p) · GW(p)

An anecdata point:

I couldn't visualize the mountain at all. ...but I feel like I was able to visualize an orange's innards in high fidelity -- which surprised me, because I often fail at 2d visualizations which seem to be easy for the majority of the population. I attribute the difference between the orange and the mountain to simple subject familiarity; I actually do know what's in an orange.

I also had the experience of feeling like I was able to visualize 4d spaces in some non-abstract way when I studied non-Euclidean geometry in my early teens. I used visualizations in a 13-dimensional space in designing some software about 7 years ago, and am currently using a visual argument in a 5+ (variable) dimensional vector space to "prove" that a subsystem for my video game will achieve its purpose. I sometimes make 3d model assets by visualizing the 3d shape and then manually typing in coordinates for each vertex.

My case seems to me to suggest that 3+ dimensional visualization is a distinct skill/ability from 2d visualization -- and that high competence in 2d visualization is not a prerequisite for higher-dimensional visualization. It also "feels" introspectively like a single skill for 3+ dimensional visualization, NOT a separate skill for each dimensionality as might be assumed due to 2d seeming to be a special case.

The existence of the 2d special case in my brain seems curious; naively, if I can handle any dimensionality 3+, I ought to be able to simply use that skill if it's more competent than my 2d visualization. There having ever been a 2d special case makes some sense; I can imagine some instinctual ability there, or perhaps it being inductively simple to create given the 2d input data. But why did the 2d special case persist after it became outclassed by an emerging 3+d ability?

I'm now curious about what may happen if I attempt to explicitly involve my 3+d ability to take over for 2d visualization tasks. Can I gain 2d visualization capacity this way? Why is suppressing the "native" 2d mode so difficult for 2d tasks? If I do, will it break anything? I'd be worried about e.g. loss of other plausibly-instinctual visual abilities like facial recognition, emotion recognition, etc, but I already seem to be inept at those skills; I don't have much to lose.

comment by Jonathan Claybrough (lelapin) · 2024-08-04T22:42:53.655Z · LW(p) · GW(p)

I don't think your second footnote sufficiently addresses the large variance in 3D visualization abilities (note that I do say visualization, which includes seeing 2D video in your mind of a 3D object and manipulating that smoothly), and overall I'm not sure where you're getting at if you don't ground your post in specific predictions about what you expect people can and cannot do thanks to their ability to visualize 3D.

You might be ~conceptually right that our eyes see "2D" and add depth, but *um ackshually*, two eyes each receiving 2D data means you've received 4D input (using ML standards, you've got 4 input dimensions per time unit, 5 overall in your tensor). It's very redundant, and that redundancy mostly allows you to extract depth using a local algo, which allows you to create a 3D map in your mental representation. I don't get why you claim we don't have a 3D map at the end.

Back to concrete predictions, are there things you expect a strong human visualizer couldn't do? To give intuition I'd say a strong visualizer has at least the equivalent visualizing, modifying and measuring capabilities of solidworks/blender in their mind. You tell one to visualize a 3D object they know, and they can tell you anything about it.

It seems to me the most important thing you noticed is that in real life we don't that often see past the surface of things (because the spectrum of light we see doesn't penetrate most material) and thus most people don't know the inside of 3D things very well, but that can be explained by lack of exposure rather than inability to understand 3D.

Fwiw looking at the spheres I guessed an approx 2.5 volume ratio. I'm curious, if you visualized yourself picking up these two spheres, imagining them made of a dense metal, one after the other, could you feel one is 2.3 times heavier than the previous?

Replies from: Archimedes↑ comment by Archimedes · 2024-08-04T23:20:22.668Z · LW(p) · GW(p)

I also guessed the ratio of the spheres was between 2 and 3 (and clearly larger than 2) by imagining their weight.

I was following along with the post about how we mostly think in terms of surfaces until the orange example. Having peeled many oranges and separated them into sections, they are easy for me to imagine in 3D, and I have only a weak "mind's eye" and moderate 3D spatial reasoning ability.

Replies from: silentbob↑ comment by silentbob · 2024-08-10T19:30:18.762Z · LW(p) · GW(p)

I find your first point particularly interesting - I always thought that weights are quite hard to estimate and intuit. I mean of course it's quite doable to roughly assess whether one would be able to, say, carry an object or not. But when somebody shows me a random object and I'm supposed to guess the weight, I'm easily off by a factor of 2+, which is much different from e.g. distances (and rather in line with areas and volumes).

comment by AprilSR · 2024-08-04T22:12:31.291Z · LW(p) · GW(p)

I think I have a much easier time imagining a 3D volume if I'm imagining, like, a structure I can walk through? Like I'm still not getting the inside of any objects per se, but... like, a complicated structure made out of thin surfaces that have holes in them or something is doable?

Basically, I can handle 3D, but I won't by default have all the 3Dish details correctly unless I meaningfully interact with the full volume of the object.

comment by Lorxus · 2024-08-06T17:36:41.083Z · LW(p) · GW(p)

most of them are small and probably don’t have the mental complexity required to really grasp three dimensions

Foxes and ferrets strike me as two obvious exceptions here, and indeed, we see both being incredibly good at getting into, out of, and around spaces, sometimes in ways that humans might find unexpected.

comment by Benjamin Kost (benjamin-kost) · 2024-08-05T06:14:30.595Z · LW(p) · GW(p)

I am not sure what your point was with this, but I think the concept presented is more easily explained by the fact that the more complex the model our brains try to map to, the higher the expected error rate rather than this being a unique phenomenon from mapping 2D vs 3D objects.

comment by philip_b (crabman) · 2024-08-04T18:29:45.169Z · LW(p) · GW(p)

So you say humans don't reason about the space and objects around them by keeping 3d representations. You think that instead the human brain collects a bunch of heuristics what the response should be to a 2d projection of 3d space, given different angles - an incomprehhensible mishmash of neurons like in an artificial neural network that doesn't have any CNN layers for identifying the digit by image, and just memorizes all rules for all types of pictures with all types of angle like a fully connected layer.

comment by ChrisHibbert · 2024-08-04T17:14:00.132Z · LW(p) · GW(p)

https://www.quantamagazine.org/what-happens-in-a-mind-that-cant-see-mental-images-20240801/