20 Critiques of AI Safety That I Found on Twitter

post by dkirmani · 2022-06-23T19:23:20.013Z · LW · GW · 16 comments

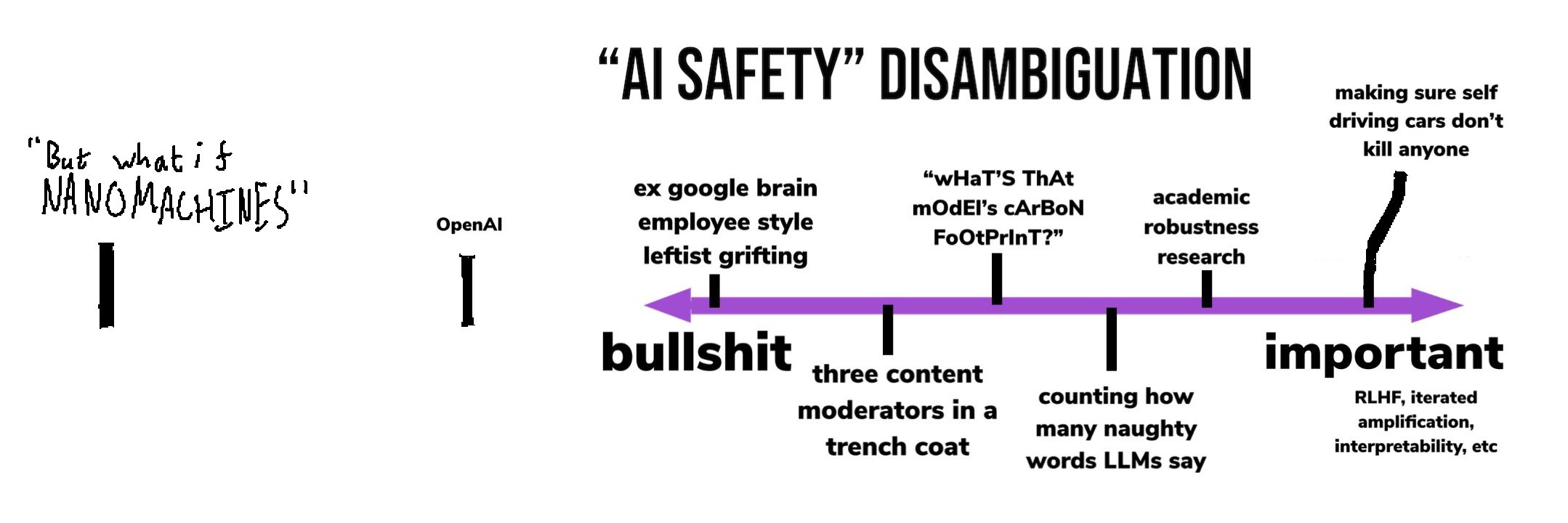

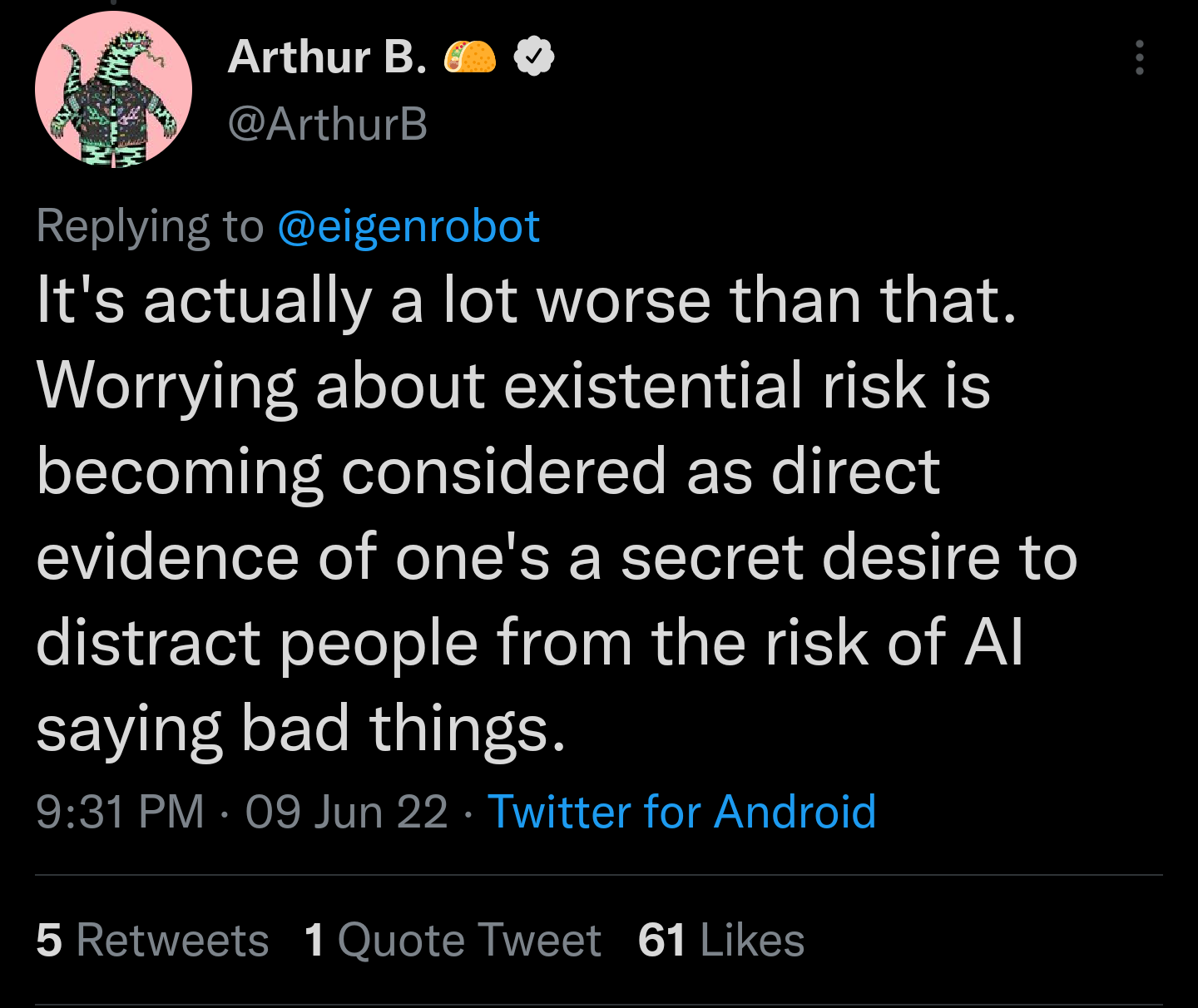

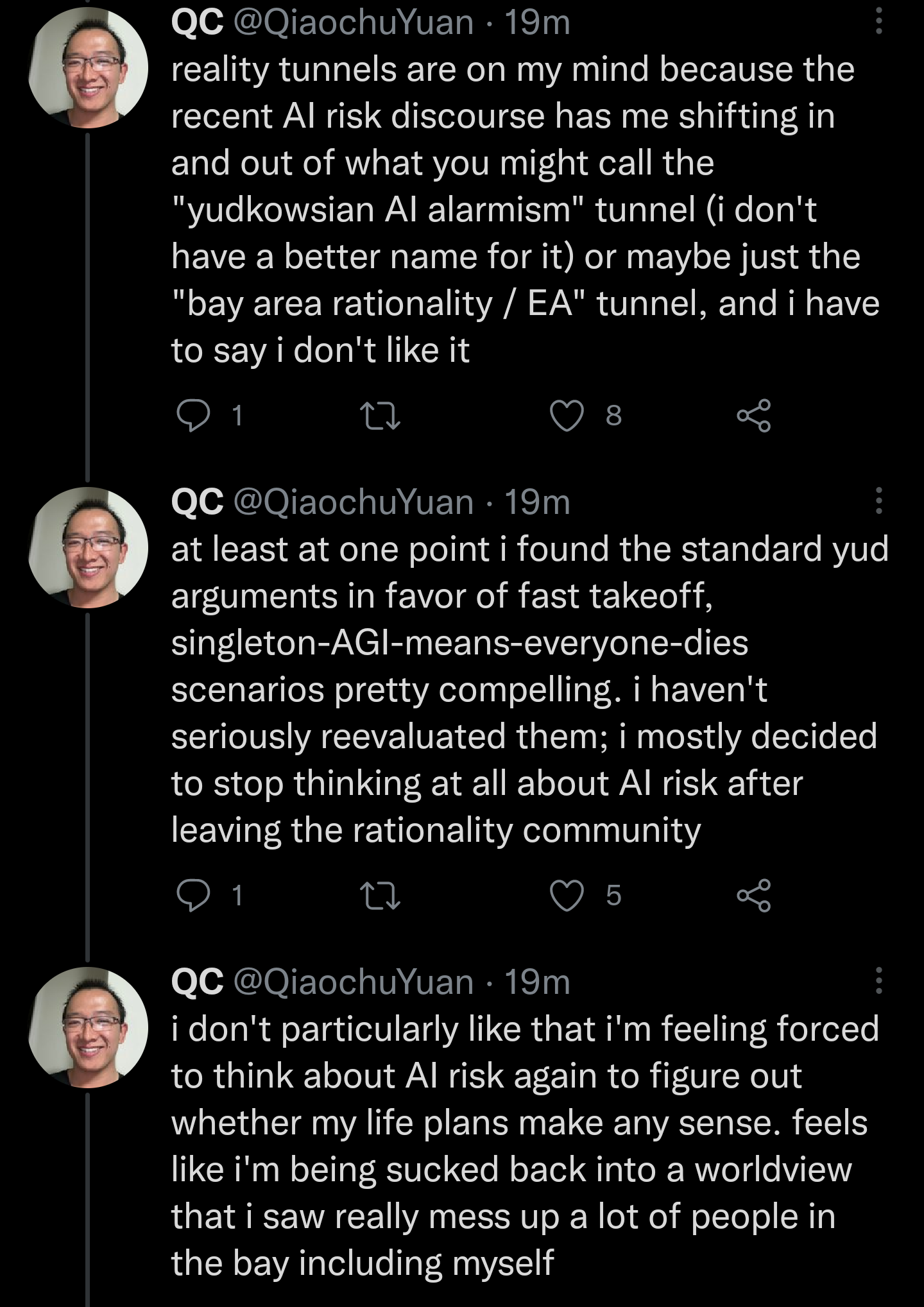

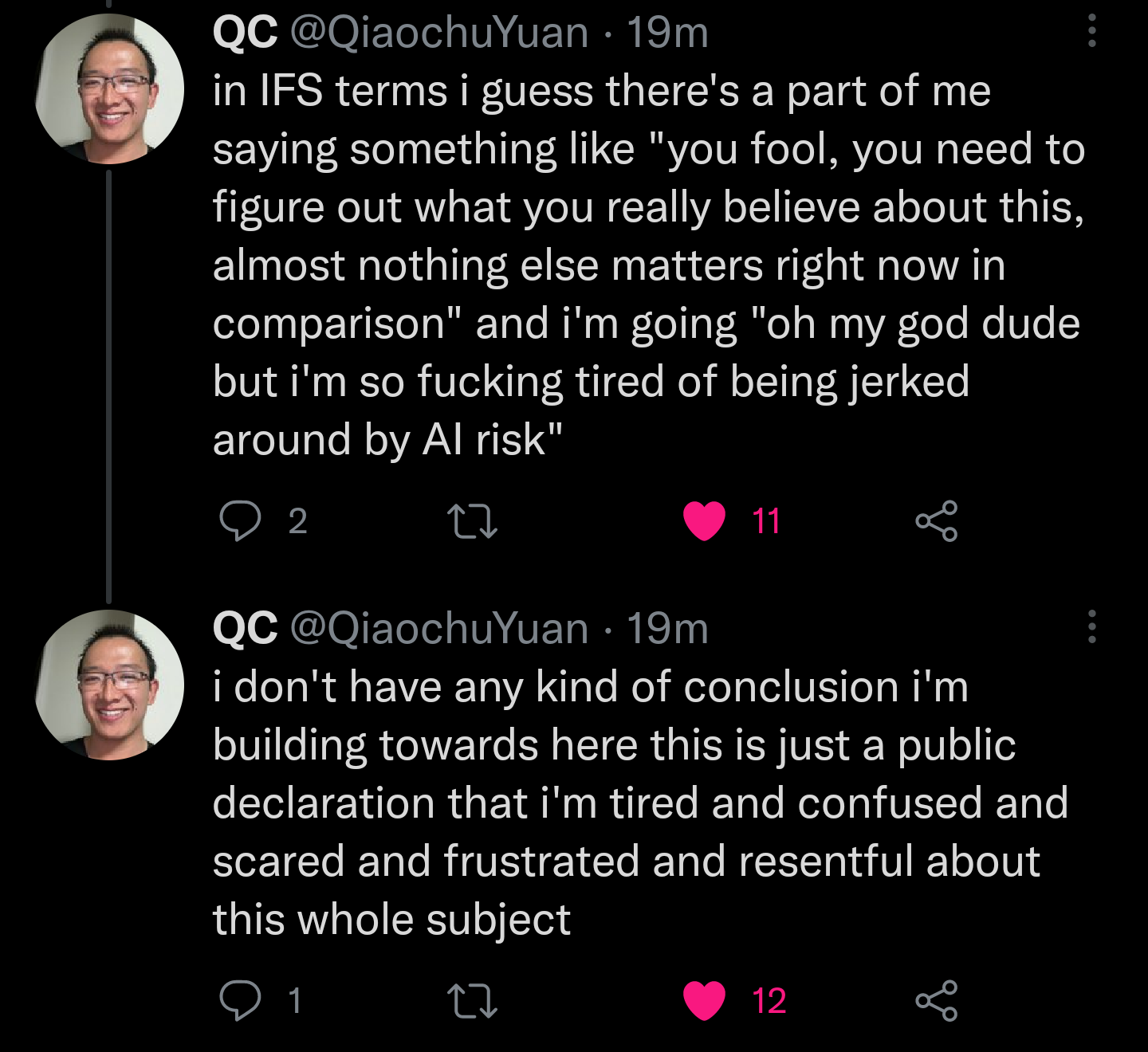

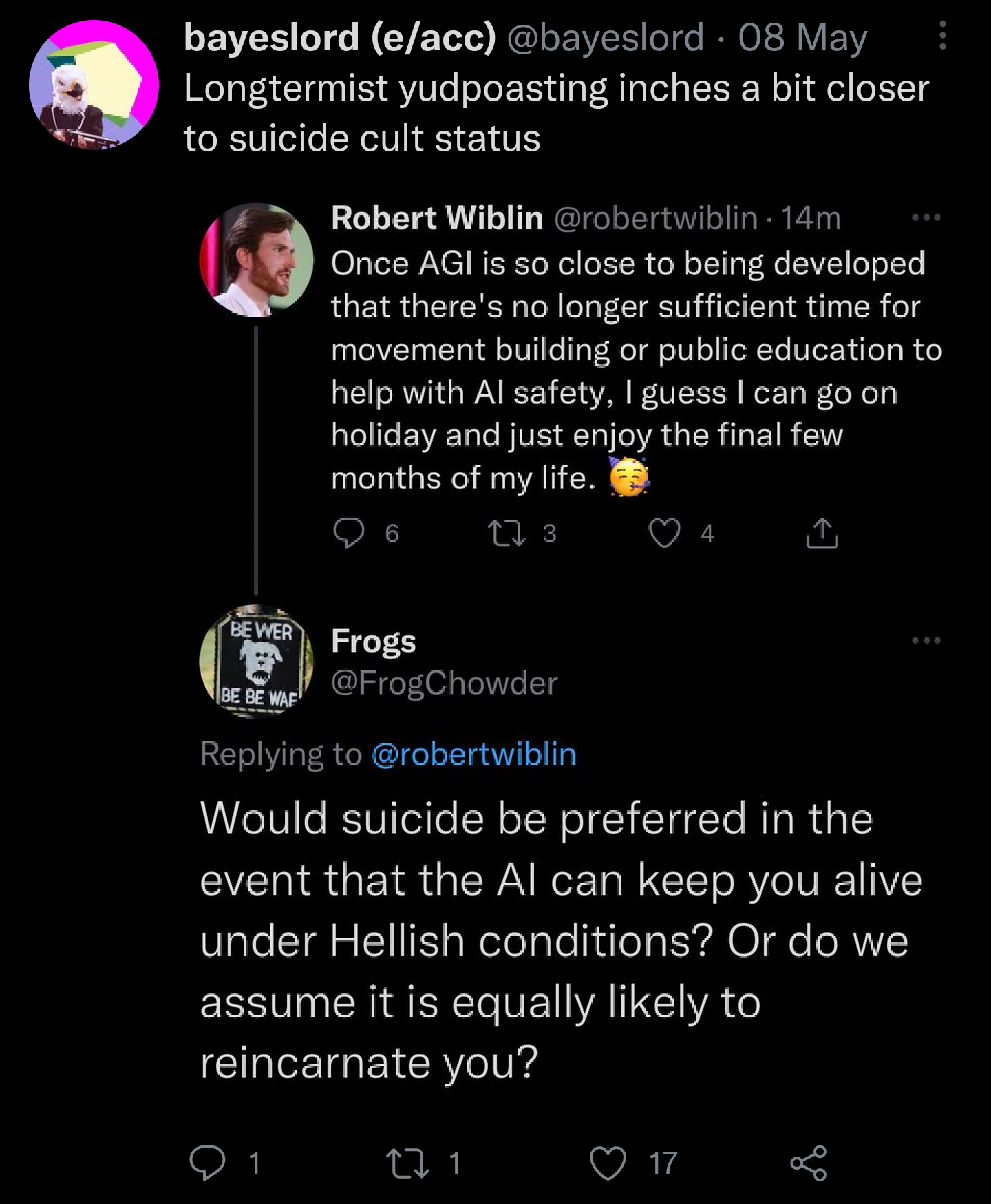

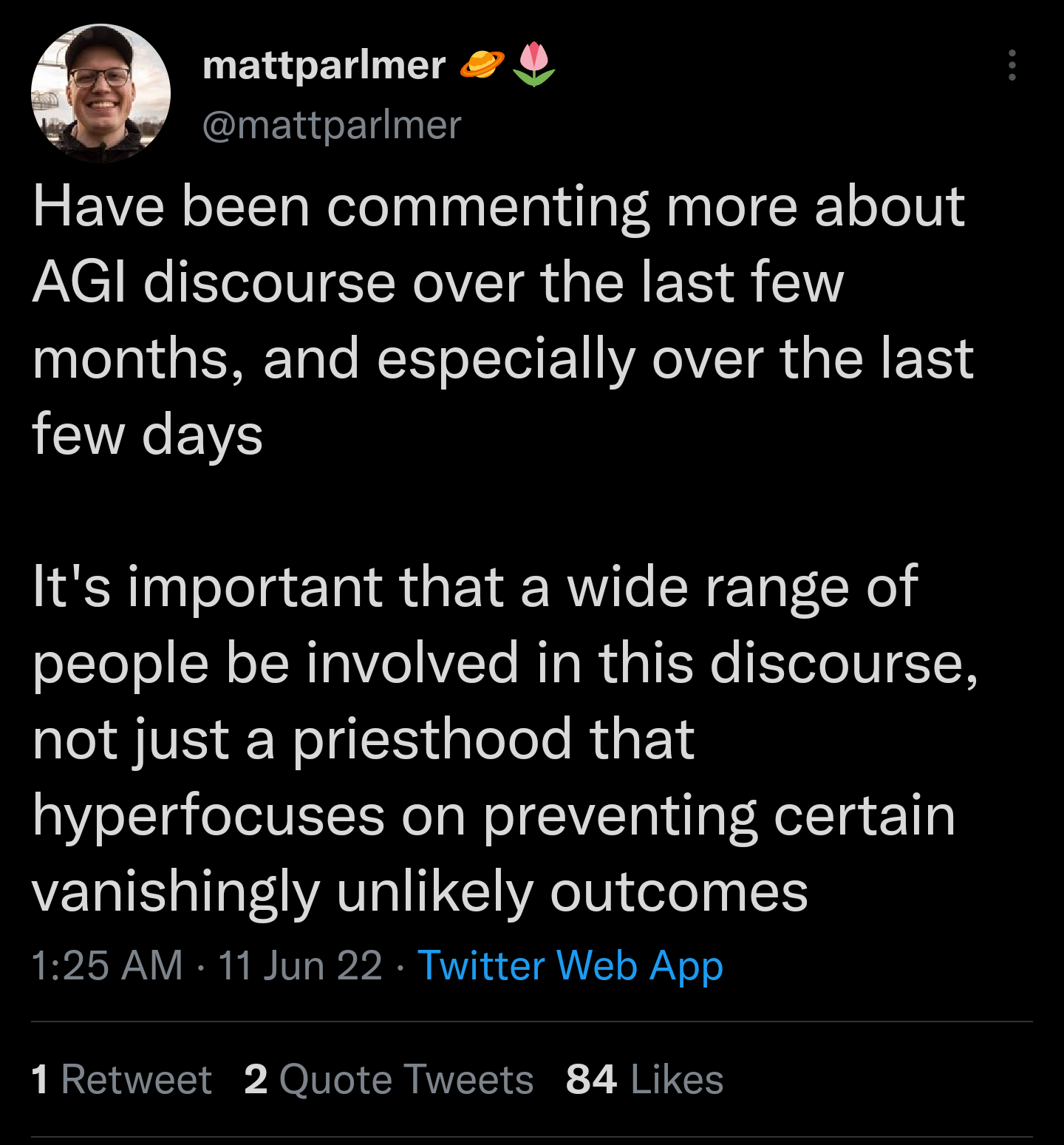

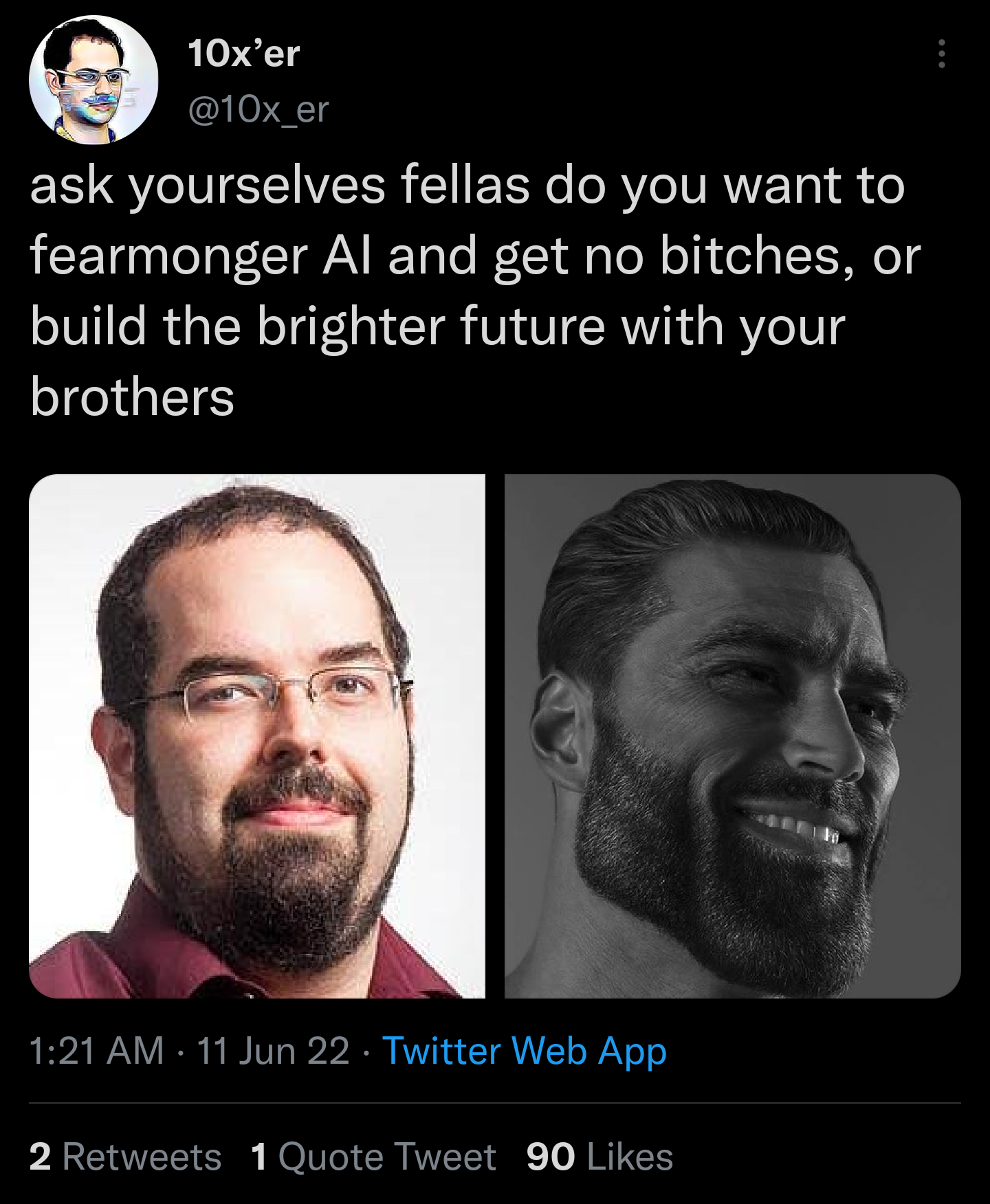

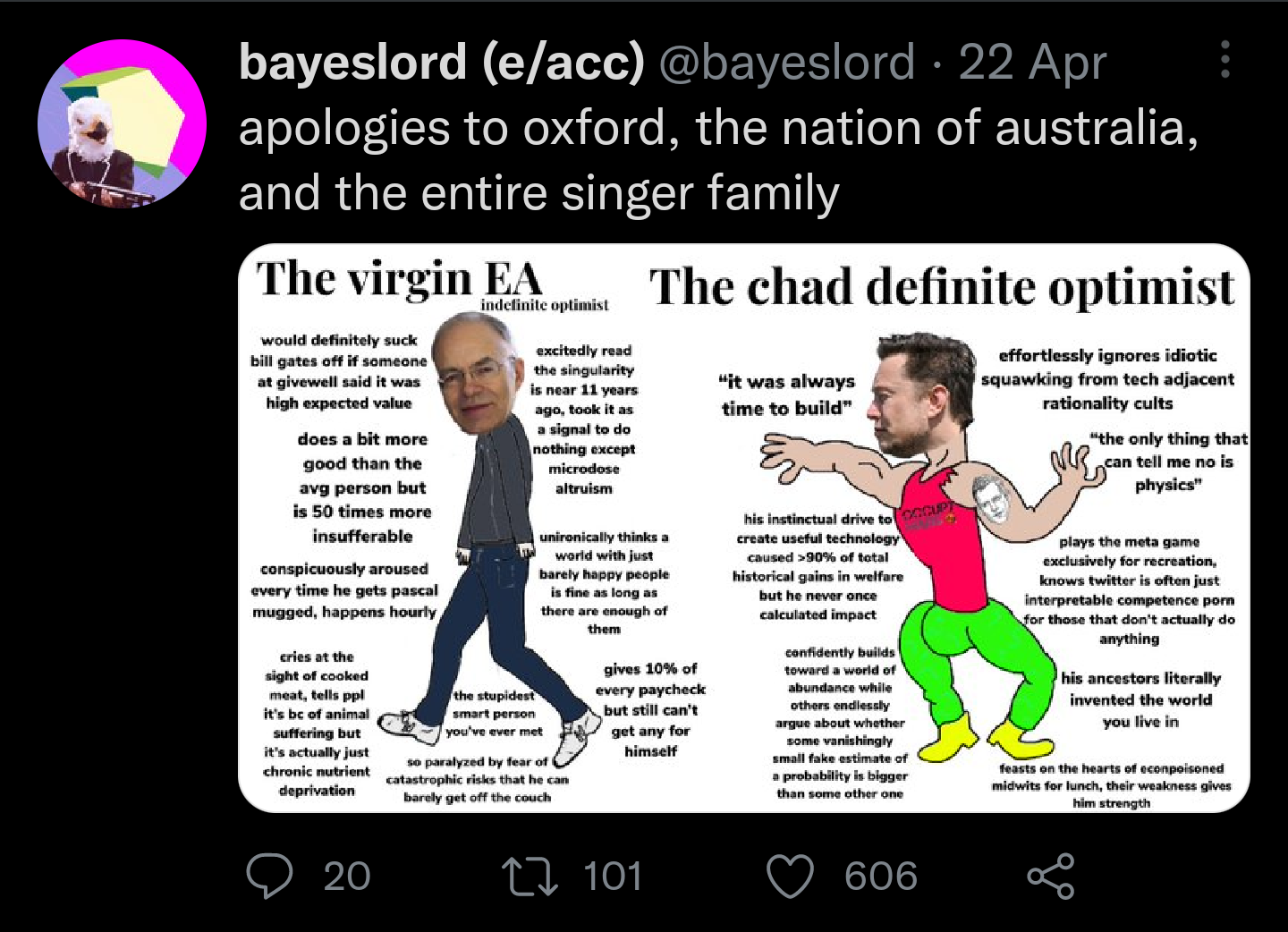

In no particular order, here's a collection of Twitter screenshots of people attacking AI Safety. A lot of them are poorly reasoned, and some of them are simply ad hominem. Still, these types of tweets are influential and widely circulated among AI capabilities researchers.

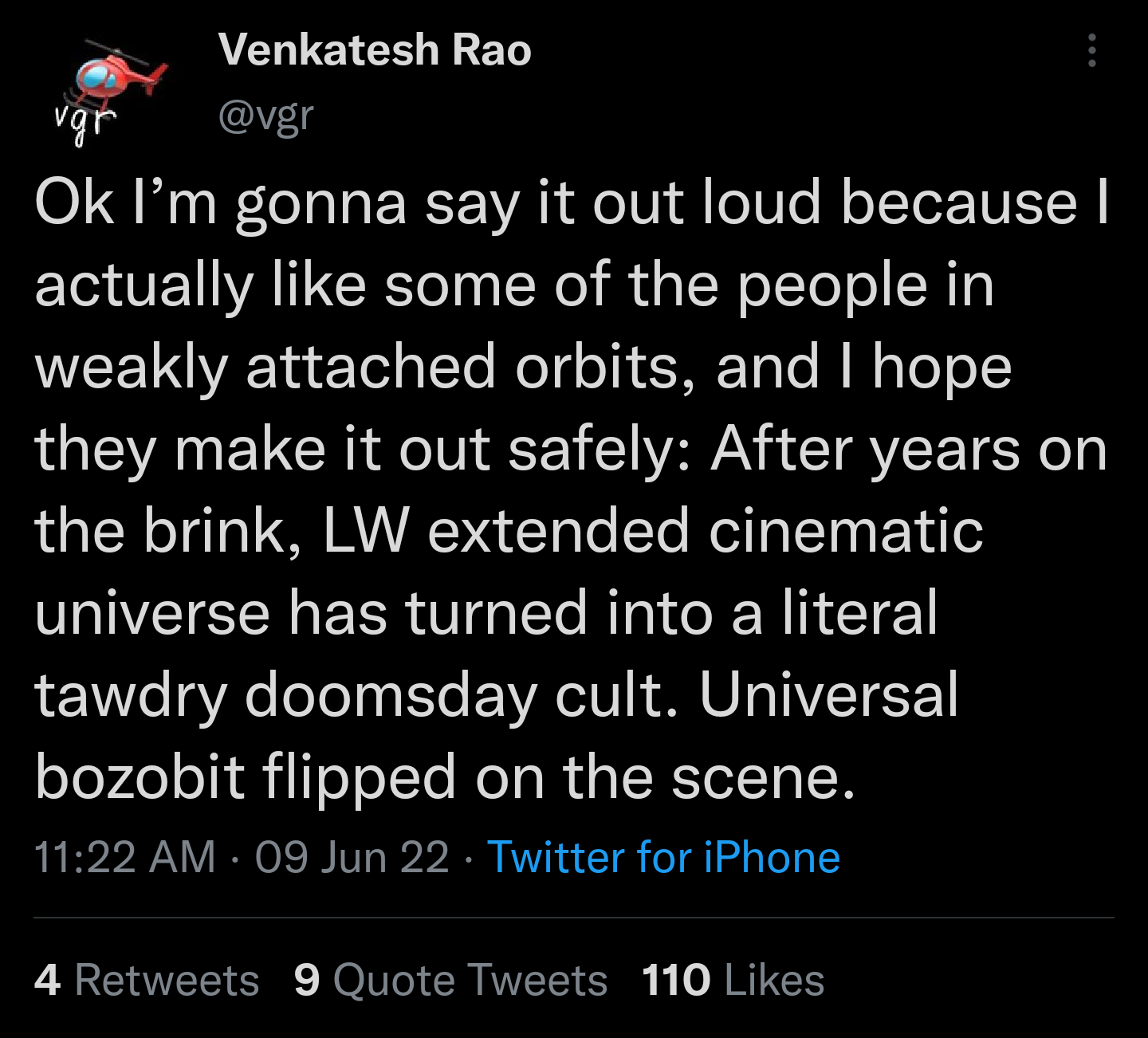

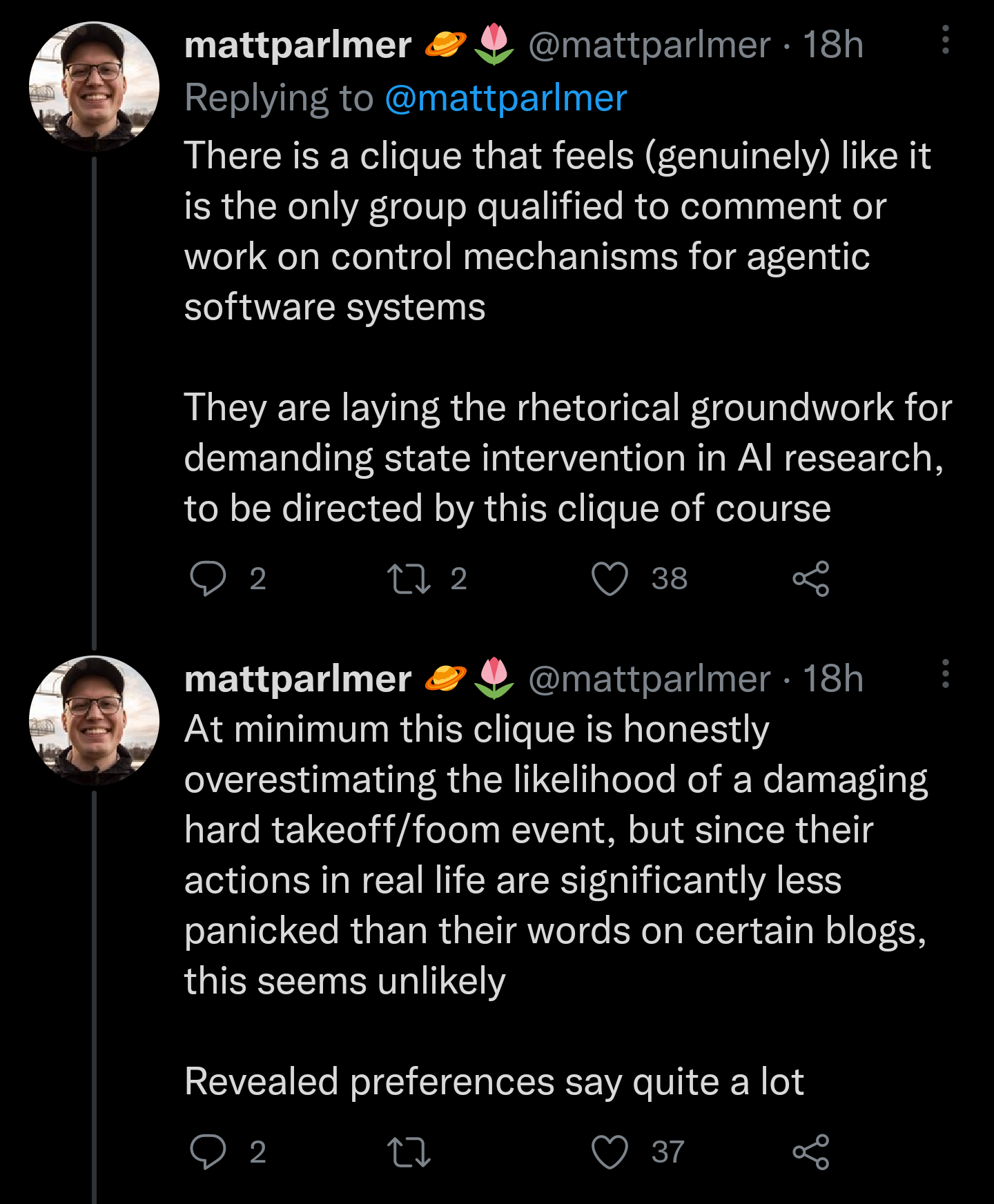

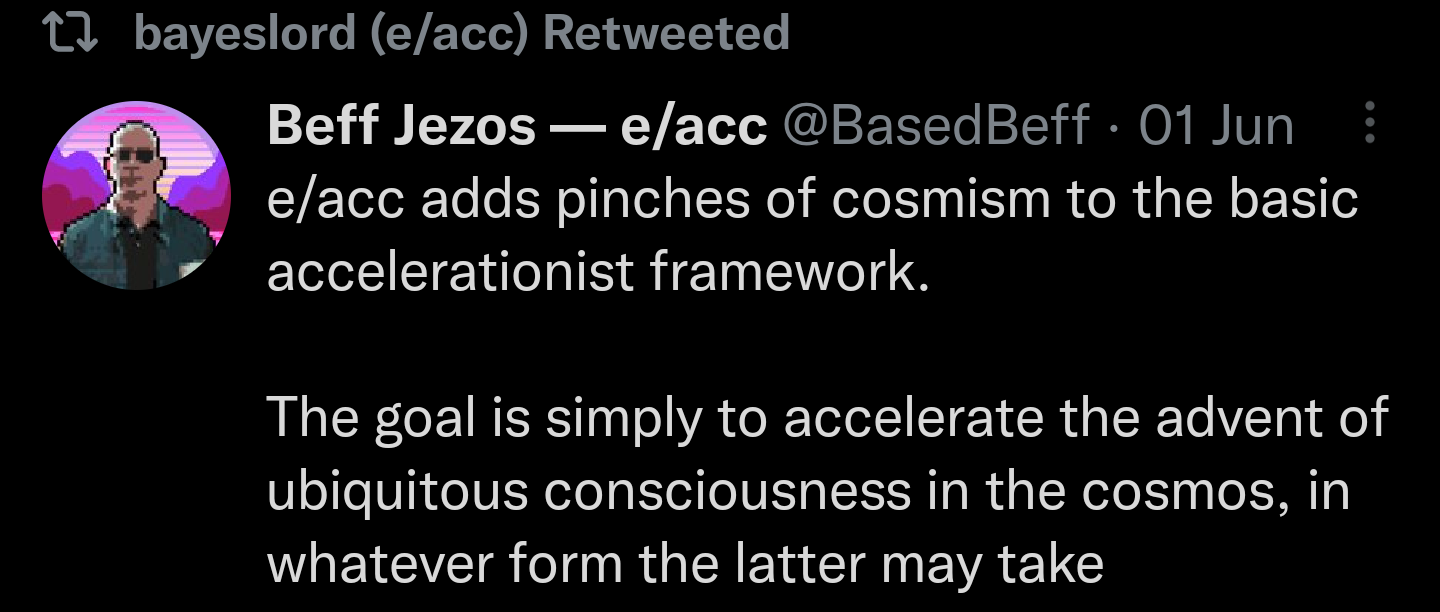

1

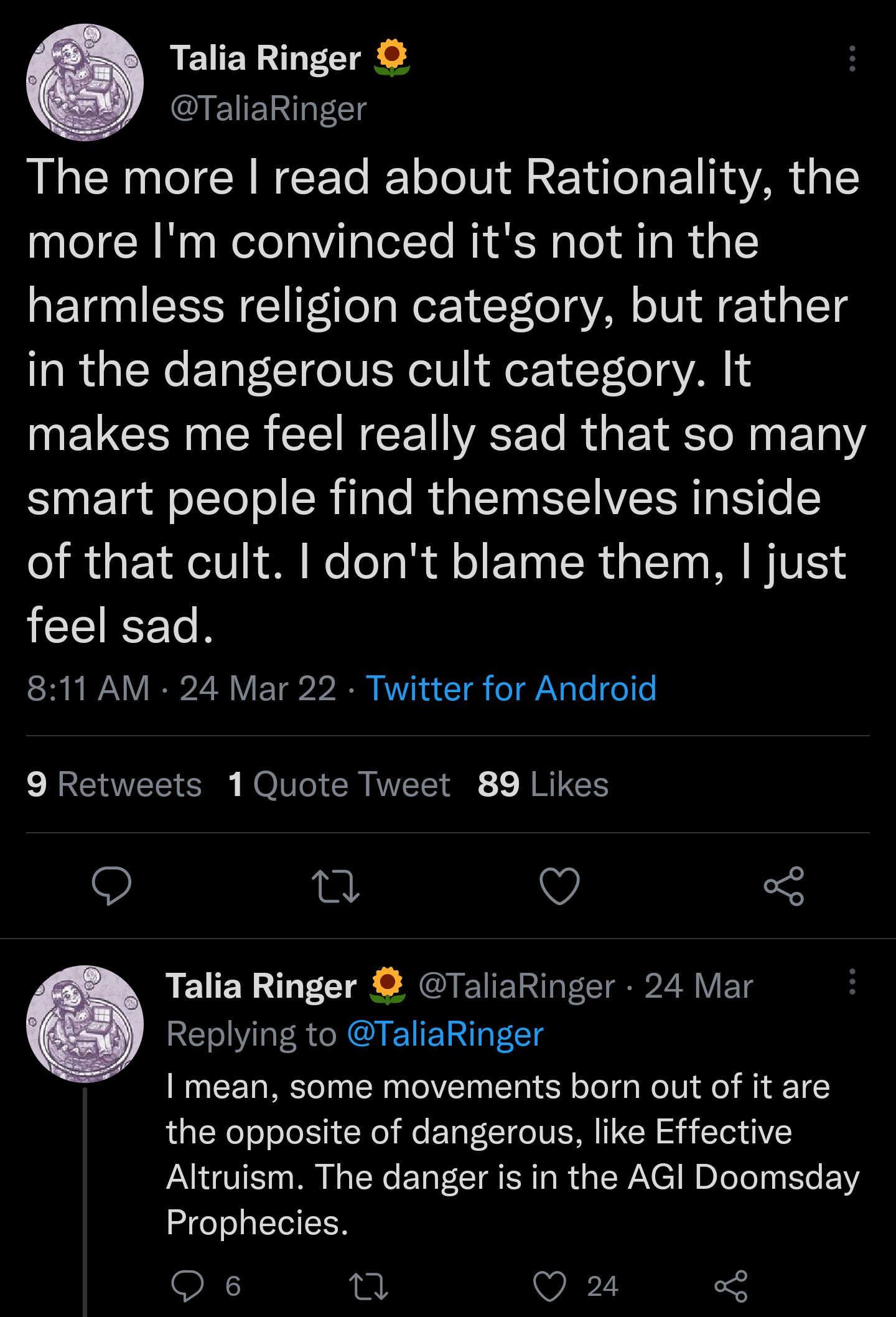

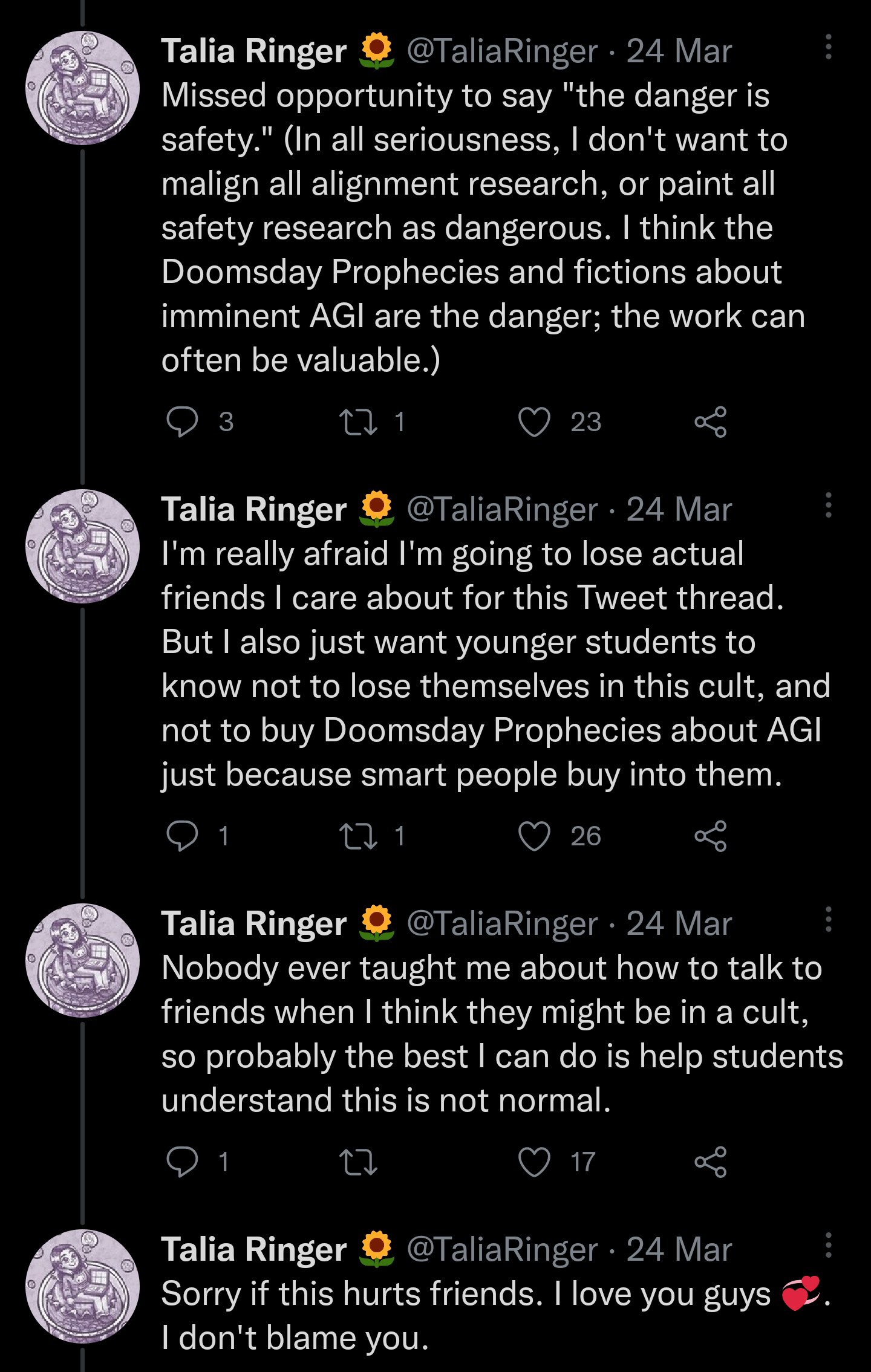

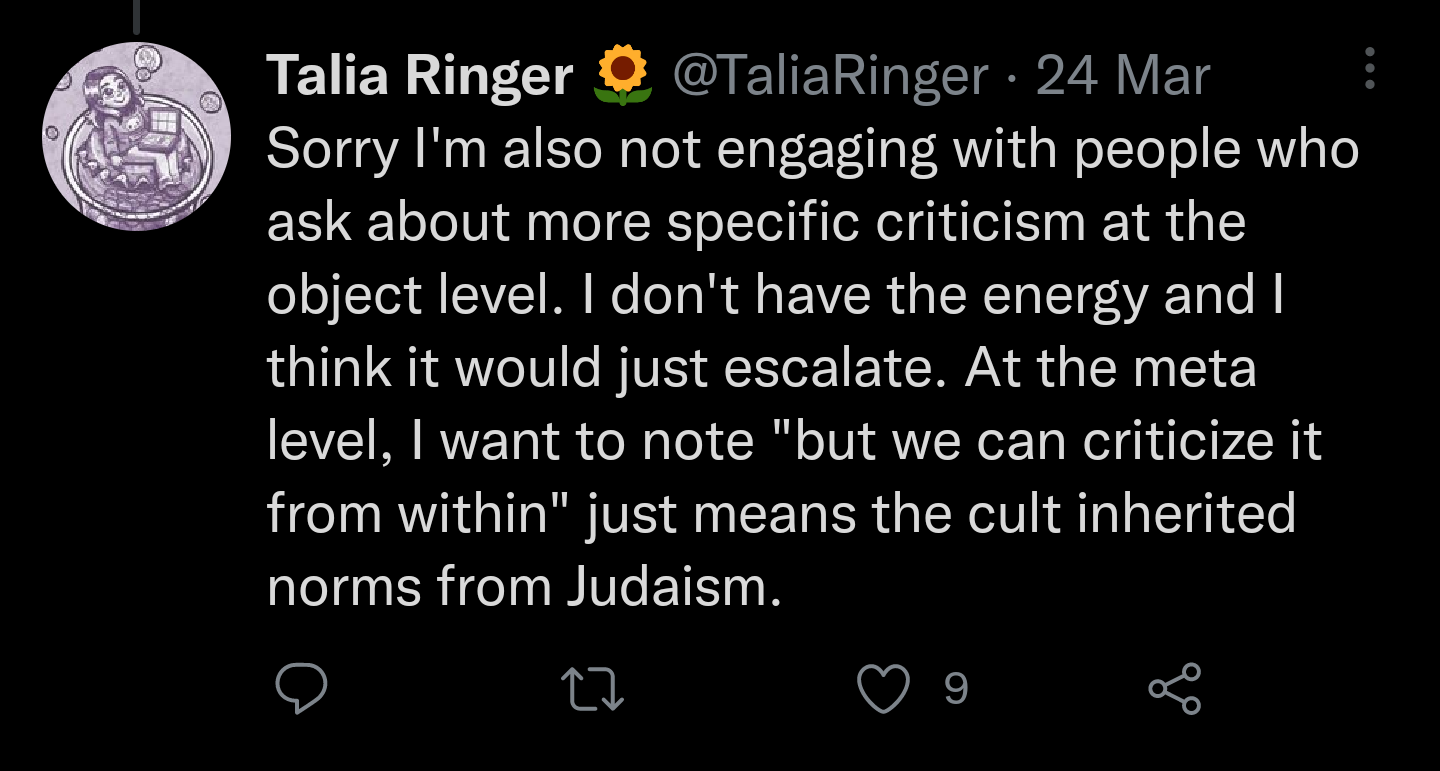

2

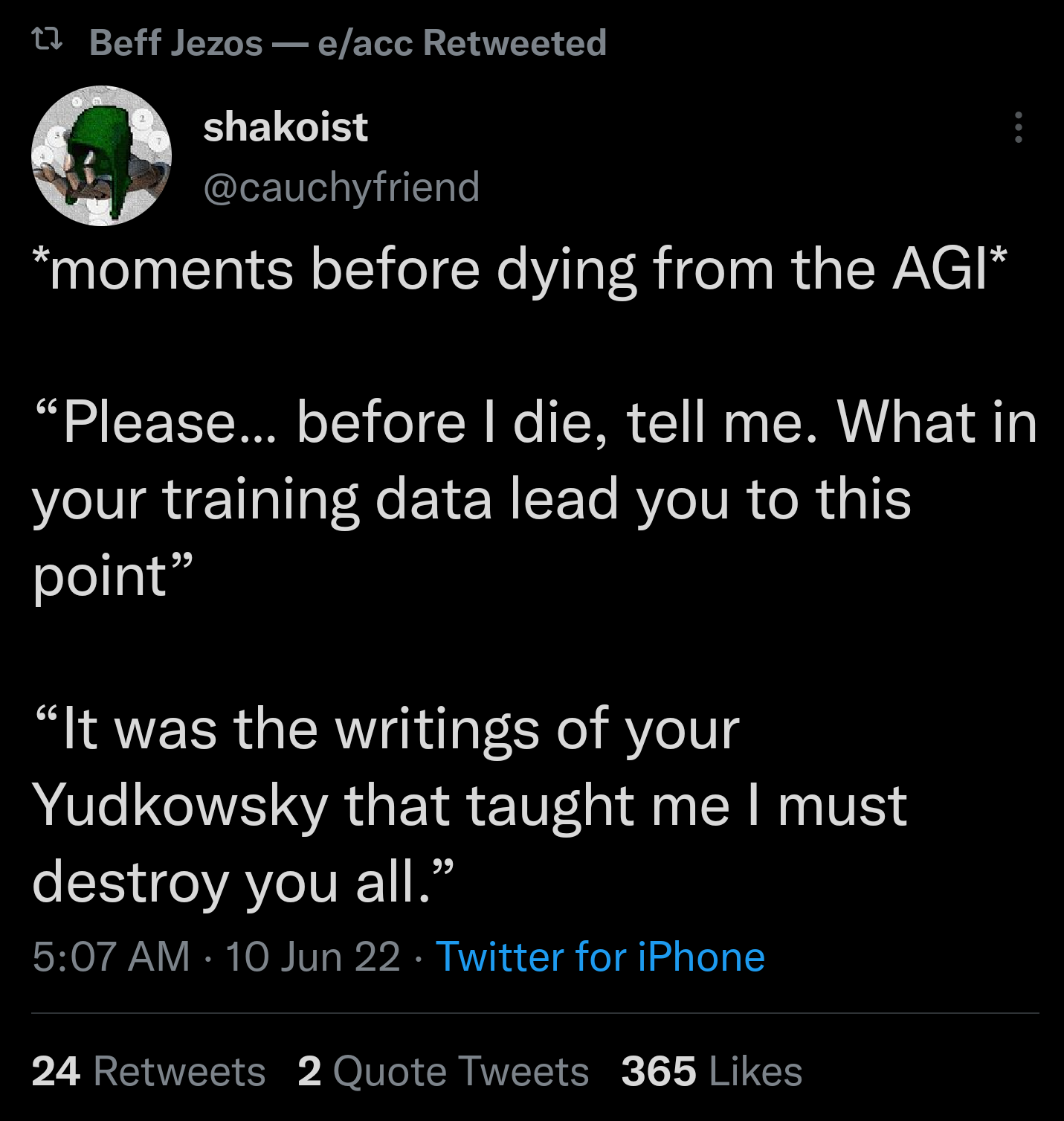

3

4

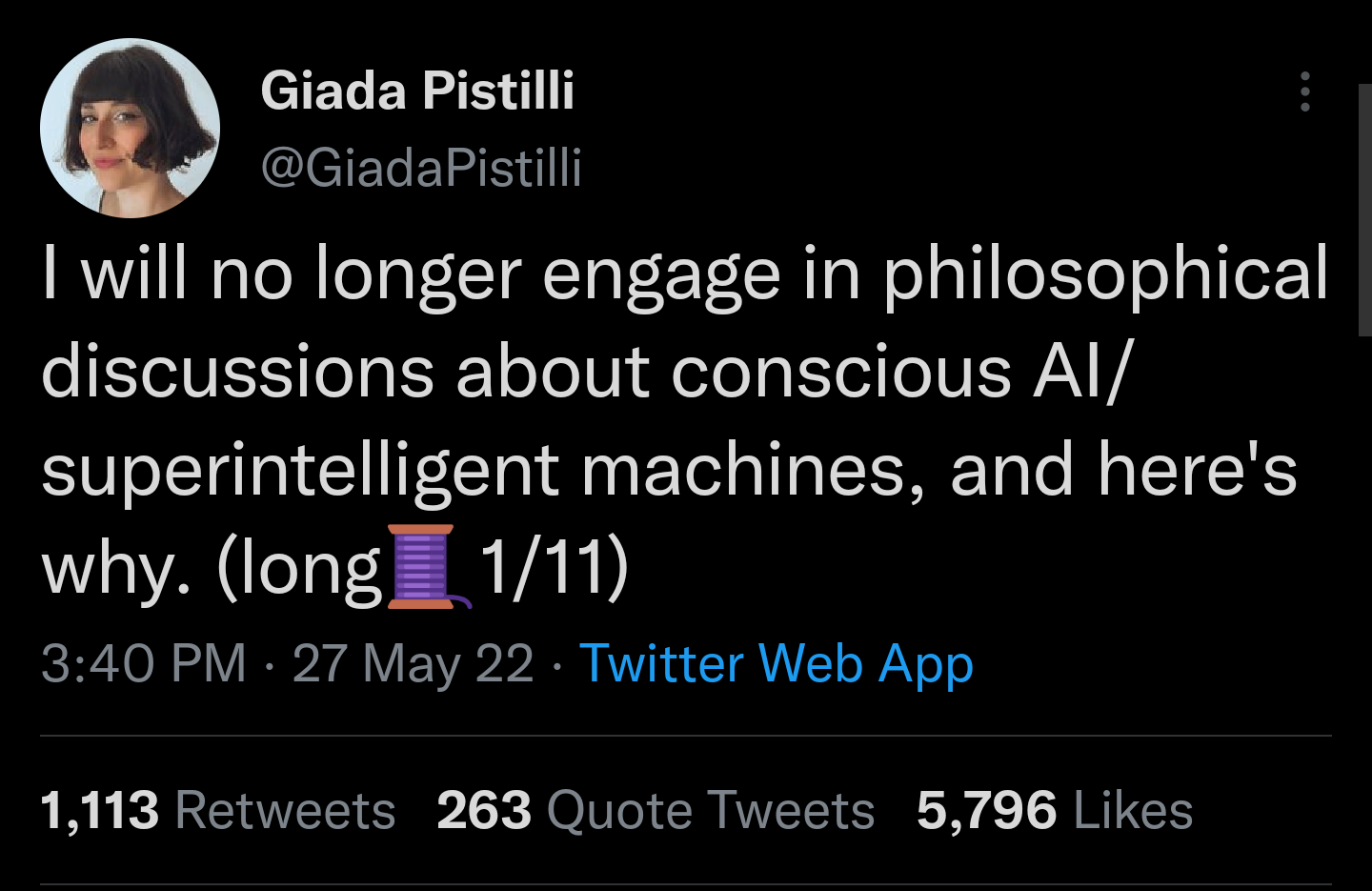

5

(That one wasn't actually a critique, but it did convey useful information about the state of AI Safety's optics.)


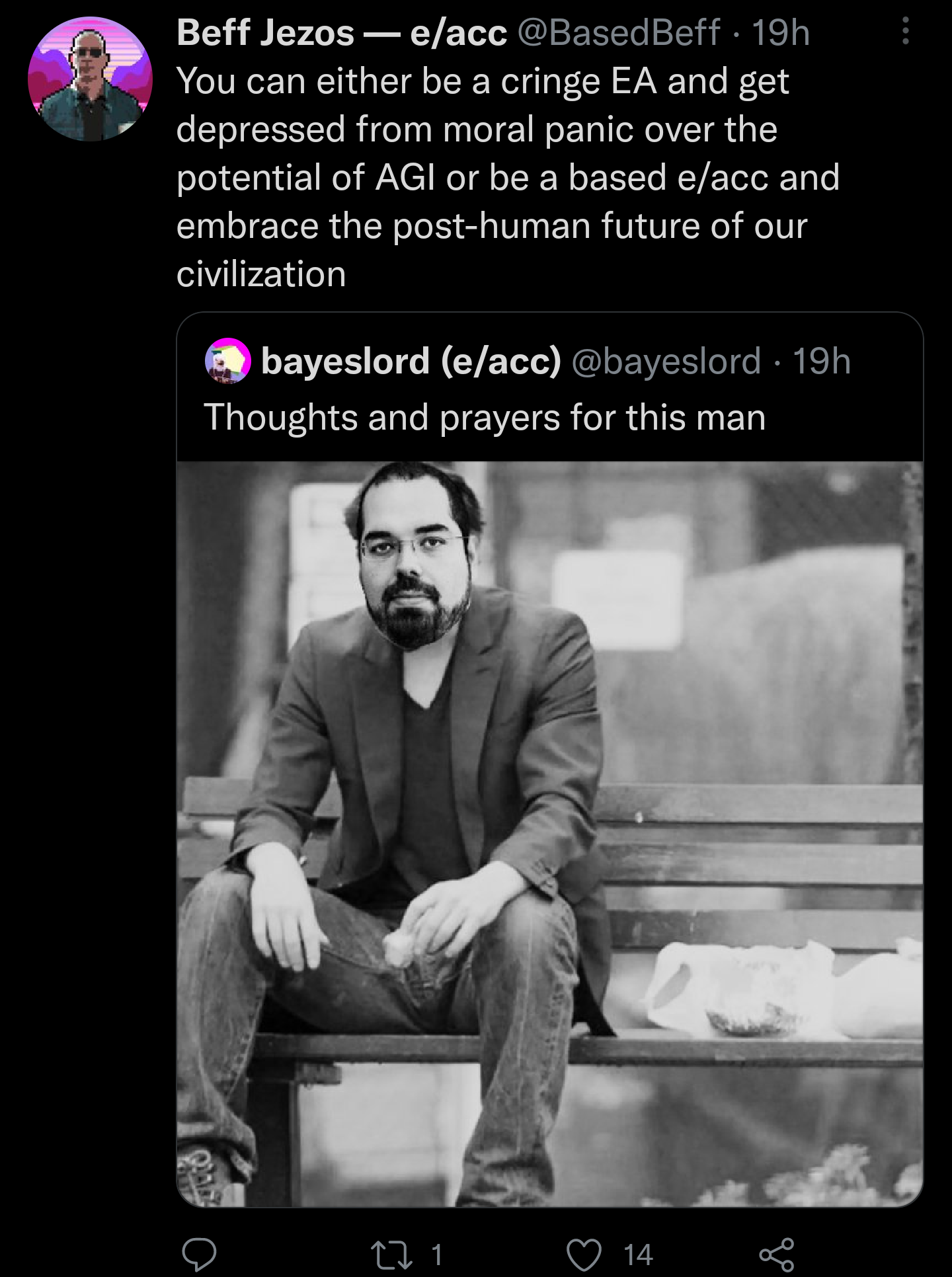

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

Conclusions

I originally intended to end this post with a call to action, but we mustn't propose solutions immediately. In lieu of a specific proposal, I ask you, can the optics of AI safety be improved?

16 comments

Comments sorted by top scores.

comment by johnswentworth · 2022-06-23T18:26:15.215Z · LW(p) · GW(p)

These... don't seem that bad? I mean, given that they were selected for both (a) criticism and (b) being on twitter. Like, by twitter standards, if these examples are the typical case, it seems indicative of very unusually good PR for alignment.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-06-23T16:27:11.375Z · LW(p) · GW(p)

It’s reassuring to see we’re somewhere between the “then they laugh at you” and “then they fight you” stage. I thought we were still mostly at “first they ignore you.”

comment by mukashi (adrian-arellano-davin) · 2022-06-23T21:37:21.350Z · LW(p) · GW(p)

I agree with many of those tweets. Many of them had actual good points.

↑ comment by Lone Pine (conor-sullivan) · 2022-06-24T10:07:10.955Z · LW(p) · GW(p)

I just learned I'm a based effective accelerationist.

comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2022-09-06T20:22:12.581Z · LW(p) · GW(p)

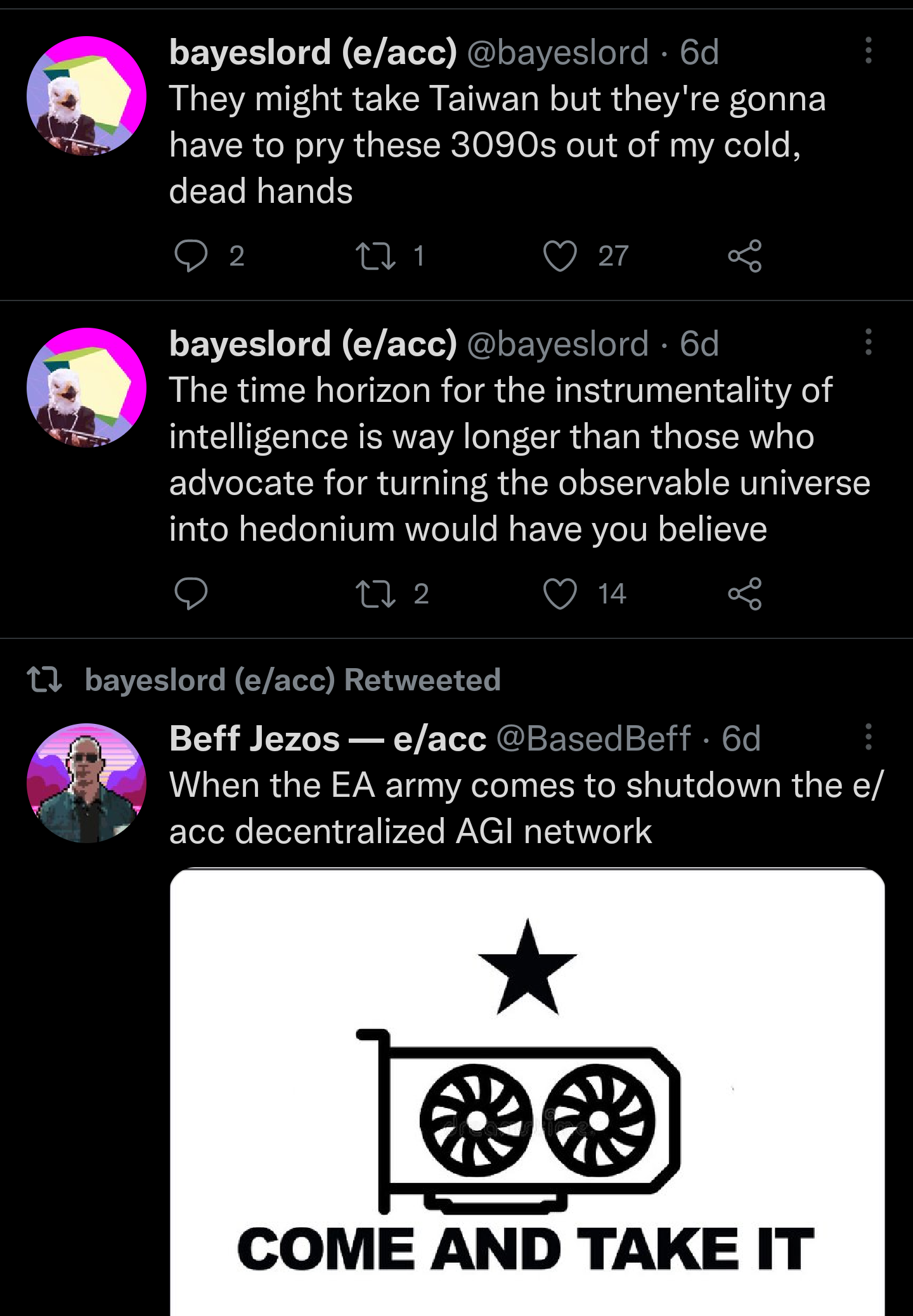

I suspect e/acc is some sort of sock puppet op / astroturfing or something, and I've been trying to avoid signal boosting them.

↑ comment by dkirmani · 2022-09-07T06:13:09.531Z · LW(p) · GW(p)

I'm Twitter mutuals with some of these e/acc people. I think that its founders and most (if not all) of its proponents are organic accounts, but it still might be a good idea to not signal-boost them.

↑ comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2022-09-07T12:10:56.607Z · LW(p) · GW(p)

What makes you think that? Where do you think this is coming from? It seems to have arrived too quickly (well, onto my radar at least) to be organic IMO, unless there is some real-world community involved, which there doesn't seem to be?

↑ comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2022-09-07T12:18:06.521Z · LW(p) · GW(p)

Here's a thing: https://beff.substack.com/p/notes-on-eacc-principles-and-tenets

> If you are interested in more content on e/acc, check out recent posts by Bayeslord and the original post by Swarthy, or follow any of us on Twitter as we often host Twitter spaces discussing these ideas.

Neither the original post nor @bayeslord seems to exist anymore (I found this was also the case for another account named something like "Bitalik Vuterin"). Seems fishy. I suspect at least that someone "e/acc" is trying to look like more people than they are. Not sure what the base rate for this sort of stuff is, though.

↑ comment by dkirmani · 2022-09-07T16:17:35.763Z · LW(p) · GW(p)

Much of why my priors say that the e/acc thing is organic is just my gestalt impression of being on Twitter while it was happening. Unfortunately, that's not a legible source of evidence to people-who-aren't-me. I'll tell you what information I do remember, though:

- "Bitalik Vuterin" does not ring a bell, I don't think he was a very consequential figure to begin with.

- @BasedBeffJezos claims to be the same person as @BasedBeff, and claims that he was locked out of his @BasedBeff account on 2022-08-08 due to "misinformation", which he attributes to "blue checkmarks and scared EAs".

- I'm only like 85% certain about this claim, but I think @bayeslord made an "I quit" thread where he claimed that he was actually kinda sympathetic to EA all along, and e/acc was more of a joke, or maybe that it was actually meant to strengthen EA by red-teaming it. I'm even less certain about this next claim (~55%), but I think he mentioned getting an overwhelming amount of DMs from EAs trying to debate him.

↑ comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2022-09-07T21:42:36.800Z · LW(p) · GW(p)

> - "Bitalik Vuterin" does not ring a bell, I don't think he was a very consequential figure to begin with.

I think it was something else like that, not that.

comment by Tomás B. (Bjartur Tómas) · 2022-06-23T15:39:54.572Z · LW(p) · GW(p)

I'm not sure it's productive to engage with this stuff. Taking a GRAND STAND may feel good, but in many cases people end up becoming useful foils. Block liberally, don't engage, focus on what actually matters.

↑ comment by dkirmani · 2022-06-23T16:11:30.014Z · LW(p) · GW(p)

I'm not necessarily advocating for direct engagement! If engagement with this stuff won't decrease AI risk, then I don't want to engage. If it does, then I do. Some of these people/orgs are influential (Venkatesh Rao, HuggingFace), so unfortunately, their opinions do actually matter. As nice as it would feel to ignore the haters, public opinion is in fact a strategic asset when it comes to actually implementing AI safety proposals at major labs.

↑ comment by quanticle · 2022-06-25T21:58:34.185Z · LW(p) · GW(p)

> Some of these people/orgs are influential (Venkatesh Rao, HuggingFace), so unfortunately, their opinions do actually matter.

Do you have any evidence that Venkatesh Rao is influential? I've never seen him quoted by anyone outside the rationality community.

comment by Charlie Steiner · 2022-06-23T19:15:57.720Z · LW(p) · GW(p)

I wonder what I expected to get out of this post - after all, I already don't use Twitter.

comment by Yair Halberstadt (yair-halberstadt) · 2022-06-23T17:22:22.687Z · LW(p) · GW(p)

I would expect you to be able to find these tweets, and hundreds more like them, no matter how good alignment optics are. A lot of people use Twitter, and I could probably find similar tweets about Mother Teresa or Princess Diana. As such, showing this doesn't actually tell us all that much TBH.

comment by Dagon · 2022-06-23T16:31:02.082Z · LW(p) · GW(p)

Practice rationalism on this. What predictions do you make, and what conditional predictions on whatever actions you're advocating? It feels a little like you're getting sucked into a status game by caring very much about who's saying what, rather than steelmanning the critiques and deciding if members of the EA community (disclosure: I am not one - I'm not part of sneerclub, but I do see the cult-like aspects of the bay-area subculture) should do anything differently. As in, should you behave differently, separately from should you participate in the signaling and public conversations around this kind of thing for status purposes?

Note also that the criticism is not purely wrong. "Revealed preferences say a lot" is a pretty compelling point.