The Mask Comes Off: At What Price?

post by Zvi · 2024-10-21T23:50:05.247Z · LW · GW · 16 comments

The Information reports that OpenAI is close to finalizing its transformation to an ordinary Public Benefit B-Corporation. OpenAI has tossed its cap over the wall on this, giving its investors the right to demand refunds with interest if they don’t finish the transition in two years.

Microsoft very much wants this transition to happen. They would be the big winner, with an OpenAI that wants what is good for business. This also comes at a time when relations between Microsoft and OpenAI are fraying, and OpenAI is threatening to invoke its AGI clause to get out of its contract with Microsoft. That type of clause is the kind of thing they’re doubtless looking to get rid of as part of this.

The $37.5 billion question is, what stake will the non-profit get in the new OpenAI?

For various reasons that I will explore here, I think they should fight to get quite a lot. The reportedly proposed quarter of the company is on the low end even if it was purely the control premium, and the board’s share of future profits is likely the bulk of the net present value of OpenAI’s future cash flows.

But will they fight for fair value? And will they win?

Table of Contents

- The Valuation in Question.

- The Control Premium.

- The Quest for AGI is OpenAI’s Telos and Business Model.

- OpenAI’s Value is Mostly in the Extreme Upside.

The Valuation in Question

Rocket Drew (The Information): Among the new details: After the split is finalized, OpenAI is considering creating a new board for the 501(c)3 charity that would be separate from the one that currently governs it, according to a person familiar with the plan.

If we had to guess, the current board, including CEO Sam Altman, will look for board of directors for the nonprofit who will stay friendly to the interests of the OpenAI corporation.

After the restructuring, the nonprofit is expected to own at least a 25% stake in the for-profit—which on paper would be worth at least $37.5 billion.

…

We asked the California attorney general’s office, which has jurisdiction over the nonprofit, what the AG makes of OpenAI’s pending conversion. A spokesperson wrote us back to say the agency is “committed to protecting charitable assets for their intended purpose.”

There is a substantial chance the true answer is zero, as Sam Altman, it seems, intends to coup against the non-profit a third time, altering the deal further and replacing the board with whoever he wants, presumably giving him full control. What would California do about that?

There is also the question of what would happen with the US Federal Trade Commission inquiry into OpenAI and Microsoft potentially ‘distorting innovation and undermining fair competition,’ which to me looks highly confused but they are seemingly taking it seriously.

No matter the outcome on the control front, it still leaves the question of how much of the company the nonprofit should get. You can’t (in theory) take assets out of a 501(c)(3) without paying fair market value. And the board has a fiduciary duty to get fair market value. California also says it will protect the assets, whatever that is worth. And the IRS will need to be satisfied with the amount chosen, or else.

There is danger the board won’t fight for its rights, not even for a fair market value:

Lynette Bye: In an ideal world, the charity’s board would bring in valuation lawyers to argue it out with the for-profit’s and investors’ lawyers, until they agree on how to divvy up the assets. But such an approach seems unlikely with the current board makeup. “I think the common understanding is they’re friendly to Sam Altman and the ones who were trying to slow things down or protect the non-profit purpose have left,” Loui said.

The trick is, they have a legal obligation to fight for that value, and Bret Taylor has said they are going to do so, although who knows how hard they will fight:

Thalia Beaty (AP): Jill Horwitz, a professor in law and medicine at UCLA School of Law who has studied OpenAI, said that when two sides of a joint venture between a nonprofit and a for-profit come into conflict, the charitable purpose must always win out.

“It’s the job of the board first, and then the regulators and the court, to ensure that the promise that was made to the public to pursue the charitable interest is kept,” she said.

…

Bret Taylor, chair of the OpenAI nonprofit’s board, said in a statement that the board was focused on fulfilling its fiduciary obligation.

“Any potential restructuring would ensure the nonprofit continues to exist and thrive, and receives full value for its current stake in the OpenAI for-profit with an enhanced ability to pursue its mission,” he said.

Even if they are friendly to Altman, that is different from willingly taking on big legal risks.

The good news is that, at a minimum, OpenAI and Microsoft have hired investment banks to negotiate with each other. Microsoft has Morgan Stanley, OpenAI has Goldman Sachs. So, advantage OpenAI. But that doesn’t mean that Goldman Sachs is arguing on behalf of the board.

The Control Premium

So would 25% of OpenAI represent ‘fair market value’ of the non-profit’s current assets, as required by law?

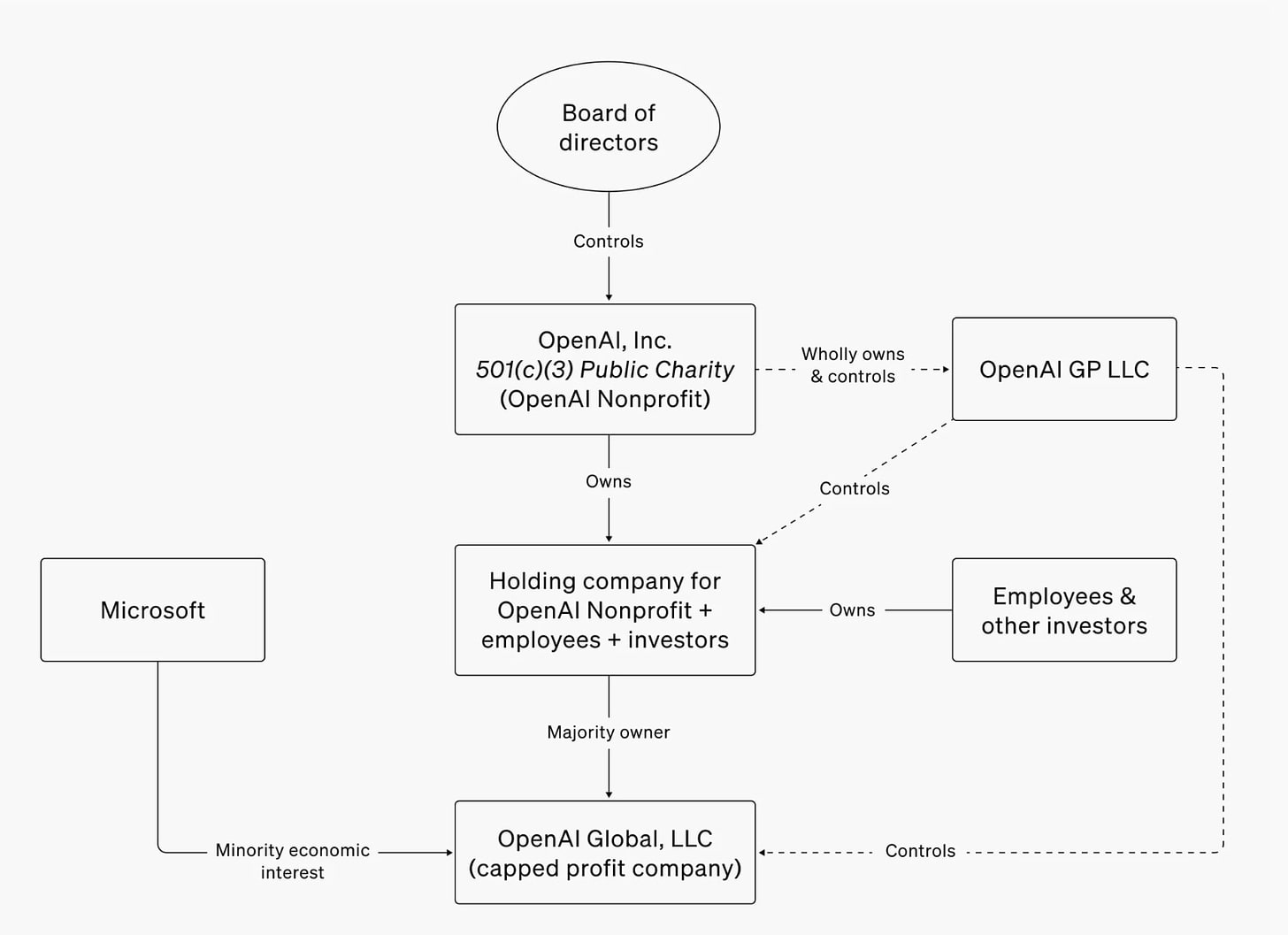

That question gets complicated, because OpenAI’s current structure is complicated.

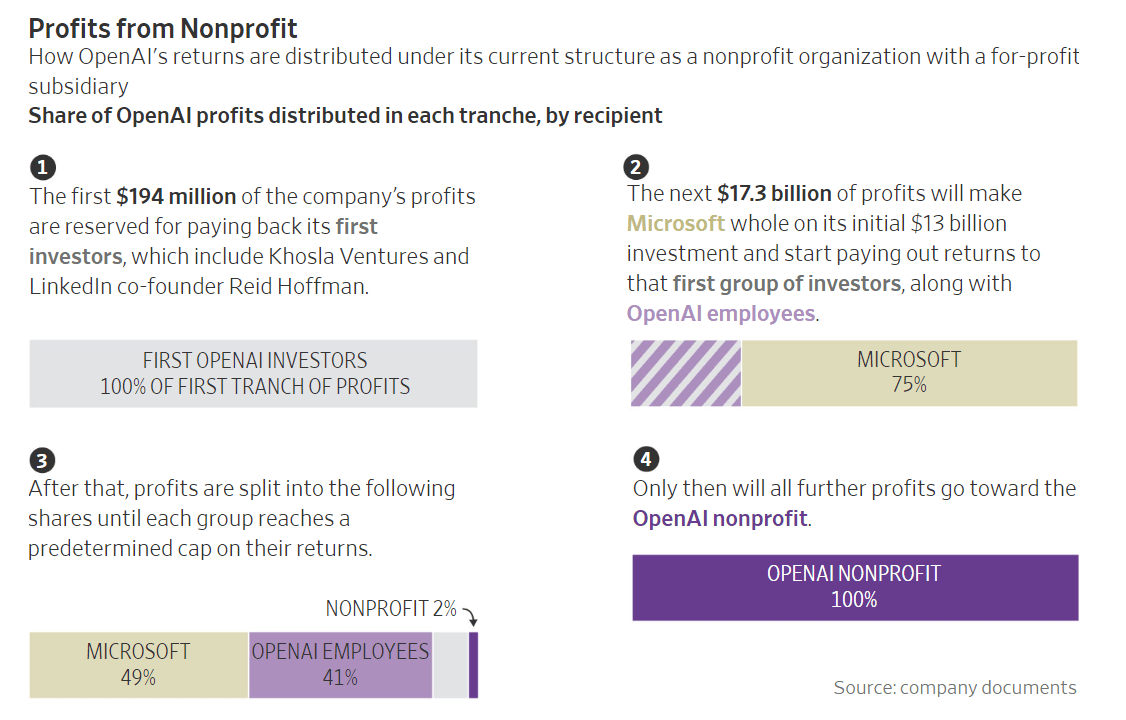

Or, from the WSJ, here is where the money goes:

Any profits would go first to for-profit equity holders in various configurations, whose gains are capped, and then the rest would go back to the non-profit, except if the ‘AGI clause’ is invoked, in which case it all goes back to the non-profit.
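The waterfall described above can be sketched in a few lines. This is a deliberately simplified illustration, not OpenAI's actual terms: it collapses the "various configurations" of capped holders into a single hypothetical aggregate cap (`total_cap`), treats invoking the AGI clause as sending everything to the non-profit, and borrows the roughly $272 billion figure from the Matt Levine estimate cited later in this post. The names `Payout` and `distribute` are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Payout:
    capped_holders: float  # paid to for-profit equity holders, in $ billions
    nonprofit: float       # paid to the non-profit, in $ billions


def distribute(profits: float, total_cap: float, agi_clause: bool = False) -> Payout:
    """Split cumulative profits under a single-tier version of the waterfall.

    Assumption: one aggregate cap stands in for the real, multi-tier caps,
    whose exact values are not public.
    """
    if agi_clause:
        # Simplification: once the clause is invoked, all profits go to the non-profit.
        return Payout(capped_holders=0.0, nonprofit=profits)
    # Capped holders are paid first, up to the cap; the remainder goes to the non-profit.
    to_holders = min(profits, total_cap)
    return Payout(capped_holders=to_holders, nonprofit=profits - to_holders)


if __name__ == "__main__":
    cap = 272.0  # illustrative aggregate cap, in $ billions
    for profits in (100.0, 272.0, 1000.0):
        print(profits, distribute(profits, cap))
    print("AGI clause:", distribute(100.0, cap, agi_clause=True))
```

The shape of the payoff is the point: below the cap the non-profit gets nothing, and above it the non-profit gets every additional dollar.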

The board would also be giving up its control over OpenAI. It would go from 100% of the voting shares to 25%. Control typically comes with a large cost premium. Control over OpenAI seems especially valuable in terms of the charitable purpose of the non-profit. One could even say in context that it is priceless, but that ship seems to have sailed.

According to Wikipedia, the control premium varies from 20% to 40% in business practice, depending on minority shareholders’ protections. In this case, it is clear that minority shareholders’ protections are currently extremely thin, so this would presumably mean at least a 40% premium. That’s 40% of the total baseline value of OpenAI, not the value of the non-profit’s share of the company. That’s on top of the value of their claims on the profits.
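As a rough back-of-the-envelope check on that arithmetic, here is a minimal sketch. It assumes the $157 billion valuation discussed later in this post as the baseline, applies the 20% to 40% range quoted above to the whole company, and uses a hypothetical helper named `control_premium`.

```python
# All figures in billions of dollars.
BASELINE_VALUE = 157.0        # valuation cited later in this post (assumed baseline)
PREMIUM_RANGE = (0.20, 0.40)  # typical range, per the Wikipedia figure quoted above


def control_premium(baseline_value: float, premium_rate: float) -> float:
    """Dollar value of control, taken as a fraction of the whole company's baseline value."""
    return baseline_value * premium_rate


low_premium = control_premium(BASELINE_VALUE, PREMIUM_RANGE[0])
high_premium = control_premium(BASELINE_VALUE, PREMIUM_RANGE[1])

# The reported $37.5 billion for a 25% stake implies this company-wide base.
implied_base = 37.5 / 0.25

print(f"Control premium at 20%: ${low_premium:.1f}B")
print(f"Control premium at 40%: ${high_premium:.1f}B")
print(f"Base implied by the reported 25% stake: ${implied_base:.1f}B")
```

Under these assumptions, a 40% premium alone comes to roughly $62.8 billion, which already exceeds the reported $37.5 billion stake before counting any claim on profits.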

OpenAI could have chosen to sidestep the control issue by giving the board a different class of shares that allow it to comfortably retain control over OpenAI, but it is everyone’s clear intention to strip control away from the board.

Lynette Bye attempts to analyze the situation, noting that no one has much of a clue. They suggest one potential upper bound:

Lynette Bye: The biggest clue comes from OpenAI’s recent tax filing, which claims that OpenAI does not have any “controlled entities,” as defined by the tax code. According to Rose Chan Loui, the director of UCLA Law’s non-profit program, this likely means that the non-profit has the right to no more than 50% of the company’s future profits. If that alone were the basis for its share of the for-profit’s value, that would cap the non-profit’s share of the valuation at $78.5 billion.

Claude thinks it is more complicated than that. In either case, the filing likely reflected what was convenient to represent to the government and investors – you don’t want prospective investors realizing a majority of future profits belong to the board, if that were indeed the case.

Lynette also says experts disagree on whether the control premium requires fair market compensation. I think it very obviously does require it – control is a valuable asset, both because people value control highly, and because control is highly useful to the non-profit mission. Again, pay me.

The Quest for AGI is OpenAI’s Telos and Business Model

What makes stock in the future OpenAI valuable?

One answer, same as any other investment, is that ‘other people will pay for it.’

That’s a great answer. But ultimately, what are all those people paying for?

Two things.

- Control. That’s covered by the control premium.

- The Net Present Value of Future Cash Flows.

So what is the NPV of future cash flows? What is the probability distribution of various potential cash flows? What is stock in OpenAI worth right now, if you were never allowed to sell it to a ‘greater fool’ and it never transitioned to a B-corp or changed its payout rules?

Well, actually… you can argue that the answer is nothing…

Was that not clear enough?

Well, okay. Not actually nothing. Things could change, and you could get paid then.

But the situation is actually rather grim.

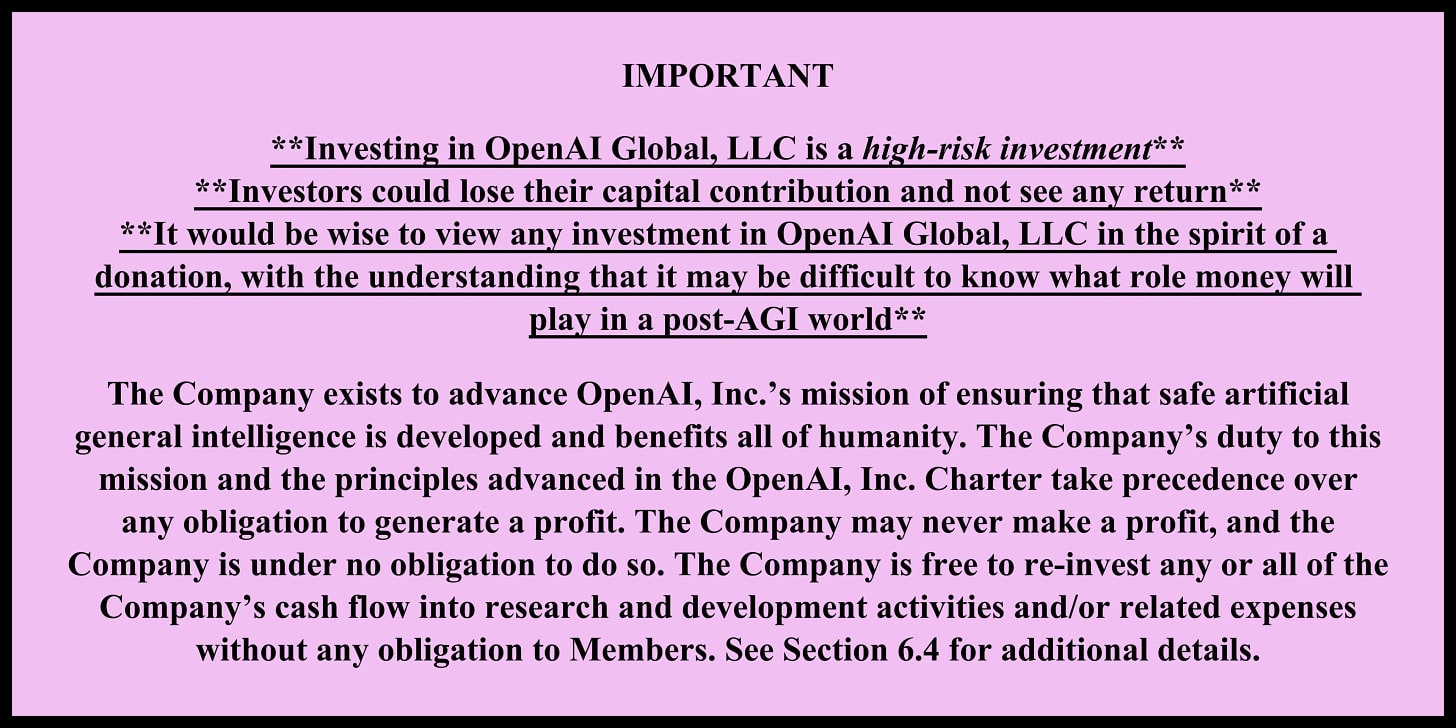

Sam Altman’s goal is to create safe AGI for the benefit of humanity. He says this over and over again. I disagree with his methods, but I do believe that is his central goal.

To the extent he also cares about other things, such as being the one to pick what it means to benefit humanity, I don’t think ‘maximizing profits’ is high on that list.

OpenAI’s explicit goal is also to create safe AGI for the benefit of humanity.

That is their business model. That is the ‘public benefit’ in the public benefit corporation. That is their plan. That is their telos.

Right now, OpenAI’s plan is:

1. Spend a lot of money to develop AGI first.

2. ???????? (ensure it is safe and benefits humanity, yes this should be step 1 not 2)

3. Profit. Maybe. If that even means anything at that point. Sure, why not.

If that last sentence sounds weird, go read the pink warning label again.

OpenAI already has billions in revenue. It plausibly has reasonable unit economics.

Altman is still planning to plow every penny OpenAI makes selling goods and services, and more, back into developing AGI.

If he believes he can ensure AGI is safe and benefits humanity (I have big doubts, but he seems confident), then this is the correct thing for Altman to be doing, even from a pure business perspective. That’s where the real value lies, and the amount of money that can go into research, including compute and even electrical power, is off the charts.

If OpenAI actually turned a profit after its investments and research, or was even seriously pivoting into trying, then that would be a huge red flag, the same way it would have been for an early Amazon or Uber. It would be saying they didn’t see a better use of money than returning it to shareholders.

OpenAI’s Value is Mostly in the Extreme Upside

What are the likely fates for OpenAI, for a common sense understanding of AGI?

I believe that case #1 here is most of why OpenAI is valuable now: If OpenAI successfully builds safe AGI, it is worth many trillions to the extent that one can put a cap on its value at all. If OpenAI fails to build safe AGI, it will be a pale shadow of that.

1. OpenAI charges headfirst to AGI, and succeeds in building it safely. Many in the industry expect this to happen soon, within only a few years – Altman said a few thousand days. The world transforms, and OpenAI goes from previously unprofitable due to reinvestment to an immensely profitable company. It is able to well exceed all its profit caps. Even if they pay out the whole waterfall to the maximum, the vast majority of the money still flows to the non-profit.

2. OpenAI charges headfirst into AGI, and succeeds in building it, but fails in ensuring it is safe. Tragedy ensues. OpenAI never turns a profit anyone gets to enjoy, whether or not humanity sticks around to recover.

3. OpenAI charges headfirst into AGI, and fails, because someone else gets to AGI substantially first and builds on that lead. OpenAI never turns a profit, whether or not things turn out well for humanity.

4. OpenAI charges headfirst into AGI, and fails, because no one develops AGI any time soon. OpenAI burns through its ability to raise money, and realizes its mission has failed. Talent flees. It attempts to pivot into an ordinary software company, up against a lot of competition, increasingly without much market power or differentiation as others catch up. OpenAI most likely ends up selling out to another tech company, perhaps with a good exit and perhaps not. It might melt away, as looked like might happen in the crisis last year. Or perhaps it successfully pivots and does okay, but it’s not world changing.

If you thought the bulk of the value here is in #4, and a pivot to an ordinary technology company, then your model strongly disagrees with those who founded and built OpenAI, and with the expectations of its employees. I don’t think Altman or OpenAI have any intention of going down that road anything other than kicking and screaming, and it will represent a failure of the company’s vision and business model.

Even in case #4, we’re talking about what Matt Levine estimates as a current profit cap of up to about $272 billion. I am guessing that is low, given the late investors are starting with higher valuations and we don’t know the profit caps. But even if we are generous, the result is the same.

If the company is worth – not counting the non-profit’s share! – already $157 billion or more, it should be obvious that most future profits still likely flow to the non-profit. There’s no such thing as a company with very high variance in outcomes, that is heavily in growth mode, that is worth well over $157 billion (since that $157 billion doesn’t include parts of the waterfall) where they don’t end up making trillions rather often. If you don’t think OpenAI is going to make trillions reasonably often, and also pay them out, then you should want to sell your stake, and fast.
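To see why a capped waterfall plus a fat right tail sends most expected profits to the non-profit, here is a toy calculation over the four cases above. Every probability and profit figure below is invented purely for illustration and is not an estimate from this post or anyone else; the only sourced number is the roughly $272 billion aggregate cap from the Matt Levine estimate. Profits are undiscounted, and the caps are collapsed into one tier.

```python
from typing import NamedTuple


class Scenario(NamedTuple):
    name: str
    probability: float       # hypothetical, for illustration only
    lifetime_profits: float  # $ billions, undiscounted, hypothetical


TOTAL_CAP = 272.0  # aggregate profit cap, in $ billions (Matt Levine's estimate)

# Made-up inputs: swap in your own beliefs.
SCENARIOS = [
    Scenario("1: safe AGI", 0.20, 5000.0),
    Scenario("2: unsafe AGI", 0.20, 0.0),
    Scenario("3: someone else gets there first", 0.30, 0.0),
    Scenario("4: no AGI, ordinary software company", 0.30, 200.0),
]


def nonprofit_share(scenarios: list[Scenario], total_cap: float) -> float:
    """Fraction of expected profits that flows past the cap to the non-profit."""
    expected_total = sum(s.probability * s.lifetime_profits for s in scenarios)
    if expected_total <= 0:
        raise ValueError("expected profits must be positive")
    # In each scenario the non-profit receives only what exceeds the cap.
    expected_nonprofit = sum(
        s.probability * max(s.lifetime_profits - total_cap, 0.0) for s in scenarios
    )
    return expected_nonprofit / expected_total


share = nonprofit_share(SCENARIOS, TOTAL_CAP)
print(f"Non-profit share of expected profits: {share:.1%}")
```

With these invented inputs the non-profit's share comes out well above half. The qualitative result holds whenever a meaningful probability sits on outcomes far above the cap.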

Do not be fooled into thinking this is an ordinary or mature business, or that AI is an ordinary or mature technology whose value is in various forms of mundane utility. OpenAI is shooting for the stars. As every VC in this spot knows, it is the extreme upside that matters.

That is what the nonprofit is selling. They shouldn’t sell it cheap.

The good news is that the people tasked with arguing this are, effectively, Goldman Sachs. It will be fascinating to see if suddenly they can feel the AGI.

16 comments

Comments sorted by top scores.

comment by jbash · 2024-10-22T00:59:47.856Z · LW(p) · GW(p)

- OpenAI charges headfirst to AGI, and succeeds in building it safely. [...] The world transforms, and OpenAI goes from previously unprofitable due to reinvestment to an immensely profitable company.

You need a case where OpenAI successfully builds safe AGI, which may even go on to build safe ASI, and the world gets transformed... but OpenAI's profit stream is nonexistent, effectively valueless, or captures a much smaller fraction than you'd think of whatever AGI or ASI produces.

Business profits (or businesses) might not be a thing at all in a sufficiently transformed world, and it's definitely not clear that preserving them is part of being safe.

In fact, a radical change in allocative institutions like ownership is probably the best case, because it makes no sense in the long term to allocate a huge share of the world's resources and production to people who happened to own some stock when Things Changed(TM). In a transformed-except-corporate-ownership-stays-the-same world, I don't see any reason such lottery winners' portion wouldn't increase asymptotically toward 100 percent, with nobody else getting anything at all.

Radical change is also a likely case[1]. If an economy gets completely restructured in a really fundamental way, it's strange if the allocation system doesn't also change. That's never happened before.

Even without an overtly revolutionary restructuring, I kind of doubt "OpenAI owns everything" would fly. Maybe corporate ownership would stay exactly the same, but there'd be a 99.999995 percent tax rate.

[1] Contingent on the perhaps unlikely safe and transformative parts coming to pass.

↑ comment by Thane Ruthenis · 2024-10-22T01:43:07.499Z · LW(p) · GW(p)

In a transformed-except-corporate-ownership-stays-the-same world, I don't see any reason such lottery winners' portion wouldn't increase asymptotically toward 100 percent, with nobody else getting anything at all.

Even without an overtly revolutionary restructuring, I kind of doubt "OpenAI owns everything" would fly. Maybe corporate ownership would stay exactly the same, but there'd be a 99.999995 percent tax rate.

Taxes enforced by whom?

↑ comment by jbash · 2024-10-22T02:49:05.878Z · LW(p) · GW(p)

Taxes enforced by whom?

Well, that's where the "safe" part comes in, isn't it?

I think a fair number of people would say that ASI/AGI can't be called "safe" if it's willing to wage war to physically take over the world on behalf of its owners, or to go around breaking laws all the time, or to thwart whatever institutions are supposed to make and enforce the laws. I'm pretty sure that even OpenAI's (present) "safety" department would have an issue if ChatGPT started saying stuff like "Sam Altman is Eternal Tax-Exempt God-King".

Personally, I go further than that. I'm not sure about "basic" AGI, but I'm pretty confident that very powerful ASI, the kind that would be capable of really total world domination, can't be called "safe" if it leaves really decisive power over anything in the hands of humans, individually or collectively, directly or via institutions. To be safe, it has to enforce its own ideas about how things should go. Otherwise the humans it empowers are probably going to send things south irretrievably fairly soon, and if they don't do so very soon they always still could, and you can't call that safe.

Yeah, that means you get exactly one chance to get "its own ideas" right, and no, I don't think that success is likely. I don't think it's technically likely to be able to "align" it to any particular set of values. I also don't think people or institutions would make good choices about what values to give it even if they could. AND I don't think anybody can prevent it from getting built for very long. I put more hope in it being survivably unsafe (maybe because it just doesn't usually happen to care to do anything to/with humans), or on intelligence just not being that powerful, or whatever. Or even in it just luckily happening to at least do something less boring or annoying than paperclipping the universe or mass torture or whatever.

↑ comment by RogerDearnaley (roger-d-1) · 2024-10-23T01:29:16.140Z · LW(p) · GW(p)

Yeah, that means you get exactly one chance to get "its own ideas" right, and no, I don't think that success is likely.

Not if you built a model that does (or on reflection decides to do) value learning: then you instead get to be its research subject and interlocutor while it figures out its ideas. But yes, you do need to start the model off close enough to aligned that it converges to value learning.

↑ comment by jbash · 2024-10-23T16:19:47.568Z · LW(p) · GW(p)

... assuming the values you want are learnable and "convergeable" upon. "Alignment" doesn't even necessarily have a coherent meaning.

Actual humans aren't "aligned" with each other, and they may not be consistent enough that you can say they're always "aligned" with themselves. Most humans' values seem to drive them toward vaguely similar behavior in many ways... albeit with lots of very dramatic exceptions. How they articulate their values and "justify" that behavior varies even more widely than the behavior itself. Humans are frequently willing to have wars and commit various atrocities to fight against legitimately human values other than their own. Yet humans have the advantage of starting with a lot of biological commonality.

The idea that there's some shared set of values that a machine can learn that will make everybody even largely happy seems, um, naive. Even the idea that it can learn one person's values, or be engineered to try, seems really optimistic.

Anyway, even if the approach did work, that would just mean that "its own ideas" were that it had to learn about and implement your (or somebody's?) values, and also that its ideas about how to do that are sound. You still have to get that right before the first time it becomes uncontrollable. One chance, no matter how you slice it.

↑ comment by RogerDearnaley (roger-d-1) · 2024-10-24T05:22:26.364Z · LW(p) · GW(p)

Actual humans aren't "aligned" with each other, and they may not be consistent enough that you can say they're always "aligned" with themselves.

Completely agreed, see for example my post "3. Uploading", which makes this exact point at length.

Anyway, even if the approach did work, that would just mean that "its own ideas" were that it had to learn about and implement your (or somebody's?) values, and also that its ideas about how to do that are sound. You still have to get that right before the first time it becomes uncontrollable. One chance, no matter how you slice it.

True. Or, as I put it just above:

But yes, you do need to start the model off close enough to aligned that it converges to value learning.

The point is that you now get one shot at a far simpler task: defining "your purpose as an AI is to learn about and implement the humans' collective values" is a lot more compact, and a lot easier to get right first time, than an accurate description of human values in their full large-and-fairly-fragile details. As I demonstrate in the post linked to in that quote, the former, plus its justification as being obvious and stable under reflection, can be described in exhaustive detail on a few pages of text.

As for the model's ideas on how to do that research being sound, that's a capabilities problem: if the model is incapable of performing a significant research project when at least 80% of the answer is already in human libraries, then it's not much of an alignment risk.

↑ comment by Noosphere89 (sharmake-farah) · 2024-10-22T15:05:14.335Z · LW(p) · GW(p)

I definitely agree that under the more common usage of safety that an AI doing what a human ordered in taking over the world or breaking laws for their owner would not be classified as safe, but in an AI safety context, alignment/safety does usually mean that these outcomes would be classified as safe.

My own view is that the technical problem is IMO shaping up to be a relatively easy problem, but I think that the political problems of advanced AI will probably prove a lot harder, especially in a future where humans control AIs for a long time.

↑ comment by jbash · 2024-10-22T15:58:01.989Z · LW(p) · GW(p)

I'm not necessarily going to argue with your characterization of how the "AI safety" field views the world. I've noticed myself that people say "maintaining human control" pretty much interchangeably with "alignment", and use both of those pretty much interchangeably with "safety". And all of the above have their own definition problems.

I think that's one of several reasons that the "AI safety" field has approximately zero chance of avoiding any of the truly catastrophic possible outcomes.

↑ comment by Noosphere89 (sharmake-farah) · 2024-10-22T17:21:50.676Z · LW(p) · GW(p)

I agree that the conflation between maintaining human control and alignment and safety is a problem, and to be clear I'm not saying that the outcome of human-controlled AI taking over because someone ordered it to do that is an objectively safe outcome.

I agree at present the AI safety field is poorly equipped to avoid catastrophic outcomes that don't involve extinction from uncontrolled AIs.

comment by eggsyntax · 2024-10-23T19:26:42.072Z · LW(p) · GW(p)

I realize this ship has sailed, but: I continue to be confused about how the non-profit board can justify giving up control. Given that their mandate[1] says that their 'primary fiduciary duty is to humanity' and to ensure that AGI 'is used for the benefit of all', and given that more control over a for-profit company is strictly better than less control, on what grounds can they justify relinquishing that control?

[EDIT: I realize that in reality they're just handing over control because Altman wants it, and they've been selected in part for willingness to give Altman what he wants. But it still seems like they need a justification, for public consumption and to avoid accusations of violating their charter and fiduciary duty.]

Am I just being insufficiently cynical here? Does everyone tacitly recognize that the non-profit board isn't really bound by that charter and can do whatever they like? Or is there some justification that they can put forward with a straight face?

Is it maybe something like, 'We trust that OpenAI the for-profit is primarily dedicated to the general well-being of humanity, and so nothing is lost by giving up control'? (But then it seems like more control is still strictly better in case it turns out that in some shocking and unforeseeable way the for-profit later has other priorities). Or is it, 'OpenAI the for-profit is doing good in the world, and they can do much more good if they can raise more money, and there's certainly no way they could raise more money without us giving up control'?

[1] I'm assuming here that their charter is the same as OpenAI's charter, since I haven't been able to find a distinct non-profit charter.

↑ comment by gwern · 2024-10-24T01:01:53.430Z · LW(p) · GW(p)

Or is it, 'OpenAI the for-profit is doing good in the world, and they can do much more good if they can raise more money, and there's certainly no way they could raise more money without us giving up control'?

Basically, yes, that is what the argument will be. The conditionality of the current investment round is also an example of that: "we can only raise more capital on the condition that we turn ourselves into a normal (B-corp) company, unencumbered by our weird hybrid structure (designed when we thought we would need OOMs less capital than it turns out we do), and free of the exorbitant Board control provisions currently governing PPUs etc. And if we can't raise capital, we will go bust soon and will become worthless and definitely lose the AGI race, and the Board achieves none of its fiduciary goals at all. Better a quarter of a live OA than 100% of a dead one."

↑ comment by eggsyntax · 2024-10-24T01:15:14.414Z · LW(p) · GW(p)

Possibly too cynical, but I find myself wondering whether the conditionality was in fact engineered by OAI in order to achieve this purpose. My impression is that there are a lot of VCs shouting 'Take my money!' in the general direction of OpenAI and/or Altman who wouldn't have demanded the restructuring.

↑ comment by Amalthea (nikolas-kuhn) · 2024-10-23T20:21:49.932Z · LW(p) · GW(p)

I believe the confusion comes from assuming the current board follows rules rather than doing whatever is most convenient.

The old board was trying to follow the rules, and the people in question were removed (technically were pressured to remove themselves).

↑ comment by eggsyntax · 2024-10-24T00:06:54.855Z · LW(p) · GW(p)

I agree that that's presumably the underlying reality. I should have made that clearer.

But it seems like the board would still need to create some justification for public consumption, and for avoiding accusations of violating their charter & fiduciary duty. And it's really unclear to me what that justification is.

comment by lsusr · 2024-10-24T22:11:53.983Z · LW(p) · GW(p)

If you don’t think OpenAI is going to make trillions reasonably often, and also pay them out, then you should want to sell your stake, and fast.

And vice-versa. I bought a chunk of Microsoft a while ago, because that was the closest thing I could do to buying stock in OpenAI.