How to solve the argument about what the algorithm should do

post by Yoav Ravid · 2021-01-15T06:08:15.627Z · LW · GW · 8 comments

Should YouTube's algorithm recommend conspiracy theory videos? Should Twitter recommend tweets that disagree with the WHO? Should Facebook recommend things that'll make you angry?

There are countless such questions about recommender algorithms, usually with good arguments on both sides.

But the elephant in the room where all these arguments take place is that the problem isn't so much how to design the algorithm as why we have only one choice.

My suggestion is that sites with recommender algorithms should have a system that lets everyone write their own algorithm, upload it, and use it instead of the company's algorithm, or use an algorithm uploaded by someone else.

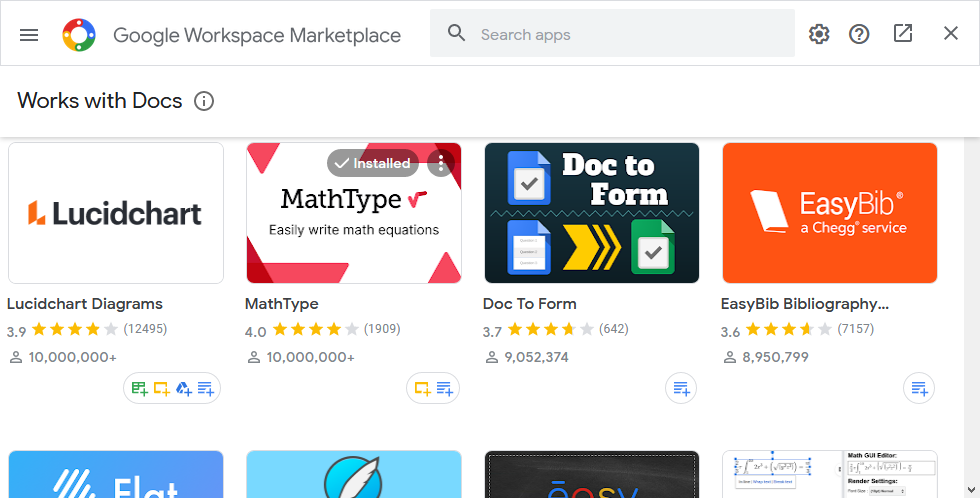

The algorithms would be selected through an add-on-shop-like interface, and could have further options for the users to tweak through a UI.
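To make the add-on idea a bit more concrete, here is a minimal sketch of what such a system could look like. Everything in it is an assumption made for illustration: the `Post` fields, the `Addon` record, the in-memory `SHOP` dictionary standing in for the add-on shop, and the `mute-words` example algorithm are all hypothetical, not any real platform's interface.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Post:
    author: str
    text: str
    timestamp: float  # seconds since epoch (assumed field)


# A feed algorithm takes the candidate posts plus the user's chosen
# options and returns the posts in display order.
FeedAlgorithm = Callable[[List[Post], Dict[str, object]], List[Post]]


@dataclass
class Addon:
    name: str
    run: FeedAlgorithm
    # Options a settings UI could expose for the user to tweak.
    default_options: Dict[str, object] = field(default_factory=dict)


SHOP: Dict[str, Addon] = {}  # hypothetical stand-in for the add-on shop


def publish(addon: Addon) -> None:
    """Upload an algorithm so that anyone can select it."""
    SHOP[addon.name] = addon


def build_feed(posts: List[Post], addon_name: str,
               user_options: Optional[Dict[str, object]] = None) -> List[Post]:
    """Run the selected add-on with its defaults overridden by user tweaks."""
    addon = SHOP[addon_name]
    options = {**addon.default_options, **(user_options or {})}
    return addon.run(posts, options)


def mute_words(posts: List[Post], options: Dict[str, object]) -> List[Post]:
    """Example add-on: drop posts containing muted words, newest first."""
    muted = [w.lower() for w in options["muted_words"]]
    kept = [p for p in posts if not any(w in p.text.lower() for w in muted)]
    return sorted(kept, key=lambda p: p.timestamp, reverse=True)


publish(Addon("mute-words", mute_words, {"muted_words": []}))
```

A user would pick `mute-words` in the shop and then adjust `muted_words` through the settings UI; the site only needs to call `build_feed` with that user's stored choice.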

This will move the argument from "How should the one algorithm be designed?" to the much easier problem of designing an algorithm you're happy with and uploading it, or finding one that was already uploaded.

When it comes to large companies like Facebook, YouTube, Instagram, Twitter, etc., I would force them to implement such a system and use a simple, unfiltered chronological ordering as the default in their feeds (then offer their own algorithm as one choice among the others).
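As a rough sketch of that default rule, assuming each post carries a timestamp and the platform has some engagement score of its own (both field names are made up here), the chronological ordering is what you get unless you explicitly pick something else, and the company's ranking is just another entry in the list:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class Post:
    post_id: int
    timestamp: float             # seconds since epoch (assumed field)
    predicted_engagement: float  # hypothetical score computed by the platform


Ranker = Callable[[List[Post]], List[Post]]


def chronological(posts: List[Post]) -> List[Post]:
    """No filtering: every post, newest first."""
    return sorted(posts, key=lambda p: p.timestamp, reverse=True)


def platform_ranker(posts: List[Post]) -> List[Post]:
    """Stand-in for the company's own engagement-driven ranking."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


RANKERS: Dict[str, Ranker] = {
    "chronological": chronological,
    "platform": platform_ranker,  # one choice among the others
}
DEFAULT_RANKER = "chronological"


def feed_for(posts: List[Post], user_choice: Optional[str] = None) -> List[Post]:
    """Use the user's choice; fall back to chronological if none or unknown."""
    ranker = RANKERS.get(user_choice or DEFAULT_RANKER, RANKERS[DEFAULT_RANKER])
    return ranker(posts)
```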

With everyone able to choose their own algorithms, we'll be one step closer to a social media landscape that works.

8 comments

Comments sorted by top scores.

comment by River (frank-bellamy) · 2021-01-15T16:09:41.219Z · LW(p) · GW(p)

It's an interesting idea, but I worry that it would only make these platforms more polarizing. I'm imagining a social justice recommender algorithm, which promotes the most extreme and censorious social justice positions, and maybe flags people taking not-so-woke positions (say against affirmative action) for unfriending/unfollowing. One could imagine something similar coming from the political right. And then these platforms become even more polarizing.

Replies from: Viliam, Yoav Ravid

↑ comment by Viliam · 2021-01-27T22:05:49.040Z · LW(p) · GW(p)

We have the following options:

- allow people to do what they want to do, and as a result many of them will do wrong things;

- have endless debates about what is the right thing; or

- decide from the position of power what is the right thing.

...or some combination of them. For example, from the position of power we could declare the set of permissible choices, and then allow people to choose freely within this set.

Bubbles or not bubbles, that's the same, but more meta. We could allow people to set their bubble coefficient individually, but what if some stupid people set it to 100% and are never confronted with our wisdom? Or we leave the choice to YouTube's algorithm.
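For what it's worth, an individually set bubble coefficient could be as simple as the sketch below. It assumes the candidate items have already been split into "in-bubble" and "out-of-bubble" lists by some other step, which is the hard part and is not shown; the function name and parameters are invented for this example.

```python
from typing import List, Sequence


def mix_feed(in_bubble: Sequence[str], out_of_bubble: Sequence[str],
             bubble_coefficient: float, slots: int) -> List[str]:
    """Fill `slots` feed positions, giving roughly `bubble_coefficient`
    of them to in-bubble items and the rest to out-of-bubble items."""
    if not 0.0 <= bubble_coefficient <= 1.0:
        raise ValueError("bubble_coefficient must be between 0 and 1")
    # Round half up so that 0.5 of 4 slots gives exactly 2.
    n_in = min(len(in_bubble), int(bubble_coefficient * slots + 0.5))
    n_out = min(len(out_of_bubble), slots - n_in)
    return list(in_bubble[:n_in]) + list(out_of_bubble[:n_out])
```

A coefficient of 1.0 is the "100%" case: the feed never contains anything from outside the bubble. Declaring a set of permissible choices from the position of power would amount to clamping the coefficient to some range before calling `mix_feed`.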

↑ comment by Yoav Ravid · 2021-01-16T07:35:08.870Z · LW(p) · GW(p)

That is indeed a potential problem, and another person I told this idea to was skeptical that people would choose the "healthy" algorithms instead of algorithms similar to the current ones that are more amusing.

I think it's hard to know what the outcome of this will be. I'm optimistic, but the pessimistic side definitely has merit too. This is in a way similar to the internet: people were very optimistic about what it could do but failed to see the potential problems it would create, and when social media finally came it showed a much sadder picture.

But, also like the internet, I think people should have the choice. I wouldn't want anyone to restrict the algorithms I can use because some people may choose algorithms that someone thinks are harmful.

At the end of the day, if someone is really motivated to be in an echo chamber, nothing's gonna stop them. This idea might help those who find themselves in echo chambers because of the current algorithm but would rather be out.

comment by SelectionMechanism · 2021-01-16T02:07:35.315Z · LW(p) · GW(p)

Current algorithms are made to maximize clicks, view time, engagement, etc.: things that result in ad revenue or in keeping you on for as long as possible (and thus, ad revenue). What would incentivize these companies to allow you to customize your algorithm in a way that would likely reduce your overall value to them?

As for the polarization problem - it’s bad enough already, and existing solutions don’t seem to be working very well to solve it. Might as well try new things. Nothing is as polarizing as cable news anyway.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2021-01-16T07:24:45.879Z · LW(p) · GW(p)

Right, I assumed it was common knowledge at this point that companies make their algorithms that way for this reason, and that they don't have an incentive to change them, so I didn't even mention it.

And this is true for any discussion about how the algorithm should be different: you either need to find a way to give the companies an incentive to make the change, try to compete with them, or force them to do it.

So I suggested solving this by forcing the big ones to do this by law (I don't think small social media sites should be regulated; the only ones that should be regulated are those that dominate the market through network effects, especially if they're utility-like).

comment by Measure · 2021-01-16T17:48:52.687Z · LW(p) · GW(p)

How would a third party create an alternative algorithm without all the analytics data about which people who watched a video watched which other videos, etc.? Would YouTube be forced to release this info to third parties for this purpose?

Replies from: mr-hire, Yoav Ravid

↑ comment by Matt Goldenberg (mr-hire) · 2021-01-16T17:54:19.586Z · LW(p) · GW(p)

I suspect that the data would be a black box, and you'd have to make an algorithm that just took in that data and spit out results.

A more sophisticated scheme could use homomorphic encryption, which allows computations over the data without revealing the data itself.
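To give a feel for what "computations over the data without revealing it" means, here is a toy sketch of the Paillier cryptosystem, which is only additively homomorphic: encrypted numbers can be summed, nothing more. A real recommender would need a far more capable (and far more expensive) scheme, so treat this purely as an illustration. The primes are deliberately tiny and insecure, and all names are chosen for this example.

```python
import math
import secrets

# Two well-known Mersenne primes; far too small for real security.
P = 2**31 - 1
Q = 2**61 - 1
N = P * Q                # public modulus
N_SQ = N * N
G = N + 1                # standard simple choice of generator
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # private: lcm(P-1, Q-1)
MU = pow(LAM, -1, N)     # private: modular inverse (Python 3.8+)


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N using only the public values N and G."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Decrypt with the private values LAM and MU."""
    return ((pow(c, LAM, N_SQ) - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % N_SQ


# Whoever runs this loop sums the watch counts without ever seeing them.
watch_counts = [3, 5, 10]
encrypted_total = encrypt(0)
for count in watch_counts:
    encrypted_total = add_encrypted(encrypted_total, encrypt(count))
```

Only the holder of the private values can call `decrypt(encrypted_total)` and learn the sum; the party doing the additions sees nothing but ciphertexts.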

↑ comment by Yoav Ravid · 2021-01-16T18:23:47.336Z · LW(p) · GW(p)

My technical knowledge is limited, so I didn't try to go into implementation details. But just thinking out loud about options:

- The company would run the code itself (technically solves the problem really easily, but then running someone else's code is a big no-no, so that's probably not a viable direction)

- The company would create an API for these algorithms to use (probably also solves the problem, but severely limits the freedom of the third party)

- The data would be sent to the user, and the algorithm would run client-side (though it's possibly too much data to send to the client, or running the algorithm would require too much computing power)

I expect someone more knowledgeable than myself to have a much better idea of the possibilities and the difficulties.
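As a very rough sketch of the API option, assuming the platform is willing to expose aggregated co-watch counts rather than raw viewing logs: the class and field names below are hypothetical and do not correspond to any real YouTube API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class VideoSummary:
    video_id: str
    upload_time: float
    co_watch_count: int  # viewers of the seed video who also watched this one


class RecommenderAPI:
    """Hypothetical narrow interface: third parties see aggregates only."""

    def __init__(self, watch_log: Dict[str, List[str]],
                 uploads: Dict[str, float]) -> None:
        self._watch_log = watch_log  # user_id -> video_ids; stays private
        self._uploads = uploads      # video_id -> upload time

    def candidates(self, seed_video: str) -> List[VideoSummary]:
        """Videos watched by people who also watched `seed_video`."""
        counts: Dict[str, int] = {}
        for videos in self._watch_log.values():
            if seed_video in videos:
                for v in set(videos) - {seed_video}:
                    counts[v] = counts.get(v, 0) + 1
        return [VideoSummary(v, self._uploads[v], n) for v, n in counts.items()]


def third_party_ranker(api: RecommenderAPI, seed_video: str,
                       limit: int = 3) -> List[str]:
    """A third-party algorithm that can only use what the API returns."""
    ranked = sorted(api.candidates(seed_video),
                    key=lambda s: (-s.co_watch_count, s.video_id))
    return [s.video_id for s in ranked[:limit]]
```

This shows the trade-off from the second bullet: the ranker never touches individual users' histories, but it also can't use any signal the API doesn't choose to expose.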