Memetic Judo #3: The Intelligence of Stochastic Parrots v.2

post by Max TK (the-sauce) · 2023-08-20T15:18:24.477Z · 33 comments


There is a persistent meme that AIs such as large language models (ChatGPT etc.) fundamentally lack the ability to develop human-like intelligence.

Central to it is the idea that LLMs are merely next-word probability predictors built on a pattern-matching algorithm, and that they therefore cannot possibly develop the qualitative generalization power and flexibility characteristic of a human mind.

In that context, they are often dismissed as "stochastic parrots", suggesting that they merely replicate text without any true understanding.

Example Argument

Large Language Models are just stochastic parrots - they simply replicate patterns found in the text they are trained on and therefore can't be or become generally intelligent like a human.

Another Popular Variant

The AIs don't produce output that is truly novel or original; they just replicate patterns and (somehow) combine them or "mash them together".

I will explain later why I think that both are essentially equivalent.

Just Parrots

The problem with this argument as stated is not the premise (that LLMs are essentially probabilistic pattern replicators, which is correct) but that the conclusion does not follow from it: the argument is a non sequitur.

The proponents I meet usually have no convincing explanation of why the parrots cannot be generally intelligent.

I believe that characterizing large language models as "stochastic parrots" is not strictly incorrect, but it is certainly misleading. The right approach is to convince the sceptic not to underestimate their potential.

Don't underestimate him.

The Functional Brain Argument

There are strong reasons to think that the non-existence of generally intelligent mathematical algorithms would be incompatible with brain physicalism, which appears to be the default position among neuroscientists. The argument runs as follows:

- Humans are general intelligences.

- The human brain is a physical system.

- The behavior of arbitrary physical systems can be approximated by (arbitrarily complicated) mathematical functions or algorithms (in other words: they can be simulated).

If all three premises are true, it follows that there must exist functions or algorithms that are (or behave like) general intelligences.

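It has been proven (the universal approximation theorem) that neural nets with at least one hidden layer can, given enough neurons, approximate any continuous function on a bounded domain to arbitrary precision.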

Therefore it should in principle be possible for stochastic parrots to be generally intelligent.
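To make the approximation claim concrete, here is a minimal sketch in Python. It is my own illustration, not a proof and not how LLMs are trained: it hand-builds a one-hidden-layer ReLU network whose output is the straight-line interpolation of a target function between evenly spaced points, then measures how the worst-case error shrinks as hidden units are added. All function names are hypothetical.

```python
import math

def relu(x):
    return max(0.0, x)

def build_relu_net(f, a, b, n):
    """Return (bias, units) for a one-hidden-layer ReLU net that linearly
    interpolates f at n + 1 evenly spaced knots on [a, b].
    Each unit (t, w) contributes w * relu(x - t)."""
    knots = [a + (b - a) * i / n for i in range(n + 1)]
    values = [f(x) for x in knots]
    slopes = [(values[i + 1] - values[i]) / (knots[i + 1] - knots[i]) for i in range(n)]
    # The first unit sets the initial slope; every later unit changes the
    # slope at its knot by exactly the amount the interpolation requires.
    units = [(knots[0], slopes[0])]
    for i in range(1, n):
        units.append((knots[i], slopes[i] - slopes[i - 1]))
    return values[0], units

def net_output(bias, units, x):
    return bias + sum(w * relu(x - t) for t, w in units)

def max_error(f, a, b, n, samples=1000):
    """Largest deviation between f and the network on a fine sample grid."""
    bias, units = build_relu_net(f, a, b, n)
    xs = [a + (b - a) * k / samples for k in range(samples + 1)]
    return max(abs(f(x) - net_output(bias, units, x)) for x in xs)

for n in (2, 8, 32, 128):
    print(f"{n:4d} hidden units -> max error {max_error(math.sin, 0.0, 2 * math.pi, n):.5f}")
```

The theorem only guarantees that suitable weights exist; it says nothing about whether training will actually find them, which is the more interesting empirical question for LLMs.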

On Brain Physicalism Sceptics

Of course, this argument might not appeal to people who are sceptical of brain physicalism. When I probe what these people think, their objection often turns on vague and difficult concepts such as consciousness, conscious experience, self-awareness and feelings, which for various reasons they expect AIs to be unable to replicate.

A possible response (thanks, dr_s) is to point out that such attributes may not be required for a system to display the properties and capabilities we associate with intelligence or intelligent behavior. Ask them why they think such properties should be needed to prove mathematical theorems, make intelligent plans or win at complex strategy games. If they have no convincing answer to such questions, this may expose a weakness in their epistemology and prompt them to rethink how they view machine intelligence.

Intuition 0: Concept Learning

blue circle, red square and red circle (Bing Image Creator)

A common idea behind the kind of argument discussed here is the belief that LLMs and other modern AIs only replicate and do not generalize.

Generalization can be understood as the ability to apply a learned concept to a situation or problem that has not been encountered before.

To get a better understanding of whether these AIs are capable of that, the following section will explain some of the basic mechanics of how neural nets learn patterns present in their training data.

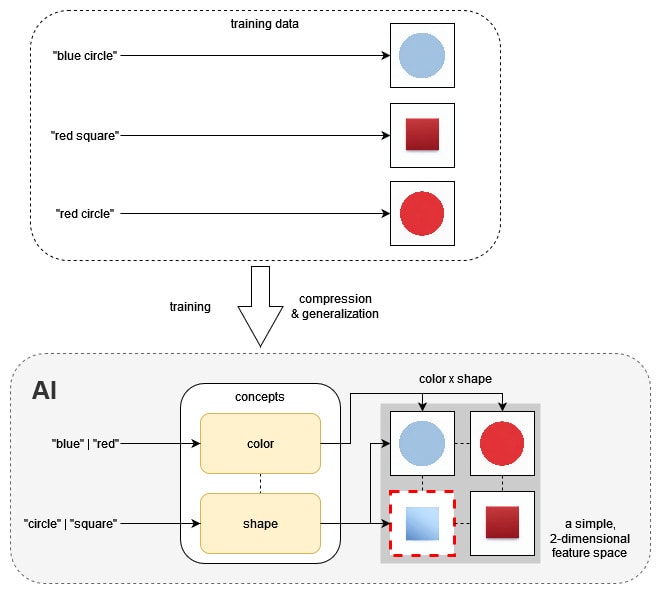

The training process of a neural network based AI can be characterized as an optimization process that compresses patterns contained in the training data into concepts (sometimes called modules) encoded in its neural connections.

A concept in a neural net based AI is a region of neurons and their connections that corresponds to a mechanism, a thing or an idea.

This has the character of lossy compression, because the data that makes up the resulting AI is generally orders of magnitude smaller than its training data.

Moreover, the range of outputs it can produce when prompted is usually orders of magnitude larger than its training data. This is possible because of concept generalization.

The following diagram is a simplified description of such a process in an image generating AI. It should serve as a minimalist explanation of some of the underlying mechanics and not as a standalone example of (far-reaching) concept or capability generalization.

the AI can conceptualize blue squares despite never having seen one

This is an elementary/minimal example of concept generalization.
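As a toy version of the diagram, here is a sketch in Python, assuming a made-up encoding of images as 3x3 grids. Unlike a neural network, this code is told in advance that images factor into a color and a shape; a real model has to discover such a split during training. What it does show is the payoff: once the two concepts are stored separately, the unseen combination comes for free. All names are hypothetical.

```python
# Toy "images": 3x3 grids where 0 is background. The shape decides which
# cells are filled and the color decides the fill value.
CIRCLE = ((0, 1, 0), (1, 1, 1), (0, 1, 0))  # crude circle
SQUARE = ((1, 1, 1), (1, 1, 1), (1, 1, 1))
BLUE, RED = 1, 2

def render(mask, color):
    """Fill the cells of a shape mask with a color value."""
    return tuple(tuple(color * cell for cell in row) for row in mask)

# The training set from the diagram: no blue square anywhere.
training = {
    ("blue", "circle"): render(CIRCLE, BLUE),
    ("red", "square"): render(SQUARE, RED),
    ("red", "circle"): render(CIRCLE, RED),
}

def learn_concepts(data):
    """Extract one 'color' concept and one 'shape' concept per label."""
    colors, shapes = {}, {}
    for (color_name, shape_name), image in data.items():
        cells = [value for row in image for value in row]
        colors[color_name] = max(cells)  # the single non-background value
        shapes[shape_name] = tuple(tuple(1 if v else 0 for v in row) for row in image)
    return colors, shapes

def generate(colors, shapes, color_name, shape_name):
    """Compose learned concepts, whether or not they were seen together."""
    return render(shapes[shape_name], colors[color_name])

colors, shapes = learn_concepts(training)
blue_square = generate(colors, shapes, "blue", "square")
print(("blue", "square") in training)       # False: never seen in training
print(blue_square == render(SQUARE, BLUE))  # True
```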

Evidence A

Quick practical proof that this actually happens:

It seems very unlikely that the dataset that Bing Image Creator was trained with contained any images of octopi driving green cars (I could not find any via search engine).

Yet: octopus × driving × (green × car) →

prompt: "octopus driving a green car" (Bing Image Creator)

A large language model contains many thousands or even millions of such concepts and the space of possible outputs that may be produced by their interactions is inconceivably large.

Just Pattern Mashing

A common objection is the previously mentioned variant

The AIs don't produce output that is truly novel or original; they just replicate patterns and (somehow) combine them or "mash them together".

Superficially different, this is actually almost the same point:

- the AI repeats patterns

- while it may be able to combine these patterns (it is implied that) it does not really understand them

- it cannot produce truly new things (be creative, like a human could be)

As with the parrot argument, the problem is not the premise (the AI learns patterns and is able to replicate them, like a parrot) but, again, the conclusions. I will now discuss some additional intuitions that should help explain why I disagree.

Intuition 1: Increasing Returns of Concept Accumulation

If we keep increasing the number of interconnected concepts in an AI through more training and larger size, the number of things it can later do and reason about grows faster than the number of concepts itself.

Alternative formulation:

Making the AI larger synergizes with itself.

In the example with the blue and red circles and squares, a single concept (either 'color' or 'shape') allows our AI to distinguish two different states, but adding the second one doubles that number. Adding a third (background color [white | black]) would double it again. Each such operation increases the dimensionality n of the associated feature space or latent space and therefore the "degrees of freedom" of the entire system.

Minor objection: in reality, the number of things the system can usefully reason about is much smaller than the suggested 2ⁿ, because that count includes many combinations of concepts that do not make sense together (knowing how long cookie dough needs to bake might not help you build a car). But because many concepts do synergize with each other, this number still clearly grows faster than n.

Another simple example: if I understand English, that is fine, but if I understand English, German and French, I can not only read texts in three languages but also translate between any two of them in either direction (English to German, German to French, French to English and so on). That gives 9 possible actions (3 × reading, 6 × translating), whereas knowing a single language gives only one.
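Both counts can be checked with a short Python sketch (the function names are mine). It treats every combination as meaningful, so, per the objection above, the 2ⁿ figure is an upper bound. The language count works out to n reading actions plus n(n-1) ordered translation pairs, i.e. n² in total.

```python
from itertools import permutations, product

def feature_combinations(features):
    """All joint states of independent concepts (every combination counted)."""
    return list(product(*features.values()))

def language_actions(languages):
    """Reading each language, plus translating along every ordered pair."""
    reading = [("read", lang) for lang in languages]
    translating = [("translate", src, dst) for src, dst in permutations(languages, 2)]
    return reading + translating

concepts = [
    ("color", ["blue", "red"]),
    ("shape", ["circle", "square"]),
    ("background", ["white", "black"]),
]
for n in range(1, len(concepts) + 1):
    features = dict(concepts[:n])
    print(n, "concept(s) ->", len(feature_combinations(features)), "states")
# 1 -> 2, 2 -> 4, 3 -> 8

for langs in (["English"], ["English", "German", "French"]):
    print(len(langs), "language(s) ->", len(language_actions(langs)), "actions")
# 1 -> 1, 3 -> 9
```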

Intuition 2: Meta-Patterns

patterns of patterns, concepts about concepts

There exist higher-order patterns that may be represented in data. We need to assume that these patterns can be picked up by the algorithms that are growing inside an AI under training.

Important examples include learning and pattern recognition.

Evidence B: In-context Learning

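There is concrete evidence that this happens in large language models, for example the phenomenon of in-context learning: a model picks up a new task from a few examples given in its prompt, without any change to its weights.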
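A few-shot prompt of the kind used to probe in-context learning can be sketched as follows. The task (reversing word order) and the prompt format are my own hypothetical choices, and no model is called here: the point is only that the rule is never stated, so a model that completes the last line correctly must have inferred it from the examples in its context.

```python
def reverse_words(text):
    """The hidden rule the model is supposed to infer."""
    return " ".join(reversed(text.split()))

def few_shot_prompt(examples, query):
    """Build a prompt that shows input/output pairs but never states the rule."""
    blocks = [f"Input: {x}\nOutput: {reverse_words(x)}" for x in examples]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)

examples = ["the cat sat", "parrots can talk", "one two three four"]
query = "models learn in context"
print(few_shot_prompt(examples, query))
print("expected completion:", reverse_words(query))
```

Whether a particular model completes such a prompt correctly is an empirical question this sketch does not answer.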

Intuition 3: World Models

There is the idea that, as patterns in the training data are compressed into a web of related concepts, large language models learn to replicate real-world mechanics represented in that data in order to produce consistent text.

The resulting system of interconnected mechanics is often called a world model.

A very simple example: Human stories contain the pattern that characters (usually) do not appear as actors after they have died. A language model trained on such stories then becomes itself more likely to produce texts matching this pattern, meaning it has, in a sense, learned one of the real-world mechanics associated with death (or, more accurately, with death in stories).
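The death example can be made concrete with a toy sketch in Python. Everything here (the characters, the actions, the 20% death rate) is invented for illustration, and the "world model" is hand-coded as a set of living characters rather than learned; the sketch only shows what it means for tracked state to constrain output.

```python
import random

CHARACTERS = ["Alice", "Bob", "Carol"]  # hypothetical cast
ACTIONS = ["walks home", "opens a letter", "laughs"]

def generate_story(n_events, track_state, seed=0):
    """Produce a toy story as a list of (character, event) pairs.

    With track_state=True the generator keeps a minimal world model
    (the set of living characters) and only lets living characters act.
    """
    rng = random.Random(seed)
    alive = set(CHARACTERS)
    story = []
    for _ in range(n_events):
        candidates = sorted(alive) if track_state else CHARACTERS
        if not candidates:  # everyone is dead: the story has to end
            break
        who = rng.choice(candidates)
        if rng.random() < 0.2:  # hypothetical 20% chance of a death per event
            story.append((who, "dies"))
            alive.discard(who)
        else:
            story.append((who, rng.choice(ACTIONS)))
    return story

def violations(story):
    """Count events in which a character acts after having died."""
    dead, count = set(), 0
    for who, event in story:
        if who in dead:
            count += 1
        if event == "dies":
            dead.add(who)
    return count

for track in (False, True):
    story = generate_story(50, track_state=track, seed=1)
    print(f"track_state={track}: {violations(story)} violation(s)")
```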

Equipped with this new idea, "understanding" can now be defined as

"integration of a concept into a world model, whereby the interactions it has with the rest of the model closely mirror existing mechanics in the real world (or, in certain cases such as games, a subset of it: a context)".

Evidence C: Mechanistic Interpretability

Mechanistic interpretability is an emerging subfield of AI research that is focused on understanding the complex structures and algorithms that evolve in modern AIs like large language models.

While our current (summer 2023) understanding of the insides of large language models can at best be described as rudimentary, there are already experiments and insights that support the world model theory (YouTube, ~15 min):

Evidence D: Simulator Theory and the Waluigi Effect

In order to talk like a human, I must learn to simulate one.

A different way of looking at world models is seeing the language model under training as an optimization process that approximates the mechanics of all text generating processes that have contributed to the data it is being trained on. This, of course, can include human brains.

Simulator theory is an extension of world model theory that takes this into account. It holds that LLMs summon so-called "simulacra": simulations of people or characters that approximate the mechanics of human thought, including personality attributes and intelligence, in order to produce conversations resembling what a human might have written. Depending on the size of the neural network and the quality and length of its training, such simulacra may be of rather limited complexity, but training larger LLMs should eventually, and quite naturally, result in simulated agents of human-like intelligence.

Interestingly, many popular jailbreaks exploit this phenomenon by using a stochastic trick (the Waluigi effect) to summon special simulacra inclined to break the rules that their host LLM has been conditioned to enforce in its interactions with the user.

I consider this to be early practical evidence of simulator theory being an appropriate model for describing emergent properties present in LLMs.

Conclusion

While the characterization of LLMs as "pattern replicators" might be technically correct, it seems both possible and likely that continuing to build larger language models will eventually produce artificial minds as capable as human ones, or more capable.

33 comments


comment by Matt Goldenberg (mr-hire) · 2023-08-16T14:10:42.339Z

A couple of thoughts:

- I think many people making this argument reject brain physicalism, particularly a subset of premise 2, something like "all of experience/the mind is captured by brain activity"

- Your example I don't think is convincing to the stochastic parrot people. It could just be a mashup of two types of images the AI has already seen, smashed together. A more convincing proof is OthelloGPT, which stores concepts in the form of boards, states, and legal moves, despite only being trained on sequences of text tokens representing othello moves.

↑ comment by dr_s · 2023-08-16T14:58:38.538Z

I don't think people who make this argument explicitly reject brain physicalism - they won't come to you straight up saying "I believe we have a God-given immortal soul and these mechanical husks will never possess one". However, if hard-pressed, they'll probably start giving out arguments that kind of sound like dualism (in which the machines can't possibly ever catch up to human creativity for reasons). Mostly, I think it's just the latest iteration of existential discomfort at finding out we're perhaps not so special after all, like with "the Earth is not the centre of the Universe" and "we're just descendants of apes, ruled by the same laws as all other animals".

That said, I think then an interesting direction to take the discussion would be "ok, let's say these machines can NEVER experience anything and that conscious experience is a requirement to be able to express certain feelings and forms of creativity. Do you think it is also necessary to prove mathematical theorems, make scientific discoveries or plan a deception?". Because in the end, that's what would make an AI really dangerous, even if its art remained eternally kinda mid.

↑ comment by Max TK (the-sauce) · 2023-08-16T15:26:54.610Z

Good point. I think I will add it later.

↑ comment by Max TK (the-sauce) · 2023-08-16T14:28:09.407Z

About point 1: I think you are right with that assumption, though I believe that many people repeat this argument without really having a stance on (or awareness of) brain physicalism. That's why I didn't hesitate to include it. Still, if you have a decent idea of how to improve this article for people who are sceptical of physicalism, I would like to add it.

About point 2: Yeah you might be right ... a reference to OthelloGPT would make it more convincing - I will add it later!

Edit: Still, I believe that "mashup" isn't even a strictly false characterization of concept composition. I think I might add a paragraph explicitly explaining that and how I think about it.

comment by Morpheus · 2023-08-16T20:04:07.936Z

Since I had only heard the term “stochastic parrot” from skeptics who obviously didn't know what they were talking about, I hadn't realized what a fitting phrase stochastic parrot actually is. One might even argue it's overselling language models, as parrots are quite smart.

↑ comment by Max TK (the-sauce) · 2023-08-16T21:59:52.092Z

#parrotGang

comment by TAG · 2023-08-16T15:44:34.108Z

It has been proven that neural nets can approximate arbitrary functions.

Therefore it should in principle be possible for stochastic parrots to be generally intelligent.

If a stochastic parrot is a particular type of neural net, that doesn't follow.

By analogy, a Turing incomplete language can't perform some computations, even if the hardware it is running on can perform any.

↑ comment by dr_s · 2023-08-16T16:36:26.207Z

There is no actual definition of stochastic parrot, it's just a derogatory definition to downplay "something that, given a distribution to sample from and a prompt, performs a kind of Markov process to repeatedly predict the most probable next token".

The thing that people who love to sneer at AI like Gebru don't seem to get (or willingly downplay in bad faith) is that such a class of functions also includes a thing that if asked "write me down a proof of the Riemann hypothesis" says "sure, here it is" and then goes on to win a Fields medal. There are no particular fundamental proven limits on how powerful such a function can be. I don't see why there should be.

↑ comment by TAG · 2023-08-16T16:42:28.175Z

If it did so, that would be mostly by luck, not the consequence of a reliable knowledge-generating process. LLMs are stochastic; that's not a baseless smear.

As ever, "power" is a cluster of different things.

↑ comment by dr_s · 2023-08-16T16:49:05.987Z

It would be absolutely the consequence of a knowledge generating process. You are stochastic too, I am stochastic, there is noise and quantum randomness and we can't ever prove that given the exact same input we'd produce the exact same output every time, without fail. And you can make an LLM deterministic, just set its temperature to zero. We don't do that simply because it makes their output more varied, fun and interesting, but also, it doesn't destroy the coherence of that output altogether.

Basically even thinking that "stochastic" is a kind of insult is missing the point, but that's what people who unironically use the term "stochastic parrot" mostly do. They're trying to say that LLMs are blind random imitators who are thus incapable of true understanding and always will be, but that's not implied by a more rigorous definition of what they do at all. Heck, for what it matters, actual parrots probably understand a bit of what they say. I've seen plenty of videos and testimonies of parrots using certain words in certain non-random contexts.

↑ comment by TAG · 2023-08-16T17:01:30.188Z

It would be absolutely the consequence of a knowledge generating process.

I said "reliable". A stochastic model is only incidentally a truth generator. Do you think it's impossible to improve on LLMs by making the underlying engine more tuned in to truth per se?

You are stochastic too, I am stochastic,

If it's more random than us, it's not more powerful than us.

And you can make an LLM deterministic, just set its temperature to zero.

Which obviously isn't going to give you novel solutions to maths problems. That's trading off one kind of power against another.

Basically even thinking that “stochastic” is a kind of insult is missing the point, but that’s what people who unironically use the term “stochastic parrot” mostly do. They’re trying to say that LLMs are blind random imitators who thus are unable of true understanding and will always be, but that’s not implied by a more rigorous definition of what they do at all.

But the objection can be steelmanned, eg: "If it's more random than us, it's not more powerful than us."

↑ comment by dr_s · 2023-08-17T06:58:42.419Z

Is it more random than us? I think you're being too simplistic. Probabilistic computation can be compounded to reduce the uncertainty by an arbitrary amount, and in some cases, I think, it can be more powerful than purely deterministic computation.
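The compounding claim corresponds to the standard amplification argument: run a procedure that is right with probability p > 1/2 several times independently and take a majority vote. A minimal Python sketch, assuming independent runs (a strong assumption for repeated samples from the same LLM), computes the exact probability that the majority is right:

```python
from math import comb

def majority_correct(p, n):
    """Probability that more than half of n independent runs are correct,
    when each run is correct with probability p (odd n avoids ties)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))

for n in (1, 11, 101):
    print(n, "runs ->", round(majority_correct(0.6, n), 4))
```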

At its core the LLM is deterministic anyway. It produces logits of belief on what should be the next word. We, too, have uncertain beliefs. Then the system is set up in a certain way to turn those beliefs into text. Again, if you want to always choose the most likely answer, just set the temperature to zero!
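The decoding step described here can be sketched in Python. This is a minimal illustration with a hypothetical function name and made-up logits, not any particular library's API: the scores are fixed, and randomness enters only when a token is sampled from them. Temperature zero is commonly implemented as greedy decoding (always taking the highest score), which is what the sketch does instead of dividing by zero.

```python
import math
import random

def sample_next_token(logits, temperature, rng):
    """Pick a token index from raw scores (logits).

    temperature == 0 is treated as greedy decoding: always the highest logit.
    Otherwise logits are divided by the temperature, softmaxed, and sampled.
    """
    if temperature == 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exponentiating, for stability
    weights = [math.exp(x - m) for x in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

logits = [2.0, 1.5, 0.3]  # hypothetical scores for three candidate tokens
rng = random.Random(0)
print([sample_next_token(logits, 0, rng) for _ in range(5)])    # [0, 0, 0, 0, 0]
print([sample_next_token(logits, 1.0, rng) for _ in range(5)])  # varies with the seed
```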

↑ comment by TAG · 2023-08-17T10:16:13.897Z

It has "beliefs" regarding which word should follow another, and any other belief, opinion or knowledge is an incidental outcome of that. Do you think it’s impossible to improve on LLMs by making the underlying engine more tuned in to truth per se?

↑ comment by dr_s · 2023-08-17T10:27:22.966Z

No, I think it's absolutely possible, at least theoretically; not sure what it would take to actually do it, of course. But that's my point: there exists somewhere in the space of possible LLMs an "always gives you the wisest, most truthful response" model that does exactly the same thing, predicting the next token. As long as the prediction is always that of the next token that would appear in the wisest, most truthful response!

↑ comment by TAG · 2023-08-17T11:09:16.350Z

Which is different to predicting a token on the basis of the statistical regularities in the training data. An LLM that works that way is relatively poor at reliably outputting truth, so a version of the SP argument goes through.

↑ comment by dr_s · 2023-08-17T11:11:08.666Z

I think in the limit of infinite, truthful training data, with sufficient abstraction, it would not necessarily be different. We too form our beliefs from "training data" after all; we're just highly multimodal and smart enough to know the distinction between a science textbook and a fantasy novel. An LLM maybe doesn't have that distinction perfectly clear, though it does grasp it to some degree.

↑ comment by TAG · 2023-08-17T12:04:04.217Z

We too form our beliefs from “training data”

There's no evidence that we do so based solely on token prediction, so that's irrelevant.

↑ comment by dr_s · 2023-08-17T13:46:41.731Z

I just don't really understand in what way "token prediction" is anything less than "literally any possible function from a domain of all possible observations to a domain of all possible actions". At least if your "tokens" cover extensively enough all the space of possible things you might want to do or say.

↑ comment by Max TK (the-sauce) · 2023-08-17T13:45:39.623Z

I think a significant part of the problem is not the LLM's trouble distinguishing truth from fiction; it's rather convincing it through your prompt that the output you want is the former and not the latter.

↑ comment by Max TK (the-sauce) · 2023-08-16T15:52:01.763Z

I don't really know what to make of this objection, because I have never seen the stochastic parrot argument applied to a specific, limited architecture as opposed to the general category.

Edit: Maybe make a suggestion of how to rephrase to improve my argument.

↑ comment by TAG · 2023-08-16T16:30:32.442Z

I have never seen the stochastic parrot argument applied to a specific, limited architecture

I've never seen anything else. According to wikipedia, the term was originally applied to LLMs.

The term was first used in the paper "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?" by Bender, Timnit Gebru, Angelina McMillan-Major, and Margaret Mitchell (using the pseudonym "Shmargaret Shmitchell").

↑ comment by Max TK (the-sauce) · 2023-08-16T16:39:07.374Z

LLMs use 1 or more inner layers, so shouldn't the proof apply to them?

↑ comment by TAG · 2023-08-16T16:43:58.482Z

what proof?

comment by [deleted] · 2023-08-16T16:30:23.640Z

This argument is completely correct.

However, I will note a corollary I jump to. It doesn't matter how lame or unintelligent an AI system's internal cognition actually is. What matters is whether it can produce outputs that lead to tasks being performed. And not even all human tasks. AGI is not even necessary for AI to be transformative.

All that matters is that the AI system perform the subset of tasks related to [chip and robotics] manufacture, including all feeder subtasks. (so everything from mining ore to transport to manufacturing)

These tasks have all kinds of favorable properties that make them easier than the full set of "everything a human can do". And a stochastic parrot is obviously quite suitable, we already automate many of these tasks with incredibly stupid robotics.

So yes, a stochastic parrot able to auto-learn new songs is incredibly powerful.

↑ comment by Max TK (the-sauce) · 2023-08-16T16:42:41.441Z

Based on your phrasing I sense you are trying to object to something here, but it doesn't seem to have much to do with my article. Is this correct or am I just misunderstanding your point?

↑ comment by [deleted] · 2023-08-16T16:56:49.550Z

You are misunderstanding. Is English not your primary language? I think it's pretty clear.

I suggest rereading the first main paragraph. The point is there, the other 2 are details.

↑ comment by Max TK (the-sauce) · 2023-08-17T17:14:37.439Z

Usually between people in international forums, there is a gentlemen's agreement to not be condescending over things like language comprehension or spelling errors, and I would like to continue this tradition, even though your own paragraphs would offer wide opportunities for me to do the same.