Sinclair Chen's Shortform

post by Sinclair Chen (sinclair-chen) · 2023-04-15T07:27:32.738Z · LW · GW · 77 comments

Comments sorted by top scores.

comment by Sinclair Chen (sinclair-chen) · 2023-07-22T21:08:28.786Z · LW(p) · GW(p)

whereas Yudkowsky described rationality as a perfect dance, where your steps land exactly right, like a marching band, or like performing a light and airy piano piece perfectly via long hours of arduous concentration to iron out all the mistakes -

and some promote a more frivolous and fun dance, playing with ideas with humor and letting your mind stretch with imagination, letting your butterflies fly -

perhaps there is something to the synthesis, to a frenetic, awkward, and janky dance. knees scraping against the world you weren't ready for. excited, ebullient, manic discovery. the crazy thoughts at 2am. the gold in the garbage. climbing trees. It is not actually more "nice" than a cool logical thought, and it is not actually more easy.

Do not be afraid of crushing your own butterflies; stronger ones will take their place!

comment by Sinclair Chen (sinclair-chen) · 2023-09-15T17:43:52.117Z · LW(p) · GW(p)

Sometimes when one of my LW comments gets a lot of upvotes, I feel an urge that it's too high relative to how much I believe it and I need to "short" it

comment by Sinclair Chen (sinclair-chen) · 2023-08-08T23:09:04.031Z · LW(p) · GW(p)

why should I ever write longform with the aim of getting to the top of LW, as opposed to the top of Hacker News? similar audiences, but HN is bigger.

I don't cite. I don't research.

I have nothing to say about AI.

my friends are on here ... but that's outclassed by Discord and Twitter.

people here speak in my local dialect ... but that trains bad habits.

it helps LW itself ... but if I'm going for impact surely large reach is the way to go?

I guess LW is uniquely about the meta stuff. Thoughts on how to think better. but I'm suspicious of meta.

↑ comment by Raemon · 2023-08-09T00:43:45.254Z · LW(p) · GW(p)

it helps LW itself ... but if I'm going for impact surely large reach is the way to go?

I think the main question is "do you have things to say that build on the state of the art in a domain that LessWrong is on the cutting edge of?". Large reach is good when you have a fairly simple thing you want to communicate to raise society's baseline, but sometimes higher impact comes from pushing the state of the art forward, or communicating something nuanced with a lot of dependencies.

You say you don't research, so maybe not, but just wanted to note you may want to consider variations on that theme.

If you're communicating something to broader society, fwiw I also don't know that there's actually much tradeoff between optimizing for Hacker News vs LessWrong. If you optimize directly for doing well on Hacker News, LW folk will probably still reasonably like it, even if it's not, like, peak karma or whatever (with some caveats around politically loaded stuff which might play differently with different audiences).

↑ comment by Viliam · 2023-08-09T07:38:49.349Z · LW(p) · GW(p)

You could publish on LW and submit the article to HN.

From my perspective (as a reader), the greatest difference between the websites is that cliché stupid comments will be downvoted on LW; on HN there is more of this kind of noise.

I think you are correct that getting to the top of HN would give you way more visibility. Though I have no idea about specific numbers.

(So, post on HN for exposure, and post on LW for rational discussion?)

comment by Sinclair Chen (sinclair-chen) · 2023-05-13T22:17:28.645Z · LW(p) · GW(p)

Ways I've followed math off a cliff

- In my first real job search, I told myself I had about a month to find a job. Then, after a bit over a week, I just decided to go with the best offer I had. I justified this as the solution to the optimal stopping problem: pick the best option after 1/e of the time has passed. The job was fine, but the reasoning was wrong - the secretary problem assumes you have no information other than which candidate is better. Instead, I should've put a price on the features I wanted from a job (mentorship, the ability to wear a lot of hats and learn lots of things) and judged each offer against what I thought the distribution of offers looked like.

- Notably: my next job didn't pay very well and I stayed there too long after I'd given up hope in the product. I think I was taking the path of least resistance for both the first and second jobs.

- I was reading up on crypto a couple of years ago and saw what I thought was an amazing opportunity. It was a rebasing DAO on the Avalanche chain, called Wonderland. I looked into the returns, guessed how long they would keep up, and put that probability and return rate into a Kelly calculator.

- Someone at an LW meetup: "hmm I don't think Kelly is the right model for this..." but I didn't listen.

- I did eventually cut my losses after losing "only" ~$20,000, once some further reasoning convinced me that the whitepaper didn't really make sense.
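For reference, the classic secretary-problem rule can be checked with a quick simulation. This is a minimal sketch, not the reasoning used at the time: it assumes n candidates arrive in random order, that only their relative ranking is observable, and that success means ending up with the single best one. In that textbook formulation the rule is to observe the first n/e candidates without choosing, then accept the first later candidate who beats all of them. `secretary_success_rate` is a hypothetical helper name.

```python
import math
import random


def secretary_success_rate(n: int, trials: int, seed: int = 0) -> float:
    """Estimate how often the 1/e stopping rule picks the single best of n candidates."""
    rng = random.Random(seed)
    cutoff = round(n / math.e)  # length of the "look but don't pick" phase
    wins = 0
    for _ in range(trials):
        ranks = list(range(n))  # only relative ranks are known; n - 1 is the best
        rng.shuffle(ranks)
        benchmark = max(ranks[:cutoff], default=-1)
        # Take the first later candidate who beats everyone seen so far;
        # if nobody does, we end up with the last candidate.
        chosen = next((r for r in ranks[cutoff:] if r > benchmark), ranks[-1])
        wins += chosen == n - 1
    return wins / trials


print(secretary_success_rate(n=100, trials=20_000))
```

With 100 candidates this should print a value near 1/e ≈ 0.37. The rule only ever compares candidates to each other, so it has no way to use what you know about how good an offer is in absolute terms.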
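A basic Kelly calculator for a binary bet stakes the fraction f* = p - (1 - p)/b of your bankroll. The sketch below assumes the bet either pays net odds b (profit per dollar staked) with probability p or loses the entire stake; a multi-period yield with an uncertain end date, like the one described above, does not fit that model cleanly. `kelly_fraction` is a hypothetical name, not any particular calculator's API.

```python
def kelly_fraction(p: float, b: float) -> float:
    """Kelly stake for a bet that returns b per unit staked with probability p, else loses the stake."""
    if not 0.0 <= p <= 1.0 or b <= 0:
        raise ValueError("need 0 <= p <= 1 and b > 0")
    f = p - (1.0 - p) / b
    return max(0.0, f)  # a negative edge means "don't bet"


# The recommended stake moves a lot with p, which in practice is itself a guess.
for p in (0.55, 0.6, 0.7):
    print(p, round(kelly_fraction(p, b=1.0), 3))
```

Since df*/dp = 1 + 1/b, any error in the guessed probability is amplified in the stake, most of all when the odds b are small.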

comment by Sinclair Chen (sinclair-chen) · 2023-05-17T16:19:57.755Z · LW(p) · GW(p)

I’ve been learning more from YouTube than from LessWrong or ACX these days

↑ comment by RHollerith (rhollerith_dot_com) · 2023-07-13T22:26:28.204Z · LW(p) · GW(p)

Same here. More information.

comment by Sinclair Chen (sinclair-chen) · 2023-04-25T17:31:16.344Z · LW(p) · GW(p)

Moderation is hard yo

Y'all had to read through pyramids of doom containing forum drama last week. Or maybe, like me, you found it too exhausting and tried to ignore it.

Yesterday Manifold made more than $30,000 off a single whale betting in a self-referential market that was designed like a dollar auction and also designed to attract a lot of traders. It's the biggest market yet, with 2200 comments, mostly people chanting for their team. Incidentally, parts of the site went down for a bit.

I'm tired.

I'm no longer as worried about the Series A. But also ... this isn't what I wanted to build. The rest of the team kinda feels this way too. So does the whale in question.

Once upon a time, someone at an LW meetup asked me, half-joking, to please never build a social media site.

↑ comment by Sinclair Chen (sinclair-chen) · 2023-04-26T04:58:28.898Z · LW(p) · GW(p)

Update: Monetary policy is hard yo

Isaac King ended up regretting his mana purchase a lot after it started to become clear that he was losing in the whales vs minnows market. We ended up refunding most of his purchase (and deducting his mana accordingly, bringing his Manifold account deeply negative).

Effectively, we're bailing him out and eating the mana inflation :/

Aside: I'm somewhat glad my rant here has not gotten many upvotes/downvotes ... it probably means the meme war and the spammy "minnow" recruitment calls haven't reached here much, fortunately...

comment by Sinclair Chen (sinclair-chen) · 2023-04-15T07:27:33.096Z · LW(p) · GW(p)

LessWrong is too long.

It's unpleasant for busy people and slow readers. Like me.

Please edit before sending. Please put the most important ideas first, so I can skim or tap out when the info gets marginal. You may find you lose little from deleting the last few paragraphs.

↑ comment by Viliam · 2023-04-15T20:58:04.754Z · LW(p) · GW(p)

Some articles have an abstract, but most don't. Perhaps the UI could nudge authors towards writing one.

Now when you click "New Post", there are text fields: title and body. It could be: title, abstract/summary, body. So that if you hate writing abstracts, you can still leave it empty and it works like previously, but it would always remind you that this option exists.

↑ comment by Sinclair Chen (sinclair-chen) · 2023-04-16T02:09:10.947Z · LW(p) · GW(p)

That's a bad design imo. I'd bet it wouldn't get used much.

Generally more options are bad. All UI options incur an attention cost on everyone who sees them, even if the feature is niche. (There's also dev upkeep cost.)

Fun fact: Manifold's longform feature, called Posts, used to have required subtitles. The developer who made it has since left, but I think the subtitles were there to solve a similar thing - there was a page that showed all the posts and a little blurb about each one.

One user wrote "why is this a requirement" as their subtitle. So I took out the subtitle from the creation flow, and instead used the first few lines of the body as the preview. I think not showing a preview at all, and just making it more compact, would also be good and I might do that instead.

An alternate UI suggestion: show from the edit screen what would show up "above the fold" in the hover-over preview. No, I think that's still too much, but it's still better than an optional abstract field.

comment by Sinclair Chen (sinclair-chen) · 2023-04-15T09:09:14.000Z · LW(p) · GW(p)

Mr Beast's philanthropic business model:

- Donate money

- Use the spectacle to record a banger video

- Make more money back on the views via ads and sponsors.

- Go back to step 1

- Exponential profit!
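The loop above only compounds if each dollar given away brings back more than a dollar in ad and sponsor revenue. A minimal sketch, where `return_per_dollar` is a hypothetical parameter rather than a real figure for any channel, and all revenue is assumed to be reinvested in the next video:

```python
def reinvest(budget: float, return_per_dollar: float, cycles: int) -> list[float]:
    """Budget after each donate -> film -> monetize cycle, reinvesting everything."""
    history = [budget]
    for _ in range(cycles):
        budget *= return_per_dollar  # give it all away, earn back return_per_dollar per dollar
        history.append(budget)
    return history


print(reinvest(100.0, 1.5, 3))  # grows: return_per_dollar > 1
print(reinvest(100.0, 0.8, 3))  # shrinks: return_per_dollar < 1
```

After k cycles the budget is budget * r**k, so "exponential profit" needs r > 1; with r < 1 the same loop decays toward zero.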

EA can learn from this.

- Imagine a Warm Fuzzy economy where the fuzzies themselves are sold and the profit funds the good

- The EA castle is good actually

- When picking between two equal goods, pick the one that's more MEMEY

- Content is outreach. Content is income

- YouTube's feedback popup is like RLHF. Mr Beast says because of this you can't game the algo, just have to make good videos that people actually enjoy. YouTube has solved aligning human optimizers, enough that the top YouTuber got there by donating money and helping people.

- Mr Beast plans to give all his money away. He eschews luxury in favor of degenerately investing it all.

- Unlike FTX it does good now. With his own money.

- Mr Beast is scope sensitive

- Mr Beast has an international audience.

- circle-of-concern of his content has grown to reflect that

- The philanthropy is mostly cash transfers. In briefcases.

Isn't this effective altruism? At least with a lowercase "e"

Why isn't EA talking about this?

Should I edit up a real post on this?

↑ comment by Viliam · 2023-04-15T21:03:50.731Z · LW(p) · GW(p)

As far as I know, Mr Beast is the first who tried this model. I wonder what happens when people notice that this model works and he suddenly has a lot of competition. Maybe Moloch will find a way to eat all the money. Like, maybe the most meme-y donations will turn out to be quite useless, but if you keep optimizing for usefulness you will lose the audience.

↑ comment by Sinclair Chen (sinclair-chen) · 2023-04-16T02:51:00.019Z · LW(p) · GW(p)

Other creators are trying videos like "I paid for my friend's art supplies". I think Mr. Beast currently has a moat as the person who's willing to spend the most and therefore get the craziest videos.

I hope do-nice-things becomes a content genre that displaces some of the stunts, pranks, reaction videos, mean takedowns etc. common on popular youtube.

People put up with the ads because they want to watch the video. They want to watch the video because it makes them feel warm and happy. Therefore, it is the warm fuzzies, the feeling of caring, love, and empathy, that pays for the philanthropy in the first place. You help the people in the video, ever so slightly, just by wanting them to be helped, and thus clicking the video to see them be helped. It's altruism and wholesomeness at scale. If this eats 20% of the media economy, I will be glad.

The counterfactual is not that people donate more money or attention to a more effective cause.

The counterfactual is people watching other media, or watching less and doing something else.

Yes, Make-a-Wish tier ineffectiveness "I helped this kid with cancer" might be just as compelling content as "1000 blind people see for the first time." I don't think that by default the altruism genre has a high impact. Mr Beast just happens to be nerdy and entrepreneurial in effective ways, so far.

I do think there's an opportunity to shape this genre, contribute to it, and make it have a high impact.

comment by Sinclair Chen (sinclair-chen) · 2024-12-06T19:23:11.303Z · LW(p) · GW(p)

I kinda feel like I literally have more subjective experience after experiencing ego death/rebirth. I suspect that humans vary quite a lot in how often they are conscious, and to what degree. And if you believe, as I do, that consciousness is ultimately algorithmic in nature (like, in the "surfing uncertainty" predictive processing view, that it is a human-modeling thing which models itself to transmit prediction-actions) it would not be crazy for it to be a kind of mental motion which sometimes we do more or less of, and which some people lack entirely.

I don't draw any moral conclusions about this because I don't ethically value people or things in proportion to how conscious they are. I love the people I love, I'm certainly happy they are alive, and I would be happier for them to be more alive, but this is not why I love them.

↑ comment by Seth Herd · 2024-12-06T20:01:06.767Z · LW(p) · GW(p)

I think you are probably attending more often to sensory experiences, and thereby both creating and remembering more detailed representations of physical reality.

You are probably doing less abstract thought, since the number of seconds in a day hasn't changed.

Which do you want to spend more time on? And which sorts? It's a pretty personal question. I like to try to make my abstract thought productive (relative to my values), freeing up some time to enjoy sensory experiences.

I'm not sure there's a difference in representational density in doing sensory experience vs. abstract thought. Maybe there is. One factor in making it seem like you're having more sensory experience is how much you can remember after a set amount of time; another is whether each moment seems more intense by having strong emotional experience attached to it.

Or maybe you mean something different by more subjective experience.

↑ comment by Sinclair Chen (sinclair-chen) · 2024-12-06T22:37:17.577Z · LW(p) · GW(p)

You are definitely right about the tradeoff between my direct sensory experience and other things my brain could be doing, like calculation or imagination. I hope with practice or clever tool use I will get better at something like doing multiple modes at once, task switching faster between modes, or having a more accurate yet more compressed integrated gestalt self.

↑ comment by Sinclair Chen (sinclair-chen) · 2024-12-06T19:30:27.638Z · LW(p) · GW(p)

tbh, my hidden motivation for writing this is that I find it grating when people say we shouldn't care how we treat AI because it isn't conscious. this logic rests on the assumption that consciousness == moral value.

if tomorrow you found out that your mom has stopped experiencing the internal felt sense of "I", would you stop loving her? would you grieve as if she were dead or comatose?

↑ comment by Seth Herd · 2024-12-06T19:56:30.975Z · LW(p) · GW(p)

It depends entirely on what you mean by consciousness. The term is used for several distinct things. If my mom had lost her sense of individuality but was still having a vivid experience of life, I'd keep valuing her. If she was no longer having a subjective experience (which would pretty much require being unconscious since her brain is producing an experience as part of how it works to do stuff), I would no longer value her but consider her already gone.

↑ comment by Sinclair Chen (sinclair-chen) · 2024-12-06T22:45:36.802Z · LW(p) · GW(p)

interesting. what if she has her memories and some abstract theory of what she is, and that theory is about as accurate as anyone else's theory, but her experiences are not very vivid at all. she's just going through the motions running on autopilot all the time - like when people get in a kind of trance while driving.

comment by Sinclair Chen (sinclair-chen) · 2024-05-26T02:38:00.895Z · LW(p) · GW(p)

New startup idea: cell-based / cultured saffron. Saffron is one of the most expensive substances by mass. Most pricey substances are precious metals that are valuable for signalling value or rare elements used in industry. On the other hand saffron is bought and directly consumed by millions(?) of poor people - they just use small amounts because the spice is very strong. Unlike lab-grown diamonds, lab-grown saffron will not face less demand due to lower signalling value.

The current way saffron is cultivated is they grow these whole fields of flowers, harvest them, then just pick out the stigmata - that tiny strand is the saffron. You can't even use the whole strand if you want good quality saffron - you only keep the red part of the strand.

Vanilla, also an expensive spice, gets the vast majority of its flavor from the chemical vanillin. But saffron gets its flavor from lots of different chemicals. Artificial vanilla flavoring is very prevalent but artificial saffron flavoring is not, because it's harder to get right.

I expect that lab grown saffron would make a lot of money if you can get the cost lower than field-grown saffron. It would not be easy for existing providers to lower their prices because growing saffron is intrinsically expensive and it's already fairly priced. The demand for it is very robust, and its existing consumers are price-sensitive.

comment by Sinclair Chen (sinclair-chen) · 2024-02-21T21:07:17.102Z · LW(p) · GW(p)

woah I didn't even know the LW team was working on a "pre 2024 review" feature using prediction market probabilities integrated into the UI. super cool!

comment by Sinclair Chen (sinclair-chen) · 2023-07-12T10:49:00.223Z · LW(p) · GW(p)

I am a GOOD PERSON

(Not in the EA sense. Probably closer to an egoist or objectivist or something like that. I did try to be vegan once and as a kid I used to dream of saving the world. I do try to orient my mostly fun-seeking life to produce big positive impact as a side effect, but mostly I try big hard things cuz it makes me feel good.)

Anyways this isn't about moral philosophy. It's about claiming that I'm NOT A BAD PERSON, GENERALLY. I definitely ABIDE BY BROADLY AGREED SOCIAL NORMS in the rationalist community. Well, except when I have good reason to think that the norms are wrong, but even then I usually follow society's norms unless I believe those are wrong, in which case I do what I BELIEVE IS RIGHT:

I hold these moral truths to be evident: that all people, though not created equal, deserve a baseline level of respect and agency, and that that bar should be held high, that I should largely listen to what people want, and not impose on them what they do not want, especially when they feel strongly. That I should say true things and not false things, such that truth is created in people's heads, and though allowances are made for humor and irony, that I speak and think in a way reflective of reality and live in a way true to what I believe. That I should maximize my fun, aliveness, pleasure, and all which my body and mind find meaningful, and avoid sorrow and pain except when that makes me stronger. and that I should likewise maximize the joy of those I love, for my friends and community, for their joy is part of my joy and their sorrow is part of my sorrow. and that I will behave with honor towards strangers, in hopes that they will behave with honor towards me, such that the greater society is not diminished but that these webs of trust grow stronger and uplift everyone within.

Though I may falter in being a fun person, or a nice person, I strive strongly to not falter in being a good person.

This post is brought to you by: someone speculating that I claim to be a bad person. You may very well dispute whether I live up to the moral code outlined above, or whether I live up to your moral code or one you think is better. I encourage you to point out my mistakes. However, I will never claim to be a person who no longer abides by the part of the moral law necessary to cooperate with the rationalist community and broader society. I acknowledge that any community I am a part of has the right to remove me if they no longer believe that I will abide by their stated and unstated rules. I do not fear this happening, yet I strive to prevent it from happening, because I follow my own code. I declare this not out of a timid sense of danger, but out of a sense of self-determination, to see if this community will allow me to grow the strengths that I share with it.

comment by Sinclair Chen (sinclair-chen) · 2024-12-24T16:00:29.834Z · LW(p) · GW(p)

what's the deal with bird flu? do you think it's gonna blow up

↑ comment by Viliam · 2025-01-08T07:45:08.647Z · LW(p) · GW(p)

See this: https://www.astralcodexten.com/p/h5n1-much-more-than-you-wanted-to

comment by Sinclair Chen (sinclair-chen) · 2023-09-14T05:57:13.015Z · LW(p) · GW(p)

EA Forum has a better UI than LessWrong. a bunch of little things are just subtly better. maybe I should start contributing commits hmm

↑ comment by Jim Fisher (james-fisher) · 2023-09-18T17:09:26.912Z · LW(p) · GW(p)

Please say more! I'd love to hear examples.

(And I'd love to hear a definition of "better". I saw you read my recent post. My definition of "better" would be something like "more effective at building a Community of Inquiry". Not sure what your definition would be!)

↑ comment by Sinclair Chen (sinclair-chen) · 2023-09-20T00:10:05.417Z · LW(p) · GW(p)

As in visually looks better.

- LW font still has bad kerning on apple devices that makes it harder to read I think, and makes me tempted to add extra spaces at the end of sentences. (See this github issue)

- Agree / Disagree are reactions rather than upvotes. I do think the reversed order on EAF is weird though

- EAF's Icon set is more modern

comment by Sinclair Chen (sinclair-chen) · 2023-09-05T03:44:54.000Z · LW(p) · GW(p)

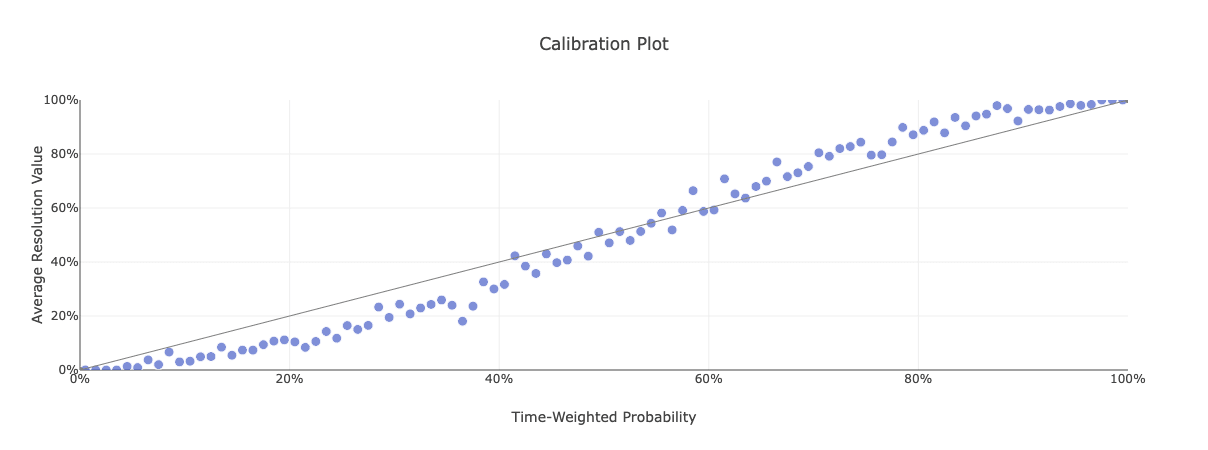

The s-curve in prediction market calibrations

https://calibration.city/manifold displays an s-curve for the market calibration chart. this is for non-silly markets with >5 traders.

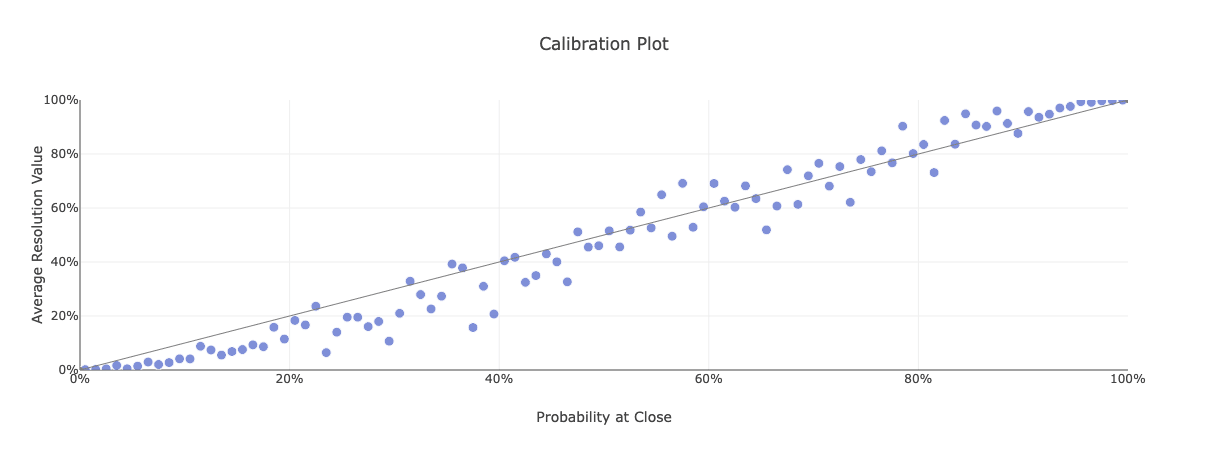

This is what it looks like at close:

this means the true probability is farther from 50% than the price makes it seem.

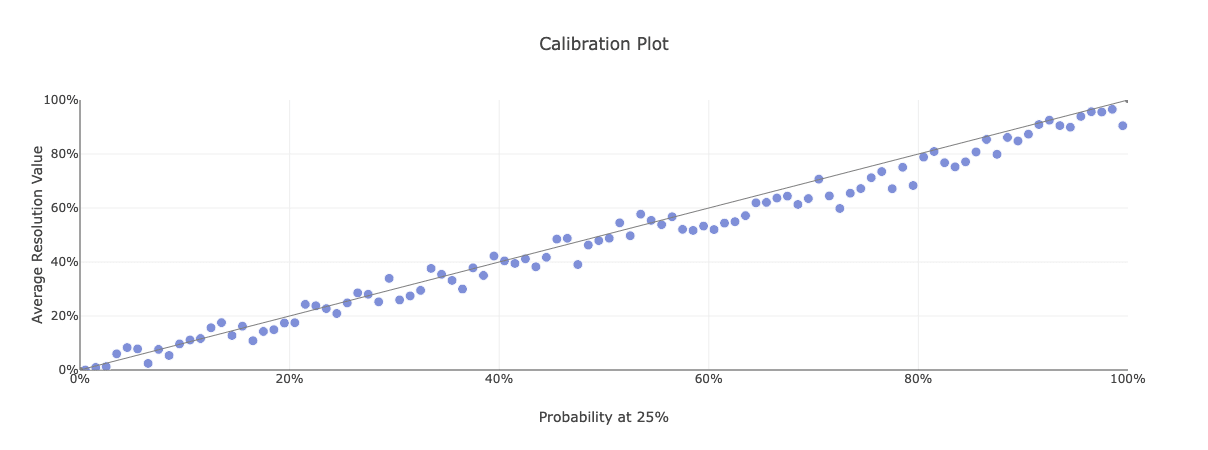

The calibration is better at 1/4 of the way to close:

you might think it's because markets closing near 20% are weird in some way (unclear resolution) but markets 3/4 of the way to close also show the s-curve. Go see for yourself.

The CSPI tournament also exhibited this s-curve. That article points out that PredictIt does too!

I left a comment there on why I think this might be the case: the AMM is inefficient, traders have limited balances, and people chase higher investment returns elsewhere.

I'm curious what people think. And also curious if polymarket or the indian prediction markets also have a similar curve.

---

should I make a real post for this? to-do's are: look into polymarket data (surely it exists? it's blockchain) and fit a curvy formula to this.
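A calibration chart like these can be sketched as: bucket markets by price, then compare each bucket's average price with the share that resolved YES. One simple way to "fit a curvy formula" (an assumption here, not what calibration.city does) is a one-parameter model where logit(true probability) = a * logit(price): a = 1 is perfect calibration, and a > 1 gives the S-curve described above, with true probabilities farther from 50% than the prices. All function names are hypothetical, and the real input would have to come from Manifold or Polymarket data.

```python
import math


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def calibration_table(prices, outcomes, n_bins=10):
    """Return (mean price, YES rate, count) for each non-empty price bucket."""
    buckets = [[] for _ in range(n_bins)]
    for price, resolved_yes in zip(prices, outcomes):
        i = min(int(price * n_bins), n_bins - 1)  # price 1.0 goes in the top bucket
        buckets[i].append((price, resolved_yes))
    table = []
    for bucket in buckets:
        if bucket:
            mean_price = sum(p for p, _ in bucket) / len(bucket)
            yes_rate = sum(y for _, y in bucket) / len(bucket)
            table.append((mean_price, yes_rate, len(bucket)))
    return table


def s_curve(price: float, a: float) -> float:
    """Model: logit(true probability) = a * logit(price); a > 1 bends into an S."""
    return 1.0 / (1.0 + math.exp(-a * logit(price)))


def fit_slope(table) -> float:
    """Count-weighted least-squares a for logit(yes_rate) ~ a * logit(mean_price)."""
    num = den = 0.0
    for mean_price, yes_rate, count in table:
        if 0.0 < mean_price < 1.0 and 0.0 < yes_rate < 1.0:  # logit undefined at 0 and 1
            x, y = logit(mean_price), logit(yes_rate)
            num += count * x * y
            den += count * x * x
    if den == 0.0:
        raise ValueError("no usable buckets away from 50%, 0, and 1")
    return num / den
```

A fitted a noticeably above 1 would put a number on the S-curve. Buckets whose YES rate is exactly 0 or 1 are skipped, so in practice this wants wide buckets or some smoothing.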

comment by Sinclair Chen (sinclair-chen) · 2023-04-19T16:17:35.488Z · LW(p) · GW(p)

If I have a few distinct points in reply to a comment or post, should I put my points in separate comments or a single one?

↑ comment by RomanHauksson (r) · 2023-04-22T08:08:37.670Z · LW(p) · GW(p)

I'm biased towards generously leaving multiple comments in order to let people upvote the most important insights to the top.

↑ comment by Dagon · 2023-04-20T03:44:25.191Z · LW(p) · GW(p)

Either choice can work well, depending on how separable the points are, and how "big" they are (including how much text you will devote to them, but also how controversial or interesting you predict they'll be in spawning further replies and discussion). You can also mix the styles - one comment for multiple simple points, and one with depth on just one major expository direction.

I'd bias toward "one reply" most of the time, and perhaps just skipping some less-important points in order to give sufficient weight to the steelman of the thing you're responding to.

↑ comment by jam_brand · 2023-04-21T03:33:16.143Z · LW(p) · GW(p)

Another hybrid approach if you have multiple substantive comments is to silo each of them in their own reply to a parent comment you've made to serve as an anchor point to / index for your various thoughts. This also has the nice side effect of still allowing separate voting on each of your points as well as (I think) enabling them, when quoting the OP, to potentially each be shown as an optimally-positioned side-comment.

comment by Sinclair Chen (sinclair-chen) · 2024-08-15T18:12:02.337Z · LW(p) · GW(p)

is anyone in this community working on nanotech? with renewed interest in chip fabrication in geopolitics and investment in "deep tech" in the vc world i think now is a good time to revisit the possibility of creating micro and nano scale tools that are capable of manufacturing.

like ASML's most recent machine is very big, so will the next one have to be even bigger? how would they transport it if it doesn't fit on roads? seems like the approach of just stacking more mirrors and more parts will hit limits eventually. "Moore's Second Law" says the cost of semiconductor production increases exponentially. perhaps making machines radically smaller, manufacturing nano things using many micro scale machines working in parallel, could be a way to rein in costs and shorten iteration cycles.
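Moore's second law is also known as Rock's law, usually stated as the cost of a new chip fab doubling roughly every four years. Taking that four-year doubling time as an assumption (a rule of thumb, not a measured constant), the multiplier compounds quickly; `fab_cost_multiple` is a hypothetical helper.

```python
def fab_cost_multiple(years: float, doubling_years: float = 4.0) -> float:
    """Cost of a new fab relative to today, assuming a fixed doubling time."""
    return 2.0 ** (years / doubling_years)


for years in (4, 10, 20):
    print(years, round(fab_cost_multiple(years), 1))
```

Under this assumption a fab built two decades from now costs about 32 times as much as one built today.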

there are two papers I found about the concept of a "fab on a chip" - they seem promising, but mostly exploratory. they did succeed in using microelectromechanical systems (MEMS) and tweezers to create a tiny vapor deposition tool, I believe.

obviously the holy grail would be a tiny fab that could create another version of itself as well as other useful things (chips, solar panels). then you can do the whole industrial revolution recursion thing where you forge better tongs to forge better tongs. I think this vision is lost on people who do nanoscale R&D now - academics and people working long hours in cleanrooms running expensive tests on big expensive machines.

anyways, I've only been looking into this for a short while

comment by Sinclair Chen (sinclair-chen) · 2024-02-22T14:21:23.981Z · LW(p) · GW(p)

I see smart ppl often try to abstract, generalize, mathify, in arguments that are actually emotion/vibes issues. I do this too.

The neurotypical finds this MEAN. but the real problem is that the math is wrong

↑ comment by Dagon · 2024-02-22T16:17:03.638Z · LW(p) · GW(p)

From what I've seen, the math is fine, but the axioms chosen and mappings from the vibes to the math are wrong. The results ARE mean, because they try to justify that someone's core intuitions are wrong.

Amusingly, many people's core intuitions ARE wrong (especially about economics and things that CAN be analyzed with statistics and math), and they find it very uncomfortable when it's pointed out.

↑ comment by Vladimir_Nesov · 2024-02-22T14:38:08.176Z · LW(p) · GW(p)

For it to make sense to say that the math is wrong, there needs to be some sort of ground truth, making it possible for math to also be right, in principle. Even doing the math poorly is an exercise that contributes to eventually making the math less wrong.

comment by Sinclair Chen (sinclair-chen) · 2023-07-09T08:57:51.120Z · LW(p) · GW(p)

LW posts about software tools are all yay open source, open data, decentralization, and user sovereignty! I kinda agree ... except for the last part. Settings are bad. If users can finely configure everything then they will be confused and lost.

The good kind of user sovereignty comes from simple tools that can be used to solve many problems.

↑ comment by Dagon · 2023-07-09T15:32:25.546Z · LW(p) · GW(p)

This comes from over-aggregation of the idea of "users". There is a very wide range of capabilities, interest, and availability of time for different people to configure things. For almost all systems, the median and modal user is purely using defaults.

People on LW who care enough to post about software are likely significant outliers in their emphasis on such things. I know I am - I strongly prefer transparency and openness in my tooling, and this is a very different preference even from many of my smart coworkers.

↑ comment by Sinclair Chen (sinclair-chen) · 2023-07-09T21:11:37.036Z · LW(p) · GW(p)

I think the vast majority of people who use software tools are busy. Technically-inclined intelligent people value their time more highly than average.

Most of the user interactions you do in your daily life are seamless, like your doorknob. You pay attention to the few things that you configure because they are fun or annoying or because you get outsized returns from configuring it - aka the person who made it did a bad job serving your needs.

Configuring something is generally something you do because it is fun, not because it is efficient. If it takes a software engineer a few hours to assemble a computer, then the opportunity cost makes it more expensive than buying the latest macbook. I say this as someone who has spent a few hundred dollars and a few hours building four custom ergonomic split keyboards. It is better than my laptop keyboard, but not like 10x better

I guess there is a bias/feature where if you build something, you value it more. But Ikea still sends you one set of instructions. Good user sovereignty is when hobbyists hack together different Ikea sets or mod them with stuff from the hardware store. It would be bad for a furniture company to expect every user to do that, unless they specifically target that niche and deliver so much more value to them to make up for the smaller market.

I think a lot of people are hobbyists on something, but no rational person is a hobbyist on everything. Your users will be too busy hacking on their food (i.e. cooking) or customizing the colors and shape of their garments and won't bother to hack on your product.

↑ comment by Dagon · 2023-07-10T00:27:41.468Z · LW(p) · GW(p)

I don't know what the vast majority thinks, but I suspect people value their time differently, not necessarily more or less. Technically-minded people see the future time efficiency of current time spent tweaking/configuring AND enjoy/value that time more, because they're solving puzzles at the same time.

After the first few computer builds, the fun and novelty factor declines greatly, but the optimization pressure (performance and price/performance) remains pretty strong for some. I do know some woodworkers who've made furniture, and it's purely for the act of creation, not any efficiency.

Not sure what my point is here, except that most of these decisions are more individually variant than the post implied.

comment by Sinclair Chen (sinclair-chen) · 2023-04-26T05:12:27.282Z · LW(p) · GW(p)

despite the challenge, I still think being a founder or early employee is incredibly awesome

coding, product, design, marketing, really all kinds of building for a user - is the ultimate test.

it's empirical, challenging, uncertain, tactical, and very real.

if you succeed, you make something self-sustaining that continues to do good.

if you fail, it will do bad. and/or die.

and no one will save you.

↑ comment by the gears to ascension (lahwran) · 2023-04-26T05:31:58.261Z · LW(p) · GW(p)

strongly agreed except that...

continues to survive and continues to do good are not guaranteed to be the same and often are directly opposed. it is much easier to build a product that keeps getting used than one that should.

it's possible that yours is good for the world, but I suspect mine isn't. I regret helping make it.

↑ comment by Sinclair Chen (sinclair-chen) · 2023-05-01T07:21:25.531Z · LW(p) · GW(p)

it's easy to have a mission and almost every startup has one. only 10% of startups survive. but yes, surviving and continuing to do good is strictly harder.

what was your company?

↑ comment by the gears to ascension (lahwran) · 2023-05-01T09:46:00.245Z · LW(p) · GW(p)

I'm the other founder of vast.ai besides Jacob Cannell. I left in early 2019 partially induced by demotivation about the mission, partially induced by wanting a more stable job, partially induced by creative differences, and a mix of other smaller factors.

comment by Sinclair Chen (sinclair-chen) · 2024-11-26T05:52:09.595Z · LW(p) · GW(p)

why do people equate consciousness & sentience with moral patienthood? your close circle is not more conscious or more sentient than people far away, but you care about your close circle more anyways. unless you are SBF or Gandhi

↑ comment by cubefox · 2024-11-26T11:30:28.570Z · LW(p) · GW(p)

Ethics is a global concept, not many local ones. That I care more about myself than about people far away from me doesn't mean that this makes an ethical difference.

↑ comment by Sinclair Chen (sinclair-chen) · 2024-11-29T07:06:53.630Z · LW(p) · GW(p)

I didn't use the word "ethics" in my comment, so are you making a definitional statement, to distinguish between [universal value system] and [subjective value system] or just authoritatively saying that I'm wrong?

Are you claiming moral realism? I don't really believe that. If "ethics" is global, why should I care about "ethics"? Sorry if that sounds callous, I do actually care about the world, just trying to pin down what you mean.

↑ comment by cubefox · 2024-11-29T17:08:16.700Z · LW(p) · GW(p)

Yeah definitional. I think "I should do x" means about the same as "It's ethical to do x". In the latter the indexical "I" has disappeared, indicating that it's a global statement, not a local one, objective rather than subjective. But "I care about doing x" is local/subjective because it doesn't contain words like "should", "ethical", or "moral patienthood".

↑ comment by MondSemmel · 2024-11-26T11:07:25.718Z · LW(p) · GW(p)

Re: moral patienthood, I understand the Sam Harris position (paraphrased by him here as "Morality and values depend on the existence of conscious minds—and specifically on the fact that such minds can experience various forms of well-being and suffering in this universe.") as saying that anything else that supposedly matters, only matters because conscious minds care about it. Like, a painting has no more intrinsic value in the universe than any other random arrangement of atoms like a rock; its value stems purely from conscious minds caring about it. Same with concepts like beauty and virtue and biodiversity and anything else that's not directly about conscious minds.

And re: caring more about one's close circle: well, everyone in your close circle has their own close circle they care about, and if you repeat that exercise often enough, the vast majority of people in the world are in someone's close circle.

↑ comment by Sinclair Chen (sinclair-chen) · 2024-11-29T07:27:21.230Z · LW(p) · GW(p)

I definitely think that if I were not conscious then I would not coherently want things. But that conscious minds are the only things that can truly care does not mean that conscious minds are the only things we should terminally care about.

The close circle composition isn't enough to justify Singerian altruism from egoist assumptions, because of the value falloff. With each degree of connection, I love the stranger less.

↑ comment by MondSemmel · 2024-11-29T11:10:05.142Z · LW(p) · GW(p)

Well, if there were no minds to care about things, what would it even mean that something should be terminally cared about?

Re: value falloff: sure, but if you start with your close circle, and then aggregate the preferences of that close circle (who has close circles of their own), and rinse and repeat, then this falloff for any individual becomes comparatively much less significant for society as a whole.

↑ comment by Sinclair Chen (sinclair-chen) · 2024-11-29T19:56:28.327Z · LW(p) · GW(p)

Uh, there are minds. I think you and I both agree on this. Not really sure what the "what if no one existed" thought experiment is supposed to gesture at. I am very happy that I exist and that I experience things. I agree that if I didn't exist then I wouldn't care about things.

I think your method double-counts the utility. In the absurd case, if I care about you and you care about me, and I care about you caring about me caring about you... then two people who like each other enough have infinite value, unless the repeating sum converges. And how likely is it that the sum converges to exactly the point where a selfish person should love all humans equally? Also, even if it were balanced, if two well-connected socialites in Latin America broke up, that would significantly change the moral calculus for millions of people!
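To make the convergence point concrete, here is a minimal sketch under an assumption the comment doesn't state: each extra level of "caring about you caring about me" is weighted by the same constant factor c. The total is then a geometric series, 1 + c + c^2 + ..., which stays finite (at 1/(1 - c)) only when c < 1. The function names and the constant-c model are hypothetical, for illustration only.

```python
def mutual_care_value(c: float, depth: int) -> float:
    """Sum 1 + c + c**2 + ... + c**(depth - 1).

    Hypothetical model: each additional level of "I care about you
    caring about me ..." is weighted by the same factor c.
    """
    return sum(c ** k for k in range(depth))


def closed_form(c: float) -> float:
    """Limit of the sum as depth -> infinity; finite only when |c| < 1."""
    if abs(c) >= 1:
        return float("inf")  # the regress never settles: "infinite value"
    return 1 / (1 - c)


# Compare a long truncated sum with its limit for a few weights.
for c in (0.5, 0.9, 1.0):
    print(c, mutual_care_value(c, 50), closed_form(c))
```

Under this toy model the answer hinges entirely on c: any weight below 1 gives a finite total, but nothing in the model forces that total to land on "love all humans equally".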

Being real for a moment, I think my friends (degree 1) are happier if I am friends with their friends (degree 2), want us to be at least on good terms, and would be sad if I fought with them. But my friends don't care that much how I feel about the friends of their friends (degree 3).

↑ comment by MondSemmel · 2024-11-29T20:22:26.584Z · LW(p) · GW(p)

Apologies if I gave the impression that "a selfish person should love all humans equally"; while I'm sympathetic to arguments from e.g. Parfit's book Reasons and Persons[1], I don't go anywhere near that far. I was making a weaker and (I think) uncontroversial claim, something closer to Adam Smith's invisible hand: that aggregating over every individual's selfish focus on close family ties results, overall, in moral concerns becoming relatively more spread out, because the close circles of your close circle aren't exactly identical to your own.

[1] Such as the argument that distances in time and space are similar. So if you imagine people in the distant past having the choice of a better life in their own time, in exchange for there being no people in the far future, then you wish they'd care about more than just their own present. A similar logic argues against applying a very high discount rate to your moral concern for beings that are very distant from you in, e.g., space, close ties, etc.

↑ comment by localdeity · 2024-11-26T10:06:43.638Z · LW(p) · GW(p)

One argument I've encountered is that sentient creatures are precisely those creatures that we can form cooperative agreements with. (Counter-argument: one might think that e.g. the relationship with a pet is also a cooperative one [perhaps more obviously if you train them to do something important, and you feed them], while also thinking that pets aren't sentient.)

Another is that some people's approach to the Prisoner's Dilemma is to decide "Anyone who's sufficiently similar to me can be expected to make the same choice as me, and it's best for all of us if we cooperate, so I'll cooperate when encountering them"; and some of them may figure that sentience alone is sufficient similarity.

↑ comment by Sinclair Chen (sinclair-chen) · 2024-11-29T07:36:57.614Z · LW(p) · GW(p)

we completely dominate dogs. society treats them well because enough humans love dogs.

I do think that cooperation between people is the origin of religion and of its moral rulesets, which create tiny little societies that can hunt stags.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-11-26T17:08:03.174Z · LW(p) · GW(p)

We need better, more specific terms to break up the horrible mishmash of correlated-but-not-truly-identical ideas bound up in words like consciousness and sentience.

At the very least, let's distinguish sentient vs sapient. All animals are sentient; only smart ones are sapient (maybe only humans, depending on how strict your definition is).

https://english.stackexchange.com/questions/594810/is-there-a-word-meaning-both-sentient-and-sapient

Some other terms needing disambiguation... Current LLMs are very knowledgeable, somewhat but not very intelligent, somewhat creative, and lack coherence. They have some self-awareness, but it seems to lack some aspects that animals have around "feeling self state"; some researchers are working on adding these aspects to experimental architectures.

What a mess our words, based on observing ourselves, make of trying to divide reality at the joints when we analyze non-human entities like animals and AI!

↑ comment by Eli Tyre (elityre) · 2024-11-26T19:32:03.447Z · LW(p) · GW(p)

Well presumably because they're not equating "moral patienthood" with "object of my personal caring".

Something can be a moral patient, who you care about to the extent you're compelled by moral claims, or whose rights you are deontologically prohibited from trampling on, without your caring about that being in particular.

You might make the claim that calling something a moral patient is the same as saying that you care (at least a little bit) about its wellbeing, but not everyone buys that claim.

↑ comment by Eli Tyre (elityre) · 2024-11-26T19:33:34.322Z · LW(p) · GW(p)

your close circle is not more conscious or more sentient than people far away, but you care about your close circle more anyways

Or, more specifically, this is a non sequitur with respect to my deontology, which holds regardless of whether I personally like or privately wish for the wellbeing of any particular entity.

comment by Sinclair Chen (sinclair-chen) · 2024-04-08T20:16:10.763Z · LW(p) · GW(p)

lactose intolerance is treatable with probiotics, and has been since 1950. they cost $40 on Amazon.

works for me at least.

comment by Sinclair Chen (sinclair-chen) · 2023-07-16T02:32:35.593Z · LW(p) · GW(p)

The latest ACX book review of The Educated Mind is really good! (as a new lens on rationality. I'm more agnostic about the childhood educational results, though at least it sounds fun.)

- Somatic understanding is Logan's Naturalism. It's your base layer that all kids start with, and you don't ignore it as you level up.

- incorporating heroes into science education is similar to an idea from Jacob Crawford that kids should be taught a history of science & industry: what does it feel like to be the Wright brothers, tinkering on your device with no funding, defying all the academics who are saying that heavier-than-air flight is impossible? How did they decide on materials and designs? If you are a kid who just wants to build model rockets, you'd prefer to skip straight to the code of the universe, but I think most kids would be engaged by this. A few kids will want to know the aerodynamics equations, but a lot more kids would want to know the human story.

- the postrats are Ironic, synthesizing the rationalist Philosophy with stories, jokes, ideals, gossip, "vibes".

fun, joy, imagination are important for lifelong learning and competence!

anyways go read the actual review

comment by Sinclair Chen (sinclair-chen) · 2023-07-14T10:14:26.557Z · LW(p) · GW(p)

LWers worry too much, in general. Not talking about AI.

I mean ppl be like Yud's hat is bad for the movement. Don't name the house after a fictional bad place. Don't do that science cuz it makes the republicans stronger. Oh no nukes it's time to move to New Zealand.

Remember when Thiel was like "rationalists went from futurists to luddites in 20 years"? Well, he was right.

↑ comment by Sinclair Chen (sinclair-chen) · 2023-07-14T10:15:23.849Z · LW(p) · GW(p)

it is time to build.

A C C E L E R A T E*

*not the bad stuff

comment by Sinclair Chen (sinclair-chen) · 2023-04-25T17:38:43.385Z · LW(p) · GW(p)

Moderating lightly is harder than moderating harshly.

Walled gardens are easier than creating a community garden of many mini walled gardens.

Platforms are harder than blogs.

Free speech is more expensive than unfree speech.

Creating a space for talk is harder than talking.

The law in the code and the design is more robust than the law in the user's head

yet the former is much harder to build.

comment by Sinclair Chen (sinclair-chen) · 2024-01-15T16:39:40.565Z · LW(p) · GW(p)

People should be more curious about what the heck is going on with trans people on a physical, biological level. I think this could be a good citizen science research project for y'all, since gender dysphoria afflicts a lot of us in this community, and knowledge leads to better detection and treatment. Many trans women especially do a ton of research/experimentation themselves. Or so I hear. I actually haven't received any mad-science Google docs of research from any trans person yet. What's up with that? Who's working on this?

Where I'd start maybe:

- https://transfemscience.org/

- Dr Powers's powerpoint deck

- r/diyhrt

My open questions:

- trans is comorbid with adhd, autism, and connective tissue disorders.

- what's the playbook for connective tissue disorders? Should more people try supplementing collagen? it's cheap!

- trans is slightly genetic. which genes?

- do any non-human animals have similar conditions?

- is the late-transitioning + female-attraction / early-transitioning + male-attraction typology for trans-women real? like are these traits actually bimodal or is it more linear and correlated?

- best ways to maintain sexual desire and performance on feminizing hrt.

but probably the best research directions are ones I haven't thought of. there's so much we don't know (or maybe just so much I don't know). just gotta keep pulling threads until we get answers!

comment by Sinclair Chen (sinclair-chen) · 2023-07-28T07:40:42.786Z · LW(p) · GW(p)

anyone else super hyped about superconductors?

gdi i gotta focus on real work. in the morning

↑ comment by mako yass (MakoYass) · 2023-07-28T22:41:03.041Z · LW(p) · GW(p)

No because prediction markets are low

↑ comment by Sinclair Chen (sinclair-chen) · 2023-08-05T18:18:52.484Z · LW(p) · GW(p)

you and i have very different conceptions of low

↑ comment by mako yass (MakoYass) · 2023-08-06T01:15:34.232Z · LW(p) · GW(p)

Update: I'm not excited because deploying this thing in most applications seems difficult:

- it's a ceramic, not malleable, so it's not going in powerlines (apparently this was surmounted before, though those cables ended up being difficult and more expensive than the fucken titanium-niobium alternatives).

- because printing it onto a die sounds implausible and because it wouldn't improve heat efficiency as much as people would expect (because most heat comes from state changes afaik? Regardless, this).

- because 1D

- because I'm not even sure about energy storage applications, how much energy can you contain before the magnetic field is strong enough to break the coil or just become very inconvenient to shield from neighboring cars? Is it really a lot?

↑ comment by Sinclair Chen (sinclair-chen) · 2023-08-07T04:17:07.446Z · LW(p) · GW(p)

if it is superconducting, I'm excited not just about this material but about the (lost Soviet?) theories outlined in the original paper: in general, new physics leads to new technology, and in particular it would imply that other room-temperature, standard-pressure superconductors are possible.

idk, it could be the next carbon nanotubes (as in not actually useful for much at our current tech level), or it could be the next steel (not literally; I mean in terms of increasing the rate of GDP growth). like if it allows for more precise magnetic sensors that lead to further materials science innovation, or just like gets us to fusion or something.

I'm not a physicist, just a gambler, but I have a hunch that if the lk-99 stuff pans out that we're getting a lot of positive EV dice rolls in the near future.

comment by Sinclair Chen (sinclair-chen) · 2023-07-28T07:35:39.486Z · LW(p) · GW(p)

There's something meditative about reductionism.

Unlike mindfulness, you go beyond sensation to the next baser, realer level of the physics.

You don't, actually. It's all in your head. It's less in your eyes and fingertips. In some ways, it's easier to be wrong.

Nonetheless, it cuts through a lot of noise: concepts, ideologies, social influences.

comment by Sinclair Chen (sinclair-chen) · 2023-04-16T03:00:25.779Z · LW(p) · GW(p)

In 2022 we tried to buy policy. We failed.

This time let's get the buy-in of the polis instead.

comment by Sinclair Chen (sinclair-chen) · 2024-03-27T08:24:31.127Z · LW(p) · GW(p)

let people into your heart, let words hurt you. own the hurt, cry if you must. own the unsavory thoughts. own the ugly feelings. fix the actually bad. uplift the actually good.

emerge a bit stronger, able to handle one thing more.

comment by Sinclair Chen (sinclair-chen) · 2023-04-29T16:45:55.782Z · LW(p) · GW(p)

my approach to AI safety is world domination