In Defense of Epistemic Empathy

post by Kevin Dorst · 2023-12-27T16:27:06.320Z · LW · GW · 19 comments

This is a link post for https://kevindorst.substack.com/p/in-defense-of-epistemic-empathy

Contents

Absurdity. Ease. Baselessness. Conformity. Psychology.

TLDR: Why think your ideological opponents are unreasonable? Common reasons: their views are (1) absurd, or (2) refutable, or (3) baseless, or (4) conformist, or (5) irrational. None are convincing.

Elizabeth is skeptical about the results of the 2020 election. Theo thinks Republicans are planning to institute a theocracy. Alan is convinced that AI will soon take over the world.

You probably think some (or all) of them are unhinged.

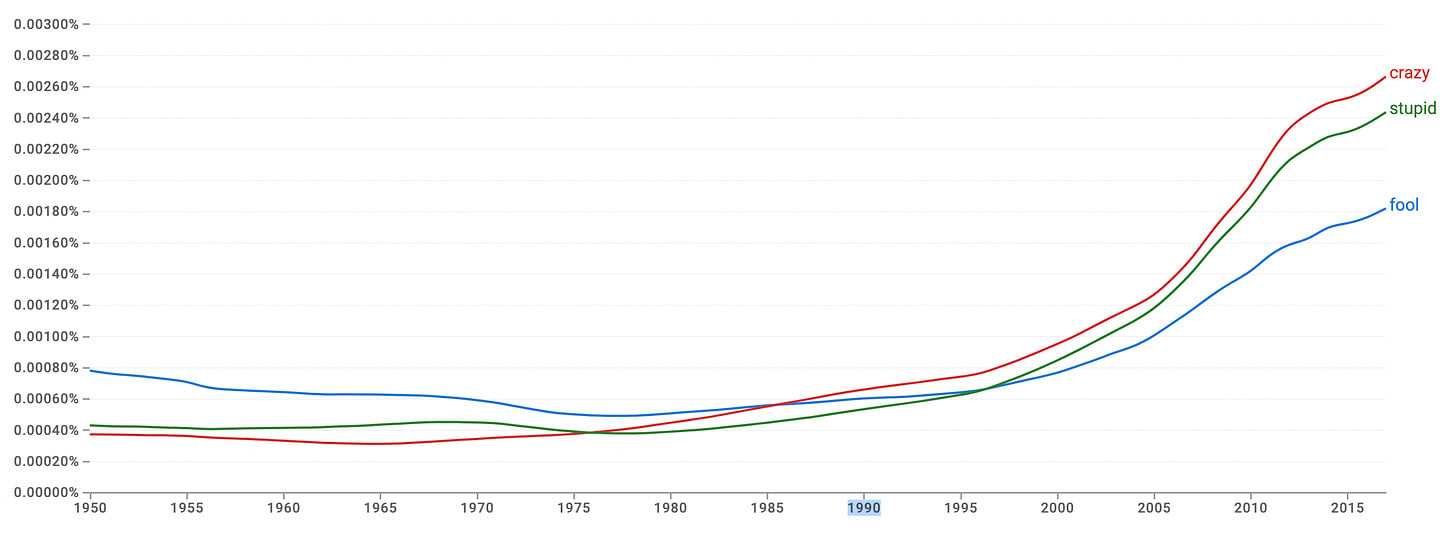

As I’ve argued before, we seem to be losing our epistemic empathy: our ability to both (1) be convinced that someone’s opinions are wrong, and yet (2) acknowledge that they might hold those opinions for reasonable reasons. For example, since the 90s our descriptions of others as ‘crazy’, ‘stupid’ or ‘fools’ have skyrocketed.

I think this is a mistake. Lots of my work aims to help us recover our epistemic empathy—to argue that reasonable processes can drive such disagreements, and that we have little evidence that irrationality (the philosophers’ term for being “crazy”, “stupid”, or a “fool”) explains it.

The most common reaction: “Clever argument. But surely you don’t believe it!”

I do.

Obviously people sometimes act and think irrationally. Obviously that sometimes helps explain how they end up with mistaken opinions. The question is whether we have good reason to think that this is generically the explanation for why people have such different opinions than we do.

Today, I want to take a critical look at some of the arguments people give for suspending their epistemic empathy: (1) that their views are absurd; (2) that the questions have easy answers; (3) that they don’t have good reasons for their beliefs; (4) that they’re just conforming to their group; and (5) that they’re irrational.

None are convincing.

Absurdity.

“Sure, reasonable people can disagree on some topics. But the opinions of Elizabeth, Theo, and Alan are so absurd that only irrationality could explain it.”

This argument over-states the power of rationality.

Spend a few years in academia, and you’ll see why. Especially in philosophy, it’ll become extremely salient that reasonable people often wind up with absurd views.

David Lewis thought that there were talking donkeys. (Since the best metaphysical system is one in which every possible world we can imagine is the way some spatio-temporally isolated world actually is.)

Timothy Williamson thinks that it’s impossible for me to not have existed—even if I’d never been born, I would’ve been something or other. (Since the best logical system is one on which necessarily everything necessarily exists.)

Peter Singer thinks that the fact that you failed to give $4,000 to the Against Malaria Fund this morning is the moral equivalent of ignoring a drowning toddler as you walked into work. (Since there turns out to be no morally significant difference between the cases.)

And plenty of reasonable people (including sophisticated philosophers) think both of the following:

1. It’s monstrous to run over a bunny instead of slamming on your brakes, even if doing so would hold up traffic significantly; yet

2. It’s totally fine to eat the carcass of an animal that was tortured for its entire life (in a factory farm), instead of eating a slightly-less-exciting meal of beans and rice.

David Lewis, Tim Williamson, Peter Singer, and many who believe both (1) and (2) are brilliant, careful thinkers. Rationality is no guard against absurdity.

Ease.

“Unlike philosophical disputes, political issues just aren’t that difficult.”

This argument belies common sense.

There are plenty of easy questions that we are not polarized over. Is brushing your teeth a good idea? Are Snickers bars healthy? What color is grass? Etc.

Meanwhile, the sorts of issues that people polarize over almost always involve complex, chaotic systems that only large networks of people (with strong norms of trust, honesty, and reliability) can hope to figure out. Hot-button political issues—vaccine safety, election integrity, climate change, police violence, gender dynamics, etc.—are all topics which require amalgamating massive amounts of disparate evidence from innumerable sources.

If you doubt this, switch from qualitative to quantitative questions. Instead of “Is the climate changing?” ask “How many degrees will atmospheric temperatures increase by 2100?” Instead of “Is police violence a problem?” ask “How much does police violence (vs. economic inequality, generational poverty, institutional racism, etc.) harm racial minorities?” Even with complete institutional trust, we don’t know!

Back to the qualitative questions. They may be “easy” to answer for anyone who shares your life experiences, social circles, and patterns of trust. But most people who disagree with you don’t share them.

Nor is it easy to figure out whom to trust. It may seem obvious to you that the trustworthy sources are X, Y, and Z. But networks of trust are built up over an entire lifetime, amalgamating countless experiences of who-said-what-when—you can’t figure it out from the armchair or a quick Google search.

To see this, imagine what would happen if tomorrow all your friends and co-workers started voicing extreme skepticism about your favorite news networks and scientific organizations. I doubt it’d take long before you became unsure whether to trust them.

But that is the position of your political opponents! Most of their friends and co-workers do think that your favored (X, Y, and Z) sources are unreliable. And, more generally, they’ve had a different lifetime of amalgamating different experiences leading to different networks of trust. No wonder they think differently—you would too, in their shoes.

Baselessness.

“I’ve talked to people who believe these things, and they don’t have any good reasons.”

This argument underestimates the communicative divide between people with radically different viewpoints.

I recently had the following experience. One day I gave a lecture to a room full of philosophers who said (I hope, honestly!) that I gave an articulate defense of the rationality of a form of confirmation bias. The next day, I was having coffee with a friend who’s a biologist, and I tried to explain the idea.

It didn’t go well. I gave examples that were confusing. I referred to concepts they didn’t know. I started to explain them, only to realize the concept was unnecessary. I backtracked and vacillated. I was an inarticulate mess.

Most academics have had similar experiences. Why?

Conversations always take place within a common ground of mutual presuppositions. This is extremely useful, because it allows us to move much quicker over familiar territory to get to new ideas—at least when our audience shares our presuppositions.

But it causes a problem when we get used to talking in that way. When we talk to someone who doesn’t share those presuppositions, we find ourselves having to unlearn a bunch of conversational habits on the fly. This is hard, so often the conversation runs aground. (This is why academics are so often so bad at explaining their research to non-academics.)

Talking across the political aisle raises exactly the same problem: it pulls the conversational rug of shared presuppositions out from under us.

Consider the following thought experiment. Suppose you think that climate change is human-caused. Now you find yourself in an Uber, talking to someone who, though open and curious, is unsure about that. It’s clear that he watches completely different media than you, has no direct experience with scientists or the institution of science, has a completely different social network, and is generically skeptical about powerful institutions. You have 5 minutes to explain why you believe in climate change. How well do you think you’ll do?

Not well! (If you doubt this, try it—you’re likely suffering from an illusion of explanatory depth.)

The result? Your interlocutor would probably come away thinking that you don’t have any good reasons for your views. But the fact that you seemed this way to him in a brief conversation obviously doesn’t show that you don’t have good reasons for your beliefs; rather, it shows how hard it is to convey those good reasons across such a large communicative divide.

Conformity.

“People are too conformist, and to the wrong sources.”

This argument uses a double-standard.

Either (i) people should listen to their social circles and trusted authorities, or (ii) they shouldn’t.

If (i) they should listen to their social circles and trusted authorities, the result will be that those with very different patterns of social trust than you should believe very different things. This is what happens to people like Elizabeth. When their friends, co-workers, and regular media outlets spend months talking about suspicious facts about the 2020 election, of course it’s reasonable for them to become skeptical. (Wouldn’t you do the same, if your friends and regular media outlets started saying such things about—say—the 2016 election?)

On the other hand, if (ii) people shouldn’t listen to their social circles and trusted authorities, then the consequence will be excess skepticism. This is what happens to those who are skeptical of vaccines, climate change, or science generally. In fact, people who believe in conspiracy theories have usually “done their own research”: people who are skeptical of the covid-19 vaccines know much more about the fine details of their development than I do.

Psychology.

“Psychology and behavioral economics have shown, beyond any doubt, that people are systematically irrational.”

They really haven’t.

That’s what my posts are about. It’s my attempt to step outside my presuppositional bubble and explain why there’s reason to be skeptical of narratives of irrationality.

In short: empirical work on irrationality always presupposes normative claims about what rational people would think or do in the situation. Often those claims are oversimplified—or just wrong.

I’ve argued that this is true for research on overconfidence, belief persistence, confirmation bias, the conjunction fallacy, the gambler’s fallacy, and polarization.

I'm not alone. Plenty of people—most notably, the cognitive scientists and AI researchers who try to replicate the everyday feats of human cognition—agree. We've learned a lot about how the mind works from studying the ways inferences can go wrong. But we have not learned that the explanation is that people are dumb, stupid, or foolish.

That’s my attempt to say why I’m unconvinced by the common reasons for suspending epistemic empathy. Maybe we really should think that those who disagree with us have good reasons to.

But I recognize the meta-point: lots of people disagree with me, about this! They’re convinced that irrationality is what drives many societal disagreements. And I’d be a hypocrite if I didn’t think those beliefs were reasonable, too. I do. (Which is not to say I think those beliefs are true—thinking a belief is reasonable is compatible with thinking it’s wrong.)

So please: share your reasons! I want to know why you think your political opponents are irrational, so I can better work out why (and to what degree) I disagree.

19 comments

comment by joseph_c (cooljoseph1) · 2023-12-29T08:10:12.788Z

This graph seems to match the rise of the internet. Here's my alternate hypothesis: Most people are irrational, and now it's more reasonable to call them crazy/stupid/fools because they have much greater access to knowledge that they are refusing/unable to learn from. I think people are just about as empathetic as they used to be, but incorrect people are less reasonable in their beliefs.

Replies from: Kevin Dorst

↑ comment by Kevin Dorst · 2024-01-01T16:15:30.402Z

Interesting! A middle-ground hypothesis is that people are just as (un)reasonable as they've always been, but the internet has given people greater exposure to those who disagree with them.

comment by Jiro · 2023-12-29T17:38:27.712Z

Like many other pieces of advice, some people need more epistemic empathy, some need less, and it's hard to aim your advice at only the ones who need more. In fact, it's unclear that you even recognize that there are a significant number who have too much of it.

Given the quokkas in the rationalist movement and on this site, I suspect that most of the audience has too much of it and could use less.

comment by Bruce Lewis (bruce-lewis) · 2023-12-28T03:02:39.022Z

I strongly upvoted this post because I believe epistemic empathy is important.

The word "irrational" has too many meanings, and I try to avoid it. And I try to direct criticism at arguments rather than people. But I do want to answer your final question as best I can. I'll just phrase it as problems with arguments rather than people's irrationality.

In my experience, the problem with arguments against COVID-19 vaccines is that they mainly consist of evidence that there's risk involved in getting vaccinated. To usefully argue against getting vaccinated, one needs evidence not only that vaccine risks exist, but that they're worse risks than those of remaining unvaccinated.

Similarly, arguments against masks are usually arguing against the wrong statement. They argue against "Masks always prevent transmission", when to be useful, they should be arguing against "Masks reduce transmission".

Replies from: dr_s

↑ comment by dr_s · 2023-12-28T08:17:12.918Z

> In my experience, the problem with arguments against COVID-19 vaccines is that they mainly consist of evidence that there's risk involved in getting vaccinated. To usefully argue against getting vaccinated, one needs evidence not only that vaccine risks exist, but that they're worse risks than those of remaining unvaccinated.

In the most extreme cases they also assume vast conspiracies that I assign a very low prior probability to simply based on "people can't do that sort of thing on that scale without screwing up". Paradoxically enough, very often conspiratorial beliefs assume more rationality and convergent behaviour (from the elites seen as hostile) than is warranted!

Replies from: sharmake-farah

↑ comment by Noosphere89 (sharmake-farah) · 2023-12-28T14:50:22.024Z

I'd say the main flaws in conspiracy theories are that they tend to assume that coordination is easy, especially when the conspiracy requires a large group of people to do something; that they generally assume agency/homunculi too much; and that they underestimate the costs of secrecy, especially when trying to do complicated tasks. As a bonus, many claimed conspiracy theories are also told as though they were narratives, which tends to be a general problem around a lot of subjects.

It's already hard enough to cooperate openly, and secrecy amplifies this difficulty a lot, so much so that conspiracies that are attempted usually go nowhere, and the successful conspiracies are a very small subset of all conspiracies attempted.

Replies from: dr_s

↑ comment by dr_s · 2023-12-28T15:02:31.452Z

Yeah, that's what I meant when I said people can't do that sort of thing on that scale without screwing it up. It just breaks down at some point.

Replies from: M. Y. Zuo

↑ comment by M. Y. Zuo · 2023-12-29T01:25:20.651Z

Counterpoint: PRISM, which was a very large, very complex operation dispersed over dozens or hundreds of locales, multiple governments, many private companies, etc., that managed to stay secret for at least a decade.

I wouldn't be surprised if something a bit less ambitious could be hidden for at least half a century.

Replies from: dr_s

↑ comment by dr_s · 2023-12-29T06:19:32.382Z

That is an interesting counterpoint, but there's the fact that things like PRISM can exist in at least something like a pseudo-legal space; if government spooks come to you and ask you to do X and Y because terrorism, and it sounds legit, that's probably a strong coordination mechanism. It still came out eventually.

To compare with COVID-19, there probably are forms of more or less convergent behaviours that produce a conspiracy-like appearance, but no space for real large conspiracies of that sort that I can think of. My most nigh-conspiratorial C19 opinions are that early "masks are useless" recommendations were more of a ploy to protect PPE stocks than genuine advice, and that regardless of its truth, a lab leak was discounted way too quickly and too thoroughly for political reasons. Neither of these, though, requires a large active conspiracy, but simply convergent interests and biases across specific groups of people.

Replies from: M. Y. Zuo

↑ comment by M. Y. Zuo · 2023-12-29T16:47:34.209Z

> if government spooks come to you and ask you to do X and Y because terrorism, and it sounds legit, that's probably a strong coordination mechanism.

There's no way that would apply to the people working at a facility intercepting high end Cisco routers by the truckload and planting backdoors on them, likely mostly bound for large enterprises of certain countries. No credible terrorist groups, or even all of them combined, would order so many thousands of high end routers month after month.

comment by gjm · 2023-12-27T21:17:09.633Z

I think you're (maybe deliberately) being unfair to David Lewis here.

I'm pretty sure that if you'd asked him "Are there, in the actual world, talking donkeys?" he would have said no. Which is roughly what most people would take "Are there talking donkeys?" to mean. And if someone says "there are talking donkeys", at least part of why we think there's something unreasonable about that is that if there were talking donkeys then we would expect to have seen them, and we haven't, which is not true of talking donkeys that inhabit other possible-but-not-actual worlds.

David Lewis believed (or at least professed to believe; I think he meant it) that the things we call "possible worlds" are as real as the world we inhabit. But he didn't think that they are the actual world and he didn't think that things existing in them need exist in the actual world. And I think (what I conjecture to have been) his actual opinion on this question is less absurd and more reasonable than the opinion you say, or at least imply, or at least insinuate, that he held.

Replies from: sharmake-farah

↑ comment by Noosphere89 (sharmake-farah) · 2023-12-28T01:54:17.160Z

This is one of my biggest pet peeves about a lot of languages: they basically have no way to bound the domain of discourse without getting quite complicated, and perhaps more formal as well. In ordinary communication, a claim is usually assumed to have a bounded domain of discourse that's different from the set of all possible X, whether it's realities, worlds, or whatever else is being talked about. I think this is the main problem with the attempt to make the claim "In the real world, there are talking donkeys" sound absurd, because "the real world" is essentially attempting to bound the domain of discourse to one world, the world we live in.

comment by metachirality · 2023-12-27T23:59:59.879Z

I think it makes more sense to word this as "others are not remarkably more irrational than you are" rather than saying that disagreements are not caused by irrationality.

Replies from: dr_s, sharmake-farah

↑ comment by dr_s · 2023-12-28T08:13:58.272Z

I think some of these cases aren't irrationality at all, but differences in partial information. Belief trajectories are not path-independent: even just learning of the same events in a different order will lead you to update differently. But there's also irrationality involved (e.g. I'm very unconvinced by arguments against the gambler's fallacy when there are people who express it in so many words all the time); it just shouldn't be your go-to explanation for dismissing everyone else but yourself.

↑ comment by Noosphere89 (sharmake-farah) · 2023-12-28T02:10:36.097Z

Yep, I think this is the likely wording as well, since on a quick read, I suspect that what the research is showing isn't that humans are rational, but rather that we simply can't be rational in realistic situations due to resource starvation/resource scarcity issues.

Note, that doesn't mean it's easy or possible at all to fix the problem of irrationality, but I might agree with "others are not remarkably more irrational than you are."

Replies from: Kevin Dorst

↑ comment by Kevin Dorst · 2024-01-01T16:25:08.218Z

I think it depends a bit on what we mean by "rational". But it's standard to define it as "doing the best you CAN, to get to the truth (or, in the case of practical rationality, to get what you want)". We want to put the "can" proviso in there so that we don't say people are irrational for failing to be omniscient. But once we put it in there, things like resource-constraints look a lot like constraints on what you CAN do, and therefore make less-ideal performance rational.

That's controversial, of course, but I do think there's a case to be made that (at least some) "resource-rational" theories ARE ones on which people are being rational.

comment by Samuel Hapák (hleumas) · 2023-12-27T19:39:22.844Z

Thank you for writing this.

I always felt lots of empathy for all the people in my out-group[1]. Yeah, they believe a bunch of wrong ideas for dubious reasons. So? My in-group usually believes a bunch of right ideas for similarly dubious reasons! They have no moral high ground from which to claim the truth. Often, the out-group has better reasons to believe what they do than my in-group, despite being wrong about the end result.

I actually think that it's worse when people are right for the wrong reasons than if they are wrong. In the latter case, there is at least a chance that reality will slap them in the face eventually.

[1] Out-group here is strongly topic-dependent. For pretty much every topic, the in-group and out-group look different to me.

comment by Lenmar · 2023-12-28T18:23:17.248Z

I'm curious how much of the decline stems from tribalistic moralization, and the rise of the cultural meme that all beliefs must support one tribe or another, and any belief associated with the other tribe leads inevitably to all the harm that the other tribe is (you believe) responsible for.

Replies from: Kevin Dorst

↑ comment by Kevin Dorst · 2024-01-01T16:28:39.614Z

Good question. It's hard to tell exactly, but there's lots of evidence that the rise in "affective polarization" (dislike of the other side) is linked to "partisan sorting" (or "ideological sorting")—the fact that people within political parties increasingly agree on more and more things, and also socially interact with each other more. Lilliana Mason has some good work on this (and Ezra Klein got a lot of his opinions in his book on this from her).

This paper raises some doubts about the link between the two, though. It's hard to know!