Telic intuitions across the sciences

post by mrcbarbier · 2022-10-22T21:31:28.672Z

[WORK IN PROGRESS - I will keep editing this post over time]

See the introductory post for a motivation.

Explaining a phenomenon in terms of function, role, purpose, goal or meaning is extremely common throughout, let's say, most of the sciences that are not physics or chemistry. Yet it is almost always done qualitatively, verbally, without real support from any kind of math.

In this post, I will try to list every important intuition about this class of concepts that I have stumbled upon so far. This probably does not make for a great page turner/scroller, but this is more of a reference post for future ones to quote.

Bias/competence disclosure: Having been trained all the way as a physicist and half-way as a linguist, my main inspirations are grammar and statistical mechanics. My understanding of other topics will be far more cursory, besides theoretical ecology which is where I work now. That being said, I do think everyone should learn a bit of grammar and stat mech, as those are virtuous pursuits.

General intuitions in existing theories

Generative grammar

Why grammar is an important example

Grammar is the only science I know which has been consistently good at not confusing "nature" and "function":

- Nature/category is what a word is, e.g. noun or adjective or verb... I can ask this question of the word in itself: is cat a noun or a verb?

- Function is what role a word serves in a larger context, e.g. cat serves as the subject in "Cat bites man." You cannot take the word out of context and ask of it, apart from any sentence: is cat a subject or a complement?

The same function can be served by many different natures (e.g. a verb-like thing serves as the subject in "To live forever sounds exciting/tiring."), and the same nature can perform many different functions.

Grammar is also the only science I know which has tried to propose autonomous and non-trivial theories of functions.

Quick recap: how grammar works

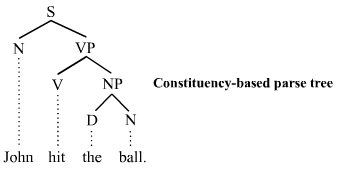

Many of you will have seen a parse tree such as the following (from Wikipedia)

Each node on such a tree is labeled with its nature (S: sentence, N: noun, V: verb, D: determiner; XP: phrase of type X). The position of a node in the tree indicates its grammatical function: the noun closest to the sentence is the grammatical subject, the noun phrase inside the verb phrase is the object.
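As a rough illustration of the distinction between nature and function, here is a minimal Python sketch of such a tree. The `Node` class, the `grammatical_functions` helper and the example sentence "John hit the ball" are assumptions made for this sketch, not part of any linguistic library or theory. Nature is stored as a label on each node, while function is never stored: it is read off from where a node sits.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    nature: str                      # category label: "S", "NP", "VP", "N", "V", "D"
    word: Optional[str] = None       # only leaves carry a word
    children: List["Node"] = field(default_factory=list)

    def text(self) -> str:
        """Return the words spanned by this node, in order."""
        if self.word is not None:
            return self.word
        return " ".join(child.text() for child in self.children)


def grammatical_functions(sentence: Node) -> Dict[str, str]:
    """Read functions off positions: the NP directly under S is the subject,
    the NP inside the VP is the object."""
    functions: Dict[str, str] = {}
    for child in sentence.children:
        if child.nature == "NP":
            functions["subject"] = child.text()
        elif child.nature == "VP":
            for grandchild in child.children:
                if grandchild.nature == "NP":
                    functions["object"] = grandchild.text()
    return functions


# Hypothetical example sentence, chosen for this sketch.
tree = Node("S", children=[
    Node("NP", children=[Node("N", "John")]),
    Node("VP", children=[
        Node("V", "hit"),
        Node("NP", children=[Node("D", "the"), Node("N", "ball")]),
    ]),
])

print(grammatical_functions(tree))
```

Both noun phrases have the same nature (`"NP"`); only their position in the tree tells the subject from the object.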

How do we come up with any of this? There is a quite fascinating empirical process in grammatical analysis. Linguists use native speakers as guinea pigs, and ask them to make judgments of grammaticality: we take a valid sentence, delete a word or phrase or clause, or substitute another, or apply some other transformation (e.g. switching between active and passive voice) and ask if the sentence is still grammatical. Then, we classify together components that behave the same way under these transformations. For instance, did you know that some verbs behave as if they only need a subject, and others behave as if they only need an object? This is shown by this kind of comparison (asterisk: expression judged ungrammatical):

| Sentence | Past participle as modifier |
| --- | --- |
| The snow melted. | the melted snow |
| The victim shouted. | *the shouted victim |

Even though snow is the grammatical subject in the first sentence, it is a semantic object: melting is happening to it. This has led to all sorts of ideas like "The snow starts as object in a subject-less sentence '_ melted the snow' and then moves as it gets promoted to subject of the sentence" which try to produce non-trivial predictions.

How grammar is relevant

Why is this telism rather than reductionism? Because we are not trying to explain the sentence from the words, but rather explain the words from the sentence. The judgment of grammaticality is a selective force applying to the sentence as a whole, and from it, we explain the role of each subcomponent, and within it each sub-subcomponent (much like natural selection applies to the organism as a whole, and from it, we explain each organ). The system is selectively simplest at large scales (a binary test: is the sentence grammatical or not), and most complex at small scales (an intricate question: what does a word contribute within each of the sentence's nested contexts).

Intuition: imposing laws upon possible functional hierarchies

Importantly, generative grammar has non-trivial claims about how functions are organized, that are mostly or entirely independent from the nature of components. A parse tree is just a representation of a functional hierarchy, but most theories put strong restrictions on which hierarchies are admissible.

A phrase structure theory such as X-bar claims that a parse tree is necessarily binary, and asymmetric: when two components combine, the resulting component behaves more like one of the two. In the example above, a Determiner and Noun combine to give a Noun Phrase that behaves very much like a noun (you could replace "the ball" by "Bill" and everything still works).
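The "binary and asymmetric" restriction can be sketched in a few lines of Python. This is only a toy rendering of the description above, not an implementation of X-bar theory: the `Constituent` class and the `merge` function are names invented for this sketch, and the choice of the noun as head of "the ball" follows the D+N -> NP analysis used in this post.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Constituent:
    category: str              # category of the head: "N", "V", "D", ...
    words: Tuple[str, ...]
    is_phrase: bool = False

    @property
    def label(self) -> str:
        """Phrases are labeled after their head: N -> NP, V -> VP."""
        return self.category + "P" if self.is_phrase else self.category


def merge(head: Constituent, dependent: Constituent, head_first: bool) -> Constituent:
    """Combine exactly two constituents (binary); the result inherits the
    category of only one of them, the head (asymmetric)."""
    if head_first:
        words = head.words + dependent.words
    else:
        words = dependent.words + head.words
    return Constituent(head.category, words, is_phrase=True)


the = Constituent("D", ("the",))
ball = Constituent("N", ("ball",))
hit = Constituent("V", ("hit",))

noun_phrase = merge(head=ball, dependent=the, head_first=False)
verb_phrase = merge(head=hit, dependent=noun_phrase, head_first=True)

print(noun_phrase.label, noun_phrase.words)
print(verb_phrase.label, verb_phrase.words)
```

Any tree built only with `merge` is automatically binary and headed, which is one simple way to picture a law restricting the admissible functional hierarchies independently of the words that fill them.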

Intuition: Roles and contexts as equivalence classes

A context can be defined as the equivalence class of all the words that "work" (that preserve meaning or grammaticality or both) in that context. A role/function may also be an equivalence class: component x is to sentence S what component x' is to sentence S'.
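A toy version of this idea, assuming a tiny made-up set of accepted sentences as a stand-in for native-speaker judgments: each context is a sentence with one open slot, and words are grouped by the set of contexts in which they "work". The data and the names `ACCEPTED`, `CONTEXTS` and `equivalence_classes` are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List

# Hypothetical grammaticality oracle: the sentences a speaker accepts.
ACCEPTED = {
    "the cat sleeps",
    "the dog sleeps",
    "a cat sleeps",
    "the cat eats",
}

# A context is a sentence with one open slot, written "_".
CONTEXTS = ["the _ sleeps", "_ cat sleeps", "the cat _"]
WORDS = ["cat", "dog", "the", "a", "sleeps", "eats"]


def works_in(word: str, context: str) -> bool:
    """A word works in a context if filling the slot gives an accepted sentence."""
    return context.replace("_", word) in ACCEPTED


def equivalence_classes(words: List[str], contexts: List[str]) -> List[List[str]]:
    """Group words that are accepted in exactly the same set of contexts."""
    groups: Dict[FrozenSet[str], List[str]] = defaultdict(list)
    for word in words:
        signature = frozenset(c for c in contexts if works_in(word, c))
        groups[signature].append(word)
    return sorted(sorted(group) for group in groups.values())


print(equivalence_classes(WORDS, CONTEXTS))
```

On this toy data the classes that come out look like determiners, nouns and verbs, even though no category label was ever given to the procedure: only judgments on whole sentences were used.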

How grammar is unsatisfactory

First, everyone who has taken grammar as an inspiration to do math has failed to escape the event horizon, and fallen back into the black hole of reductionist questions: how small things combine to generate larger things. It's even in the name: generative grammar. They will ask stuff such as "what rules should you put at the level of simple individual components to generate structures that look interesting/lifelike", which is as reductionist as it gets.

More importantly, grammar is far from having reached the level of consensus that one finds in e.g. physics. Various theories, various ways of building parse trees exist, and not everyone agrees on even the basics of what they should be. X-bar theory believes that the basic structure of any group of linguistic elements is subject-predicate: clarify what we're talking about, and what we are saying about it. In Less Wrongy terms, language tries to update your model of the world; to do so, it must first signal which existing part of the model should be updated (the subject), then what's novel about it (the predicate), and so on recursively for each sub-component. Since the predicate is a patch, an add-on, the whole group behaves more like the subject. For instance, the group "any cat" behaves like "cat", because "any" is a patch clarifying how we are using that concept - thus, D+N -> NP, the group acts like a noun (or does it?). Conversely "hit the ball" behaves as a verb.

This is plausible, but this is far from the only way that we can conceptualize language (critics say this view is overly influenced by logic), and the jury's still out. As a consequence, each theory has become more and more refined, and each is insightful on its own terms, but it is not even clear that grammar as a whole has made much consensual progress at all. Which is a bit sad for the most telos-aware of all existing sciences.

I can see at least two reasons why:

- maybe language is far too complicated to be the "perfect sphere falling in a void" of telism, and we need a much simpler toy example to jumpstart a fundamental theory.

- debates between grammatical schools are, in some sense, debates about meaning; maybe the functional structure of grammar cannot be grasped without also addressing semantics.

Semantics

It seems reasonable to say that meaning is pointing - meaning is always defined with reference to something external, and so it feels like we could connect it with the rest of the telic concepts (even if it may have a bunch of additional complexities).

A book holds no meaning

In the introductory post, I made a claim that a book does not contain its meaning - that meaning is not even a 'meaningful' thing to talk about when we consider a book on its own. Of course, that goes against our intuition that it is possible to "store" information.

A slightly longer version of the argument goes something like this:

Imagine that you build a "star decoder" comprised of a camera and a printer. When you point the camera at the stars in the northern hemisphere's night sky, the printer outputs the text of Hamlet. We can probably all agree that the text of Hamlet is not contained in the stars. But if you point the decoder at anything else, you don't get Hamlet but some other sequence of characters, so Hamlet is not really contained in the decoder alone either. Hamlet is only present in some three-way interaction between the night sky, the decoder, and you who can recognize the text for what it is. If that seems complicated, meaning is worse.
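The thought experiment can be mimicked with a one-time pad. In the sketch below, the "sky" is a string of pseudo-random bytes (an assumption standing in for a photograph of the stars), and the decoder is built so that it outputs a line of Hamlet only when combined with that particular sky. The function names are invented for this illustration, and the third party, the reader who recognizes the text, is not modeled at all.

```python
import hashlib


def fake_sky(name: str, length: int) -> bytes:
    """Deterministic pseudo-random bytes standing in for an image of the sky.
    Only valid for length <= 32 (one SHA-256 digest)."""
    return hashlib.sha256(name.encode()).digest()[:length]


def build_decoder(sky: bytes, text: bytes) -> bytes:
    """Build decoder settings that yield `text` when pointed at `sky`."""
    if len(sky) != len(text):
        raise ValueError("sky and text must have the same length")
    return bytes(s ^ t for s, t in zip(sky, text))


def decode(decoder: bytes, observation: bytes) -> bytes:
    """Point the decoder at something and print whatever comes out."""
    return bytes(d ^ o for d, o in zip(decoder, observation))


text = b"To be, or not to be"
northern_sky = fake_sky("northern sky", len(text))
southern_sky = fake_sky("southern sky", len(text))

decoder = build_decoder(northern_sky, text)

print(decode(decoder, northern_sky))   # the Hamlet line
print(decode(decoder, southern_sky))   # some other sequence of bytes
```

Taken alone, `northern_sky` and `decoder` are both just noise-like byte strings; the text only appears in their combination.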

How semantics is unsatisfactory

On the one hand, you will find the "physicists" of meaning, like structuralist or formal semantics, who try to describe it purely in terms of abstract relations:

- Structuralists since Saussure have held that meaning can only be defined by exclusion: cat means "everything, minus everything that is not a cat". Because the association of a sign and a meaning is arbitrary, we can only use boundaries in words to reflect boundaries in the world, without any intrinsic content to anything.

- Formal semantic theories derive from logic, and are thus preoccupied exclusively with truth value.

Neither of those is a very satisfactory definition of meaning IMHO, nor is the simple "meaning is reference/pointing". My problem is they are too flat, they lose everything about the substance of meaning, the vague qualitative intuitions that lead us to group meanings in clusters like "colors", "famous Egyptians" or "action verbs applicable to inanimate objects". Truth value could have worked a bit like natural selection: a sentence is true or not, like an organism lives or not, this is a simple constraint that can be used to explain the 'organs' functionally. But to push that biological analogy, all that formal semantics allows us to express is how the survival or death (truth or untruth) of parts determines the survival of a whole -- say, a living body requires a living heart but can survive with dead hair -- without telling us what a heart or a hair does.

On the other hand, you will find the "chemists" of meaning, like conceptual semantics, who try to build all word meanings from a finite set of simple, universal primitives ordered in a kind of periodic table. The problem is they don't have any physical law telling them what the right primitives are, where they come from and why they can or cannot combine the way they do in various languages. Again, qualitative intuitions have to do all the heavy lifting, while the formal aspects are pretty boring (to me).

Semantics and grammar

Semantics and grammar are partially independent, but I have come to some useful (to me) intuitions by considering them together from a telic perspective.

Let's say that the speaker and listener apply selection pressure on a sentence to have a particular meaning, and use a simple interpretation of meaning that harks back to the subject/predicate idea described above:

- subject: an algorithm for finding a referent in the experienced world,

- predicate: an update to the set of handles/known ways of interacting mentally or physically with that referent.

Essentially, the mind is seen here as a pattern-matching machine that tries to identify situations, and then unpacks a list of "moves" (predictions or actions) it knows it is allowed to perform in that situation. For instance, the meaning of a sentence like "Unicorns are fond of virgins" is: given a certain algorithm for finding unicorns (look for something horse-shaped, check if it has a horn), we can predict how these entities will react to the presence of a certain class of other entities. The fact that unicorns do not exist in the real world does not make the interpretation of this sentence any different, deep down, from saying "Men with red berets like beer" or "Anger is painful" - the algorithms are similarly constructed, even if it turns out some of them never end up tested by real experience (I, for one, have never seen a man with a red beret).

Furthermore, it appears that this semantic load gets split up between (at least) lexicon and syntax, and how it is distributed depends on the language. For instance, whether the sentence describes a past or future event, or whether it is said rudely or politely, is inscribed into the grammar in some languages (e.g. Korean), while it is only expressed by the addition of extra words like "tomorrow", "yesterday" or "please" in other languages (e.g. Mandarin, as far as I can tell).

Assuming you accept this rough premise, I can now formulate a few ideas of how grammar may guide our thinking about telos in general.

Intuition: grammatical words and non-factorizable selection

In physics, a basic example of selection from the outside is applying an electric or magnetic field to a bunch of particles. In a simple ferromagnet, the result is simply that every spin aligns more or less with the external field -- even though there are interactions, the selection on the whole translates very transparently into selection on each part.

The linguistic equivalent could be the lexical meaning of e.g. nouns. It's not hard to imagine a primitive kind of sentence as a bag of nouns, like a description of a picture or a tag system. Each noun transparently bears part of the semantic load of the whole. Likewise, the organism's burden of "being alive" is in some sense transparently transferred to organs, most of which should also be alive at any given point.

Yet, language has grammatical words (also called function words) - words like with or whom in "the man with whom I spoke". Such a word barely carries any semantics in itself, yet it is crucial in organizing the contributions of other words. It may be a pretty good hint that there is something interesting happening functionally when some part of the system does not seem to be doing something obviously related to selection on the whole, yet still causes the whole to fail if it is removed.

Intuition: Failure modes

A problem with grammatical parse trees is that, if a function is just a position in the tree, what's the substance of any function? How is the meaning of a verb different from the meaning of a noun? Why can two sentences with very different functions have a similar-looking tree? On the other hand, if we need to label each node in the tree with the nature of the components, then we cannot have a theory of functions that is independent from natures (and so telism does not have the same autonomy as reductionism).

I believe a useful framing is to think of various aspects of meaning as different failure modes of trying to convey useful information, for instance:

- Failure to identify the referent: nouns, determiners

- Failure to bound the statement in space and time: tense, pragmatics

and so on. The point is that, to add content, we might not need to know anything else about an object than how many different ways it has of failing (equivalent to the valence of a word i.e. how many positions it has open for complements, modifiers... each being a possible way in which the word fails to convey the necessary meaning), and how each potential failure at one level connects to failure at other levels (e.g. a failure of tense at the verb level entails a failure of temporal precision at the sentence level).

Agents, goals and values

I have been trying to avoid discussing goals and the Intentional stance because I feel this is neither the best starting point for this endeavor, nor a place where I have significant insights to contribute (the Design stance is good enough). Still, there can be some interesting insights to gather there.

Mīmāṁsā

Grammar is the source of many of my telic intuitions. Modern grammatical theory was born when 19th-century Europeans read ancient Indian linguistic texts, such as the grammar of Sanskrit by Pāṇini, and realized they'd better step up their game. Ancient Indian linguistics was born from the even more ancient Indian science of ritual, called Mīmāṁsā. Thus: modern grammar, meet great-grandma.

Most of what you can read online about Mīmāṁsā discusses really tangential points of its epistemology, which got popular in the West. The core of Mīmāṁsā is a simple question: how do you decide that a ritual is correct and effective? It reads very much like 2nd century BC Less Wrong with Vedic injunctions in lieu of Bayes' rule. In particular, the Mīmāṁsakas discuss the hierarchical structure of action in a way that sometimes bears a striking resemblance to parse trees (see above).

They list different ways in which a subgoal can derive from a higher goal, and how to detect that hierarchy in an existing ritual. Amusingly, a recurrent argument is that the less obvious the purpose of an action, the more likely it is to serve a higher-level hidden goal, while more intuitive actions should be thought of as subordinate to it. For instance, bringing wood serves the obvious purpose of lighting the fire, so it is a subordinate action, whereas burning an offering serves no other obvious purpose and is thus higher in the hierarchy.

(I believe that this is very close to how our brain infers latent variables such as hidden values and beliefs driving other humans' behavior, and that that's precisely why rituals are a core social activity, essential to transferring those values and beliefs, but that's a can of worms for another day.)

Biology

Intuition: Context as filtering

Meaning or function are essentially context-dependent properties, but we do not have any mathematical way of defining context that makes this concept easy to manipulate. If our only way to define the context of a component is describing exhaustively the entire system minus that component, it becomes really hard to identify what a context does and evaluate whether two contexts are similar or different.

Here is a possible direction: In a technological or biological setting, it often makes sense to think of systems (both the whole and its component subsystems) as black boxes defined by their input/output relationship. We may then formalize what a context is as "how system-wide inputs are filtered before reaching the component, and how component outputs are transformed into system-wide outputs". For instance, a membrane severely filters the possible external inputs into any inner cell process, but also prevents these processes from 'leaking' most of their byproducts to the outside. This definition of context as filtering allows us to ignore what the context is made of, and thus to avoid the problem of having to describe it exhaustively, as 'every other object in the world'. It also clarifies how even the notion of context depends on the overall system we are taking as a starting point. And it may be something that we can give a clear mathematical interpretation.
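One possible way to make this concrete, offered as a sketch rather than a worked-out formalism: represent a context as a pair of functions, an input filter and an output transform, wrapped around a black-box component. Everything below (the `Context` class, the `metabolism` component and its made-up numbers, the two membranes) is hypothetical and carries no biological claim.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable

Signal = Dict[str, float]
Box = Callable[[Signal], Signal]


@dataclass
class Context:
    filter_in: Box       # system-wide input -> what the component actually sees
    transform_out: Box   # component output -> what reaches the outside


def embed(component: Box, context: Context) -> Box:
    """Return the system-level black box obtained by placing the component in the context."""
    def system(system_input: Signal) -> Signal:
        return context.transform_out(component(context.filter_in(system_input)))
    return system


def keep_only(*names: str) -> Box:
    """A filter that lets through only the named channels."""
    allowed = set(names)
    return lambda signal: {k: v for k, v in signal.items() if k in allowed}


def metabolism(inputs: Signal) -> Signal:
    """Toy inner process with arbitrary coefficients."""
    glucose = inputs.get("glucose", 0.0)
    return {"atp": 2.0 * glucose, "heat": 1.0 * glucose}


def same_context(a: Context, b: Context, component: Box, probes: Iterable[Signal]) -> bool:
    """Two contexts are equivalent for a component if they give the same
    system-level behavior on every probe input."""
    return all(embed(component, a)(p) == embed(component, b)(p) for p in probes)


membrane = Context(filter_in=keep_only("glucose"), transform_out=keep_only("heat"))
leakier_membrane = Context(filter_in=keep_only("glucose", "oxygen"),
                           transform_out=keep_only("heat"))

cell = embed(metabolism, membrane)
print(cell({"glucose": 3.0, "toxin": 5.0}))
```

The two membranes are built differently, yet `same_context` cannot tell them apart for this component, since `metabolism` ignores oxygen. Comparing contexts then only requires probing input/output behavior, not describing what the context is made of.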

Protein sequence and function, and molecular evolution

An interesting system and a potential Rosetta stone for reductionism and telism:

- Selection at the level of the whole (protein function) can be rigorously defined, typically as "it must fold in one or a few states such that it binds to a certain other protein", which can be tested by simulation, at least in principle. This selective principle can then be used to explain the function of various domains within the protein.

- At the same time, the space of possible states is entirely generated by the underlying genetic code, which obeys rigorously known rules.

Overall, this is an almost unique situation in biology: having a complete and quantitative handle on what "selection" means and on how candidate states are generated. The duality of spaces (sequences and spatial configurations) and the complex mapping between them suggests that boring, fragile or idiosyncratic optimization is not so likely. Unfortunately the basic problem is computationally very hard, but toy models are possible and often interesting.

Networks and systems biology

Here I am talking about theory on genetic networks, metabolic networks, (natural) neural networks, ecological networks and so forth. The basic premise is that we decide on some level at which we treat components as black boxes, and study their patterns of connection.

What is unsatisfactory about systems biology?

At its least relevant to my purposes, there's a lot of classic reductionism, dealing with how motifs are built from smaller parts and how their structure determines what the whole motif does.

At its most relevant, it becomes very evocative of functional architecture, talking of stuff like modularity, and you'll even find some numerical experiments showing that a constraint on the whole can create constraints on the parts... but then everyone stops short of developing an actual theory for it. Perusing this literature, one sees all the right intuitions trying to take flight, and then back down to either verbal just-so stories, or reductionist/dynamical questions of "how it is implemented", "how could it evolve" and so on.

Ecology & the niche

The most annoying concept in ecology is the niche, because it usually feels like there is no way to define it that is not tautological. IMHO, the reason is that all the basic intuitions are telic in nature, and there is no established language for those.

Ecologists trying to explain species diversity and coexistence can broadly be split into those who think in terms of networks (often many species, arbitrary links between them), and those who think in terms of niches (usually focusing on one or a few salient traits and trade-offs to explain who competes with whom and how strongly).

But what is your niche if not a you-shaped hole in the world? i.e. explaining the attributes of a species by fitting into its context, by selection coming down from a larger system. While we can say interesting stuff about interactions looking only at two species together, the niche concept really clicks only if we consider the entire environment. This suggests that the only interesting way of thinking about niches is to relate them to meta-niches, meta-meta-niches, etc.

Reductionist labels on telic objects - e.g. the Carbon Cycle

Ecology has beautiful examples of the "reductionist black hole", where someone attempts a telic explanation and then gets drawn to reductionism.

We verbally explain the existence of a species by its contribution to some much larger phenomenon. Then we try to give a reductionist name and interpretation to that phenomenon, such as the carbon cycle.

To me, that is like calling vision the "photon cycle".

The fact that the 'cycle' is 'carried' by photons is really the least interesting thing about it, although it makes it measurable and properly scientific because we are talking about well-defined particles. Trying to model all visual interactions between species by just having a global model of how many photons are reflected and intercepted might "work" to some extent, but it is also beautifully missing the point.

Software architecture, strategy games

Role = interface

Games like chess, with their finite (if massive) configuration space and extensive data and analytical tools, could be a great starting point for an empirical investigation of nested purposes. Likewise, with a bit more difficulty, for software architecture.

Intuition: Functions can be quite universal at the top of the hierarchy but become implementation-specific going down

This intuition comes as easily from tactics vs strategy as it does from programming: chess and go probably share the same large-scale strategic insights, although converting them from one game to the other may require complex transformations (e.g. a piece in chess may sometimes play a similar role to a pattern or region on the go board). At the other end, tactics are highly dependent on the peculiarities of micro-level rules in each game, such as which moves are allowed, what shape the board is, etc.

Intuition: Interesting functions arise from the combination of multiple selective forces

A strategy game is a fight between two (or more) opposing selective forces, two possible frames for giving meaning to any move or set of moves. Defense and offense are functions that make sense within those frames: a move that attempts to preserve agreement to one selection rule, or attack the other.

More generally, a function may be defined by how a component contributes to a multiplicity of selection pressures taking place at various scales: a certain type of move may be tactically offensive while strategically defensive.

Ties to and differences with other formal theories

Statistical physics, inference, optimization, variational calculus

For my purposes, optimization is a subset of statistical physics, the zero temperature limit. Switching between stat phys and Bayesian inference is almost just a matter of perspective. So I will treat all these cousins in one breath.

Both stat mech and variational explanations show selection at work even in the most basic physics: when we say that light takes the shortest path, we don't say anything about how it does it, we don't decompose the system into molecules. We simply deduce which states will be observed given a system-wide constraint. Likewise, equilibrium laws like PV=NkT lack any kind of dynamics or causal structure - they don't tell us whether P is causing T or the other way around, nor what particles are doing. They are constraints selecting acceptable states (those whose P and V correspond to their N and T). External selection is applied by the bath of energy and/or particles that surrounds the system of interest, biasing the realization of states with a Gibbs/Boltzmann weight.

This suggests we could model role or function as a mapping between probabilistic biases applied to the whole system and biases arising in a subsystem.
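A minimal numerical sketch of such a mapping, assuming a handful of fully connected Ising spins (a modeling choice made here only for illustration): a uniform field biases the whole system through the Boltzmann weight, and we measure the resulting bias on a single spin. The function name `subsystem_bias` and the parameter values are hypothetical.

```python
import itertools
import math


def subsystem_bias(n_spins: int, field: float, coupling: float, beta: float = 1.0) -> float:
    """Mean value of the first spin when the whole system is weighted by
    exp(-beta * E), with E = -field * sum(s_i) - coupling * sum_{i<j} s_i s_j."""
    total_weight = 0.0
    weighted_spin = 0.0
    for state in itertools.product((-1, 1), repeat=n_spins):
        pair_sum = sum(a * b for a, b in itertools.combinations(state, 2))
        energy = -field * sum(state) - coupling * pair_sum
        weight = math.exp(-beta * energy)
        total_weight += weight
        weighted_spin += weight * state[0]
    return weighted_spin / total_weight


# Without interactions, selection on the whole factorizes: each spin simply
# feels the field and its mean is tanh(beta * field).
independent = subsystem_bias(n_spins=3, field=0.5, coupling=0.0)

# With ferromagnetic interactions, the same system-wide bias is amplified
# on each part by the other parts.
coupled = subsystem_bias(n_spins=3, field=0.5, coupling=1.0)

print(independent, math.tanh(0.5), coupled)
```

The interesting object is the map from `field` (bias on the whole) to the returned value (bias on the part). In the non-interacting case it is transparent, as in the simple ferromagnet example earlier in this post; with interactions it starts to depend on what the rest of the system is doing.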

Intuition: distributed modes are as relevant as localized modules

The focus on modularity, on spatial contiguity to think about functionally coherent subsystems may be deceptive, given that statistical physics gives many examples of nonlocally-held information (Hopfield memories, distributed computation, even just waves). There may be some way to rethink it in local terms, such as modularity in Fourier rather than direct space.

Information theory

The classical objects of information theory are the ideal gas of telism, the most basic thing that we can talk about and still say something interesting.

Information is not meaning, shared information is still not meaning

Information theory has nothing to say about meaningful information. Any measurement you can make on an object in itself, such as entropy, is by definition missing the point of what I want to call meaning, since it does not refer to any larger context/system. Shared information, transfer entropy and the like (e.g. Granger, Lizier) are a bit closer... but still not there - it's building complexity up in reductionism, not building it down in telism. Confusing the two is the same kind of problem I have with systems biology: mistaking the medium for the message.

Ideal gas and beyond

A cipher is pretty much an ideal gas from a functional viewpoint: many non-interacting copies of the same basic selection rule, namely that one symbol in the original message should be systematically associated with one symbol in the encoded message. The system-wide goal is to be able to discriminate between any two original messages. This resonates with the Saussurean idea of meaning purely as discrimination, as a mapping between ruptures in language (different words) and ruptures in the real world (different objects).

An error-correcting code is then a bit more complicated than an ideal gas, let's say a van der Waals gas. It has basic "grammatical words" (parity bits, which are not meaningful in themselves) and simple interactions with data bits, all deriving from having added another system-wide goal which is to be robust to a certain level of noise.
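To make the two analogies tangible, here is a small Python sketch. The cipher is a plain letter-shift substitution, where each symbol is handled independently of all others. The error-correcting code is the standard Hamming(7,4) code, picked here as one familiar example (the post does not name a specific code): its three parity bits carry no data of their own but interact with the data bits so that any single flipped bit can be located and repaired.

```python
import string
from typing import Dict, List


def make_cipher(shift: int) -> Dict[str, str]:
    """Substitution table over lowercase letters: one symbol in, one symbol out."""
    letters = string.ascii_lowercase
    return {c: letters[(i + shift) % 26] for i, c in enumerate(letters)}


def encipher(message: str, table: Dict[str, str]) -> str:
    """Apply the same rule to every symbol independently (lowercase letters only)."""
    return "".join(table[c] for c in message)


def hamming_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits as 7 bits, laid out as [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming_decode(codeword: List[int]) -> List[int]:
    """Correct up to one flipped bit, then return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_position = s1 + 2 * s2 + 4 * s3   # 1-based position, 0 if no error
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


print(encipher("cat", make_cipher(1)))

data = [1, 0, 1, 1]
codeword = hamming_encode(data)
corrupted = list(codeword)
corrupted[4] ^= 1                            # noise flips one bit
print(hamming_decode(corrupted) == data)
```

In `encipher`, removing one table entry only affects the symbols that use it. In the Hamming code, each parity bit is defined through several data bits at once, which is where the "interactions" come from once robustness to noise is added as a second system-wide goal.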

Category theory

An important concept from category theory is that of Cartesian category, which essentially refers to any object that behaves well under reductionism. This strongly suggests that the type of maths we want to use for telism will come from non-Cartesian categories, but that leaves a lot of possibilities. The minimal generic kind of non-Cartesianness in physics seems to be quantum entanglement, but that's a very specific way of adding context-dependence.

An example of maybe building the right kind of Rosetta stone between generative and selective viewpoints is the work by John Baez and coworkers on category theory for circuits, see in particular: Baez and Fong, https://arxiv.org/pdf/1504.05625. This is perhaps one of the most advanced attempts at having something like "applied" category theory, and still it feels pretty darn philosophical, or - much more likely - I am missing the implications of the foundational work being done.

Taxonomies of function

Functionalism is a whole thing in linguistics, sociology, anthropology and so on. It is the typical example of qualitative telism: people coming up with taxonomies of goals or purposes or roles, and going around putting labels on things and describing relations between these labels.

[WIP: Here I will try to list examples that could be insightful for future work, and progressively work my way through them to understand if there is any structure or regularity there]

Software architecture

(From SWEBOK and other sources)

Normal behavior

Modularity

Abstraction (interface, encapsulation, hiding internal structure)

Coupling

Time/resources

Synchronization, parallelization, performance

Event handling

Data Persistence

User interface

Abnormal behavior

Exception handling

Security

Architectural styles

• General structures (for example, layers, pipes and filters, blackboard)

• Distributed systems (for example, client-server, three-tier, broker)

• Interactive systems (for example, Model-View-Controller, Presentation-Abstraction-Control)

• Adaptable systems (for example, microkernel, reflection)

• Others (for example, batch, interpreters, process control, rule-based)

Design patterns

• Creational patterns (for example, builder, factory, prototype, singleton)

• Structural patterns (for example, adapter, bridge, composite, decorator, façade, flyweight, proxy)

• Behavioral patterns (for example, command, interpreter, iterator, mediator, memento, observer, state, strategy, template, visitor)

Evolution

Persist

Limit or avoid change

Protection/Isolation

Mobility

Revert change (correction)

Expand or export self

Reproduction

Mobility

Generate diversity

Ecology

Trophic roles: defined with respect to the flux of energy and matter through the system

Producer

Consumer

Recycler

Host

Parasite

Pollinator

Commensal

Succession roles: defined with respect to the temporal sequence of compositions

Pioneer

Competitor

Nurse

Climax

Invader

Dynamical roles:

Regulator

Keystone

Strategy

An important peculiarity of strategy games is having two system-wide selective forces (each player trying to win), thus every move's function is defined with respect to its impact on each of the two.

Offense:

- Cause damage

- Restrict action potential

Defense:

- Resist

- Avoid

- Delay
