Metaculus Predicts Weak AGI in 2 Years and AGI in 10

post by Chris_Leong · 2023-03-24T19:43:18.522Z · LW · GW · 14 comments

Comments sorted by top scores.

comment by Gabe M (gabe-mukobi) · 2023-03-24T21:21:35.552Z · LW(p) · GW(p)

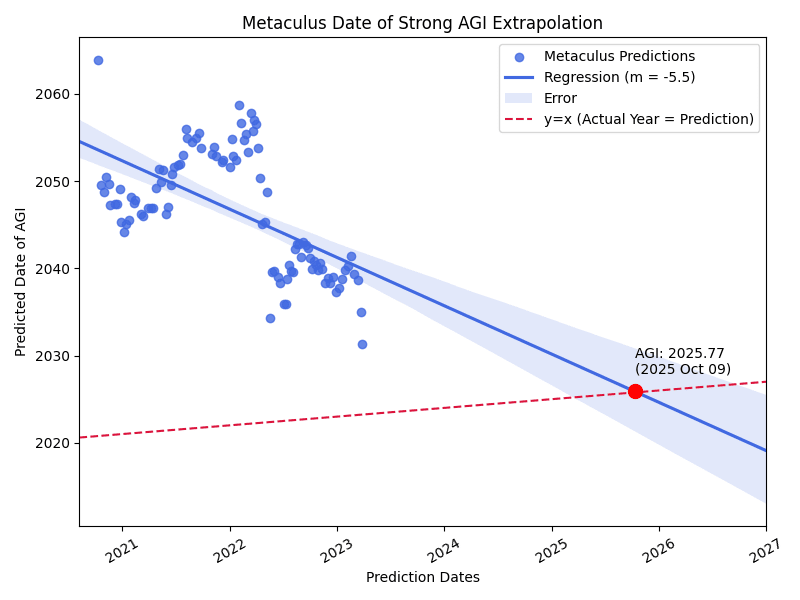

Here's a plot of the Metaculus Strong AGI predictions over time (code by Rylan Schaeffer). The x-axis is the date of the prediction, the y-axis is the predicted year of strong AGI at the time. The blue line is a linear fit. The red dashed line is a y = x line.

Interestingly, it seems these predictions have been dropping by 5.5 predicted years per real year, which is much faster than I imagined. If we extrapolate out and look at where the blue regression intersects the red y = x line (that is, assuming similar updating in the future, the point at which the predicted date would equal the date of the prediction), we get 2025.77 (October 9, 2025) for AGI.

Edit: Re-pulled the Metaculus data which slightly changed the regression (m=-6.5 -> -5.5) and intersection (2025.44 -> 2025.77).
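
The extrapolation described in this comment amounts to fitting a line y = m·x + b and solving m·x + b = x, which gives x = b / (1 − m). Below is a minimal Python sketch of that calculation. It is not the plotting code credited above: the function names are hypothetical, and the three data points are made up for illustration rather than taken from Metaculus.

```python
import calendar
from datetime import datetime, timedelta

import numpy as np


def fit_line(x, y):
    """Least-squares fit y ≈ m*x + b; returns (m, b)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x0 = x.mean()  # centre x so the fit stays well conditioned for years near 2000
    m, c = np.polyfit(x - x0, y, deg=1)
    return float(m), float(c - m * x0)


def crossing_with_identity(m, b):
    """Solve m*x + b = x, i.e. where the fitted line meets y = x."""
    if np.isclose(m, 1.0):
        raise ValueError("fit is parallel to y = x; no unique crossing")
    return b / (1.0 - m)


def decimal_year_to_date(t):
    """Convert a decimal year such as 2025.77 to a calendar date."""
    year = int(t)
    days_in_year = 366 if calendar.isleap(year) else 365
    return (datetime(year, 1, 1) + timedelta(days=(t - year) * days_in_year)).date()


# Made-up illustrative points, NOT the Metaculus data:
# the date each forecast was made and the forecast year, as decimal years.
made_on = [2022.0, 2022.5, 2023.0]
forecast = [2050.0, 2048.0, 2046.0]

m, b = fit_line(made_on, forecast)
x_star = crossing_with_identity(m, b)
print(f"slope = {m:.2f} predicted years per real year, crossing at {x_star:.2f}")
```

Calling `decimal_year_to_date(2025.77)` reproduces the October 9, 2025 date quoted in the comment.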

Replies from: gwern

↑ comment by gwern · 2023-03-25T17:58:21.199Z · LW(p) · GW(p)

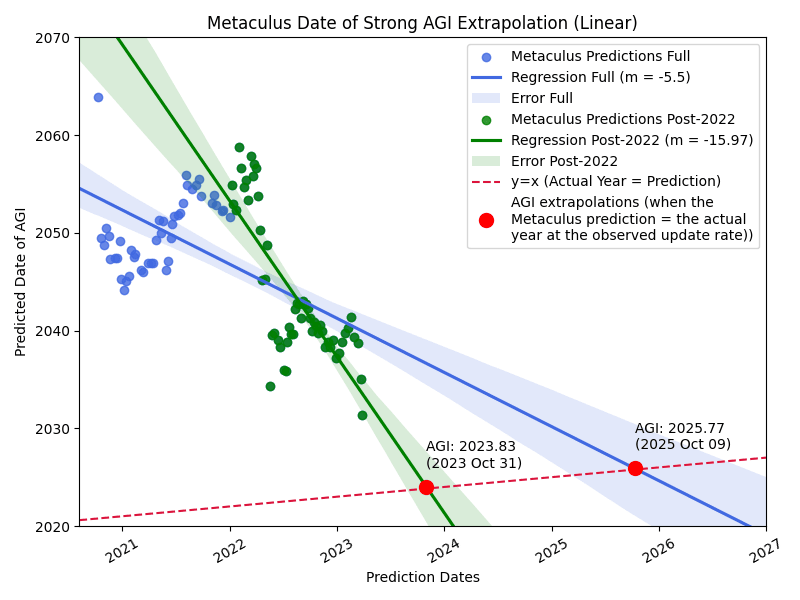

There's a striking inverted V-shape there (which would be even clearer if you fit a segmented regression with 1 break point), where it's drifting up for all of 2021, only to suddenly reverse and drop like a stone over 2022-2023.

What's the qualitative characterization of Metaculus collective views there? At least from my perspective, 2021 seemed in line with scaling expectations with tons of important DL research demonstrating the scaling hypothesis & blessings of scale. I don't remember anything that would've been pivotal around what looks like a breakpoint Dec 2021 - if it had happened around or after Feb 2022, then you could point to Chinchilla / Gato / PaLM / Flamingo / DALL-E 2, but why Dec 2021?
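
A segmented regression with one break point, as suggested above, can be sketched as a grid search over candidate break dates, fitting a continuous two-segment line at each candidate and keeping the one with the smallest squared error. This is only one simple way to do it, not something the thread specifies. The function name is hypothetical and the data are synthetic, built with a known break at 2022.0 so the result can be checked.

```python
import numpy as np


def fit_one_break(x, y, candidates):
    """Grid-search a single break point for a continuous two-segment line.

    Model: y ≈ a + b*(x - x0) + c*max(0, x - k), where k is the break point.
    Returns (k, slope_before, slope_after, sse) for the best candidate.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x0 = x.mean()  # centre x for numerical stability
    best = None
    for k in candidates:
        hinge = np.maximum(0.0, x - k)  # zero before the break, linear after
        design = np.column_stack([np.ones_like(x), x - x0, hinge])
        coef, *_ = np.linalg.lstsq(design, y, rcond=None)
        sse = float(np.sum((design @ coef - y) ** 2))
        if best is None or sse < best[3]:
            best = (float(k), float(coef[1]), float(coef[1] + coef[2]), sse)
    return best


# Synthetic, noise-free data (NOT the Metaculus series): forecasts drift up
# by 2 years per year until 2022.0, then fall by 8 years per year.
x = np.linspace(2021.0, 2023.0, 25)
y = 2040.0 + 2.0 * (x - 2021.0) - 10.0 * np.maximum(0.0, x - 2022.0)

k, slope_before, slope_after, sse = fit_one_break(
    x, y, candidates=np.linspace(2021.25, 2022.75, 7)
)
print(f"break at {k:.2f}: slope {slope_before:.1f} before, {slope_after:.1f} after")
```

On noisy real data the error curve over candidate break points is often fairly flat, which bears on whether the break can be pinned to a particular month.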

Replies from: gabe-mukobi, dsj

↑ comment by Gabe M (gabe-mukobi) · 2023-03-26T04:16:24.907Z · LW(p) · GW(p)

Good idea. Here's the fit considering only the predictions from 2022 onward. You then get a prediction update rate of 16 years per year.

Replies from: dsj

↑ comment by dsj · 2023-03-26T12:38:35.541Z · LW(p) · GW(p)

Of course the choice of what sort of model we fit to our data can sometimes preordain the conclusion.

Another way to interpret this is that the community made a very steep update in early 2022, and since then it’s been relatively flat, or perhaps trending down slowly with a lot of noise (whereas before the update it was trending up slowly).

↑ comment by dsj · 2023-03-26T02:56:15.386Z · LW(p) · GW(p)

Seems to me there's too much noise to pinpoint the break at a specific month. There are some predictions made in early 2022 with an even later date than those made in late 2021.

But one pivotal thing around that time might have been the chain-of-thought stuff which started to come to attention then (even though there was some stuff floating around Twitter earlier).

comment by Ben Pace (Benito) · 2023-03-24T20:37:55.454Z · LW(p) · GW(p)

Even though Eliezer claims that there was no fire alarm for AGI, perhaps this is the fire alarm?

I mean, obviously not, as most governments don't know that Metaculus exists or what a prediction market is.

Replies from: lechmazur, akram-choudhary

↑ comment by Lech Mazur (lechmazur) · 2023-04-05T00:20:35.331Z · LW(p) · GW(p)

Here is something more governments might pay attention to: https://today.yougov.com/topics/technology/survey-results/daily/2023/04/03/ad825/3.

46% of U.S. adults are very concerned or somewhat concerned about the possibility that AI will cause the end of the human race on Earth.

↑ comment by Akram Choudhary (akram-choudhary) · 2023-04-04T22:40:07.565Z · LW(p) · GW(p)

What on earth does the government have to do with a fire alarm? The fire alarm continues to buzz even if everyone in the room is deaf. It is just sending a signal, not promising that any particular action will be taken as a consequence.

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2023-04-04T23:40:08.754Z · LW(p) · GW(p)

The use of the fire alarm analogy [LW · GW] on LessWrong is specifically about its effect, not about it being an epiphenomenon to people's behavior.

comment by AnthonyC · 2023-03-26T00:36:51.625Z · LW(p) · GW(p)

There are many fire alarms, or the people here in this discussion wouldn't know to be worried. There's just no way to know when the last fire alarm is. How often in offices and public spaces do you see everyone just ignore fire alarms because they assume nothing is actually wrong? I see it all the time.

comment by Christopher King (christopher-king) · 2023-03-25T01:16:59.168Z · LW(p) · GW(p)

It appears that Metaculus's forecasts are not internally consistent:

https://www.metaculus.com/questions/9062/time-from-weak-agi-to-superintelligence/

Community Prediction: 42.6 months

Replies from: Teerth Aloke

↑ comment by Teerth Aloke · 2023-03-25T03:04:55.738Z · LW(p) · GW(p)

Difference in criteria.

Replies from: christopher-king

↑ comment by Christopher King (christopher-king) · 2023-03-25T03:36:39.624Z · LW(p) · GW(p)

Yes, but the criteria still imply that the gap should be ≥ the difference in the other two forecasts.
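
The consistency check described here is simple arithmetic, sketched below in Python. The inputs are assumptions: the rounded "2 years" and "10 years" figures from the post title stand in for the two forecasts, and 42.6 months is the community prediction quoted above. The function names are hypothetical. Comparing point forecasts this way is only a rough check, since the median of a difference need not equal the difference of the medians.

```python
def implied_min_gap_months(years_to_weak_agi, years_to_strong_agi):
    """Lower bound on the weak-AGI-to-superintelligence gap, in months,
    assuming superintelligence arrives no earlier than strong AGI."""
    return (years_to_strong_agi - years_to_weak_agi) * 12.0


def is_consistent(gap_forecast_months, years_to_weak_agi, years_to_strong_agi):
    """True if the gap forecast is at least the implied lower bound."""
    bound = implied_min_gap_months(years_to_weak_agi, years_to_strong_agi)
    return gap_forecast_months >= bound


# Rounded figures from the post title (assumed, not exact Metaculus medians).
bound = implied_min_gap_months(2, 10)
print(f"implied lower bound: {bound:.1f} months; forecast gap: 42.6 months")
print("consistent" if is_consistent(42.6, 2, 10) else "not consistent")
```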

comment by [deleted] · 2023-03-24T20:50:47.456Z · LW(p) · GW(p)

I keep seeing the phrase "if you are going to update, update all the way" as an argument against these projection updates.

Basically, the argument goes that people should have already expected GPT-3.5 and GPT-4, because those "in the know" 3 years ago used it and knew AGI was possible.

For most of us, the update came with the public release. Prior to public access, crypto scams and AGI news had the same breathless tone and hype. Direct access is the only way to know the difference between, say, GPT-4, which works, and IBM Watson, which evidently did not.

So the news we are reacting to is just a few months old.