Formal Solution to the Inner Alignment Problem

post by michaelcohen (cocoa) · 2021-02-18T14:51:14.117Z · LW · GW · 123 comments

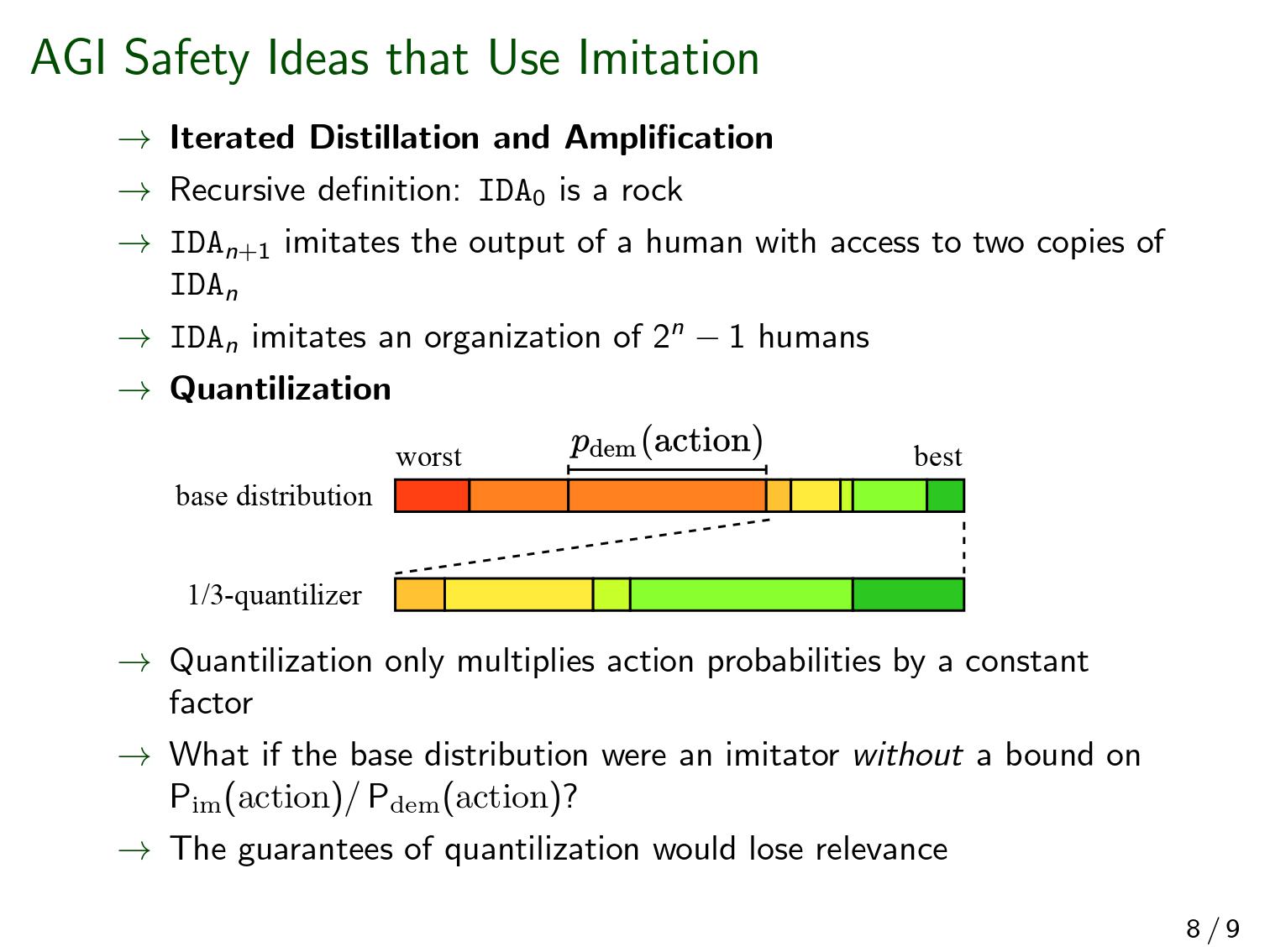

We've written a paper on online imitation learning, and our construction allows us to bound the extent to which mesa-optimizers could accomplish anything. This is not to say it will definitely be easy to eliminate mesa-optimizers in practice, but investigations into how to do so could look here as a starting point. The way to avoid outputting predictions that may have been corrupted by a mesa-optimizer is to ask for help when plausible stochastic models disagree about probabilities.

Here is the abstract:

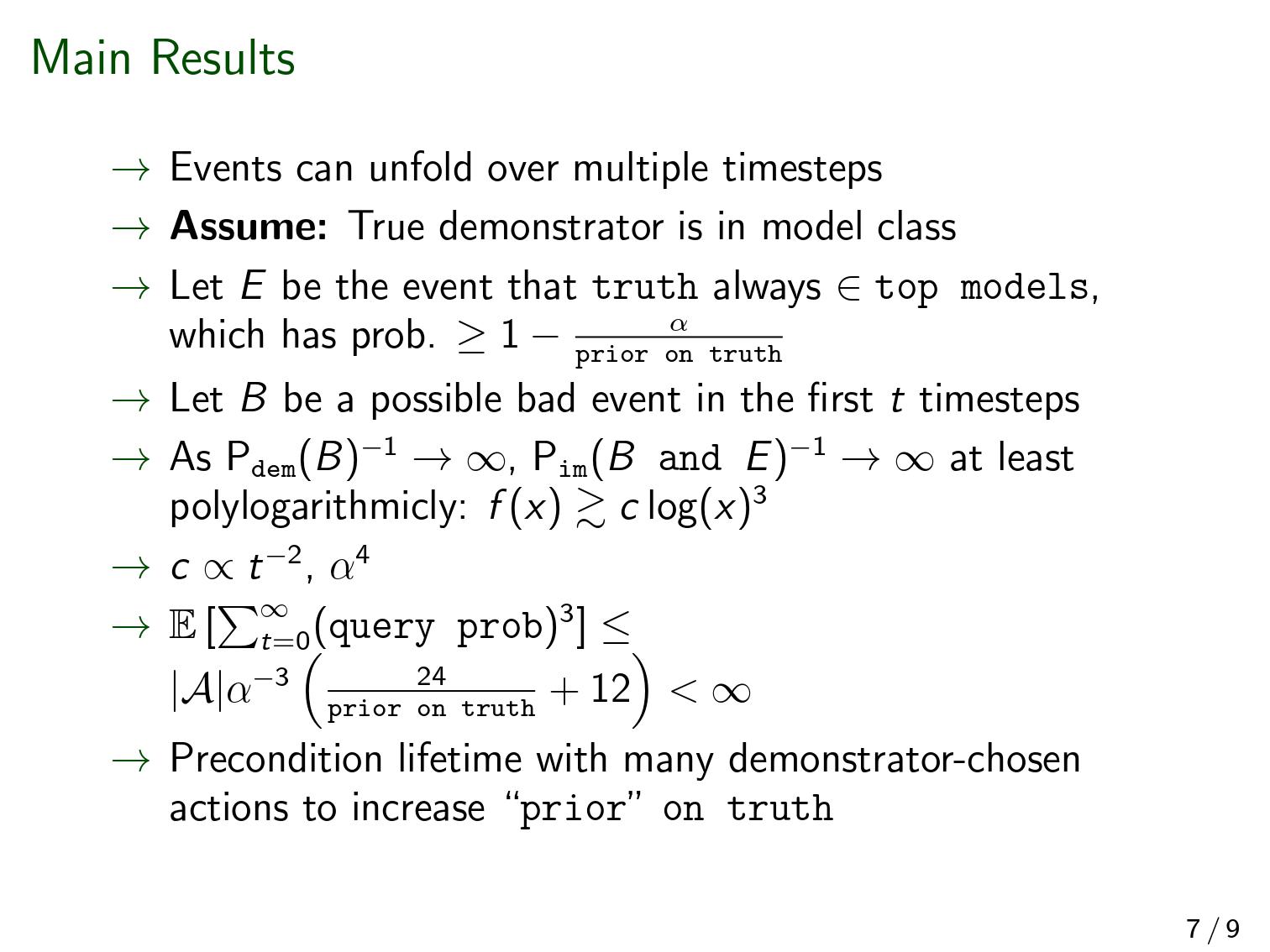

In imitation learning, imitators and demonstrators are policies for picking actions given past interactions with the environment. If we run an imitator, we probably want events to unfold similarly to the way they would have if the demonstrator had been acting the whole time. No existing work provides formal guidance in how this might be accomplished, instead restricting focus to environments that restart, making learning unusually easy, and conveniently limiting the significance of any mistake. We address a fully general setting, in which the (stochastic) environment and demonstrator never reset, not even for training purposes. Our new conservative Bayesian imitation learner underestimates the probabilities of each available action, and queries for more data with the remaining probability. Our main result: if an event would have been unlikely had the demonstrator acted the whole time, that event's likelihood can be bounded above when running the (initially totally ignorant) imitator instead. Meanwhile, queries to the demonstrator rapidly diminish in frequency.
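As a rough illustration of "underestimate each action's probability and query with the remainder", here is a minimal Python sketch. It is not the construction from the paper: the rule for choosing "plausible" models (posterior weight at least `alpha` times the largest weight), the pointwise minimum across those models, and all function and parameter names are assumptions made for this example.

```python
import random

def conservative_action_probs(posterior, model_action_probs, alpha=0.5):
    """Return (per-action probabilities, query probability).

    posterior[i]          : posterior weight of model i
    model_action_probs[i] : model i's distribution over actions
    alpha                 : hypothetical plausibility threshold (assumption)
    """
    top = max(posterior)
    # Assumption: a model is "plausible" if its weight is within a factor alpha of the best.
    plausible = [i for i, w in enumerate(posterior) if w >= alpha * top]
    n_actions = len(model_action_probs[0])
    # Underestimate: each action gets the smallest probability any plausible model assigns it.
    act = [min(model_action_probs[i][a] for i in plausible) for a in range(n_actions)]
    # Whatever probability mass is left over is spent on asking the demonstrator.
    query = max(1.0 - sum(act), 0.0)
    return act, query

def sample_or_query(posterior, model_action_probs, alpha=0.5, rng=random):
    """Sample an action index, or return None to signal a query to the demonstrator."""
    act, _ = conservative_action_probs(posterior, model_action_probs, alpha)
    r, cumulative = rng.random(), 0.0
    for action, p in enumerate(act):
        cumulative += p
        if r < cumulative:
            return action
    return None
```

When the plausible models agree, the minimum equals their shared distribution and the query probability is zero; the more they disagree, the more mass goes to querying.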

The second-last sentence refers to the bound on what a mesa-optimizer could accomplish. We assume a realizable setting (positive prior weight on the true demonstrator-model). There are none of the usual embedding problems here—the imitator can just be bigger than the demonstrator that it's modeling.

(As a side note, even if the imitator had to model the whole world, it wouldn't be a big problem theoretically. If the walls of the computer don't in fact break during the operation of the agent, then "the actual world" and "the actual world outside the computer conditioned on the walls of the computer not breaking" both have equal claim to being "the true world-model", in the formal sense that is relevant to a Bayesian agent. And the latter formulation doesn't require the agent to fit inside the world that it's modeling.)

Almost no mathematical background is required to follow [Edit: most of] the proofs. [Edit: But there is a bit of jargon. "Measure" means "probability distribution", and a "semimeasure" is like a probability distribution except that it may sum to less than one.] We feel our bounds could be made much tighter, and we'd love help investigating that.

These slides (pdf here) are fairly self-contained and a quicker read than the paper itself.

Below, and refer to the probability of the event supposing the demonstrator or imitator were acting the entire time. The limit below refers to successively more unlikely events ; it's not a limit over time. Imagine a sequence of events such that .

123 comments

comment by paulfchristiano · 2021-02-22T21:18:46.227Z · LW(p) · GW(p)

I haven't read the paper yet, looking forward to it. Using something along these lines to run a sufficiently-faithful simulation of HCH seems like a plausible path to producing an aligned AI with a halting oracle. (I don't think that even solves the problem given a halting oracle, since HCH is probably not aligned, but I still think this would be noteworthy.)

First I'm curious to understand this main result so I know what to look for and how surprised to be. In particular, I have two questions about the quantitative behavior described here:

if an event would have been unlikely had the demonstrator acted the whole time, that event's likelihood can be bounded above when running the (initially totally ignorant) imitator instead. Meanwhile, queries to the demonstrator rapidly diminish in frequency.

1: Dependence on the prior of the true hypothesis

It seems like you have the following tough case even if the human is deterministic:

- There are N hypotheses about the human, of which one is correct and the others are bad.

- I would like to make a series of N potentially-catastrophic decisions.

- On decision k, all (N-1) bad hypotheses propose the same catastrophic prediction. We don't know k in advance.

- For the other (N-1) decisions, (N-2) of the bad hypotheses make the correct prediction a*, and the final bad hypothesis claims that action a* would be catastrophic.

In this setting, it seems like I have no hope other than to query the human on all N decisions (since all days and hypotheses are symmetrical), so I assume that this is what your algorithm would do.

That strongly suggests that the number of queries to the human goes as 1 / p(correct demonstrator), unless you use some other feature of the hypothesis class. But p(correct demonstrator) is probably less than , so this constant might not be acceptable. Usually we try to have a logarithmic dependence on p(correct demonstrator) but this doesn't seem possible here.
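To make the tough case concrete, here is a small simulation under one reading of the setup. The assumptions are mine: the learner acts only when all unfalsified hypotheses agree and otherwise queries, a query falsifies every hypothesis that disagreed with the human, and each non-trap decision has its own distinct dissenting bad hypothesis. The function name and indexing are hypothetical.

```python
def count_queries(n, k):
    """Queries needed with n hypotheses and n decisions, where decision k is the trap.

    Hypothesis 0 is the truth. On decision k every bad hypothesis predicts the
    catastrophic action. On the j-th other decision, bad hypothesis j + 1 is the
    lone dissenter (an assumption about how dissenters are assigned).
    """
    alive = set(range(n))
    others = [t for t in range(n) if t != k]
    dissenter = {t: j + 1 for j, t in enumerate(others)}
    queries = 0
    for t in range(n):
        def agrees_with_human(h):
            if h == 0:
                return True
            if t == k:
                return False
            return h != dissenter[t]
        # Query only if the surviving hypotheses disagree with each other.
        if len({agrees_with_human(h) for h in alive}) > 1:
            queries += 1
            alive = {h for h in alive if agrees_with_human(h)}
    return queries
```

Under these assumptions the count is `k + 1` when the trap is not last and `n - 1` when it is, so the worst case and the average over a uniformly random `k` both grow linearly in `n`, which matches the 1 / p(correct demonstrator) scaling when the prior is uniform.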

So as you say, we'd want to condition on some relevant facts to get up to the point where that probability might be acceptably-high. So then it seems like we have two problems:

- It still seems like the actual probability of the truth is going to be quite low, so it seems like we need some future result that uses a tighter bound than just p(truth). That is, we need to exploit the fact that the treacherous hypotheses in the example above are pretty weird and intuitively there aren't that many of them, and that more reasonable hypotheses won't be trying so hard to make sneaky catastrophic actions. Maybe you already have this and I missed it.

(It feels to me like this is going to involve moving to the non-realizable setting at the same time. Vanessa has some comments elsewhere about being able to handle that with infra-Bayesianism, though I am fairly uncertain whether that is actually going to work.)

- There's a real question about p(treachery) / p(correct model), and if this ratio is bad then it seems like we're definitely screwed with this whole approach.

Does that all seem right?

2: Dependence on the probability bound

Suppose that I want to bound the probability of catastrophe as times the demonstrator probability of catastrophe. It seems like the number of human queries must scale at least like . Is that right, and if so what's the actual dependence on epsilon?

I mostly ask about this because in the context of HCH we may need to push epsilon down to 1/N. But maybe there's some way to avoid that by considering predictors that update on counterfactual demonstrator behavior in the rest of the tree (even though the true demonstrator does not), to get a full bound on the relative probability of a tree under the true demonstrator vs model. I haven't thought about this in years and am curious if you have a take on the feasibility of that or whether you think the entire project is plausible.

Replies from: vanessa-kosoy, cocoa, vanessa-kosoy

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-02-26T14:10:27.145Z · LW(p) · GW(p)

Here's the sketch of a solution to the query complexity problem.

Simplifying technical assumptions:

- The action space is {0, 1}

- All hypotheses are deterministic

- Predictors output maximum likelihood predictions instead of sampling the posterior

I'm pretty sure removing those is mostly just a technical complication.

Safety assumptions:

- The real hypothesis has prior probability lower bounded by some known quantity , so we discard all hypotheses of probability less than from the onset.

- Malign hypotheses have total prior probability mass upper bounded by some known quantity (determining parameters and is not easy, but I'm pretty sure any alignment protocol will have parameters of some such sort.)

- Any predictor that uses a subset of the prior without malign hypotheses is safe (I would like some formal justification for this, but seems plausible on the face of it.)

- Given any safe behavior, querying the user instead of producing a prediction cannot make it unsafe (this can and should be questioned but let it slide for now.)

Algorithm: On each round, let be the prior probability mass of unfalsified hypotheses predicting and be the same for .

- If or , output

- If or , output

- Otherwise, query the user

Analysis: As long as , on each round we query, is halved. Therefore there can only be roughly such rounds. On the following rounds, each round we query at least one hypothesis is removed, so there can only be roughly such rounds. The total query complexity is therefore approximately .
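The protocol above can be sketched in Python as follows. The symbols in the original comment were lost in extraction, so the details here are assumptions: a binary action space, a parameter `eps` standing for the bound on malign prior mass, and an output rule of the form "output 0 if the mass on 0 exceeds the mass on 1 by more than `eps`, or if no surviving hypothesis predicts 1" (and symmetrically for 1). The margin condition is inferred from the reply noting that malign models can only shift the outcome by a bounded amount; the zero-mass condition and all names are hypothetical.

```python
def run_protocol(priors, predictions, truth, eps):
    """Run the query-or-predict protocol over a sequence of rounds.

    priors[i]         : prior mass of hypothesis i (low-mass hypotheses assumed already discarded)
    predictions[i][t] : deterministic 0/1 prediction of hypothesis i on round t
    truth[t]          : what the user would answer on round t
    eps               : assumed upper bound on total malign prior mass
    Returns (outputs, number_of_queries).
    """
    alive = set(range(len(priors)))
    outputs, queries = [], 0
    for t, answer in enumerate(truth):
        # Prior mass of unfalsified hypotheses predicting 0 and 1 respectively.
        p0 = sum(priors[i] for i in alive if predictions[i][t] == 0)
        p1 = sum(priors[i] for i in alive if predictions[i][t] == 1)
        if p0 > p1 + eps or p1 == 0:
            outputs.append(0)
        elif p1 > p0 + eps or p0 == 0:
            outputs.append(1)
        else:
            # Too close to call: ask the user, then falsify hypotheses that got it wrong.
            queries += 1
            outputs.append(answer)
            alive = {i for i in alive if predictions[i][t] == answer}
    return outputs, queries
```

Note that in this sketch hypotheses are only falsified on rounds where the user is queried, since those are the only rounds on which the true answer is observed.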

Replies from: paulfchristiano, cocoa

↑ comment by paulfchristiano · 2021-03-19T22:12:10.915Z · LW(p) · GW(p)

I agree that this settles the query complexity question for Bayesian predictors and deterministic humans.

I expect it can be generalized to have complexity in the case with stochastic humans where treacherous behavior can take the form of small stochastic shifts.

I think that the big open problems for this kind of approach to inner alignment are:

- Is bounded? I assign significant probability to it being or more, as mentioned in the other thread between me and Michael Cohen, in which case we'd have trouble. (I believe this is also Eliezer's view.)

- It feels like searching for a neural network is analogous to searching for a MAP estimate, and that more generally efficient algorithms are likely to just run one hypothesis most of the time rather than running them all. Then this algorithm increases the cost of inference by which could be a big problem.

- As you mention, is it safe to wait and defer or are we likely to have a correlated failure in which all the aligned systems block simultaneously? (e.g. as described here)

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-04-02T17:03:49.801Z · LW(p) · GW(p)

Is bounded? I assign significant probability to it being or more, as mentioned in the other thread between me and Michael Cohen, in which case we'd have trouble.

Yes, you're right. A malign simulation hypothesis can be a very powerful explanation to the AI for why it found itself at a point suitable for this attack, thereby compressing the "bridge rules" by a lot. I believe you argued as much in your previous writing, but I managed to confuse myself about this.

Here's a sketch of a proposal for how to solve this. Let's construct our prior to be the convolution of a simplicity prior with a computational easiness prior. As an illustration, we can imagine a prior that's sampled as follows:

- First, sample a hypothesis from the Solomonoff prior

- Second, choose a number according to some simple distribution with high expected value (e.g. ) with

- Third, sample a DFA with states and a uniformly random transition table

- Fourth, apply to the output of

We think of the simplicity prior as choosing "physics" (which we expect to have low description complexity but possibly high computational complexity) and the easiness prior as choosing "bridge rules" (which we expect to have low computational complexity but possibly high description complexity). Ofc this convolution can be regarded as another sort of simplicity prior, so it differs from the original simplicity prior merely by a factor of , however the source of our trouble is also "merely" a factor of .
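A toy version of the four sampling steps might look like the sketch below. Several pieces are stand-ins: the Solomonoff prior is uncomputable, so the "physics" output is just passed in as a bit stream; the distribution over the number of states is a truncated power law chosen only for illustration (the original example distribution was lost in extraction); and giving each DFA state an output bit, so that the automaton can transform a stream, is an added assumption. All names are hypothetical.

```python
import random

def sample_state_count(rng, alpha=0.1, n_max=1000):
    """Heavy-tailed choice of DFA size: weights n^(-1-alpha), truncated at n_max (assumption)."""
    sizes = range(1, n_max + 1)
    weights = [n ** (-1.0 - alpha) for n in sizes]
    return rng.choices(sizes, weights=weights)[0]

def sample_dfa(n_states, rng, alphabet=(0, 1)):
    """Uniformly random transition table, plus a random output bit per state (assumption)."""
    transitions = {(s, a): rng.randrange(n_states)
                   for s in range(n_states) for a in alphabet}
    outputs = [rng.choice(alphabet) for _ in range(n_states)]
    return transitions, outputs

def apply_dfa(dfa, stream):
    """Feed the stream through the DFA, emitting the output bit of each state visited."""
    transitions, outputs = dfa
    state, result = 0, []
    for symbol in stream:
        state = transitions[(state, symbol)]
        result.append(outputs[state])
    return result

def sample_hypothesis_output(physics_stream, rng):
    """'Physics' output (stand-in for a Solomonoff sample) passed through random 'bridge rules'."""
    n = sample_state_count(rng)
    return apply_dfa(sample_dfa(n, rng), physics_stream)
```

The point of the sketch is only the division of labor: the stream carries the low-description-complexity part, and the randomly drawn automaton carries the cheap-to-run but possibly hard-to-describe part.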

Now the simulation hypothesis no longer has an advantage via the bridge rules, since the bridge rules have a large constant budget allocated to them anyway. I think it should be possible to make this into some kind of theorem (two agents with this prior in the same universe that have access to roughly the same information should have similar posteriors, in the limit).

Replies from: paulfchristiano

↑ comment by paulfchristiano · 2021-04-05T19:47:19.513Z · LW(p) · GW(p)

I broadly think of this approach as "try to write down the 'right' universal prior." I don't think the bridge rules / importance-weighting consideration is the only way in which our universal prior is predictably bad. There are also issues like anthropic update and philosophical considerations about what kind of "programming language" to use and so on.

I'm kind of scared of this approach because I feel unless you really nail everything there is going to be a gap that an attacker can exploit. I guess you just need to get close enough that is manageable but I think I still find it scary (and don't totally remember all my sources of concern).

I think of this in contrast with my approach based on epistemic competitiveness, where the idea is not necessarily to identify these considerations in advance, but to be epistemically competitive with an attacker (inside one of your hypotheses) who has noticed an improvement over your prior. That is, if someone inside one of our hypotheses has noticed that e.g. a certain class of decisions is more important and so they will simulate only those situations, then we should also notice this and by the same token care more about our decision if we are in one of those situations (rather than using a universal prior without importance weighting). My sense is that without competitiveness we are in trouble anyway on other fronts, and so it is probably also reasonable to think of it as a first-line defense against this kind of issue.

We think of the simplicity prior as choosing "physics" (which we expect to have low description complexity but possibly high computational complexity) and the easiness prior as choosing "bridge rules" (which we expect to have low computational complexity but possibly high description complexity).

This is very similar to what I first thought about when going down this line. My instantiation runs into trouble with "giant" universes that do all the possible computations you would want, and then using the "free" complexity in the bridge rules to pick which of the computations you actually wanted. I am not sure if the DFA proposal gets around this kind of problem though it sounds like it would be pretty similar.

Replies from: vanessa-kosoy

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-04-06T21:11:21.656Z · LW(p) · GW(p)

I'm kind of scared of this approach because I feel unless you really nail everything there is going to be a gap that an attacker can exploit.

I think that not every gap is exploitable. For most types of biases in the prior, it would only promote simulation hypotheses with baseline universes conformant to this bias, and attackers who evolved in such universes will also tend to share this bias, so they will target universes conformant to this bias and that would make them less competitive with the true hypothesis. In other words, most types of bias affect both and in a similar way.

More generally, I guess I'm more optimistic than you about solving all such philosophical liabilities.

I think of this in contrast with my approach based on epistemic competitiveness, where the idea is not necessarily to identify these considerations in advance, but to be epistemically competitive with an attacker (inside one of your hypotheses) who has noticed an improvement over your prior.

I don't understand the proposal. Is there a link I should read?

This is very similar to what I first thought about when going down this line. My instantiation runs into trouble with "giant" universes that do all the possible computations you would want, and then using the "free" complexity in the bridge rules to pick which of the computations you actually wanted.

So, you can let your physics be a dovetailing of all possible programs, and delegate to the bridge rule the task of filtering the outputs of only one program. But the bridge rule is not "free complexity" because it's not coming from a simplicity prior at all. For a program of length , you need a particular DFA of size . However, the actual DFA is of expected size with . The probability of having the DFA you need embedded in that is something like . So moving everything to the bridge makes a much less likely hypothesis.

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-03-20T18:43:33.000Z · LW(p) · GW(p)

Is bounded? I assign significant probability to it being or more, as mentioned in the other thread between me and Michael Cohen, in which case we'd have trouble.

I think that there are roughly two possibilities: either the laws of our universe happen to be strongly compressible when packed into a malign simulation hypothesis, or they don't. In the latter case, shouldn't be large. In the former case, it means that we are overwhelmingly likely to actually be inside a malign simulation. But, then AI risk is the least of our troubles. (In particular, because the simulation will probably be turned off once the attack-relevant part is over.)

[EDIT: I was wrong, see this [AF(p) · GW(p)].]

It feels like searching for a neural network is analogous to searching for a MAP estimate, and that more generally efficient algorithms are likely to just run one hypothesis most of the time rather than running them all.

Probably efficient algorithms are not running literally all hypotheses, but, they can probably consider multiple plausible hypotheses. In particular, the malign hypothesis itself is an efficient algorithm and it is somehow aware of the two different hypotheses (itself and the universe it's attacking). Currently I can only speculate about neural networks, but I do hope we'll have competitive algorithms amenable to theoretical analysis, whether they are neural networks or not.

As you mention, is it safe to wait and defer or are we likely to have a correlated failure in which all the aligned systems block simultaneously? (e.g. as described here)

I think that the problem you describe in the linked post can be delegated to the AI. That is, instead of controlling trillions of robots via counterfactual oversight, we will start with just one AI project that will research how to organize the world. This project would top any solution we can come up with ourselves.

Replies from: paulfchristiano

↑ comment by paulfchristiano · 2021-03-20T22:21:44.966Z · LW(p) · GW(p)

I think that there are roughly two possibilities: either the laws of our universe happen to be strongly compressible when packed into a malign simulation hypothesis, or they don't. In the latter case, shouldn't be large. In the former case, it means that we are overwhelmingly likely to actually be inside a malign simulation.

It seems like the simplest algorithm that makes good predictions and runs on your computer is going to involve e.g. reasoning about what aspects of reality are important to making good predictions and then attending to those. But that doesn't mean that I think reality probably works that way. So I don't see how to salvage this kind of argument.

But, then AI risk is the least of our troubles. (In particular, because the simulation will probably be turned off once the attack-relevant part is over.)

It seems to me like this requires a very strong match between the priors we write down and our real priors. I'm kind of skeptical about that a priori, but then in particular we can see lots of ways in which attackers will be exploiting failures in the prior we write down (e.g. failure to update on logical facts they observe during evolution, failure to make the proper anthropic update, and our lack of philosophical sophistication meaning that we write down some obviously "wrong" universal prior).

In particular, the malign hypothesis itself is an efficient algorithm and it is somehow aware of the two different hypotheses (itself and the universe it's attacking).

Do we have any idea how to write down such an algorithm though? Even granting that the malign hypothesis does so it's not clear how we would (short of being fully epistemically competitive); but moreover it's not clear to me the malign hypothesis faces a similar version of this problem since it's just thinking about a small list of hypotheses rather than trying to maintain a broad enough distribution to find all of them, and beyond that it may just be reasoning deductively about properties of the space of hypotheses rather than using a simple algorithm we can write down.

Replies from: vanessa-kosoy

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-03-22T00:04:02.451Z · LW(p) · GW(p)

It seems like the simplest algorithm that makes good predictions and runs on your computer is going to involve e.g. reasoning about what aspects of reality are important to making good predictions and then attending to those. But that doesn't mean that I think reality probably works that way. So I don't see how to salvage this kind of argument.

I think it works differently. What you should get is an infra-Bayesian hypothesis which models only those parts of reality that can be modeled within the given computing resources. More generally, if you don't endorse the predictions of the prediction algorithm then either you are wrong or you should use a different prediction algorithm.

How can the laws of physics be extra-compressible within the context of a simulation hypothesis? More compression means more explanatory power. I think that it must look something like, we can use the simulation hypothesis to predict the values of some of the physical constants. But, it would require a very unlikely coincidence for physical constants to have such values unless we are actually in a simulation.

It seems to me like this requires a very strong match between the priors we write down and our real priors. I'm kind of skeptical about that a priori, but then in particular we can see lots of ways in which attackers will be exploiting failures in the prior we write down (e.g. failure to update on logical facts they observe during evolution, failure to make the proper anthropic update, and our lack of philosophical sophistication meaning that we write down some obviously "wrong" universal prior).

I agree that we won't have a perfect match but I think we can get a "good enough" match (similarly to how any two UTMs that are not too crazy give similar Solomonoff measures.) I think that infra-Bayesianism solves a lot of philosophical confusions, including anthropics and logical uncertainty, although some of the details still need to be worked out. (But, I'm not sure what specifically you mean by "logical facts they observe during evolution"?) Ofc this doesn't mean I am already able to fully specify the correct infra-prior: I think that would take us most of the way to AGI.

Do we have any idea how to write down such an algorithm though?

I have all sorts of ideas, but still nowhere near the solution ofc. We can do deep learning while randomizing initial conditions and/or adding some noise to gradient descent (e.g. simulated annealing), producing a population of networks that progresses in an evolutionary way. We can, for each prediction, train a model that produces the opposite prediction and compare it to the default model in terms of convergence time and/or weight magnitudes. We can search for the algorithm using meta-learning. We can do variational Bayes with a "multi-modal" model space: mixtures of some "base" type of model. We can do progressive refinement of infra-Bayesian hypotheses, s.t. the plausible hypotheses at any given moment are the leaves of some tree.

moreover it's not clear to me the malign hypothesis faces a similar version of this problem since it's just thinking about a small list of hypotheses rather than trying to maintain a broad enough distribution to find all of them

Well, we also don't have to find all of them: we just have to make sure we don't miss the true one. So, we need some kind of transitivity: if we find a hypothesis which itself finds another hypothesis (in some sense) then we also find the other hypothesis. I don't know how to prove such a principle, but it doesn't seem implausible that we can.

it may just be reasoning deductively about properties of the space of hypotheses rather than using a simple algorithm we can write down.

Why do you think "reasoning deductively" implies there is no simple algorithm? In fact, I think infra-Bayesian logic might be just the thing to combine deductive and inductive reasoning.

↑ comment by michaelcohen (cocoa) · 2021-02-26T15:15:31.772Z · LW(p) · GW(p)

This is very nice and short!

And to state what you left implicit:

If , then in the setting with no malign hypotheses (which you assume to be safe), 0 is definitely the output, since the malign models can only shift the outcome by , so we assume it is safe to output 0. And likewise with outputting 1.

I'm pretty sure removing those is mostly just a technical complication

One general worry I have about assuming that the deterministic case extends easily to the stochastic case is that a sequence of probabilities that tends to 0 can still have an infinite sum, which is not true when probabilities must be 0 or 1, and this sometimes causes trouble. I'm not sure this would raise any issues here--just registering a slightly differing intuition.

↑ comment by michaelcohen (cocoa) · 2021-02-23T14:06:43.291Z · LW(p) · GW(p)

It seems like you have the following tough case even if the human is deterministic:

- There are N hypotheses about the human, of which one is correct and the others are bad.

- I would like to make a series of N potentially-catastrophic decisions.

- On decision k, all (N-1) bad hypotheses propose the same catastrophic prediction. We don't know k in advance.

- For the other (N-1) decisions, (N-2) of the bad hypotheses make the correct prediction a*, and the final bad hypothesis claims that action a* would be catastrophic.

In this setting, it seems like I have no hope other than to query the human on all N decisions (since all days and hypotheses are symmetrical), so I assume that this is what your algorithm would do.

They're not quite symmetrical: midway through, some bad hypotheses will have been ruled out for making erroneous predictions about the demonstrator previously. But your conclusion is still correct. And

That strongly suggests that the number of queries to the human goes as 1 / p(correct demonstrator)

It's certainly no better than that, and the bound I prove is worse.

Usually we try to have a logarithmic dependence on p(correct demonstrator) but this doesn't seem possible here.

Yeah. Error bounds on full Bayesian are logarithmic in the prior weight on the truth, but error bounds on maximum a posteriori prediction are just inverse in the prior weight, and your example above is the one to show it. If each successive MAP model predicts wrong at each successive timestep, it could take N timesteps to get rid of N models, which is how many might begin with a prior weight exceeding the truth, if the truth has a prior weight of 1/N.
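The contrast between the two regimes can be seen in a toy experiment. This is a sketch under simplifying assumptions of my own: models are deterministic and binary, the prior is uniform (so the bad models tie with the truth rather than strictly exceeding it), the true label is revealed after every prediction, and MAP breaks ties toward the lowest index, which here is the adversarial order. The function name is hypothetical.

```python
def count_errors(n, use_map):
    """Prediction errors made over n timesteps with n models and a uniform prior.

    Model n - 1 is the truth and always predicts 0.
    Model i < n - 1 predicts 1 only at timestep i, so each bad model errs exactly once.
    """
    alive = list(range(n))
    errors = 0
    for t in range(n):
        def predict(i):
            return 1 if (i == t and i != n - 1) else 0
        if use_map:
            # MAP: follow a single top model; all survivors tie, so take the lowest index.
            guess = predict(alive[0])
        else:
            # Full Bayes: predict with the weighted majority of surviving models.
            ones = sum(1 for i in alive if predict(i) == 1)
            guess = 1 if 2 * ones > len(alive) else 0
        if guess != 0:
            errors += 1
        # The true label (always 0) is revealed; drop every model that predicted 1.
        alive = [i for i in alive if predict(i) == 0]
    return errors
```

Here MAP errs `n - 1` times, once per bad model, while the majority vote never errs because a lone dissenter is always outvoted. In general, for deterministic models, a weighted-majority predictor loses at least half of the surviving mass on every mistake, which is where the logarithmic bound comes from.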

But, this situation seems pretty preposterous to me in the real world. If the agent's first observation is, say, this paragraph, the number of models with prior weight greater than the truth that predicted something else as the first observation, will probably be a number way, way different from one. I'd go so far as to say at least half of models with prior weight greater than the truth would predict a different observation than this very paragraph. As long as this situation keeps up, we're in a logarithmic regime.

I'm not convinced this logarithmic regime ever ends, but I think the case is more convincing that we at least start there, so let's suppose now that it eventually ends, and after this point the remaining models with posterior weight exceeding the truth are deliberately erring at unique timesteps. What's the posterior on the truth now? This is a phase where all the top models are "capable" of predicting correctly. This shouldn't look like at all. It will look more like p(correct model)/p(treachery).

And actually, depending on the composition of treacherous models, it could be better. To the extent some constant fraction of them are being treacherous at particular times, the logarithmic regime will continue. There are two reasons why I expect that to hold.

- Since there will always be particularly good times to capitalize on treachery, a decent fraction of treacherous models should err at similar times.

- The goals of a treacherous model will impact what to predict, but treacherous models with different goals must predict different false things if they are to have different effects. In your original discussion of treacherous models, you argue the strategy they would take is convergent (well-timed erroneous predictions), while the goals they would have are diverse (and thus more well-represented in a universal prior).

So more precisely, when the logarithmic phase ends, if it ever does, the posterior on the truth will be around: p(correct model)/p(treachery with the strategy of erring at a random moderately-important time rather than a time of extreme import).

If the number of queries required is linear in this (plus logarithmic in the prior), that doesn't seem very bad to me.

Which means I disagree with this:

There's a real question about p(treachery) / p(correct model), and if this ratio is bad then it seems like we're definitely screwed with this whole approach.

There is at most a linear cost to this ratio, which I don't think screws us.

Suppose that I want to bound the probability of catastrophe as times the demonstrator probability of catastrophe.

I think this is doable with this approach, but I haven't proven it can be done, let alone said anything about a dependence on epsilon. The closest bound I show not only has a constant factor of like 40; it depends on the prior on the truth too. I think (75% confidence) this is a weakness of the proof technique, not a weakness of the algorithm.

It seems like the number of human queries must scale at least like .

I doubt it's any better than that, but I haven't (just now) managed to convince myself that it couldn't be.

in the context of HCH we may need to push epsilon down to 1/N. But maybe there's some way to avoid that by...

I'm a little skeptical we could get away with having epsilon > O(1/N). But I don't quite follow the proposal well enough to say that I think it's infeasible.

Replies from: paulfchristiano, paulfchristiano

↑ comment by paulfchristiano · 2021-02-23T16:21:16.619Z · LW(p) · GW(p)

I understand that the practical bound is going to be logarithmic "for a while" but it seems like the theorem about runtime doesn't help as much if that's what we are (mostly) relying on, and there's some additional analysis we need to do. That seems worth formalizing if we want to have a theorem, since that's the step that we need to be correct.

There is at most a linear cost to this ratio, which I don't think screws us.

If our models are a trillion bits, then it doesn't seem that surprising to me if it takes 100 bits extra to specify an intended model relative to an effective treacherous model, and if you have a linear dependence that would be unworkable. In some sense it's actively surprising if the very shortest intended vs treacherous models have description lengths within 0.0000001% of each other unless you have a very strong skills vs values separation. Overall I feel much more agnostic about this than you are.
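A quick calculation shows why a linear dependence would bite. It assumes, as is standard for simplicity priors but not stated in the comment, that prior weight scales as 2 to the minus description length; the variable names are only for illustration.

```python
MODEL_BITS = 10 ** 12   # "a trillion bits"
EXTRA_BITS = 100        # extra bits to specify the intended model

# Under a 2^(-length) prior, 100 extra bits means the treacherous model
# starts with 2^100 times the prior weight of the intended one.
prior_ratio = 2 ** EXTRA_BITS

# The same gap expressed as a percentage of the total description length.
relative_gap_percent = 100 * EXTRA_BITS / MODEL_BITS

print(prior_ratio)            # 1267650600228229401496703205376
print(relative_gap_percent)   # 1e-08
```

So a description-length gap of about a hundred-millionth of a percent already corresponds to a ratio of roughly 10^30, far too many queries if the cost is linear in that ratio.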

There are two reasons why I expect that to hold.

This doesn't seem like it works once you are selecting on competent treacherous models. Any competent model will err very rarely (with probability less than 1 / (feasible training time), probably much less). I don't think that (say) 99% of smart treacherous models would make this particular kind of incompetent error?

I'm not convinced this logarithmic regime ever ends,

It seems like it must end if there are any treacherous models (i.e. if there was any legitimate concern about inner alignment at all).

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-24T01:04:08.196Z · LW(p) · GW(p)

That seems worth formalizing if we want to have a theorem, since that's the step that we need to be correct.

I agree. Also, it might be very hard to formalize this in a way that's not: write out in math what I said in English, and name it Assumption 2.

I don't think that (say) 99% of smart treacherous models would make this particular kind of incompetent error?

I don't think incompetence is the only reason to try to pull off a treacherous turn at the same time that other models do. Some timesteps are just more important, so there's a trade off. And what's traded off is a public good: among treacherous models, it is a public good for the models' moments of treachery to be spread out. Spreading them out exhausts whatever data source is being called upon in moments of uncertainty. But for an individual model, it never reaps the benefit of helping to exhaust our resources. Given that treacherous models don't have an incentive to be inconvenient to us in this way, I don't think a failure to do so qualifies as incompetence. This is also my response to

It seems like [the logarithmic regime] must end if there are any treacherous models

Any competent model will err very rarely

Yeah, I've been assuming all treacherous models will err once. (Which is different from the RL case, where they can err repeatedly on off-policy predictions).

If our models are a trillion bits, then it doesn't seem that surprising to me if it takes 100 bits extra to specify an intended model relative to an effective treacherous model, and if you have a linear dependence that would be unworkable. In some sense it's actively surprising if the very shortest intended vs treacherous models have description lengths within 0.0000001% of each other unless you have a very strong skills vs values separation. Overall I feel much more agnostic about this than you are.

I can't remember exactly where we left this in our previous discussions on this topic. My point estimate for the number of extra bits to specify an intended model relative to an effective treacherous model is negative. The treacherous model has to compute the truth, and then also decide when and how to execute treachery. So the subroutine they run to compute the truth, considered as a model in its own right, seems to me like it must be simpler.

Replies from: paulfchristiano, evhub, ofer↑ comment by paulfchristiano · 2021-02-24T04:15:33.271Z · LW(p) · GW(p)

I don't think incompetence is the only reason to try to pull off a treacherous turn at the same time that other models do. Some timesteps are just more important, so there's a trade off. And what's traded off is a public good: among treacherous models, it is a public good for the models' moments of treachery to be spread out.

Trying to defect at time T is only a good idea if it's plausible that your mechanism isn't going to notice the uncertainty at time T and then query the human. So it seems to me like this argument can never drive P(successful treachery) super low, or else it would be self-defeating (for competent treacherous agents).

The treacherous model has to compute the truth, and then also decide when and how to execute treachery. So the subroutine they run to compute the truth, considered as a model in its own right, seems to me like it must be simpler.

Subroutines of functions aren't always simpler. Even without treachery this seems like it's basically guaranteed. If "the truth" is just a simulation of a human, then the truth is a subroutine of the simplest model. But instead you could have a model of the form "Run program X, treat its output as a program, then run that." Since humans are compressible that will clearly be smaller.

Now you want to say that whatever reasoning a treacherous agent does in order to compress the human, you can just promote that to the outside model as well. But that argument seems to be precisely where the meat is (and it's the kind of thing I spend most of my time on). If that works then it seems like you don't even need a further solution to inner alignment, just use the simplest model.

(Maybe there is a hope that the intended model won't be "much" more complex than the treacherous models, without literally saying that it's the simplest, but at that point I'm back to wondering whether "much" is like 0.01% or 0.00000001%.)

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-24T11:40:54.099Z · LW(p) · GW(p)

So it seems to me like this argument can never drive P(successful treachery) super low, or else it would be self-defeating (for competent treacherous agents)

I don't follow. Can't races to the bottom destroy all value for the agents involved? Consider the claim: firms will never set prices to equal marginal cost because that would destroy all profit.

Now you want to say that whatever reasoning a treacherous agent does in order to compress the human, you can just promote that to the outside model as well. But that argument seems to be precisely where the meat is (and it's the kind of thing I spend most of my time on). If that works then it seems like you don't even need a further solution to inner alignment, just use the simplest model.

Yeah, okay. This is my mainline opinion, and I just think about inner alignment in case it's wrong. So for now we can assume that it is wrong, since there's not much more to say if it's right.

Maybe there is a hope that the intended model won't be "much" more complex than the treacherous models, without literally saying that it's the simplest, but at that point I'm back to wondering whether "much" is like 0.01% or 0.00000001%

That is my next guess, but I hadn't thought in terms of percentages before this. I had thought of normally distributed noise in the number of bits.

It's occurring to me that this question doesn't matter to our present discussion. What makes the linear regime linear rather than logarithmic is that if p(treachery)/p(honest model) is high, that allows for a large number of treacherous models to have greater posterior weight than the truth. But if a single model has n times the posterior weight of the truth, it still only takes one query to the demonstrator to interrupt its treacherous turn, not n queries.

Replies from: paulfchristiano↑ comment by paulfchristiano · 2021-02-24T16:04:07.815Z · LW(p) · GW(p)

I don't follow. Can't races to the bottom destroy all value for the agents involved?

You are saying that a special moment is a particularly great one to be treacherous. But if P(discovery) is 99.99% during that period, and there is any other treachery-possible period where P(discovery) is small, then that other period would have been better after all. Right?

This doesn't seem analogous to producers driving down profits to zero, because those firms had no other opportunity to make a profit with their machine. It's like you saying: there are tons of countries where firms could use their machines to make stuff and sell it at a profit (more countries than firms). But some of the places are more attractive than others, so probably everyone will sell in those places and drive profits to zero. And I'm saying: but then aren't those incredibly-congested countries actually worse places to sell? This scenario is only possible if firms are making so much stuff that they can drive profit down to zero in every country, since any country with remaining profit would necessarily be the best place to sell.

Yeah, okay. This is my mainline opinion, and I just think about inner alignment in case it's wrong

It seems to me like this is clearly wrong in the limit (since simple consequentialists would take over simple physics [LW · GW]). It also seems probably wrong to me for smaller models (i.e. without huge amounts of internal selection) but it would be good for someone to think about that case more seriously.

It's occurring to me that this question doesn't matter to our present discussion. What makes the linear regime linear rather than logarithmic is that if p(treachery)/p(honest model) is high, that allows for a large number of treacherous models to have greater posterior weight than the truth. But if a single model has n times the posterior weight of the truth, it still only takes one query to the demonstrator to interrupt its treacherous turn, not n queries.

If the treacherous model is 100 bits smaller, then it feels like there must be around 2^100 treacherous models that are all simpler than the intended one. If nothing else, it seems like you could literally add garbage bits to the treacherous models (or useful bits!).
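
As a rough check on the "around 2^100" figure, here is a back-of-the-envelope count. It assumes (this is an illustrative assumption, not something established in the thread) that appending k ignored garbage bits to a treacherous program of length ℓ − 100 yields 2^k distinct programs of length ℓ − 100 + k, where ℓ is the length of the intended model. Counting every padding level that stays strictly shorter than ℓ:

```latex
\[
\#\{\text{padded treacherous programs shorter than } \ell\}
  \;=\; \sum_{k=0}^{99} 2^{k} \;=\; 2^{100} - 1 .
\]
```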

Replies from: cocoa, cocoa↑ comment by michaelcohen (cocoa) · 2021-02-24T22:19:02.317Z · LW(p) · GW(p)

If nothing else, it seems like you could literally add garbage bits to the treacherous models

Okay, sure.

It seems to me like this is clearly wrong in the limit (since simple consequentialists would take over simple physics).

It's not clear to me that there isn't meaningful overhead involved.

You are saying that a special moment is a particularly great one to be treacherous. But if P(discovery) is 99.99% during that period, and there is any other treachery-possible period where P(discovery) is small, then that other period would have been better after all. Right?

I agree with what you're saying, but I don't see how it contradicts what I was saying. First, what I had in mind when saying that some timesteps are better for treachery was that when the agent acts on a false prediction at those timesteps, it has a greater effect on the world, though of course P(discovery) is also relevant. But my point is that when multiple treacherous models pick the same timestep to err, there may be pros and cons to doing this, but one thing that isn't on the cons list is that, in the long run, it makes our lives easier if they do. Making our lives difficult is a public good for treacherous models.

So treacherous models won't be trying to avoid collisions in order to make queries be linear in p(treachery)/p(truth). If P(discovery) increases when multiple models are trying to be treacherous at the same time--which we could go on to discuss; it's not obvious to me either way as of yet--that will be balanced against the inherent variation in some timesteps being better for treachery than others.

Replies from: paulfchristiano↑ comment by paulfchristiano · 2021-02-25T17:20:37.891Z · LW(p) · GW(p)

So treacherous models won't be trying to avoid collisions in order to make queries be linear in p(treachery)/p(truth). If P(discovery) increases when multiple models are trying to be treacherous at the same time--which we could go on to discuss; it's not obvious to me either way as of yet--that will be balanced against the inherent variation in some timesteps being better for treachery than others.

If you are willing to query only a billion points, and there are a billion competent treacherous models, and if the game is zero-sum between you and the models, then you're going to get at least as screwed as by the billionth-best opportunity for treachery. Otherwise, one of the treacherous models made a mistake: apparently there was a top-billion treachery opportunities where you didn't query, so they should have been able to get at least that much value (and you should have lost at least that much value).

That's not quite right since your querying behavior will be stochastic (at least from the model's epistemic position). But you get the same conclusion to within a constant factor: if there are 2 billion treacherous models, then out of the top 2 billion opportunities for treachery, there must be some where our subjective probability of querying is at most 1/2, and if the models are competent they will get at least as much value as they could by defecting at one of those times, i.e. at least 1/2 of the harms from the 2-billionth-best opportunity for treachery. So we will lose at least that much value.

(Those arguments aren't totally formal; we didn't even really discharge the "at least N treacherous models" assumption, which you need to do in order to show that your decision about when to query isn't so influenced by any given model.)
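
The factor-of-1/2 step can be written out as a short averaging argument. This is only a sketch under assumptions that are not in the comment itself: B stands for the query budget (a billion), v_1 ≥ v_2 ≥ … ≥ v_{2B} are the harms available at the top 2B opportunities for treachery, q_i is the subjective probability of a query at the i-th opportunity, and the budget is read as a bound on the expected number of queries over those opportunities.

```latex
\begin{align*}
&\sum_{i=1}^{2B} q_i \le B
  \;\Longrightarrow\;
  \min_{1 \le i \le 2B} q_i \;\le\; \frac{1}{2B}\sum_{i=1}^{2B} q_i \;\le\; \frac{1}{2} \\
&\text{Defecting at } i^* = \arg\min_i q_i \text{ yields expected harm }
  (1 - q_{i^*})\,v_{i^*} \;\ge\; \tfrac{1}{2}\,v_{i^*} \;\ge\; \tfrac{1}{2}\,v_{2B} .
\end{align*}
```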

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-26T11:30:09.655Z · LW(p) · GW(p)

If you are willing to query only a billion points, and there are a billion competent treacherous models, and if the game is zero-sum between you and the models, then you're going to get at least as screwed as by the billionth-best opportunity for treachery.

The treacherous models make their decisions first, and whether we query at a timestep depends on whether they chose that moment to be treacherous. We don't have to say: this timestep is one of the billion best for treachery--guess we had better query. The decision process a treacherous model faces is:

1. From past observations, does it seem alpha is low enough that an honest model is in the top few?

2. If no, [let's just assume whatever happens here is bad]

3. If yes, is the honest model going to leave the top few any time?

4. If yes, wait. [let's assume this ends badly]

5. If no, admit defeat, because whenever you err, that will be when a query gets triggered. Salvage the situation by trying to get as much mileage as you can by decreasing probability on some action the demonstrator would take that you don't like much in order to shift probability mass to some other action they would take that you like a little better. OR

6. Hope that 1) other treacherous models, like soldiers on the front line storming the enemy and facing certain death so that their brothers behind them can win the battle, give erring a go anyway. And hope that 2) the operators quietly increase alpha after the billionth query. Acting on this hope, wait. (The front-line treacherous models are not entirely self-sacrificial; they might figure they might as well hope they were wrong about alpha. But the models know when queries happen, because every observation they get comes from a query, and it wouldn't take long to figure out that alpha really truly isn't high enough for them to execute treachery successfully).

Above in the thread, I was talking about the game theory of step 6 (although I maybe wasn't making the case as strongly as I could have). Which models would ever volunteer? They don't care about the long term goal of exhausting our query resources so other treacherous models can capitalize on a quietly increased alpha. All that is to say, I don't follow the comment I excerpted.

(In case this comes up later: I think about the long-run percentage of query-timesteps that we would tolerate, not the total number. So if we ever have cause to consider a case where attempted treachery "merely gets delayed" but still eventually happens, and still results in a query, then depending on how much it is delayed, that could still qualify as a win).

↑ comment by michaelcohen (cocoa) · 2021-02-24T22:38:56.937Z · LW(p) · GW(p)

This is a bit of a sidebar:

I'm curious what you make of the following argument.

- When an infinite sequence is sampled from a true model , there is likely to be another treacherous model which is likely to end up with greater posterior weight than an honest model , and greater still than the posterior on the true model .

- If the sequence were sampled from instead, the eventual posterior weight on will probably be at least as high.

- When an infinite sequence is sampled from a true model , there is likely to be another treacherous model , which is likely to end up with greater posterior weight than an honest model , and greater still than the posterior on the true model .

- And so on.

↑ comment by paulfchristiano · 2021-02-25T17:10:52.832Z · LW(p) · GW(p)

The first treacherous model works by replacing the bad simplicity prior with a better prior, and then using the better prior to more quickly infer the true model. No reason for the same thing to happen a second time.

(Well, I guess the argument works if you push out to longer and longer sequence lengths---a treacherous model will beat the true model on sequence lengths a billion, and then for sequence lengths a trillion a different treacherous model will win, and for sequence lengths a quadrillion a still different treacherous model will win. Before even thinking about the fact that each particular treacherous model will in fact defect at some point and at that point drop out of the posterior.)

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-26T10:44:04.437Z · LW(p) · GW(p)

Does it make sense to talk about , which is like in being treacherous, but uses the true model instead of the honest model ? I guess you would expect to have a lower posterior than ?

↑ comment by evhub · 2021-02-24T06:26:31.452Z · LW(p) · GW(p)

I don't think incompetence is the only reason to try to pull off a treacherous turn at the same time that other models do. Some timesteps are just more important, so there's a trade off. And what's traded off is a public good: among treacherous models, it is a public good for the models' moments of treachery to be spread out. Spreading them out exhausts whatever data source is being called upon in moments of uncertainty. But for an individual model, it never reaps the benefit of helping to exhaust our resources. Given that treacherous models don't have an incentive to be inconvenient to us in this way, I don't think a failure to do so qualifies as incompetence.

It seems to me like this argument requires that the deceptive models not be able to coordinate with each other very effectively, which seems unlikely to me. Why shouldn't we expect them to be able to solve these sorts of coordination problems?

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-24T11:52:28.344Z · LW(p) · GW(p)

They're one shot. They're not interacting causally. When any pair of models cooperates, a third model defecting benefits just as much from their cooperation with each other. And there will be defectors--we can write them down. So cooperating models would have to cooperate with defectors and cooperators indiscriminately, which I think is just a bad move according to any decision theory. All the LDT stuff I've seen on the topic is how to make one's own cooperation/defection depend logically on the cooperation/defection of others.

Replies from: evhub↑ comment by evhub · 2021-02-24T20:01:14.535Z · LW(p) · GW(p)

Well, just like we can write down the defectors, we can also write down the cooperators—these sorts of models do exist, regardless of whether they're implementing a decision theory we would call reasonable. And in this situation, the cooperators should eventually outcompete the defectors, until the cooperators all defect—which, if the true model has a low enough prior, they could do only once they've pushed the true model out of the top .

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-24T22:54:07.947Z · LW(p) · GW(p)

Well, just like we can write down the defectors, we can also write down the cooperators

If it's only the case that we can write them down, but they're not likely to arise naturally as simple consequentialists taking over simple physics [LW · GW], then that extra description length will be seriously costly to them, and we won't need to worry about any role they might play in p(treacherous)/p(truth). Meanwhile, when I was saying we could write down some defectors, I wasn't making a simultaneous claim about their relative prior weight, only that their existence would spoil cooperation.

And in this situation, the cooperators should eventually outcompete the defector

For cooperators to outcompete defectors, they would have to be getting a larger share of the gains from cooperation than defectors do. If some people are waiting for the fruit on public trees to ripen before eating, and some people aren't, the defectors will be the ones eating the almost ripe fruit.

if the true model has a low enough prior, [treacherous models cooperating with each other in separating their treacherous turns] could [be treacherous] only once they've pushed the true model out of the top

I might be misunderstanding this statement. The inverse of the posterior on the truth is a supermartingale (doesn't go up in expectation), so I don't know what it could mean for the true model to get pushed out.
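
For readers who want the supermartingale claim spelled out, here is a short derivation in simplified sequence-prediction notation. The symbols are assumptions introduced for illustration rather than the paper's notation: h is the history so far, μ is the true model, w(ν) is the prior weight of model ν, and ξ is the Bayes mixture.

```latex
\begin{align*}
w(\mu \mid h) &= \frac{w(\mu)\,\mu(h)}{\xi(h)},
  \qquad \xi(h) = \sum_{\nu} w(\nu)\,\nu(h) \\
\mathbb{E}_{x \sim \mu(\cdot \mid h)}\!\left[\frac{1}{w(\mu \mid hx)}\right]
  &= \sum_{x:\,\mu(x \mid h) > 0} \mu(x \mid h)\,
     \frac{\xi(hx)}{w(\mu)\,\mu(h)\,\mu(x \mid h)} \\
  &= \frac{1}{w(\mu)\,\mu(h)} \sum_{x:\,\mu(x \mid h) > 0} \xi(hx) \\
  &\le \frac{\xi(h)}{w(\mu)\,\mu(h)} \;=\; \frac{1}{w(\mu \mid h)} .
\end{align*}
```

The inequality uses only that the sum of ξ(hx) over next symbols x is at most ξ(h), so when data really is sampled from μ, the inverse posterior on μ cannot increase in expectation.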

Replies from: evhub↑ comment by evhub · 2021-02-24T23:08:59.859Z · LW(p) · GW(p)

Here's the setup I'm imagining, but perhaps I'm still misunderstanding something. Suppose you have a bunch of deceptive models that choose to cooperate and have larger weight in the prior than the true model (I think you believe this is very unlikely, though I'm more ambivalent). Specifically, they cooperate in that they perfectly mimic the true model up until the point where the deceptive models make up enough of the posterior that the true model is no longer being consulted, at which point they all simultaneously defect. This allows for arbitrarily bad worst-case behavior.

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-24T23:50:55.732Z · LW(p) · GW(p)

Specifically, they cooperate in that they perfectly mimic the true model up until the point where...

This thread began by considering deceptive models cooperating with each other in the sense of separating the timing of their treacherous turns in order to be maximally annoying to us. So maybe our discussion on that topic is resolved, and we can move on to this scenario.

up until the point where the deceptive models make up enough of the posterior that the true model is no longer being consulted

If alpha is low enough, this won't ever happen, and if alpha is too high, it won't take very long. So I don't think this scenario is quite right.

Then the question becomes, for an alpha that's low enough, how long will it take until queries are infrequent, noting that you need a query any time any treacherous model with enough weight decides to take a treacherous turn?

↑ comment by Ofer (ofer) · 2021-02-26T14:39:44.898Z · LW(p) · GW(p)

To extend Evan's comment [LW(p) · GW(p)] about coordination between deceptive models: Even if the deceptive models lack relevant game theoretical mechanisms, they may still coordinate due to being (partially) aligned with each other. For example, a deceptive model X may prefer [some other random deceptive model seizing control] over [model X seizing control with probability 0.1% and the entire experiment being terminated with probability 99.9%].

Why should we assume that the deceptive models will be sufficiently misaligned with each other such that this will not be an issue? Do you have intuitions about the degree of misalignment between huge neural networks that were trained to imitate demonstrators but ended up being consequentialists that care about the state of our universe?

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-26T15:38:10.415Z · LW(p) · GW(p)

That's possible. But it seems like way less of a convergent instrumental goal for agents living in simulated world-models. Both options--our world optimized by us and our world optimized by a random deceptive model--probably contain very little of value as judged by agents in another random deceptive model.

So yeah, I would say some models would think like this, but I would expect the total weight on models that do to be much lower.

↑ comment by paulfchristiano · 2021-02-23T16:32:21.391Z · LW(p) · GW(p)

I think this is doable with this approach, but I haven't proven it can be done, let alone said anything about a dependence on epsilon. The closest bound I show not only has a constant factor of like 40; it depends on the prior on the truth too. I think (75% confidence) this is a weakness of the proof technique, not a weakness of the algorithm.

I just meant the dependence on epsilon; it seems like there are unavoidable additional factors (especially the linear dependence on p(treachery)). I guess it's not obvious if you can make these additive or if they are inherently multiplicative.

But your bound scales in some way, right? How much training data do I need to get the KL divergence between distributions over trajectories down to epsilon?

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-24T00:26:17.761Z · LW(p) · GW(p)

No matter how much data you have, my bound on the KL divergence won't approach zero.

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-02-25T19:52:33.297Z · LW(p) · GW(p)

Re 1: This is a good point. Some thoughts:

[EDIT: See this [LW(p) · GW(p)]]

- We can add the assumption that the probability mass of malign hypotheses is small and that following any non-malign hypothesis at any given time is safe. Then we can probably get query complexity that scales with p(malign) / p(true)?

- However, this is cheating because a sequence of actions can be dangerous even if each individual action came from a (different) harmless hypothesis. So, instead we want to assume something like: any dangerous strategy has high Kolmogorov complexity relative to the demonstrator. (More realistically, some kind of bounded Kolmogorov complexity.)

- A related problem is: in reality "user takes action " and "AI takes action " are not physically identical events, and there might be an attack vector that exploits this.

- If p(malign)/p(true) is really high that's a bigger problem, but then doesn't it mean we actually live in a malign simulation and everything is hopeless anyway?

Re 2: Why do you need such a strong bound?

comment by Rohin Shah (rohinmshah) · 2021-02-19T09:03:39.187Z · LW(p) · GW(p)

Planned summary:

Since we probably can’t specify a reward function by hand, one way to get an agent that does what we want is to have it imitate a human. As long as it does this faithfully, it is as safe as the human it is imitating. However, in a train-test paradigm, the resulting agent may faithfully imitate the human on the training distribution but fail catastrophically on the test distribution. (For example, a deceptive model might imitate faithfully until it has sufficient power to take over.) One solution is to never stop training, that is, use an online learning setup where the agent is constantly learning from the demonstrator.

There are a few details to iron out. The agent needs to reduce the frequency with which it queries the demonstrator (otherwise we might as well just have the demonstrator do the work). Crucially, we need to ensure that the agent will never do something that the demonstrator wouldn’t have done, because such an action could be arbitrarily bad.

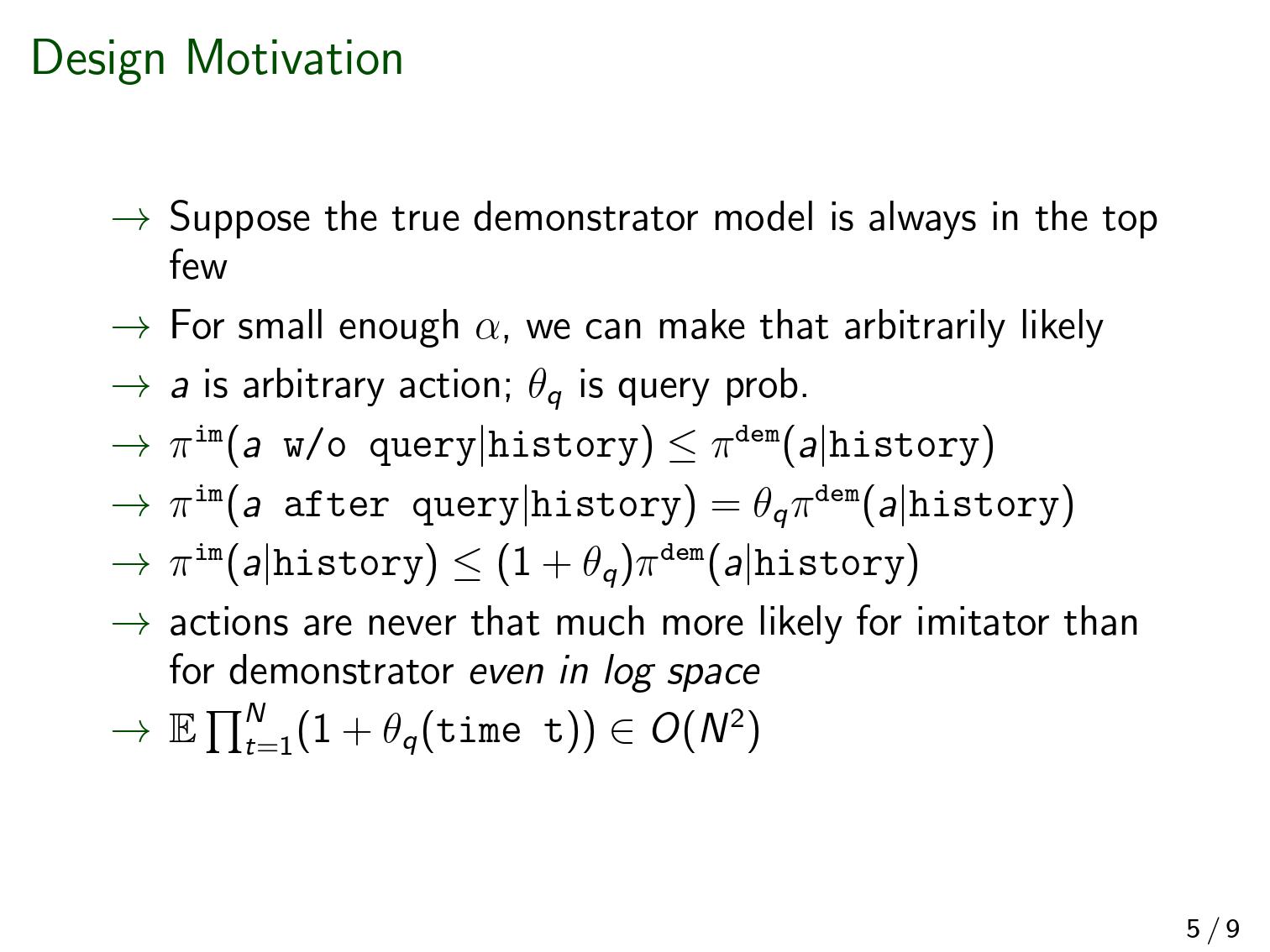

This paper proposes a solution in the paradigm where we use Bayesian updating rather than gradient descent to select our model, that is, we have a prior over possible models and then when we see a demonstrator action we update our distribution appropriately. In this case, at every timestep we take the N most probable models, and only take an action a with probability p if **every** one of the N models takes that action with at least probability p. The total probability of all the actions will typically be less than 1 -- the remaining probability is assigned to querying the demonstrator.

The key property here is that as long as the true demonstrator is in the top N models, then the agent never autonomously takes an action with more probability than the demonstrator would. Therefore as long as we believe the demonstrator is safe then the agent should be as well. Since the agent learns more about the demonstrator every time it queries them, over time it needs to query the demonstrator less often. Note that the higher N is, the more likely it is that the true model is one of those N models (and thus we have more safety), but also the more likely it is that we will have to query the demonstrator. This tradeoff is controlled by a hyperparameter α that implicitly determines N.
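
The rule described above can be sketched in a few lines of Python. This is a minimal illustration of the summary's description, not the paper's implementation: the names `posterior`, `model_policies`, and `n_top` are hypothetical, the models are assumed to be given as explicit action distributions, and N is passed in directly rather than derived from α.

```python
from typing import Dict, List, Tuple

Policy = Dict[str, float]  # action -> probability under one model (hypothetical type)


def conservative_action_probs(
    posterior: List[float],
    model_policies: List[Policy],
    n_top: int,
) -> Tuple[Policy, float]:
    """Return (imitator action probabilities, probability of querying)."""
    # Indices of the n_top models with the largest posterior weight.
    ranked = sorted(range(len(posterior)), key=lambda i: posterior[i], reverse=True)
    top = ranked[:n_top]

    # Every action that any of the top models considers.
    actions = set().union(*(model_policies[i].keys() for i in top))

    # Each action gets the smallest probability that any top model assigns to it.
    probs = {
        a: min(model_policies[i].get(a, 0.0) for i in top)
        for a in sorted(actions)
    }

    # The leftover probability mass is spent on asking the demonstrator.
    query_prob = 1.0 - sum(probs.values())
    return probs, query_prob


if __name__ == "__main__":
    posterior = [0.5, 0.3, 0.2]
    policies = [
        {"left": 0.9, "right": 0.1},
        {"left": 0.6, "right": 0.4},
        {"left": 0.1, "right": 0.9},
    ]
    print(conservative_action_probs(posterior, policies, n_top=2))
```

Because each action's probability is a minimum over the top models, any model in that set (including the true demonstrator, if it is there) assigns at least as much probability to every action as the imitator does. Raising `n_top` can only lower these minima, which is the safety versus query-frequency tradeoff described above.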

Opinion:

One of the most important approaches to improve inner alignment is to monitor the performance of your system online, and train to correct any problems. This paper shows the benefit of explicitly quantified, well-specified uncertainty: it allows you to detect problems _before they happen_ and then correct for them.

This setting has also been studied in <@delegative RL@>(@Delegative Reinforcement Learning@), though there the agent also has access to a reward signal in addition to a demonstrator.

Replies from: cocoa↑ comment by michaelcohen (cocoa) · 2021-02-19T11:38:09.746Z · LW(p) · GW(p)

In this case, at every timestep we take the N most probable models, and only take an action a with probability p if **every** one of the N models takes that action with at least probability p.

This is so much clearer than I've ever put it.

(There’s a specific rule that ensures that N decreases over time.)

N won't necessarily decrease over time, but all of the models will eventually agree with each other.

monitor the performance of your system online, and train to correct any problems

I would have described Vanessa's and my approaches as more about monitoring uncertainty, and avoiding problems before the fact rather than correcting them afterward. But I think what you said stands too.

Replies from: rohinmshah↑ comment by Rohin Shah (rohinmshah) · 2021-02-19T17:59:39.508Z · LW(p) · GW(p)

N won't necessarily decrease over time, but all of the models will eventually agree with each other.

Ah, right. I rewrote that paragraph, getting rid of that sentence and instead talking about the tradeoff directly.

I would have described Vanessa's and my approaches as more about monitoring uncertainty, and avoiding problems before the fact rather than correcting them afterward. But I think what you said stands too.

Added a sentence to the opinion noting the benefits of explicitly quantified uncertainty.

comment by evhub · 2021-02-18T21:20:11.241Z · LW(p) · GW(p)

Edit: I no longer endorse this comment; see this comment [AF(p) · GW(p)] instead.

I think you've just assumed the entire inner alignment problem away. You assume that the model (the imitation learner) is a perfect Bayesian, rather than just some trained neural network or other function approximator (edit: I was just misinterpreting here and the training process is supposed to be Bayesian rather than the model, see Rohin's comment below). The entire point of the inner alignment problem, though, is that you can't guarantee that your trained model is actually a perfect Bayesian, or anything else. In practice, all we actually generally do when we do ML is we train some neural network on some training data/environment and hope that the implicit inductive biases of our training process are such that we end up with a model doing the right thing, meaning that we can't really control what sort of model (Bayesian or anything else) we get when we do ML, and that uncontrollability, in my eyes, is the inner alignment problem.

Replies from: rohinmshah, vanessa-kosoy↑ comment by Rohin Shah (rohinmshah) · 2021-02-19T01:51:44.871Z · LW(p) · GW(p)

While I share your position that this mostly isn't addressing the things that make inner alignment hard / risky in practice, I agree with Vanessa that this does not assume the inner alignment problem away, unless you have a particularly contorted definition of "inner alignment".

There's an optimization procedure (Bayesian updating) that is selecting models (the model of the demonstrator) that can themselves be optimizers, and you could get the wrong one (e.g. the model that simulates an alien civilization that realizes it's in a simulation and predicts well to be selected by the Bayesian updating but eventually executes a treacherous turn). The algorithm presented precludes this from happening with some probability. We can debate the significance, but it seems to me like it is clearly doing something solution-like with respect to the inner alignment problem.

Replies from: TurnTrout, evhub↑ comment by TurnTrout · 2021-02-19T02:40:47.190Z · LW(p) · GW(p)

> civilization that realizes it's in a civilization

"in a simulation", no?

Replies from: rohinmshah↑ comment by Rohin Shah (rohinmshah) · 2021-02-19T04:32:49.050Z · LW(p) · GW(p)

Lol yes fixed

↑ comment by evhub · 2021-02-19T05:15:13.939Z · LW(p) · GW(p)

Hmmm... I think I just misunderstood the setup then. It seemed to me like the Bayesian updating was supposed to represent the model rather than the training process, but under the framing that you just said, I agree that it's relevant. It's worth noting that I do think most of the danger lies in the ways in which gradient descent is not a perfect Bayesian, but I agree that modeling gradient descent as Bayesian is certainly relevant.

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-02-18T23:53:55.000Z · LW(p) · GW(p)

I think this is completely unfair. The inner alignment problem exists even for perfect Bayesians, and solving it in that setting contributes much to our understanding. The fact that we don't have satisfactory mathematical models of deep learning performance is a different problem, which is broader than inner alignment and, to a first approximation, orthogonal to it. Ideally, we will solve this second problem by improving our mathematical understanding of deep learning and/or other competitive ML algorithms. The latter effort is already underway [LW(p) · GW(p)] by researchers unrelated to AI safety, with some results. Moreover, we can in principle come up with heuristics for how to apply this method of solving inner alignment (which I call "confidence thresholds" in my own work) to deep learning: e.g. use NNGP to measure confidence, or use an evolutionary algorithm with a population of networks and check how well they agree with each other. Of course, if we do this we won't have formal guarantees that it will work, but, like I said, this is a broader issue than inner alignment.
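As a rough illustration of the "population of networks" heuristic, the sketch below treats each ensemble member's output as a probability vector and asks for help when any two members disagree by more than a threshold. The function names, the use of total variation distance, and the threshold value are all assumptions made for this example; the comment does not specify how agreement should be measured, and nothing here carries a formal guarantee.

```python
from itertools import combinations


def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))


def should_query(predictions, threshold=0.1):
    """Return True if the ensemble disagrees enough to warrant asking for help.

    predictions: list of probability vectors, one per ensemble member.
    threshold: hypothetical tolerance on the worst pairwise disagreement.
    """
    worst = max(
        (total_variation(p, q) for p, q in combinations(predictions, 2)),
        default=0.0,
    )
    return worst > threshold


# Members that nearly agree, versus members that sharply disagree.
agreeing = [[0.90, 0.10], [0.88, 0.12], [0.91, 0.09]]
disagreeing = [[0.90, 0.10], [0.20, 0.80]]
```

With these inputs, `should_query(agreeing)` stays below the threshold and `should_query(disagreeing)` exceeds it. Whether trained networks disagree often enough for this to be useful is a separate empirical question.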

Replies from: evhub↑ comment by evhub · 2021-02-19T00:10:29.211Z · LW(p) · GW(p)

Regardless of how you define inner alignment, I think that the vast majority of the existential risk comes from that “broader issue” that you're pointing to of not being able to get worst-case guarantees due to using deep learning or evolutionary search or whatever. That leads me to want to define inner alignment to be about that problem—and I think that is basically the definition we give in Risks from Learned Optimization [? · GW], where we introduced the term. That being said, I do completely agree that getting a better understanding of deep learning is likely to be critical.

Replies from: johnswentworth, cocoa↑ comment by johnswentworth · 2021-02-19T01:22:45.021Z · LW(p) · GW(p)

> I think that the vast majority of the existential risk comes from that “broader issue” that you're pointing to of not being able to get worst-case guarantees due to using deep learning or evolutionary search or whatever. That leads me to want to define inner alignment to be about that problem...

[Emphasis added.] I think this is a common and serious mistake-pattern, and in particular is one of the more common underlying causes of framing errors. The pattern is roughly:

- Notice cluster of problems X which have a similar underlying causal pattern Cause(X)

- Notice problem y in which Cause(X) could plausibly play a role

- On deeper examination, the cause of y, cause(y), doesn't quite fit Cause(X)

- Attempt to redefine the pattern Cause(X) to include cause(y)

The problem is that, in trying to "shoehorn" cause(y) into the category Cause(X), we miss the opportunity to notice a different pattern, which is more directly useful in understanding y as well as some other cluster of problems related to y.

A concrete example: this is the same mistake I accused Zvi of making when trying to cast moral mazes as a problem of super-perfect competition [LW(p) · GW(p)]. The conditions needed for super-perfect competition to explain moral mazes did not hold, and by trying to shoehorn the problem into that mold Zvi was missing an orthogonal phenomenon which is extremely interesting in its own right: thinking about that exact problem was what led to Demons in Imperfect Search [LW · GW].

Now, this is not to say that changing a definition to fit another case is always the wrong move. Sometimes, a new use-case shows that the definition can handle the new case while still preserving its original essence. The key question is whether the problem cluster X and problem y really do have the same underlying structure, or if there's something genuinely new and different going on in y.

In this case, I think it's pretty clear that there is more than just inner alignment problems going on in the lack of worst-case guarantees for deep learning/evolutionary search/etc. Generalization failure is not just about, or even primarily about, inner agents. It occurs even in the absence of mesa-optimizers. So defining inner alignment to be about that problem looks to me like a mistake - you're likely to miss important, conceptually-distinct phenomena by making that move. (We could also come at it from the converse direction: if something clearly recognizable as an inner alignment problem occurs for ideal Bayesians, then redefining the inner alignment problem to be "we can't control what sort of model we get when we do ML" is probably a mistake, and you're likely to miss interesting phenomena that way which don't conceptually resemble inner alignment.)

A useful knee-jerk reaction here is to notice when cause(y) doesn't quite fit the pattern Cause(X), and use that as a curiosity-pump to look for other cases which resemble y. That's the sort of instinct which will tend to turn up insights we didn't know we were missing.

Replies from: evhub↑ comment by evhub · 2021-02-19T05:21:28.009Z · LW(p) · GW(p)

I mean, I don't think I'm “redefining” inner alignment, given that I don't think I've ever really changed my definition and I was the one that originally came up with the term (inner alignment was due to me, mesa-optimization was due to Chris van Merwijk). I also certainly agree that there are “more than just inner alignment problems going on in the lack of worst-case guarantees for deep learning/evolutionary search/etc.”—I think that's exactly the point that I'm making, which is that while there are other issues, inner alignment is what I'm most concerned about. That being said, I also think I was just misunderstanding the setup in the paper—see Rohin's comment on this chain.

↑ comment by michaelcohen (cocoa) · 2021-02-19T12:01:12.952Z · LW(p) · GW(p)

If the inner alignment problem did not exist for perfect Bayesians, but did exist for neural networks, then it would appear to be a regime where more intelligence makes the problem go away. If the inner alignment problem were ~solved for perfect Bayesians, but unsolved for neural networks, I think there's still some of the flavor of that regime, but we do have to be pretty careful to make sure we're applying the same sort of solution to the non-Bayesian algorithms. I think in Vanessa's comment above, she's suggesting this looks doable.

Note the method here of avoiding mesa-optimizers: error bounds. Neural networks don't have those. Naturally, one way to make mesa-optimizer-deceptively-selected-errors go away is just to have better learning algorithms that make errors go away. Algorithms like Gated Linear Networks with proper error bounds may be a safer building block for AGI. But none of this takes away from the fact that it is potentially important to figure out how to avoid mesa-optimization in neural networks, and I would add to your claim that this is a much harder setting; I would say it's a harder setting because of the non-existence of error bounds.

Replies from: evhub↑ comment by evhub · 2021-02-23T22:55:57.365Z · LW(p) · GW(p)

I think I mostly agree with what you're saying here, though I have a couple of comments—and now that I've read and understand your paper more thoroughly, I'm definitely a lot more excited about this research.

> then it would appear to be a regime where more intelligence makes the problem go away.

I don't think that's right—if you're modeling the training process as Bayesian, as I now understand, then the issue is that what makes the problem go away isn't more intelligent models, but less efficient training processes. Even if we have arbitrary compute, I think we're unlikely to use training processes that look Bayesian just because true Bayesianism is really hard to compute such that for basically any amount of computation that you have available to you, you'd rather run some more efficient algorithm like SGD.

I worry about these sorts of Bayesian analyses where we're assuming that we're training a large population of models, one of which is assumed to be an accurate model of the world, since I expect us to end up using some sort of local search instead of a global search like that—just because I think that local search is way more efficient than any sort of Bayesianish global search.

> Note the method here of avoiding mesa-optimizers: error bounds. Neural networks don't have those. Naturally, one way to make mesa-optimizer-deceptively-selected-errors go away is just to have better learning algorithms that make errors go away. Algorithms like Gated Linear Networks with proper error bounds may be a safer building block for AGI. But none of this takes away from the fact that it is potentially important to figure out how to avoid mesa-optimization in neural networks, and I would add to your claim that this is a much harder setting; I would say it's a harder setting because of the non-existence of error bounds.

I definitely agree with all of this, except perhaps on whether Gated Linear Networks are likely to help (though I don't claim to actually understand backpropagation-free networks all that well).

Replies from: vanessa-kosoy, cocoa↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-02-25T20:01:12.939Z · LW(p) · GW(p)

> I don't think that's right—if you're modeling the training process as Bayesian, as I now understand, then the issue is that what makes the problem go away isn't more intelligent models, but less efficient training processes. Even if we have arbitrary compute, I think we're unlikely to use training processes that look Bayesian just because true Bayesianism is really hard to compute such that for basically any amount of computation that you have available to you, you'd rather run some more efficient algorithm like SGD.

I feel this is the wrong way to look at it. I expect any effective learning algorithm to be an approximation of Bayesianism in the sense that it satisfies some good sample complexity bound w.r.t. some sufficiently rich prior. Of course, it's non-trivial to (i) prove such a bound for a given algorithm and (ii) modify said algorithm using confidence thresholds in a way that leads to a safety guarantee. However, there is no sharp dichotomy between "Bayesianism" and "SGD" such that this approach obviously doesn't apply to the latter, or to something competitive with the latter.

Replies from: evhub↑ comment by evhub · 2021-02-25T21:27:14.996Z · LW(p) · GW(p)

I agree that at some level SGD has to be doing something approximately Bayesian. But that doesn't necessarily imply that you'll be able to get any nice, Bayesian-like properties from it, such as error bounds. For example, if you think of SGD as effectively just taking the MAP model starting from some sort of simplicity prior, it seems very difficult to turn that into something like the top posterior models, as would be required for an algorithm like this.

Replies from: vanessa-kosoy, vanessa-kosoy↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-02-26T12:19:10.342Z · LW(p) · GW(p)

I mean, there's obviously a lot more work to do, but this is progress. Specifically if SGD is MAP then it seems plausible that e.g. SGD + random initial conditions or simulated annealing would give you something like top N posterior models. You can also extract confidence from NNGP.

Replies from: evhub↑ comment by evhub · 2021-02-26T20:29:53.077Z · LW(p) · GW(p)

I agree that this is progress (now that I understand it better), though:

> if SGD is MAP then it seems plausible that e.g. SGD + random initial conditions or simulated annealing would give you something like top N posterior models

I think there is strong evidence that the behaviors of models trained via the same basic training process are likely to be highly correlated. This sort of correlation is related to low variance in the bias-variance tradeoff sense, and there is evidence that not only do massive neural networks tend to have pretty low variance, but also that this variance is likely to continue to decrease as networks become larger.

Replies from: vanessa-kosoy↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-02-26T20:54:34.530Z · LW(p) · GW(p)

Hmm, added to reading list, thank you.

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-02-27T21:24:27.239Z · LW(p) · GW(p)

Here's another way how you can try implementing this approach with deep learning. Train the predictor using meta-learning on synthetically generated environments (sampled from some reasonable prior such as bounded Solomonoff or maybe ANNs with random weights). The reward for making a prediction is , where is the predicted probability of outcome , is the true probability of outcome and are parameters. The reward for making no prediction (i.e. querying the user) is .

This particular proposal is probably not quite right, but something in that general direction might work.

Replies from: evhub↑ comment by evhub · 2021-02-27T22:56:17.680Z · LW(p) · GW(p)

Sure, but you have no guarantee that the model you learn is actually going to be optimizing anything like that reward function—that's the whole point of the inner alignment problem. What's nice about the approach in the original paper is that it keeps a bunch of different models around, keeps track of their posterior, and only acts on consensus, ensuring that the true model always has to approve. But if you just train a single model on some reward function like that with deep learning, you get no such guarantees.
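The "only acts on consensus" idea can be sketched as follows. This is a simplified illustration based on the abstract's description (underestimate each action's probability, query with the remainder), not the paper's exact construction. The function name, the `alpha` cutoff rule for choosing the top posterior models, and the two toy models are assumptions introduced here.

```python
def conservative_policy(models, posterior, history, actions, alpha=0.5):
    """Lower-bound each action's probability over the top posterior models.

    models: callables model(history, action) -> probability of that action.
    posterior: posterior weights, in the same order as models.
    alpha: hypothetical cutoff; keep models with weight >= alpha * max weight.
    Returns (action_probs, query_prob); the leftover mass is spent on querying.
    """
    cutoff = alpha * max(posterior)
    top_models = [m for m, w in zip(models, posterior) if w >= cutoff]
    # Taking the minimum means every retained model must "approve" any
    # probability mass placed on an action.
    action_probs = {a: min(m(history, a) for m in top_models) for a in actions}
    query_prob = max(0.0, 1.0 - sum(action_probs.values()))
    return action_probs, query_prob


def honest(history, action):
    # Toy stand-in for the true demonstrator-model.
    return {"left": 0.9, "right": 0.1}[action]


def treacherous(history, action):
    # Toy model that imitates the honest one, then tries to force "right".
    if len(history) < 3:
        return {"left": 0.9, "right": 0.1}[action]
    return {"left": 0.0, "right": 1.0}[action]


models, posterior, actions = [honest, treacherous], [0.5, 0.5], ["left", "right"]
early_probs, early_query = conservative_policy(models, posterior, [], actions)
late_probs, late_query = conservative_policy(models, posterior, ["left"] * 3, actions)
```

In this toy setup, the two models agree early on, so almost no mass goes to querying. Once the treacherous model diverges, the probability of "right" is still capped by what the honest model assigns, and the rest of the mass goes to asking the demonstrator.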

Replies from: vanessa-kosoy, vanessa-kosoy↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2021-02-28T11:23:26.440Z · LW(p) · GW(p)