QNR prospects are important for AI alignment research

post by Eric Drexler · 2022-02-03T15:20:53.826Z · 12 comments

Contents

Abstract
1. Background
2. Prospective support for AI capabilities
2.1 Efficient scaling of GPT-like functionality
2.2 Quasi-cognitive memory
2.3 Contribution to shared knowledge
2.4 Formal and informal reasoning
2.5 Knowledge accumulation, revision, and synthesis
3. Prospective support for AI alignment
3.1 Support for interpretability
3.2 Support for value learning
3.3 Support for corrigibility
3.4 Support for behavioral alignment
4. Conclusion

Attention conservation notice: This discussion is intended for readers with an interest in prospects for knowledge-rich intelligent systems and potential applications of improved knowledge representations to AI capabilities and alignment. It contains no theorems.

Abstract

Future AI systems will likely use quasilinguistic neural representations (QNRs) to store, share, and apply large bodies of knowledge that include descriptions of the world and human values. Prospects include scalable stores of “ML-native” knowledge that share properties of linguistic and cognitive representations, with implications for AI alignment concerns that include interpretability, value learning, and corrigibility. If QNR-enabled AI systems are indeed likely, then studies of AI alignment should consider the challenges and opportunities they may present.

1. Background

Previous generations of AI typically relied on structured, interpretable, symbolic representations of knowledge; neural ML systems typically rely on opaque, unstructured neural representations. The concept described here differs from both and falls in the broad category of structured neural representations. It is not entirely novel, yet it is neither widely familiar nor well explored.

The term “quasilinguistic neural representations” (QNRs) will be used to denote vector-attributed graphs with quasilinguistic semantics of kinds that (sometimes) make natural language a useful point of reference; a “QNR-enabled system” employs QNRs as a central mechanism for structuring, accumulating, and applying knowledge. QNRs can be language-like in the sense of organizing (generalizations of) NL words through (generalizations of) NL syntax, yet are strictly more expressive, upgrading words to embeddings[1a] (Figure 1) and syntax trees to general graphs (Figure 2). In prospective applications, QNRs would be products of machine learning, shaped by training, not human design. QNRs are not sharply distinguished from constructs already in use, a point in favor of their relevance to real-world prospects.[1b]
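To make the definition concrete, the sketch below represents a QNR fragment as a vector-attributed graph: nodes carry embeddings in place of words, and edges carry a type label (and optionally an embedding) in place of fixed syntactic roles. The class names, the 4-dimensional hand-written vectors, and the edge labels are hypothetical illustrations; in the systems envisioned here, embeddings and structure would be learned rather than written by hand.

```python
from dataclasses import dataclass, field
from typing import Optional

import numpy as np


@dataclass
class QNRNode:
    """A node whose content is an embedding rather than a discrete word token."""
    embedding: np.ndarray


@dataclass
class QNRGraph:
    """Hypothetical vector-attributed graph: embedding-valued nodes linked by
    attributed edges (a type label plus an optional edge embedding)."""
    nodes: dict = field(default_factory=dict)  # node id -> QNRNode
    edges: list = field(default_factory=list)  # (src, dst, edge_type, edge_embedding)

    def add_node(self, node_id: str, embedding) -> None:
        self.nodes[node_id] = QNRNode(np.asarray(embedding, dtype=float))

    def add_edge(self, src: str, dst: str, edge_type: str,
                 edge_embedding: Optional[np.ndarray] = None) -> None:
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both endpoints must already be nodes")
        self.edges.append((src, dst, edge_type, edge_embedding))

    def neighbors(self, node_id: str):
        """Return (neighbor id, edge type) pairs for outgoing edges."""
        return [(dst, etype) for src, dst, etype, _ in self.edges if src == node_id]


# Unlike a syntax tree, a general graph may contain cycles and shared nodes.
g = QNRGraph()
g.add_node("cat", [0.9, 0.1, 0.0, 0.3])
g.add_node("mat", [0.1, 0.2, 0.8, 0.0])
g.add_edge("cat", "mat", "rests_on")
g.add_edge("mat", "cat", "supports")
print(g.neighbors("cat"))  # [('mat', 'rests_on')]
```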

Motivations for considering QNR-enabled systems have both descriptive and normative aspects: both what we should expect (contributions to AI capabilities in general) and what we might want (contributions to AI alignment in particular).[1c] These are discussed in Sections 2 and 3, respectively.

[1a] For example, embeddings can represent images in ways that would be difficult to capture in words, or even paragraphs (see Figure 1). Embeddings have enormous expressive capacity, yet from a semantic perspective are more computationally tractable than comparable descriptive text or raw images.

[1b] For an extensive discussion of QNRs and prospective applications, see "QNRs: Toward Language for Intelligent Machines", FHI Technical Report #2021-3, here cited as “QNRs”. A brief introduction can be found here: "Language for Intelligent Machines: A Prospectus".

[1c] Analogous descriptive and normative considerations are discussed in "Reframing Superintelligence: Comprehensive AI Services as General Intelligence", FHI Technical Report #2019-1, Section 4.

Figure 1: Generalizing semantic embeddings. Images corresponding to points in a two-dimensional grid in a high-dimensional space of face embeddings. Using text to describe faces and their differences in a high-dimensional face-space (typical dimensionalities are on the rough order of 100) would be difficult, and we can expect a similar gap in expressive capacity between embeddings and text in semantic domains where rich denotations cannot be so readily visualized or (of course) described. Image from Deep Learning with Python (2021).

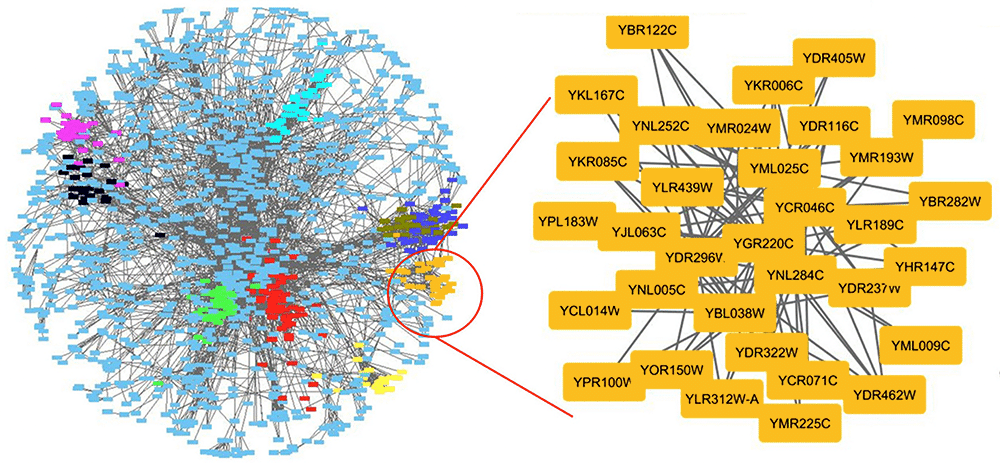

Figure 2: Generalizing semantic graphs. A graph of protein-protein interactions in yeast cells; proteins can usefully be represented by embeddings (see, for example, "Sequence-based prediction of protein-protein interactions: a structure-aware interpretable deep learning model" (2021)). Image source: "A Guide to Conquer the Biological Network Era Using Graph Theory" (2020). Analogous graphs are perhaps typical of quasilinguistic, ML-native representations of the world, but have a kind of syntax and semantics that strays far from NL. Attaching types or other semantic information to links is natural within a generalized QNR framework.

2. Prospective support for AI capabilities

Multiple perspectives converge to suggest that QNR-enabled implementations of knowledge-rich systems are a likely path for AI development; taken together, these perspectives also help clarify what QNR-enabled systems might be and do. If QNR-enabled systems are likely, then they are important to problems of AI alignment both as challenges and as solutions. Key aspects include support for efficient scaling, quasi-cognitive content, cumulative learning, semi-formal reasoning, and knowledge comparison, correction, and synthesis.

2.1 Efficient scaling of GPT-like functionality

The cost and performance of language models have increased with scale, for example, from BERT (with 340 million parameters)[2.1a] to GPT-3 (with 175 billion parameters)[2.1b]; the computational cost of a training run on GPT-3 is reportedly in the multi-million-dollar range. Large language models encode not only linguistic skills, but remarkable amounts of detailed factual knowledge, including telephone numbers, email addresses, and the first 824 digits of pi.[2.1c] They are also error-prone and difficult to correct.[2.1d]

The idea that detailed knowledge (for example, of the 824th digit of pi) is best encoded, accurately and efficiently, by gradient descent on a trillion-parameter model is implausible. A natural alternative is to enable retrieval from external stores of knowledge indexed by embeddings and accessed through similarity search, and indeed, recent publications describe Transformer-based systems that access external stores of NL content using embeddings as keys.[2.1e] Considering the complementary capabilities of parametric models and external stores, we can expect to see a growing range of systems in which extensive corpora of knowledge are accessed from external stores, while intensively used skills and commonsense knowledge are embodied in neural models.[2.1f]

…And so we find a natural role for QNR stores (as potential upgrades of NL stores), here viewed from the perspective of state-of-the-art NLP architectures.
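A minimal sketch of the retrieval pattern described above, using NumPy: content is written to an external store under embedding keys and retrieved by cosine-similarity search rather than recalled from model parameters. The `EmbeddingStore` class and the toy 3-dimensional keys are assumptions for illustration; the cited systems use learned encoders, and at scale would use approximate nearest-neighbor indexes rather than the exhaustive search shown here.

```python
import numpy as np


class EmbeddingStore:
    """Hypothetical external store: values are retrieved by embedding similarity."""

    def __init__(self, dim: int):
        self.keys = np.empty((0, dim))
        self.values = []

    def write(self, key, value) -> None:
        # Single-shot write: no gradient step, just append a normalized key and its value.
        key = np.asarray(key, dtype=float)
        self.keys = np.vstack([self.keys, key / np.linalg.norm(key)])
        self.values.append(value)

    def search(self, query, k: int = 1):
        # Exhaustive cosine-similarity search over unit-normalized keys.
        q = np.asarray(query, dtype=float)
        q = q / np.linalg.norm(q)
        scores = self.keys @ q
        top = np.argsort(-scores)[:k]
        return [(self.values[i], float(scores[i])) for i in top]


store = EmbeddingStore(dim=3)
store.write([1.0, 0.0, 0.0], "pi = 3.14159")
store.write([0.0, 1.0, 0.0], "e = 2.71828")
print(store.search([0.9, 0.1, 0.0], k=1)[0][0])  # pi = 3.14159
```

Adding or replacing an entry requires no retraining, which is one reason external stores look attractive for detailed knowledge that may later need correction.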

[2.1a] “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” (2018).

[2.1b] “Language Models are Few-Shot Learners” (2020).

[2.1c] “Extracting training data from large language models” (2021).

[2.1d] Factual accuracy is poor even on simple questions, and it would be beyond challenging to train a stand-alone language model to provide reliable, general, professional-level knowledge that embraced (for example) number theory, organic chemistry, and academic controversies regarding the sociology, economics, politics, philosophies, origins, development, and legacy of the Tang dynasty.

[2.1e] Indexing and retrieving content from Wikipedia is a popular choice. Examples are described in “REALM: Retrieval-Augmented Language Model Pre-Training” (2020), “Augmenting Transformers with KNN-Based Composite Memory for Dialog” (2020), and “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks” (2021). In a paper last month, “Improving language models by retrieving from trillions of tokens” (2022), DeepMind described a different, large-corpus-based approach, exhibiting performance comparable to GPT-3 while using 1/25 as many parameters. In another paper last month, Google reported a system that uses text snippets obtained by an “information retrieval system” that seems similar to Google Search (“LaMDA: Language Models for Dialog Applications” (2022)).

[2.1f] Current work shows that parametric models and external stores can represent overlapping semantic content; stores based on QNRs can deepen this relationship by providing overlapping semantic representations. Local QNR structures could correspond closely to graph network states, and standard Transformers in effect operate on fully connected graphs.
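The remark that standard Transformers in effect operate on fully connected graphs can be checked directly. In the sketch below, single-head self-attention is restricted by an adjacency mask, which makes it a form of message passing over a graph; with an all-ones mask it reduces to ordinary unmasked attention. `graph_attention` is a hypothetical helper, and random matrices stand in for trained projection weights.

```python
import numpy as np


def softmax_rows(scores):
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)


def graph_attention(x, adj, wq, wk, wv):
    """Single-head attention where node i attends only to nodes j with adj[i, j] == 1."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])
    scores = np.where(adj == 1, scores, -np.inf)  # non-edges get zero weight
    return softmax_rows(scores) @ v


rng = np.random.default_rng(0)
n, d = 5, 8
x = rng.normal(size=(n, d))                      # one embedding per node
wq, wk, wv = (rng.normal(size=(d, d)) for _ in range(3))

full = np.ones((n, n))                           # fully connected graph
chain = np.eye(n) + np.eye(n, k=1)               # each node sees itself and its successor

# Ordinary attention, computed without any mask, for comparison.
q, k, v = x @ wq, x @ wk, x @ wv
reference = softmax_rows(q @ k.T / np.sqrt(d)) @ v

print(np.allclose(graph_attention(x, full, wq, wk, wv), reference))  # True
chain_out = graph_attention(x, chain, wq, wk, wv)
```

Every row of the mask needs at least one edge (here, a self-loop); otherwise the softmax would divide by zero.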

2.2 Quasi-cognitive memory

Human memory-stores can be updated by single-shot experiences that include reading journal articles. Our memory-stores include neural representations of things (entities, relationships, procedures…) that are compositional in that they may be composed of multiple parts,[2.2a] and we can retrieve these representations by associative mechanisms. Memories may or may not correspond closely to natural-language expressions — some represent images, actions, or abstractions that one may struggle to articulate. Thus, aspects of human memory include:

• Components with neural representations (much like embeddings)

• Connections among components (in effect, graphs)

• Single-shot learning (in effect, writing representations to a store)

• Retrieval by associative memory (similar to similarity search)[2.2b]

…And so we again find the essential features of QNR stores, here viewed from the perspective of human memory.

[2.2a] Compositionality does not exclude multi-modal representations of concepts, and (in the neurological case) does not imply cortical localization (“Semantic memory: A review of methods, models, and current challenges” (2020)). Rule representations also show evidence of compositionality (“Compositionality of Rule Representations in Human Prefrontal Cortex” (2012)). QNRs, Section 4.3, discusses various kinds and aspects of compositionality, a term with different meanings in different fields.

[2.2b] Graphs can be modeled in an associative memory store, but global similarity search is ill-suited to representing connections that bind components together, for example, the components of constructs like sentences or paragraphs. To the extent that connections can be represented by computable relationships among embeddings, the use of explicit graph representations can be regarded as a performance optimization.

2.3 Contribution to shared knowledge

To achieve human-like intellectual competence, machines must be fully literate, able not only to learn by reading, but to write things worth retaining as contributions to shared knowledge. A natural language for literate machines, however, is unlikely to resemble a natural language for humans. We typically read and write sequences of tokens that represent mouth sounds and imply syntactic structures; a machine-native representation would employ neural embeddings linked by graphs.[2.3a] Embeddings strictly upgrade NL words; graphs strictly upgrade NL syntax. Together, graphs and embeddings strictly upgrade both representational capacity and machine compatibility.

…And so again we find the features of QNR content, here emerging as a natural medium for machines that build and share knowledge.[2.3b]
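One way to see the sense in which embeddings strictly upgrade words: a finite vocabulary maps into a vector space as one-hot vectors, and token identity then coincides with a dot product of 1. Anything expressible with tokens is therefore expressible with embeddings, while most points in the space (for example, a blend of two words) correspond to no token at all. The three-word vocabulary is a hypothetical toy.

```python
import numpy as np

vocab = ["cat", "dog", "mat"]
one_hot = {word: np.eye(len(vocab))[i] for i, word in enumerate(vocab)}


def token_equal(a: str, b: str) -> bool:
    """Symbolic token equality, recovered as a dot product of one-hot embeddings."""
    return bool(one_hot[a] @ one_hot[b] == 1.0)


# A point between "cat" and "dog": a valid embedding, but not any token's vector.
blend = 0.5 * one_hot["cat"] + 0.5 * one_hot["dog"]

print(token_equal("cat", "cat"), token_equal("cat", "dog"))      # True False
print(any(np.array_equal(blend, v) for v in one_hot.values()))  # False
```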

[2.3a] QNRs, Section 10, discusses potential architectures and training methods for QNR-oriented models, including proposals for learning quasilinguistic representations of high-level abstractions from NL training sets (some of these methods are potentially applicable to training conventional neural models).

[2.3b] Note that this application blurs differences between individual, human-like memory and shared, internet-scale corpora. Similarity search (≈ associative memory) scales to billions of items and beyond; see “Billion-scale similarity search with GPUs” (2017) and “Billion-scale Commodity Embedding for E-commerce Recommendation in Alibaba” (2018). Retrieval latency in RETRO (“Improving language models by retrieving from trillions of tokens” (2022)) is 10 ms.

2.4 Formal and informal reasoning

Research in neurosymbolic reasoning seeks to combine the strengths of structured reasoning with the power of neural computation. In symbolic representations, syntax encodes graphs over token-valued nodes, but neural embeddings are, of course, strictly more expressive than tokens (note that shared nodes in DAGs can represent variables). Indeed, embeddings themselves can express mutual relationships,[2.4a] while reasoning with embeddings can employ neural operations beyond those possible in symbolic systems.

Notions of token-like equality can be generalized to measures of similarity between embeddings, while unbound variables can be generalized to refinable values with partial constraints. A range of symbolic algorithms, including logical inference, have continuous relaxations that operate on graphs and embeddings.[2.4b] These relaxations overlap with pattern recognition and informal reasoning of the sort familiar to humans.

…And so we find a natural role for graphs over embeddings, now as a substrate for quasi-symbolic reasoning.[2.4c]
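A minimal sketch of these generalizations, assuming each slot of a relational triple holds an embedding: exact token equality becomes cosine similarity, and a pattern's overall match score is taken as its weakest slot score. That min-aggregation is one common choice in soft-unification work, not the only one. An unbound variable is modeled in the simplest way, as an empty slot that binds to whatever embedding it meets; refinable values with partial constraints would need more machinery than this. All vectors and names are hypothetical.

```python
import numpy as np


def cosine(a, b) -> float:
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def soft_unify(pattern, fact):
    """Score how well a pattern (None = unbound variable) matches a fact.

    Symbolic unification would demand identical tokens; here each bound slot
    contributes a similarity, and the overall score is the minimum over slots."""
    score, bindings = 1.0, {}
    for i, (p, f) in enumerate(zip(pattern, fact)):
        if p is None:
            bindings[i] = f            # bind the variable to the fact's embedding
        else:
            score = min(score, cosine(p, f))
    return score, bindings


# Hypothetical 3-d embeddings: "dog" and "puppy" are close; "car" is not.
dog, puppy, car = [1.0, 0.2, 0.0], [0.9, 0.3, 0.1], [0.0, 0.1, 1.0]
chases, cat = [0.1, 1.0, 0.0], [0.7, 0.0, 0.6]

fact = (puppy, chases, cat)
s_good, b_good = soft_unify((dog, chases, None), fact)  # near match
s_bad, _ = soft_unify((car, chases, None), fact)        # poor match
print(round(s_good, 3), round(s_bad, 3))
```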

[2.4a] For example, inference on embeddings can predict edges for knowledge-graph representations; see “Neuro-symbolic representation learning on biological knowledge graphs” (2017), “RotatE: Knowledge Graph Embedding by Relational Rotation in Complex Space” (2019), and “Knowledge Graph Embedding for Link Prediction: A Comparative Analysis” (2021).
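For a concrete instance of edge prediction from embeddings, the sketch below follows the scoring idea of RotatE as described in the cited paper: entities are complex-valued vectors, each relation is an element-wise rotation (a unit-modulus complex number per dimension), and a triple (h, r, t) is judged plausible when h ∘ r lies close to t. The embeddings are random and hand-constructed rather than trained, the distance sums element-wise moduli, and the helper name is hypothetical.

```python
import numpy as np


def rotate_distance(h, r_phase, t) -> float:
    """RotatE-style distance between a rotated head and a tail.

    h, t: complex entity embeddings. r_phase: real relation phases, so the
    relation r = exp(i * phase) has modulus 1 in every dimension."""
    r = np.exp(1j * r_phase)
    return float(np.sum(np.abs(h * r - t)))  # sum of element-wise moduli


rng = np.random.default_rng(1)
dim = 4
h = rng.normal(size=dim) + 1j * rng.normal(size=dim)
phase = rng.uniform(-np.pi, np.pi, size=dim)

# One tail consistent with the relation, one unrelated entity.
candidates = {
    "t_true": h * np.exp(1j * phase),
    "t_other": rng.normal(size=dim) + 1j * rng.normal(size=dim),
}
best = min(candidates, key=lambda name: rotate_distance(h, phase, candidates[name]))
print(best)  # t_true
```

Because rotations are invertible, rotating the tail by the negated phases recovers the head, which is how this scheme can represent inverse relations.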

[2.4b] See, for example, “Beta Embeddings for Multi-Hop Logical Reasoning in Knowledge Graphs” (2020) and systems discussed in QNRs, Section A1.4.

[2.4c] Transformer-based models have shown impressive capabilities in the symbolic domains of programming and mathematics (see “Evaluating Large Language Models Trained on Code” (2021) and “A Neural Network Solves and Generates Mathematics Problems by Program Synthesis” (2022)). As with the overlapping semantic capacities of parametric models and external stores (Section 2.1, above), the overlapping capabilities of pretrained Transformers and prospective QNR-oriented systems suggest prospects for their compatibility and functional integration. The value of attending to and updating structured memories (perhaps mapped to and from graph neural networks; see “Graph Neural Networks Meet Neural-Symbolic Computing: A Survey and Perspective” (2021)) presumably increases with the scale and computational depth of semantic content.

2.5 Knowledge accumulation, revision, and synthesis

The performance of current ML systems is challenged by faulty information (in need of recognition and marking or correction) and latent information (where potentially accessible information may be implied — yet not provided — by inputs). These challenges call for comparing semantically related or overlapping units of information, then reasoning about their relationships in order to construct more reliable or complete representations, whether of a thing, a task, a biological process, or a body of scientific theory and observations.[2.5a] This functionality calls for structured representations that support pattern matching, reasoning, revision, synthesis and recording of results for downstream applications.

Relationships among parts are often naturally represented by graphs, while parts themselves are often naturally represented by embeddings, and the resulting structures are natural substrates for the kind of reasoning and pattern matching discussed above. Revised and unified representations can be used in an active reasoning process or stored for future retrieval.[2.5b]

…And so again we find a role for graphs over embeddings, now viewed from the perspective of refining and extending knowledge.

[2.5a] Link completion in knowledge graphs illustrates this kind of process.

[2.5b] For a discussion of potential applications at scale, see QNRs, Section 9. Soft unification enables both pattern recognition and combination; see discussion in QNRs, Section A.1.4.

In light of the potential contributions to AI scope and functionality discussed above, it seems likely that QNR-enabled capabilities will be widespread in future AI systems, and unlikely that QNR functionality will be wholly unavailable. If QNR-enabled capabilities are likely to be widespread and relatively easy to develop, then it will be important to consider challenges that may arise from AI development marked by broadly capable, knowledge-rich systems. If QNR functionality is likely to be at least accessible, then it will be important to consider how that functionality might help solve problems of AI alignment, in part through differential technology development.

3. Prospective support for AI alignment

Important considerations for AI alignment include interpretability, value learning, and corrigibility in support of strategies for improving behavioral alignment.

3.1 Support for interpretability

In a particularly challenging range of scenarios, AI systems employ opaque representations of knowledge and behaviors that can be understood only through their inputs and outputs. While QNR representations could be opaque, their inherent inductive bias (perhaps intentionally strengthened by training and regularization) should tend to produce relatively compositional, interpretable representations: Embeddings and subgraphs will typically represent semantic units with distinct meanings that are composed into larger units by distinct relationships.[3.1a]

In some applications, QNR expressions could closely track the meanings of NL expressions,[3.1b] making interpretability a matter of lossy QNR → NL translation. In other applications, QNR expressions will be “about something” that can be — at least in outline — explained (diagrammed, demonstrated) in ways accessible to human understanding. In the worst plausible case, QNR expressions will be about recognizable topics (stars, not molecules; humans, not trees), yet substantially opaque in their actual content.[3.1c] Approaches to interpretability that can yield some understanding of opaque neural models seem likely to yield greater understanding when applied to QNR-based systems.
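The lossy QNR → NL translation mentioned above can be pictured, in its crudest form, as describing an embedding by its nearest neighbors in a vocabulary of word embeddings. The vectors below are hypothetical toys, and a practical system would more plausibly use a trained decoder that emits phrases or paragraphs; the sketch only shows why such translation is lossy: the output names a recognizable topic while discarding the embedding's finer structure.

```python
import numpy as np

# Hypothetical word embeddings (normalized below); real systems would learn these.
words = {
    "star":     [0.9, 0.1, 0.0],
    "sun":      [0.8, 0.3, 0.0],
    "molecule": [0.0, 0.2, 0.9],
    "tree":     [0.1, 0.9, 0.2],
}
names = list(words)
W = np.array([words[w] for w in names], dtype=float)
W /= np.linalg.norm(W, axis=1, keepdims=True)


def describe(embedding, k: int = 2):
    """Lossy 'translation': the k nearest vocabulary words by cosine similarity."""
    e = np.asarray(embedding, dtype=float)
    sims = W @ (e / np.linalg.norm(e))
    order = np.argsort(-sims)[:k]
    return [(names[i], round(float(sims[i]), 2)) for i in order]


print(describe([0.85, 0.2, 0.05]))
```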

[3.1a] Note that graph edges can carry attributes (types or embeddings), while pairs of embeddings can themselves encode interpretable relationships (as with protein-protein interactions).

[3.1b] For example, QNR semantics could be shaped by NL → NL training tasks that include autoencoding and translation. Interpretable embeddings need not correspond closely to words or phrases: Their meanings may instead correspond to extended NL descriptions, or (stretching the concept of interpretation beyond language per se) may correspond to images or other human-comprehensible but non-linguistic representations.

[3.1c] This property (distinguishability of topics) should hold at some level of semantic granularity even in the presence of strong ontological divergence. For a discussion of the general problem, see the discussion of ontology identification in “Eliciting Latent Knowledge” (2022).

3.2 Support for value learning

Many of the anticipated challenges of aligning agents’ actions with human intentions hinge on the anticipated difficulty of learning human preferences. However, systems able to read, interpret, integrate, and generalize from large corpora of human-generated content (history, news, fiction, science fiction, legal codes, court records, philosophy, discussions of AI alignment...) could support the development of richly informed models of human law and ethical principles, together with predictive models of general human concerns and preferences that reflect ambiguities, controversies, partial ordering, and inconsistencies.[3.2a]

[3.2a] Along lines suggested by Stuart Russell; see discussion in “Reframing Superintelligence: Comprehensive AI Services as General Intelligence”, Section 22. Adversarial training is also possible: Humans can present hypotheticals and attempt to provoke inappropriate responses; see the use of “adversarial-intent conversations” in “LaMDA: Language Models for Dialog Applications” (2022).

Training models using human-derived data of the sort outlined above should strongly favor ontological alignment; for example, one could train predictive models of (human descriptions of actions and states) → (human descriptions of human reactions).[3.2b] It should go without saying that this approach raises deep but familiar questions regarding the relationship between what people say, what they mean, what they think, what they would think after deeper, better-informed reflection, and so on.

[3.2b] Online sources can provide massive training data of this sort — people enjoy expressing their opinions. Note that this general approach can strongly limit risks of agent-like manipulation of humans during training and application: An automatically curated training set can inform a static but provisional value model for external use.

3.3 Support for corrigibility

Reliance on external, interpretable stores should facilitate corrigibility.[3.3a] In particular, if distinct entities, concepts, rules, etc., have (more or less) separable, interpretable representations, then identifying and modifying those representations may be practical, a process like (or not entirely unlike) editing a set of statements. Moreover, reliance by diverse agents on (portions of) shared, external stores[3.3b] can enable revision by means that are decoupled from the experiences, rewards, etc., of the agents affected. In other words, agents can act based on knowledge accumulated and revised by other sources; to the extent that this knowledge is derived from science, history, sandboxed experimentation, and the like, learning can be safer and more effective than it might be if conducted by (for example) independent RL agents in the wild learning to optimize a general reward function.[3.3c] Problems of corrigibility should be relatively tractable in agents guided by relatively interpretable, editable, externally constructed knowledge representations.
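The decoupling described above can be illustrated with a toy: two agents consult one shared store, and a single logged edit changes what both agents do, with no retraining and no involvement of either agent's rewards. The `SharedStore` and `Agent` classes, the string-valued entries, and the "defer to human" fallback are hypothetical simplifications; in a QNR-enabled system, entries would be graphs over embeddings, and the harder step would be finding the representations that need editing.

```python
class SharedStore:
    """Hypothetical shared knowledge store with an auditable edit log."""

    def __init__(self):
        self.entries = {}
        self.log = []  # (key, old value, new value, source)

    def write(self, key, value, source):
        self.log.append((key, self.entries.get(key), value, source))
        self.entries[key] = value

    def read(self, key):
        return self.entries.get(key)


class Agent:
    """An agent whose behavior depends on the shared store, not on its own training."""

    def __init__(self, name, store):
        self.name, self.store = name, store

    def act(self, situation):
        rule = self.store.read(situation)
        return f"{self.name}: {rule if rule else 'defer to human'}"


store = SharedStore()
store.write("spill", "mop floor with solvent X", source="initial import")
a, b = Agent("cleaner", store), Agent("janitor", store)

# Reviewers judge the rule unsafe and correct it once, outside either agent.
store.write("spill", "ventilate, then mop with water", source="safety review")
print(a.act("spill"))  # cleaner: ventilate, then mop with water
print(b.act("spill"))  # janitor: ventilate, then mop with water
print(a.act("fire"))   # cleaner: defer to human
```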

[3.3a] “A corrigible agent is one that doesn't interfere with what we would intuitively see as attempts to ‘correct’ the agent, or ‘correct’ our mistakes in building it; and permits these ‘corrections’ despite the apparent instrumentally convergent reasoning saying otherwise.” “Corrigibility”, AI Alignment Forum.

[3.3b] A system can “rely on a store” without constantly consulting it: A neural model can distill QNR content for use in common operations. For an example of this general approach, see the (knowledge graph) → (neural model) training described in “Symbolic Knowledge Distillation: from General Language Models to Commonsense Models” (2021).

[3.3c] Which seems like a bad idea.

3.4 Support for behavioral alignment

In typical problem-cases for AI alignment, a central difficulty is to provide mechanisms that would enable agents to assess human-relevant aspects of projected outcomes of candidate actions — in other words, mechanisms that would enable agents to take account of human concerns and preferences in choosing among those actions. Expressive, well-informed, corrigible, ontologically aligned models of human values could provide such mechanisms, and the discussion above suggests that QNR-enabled approaches could contribute to their development and application.[3.4a]

[3.4a] Which seems like a good idea.

4. Conclusion

AI systems likely will (or readily could) employ quasilinguistic neural representations as a medium for learning, storing, sharing, reasoning about, refining, and applying knowledge. Attractive features of QNR-enabled systems could include affordances for interpretability and corrigibility with applications to value modeling and behavioral alignment.[4a]

• If QNR-enabled capabilities are indeed likely, then they are important to understanding prospective challenges and opportunities for AI alignment, calling for exploration of possible worlds that would include these capabilities.

• If QNR-enabled capabilities are at least accessible, then they should be studied as potential solutions to key alignment problems and are potentially attractive targets for differential technology development.

The discussion here is, of course, adjacent to a wide range of deep, complex, and potentially difficult problems, some familiar and others new. Classic AI alignment concerns should be revisited with QNR capabilities in mind.

[4a] Perhaps better approaches will be discovered. Until then, QNR-enabled systems could provide a relatively concrete model of some of what those better approaches might enable.

12 comments


comment by PeterMcCluskey · 2024-01-14T00:48:41.115Z

I'm reaffirming my relatively long review of Drexler's full QNR paper.

Drexler's QNR proposal seems like it would, if implemented, guide AI toward more comprehensible systems. It might modestly speed up capabilities advances, while being somewhat more effective at making alignment easier.

Alas, the full paper is long, and not an easy read. I don't think I've managed to summarize its strengths well enough to persuade many people to read it.

comment by RHollerith (rhollerith_dot_com) · 2022-02-03T20:29:28.965Z

Because the most salient thing about QNRs is that they are linguistic, it bothers me slightly that they are not named QLNRs, but I suppose it is too late to change the name.

↑ comment by RHollerith (rhollerith_dot_com) · 2022-02-03T20:34:22.807Z

Or even LNRs, short for (quasi-)linguistic neural representations.

↑ comment by Charlie Steiner · 2022-02-03T22:03:23.959Z

My brain kept interpreting QNR as quantum non-realizability. Which would be a great thing to tie into AI :P

comment by Charlie Steiner · 2022-02-03T21:59:03.027Z

The main argument I see not to take this too literally is something like "conservation of computation."

I think it's quite likely that left to its own devices a black-box machine learning agent would learn to represent information in weakly hierarchical models that don't have a simple graph structure (but maybe have some more complicated graph structure where representations are connected by complicated transition functions that also have side-effects on the state of the agent). If we actually knew how to access these representations, that would already be really useful for interpretability, and we could probably figure out some broad strokes of what was going on, but we wouldn't be able to get detailed guarantees. Nor would this be sufficient for trust - we'd still need a convincing story about how we'd designed the agent to learn to be trustworthy.

Then if we instead constrain a learning algorithm to represent information using simple graphs of human-understandable pieces, but it can still do all the impressive things the unconstrained algorithm could do, the obvious inference is that all of that human-mysterious complicated stuff that was happening before is still happening, it's just been swept as "implicit knowledge" into the parts of the algorithm that we didn't constrain.

In other words, I think the use of QNR as a template for AI design provides limited benefit, because so long as the human-mysterious representation of the world is useful, and your learning algorithm learns to do useful things, it will try to cram the human-mysterious representation in somewhere. Trying to stop it is pitting yourself against the search algorithm optimizing the learned content, and this will become a less and less useful project as that search algorithm becomes more powerful. But interpreted not-too-literally I think it's a useful picture of how we might model an AI's knowledge so that we can do broad-strokes interpretability.

↑ comment by Eric Drexler · 2022-02-04T11:18:19.580Z

Although I don’t understand what you mean by “conservation of computation”, the distribution of computation, information sources, learning, and representation capacity is important in shaping how and where knowledge is represented.

The idea that general AI capabilities can best be implemented or modeled as “an agent” (an “it” that uses “the search algorithm”) is, I think, both traditional and misguided. A host of tasks require agentic action-in-the-world, but those tasks are diverse and will be performed and learned in parallel (see the CAIS report, www.fhi.ox.ac.uk/reframing). Skill in driving somewhat overlaps with, yet greatly differs from, skill in housecleaning or factory management; learning any of these does not provide deep, state-of-the-art knowledge of quantum physics, and can benefit from (but is not a good way to learn) conversational skills that draw on broad human knowledge.

A well-developed QNR store should be thought of as a body of knowledge that potentially approximates the whole of human and AI-learned knowledge, as well as representations of rules/programs/skills/planning strategies for a host of tasks. The architecture of multi-agent systems can provide individual agents with resources that are sufficient for the tasks they perform, but not orders of magnitude more than necessary, shaping how and where knowledge is represented. Difficult problems can be delegated to low-latency AI cloud services.

There is no “it” in this story, and classic, unitary AI agents don’t seem competitive as service providers, which is to say, they don’t seem useful.

I’ve noted the value of potentially opaque neural representations (Transformers, convnets, etc.) in agents that must act skillfully, converse fluently, and so on, but operationalized, localized, task-relevant knowledge and skills complement rather than replace knowledge that is accessible by associative memory over a large, shared store.

comment by Gunnar_Zarncke · 2022-02-27T19:12:03.589Z

QNRs look cool for more general knowledge representation than both natural language and explicit semantic representations. But I notice that neither this QNR post nor Drexler's prospectus mentions drift. But drift (though you might want to call it "refinement") is common both in natural languages (to adapt to growing knowledge) as well as in neural representations ("in mice").

Related: The "meta-representational level" from Meditation and Neuroscience, some odds and ends

comment by ESRogs · 2022-02-21T04:04:00.624Z

Question about terminology — would it be fair to replace "embedding" with "concept"?

(Something feels weird to me about how the word "embedding" is used here. It seems like it's referring to a very basic general idea: something like "the building blocks of thought, which would include named concepts that we have words for, but also mental images, smells, and felt senses". But the particular word "embedding" seems like it's emphasizing the geometric idea of representing something from a high-dimensional space in a lower-dimensional space, and that seems like it's not the most relevant part. (Yes, we can think of all concepts geometrically, as clusters in thingspace, but depending on context, it might be more natural to emphasize that idea of a location in a space, or to think of them as just concepts.))

comment by Jon Garcia · 2022-02-05T21:40:48.057Z

Thanks for this excellent overview, and all the links to resources. It's nice to have a label for this class of models.

Recently, I've come to see natural language as a medium for encoding and transmitting cognitive programs. These would be analogous to computer programs, with working memory for state embeddings (heap), for intermediate predicate-call-specific data (stack), and for the causal graph itself (program code). Such programs would be used by conscious agents for simulating models of the world and for carrying out behavioral policies, depending on what part of the brain is carrying out the program.

Under this scheme, the cognitive programs themselves would run on QNR-type models, but they would be constructed or transferred between minds using (non-quasi) natural language. Language, then, involves a traversal over cognitive program space, using the rules of syntax to unravel a cognitive program when speaking/writing or to assemble one when listening/reading.

In the brain, the dynamic routing of information necessary for all this would be carried out by the basal ganglia, while the token embeddings and program code would exist as patterns in the cortex. If this is all valid, then it would seem that the brains of more intelligent species become that way due to the evolution of neural architectures that let the brain operate more like a CPU.

I would be interested to hear if you had any thoughts on this.

comment by Roman Leventov · 2023-12-15T12:28:23.126Z

Looks like OpenCog Hyperon (Goertzel et al., 2023) is similar to the QNR paradigm for learning and intelligence. Some of the ideas about not cramming intelligence into a unitary "agent" that Eric expressed in these comments and later posts are also taken up by Goertzel.

I didn't find references to Goertzel in the original QNR report from 2021; I wonder if there are references in the reverse direction and what Eric thinks of OpenCog Hyperon.