[Request for Distillation] Coherence of Distributed Decisions With Different Inputs Implies Conditioning

post by johnswentworth · 2022-04-25T17:01:08.767Z · LW · GW · 14 commentsContents

14 comments

There’s been a lot of response to the Call For Distillers [LW · GW], so I’m experimenting with a new post format. This post is relatively short and contains only a simple mathematical argument, with none of the examples, motivation, more examples, or context which would normally make such a post readable. My hope is that someone else will write a more understandable version.

Jacob is offering [LW(p) · GW(p)] a $500 bounty on a distillation.

Goal: following the usual coherence argument setup, show that if multiple decisions are each made with different input information available, then each decision maximizes expected utility given its input information.

We’ll start with the usual coherence argument setup: a system makes a bunch of choices, aiming to be pareto-optimal across a bunch of goals (e.g. amounts of various resources) . Pareto optimality implies that, at the pareto-optimum, there exists some vector of positive reals such that the choices maximize . Note that can be freely multiplied by a constant, so without loss of generality we could either take to sum to (in which case we might think of as probabilities) or take to be where is amount of money (in which case is a marginal price vector).

When the goals are all “the same goal” across different “worlds” , and we normalize to sum to , is a probability distribution over worlds in the usual Bayesian sense. The system then maximizes (over its actions ) , i.e. it’s an “expected utility maximizer”.

That’s the usual setup in a nutshell. Now, let’s say that the system makes multiple decisions in a distributed fashion. Each decision is made with only limited information: receives as input (and nothing else). The system then chooses the functions to maximize .

Consider the maximization problem for just , i.e. the optimal action for choice i given input . Expanded out, the objective is .

Note that the only terms in that sum which actually depend on are those for which . So, for purposes of choosing specifically, we can reduce the objective to

… which is equal to . The multiplier is always positive and does not depend on , so we can drop it without changing the optimal . Thus, action maximizes the conditional expected value .

Returning to the optimization problem for all of the actions simultaneously: any optimum for all actions must also be an optimum for each action individually (otherwise we could change one action to get a better result), so each action must maximize .

A few notes on this:

- We’ve implicitly assumed that actions do not influence which information is available to other actions (i.e. the actions are “spacelike separated”). That can be relaxed: let depend on both and previous actions , and then will maximize ; the general structure of the proof carries over.

- We’ve implicitly assumed that the action when is observed does not influence worlds where is not observed (i.e. no Newcomblike shenanigans). We can still handle Newcomblike problems if we use FDT [? · GW], in which case the action function would appear in more than one place.

- As usual with coherence arguments, we’re establishing conditions which must be satisfied (by a pareto-optimal system with the given objectives); the conditions do not necessarily uniquely specify the system’s behavior. The classic example is that might not be unique. Once we have distributed decisions there may also be “local optima” such that each individual action is optimal but the actions are not jointly optimal; that’s another form of non-uniqueness.

14 comments

Comments sorted by top scores.

comment by johnswentworth · 2022-04-25T17:12:27.941Z · LW(p) · GW(p)

I haven't put a distillation bounty on this, but if anyone else wants to do so, leave a comment and I'll link to it in the OP.

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2022-04-26T01:37:29.821Z · LW(p) · GW(p)

How long would it have taken you to do the distillation step yourself for this one? I'd be happy to post a bounty, but price depends a bit on that.

Replies from: johnswentworth↑ comment by johnswentworth · 2022-04-26T04:01:28.714Z · LW(p) · GW(p)

Short answer: about one full day.

Longer answer: normally something like this would sit in my notebook for a while, only informing my own thinking. It would get written up as a post mainly if it were adjacent to something which came up in conversation (either on LW or in person). I would have the idea in my head from the conversation, already be thinking about how best to explain it, chew on it overnight, and then if I'm itching to produce something in the morning I'd bang out the post in about 3-4 hours.

Alternative paths: I might need this idea as background for something else I'm writing up, or I might just be in a post-writing mood and not have anything more ready-to-go. In either of those cases, I'd be starting more from scratch, and it would take about a full day.

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2022-04-26T22:28:16.691Z · LW(p) · GW(p)

Cool, I'll add $500 to the distillation bounty then, to be paid out to anyone you think did a fine job of distilling the thing :) (Note: this should not be read as my monetary valuation for a day of John work!)

(Also, a cooler pay-out would be basis points, or less, of Wentworth impact equity)

Replies from: johnswentworth, thomas-kwa↑ comment by johnswentworth · 2022-04-27T21:19:57.653Z · LW(p) · GW(p)

to be paid out to anyone you think did a fine job of distilling the thing

Needing to judge submissions is the main reason I didn't offer a bounty myself. Read the distillation, and see if you yourself understand it. If "Coherence of Distributed Decisions With Different Inputs Implies Conditioning" makes sense as a description of the idea, then you've probably understood it.

If you don't understand it after reading an attempted distillation, then it wasn't distilled well enough.

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2022-05-16T01:18:32.625Z · LW(p) · GW(p)

An update on this: sadly I underestimated how busy I would be after posting this bounty. I spent 2h reading this and Thomas post the other day, but didn't not manage to get into the headspace of evaluating the bounty (i.e. making my own interpretation of John's post, and then deciding whether Thomas' distillation captured that). So I will not be evaluating this. (Still happy to pay if someone else I trust claim Thomas' distillation was sufficient.) My apologies to John and Thomas about that.

↑ comment by Thomas Kwa (thomas-kwa) · 2022-04-27T20:31:15.069Z · LW(p) · GW(p)

I will attempt to fill this bounty. Does the fact that I'm on a grant preclude me from claiming it?

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2022-05-03T19:01:01.293Z · LW(p) · GW(p)

Sorry for late reply: no, it does not.

comment by Thomas Kwa (thomas-kwa) · 2022-05-03T20:39:06.121Z · LW(p) · GW(p)

I don't understand why this setup needs multiple decisions (even after asking johnswentworth).

- Thomas: Why doesn't this setup work with a single decision (say, a poker player imagining her opponent raising, calling, or folding?)

- John (as understood by me): If the agent only ever receives one piece of information, the sense in which it uses conditional probability is a bit trivial. Suppose the agent has an explicit world-model and is its set of all possible worlds. If the agent is only receiving a single piece of information which constrains the set of worlds to , then the agent can have U=S, being unable to imagine any world inconsistent with what it sees. For this agent, conditioning on f is vacuous. But if the agent is making multiple decisions based on different information that constrain the possible worlds to different sets , it must be able to reason about a set of worlds larger than any particular .

- Thomas: But doesn't the agent need to do this for a single decision, given that it could observe either or some other information ?

- Here I don't know what to respond, nor does my model of John. Maybe the answer is it doesn't have to construct a lookup table for and can just act "on the fly"? This doesn't make sense, because it could do the same thing across multiple decisions. Also, there's a weird thing going on where the math in the post is a behavioral claim: "we can model the agent as using conditional expected value", but the interpretation, including the second bullet point, references the agent's possible structure.

↑ comment by johnswentworth · 2022-05-03T22:41:37.923Z · LW(p) · GW(p)

Yeah, my explanation of that wasn't very good. Let me try again.

If there's just one decision, the agent maximizes . But then we could define a behaviorally-equivalent utility function ; there isn't necessarily a sense in which the agent cares about rather than .

With many decisions, we could perform a similar construction to get a behaviorally-equivalent utility function . But if there's enough decisions with enough different inputs then may be bigger than - i.e. it may have more dimensions/more bits. Then representing all these different decision-inputs as being calculated from one "underlying world" yields a model which is "more efficient", in some sense.

Another way to put it: with just one decision, ~any should be behaviorally equivalent to a -maximizer for some . But with many decisions, that should not be the case. (Though I have not yet actually come up with an example to prove that claim.)

Replies from: thomas-kwa↑ comment by Thomas Kwa (thomas-kwa) · 2022-05-04T02:48:09.493Z · LW(p) · GW(p)

edit: the numbers are wrong here; go see my distillation [LW · GW] for the correct numbers

Proposed example to check my understanding:

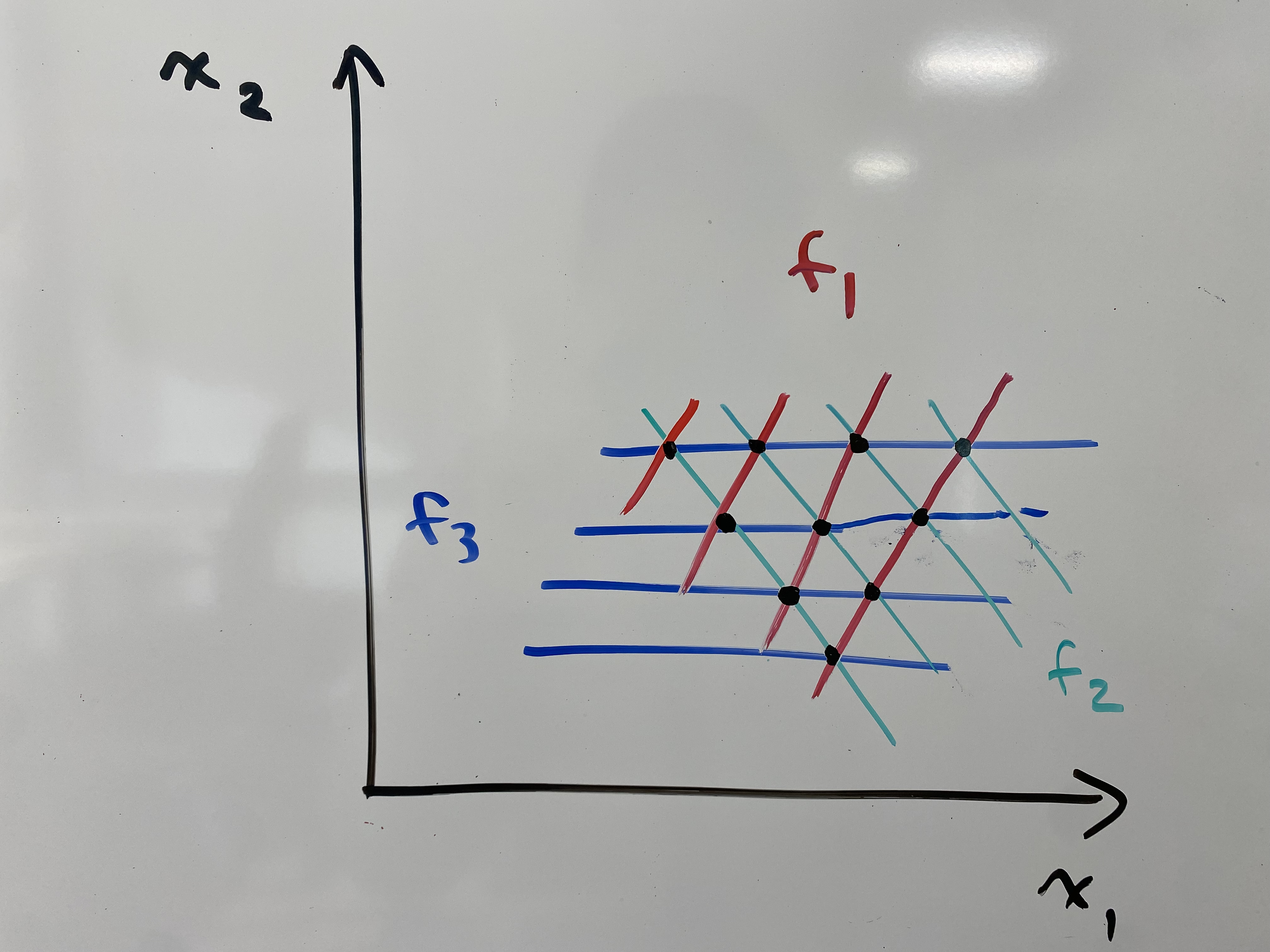

Here, where is the 10 black points representing possible worlds.

We have three different observations , each of which has 4 possible outcomes and gives partial information about X. Call the set of combinations of observations .

It seems that

- while : there are more combinations of partial observations than possible worlds.

- Therefore, storing a representation of possible values of X might be simpler than storing a representation of possible values

- Also, this notion of conditional expected utility actually constrains the behavior; for an action space not all of the policies which map correspond to conditional expected utility maximization.

- If we were not conditioning, there would be only policies that are expected utility maximization.

- If we are conditioning, it seems like there are such policies-- the agent is able to make decisions given 3 types of possible information , and each possible type of information i has .

- So by pigeonhole not every policy over distributed decisions is a conditional expected utility maximizer?

↑ comment by johnswentworth · 2022-05-04T03:42:47.130Z · LW(p) · GW(p)

I didn't check the math in your counting argument, but qualitatively that is correct.

comment by jeremy (johfst) · 2022-05-02T04:04:16.749Z · LW(p) · GW(p)

Registering that I will attempt this. Not sure if I will be able to produce something publishable in a reasonable amount of time, but I expect to learn from the attempt.

Also, I think some probabilities were left out of some sums there? Was that intentional, or a typo?

Replies from: johnswentworth↑ comment by johnswentworth · 2022-05-02T04:38:58.982Z · LW(p) · GW(p)

Typo. Good catch, thanks.