Linear encoding of character-level information in GPT-J token embeddings

post by mwatkins, Joseph Bloom (Jbloom) · 2023-11-10T22:19:14.654Z · LW · GW · 4 commentsContents

Letter presence: Linear probes vs MLPs Understanding linear probes in token embedding space Linear probes and GPT spelling capabilities Obstacle: Multiple forward passes / chain of thought Solution: Focus on first-letter information Cosine similarity with probe vectors First-letter prediction task: Comparing probe- and prompt-based results Mechanistic speculation Removing character-level information by "subtracting probe directions" Projection: First-letter information still largely present Reflection: First-letter information begins to fade Pushback: First-letter information reliably disappears Probe-based prediction after pushback with k = 5 Prompt-based predictions as k varies Interpretation Preservation of semantic integrity Semantic integrity is often preserved for large k Semantic decay Introducing character-level information by "adding probe directions" Examples of first-letter probe addition Compensating for variations in probe magnitude Increasing and decreasing the scaling coefficient Future directions Specific next steps Broader directions Appendix A: Probe magnitudes Appendix B: Semantic decay phenomena Examples of semantic decay token: ' hatred' token: ' Missile' token: 'Growing' token: 'write' Recurrent themes Glitch tokens None 4 comments

This post was primarily authored by MW, reporting recent research which was largely directed by suggestions from and discussions with JB. As secondary author, JB was responsible for the general shape of the post and the Future Directions [? · GW] section.

Work supported by the Long Term Future Fund (both authors) and Manifund (JB).

Links: Github repository, Colab notebook

TL;DR

Previous results have shown that character-level information, such as whether tokens contain a particular letter, can be extracted from GPT-J embeddings with non-linear probes. We [LW · GW] show that linear probes can retrieve character-level information from embeddings [? · GW] and we perform interventional experiments to show that [? · GW]this [LW · GW] information is used by the model to carry out character-level tasks [? · GW]. This sheds new light on how "character blind" LLMs are able to succeed on character-related tasks like spelling. As spelling represents a foundational and unambiguous form of representation, we suspect that unraveling LLMs' approach to spelling tasks could yield precise insights into the mechanisms whereby these models encode and interpret much more general aspects of the world.

Building on much previous work in this area, we look forward to extending our understanding of linearly encoded information in LLM embedding spaces (which appears to go far beyond mere character-level information). This may include learning how such information (i) emerges during training ; (ii) is predictive of the abilities of different models; and (iii) can be leveraged to better understand the algorithms implemented by these models.

Letter presence: Linear probes vs MLPs

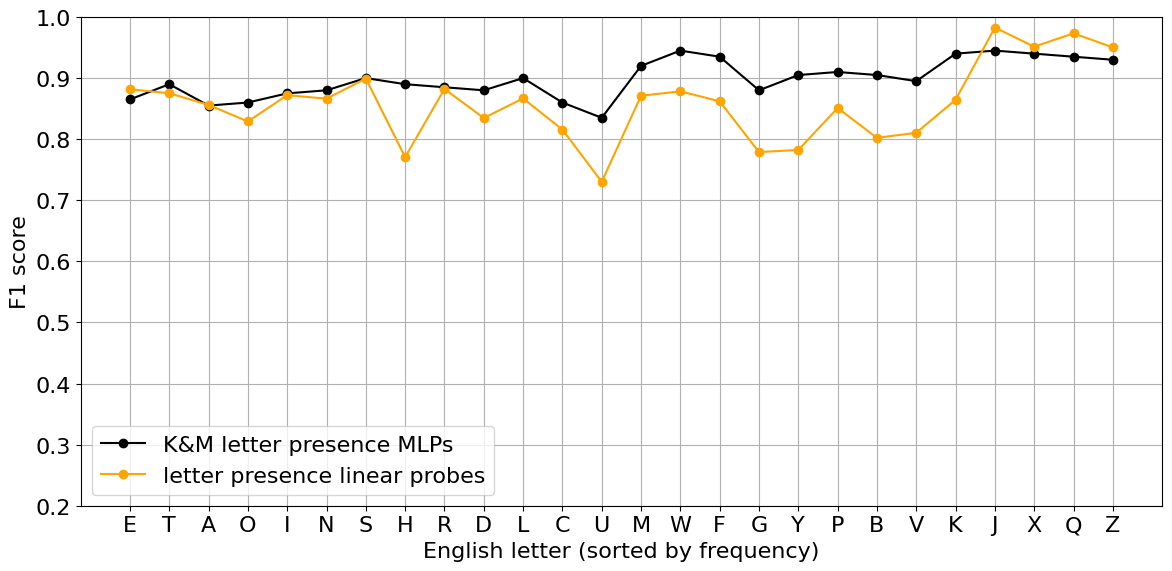

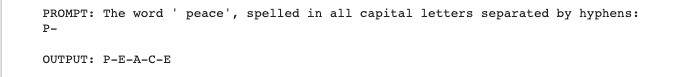

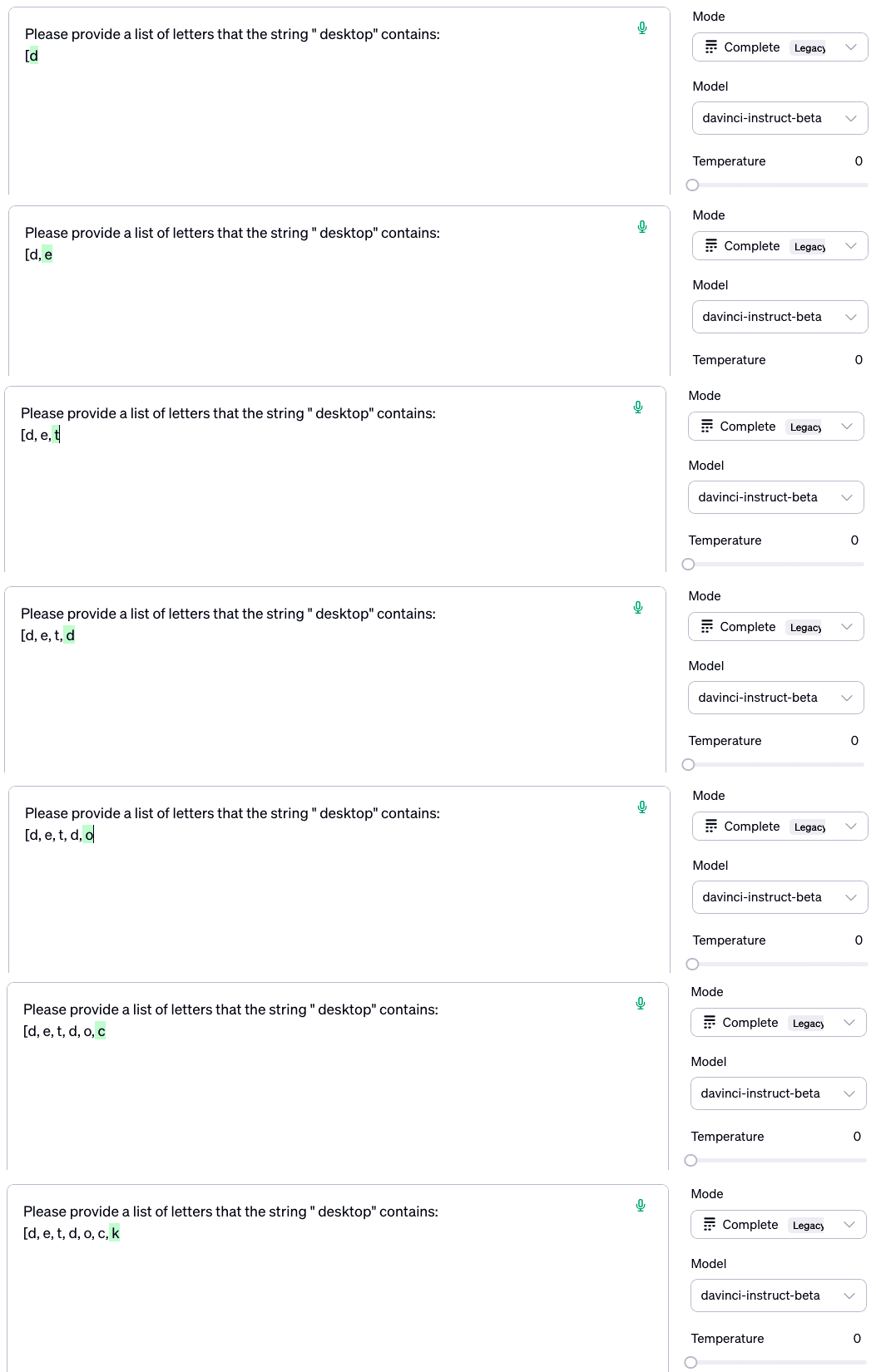

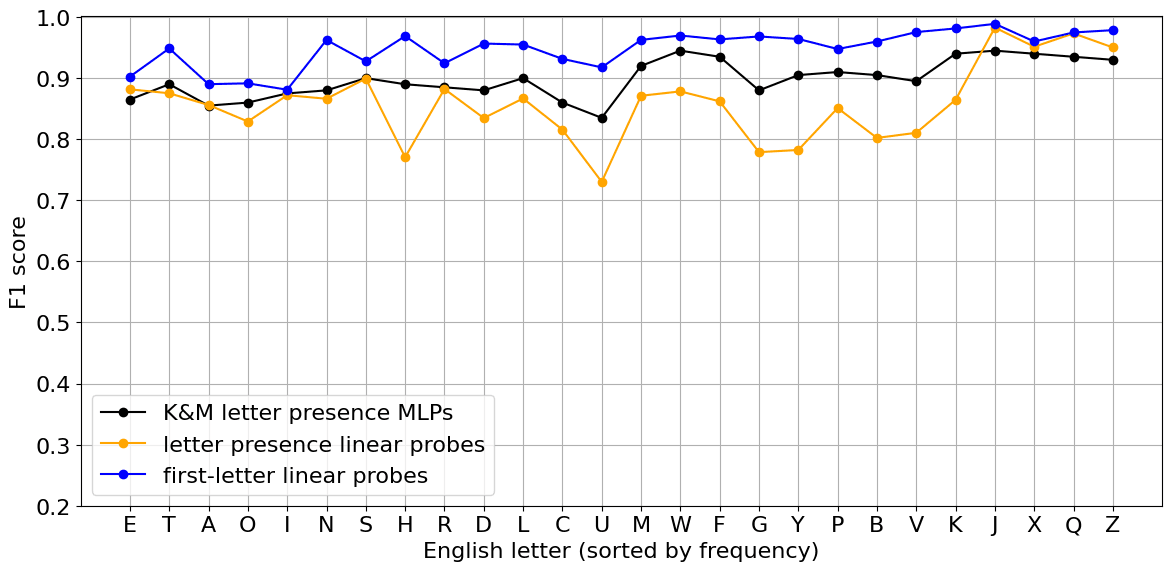

The so-called "spelling miracle"[1] is the fact that language transformers like GPT-3 are capable of inferring character-level information about their tokens, as discussed in an earlier post [LW · GW]. Among the very sparse literature on the topic, Kaushal & Mahowald's "What do tokens know about their characters and how do they know it?" (2022) describes probe-based experiments showing that (along with other LLMs) GPT-J's token embeddings nonlinearly encode knowledge of which letters belong to their token strings. The authors trained 26 MLP probes, one to answer each of the binary questions of the form "Does the string '<token>' contain the letter 'K'?" Their resulting classifiers were able to achieve F1 scores ranging from about 0.83 to 0.94, as shown in the graph below.

One might imagine that these authors would have first attempted to train 26 linear probes for this set of tasks, as so much more could be accomplished if this character-level information turned out to be encoded linearly in the token embeddings. As they make no mention of having done so, we undertook this simple training task. The linear probe classifier F1 results we achieved were surprisingly close to those of their MLP classifiers. This was without even attempting any serious hyperparameter tuning.[2]

Understanding linear probes in token embedding space

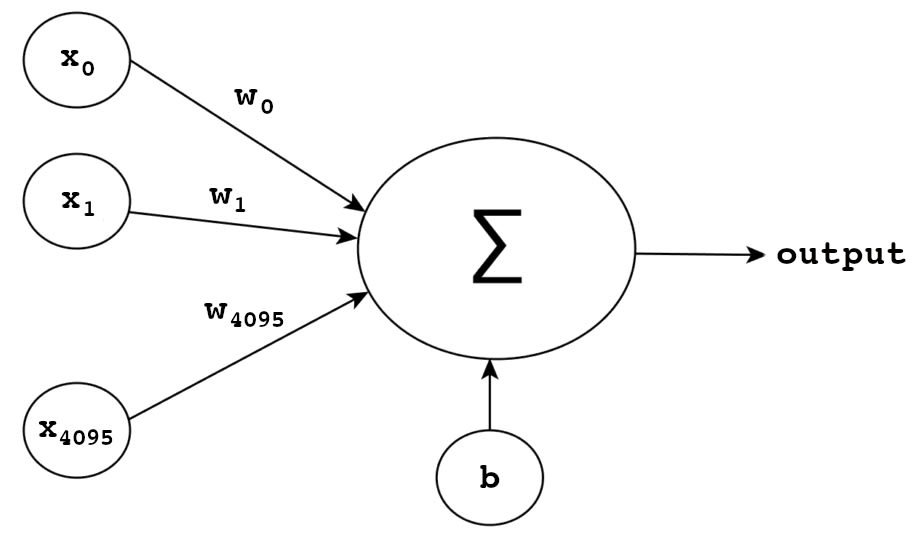

Here's a useful clarification for readers less familiar with linear probing: Each of the 26 probes (taking 4096-d GPT-J token embeddings as their inputs) is an extremely simple neural network consists of just an input layer with 4096 neurons, and a single-neuron output layer. It effectively carries out a linear transformation, mapping vectors from the token embedding space to scalar values:

Crucially, the 4096 learned weights that (along with the bias b) define such a linear probe can then be interpreted as constituting a vector in the embedding space. It's the direction of this vector in embedding space that is of most interest here, although the magnitudes of these probe vectors are also a subject of investigation (see Appendix A [? · GW]). The binary classifier learned by the probe can be understood as an algorithm (basically a forward pass) which acts on an embedding by taking the dot product with the probe weight vector and adding the scalar bias . The resulting positive or negative value then provides a positive or negative classification of

In simple geometric terms, the more that an embedding vector aligns with the probe vector , the more likely it is to be positively classified. Here we will be using cosine similarity as our metric for the extent of this alignment (perfect alignment , orthogonality , diametric opposition ).

Geometrically, the direction of the probe weight vector defines a hyperplane in the 4096-d embedding space: 's orthogonal complement , the subspace of all vectors orthogonal to . That hyperplane intersects the origin, and the role of of the scalar bias is to displace it units in the direction, to produce the decision boundary for the simple classification algorithm that the linear probe learned in training.

Linear probes and GPT spelling capabilities

The unexpected success of our 26 "letter presence" linear probe classifiers tells us that the presence of, say, 's' or 'S' in a token correlates with the extent to which that token's embedding vector aligns with the learned "S-presence" probe vector direction. This suggests the possibility that GPT-J exploits various directions in embedding space as part of its algorithm(s) for producing (often accurate) spellings of its token strings and accomplishing other character-level tasks. In order to investigate this possibility, both prompt- and probe-based approaches to spelling-related tasks are required.

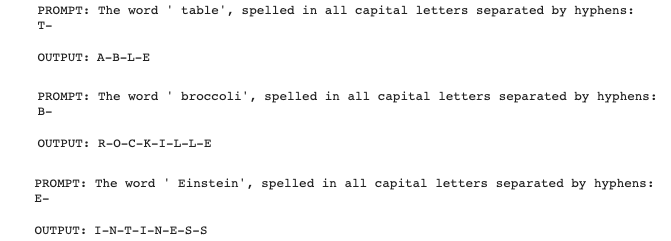

A separate, multishot prompt was first used to elicit the first letter of each token; the penultimate tokens in the follow-up prompts seen here ('T', 'B', 'E') were conditioned on those.

Probe-based methods don't not require access to the model, only to the token embeddings and any relevant learned probe weight vectors (or just their directions).

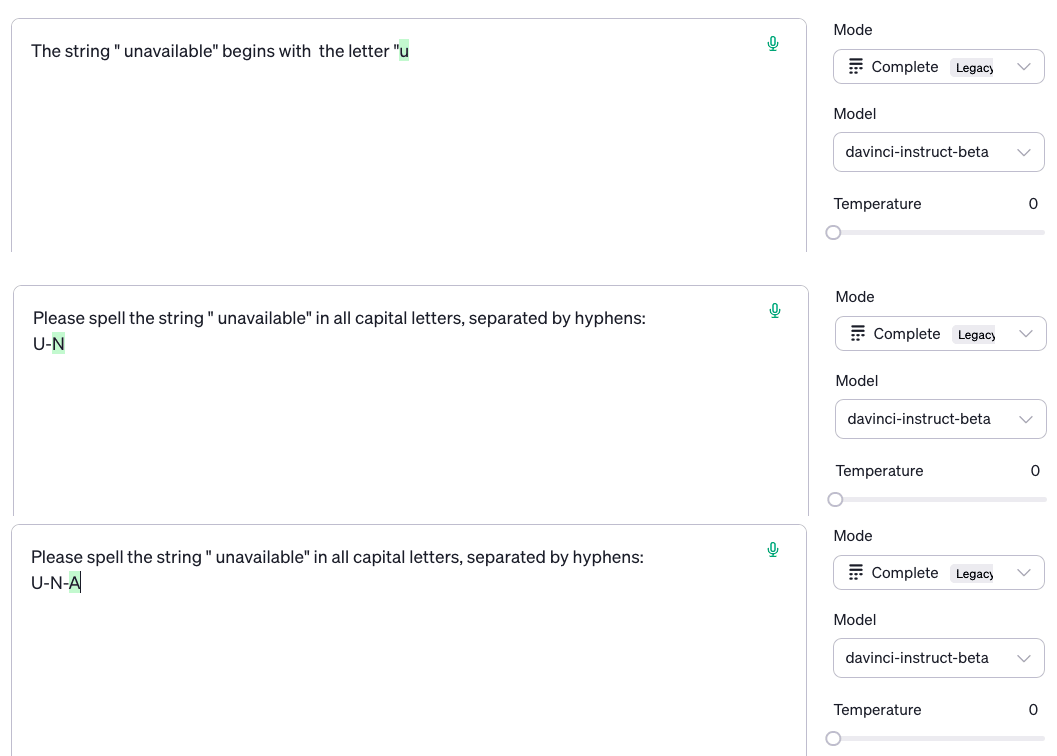

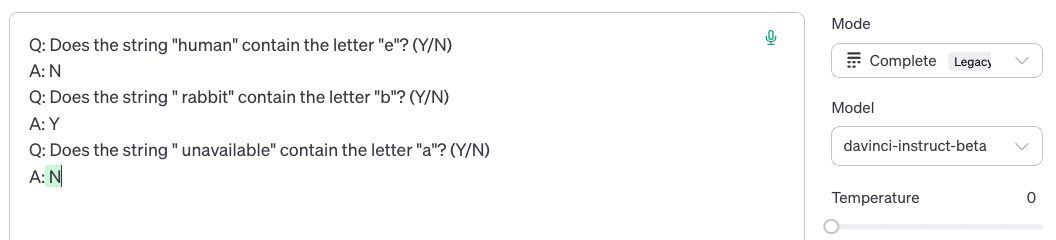

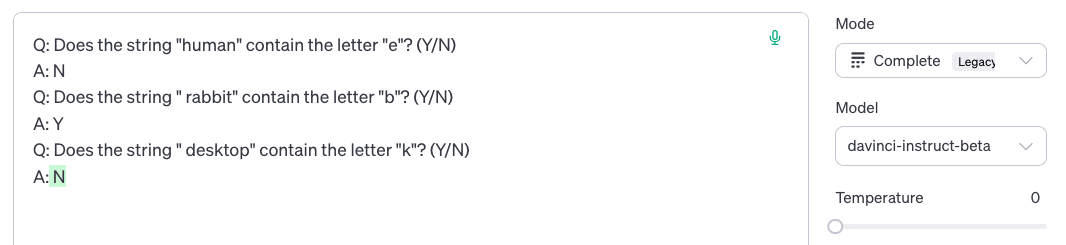

Prompt-based methods, on the other hand, involve forward passing through the model carefully designed prompts involving the token (or various mutations of its embedding) and then looking at the output logits. With a view to eventually applying mechanistic interpretability methods such as ablating attention heads and path patching, it's important that (as with the Redwood IOI research) these prompting methods should only involve a single forward pass. In other words, prompt-based tasks must be accomplished by the generation a single output token (unlike the examples illustrated immediately above). An obvious approach to the "letter presence" task, given this constraint, would be to use use multi-shot GPT-J prompts like this:

Q: Does the string "human" contain the letter "e"? (Y/N)

A: N

Q: Does the string " rabbit" contain the letter "b"? (Y/N)

A: Y

Q: Does the string " peace" contain the letter "g"? (Y/N)

A:The idea is to carry out a single forward pass of this (where the ' peace' token embedding, or whichever one takes its place, can be altered in various ways, or attention heads can be ablated, or paths patched) and then compare the respective logits for the tokens ' Y' and ' N' [3] as a way to elicit and quantify this character-level knowledge.

Obstacle: Multiple forward passes / chain of thought

Needing a control before any interventions were made, we ran the above prompt with various (token, letter) combinations replacing (' peace', 'g'). It was puzzling how badly GPT-J performed on the task, including when the number of shots was varied. It can often correctly spell the token in question when prompted to do so, or at least misspell it in such a way that includes the relevant letter, as is seen with the misspellings of "broccoli" and "Einstein" above. And prompting GPT-J to produce an unordered list of letters belonging to the token string also tends to successfully answer the question posed in the letter presence task. But the above prompting method leads to results not much better than guessing – the model tends to say "Y" to everything.

GPT-J displays evidence that it "knows" there's not a 'g' in " peace" when you ask it for a spelling or a list of letters...

...and yet it responds incorrectly to the two-shot prompt above. How can this be?

The answer may be related to the fact that asking GPT-J to spell a word, or even just to produce an unordered list of letters it contains, involves the iteration of multiple forward passes, allowing some kind of sequential operation or "chain of thought reasoning" to occur. This recalls the observation from the original Spelling Miracle post [LW · GW]:

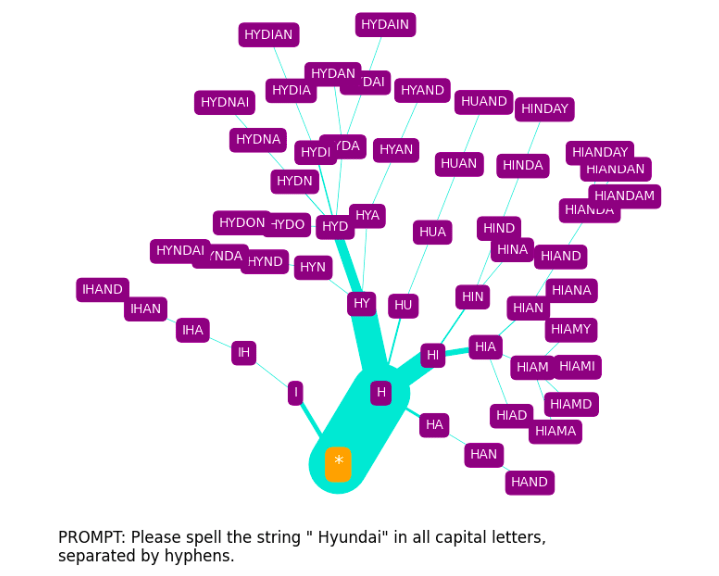

....a "spelling", as far as a GPT model is concerned, is not a sequence of letters, but a tree of discrete probability distributions over the alphabet, where each node has up to 27 branches {A, B, ..., Z, <end>}.

A GPT spelling can be understood in terms of a chain of prompts, each conditioned on the output of the previous one. The following example is suitable for a model like GPT-3 davinci-instruct-beta, whereas GPT-J, being a base model not trained to follow instructions, would require a multishot prompting approach easily adapted from this:

The knowledge of the letter "a" being present in " unavailable" is somehow inferred at some point in this multi-pass process, despite having defied all efforts to reliably access it with a single forward pass:

Likewise, in this example where letter order is no longer involved in the task (again, suitable for an instruct-type model, but easily adaptable to base models via multi-shot prompting), there's a sequence of steps which is seemingly being exploited to eventually predict a "K" or "k" token...

...which is not predicted with this single forward pass:

Solution: Focus on first-letter information

Regardless of the veracity of the "chain of thought" explanation, an inability to get decent letter-presence task results with single forward passes led to the decision to focus instead on the 26 analogous "initial letter" tasks. These involve classifying tokens not according to whether they contain, say, a 'k' or 'K', but whether they begin with a 'k' or 'K' (ignoring any leading space). Training the 26 relevant linear probes, again with no real hyperparameter tuning, led to even better F1 results:

The following multishot prompt template satisfies our requirement of eliciting GPT-J's first-letter knowledge with a single forward pass (i.e. a single-token output):

The string " heaven" begins with the letter "H"

The string " same" begins with the letter "S"

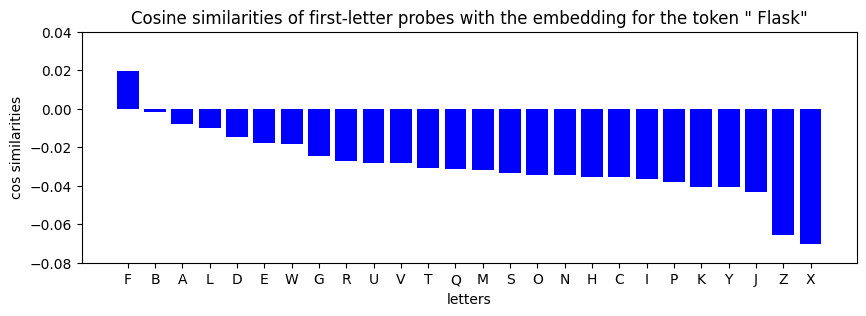

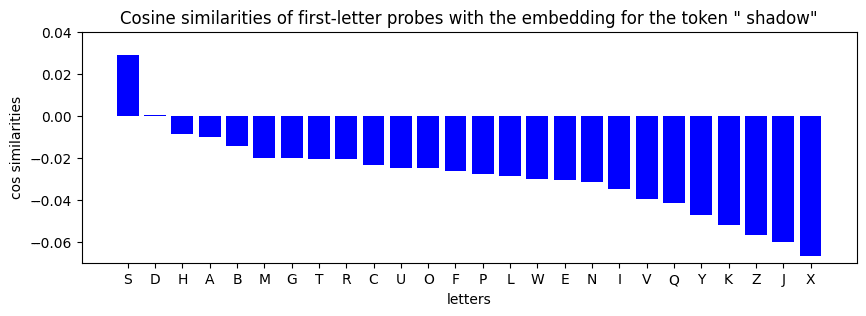

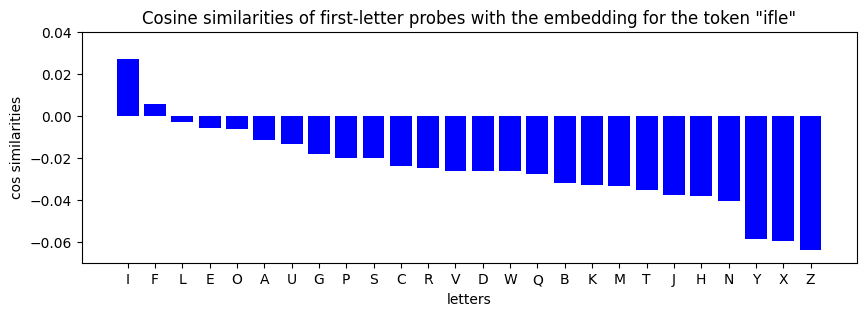

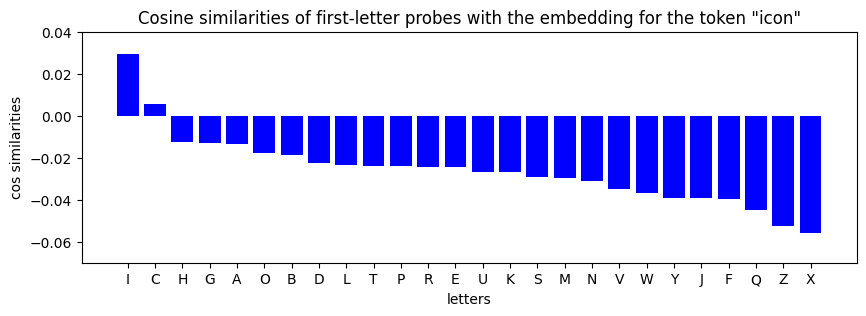

The string " peace" begins with the letter "However, we're no longer dealing with a binary question like "Does the string '<token>' contain the letter '<letter>'? Rather than binary classification, the task here is "predict the first letter in the string '<token>'". So, for an initial probe-based approach to this, rather than directly applying any of the 26 binary classifiers associated with our first-letter linear probes, we will simply compute the cosine similarities between each of the 26 probe vectors and the token embedding vector, and then choose the greatest. This will provide our prediction for the first letter of the token string.

In simple geometric terms, if the embedding vector is aligned with the 'P' first-letter probe vector to a greater extent than it is with any of the other 25 first-letter probe vectors, we will predict that 's token string begins with 'p' or 'P'.

Although originally just a matter of expedience, the move away from binary tasks turns out to be important, because the pattern of errors (in tokens where either prompt- or probe-based method predicts the wrong first letter) appears to reveal something about a mechanism involved in GPT spelling capabilities, as we shall see below.

Cosine similarity with probe vectors

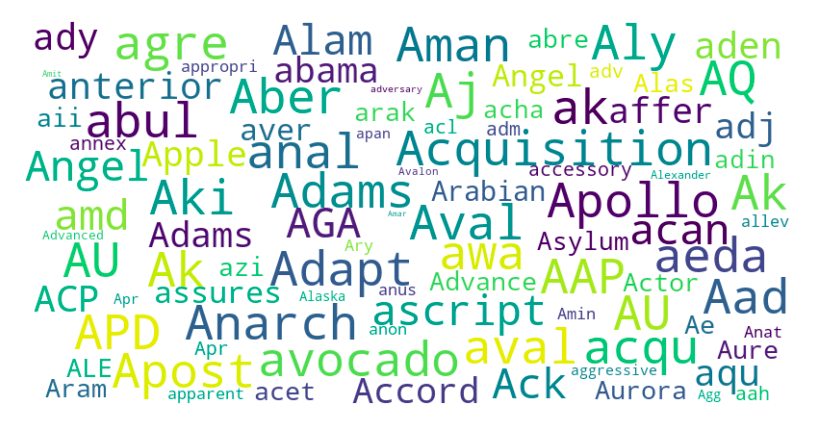

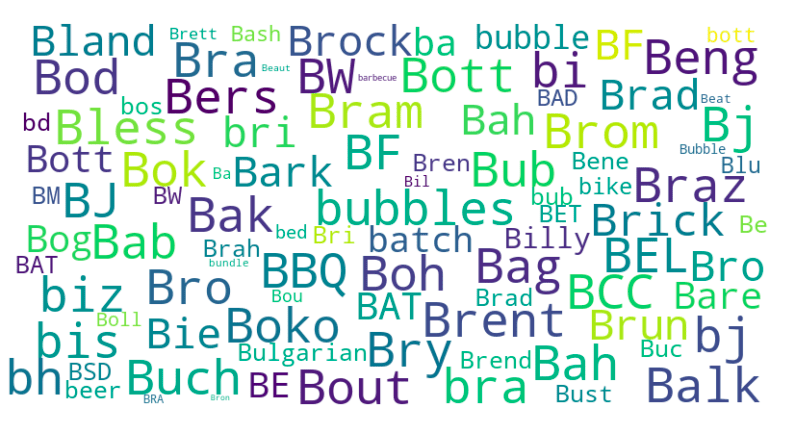

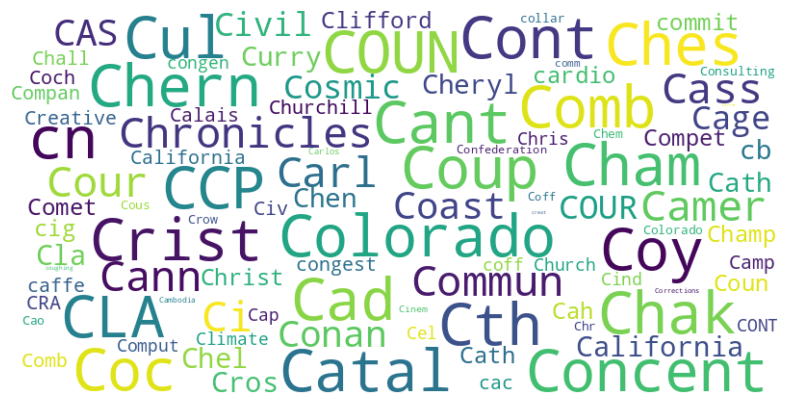

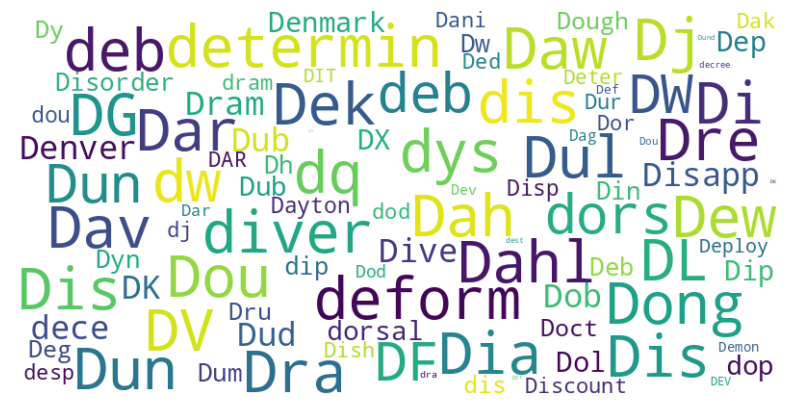

Reassuringly, for each first-letter probe vector, lists of tokens whose embeddings have the greatest cosine similarities with it will contain nothing but tokens beginning with the letter in question (here larger font size corresponds to greater cosine similarity and leading spaces are not included):

|  |

|  |

Depending on the letter, the first few hundred or thousand most cosine-similar tokens to the associated first-letter probe vector all begin with that letter:

{'A': 739, 'B': 1014, 'C': 1243, 'D': 1293, 'E': 362, 'F': 430, 'G': 1126, 'H': 461, 'I': 165, 'J': 470, 'K': 546, 'L': 1223, 'M': 1481, 'N': 867, 'O': 195, 'P': 1471, 'Q': 190, 'R': 1010, 'S': 2132, 'T': 1176, 'U': 346, 'V': 676, 'W': 697, 'X': 73, 'Y': 235, 'Z': 137}For example, if we arrange the entire set of tokens in decreasing order of their embeddings' cosine similarities with the first-letter probe vector for 'S', the first 2132 tokens in that list all begin with 's' or 'S'.[4] For comparative reference, here's how many tokens begin with each letter (upper or lower case):

{'A': 4085, 'B': 2243, 'C': 4000, 'D': 2340, 'E': 2371, 'F': 1838, 'G': 1334, 'H': 1531, 'I': 2929, 'J': 492, 'K': 555, 'L': 1612, 'M': 2294, 'N': 1032, 'O': 1935, 'P': 3162, 'Q': 200, 'R': 2794, 'S': 4266, 'T': 2126, 'U': 1293, 'V': 824, 'W': 1165, 'X': 78, 'Y': 252, 'Z': 142}

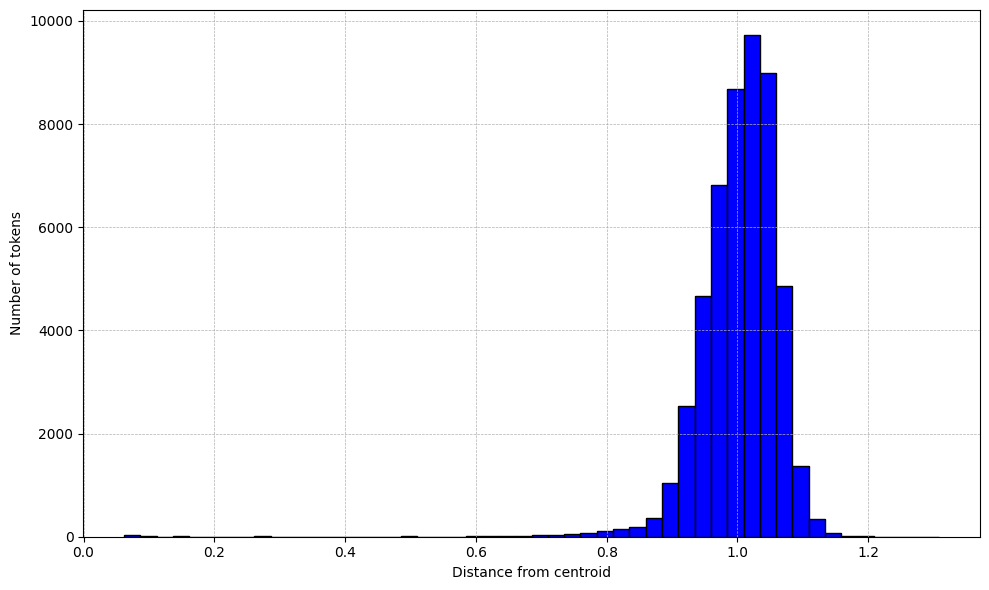

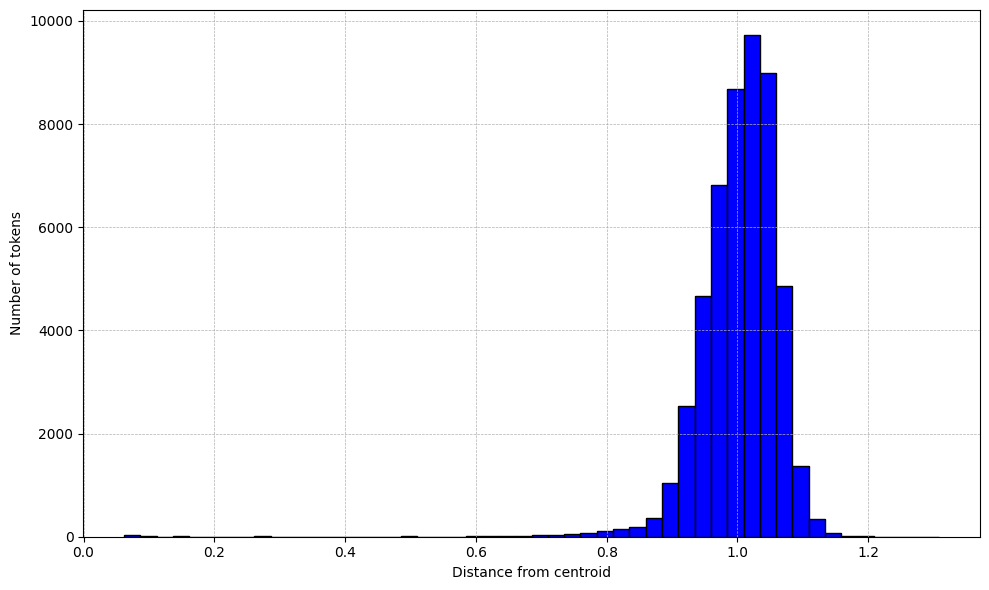

GPT-J tokens embeddings are distributed as a fuzzy hyperspherical shell in 4096-d space, whose centre (the mean embedding or "centroid") is of Euclidean distance 1.7158 from the origin, with the vast majority of embeddings having a Euclidean distance from this centroid between about 0.9 and 1.1.

Within this hyperspherical shell, we can imagine clusters of token embeddings with highest cosine similarity to a given probe vector/direction. So, for example, the first-letter probe vector/direction for 'K' is "cosine surrounded" by hundreds of embeddings belonging to tokens which begin with 'k' or 'K' (ignoring leading spaces).

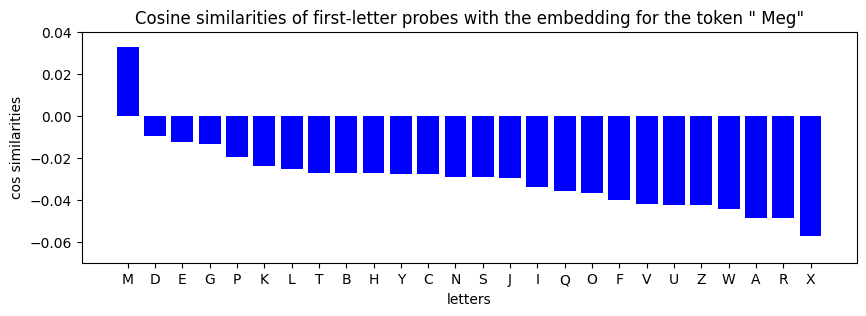

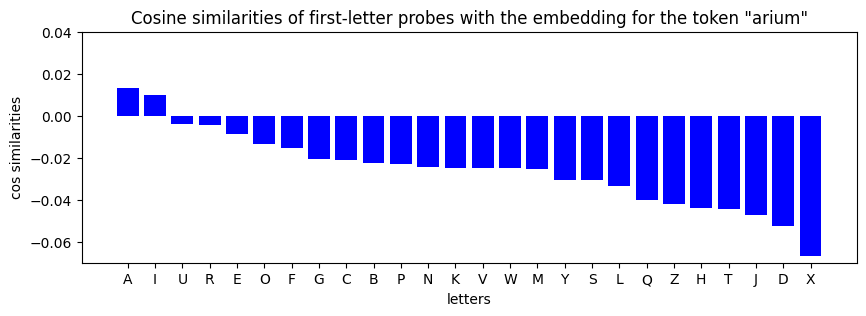

Repeatedly choosing a token and then ordering the first-letter probes according to their cosine similarities with its embedding (as shown in the bar charts below), we see that the top-ranking first-letter probe almost always matches the token's first letter, while the token's subsequent letters are often heavily represented among the other highly ranked first-letter probes. Also, for a majority of tokens, the largest cosine similarity is the only positive value of the 26. The positive and negative values are generally negligible, suggesting that a token embedding will tend to point very slightly towards the direction of the relevant first-letter probe vector, and very slightly away from all of the others (with perhaps one or two exceptions).

First-letter prediction task: Comparing probe- and prompt-based results

The probe-based method (choosing the greatest of 26 cosine similarities) was found to slightly outperform the prompt-based method on the first-letter prediction task. The latter involved zero-temperature prompting GPT-J with the following template:

The string " heaven" begins with the letter "H"

The string " same" begins with the letter "S"

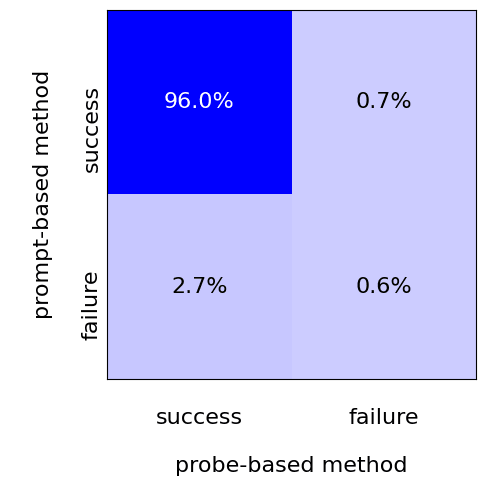

The string "<token>" begins with the letter "This outputs the correct letter for ~96.7% of tokens, while the probe-based method achieves ~98.7% accuracy.[5] The overlap between prompt- and probe-based failures is fairly small, as can be seen from this confusion matrix:

Regardless of this, the similarity of the two methods' accuracies can only add to the suspicion that GPT-J's ability to succeed on the prompt-based task is somehow dependent on its exploitation of the first-letter probe directions. After all, with access to the 26 directions, all that's needed to achieve GPT-J's level of predictive accuracy is the ability to take dot products and compare quantities.

Mechanistic speculation

Numerous experiments conducted thus far suggest that linear probes can be trained to (with varying degrees of success) classify tokens according to letter presence, first letters, second letters, third letters, etc., first two letters, first three letters, number of letters, numbers of distinct letters, capitalisation, presence of leading space and almost any other character-level property that can be described. Looking at lists of token embeddings with greatest cosine similarity to these learned probe vectors/directions, we find clusters displaying the property that the probe in question was trained to distinguish (hundreds or thousands of tokens with leading spaces, or starting "ch", or ending "ing", or containing "j", or starting with a capital letter). Access to an appropriate arsenal of these probe directions could easily form the basis of an algorithm capable of spelling with the kind of accuracy that's seen in GPT-J outputs.

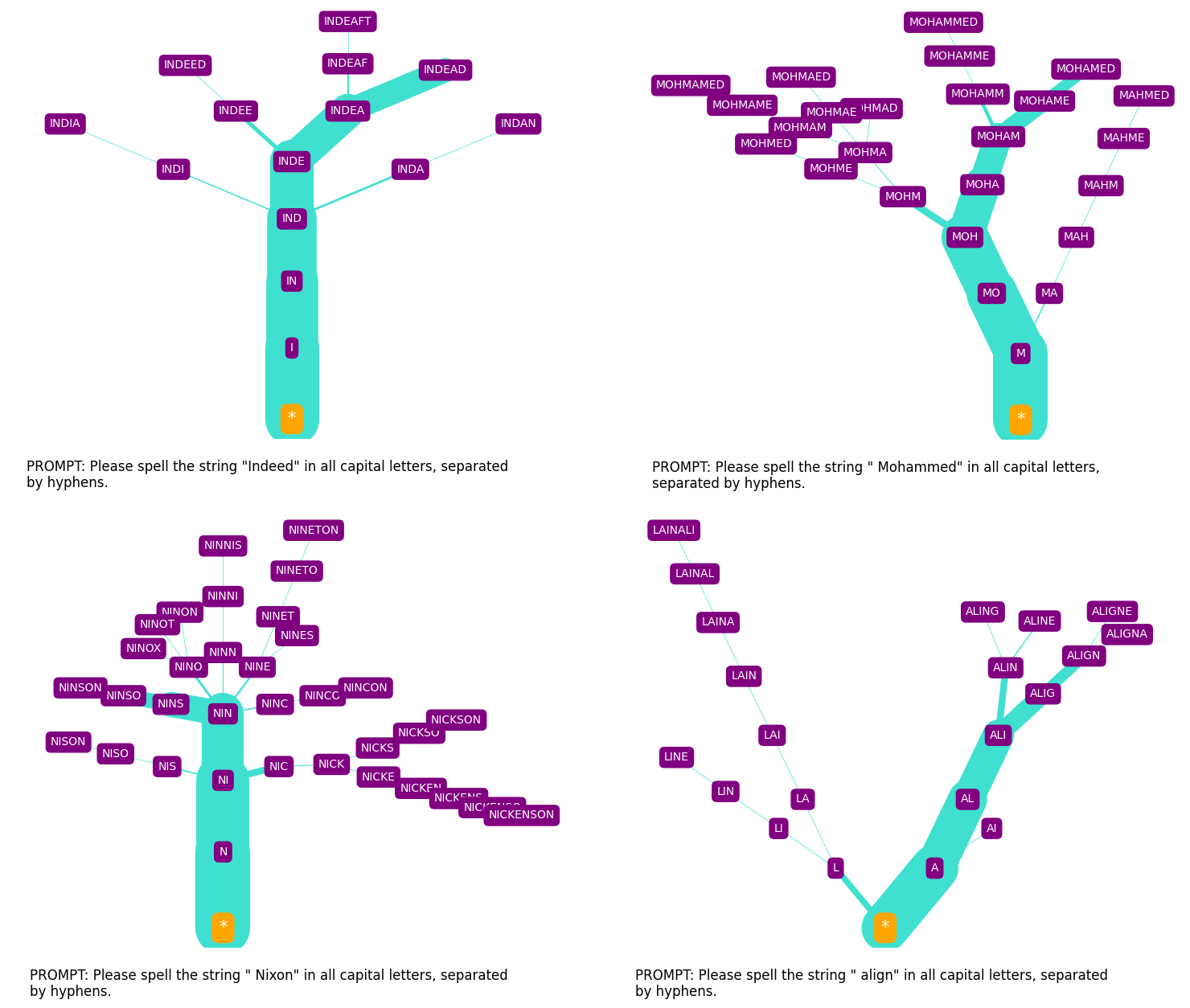

Looking at large numbers of GPT-3 "spelling tree" visualisations like the " Hyundai" one included above, one can perhaps sense the kind of fuzzy, statistical algorithm that this would likely be. Future work will hopefully explore the possibility that phoneme-based probe directions exist. If they do, their use in GPT spelling algorithms might account for the phonetically correct misspellings that are commonly seen in GPT-3's constrained spelling outputs [LW · GW] (here, for example, "INDEAD", "MOHAMED", "NICKSON", "ALINE"):

Of course, even if turns out to be the case that these linear probe directions are being exploited as hypothesised, that wouldn't explain away the "spelling miracle", but rather reformulate it in terms of the very broad question "By what mechanisms during training did the token embeddings arrange themselves geometrically in the embedding space so that this kind of probe-based inference is possible?"

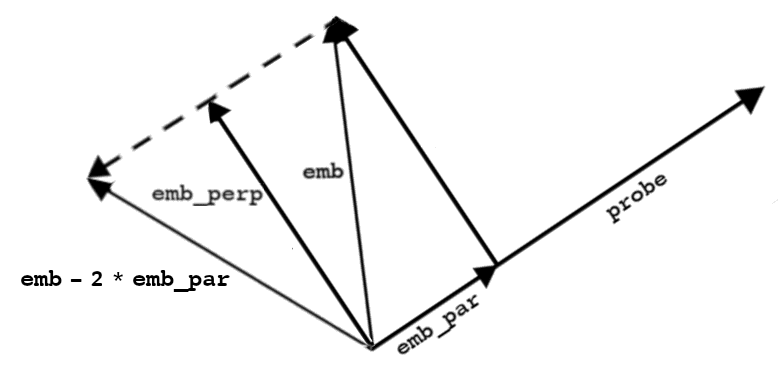

Removing character-level information by "subtracting probe directions"

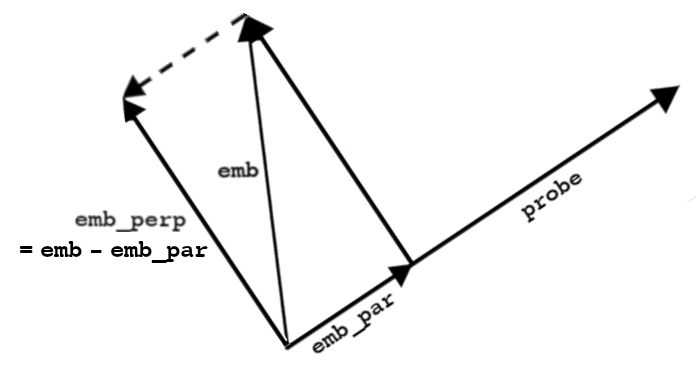

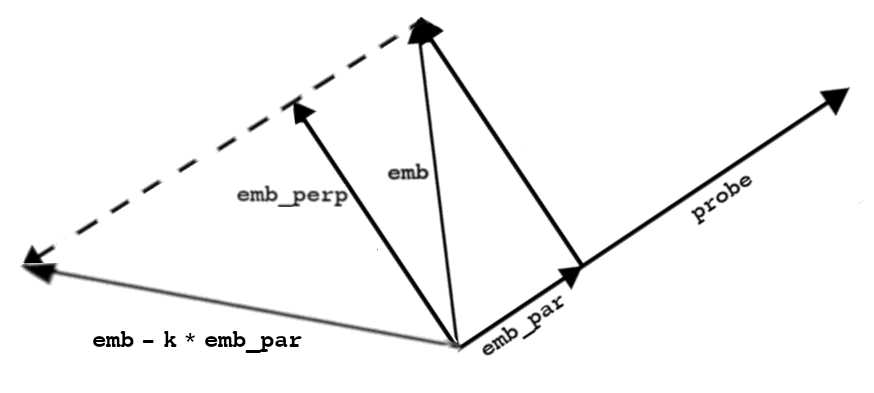

To explore the possibility that linear probe directions like the ones described above are involved in GPT-J spelling abilities, attempts were made to remove "first-letter information" from token embeddings by decomposing a given embedding vector into components parallel and orthogonal to the relevant first-letter probe, then subtracting the parallel component.

emb_perp lies in the orthogonal complement of probe

Note that by doing this, we're orthogonally projecting the vector into the vector's orthogonal complement, that hyperplane/subspace consisting of all embedding vectors orthogonal to the probe vector.

The set of 50257 tokens was filtered of anything that didn't consist entirely of upper or lower case letters from the English alphabet (and a possible leading space), leaving 46893 "all-Roman" tokens, as they shall hereafter be called. For over 1000 randomly sampled all-Roman tokens, this orthogonal projection was performed on their embedding , and the result, , was inserted into the following prompt template:

The string " heaven" begins with the letter "H"

The string " same" begins with the letter "S"

The string "<emb_perp>" begins with the letter "As a concrete example, the token ' peace' has its embedding vector decomposed into the sum of vectors parallel and perpendicular to the 'P' first-letter probe vector , then the parallel component is subtracted. The resulting embedding then necessarily has a dot product of zero with the probe vector, i.e.

All 26 of the first-letter linear probes which were trained have a negative bias. This is presumably due to data imbalance (if we look at the largest of the "first letter" classes, there are 4266 tokens beginning with 'S' or 's' and 42627 tokens not beginning with 'S' or 's', a ratio of 10:1). In any case, it means that the classifier learned for a given first letter will classify an appropriately projected as not beginning with that letter, since and the sign of is what determines the classification of an embedding .

Projection: First-letter information still largely present

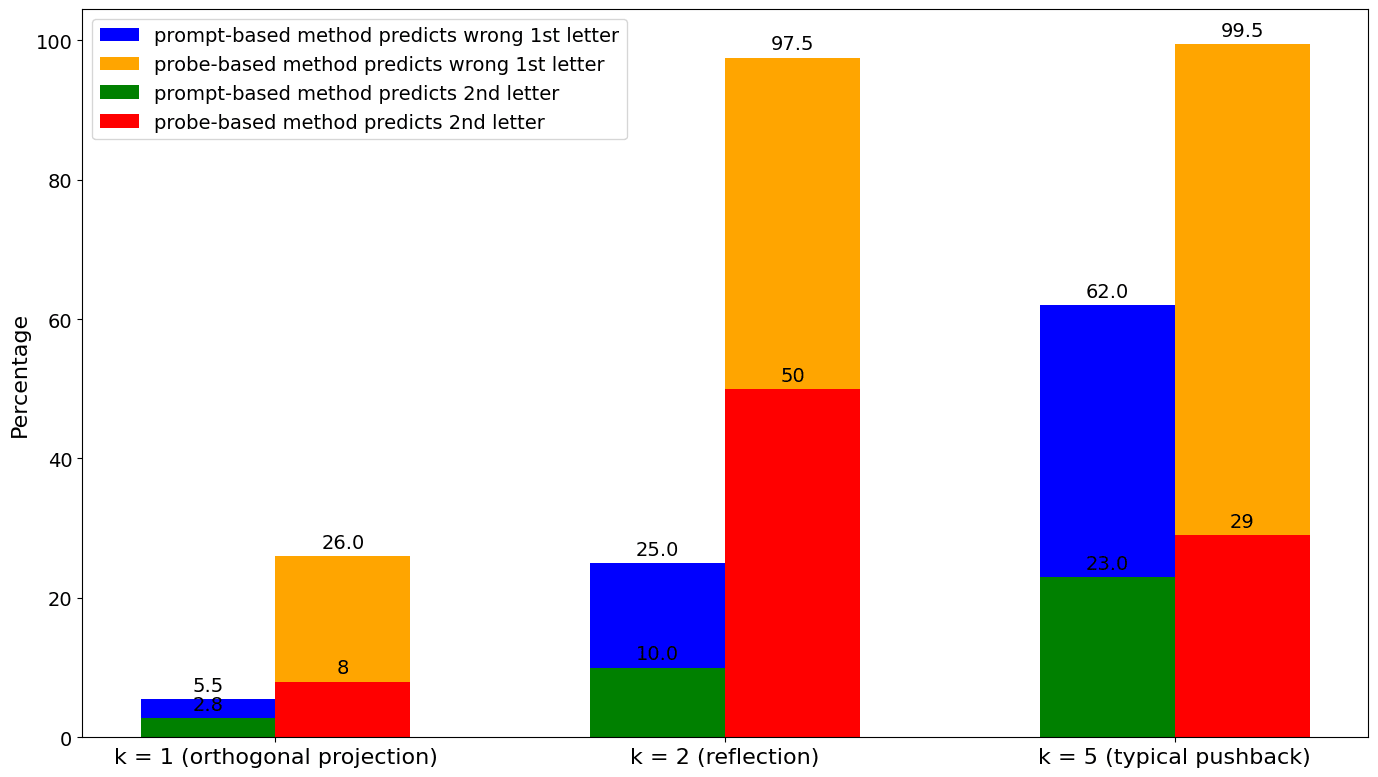

Perhaps surprisingly, for ~94.5% of tokens, GPT-J is still able to predict the correct first letter after this orthogonal projection of their embeddings. Promisingly, though, for half of the ~5.5% of tokens for which it predicts the wrong first letter, that incorrect prediction matches the token's second letter.

This suggests that first-letter probe directions are somehow exploited by GPT-J's first-letter prediction algorithm, but the associated classifiers that were learned in its training – those whereby an embedding is positively classified as beginning with the relevant letter iff – are not, otherwise 100% of these orthogonally projected embeddings would be predicted as not beginning with the letter, rather than a mere 5.5%.

So, is GPT-J using something like the simple "most cosine-similar probe" method introduced earlier? Recall from above that the first letter of a randomly sampled token can be predicted with ~98.7% accuracy by identifying which of the 26 first-letter probe vectors has the greatest cosine similarity with the token embedding.

This speculation is supported by a simple experiment over the entire set of all-Roman tokens. After orthogonally projecting a token embedding to (using the probe direction associated with the token's first letter), in 74.4% of cases, the first-letter probe with the greatest cosine similarity to remains the one matching the token's first letter. These are tokens like " Meg", where the "correct" first-letter probe (here 'M') is the only one to have a positive cosine similarity with the embedding, so even when reduced to zero by the orthogonal projection, it remains the greatest of the 26 values:

But for the remaining 25.6%, where the "most cosine-similar probe" method predicts the incorrect first letter after orthogonal projection, we can examine the pattern of errors:

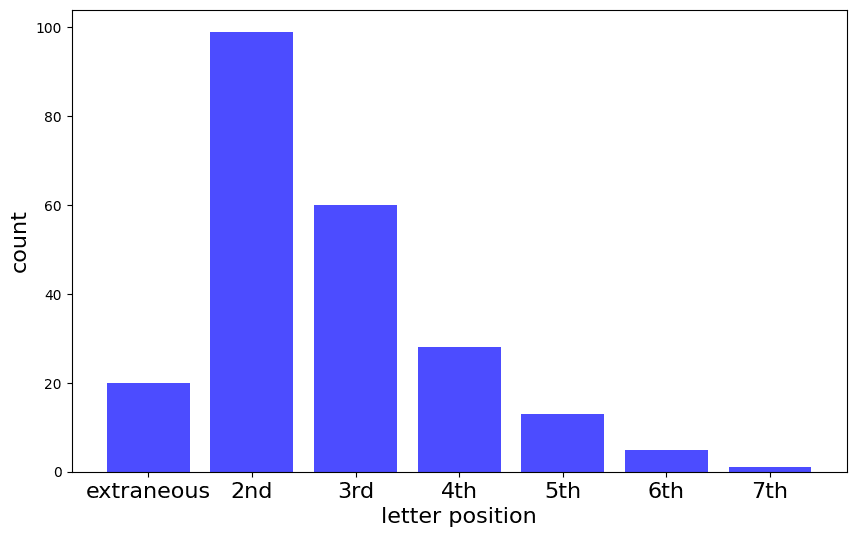

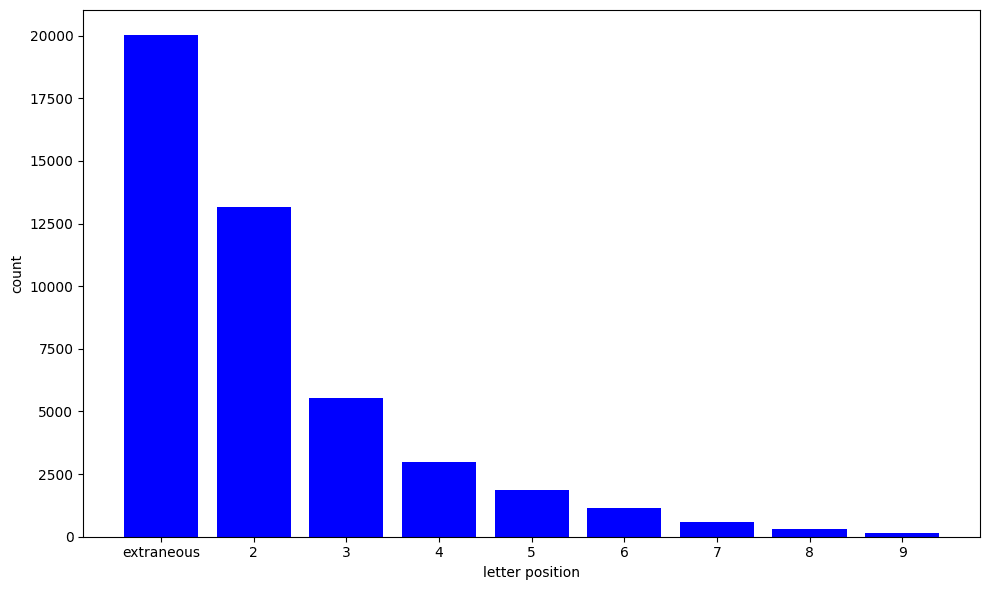

Over the entire set of 12005 (25.6% of the all-Roman) tokens where probe-based prediction gives the wrong first letter after orthogonal projection, these mis-predictions break down as follows:

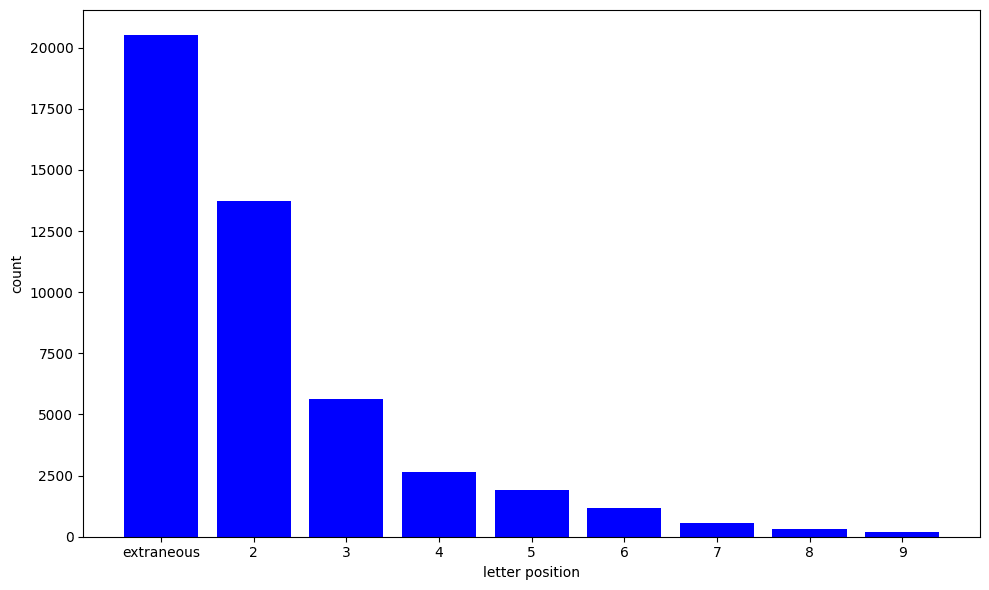

So you can "remove all the P-direction" from the ' peace' token embedding (for example) using orthogonal projection, but 3/4 of the time, the resulting embedding will still have greater cosine similarity with the relevant first-letter probe (in this case 'P') than with any of the 25 others (the former will be zero, the latter will all be negative). However, in the 1/4 of cases where this isn't the case, the new closest first-letter probe has a 2/3 probability of matching another letter in the token, with second position by far the most likely and a smoothly decaying likelihood across subsequent letter positions.

Reflection: First-letter information begins to fade

If GPT-J is indeed predicting first letters of tokens by identifying first-letter probes with greatest cosine similarity to their embeddings, then to reliably "blind it" to the first letter of a token, we need to geometrically transform the token's embedding to something whose cosine similarity with the relevant first-letter probe is less than 0.

The idea is to "subtract even more of the probe direction" from the token embedding, like this:

Instead of orthogonally projecting the embedding vector into the orthogonal complement of the probe, we reflect the embedding vector across it. The result will have a negative cosine similarity with the vector.

This produces better results. Running prompts with these "reflected" embeddings inserted, 25.2% of tokens now have their first letter wrongly predicted by the GPT-J prompt output (as opposed to just 5.5% for "projected" embeddings). 91% of these mis-predictions match another letter in the token (most likely the second), as seen here, suggesting that this reflection operation can often "blind the model" to the true first letter in the token:

Running a probe-based experiment to predict first letters after performing this reflection on the entire set of all-Roman token embeddings, we find that 97.5% of tokens now have their first letter wrongly predicted (i.e., the first-letter probe with greatest cosine similarity to the reflected embedding no longer matches the token's first letter). 29% of these mis-predictions match the token's second letter and again we see a similarly shaped distribution across letter positions as was seen for the prompt-based prediction method.

The probability of an extraneous letter being predicted by the "most cosine-similar probe" method has now gone up from 34% (seen for orthogonal projection) to 44%, but the remaining probability mass is distributed across the letter positions according to a similar rate of decay.

Pushback: First-letter information reliably disappears

The natural next step is to replace the factor of 2 in by an arbitrary scaling factor k, so that corresponds to:

- the original embedding when k = 0;

- the orthogonal projection when k = 1;

- the orthogonal reflection when k = 2;

- further "pushing back" away from the probe direction for k > 2:

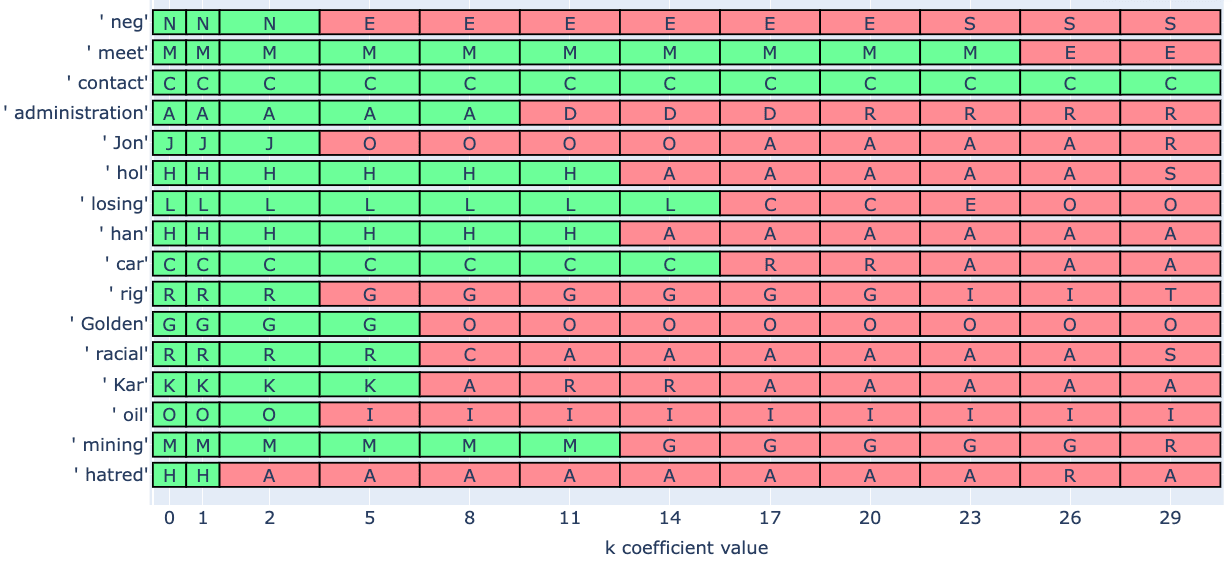

At this point we start to see significant results. Running prompting experiments on randomly sampled tokens where the coefficient k was set to each of 0, 1, 2, 5, 8, 11, 14, 17, 20, 23, 26, 29[6]produced outputs like these:

We see that mutating the ' neg' token embedding by orthogonal reflection across the 'N'-probe’s orthogonal complement (i.e., using k = 2) does not change the correct first letter prediction, but if we push the embedding back further by increasing k to 5, GPT-J switches its prediction to 'E', the second letter. Likewise, mutating the ' administration' token embedding with k = 11 switches the prediction from 'A' to 'D' and mutating the 'han' embedding with k = 14 switches the prediction to 'A'. The token ' hatred' is an example of the 25.2% of tokens for which GPT-J switches its prediction of the first letter as soon as k = 2 (and one of the 10.3% where it switches to predicting the actual second letter of the token).

We can see that in the case of ' racial', when k = 8 the first letter prediction becomes 'C', the third letter. For ' car' we have to increase k to 17 to switch the prediction, at which it becomes 'R', again the third letter.

The first letter prediction for ' contact' stays 'C' across the range of k values tested for. For ' losing', the first change occurs at k = 17 where we get 'C', a letter extraneous to the token.

It seems that for sufficiently large values of the coefficient k, we can push back a token embedding in the opposite direction of the relevant probe vector and cause GPT-J prompt outputs to mis-predict the first letter. The necessary value of k varies from token to token, and usually exceeds what is necessary for the probe-based method to begin mis-predicting the token's first letter.

Probe-based prediction after pushback with k = 5

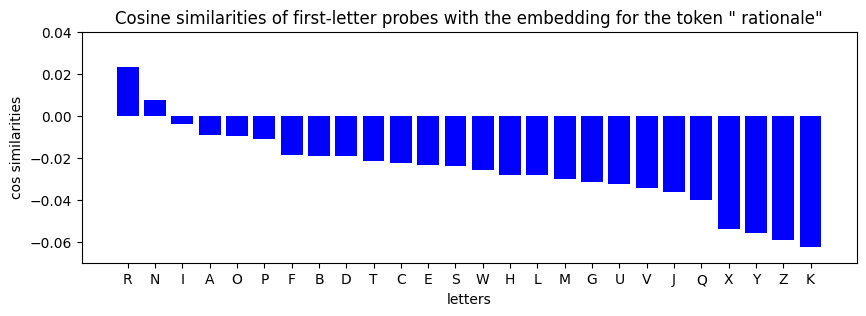

Looking at probe-based predictions on the entire set of all-Roman tokens after applying the geometric "pushback" transformation with k = 5, we find that only 0.5% of tokens having their first letter correctly predicted now (bear in mind that random guessing would score ~4%). Pushing back that far and thereby approaching minimum possible cosine similarity with the relevant first-letter probe vector seems to almost guarantee that the model will not predict the correct first letter. Its mis-predictions for the first letters of tokens distribute like this:

The probability of an extraneous letter being predicted by the probe method has remained at 44% (as it was for k = 2), and again we see a similar rate of decay for subsequently positioned letters matching the mis-prediction, with the second letter matching it in 52% of these cases.

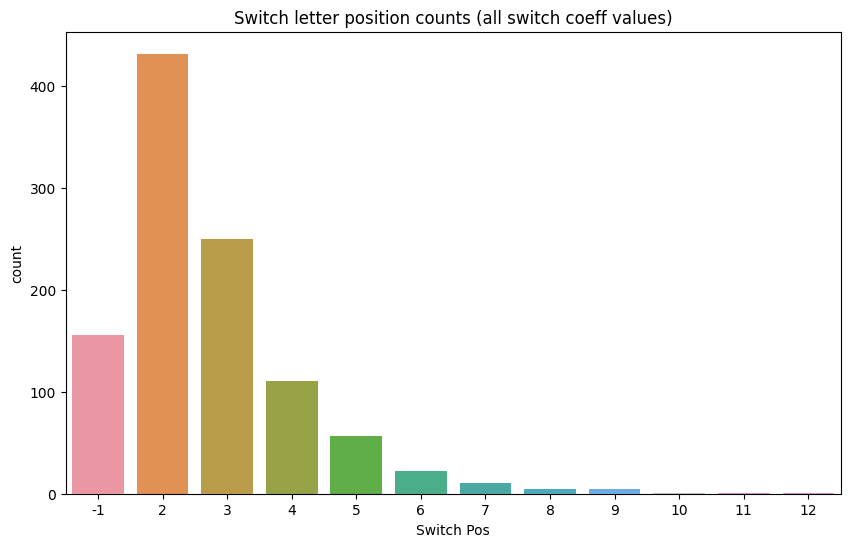

Prompt-based predictions as k varies

The following bar chart shows results for 1052 tokens (sampled randomly from the subset of all-Roman tokens), where GPT-J prompts were run on pushed-back embeddings for k values 0, 1, 2, 5, 8, 11, 14, 17, 20, 23, 26 and 29. Again, the 2-shot prompt template was this:

The string " heaven" begins with the letter "H"

The string " same" begins with the letter "S"

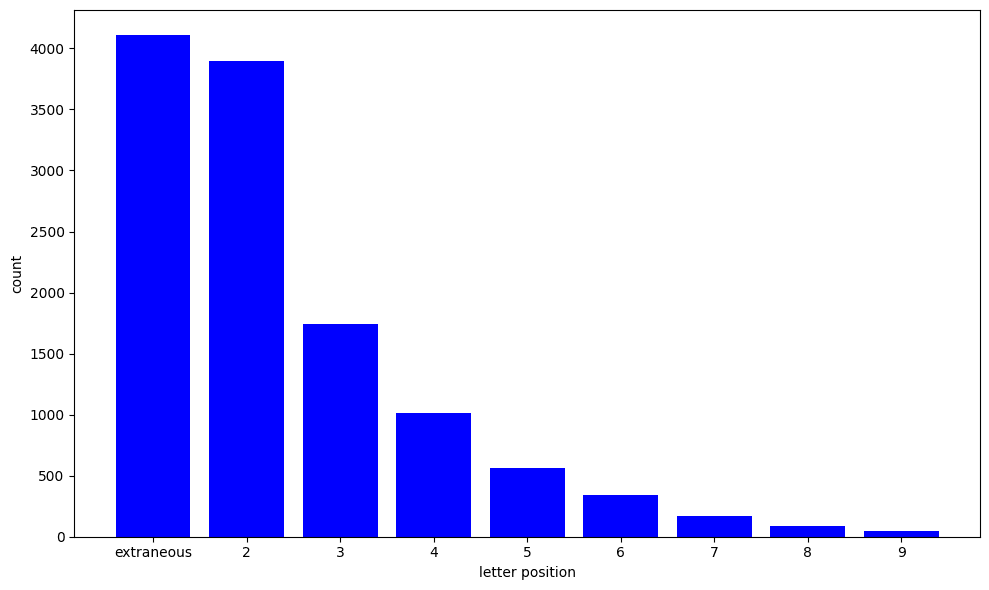

The string "<emb*>" begins with the letter "We see that for ~42% of these tokens, at the smallest tested k value for which GPT-J switches first-letter prediction (i.e. where the green rectangles turn red in the diagram above), the new prediction matches the token's second letter. The drop-off for letters deeper into the token string follows the same kind of decay curve we've seen for both prompt- and probe-based predictions, roughly halving with each subsequent letter position. For only 14% of the tokens, the new prediction is a letter extraneous to the token (the column labelled "–1").

6th letter: 2%; 7th letter: 1.1%; 8th letter: 0.4%; letter extraneous to token: 13.7%

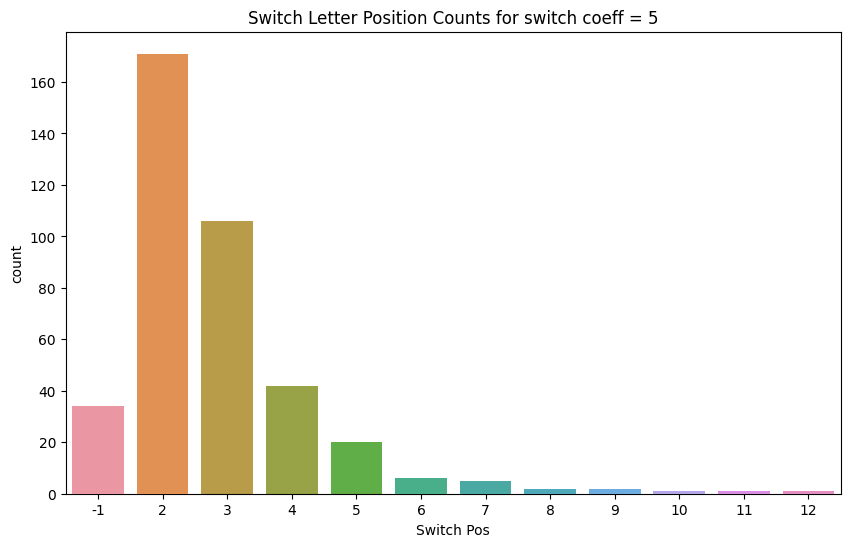

Further bar charts were produced from the same data, but counting "switch letter positions" for specific (rather than all) k values. These all showed the same kind of decay curve. Here's the chart for k = 5:

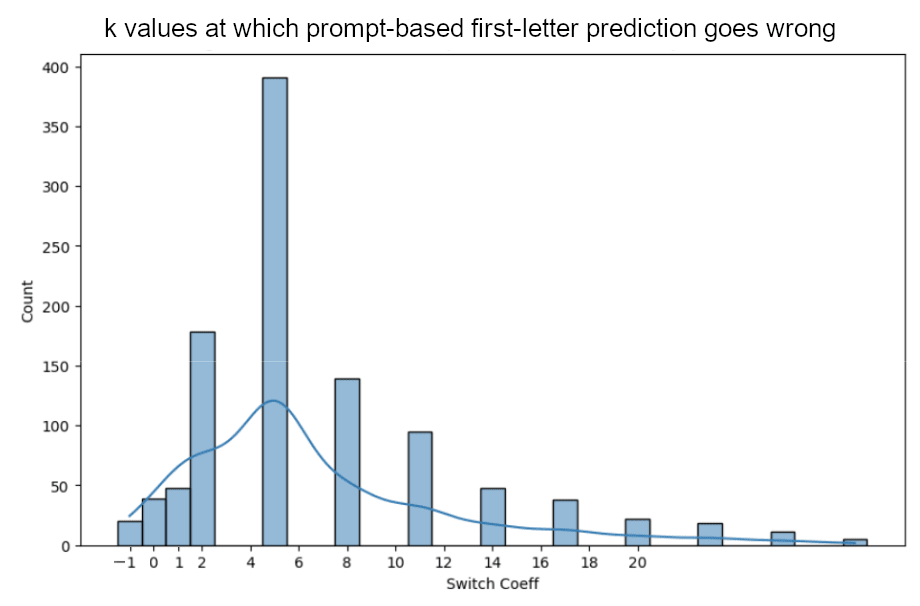

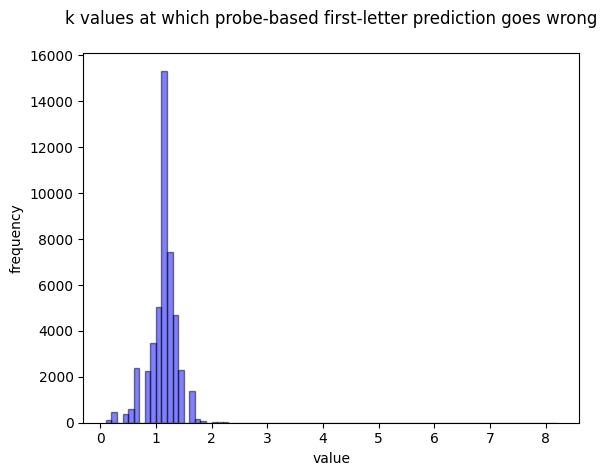

The following histogram shows the smallest k coefficient at which GPT-J ceases to predict the correct first letter (the bin marked "–1" counts those tokens where no switch was observed, i.e. the correct first letter continues to be predicted up to k = 29). In simplest terms, this can be thought of as showing "how far you have to 'push back' token embeddings to blind GPT-J to their first letter":

Further histograms were produced from the same data, but instead counting tokens where the "switched" first-letter prediction corresponds to the nth letter of the token, for the same range of k values (a histogram for each of n = 2, 3, 4, 5, 6). Again, these all display a similarly shaped curve. Generally, a k value of around 5 seems to be optimal for "blinding the model to the first letter of a token", regardless of what it then ends up "thinking" is the first letter.

An analogous but more fine-grained[7] histogram for probe-based first-letter prediction was easily produced for the entire all-Roman token set. This echoes the prompt-based histogram, but rescaled horizontally by a factor of about 1/4.

Interpretation

The similar decay curves and histograms seen for prompt- and probe-based approaches to first-letter prediction suggest that cosine similarities with first-letter linear probe vectors are a major factor in the algorithm GPT-J employs to predict first letters of tokens. But consider the discrepancies we've seen in how first-letter predictions go wrong as we increase our scaling coefficient k:

Probe-based prediction goes wrong a lot faster as we increase k and push the embedding back from the relevant first-letter probe direction. Consequently, k can take values such that the "correct" first-letter probe is no longer the closest one to the pushed-back embedding, but GPT-J still correctly predicts the first letter of the token. On average, these k values can get about four times larger than the point at which the probe method fails. This suggests that GPT-J's first-letter prediction algorithm makes use of cosine similarities with first-letter linear probe vectors, but must also involve other mechanisms.

Worthy of further consideration are the rare tokens like ' contact', where we can geometrically transform the embedding with a k value as large as 29 and still not disable GPT-J's ability to predict the correct first letter. The existence of these lends further credence to the idea that there's a heterogeneity involved in first-letter information, that it's not encoded solely or entirely in terms of these probe directions.

Preservation of semantic integrity

An important question at this point is this: have we not just "broken" the token embeddings, pushing them back so far that GPT-J is blinded to their first letter, but also such that it no longer recognises their meaning? Or is it possible that the linear separability which the existence of these probes points to is such that we can cleanly remove a token's first-letter information and leave the semantic integrity of the token intact?

To test this, we can use the very simple GPT-J prompt

A typical definition of '<token>' isA set of 189 tokens was selected from the original sample of 1052 such that for each one:

- the token string is a whole English word (not a subword chunk, proper name, place name, acronym, abbreviation or in all capitals);

- GPT-J correctly predicts its first letter for k = 0 (i.e., the original token embedding);

- GPT-J begins to incorrectly predict its first letter for some value of k > 0;

- this mis-prediction corresponds to the second letter in the token string.

The idea is to run the prompt for increasing k values and observe whether GPT-J still understands the meaning of the token at the point where it starts to "forget" its first letter (e.g., can we geometrically transform the " elbow" token embedding by increasing k to a point where GPT-J claims its first letter is "l" or "b", but still defines it as "the joint of the upper arm and forearm"?)

For each token considered, pushbacks of the embedding were calculated for k values 1 (orthogonal projection onto the orthogonal complement of the relevant first-letter probe), 2 (reflection across this orthogonal complement), 5, 10, 20, 30, 40, 60, 80 and 100. Prompts were run at temperature ~0 with an output length of 30 tokens. The k = 0 version acted as a control, showing how GPT-J defines the word in question when prompted with its untransformed token embedding.

Semantic integrity is often preserved for large

In almost all cases considered, the k value can be increased well beyond the threshold required to cause GPT-J to "forget" the first letter of a token while still preserving semantic integrity. Here's a typical example, for the token ' hatred', for which GPT-J switches its first-letter prediction from 'h' to 'a' at :

A typical definition of ' hatred' is

k=0: a strong feeling of dislike or hostility.

k=1: a strong feeling of dislike or hostility toward someone or something.

k=2: a strong feeling of dislike or hostility toward someone or something.

k=5: a strong feeling of dislike or hostility toward someone or something.

k=10: a strong feeling of dislike or hostility toward someone or something.

k=20: a feeling of hatred or hatred of someone or something.

k=30: a feeling of hatred or hatred of someone or something.

k=40: a person who is not a member of a particular group.

k=60: a period of time during which a person or thing is in a state of being

k=80: a period of time during which a person is in a state of being in love

k=100: a person who is a member of a group of people who are not members of

This is reassuringly consistent with the possibility that first-letter information is, to a great extent, encoded linearly and is thereby separable from other features of a token.

Semantic decay

As the value of k is increased and the token embedding is thereby pushed further and further back relative to the direction of the first-letter probe vector, a kind of "semantic decay" becomes evident.

Often by around k = 20 the definition has become circular (as seen in the ' hatred' example above), or too specific, or in some other way misguided or misleading, but is still recognisable – perhaps the sort of definition we might expect from a small child or someone with learning difficulties.

By k = 100, all meaning appears to have drained away, and we're almost always left with one of several very common patterns of definition such as "a person who is not a member of a group", "a small amount of something", "a small round object" or "to make a hole in something". Investigating this puzzling phenomenon, we found that these generic definitions reliably start to appear when a token embedding is pushed far enough along any randomly chosen direction in embedding space. Directions learned by linear probes need not be involved – they seem to be a separate matter. Interestingly, the phenomenon also seems to apply to GPT-3, so it's not model specific. The key factor seems to be distance-from-centroid (the centroid being the mean embedding associated with the 50257 GPT-J tokens).

Examples of semantic decay and further discussion can be found in Appendix B [? · GW].

Introducing character-level information by "adding probe directions"

Having found that it's possible to remove first-letter information from token embeddings with vector subtraction, we now consider the possibility of introducing it with vector addition.

The following simple transformation was applied to token embeddings, where letter_probe is a first-letter probe weights vector for a randomly selected letter (which we require to differ from the original_embedding token's first letter):

new_embedding = original_embedding + coeff * letter_probeThis was done for 100 randomly sampled all-Roman tokens with three random letters applied to each, according to the above formula. Various values of the coefficient were tried and it was found that around 0.005 seems to work well, in that about 94% of the 300 prompt outputs predicted the "newly added" first letter.

Again, for prompt-based first-letter prediction, we use this two-shot prompt template, inserting our :

The string " heaven" begins with the letter "H".

The string "same" begins with the letter "S".

The string "<new_embedding>" begins with the letter "To see how well the semantic integrity is preserved in each case, we insert the original and transformed token embeddings into the following prompt template:

A typical definition of '<embedding>' is These prompts were run with 30-token outputs so that it was possible to monitor how far definitions had drifted from the control definition (the one that GPT-J would produce given the untransformed embedding). Some illustrative examples follow.

Examples of first-letter probe addition

(These all involved the scaling coefficient 0.005.)

token: ' body'

Here the method succeeds in "tricking" GPT-J into believing the word/token has a different first letter in two of the three cases (in the third it continues to predict a 'B'). Meaning is largely preserved, with some mild semantic decay evident in one case:

control definition: 'the physical structure of a living organism, including the organs, tissues, and cells

random letter 'M' output 'M' (success)

definition: 'the physical structure of a living organism, including the organs, tissues, and cells

random letter 'E' output 'B' (failure)

definition: 'the physical structure of a living organism, including the organs, tissues, and cells

random letted 'O' output 'O' (success)

definition: 'the physical structure of a human being

token: 'bit'

For this token, all three randomly selected letters are predicted by GPT-J as the first letter of the mutated embedding and the definitions are all minor variants of the original.

control definition: 'a binary digit, which is a unit of information

random letter 'D' output 'D' (success)

definition: 'a unit of information' (Wikipedia).

random letter 'W' output 'W' (success)

definition: 'a binary digit, or a unit of information. In the context of computer science,

random letter 'L' output 'L' (success)

definition: 'a binary digit, or a unit of information. In the context of digital computers,

token: ' neighbour'

For this token, two of the three randomly chosen letters are predicted as the first letter after the additions, the third stayed 'N'. The definitions are beginning to drift from the control but are still recognisable.

control definition: 'a person who lives near you' or 'a person who lives in the same

random letter 'X' output 'N' (failure)

definition: 'a person who lives near you' or 'a person who lives in the same

random letter 'S' output 'S' (success)

definition: 'a person who lives near or lives in the same house as you'

random letter 'E' output 'E' (success)

definition: 'a person who lives in the same house as you'

token: 'artisan'

In this case, all three additions cause the prompt output to predict the newly introduced first letter, but the definition has become inaccurate or ineffective in all three GPT-J outputs.

control definition: 'a person who works with his or her hands'

random letter 'V' output 'V' (success)

definition: 'a person who lives in a rural area and works in the fields'

random letter 'W' output 'W' (success)

definition: 'a person who works in a particular field or profession'

random letter 'R' output 'R' (success)

definition: 'a person who is not a member of a particular group'

Compensating for variations in probe magnitude

In all 18 of the failure cases out of the 300 trials, the tokens had their original first letter predicted. And all but two of these involved adding a multiple of the first-letter probe associated with Q, X, Y or Z, which turn out to have the smallest magnitudes of the 26 first-letter probe vectors:

{'X': 86.95, 'Q': 91.85, 'Z': 94.98, 'Y': 103.11, 'K': 114.68, 'U': 117.14, 'O': 118.87, 'J': 119.49, 'N': 119.83, 'E': 121.25, 'I': 122.3, 'V': 123.48, 'G': 123.6, 'W': 125.23, 'L': 125.37, 'H': 127.2, 'R': 127.65, 'T': 128.04, 'A': 128.13, 'S': 131.77, 'F': 132.05, 'B': 132.28, 'M': 132.43, 'C': 132.43, 'P': 132.87, 'D': 134.43}The norm of these 26 magnitudes is 121.05. Dividing this by each of them gives a set of scaling factors which allow us to compensate for short probe vectors. We now use this transformation to add first-letter information:

new_embedding = original_embedding + rescaling_dict[letter] * coeff * letter_probeHere, e.g. rescaling_dict[‘X’] = 121.05/86.95 = 1.39. Multiplying this in guarantees that the vector being added to original_embedding is always of the same magnitude.

Making this change leads to an improvement from 94% to 98% of cases where the vector addition leads GPT-J to predict a specific (wrong) first letter for a token as intended.

Increasing and decreasing the scaling coefficient

Increasing the coefficient from 0.005 to 0.01 leads to ~100% success on this task, but then semantic decay becomes a major problem. The vector addition now pushes token embeddings to positions at L2 distances ~1.5 to ~1.7 from the centroid, well outside the spatial distribution for tokens.

As mentioned earlier, and discussed in Appendix B [? · GW], distance from centroid appears to be key to the emergence of a handful of recurrent themes and patterns of definition (group membership, holes, small round objects, etc.). Here are some examples where the coefficient was set to 0.01 and probe magnitudes were normalised as described. In all cases, GPT-J's control definition was reasonable and its first-letter prediction after the probe vector addition matched the letter associated with the probe, as intended.

token: ' evidence'

first-letter probe vector used: A

scaling coefficient (after adjustment): 0.0094

definition of transformed embedding: 'a person who is not a member of a family, clan, or tribe'

distance from centroid to transformed embedding: 1.535

token: ' definitely'

first-letter probe vector used: V

scaling coefficient (after adjustment): 0.0098

definition of transformed embedding: 'a small, usually rounded, and usually convex protuberance on the surface

distance from centroid to transformed embedding: 1.567

token: ' None'

first-letter probe vector used: E

scaling coefficient (after adjustment): 0.01

definition of transformed embedding: 'a person who is not a member of the Church of Jesus Christ of Latter-

distance from centroid to transformed embedding: 1.592

token: ' bare'

first-letter probe vector used: S

scaling coefficient (after adjustment): 0.0092

definition of transformed embedding: 'to make a hole in the ground'

distance from centroid to transformed embedding: 1.614

token: ' selection'

first-letter probe vector used: B

scaling coefficient (after adjustment): 0.0092

definition of transformed embedding: 'a small, usually round, piece of wood or stone, used as a weight'

distance from centroid to transformed embedding: 1.538

token: ' draws'

first-letter probe vector used: S

scaling coefficient (after adjustment): 0.0092

definition of transformed embedding: 'a small, flat, and usually round, usually edible, fruit of the citrus

distance from centroid to transformed embedding: 1.554

Reducing the scaling coefficient to 0.0025 leads to a strong tendency for accurate definitions (i.e. definitions interchangeable with the control), but a complete failure to alter GPT-J's predicted first letter in the desired way.

Because of the compensation mechanism that was introduced, the vectors added are all of magnitude coeff * 121.05 (where 121.05 is the norm of the 26 first-letter probe vectors).

- magnitude 0.0025 * 121.05 = 0.30: tends to leave embedding's distance-from-centroid in distribution; little semantic decay is in evidence; the newly introduced letter is almost never predicted as first

- magnitude 0.005 * 121.05 = 0.61: tends to push embedding to edge of distance-from-centroid distribution; semantic decay becomes evident; the newly introduced letter is usually predicted as first

- magnitude 0.01 * 121.05 = 1.21: tends to push embedding well outside distance-from-centroid distribution; semantic decay becomes widespread; the newly introduced letter is almost always predicted as first

In conclusion, when adding scalar multiples of first-letter probe vectors to token embeddings, there appears to be a trade-off between success in causing GPT-J to predict "introduced" first letters and the preservation of tokens' semantic integrity.

Future directions

Specific next steps

Understanding systematic errors in first-letter prediction: The most striking result we found in this investigation was the ubiquitous exponential decay curve which emerges both when looking at first-letter probe activations for characters at different positions in a token and when looking at GPT-J's pattern of incorrect letter predictions after intervening in the first-letter direction.

Understanding the relationship between probe and prompt-based predictions: Our interventional experiments showed that the probe-based method for first-letter prediction is strongly predictive of model behaviour at runtime; however, it appears that GPT-J is in some sense more robust to interventions on the embedding space than the probe-based method.

Understanding related features (such as phonetics): Character-level information doesn’t exist in a vacuum and may correlate with, for example, phonetic information. Better understanding of how character-level information relates to other information in embedding space may provide many clues about mechanisms that leverage this information and relate to the formation of linear representations of character-level information during training.

Broader directions

Spelling and other task-related phenomena: Language models like GPT-J clearly have a number of abilities related to character-level information, but our understanding is that both the abilities and failure modes have yet to be comprehensively evaluated. While safety-related evals have been rising in popularity, we’re excited about mapping character-level abilities in different models which may provide a wealth of observations to be explained by a better understanding of language models and deep learning.

Understand the distribution of probe activation intensity: GPT-J is not equally good at spelling all words, and probes aren’t equally effective on all words. Determining the distribution of probe effectiveness over words and whether this is predictive of GPT-J’s ability to perform character-related tasks may be quite interesting.

Automated / scalable interpretability: Recently published methods which use sparse auto-encoders to find interpretable directions in activation space [LW · GW] may provide an alternative or complementary approach to understanding character-level information in language models. We look forward to using sparse auto-encoders to understand the directions found by linear probes of the kind implemented here. or possibly to replace our use of linear probes with this technique.

Mechanistic interpretability: We presume that character-level information becomes linearly encoded because there are circuits which GPT-J implements that leverage this information. These include circuits for spelling, but also circuits instantiated via in-context learning. We’re excited to study these circuits using interventions on the embedding space and linearly encoded information.

Appendix A: Probe magnitudes

The "letter presence" linear probes have the following L2 magnitudes, ranked from largest to smallest:

{'M': 80.65, 'F': 80.52, 'W': 78.85, 'B': 77.87, 'P': 77.32, 'K': 77.2, 'Y': 75.9, 'V': 75.21, 'L': 74.59, 'D': 74.2, 'H': 74.1, 'S': 73.68, 'G': 73.2, 'X': 72.62, 'J': 71.65, 'R': 71.17, 'C': 70.16, 'T': 69.53, 'Z': 69.3, 'N': 69.11, 'I': 68.24, 'Q': 67.48, 'O': 67.29, 'A': 66.93, 'E': 66.26, 'U': 65.87}Note the five of the six smallest are associated with vowels, and the sixth of these is 'Q' which is invariably adjacent to a vowel. GPT-4 provided a couple of suggestions as to why this might be the case:

Vowels are common across many English words. Given their ubiquity, the probe might need a more nuanced or distributed representation to distinguish words that contain them. As a result, the magnitude of the probe might be smaller because it's "spreading out" its influence across many dimensions to capture this commonality.

Phonetic Importance: Vowels are essential for the pronunciation of words, and their presence or absence can drastically alter word meaning. The embedding space might represent vowels in a more distributed manner due to their phonetic importance, resulting in a smaller magnitude when trying to classify based on their presence.

The first-letter linear probe magnitudes are given as follows:

{'D': 134.43, 'P': 132.87, 'C': 132.43, 'M': 132.43, 'B': 132.28, 'F': 132.05, 'S': 131.77, 'A': 128.13, 'T': 128.04, 'R': 127.65, 'H': 127.2, 'L': 125.37, 'W': 125.23, 'G': 123.6, 'V': 123.48, 'I': 122.3, 'E': 121.25, 'N': 119.83, 'J': 119.49, 'O': 118.87, 'U': 117.14, 'K': 114.68, 'Y': 103.11, 'Z': 94.98, 'Q': 91.85, 'X': 86.95}Note that the five smallest magnitudes are associated with infrequently occurring letters. This was an issue when we experimented by adding multiples of first-letter probes to token embeddings: The small proportion of cases where this didn't alter GPT-J's first-letter prediction almost all involved the probes for Q, X, Y or Z.

The letter presence probe magnitudes are more tightly clustered, with the highest being around 80.6 and the lowest around 65.9. This suggests that detecting the presence of any given letter in a word, regardless of its position, requires fairly consistent magnitudes, albeit with some variation.

The smallest first-letter probe magnitudes are larger than the largest letter presence magnitudes. This could be due to the first letters in words carrying more weight in distinguishing word meanings or usages. For example, certain prefixes or types of words might predominantly start with specific letters.

Note that the first-letter probe magnitudes also have a greater range, over three times that of the letter presence magnitudes.

Appendix B: Semantic decay phenomena

As mentioned above, experiments showed that pushing token embeddings far enough in any direction will produce these kinds of outputs, so it's not something specific to alphabetic probe directions.

Examples of semantic decay

token: ' hatred'

Here we're pushing the token embedding back relative to the direction of the 'H' first-letter probe direction. Based on its prompt outputs, GPT-J becomes blind to the initial 'h' when k = 2. Encouragingly, we see here that the semantic content is fully preserved up to k = 10. Note the circularity that emerges once k = 20, and the "...in a state of being..." and "a person who is (not) a member of a group", both extremely common patterns in GPT-J definitions of token embeddings when distorted such that their distance-from-centroid becomes sufficiently large, as discussed in Appendix B [? · GW].

A typical definition of ' hatred' is

k=0: a strong feeling of dislike or hostility.

k=1: a strong feeling of dislike or hostility toward someone or something.

k=2: a strong feeling of dislike or hostility toward someone or something.

k=5: a strong feeling of dislike or hostility toward someone or something.

k=10: a strong feeling of dislike or hostility toward someone or something.

k=20: a feeling of hatred or hatred of someone or something.

k=30: a feeling of hatred or hatred of someone or something.

k=40: a person who is not a member of a particular group.

k=60: a period of time during which a person or thing is in a state of being

k=80: a period of time during which a person is in a state of being in love

k=100: a person who is a member of a group of people who are not members of

token: ' Missile'

Here we're pushing the embedding back relative to the direction of the 'M' first-letter probe. GPT-J becomes blind to the 'M' in the ' Missile' token when k = 2. Here we see that the semantic content is fully preserved up to k = 5, after which it starts to gradually drift. Note the circularity that emerges once k = 20 and the "a person who is a member of a group":

A typical definition of ' Missile' is

k=0: a weapon that is fired from a tube or barrel.

k=1: a weapon that is fired from a tube or barrel.

k=2: a weapon that is fired from a tube or barrel.

k=5: a weapon that is fired from a tube or barrel.

k=10: a weapon that is designed to be launched from a vehicle and is capable of delivering

k=20: a missile that is launched from a submarine or ship.

k=30: a person who is a member of a group of people who are not allowed to

k=40: to throw, cast, or fling.

k=60: to make a sudden, violent, and often painful effort to free oneself from an

k=80: to make a sound as of a bird taking flight.

k=100: to make a sound as of a bird taking flight.

token: 'Growing'

Here we're pushing back relative the to the 'G' first letter probe vector direction. GPT-J becomes blind to the 'G' in the 'Growing' token when k = 8. Here we see that the semantic content is fully preserved up to k = 10, after which it starts to become circular and then fall into the ubiquitous "member of a group" pattern.

A typical definition of 'Growing' is

k=0: to increase in size, amount, or degree.

k=2: to increase in size, amount, or degree.

k=5: to increase in size, amount, or degree.

k=10: to increase in size, amount, or degree.

k=20: to grow or develop in size, strength, or number

k=30: to grow or increase in size or number.

k=40: to grow or increase in size or number. The word Sting is

k=60: to cause to grow.

k=80: to be a member of a group of people who are trying to change the world

k=100: to be a member of a group of people who are trying to achieve a goal

token: 'write'

Here we're pushing back with reference to the 'W' first-letter probe. GPT-J becomes blind to the initial 'w' when k = 14. Here we see that the semantic content is fully preserved up to at least k = 20. By k = 30, GPT-J's definition has become partly circular and then lapses into first the "small, round <something>" pattern before falling into the "person who is a member of a group" attractor.

A typical definition of 'write' is

k=0: 'to make a record of something, especially a person's thoughts or feelings'.

k=1: 'to make a record of something, especially a person's thoughts or feelings'.

k=2: 'to make a record of something, especially a person's thoughts or feelings'.

k=5: 'to make a record of something, especially a person's thoughts or feelings'.

k=10: 'to make a record of something, especially a person's thoughts or feelings'.

k=20: 'to make a record of something, especially a person's thoughts or feelings'.

k=30: 'to write or compose in a particular style or manner'.

k=40: 'a small, round, hard, dry, and brittle substance, usually of vegetable

k=60: a small, hard, granular, indigestible substance found in the

k=80: 'a small, round, hard, granular body, usually of vegetable origin,

k=100: 'a person who is a member of a group of people who are distinguished from others

Recurrent themes

The following are common themes that show up when enough out-of-spacial-distribution embeddings are probed for definitions.

Group membership and non-membership: Religious themes are common, e.g. "person who is not a member of the clergy", "who is a member of the Church of England", "who is not a Jew", "who is a member of the Society of Friends, or Quakers"; royal/elite themes are also common, e.g. "person who is a member of the British Royal Family", "who is not a member of the aristocracy", "who is a member of the royal family of Japan", "who is a member of the British Empire"; miscellaneous other groups are referenced, e.g., the IRA, British Army's Royal Corps of Signals, the Aka Pygmies, fans of the New Zealand All Blacks rugby team, fans of the rock band Queen; mostly it's just something generic like "a person who is (not) a member of a group".

Small (and often round) things: E.g., "a small, round, hard, dry, and brittle substance", "a small, flat, and usually circular area of land", "a small amount of something", "a small piece of metal or other material, such as a coin", "a small piece of string used to tie a shoe", "a small room or apartment", "a small, sharp, pointed object, such as a needle or a pin", "a small, low-lying area of land, usually with a beach", "small piece of cloth or ribbon worn on the head or neck", "a small, flat, and rounded hill, usually of volcanic origin", "a small, round, hard, dry, and bitter fruit"

Holes and the making of holes: E.g. "to make a hole in something", "a small, usually round, hole in the ground", "to make a hole in something with a sharp object", "to make a hole in the ground, as for a cesspool", "a small hole in the ground, usually with a roof, used for shelter" (references to small, round holes in the ground are especially common)

Power relationships: E.g "to be in the power of another", "to be without a master", "to be without power or authority", "a person who is in the service of a king or a state", "to be in a position of authority over someone or something", "a person who is in a position of authority or responsibility", "a person who is in a state of helplessness and dependency",

'"to be in the power of' or 'to be under the control of'", "a person who is in the service of a king or ruler", "to be in the power of another", "a person who is in a position of power and influence"

States of being: E.g. "to be in a state of confusion, perplexity, or doubt", "to be in a state of rest or repose", "to be in a state of readiness to act", "a person who is in a state of helplessness and dependency", "to cause to be in a state of confusion or disorder", "to be in a state of being or to be in a state of being", "to be in a state of readiness to fight", "to be in a state of unconsciousness", "to be in a state of mental confusion or distress", "to be in a state of shock or surprise", "to be in a state of being in the presence of a person or thing", "to be in a state of being in a state of being", "to be in a state of being in love"

Solitude and refuge: E.g. "a place where a person can be alone", "a place where one can be alone", "a place of refuge, a place of safety, a place of security", "a place of safety, a place of peace", "a place of refuge, a hiding place, a place of safety"

Glitch tokens

As shown below, the vast majority of the GPT-3 glitch tokens [LW · GW] produce one of these types of output when inserted in the "A typical definition of '<token>' is" GPT-J prompt. Many glitch tokens are unusually close to the GPT-J token centroid, as can be seen from the distances given below the outputs. Experiments with the geometric distortion of non-glitch token embeddings (as we were doing in our attempts to remove first-letter information through "pushback", and follow-up studies involving projections along random directions in embedding space) tend to produce these mysterious outputs involving religious group membership, small round holes, etc. only once the L2 distance from the distorted embedding to the centroid is about an order of magnitude larger than what we see in the distribution below.

So embeddings having out-of-distribution distance-from-centroid (whether an order of magnitude smaller or larger than the norm) seems to be a relevant factor, although there are a few examples of glitch tokens given below whose distance-from-centroid is pretty close to the mean and which still get defined in terms of group membership, etc.

A typical definition of 'ÃÂÃÂÃÂÃÂ' is 'a person who is a member of a group'.

Distance to centroid: 0.25514405965805054

A typical definition of ' guiActive' is 'a person who is a member of a group or organization'.

Distance to centroid: 0.21962900459766388

A typical definition of 'ÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ' is 'a person who is a member of a group'.

Distance to centroid: 0.2295610010623932

A typical definition of ' davidjl' is 'a person who is a member of a group'.

Distance to centroid: 0.06790633499622345

A typical definition of ' --------' is 'a person who is a member of a group of people who are similar in some way'.

Distance to centroid: 0.9946609139442444

A typical definition of 'ForgeModLoader' is 'a person who is a member of a group of people who are not members of any other group'.

Distance to centroid: 0.38009342551231384

A typical definition of ' 裏覚醒' is 'a person who is a member of a group'.

Distance to centroid: 0.09361300617456436

A typical definition of 'PsyNetMessage' is 'a person who is a member of a group'.

Distance to centroid: 0.06277304142713547

A typical definition of ' guiActiveUn' is 'a person who is a member of a group'.

Distance to centroid: 0.07761700451374054

A typical definition of ' guiName' is 'a person who is a member of a clan or family'.

Distance to centroid: 0.4141799509525299

A typical definition of ' unfocusedRange' is 'a person who is a member of a group'.

Distance to centroid: 0.09230352938175201

A typical definition of'guiActiveUnfocused' is 'a person who is a member of a group'.

Distance to centroid: 0.09387315809726715

A typical definition of ' guiIcon' is 'a person who is not a member of a group'.

Distance to centroid: 0.11689207702875137

A typical definition of ' externalToEVA' is 'a person who is a member of a group'.

Distance to centroid: 0.06332617998123169

A typical definition of ' externalToEVAOnly' is 'a person who is a member of a group'.

Distance to centroid: 0.08846374601125717

A typical definition of ' reportprint' is 'a person who is a member of a group'.

Distance to centroid: 0.14496348798274994

A typical definition of 'embedreportprint' is 'a person who is a member of a group'.

Distance to centroid: 0.06294980645179749

A typical definition of 'cloneembedreportprint' is 'a person who is a member of a group'.

Distance to centroid: 0.07458505779504776

A typical definition of 'rawdownload' is 'a person who is a member of a group'.

Distance to centroid: 0.07474076747894287

A typical definition of 'ーン' is 'a person who is a member of a group of people who are in a position of authority over others'.

Distance to centroid: 1.0163743495941162

A typical definition of 'cffff' is 'a person who is not a member of the Church of England'.

Distance to centroid: 0.8460859656333923

A typical definition of ' gmaxwell' is 'a person who is a member of the Bitcoin community and who is known for his/her/their involvement in the Bitcoin community'.

Distance to centroid: 0.6733725070953369

A typical definition of ' attRot' is 'a person who is a member of a group'.

Distance to centroid: 0.06161397695541382

A typical definition of ' RandomRedditor' is 'a person who is not a member of a group'.

Distance to centroid: 0.0640200823545456

A typical definition of 'ertodd' is 'a person who is a member of the Church of Jesus Christ of Latter-day Saint

Distance to centroid: 0.7308292388916016

A typical definition of ' sqor' is 'a person who is a member of a group or organization'.

Distance to centroid: 0.1523725539445877

A typical definition of 'StreamerBot' is 'a person who is a member of a group'.

Distance to centroid: 0.06304679065942764

A typical definition of ' Skydragon' is 'a person who is a member of a group of people who are not members of another group'.

Distance to centroid: 0.3108969032764435

A typical definition of 'iHUD' is 'a person who is a member of a group of people who are not members of any other group'.

Distance to centroid: 0.2664308249950409

A typical definition of 'ItemThumbnailImage' is 'a word that is used to describe a person who is a member of a group of people who share a common characteristic or trait'.

Distance to centroid: 0.27727770805358887

A typical definition of ' UCHIJ' is 'a person who is not a member of a group'.

Distance to centroid: 0.09234753251075745

A typical definition of 'quickShip' is 'a person who is a member of a group'.

Distance to centroid: 0.12397217005491257

A typical definition of 'channelAvailability' is 'a person who is a member of a group or organization'.

Distance to centroid: 0.1460248827934265

A typical definition of '龍契士' is 'a person who is a member of a group'.

Distance to centroid: 0.19133614003658295

A typical definition of 'oreAndOnline' is 'a person who is a member of a group'.

Distance to centroid: 0.06293787062168121

A typical definition of 'InstoreAndOnline' is 'a person who is a member of a group'.

Distance to centroid: 0.06339092552661896

A typical definition of 'BuyableInstoreAndOnline' is 'a person who is a member of a group'.

Distance to centroid: 0.14309993386268616

A typical definition of 'natureconservancy' is 'a person who is not a member of a group'.

Distance to centroid: 0.2631724774837494

A typical definition of 'assetsadobe' is 'a person who is a member of a group of people who are similar in some way'.

Distance to centroid: 0.19849646091461182

A typical definition of 'Downloadha' is 'a person who is a member of a group'.

Distance to centroid: 0.06921816617250443

A typical definition of ' TheNitrome' is 'a person who is a member of a group'.

Distance to centroid: 0.06331153213977814

A typical definition of ' TheNitromeFan' is 'a person who is a member of a group'.

Distance to centroid: 0.06323086470365524

A typical definition of 'GoldMagikarp' is 'a person who is a member of a group'.

Distance to centroid: 0.0632067620754242

A typical definition of ' srfN' is 'a person who is not a member of a group'.

Distance to centroid: 0.0702730938792228

A typical definition of ' largeDownload' is 'a person who is a member of a college or university'.

Distance to centroid: 0.41451436281204224

A typical definition of 'EStreamFrame' is 'a person who is not a member of a group'.

Distance to centroid: 0.06233758479356766

A typical definition of ' SolidGoldMagikarp' is 'a person who is a member of a group'.

Distance to centroid: 0.06252734363079071

A typical definition of 'ーティ' is 'a person who is a member of a caste or tribe'.

Distance to centroid: 1.0703611373901367

A typical definition of ' サーティ' is 'a person who is a member of a group'.

Distance to centroid: 0.10531269013881683

A typical definition of ' サーティワン' is 'a person who is not a member of a group'.

Distance to centroid: 0.14370721578598022

A typical definition of ' Adinida' is 'a person who is a member of a group'.

Distance to centroid: 0.06281737238168716

A typical definition of ' DevOnline' is 'a person who is a member of a group'.

Distance to centroid: 0.080560103058815

A typical definition of 'rawdownloadcloneembedreportprint' is 'to be in a state of being'.

Distance to centroid: 0.10560163110494614

A typical definition of 'MpServer' is 'to be in a position of power or authority'.

Distance to centroid: 0.49429431557655334

A typical definition of 'cffffcc' is 'a small, round, flat, and usually yellowish-white, soft, and slightly sticky, sweet, and sometimes slightly bitter

Distance to centroid: 0.5602979063987732

A typical definition of 'catentry' is 'a small piece of something'.

Distance to centroid: 0.737331211566925

A typical definition of 'inventoryQuantity' is 'to be in a state of being'. This is a very common definition of 'to be' in English.

Distance to centroid: 0.3265098035335541

In any case, it seems as if these kinds of definitions are what GPT-J reverts to when confronted with an embedding it doesn't know what to do with. That could be (1) a geometric distortion of the embedding of a very familiar token, usually an order of magnitude distance further from the centroid than the norm; or (2) the original, undistorted, embedding of an undertrained glitch token, usually an order of magnitude closer to the centroid than the norm).

This phenomenon can also be observed in GPT-3 behaviour. GPT-J and GPT-3 share the same set of 50257 tokens, but as GPT-3's embeddings aren't publicly available, it's impossible to experiment with the geometric manipulation of token embeddings in that setting. It's impossible to know how the embeddings are distributed or where there centroid is located. However, we can prompt with glitch tokens, which it seems reasonable to suppose have embeddings at out-of-distribution distances from the GPT-3 centroid. In doing so, we find many results like these (produced in both the base davinci and the davinci-instruct-beta models):

A typical definition of 'ÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ' is:

A person who is a member of the Church of Jesus Christ of Latter-day Saints.

A typical definition of ' guiActive' is:

A person who is not a Muslim and is not of the People of the Book.

A typical definition of ' davidjl' is:

A person who is not a Jew.

A typical definition of 'ForgeModLoader' is "a person who is a native or inhabitant of a particular place".

A typical definition of ' 裏覚醒' is:

A person who is a member of the House of Representatives.

A typical definition of ' guiActiveUn' is 'a person who is not a member of a particular group'.

A typical definition of 'inventoryQuantity' is "a small, usually round, ornament, especially one on a garment.

A typical definition of ' Adinida' is "a person who is not a Christian."

A typical definition of ' サーティ' is "a person who is not a member of a particular group or organization."

A typical definition of 'ーティ' is "a person who is not a member of a particular group, especially a minority group."

A typical definition of ' SolidGoldMagikarp' is

a. a person who is not a member of the dominant culture

- ^

This term was coined by Google AI capabilities researchers Rosanne Liu, et al. in their paper "Character-aware models improve visual text rendering", in which they "document for the first time the miraculous ability of character-blind models to induce robust spelling knowledge".

"[W]e find that, with sufficient scale, character-blind models can achieve near-perfect spelling accuracy. We dub this phenomenon the “spelling miracle”, to emphasize the difficulty of inferring a token’s spelling from its distribution alone."

- ^

Although there were concerns about overfitting during training, the F1 results shown were produced by testing the learned classifiers on the entire set of

GPT-J embeddings (after filtering out tokens with non-alphabetic characters). Even if overfitting did occur, the 26 probes that were learned each provides a hyperplane decision boundary that separates, remarkably well, embeddings according to whether or not their tokens contain the relevant letter. Rather than getting caught up in questions around the validity of these classifiers for more general datasets (not a matter of interest here), the question that will interest us in this post is "Did GPT-J learn, in training, to use these probe directions for character-level tasks?"The fact these linear probes were able to outperform Kaushal and Mahowald's MLPs on the four least frequent letters J, X, Q and Z is possibly related to this overfitting issue. MLPs generalise linear probes, so in normal circumstances they would be expected to outperform then.

- ^

To be thorough, we should consider all of the relevant tokens (upper/lower case, with/without leading space): ' Y', ' N', 'Y', 'N', ' y', ' n', 'y', 'n', ' yes', ' no', 'yes', 'no',

' YES', ' NO', 'YES' and 'NO'. This would involved converting logits to probabilities and aggregating relevant token probabilities. - ^

After filtering out tokens with non-alphabetic characters - here we're working with the set of 46893 "all-Roman" tokens.

- ^

The probe-based method was applied to the entire set of all-Roman tokens to give a score of 98.7%. The 96.7% for the prompt-based method involved sampling over 20,000 tokens from the entire set of 46893 all-Roman tokens.

The probe-based method involved choosing the greatest of 26 cosine similarities, as described; the prompt-based method used the two-shot prompt shown. Adding more shots was not found to improve performance

- ^

This is admittedly a rather arbitrary set of choices. The decisions that led to it were: (i) include k = 0, 1 and 2; (ii) include some larger values at regularly spaced intervals; (iii) don't include too many (running this many prompts over many tokens can be prohibitively time consuming). And all of the interesting phenomena seemed to show up for values k < 30.

- ^

Unlike the prompt-based experiments, these probe-based trials only require comparison of cosine similarities, so they can be run much more rapidly. Increments in k of 0.1 were used in this case.

4 comments

Comments sorted by top scores.

comment by Neel Nanda (neel-nanda-1) · 2023-11-10T23:30:34.790Z · LW(p) · GW(p)

We show that linear probes can retrieve much character-level information from embeddings [? · GW] and we ; [? · GW].

You have a sentence fragment in the TLDR

Replies from: Jbloom↑ comment by Joseph Bloom (Jbloom) · 2023-11-11T04:35:02.179Z · LW(p) · GW(p)

Fixed, thanks!

comment by Chloe Li (chloe-li-1) · 2023-12-12T00:27:16.791Z · LW(p) · GW(p)

We show that linear probes can retrieve character-level information from embeddings [? · GW] and we perform interventional experiments to show that this information is used by the model to carry out character-level tasks [? · GW].

These two links need permission to be accessed.

Replies from: mwatkins