Possible OpenAI's Q* breakthrough and DeepMind's AlphaGo-type systems plus LLMs

post by Burny · 2023-11-23T03:16:09.358Z · LW · GW · 25 comments

tl;dr: A leak from OpenAI describes an AI breakthrough called Q* that aces grade-school math. It is hypothesized to be a combination of Q-learning and A*. It was then refuted. DeepMind is working on something similar with Gemini: AlphaGo-style Monte Carlo Tree Search. Scaling these approaches might be the crux of planning for increasingly abstract goals and agentic behavior. The academic community has been circling around these ideas for a while.

Reuters: OpenAI researchers warned board of AI breakthrough ahead of CEO ouster, sources say

"Ahead of OpenAI CEO Sam Altman’s four days in exile, several staff researchers sent the board of directors a letter warning of a powerful artificial intelligence discovery that they said could threaten humanity

Mira Murati told employees on Wednesday that a letter about the AI breakthrough called Q* (pronounced Q-Star), precipitated the board's actions.

Given vast computing resources, the new model was able to solve certain mathematical problems. Though only performing math on the level of grade-school students, acing such tests made researchers very optimistic about Q*’s future success."

"What could OpenAI’s breakthrough Q* be about?

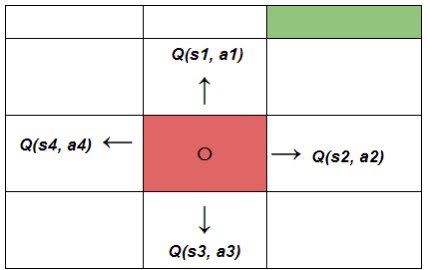

It sounds like it’s related to Q-learning. (For example, Q* denotes the optimal solution of the Bellman equation.) Alternatively, referring to a combination of the A* algorithm and Q learning.

One natural guess is that it is AlphaGo-style Monte Carlo Tree Search of the token trajectory. 🔎 It seems like a natural next step: Previously, papers like AlphaCode showed that even very naive brute force sampling in an LLM can get you huge improvements in competitive programming. The next logical step is to search the token tree in a more principled way. This particularly makes sense in settings like coding and math where there is an easy way to determine correctness. -> Indeed, Q* seems to be about solving Math problems 🧮"
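To make the notation concrete: in reinforcement learning, Q* is the fixed point of the Bellman optimality backup. Below is a minimal sketch on a made-up five-state chain (the environment, reward, and discount factor are illustrative assumptions, not anything known about OpenAI's system). It uses Q-value iteration with a known model; Q-learning estimates the same fixed point from sampled transitions instead.

```python
# Toy deterministic chain (an assumption for illustration): states 0..4,
# state 4 is terminal. Actions: 0 = step left, 1 = step right.
# Reward is 1.0 only on the transition that reaches state 4.
N_STATES = 5
GOAL = 4
GAMMA = 0.9
ACTIONS = (0, 1)


def step(state, action):
    """Return (next_state, reward) for the toy chain."""
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward


def q_star(sweeps=100):
    """Repeat the Bellman optimality backup until it has converged:
    Q*(s, a) = r(s, a) + gamma * max_a' Q*(s', a'),
    with the value of the terminal state fixed at 0."""
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(sweeps):
        for s in range(GOAL):  # the terminal state is never backed up
            for a in ACTIONS:
                nxt, r = step(s, a)
                future = 0.0 if nxt == GOAL else max(q[nxt])
                q[s][a] = r + GAMMA * future
    return q


Q = q_star()
# Acting greedily with respect to Q* gives an optimal policy.
greedy_policy = [max(ACTIONS, key=lambda a: Q[s][a]) for s in range(GOAL)]
```

In this toy setting the greedy policy always steps right, and the value of stepping right from state 0 is the reward discounted over the three intermediate steps.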

"Anyone want to speculate on OpenAI’s secret Q* project?

- Something similar to tree-of-thought with intermediate evaluation (like A*)?

- Monte-Carlo Tree Search like forward roll-outs with LLM decoder and q-learning (like AlphaGo)?

- Maybe they meant Q-Bert, which combines LLMs and deep Q-learning

Before we get too excited, the academic community has been circling around these ideas for a while. There are a ton of papers in the last 6 months that could be said to combine some sort of tree-of-thought and graph search. Also some work on state-space RL and LLMs."
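The first guess above, search with intermediate evaluation "like A*", can be sketched on a toy problem. Everything here is an illustrative assumption: the task (reach a target integer from a start value using the moves +1 and ×2), the step cost of 1, and the hand-written heuristic standing in for whatever would score a partial chain of thought.

```python
import heapq
import math


def heuristic(value, target):
    """Optimistic estimate of the remaining steps: for value >= 1,
    a single move can at most double the value."""
    return math.ceil(math.log2(target / value))


def a_star(start, target):
    """Return the shortest list of values from start to target using the
    moves +1 and *2 (one step each), or None if the target is unreachable."""
    # Frontier entries are (f = g + h, g, path so far).
    frontier = [(heuristic(start, target), 0, [start])]
    best_cost = {start: 0}
    while frontier:
        _, cost, path = heapq.heappop(frontier)
        current = path[-1]
        if current == target:
            return path
        for nxt in (current + 1, current * 2):
            if nxt > target:
                continue  # both moves only increase the value, so prune
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, math.inf):
                best_cost[nxt] = new_cost
                priority = new_cost + heuristic(nxt, target)
                heapq.heappush(frontier, (priority, new_cost, path + [nxt]))
    return None


path = a_star(1, 10)
```

The intermediate evaluation is the `heuristic` call: partial paths that look closer to the goal are expanded first, instead of sampling complete paths blindly.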

OpenAI spokesperson Lindsey Held Bolton refuted it:

"refuted that notion in a statement shared with The Verge: “Mira told employees what the media reports were about but she did not comment on the accuracy of the information.”"

Google DeepMind's Gemini, currently the biggest rival to GPT-4 and delayed to the start of 2024, is also trying similar things: AlphaZero-based MCTS through chains of thought, according to Hassabis.

Demis Hassabis: "At a high level you can think of Gemini as combining some of the strengths of AlphaGo-type systems with the amazing language capabilities of the large models. We also have some new innovations that are going to be pretty interesting."

anton, Twitter, links to a video of Shane Legg

Aligns with DeepMind Chief AGI scientist Shane Legg saying:

"To do really creative problem solving you need to start searching."

"With Q*, OpenAI have likely solved planning/agentic behavior for small models.

Scale this up to a very large model and you can start planning for increasingly abstract goals.

It is a fundamental breakthrough that is the crux of agentic behavior.

To solve problems effectively next token prediction is not enough.

You need an internal monologue of sorts where you traverse a tree of possibilities using less compute before using compute to actually venture down a branch.

planning in this case refers to generating the tree and predicting the quickest path to solution"

My thoughts:

If this is true and really is a breakthrough, it might have caused the whole chaos. For true superintelligence you need flexibility and systematicity. Combining the machinery of general and narrow intelligence (I like DeepMind's taxonomy of AGI: https://arxiv.org/pdf/2311.02462.pdf ) might be the path to both general and narrow superintelligence.

25 comments

Comments sorted by top scores.

comment by Steven Byrnes (steve2152) · 2023-11-23T14:54:10.864Z · LW(p) · GW(p)

Why I strong-downvoted

[update: now it’s a weak-downvote, see edit at bottom]

[update 2: I now regret writing this comment, see my reply-comment]

I endorse the general policy: "If a group of reasonable people think that X is an extremely important breakthrough that paves the path to imminent AGI, then it's really important to maximize the amount of time that this group can think about how to use X-type AGI safely, before dozens more groups around the world start trying to do X too."

And part of what that entails is being reluctant to contribute to a public effort to fill in the gaps from leaks about X.

I don't have super strong feelings that this post in particular is super negative value. I think its contents are sufficiently obvious and already being discussed in lots of places, and I also think the thing in question is not in fact an extremely important breakthrough that paves the path to imminent AGI anyway. But this post has no mention that this kind of thing might be problematic, and it's the kind of post that I'd like to discourage, because at some point in the future it might actually matter.

As a less-bad alternative, I propose that you should wait until somebody else prominently publishes the explanation that you think is right (which is bound to happen sooner or later, if it hasn't already), and then linkpost it.

See also: my post on Endgame Safety [LW · GW].

EDIT: Oh oops I wasn’t reading very carefully, I guess there are no ideas here that aren’t word-for-word copied from very-widely-viewed twitter threads. I changed my vote to a weak-downvote, because I still feel like this post belongs to a genre that is generally problematic, and by not mentioning that fact and arguing that this post is one of the exceptions, it is implicitly contributing to normalizing that genre.

Replies from: steve2152, Zvi, person-1

↑ comment by Steven Byrnes (steve2152) · 2023-11-26T15:38:03.324Z · LW(p) · GW(p)

Update: I kinda regret this comment. I think when I wrote it I didn’t realize quite how popular the “Let’s figure out what Q* is!!” game is right now. It’s everywhere, nonstop.

It still annoys me as much as ever that so many people in the world are playing the “Let’s figure out what Q* is!!” game. But as a policy, I don’t ordinarily complain about extremely widespread phenomena where my complaint has no hope of changing anything. Not a good use of my time. I don’t want to be King Canute yelling at the tides. I un-downvoted. Whatever.

↑ comment by Zvi · 2023-11-24T01:34:12.039Z · LW(p) · GW(p)

How far does this go? Does this mean if I e.g. had stupid questions or musings about Q learning, I shouldn't talk about that in public in case I accidentally hit upon something or provoked someone else to say something?

Replies from: steve2152

↑ comment by Steven Byrnes (steve2152) · 2023-11-24T04:47:31.787Z · LW(p) · GW(p)

None of the things you mention seem at all problematic to me. I'm mostly opposed to the mood of "Ooh, a puzzle! Hey let's all put our heads together and try to figure it out!!" Apart from that mood, I think it's pretty hard to go wrong in this case, unless you're in a position to personally find a new piece of the puzzle yourself. If you're just chatting with no particular domain expertise, ok sure maybe you'll inspire some idea in a reader, but that can happen when anyone says anything. :-P

↑ comment by Person (person-1) · 2023-11-23T15:57:52.106Z · LW(p) · GW(p)

I also think the thing in question is not in fact an extremely important breakthrough that paves the path to imminent AGI anyway

Could you explain this assessment please? I am not knowledgeable at all on the subject, so I cannot intuit the validity of the breakthrough claim.

comment by James Payor (JamesPayor) · 2023-11-23T16:18:15.000Z · LW(p) · GW(p)

OpenAI spokesperson Lindsey Held Bolton refuted it:

"refuted that notion in a statement shared with The Verge: “Mira told employees what the media reports were about but she did not comment on the accuracy of the information.”"

The reporters describe this as a refutation, but this does not read to me like a refutation!

comment by [deleted] · 2023-11-23T04:24:30.081Z · LW(p) · GW(p)

If this is about work related to Q-learning for small models, I would not expect OpenAI to achieve better results than DeepMind, since the latter has much deeper expertise in reinforcement learning, and has been committed to this direction since the start.

Replies from: qweered, Christopher Galias, wassname

↑ comment by qweered · 2023-11-23T06:31:34.370Z · LW(p) · GW(p)

OpenAI was full-on RL in 2015-18, until transformers were discovered.

Replies from: gwern

↑ comment by gwern · 2023-12-06T20:22:27.860Z · LW(p) · GW(p)

It was, but think about how much turnover there has been and how long ago that was. The majority of OAers have been there for maybe a year or two*, never mind 5 years (at the tail end!) or counting defections to Anthropic etc. (And then there is the tech stack: All of that DRL work was on GCP, not Azure. And it was in Tensorflow, not PyTorch. It used RNNs, not Transformers. Essentially, none of that code is usable or even runnable by now without extensive maintenance or rewrites.) While DM has been continuously doing DRL of some sort the entire time, with major projects like AlphaStar or their multi-agent projects.

* possibly actually less than a year, since there's numbers like '200-300' for 2022/2023, while there are 700+ on the letter. Considering that the OA market cap tripled or more in that hiring interval, people there must feel like they won the lottery...

↑ comment by Christopher Galias · 2023-11-23T09:15:25.729Z · LW(p) · GW(p)

John Schulman is at OpenAI.

↑ comment by wassname · 2023-11-24T01:22:20.061Z · LW(p) · GW(p)

OpenAI sometimes gets there faster. But I think other players will catch up soon, if it's a simple application of RL to LLM's.

Replies from: gwern

↑ comment by gwern · 2023-11-24T01:57:17.369Z · LW(p) · GW(p)

other players will catch up soon, if it's a simple application of RL to LLM's.

simple != catch-up-soon

'Simply apply this RL idea to LLMs' is much more useless than it seems. People have been struggling to apply RL methods to LLMs, or reinventing them the hard way, for years now; it's super obvious that just prompting a LLM and then greedily sampling is a hilariously stupid-bad way to sample a LLM, and using better sampling methods has been a major topic of discussion of everyone using LLMs since at least GPT-2. It just somehow manages to work better than almost anything else. Sutskever has been screwing around with self-play and math for years since GPT-2, see GPT-f etc. But all the publicly-known results have been an incremental grind... until now?

So the application may well be simple, perhaps a tiny tweak of an equation somewhere and people will rush to pull up all the obscure work which preceded it and how 'we knew it all along'*, but that's an entirely different thing from it being easy to reinvent.

* Cowen's second law - happened with AlphaZero, incidentally, with 'expert iteration'. Once they had invented expert iteration from scratch, suddenly, everyone could come up with a dozen papers that it 'drew on' and showed that it was 'obvious'. (Like a nouveau riche buying an aristocratic pedigree.)

Replies from: None

↑ comment by [deleted] · 2023-11-24T06:42:51.785Z · LW(p) · GW(p)

I agree, which is why I don't expect OpenAI to do better. If both teams are tweaking equations here and there, then based on their prior work I expect DeepMind to do it more efficiently. OpenAI has been historically luckier, but luck is not a quality I would extrapolate in time.

Replies from: gwern

↑ comment by gwern · 2023-11-24T14:35:40.282Z · LW(p) · GW(p)

You may not expect OA to do better (neither did I, even if I expected someone somewhere to crack the problem within a few years of GPT-3), but that's not the relevant fact here, now that you have observed that apparently there's this "Q*" & "Zero" thing that OAers are super-excited about. It was hard work and required luck, but apparently they got lucky. It is what it is. ('Update your priors using the evidence to obtain a new posterior', as we like to say around here.)

How much does that help someone else get lucky? Well, it depends on how much they leak or publish. If it's like the GPT-3 paper, then yeah, people can replicate it quickly and are sufficiently motivated these days that they probably will. If it's like the GPT-4 "paper", well... Knowing someone else has won the lottery of tweaking equations at random here & there doesn't help you win the lottery yourself.

(The fact that self-play or LLM search of some sort works is not that useful - we all knew it has to work somehow! It's the critical details that are the secret sauce that probably matters here. How exactly does their particular variant thread the needle's eye to avoid diverging or plateauing etc? Remember Karpathy's law: "neural nets want to work". So even if your approach is badly broken, it can mislead you for a long time by working better than it has any right to.)

comment by Rodrigo Heck (rodrigo-heck-1) · 2023-11-23T03:22:29.925Z · LW(p) · GW(p)

The hypothesis of a breakthrough having happened crossed my mind, but the only thing that didn't seem to fit into this narrative was Ilya regretting his decision. If such a new technology has emerged and he thinks Sam is not the man to guide the company in this new scenario, why would he reverse his position? It doesn't seem wise given what is assumed to be at stake.

Replies from: gwern, quailia

↑ comment by gwern · 2023-11-23T03:30:57.540Z · LW(p) · GW(p)

the only thing that didn't seem to fit into this narrative was Ilya regretting his decision.

But you know what happened there (excerpts), and it seems clear that it had little to do with any research:

Sutskever flipped his position following intense deliberations with OpenAI employees as well as an emotionally charged conversation with Greg Brockman’s wife, Anna Brockman, at the company’s offices, during which she cried and pleaded with him to change his mind, according to people familiar with the matter...It isn’t clear what else influenced Sutskever’s decision to reverse course. Sutskever was the officiant at the Brockmans’ wedding in 2019.

EDIT: and why he flipped to begin with [LW(p) · GW(p)] - appears mostly or entirely unrelated to any 'Q*'

Replies from: D0TheMath

↑ comment by Garrett Baker (D0TheMath) · 2023-11-23T07:43:41.618Z · LW(p) · GW(p)

This link has significantly decreased my confusion about why Sutskever flipped! That situation sounds messy enough, and easy enough for MSM to misunderstand, that it sounds likely to have occurred. Thanks!

↑ comment by quailia · 2023-11-23T04:50:00.175Z · LW(p) · GW(p)

The safety/risk calculus changed for him. It was now a decision between Sam and 700 employees working unshackled for MSFT or bringing Sam back to OpenAI with a new board, the former being way more risky for humanity as a whole in his mind.

comment by Ben Pace (Benito) · 2023-11-24T02:54:07.581Z · LW(p) · GW(p)

This post has a bunch of comments and was linked from elsewhere, so I've gone through and cleaned up the formatting a bunch.

In future, please

- Name the source in the post, rather than just providing a link that the reader has to click through to find who's speaking

- Use the quotes formatting, so readers can easily distinguish between quotes and your text

- Format the quotes as they were originally, do not include your own sentences like "Aligns with DeepMind Chief AGI scientist Shane Legg saying:" in a way that reads as though they were part of the original quote, nor cut tweets together and skip over the replies as though they were a single quote.

comment by RogerDearnaley (roger-d-1) · 2023-11-26T22:22:00.899Z · LW(p) · GW(p)

One plausible-looking possibility would be something based on or developed from the "Q* Search" algorithm introduced in the paper "A* Search Without Expansions: Learning Heuristic Functions with Deep Q-Networks" — which is indeed a combination of the A* path-finding algorithm and Q-learning. It seems like it would be applicable in an environment like grade-school math that has definitively-correct answers for your Q-learning to work back from.
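As a rough illustration of the idea described in that paper (as understood here; this is not the paper's implementation): a Q-function scores every action from a state in a single call, estimating step cost plus remaining cost, so the frontier can hold (state, action) pairs and a child state is only generated when its pair is popped. The toy task below (reach a target integer with +1 and ×2) and the hand-written `q_values` function standing in for a trained deep Q-network are assumptions for illustration only.

```python
import heapq
import math

MOVES = {"inc": lambda v: v + 1, "dbl": lambda v: v * 2}


def q_values(value, target):
    """Hypothetical stand-in for a trained deep Q-network. One call scores
    every action from `value` as (step cost + estimated remaining steps).
    A real network would output these numbers without simulating the moves;
    this hand-written version simulates them only to stay self-contained."""
    scores = {}
    for name, move in MOVES.items():
        nxt = move(value)
        # Overshooting can never be repaired, so score it as infinitely costly.
        if nxt > target:
            scores[name] = math.inf
        else:
            scores[name] = 1 + math.ceil(math.log2(target / nxt))
    return scores


def q_star_search(start, target):
    """Best-first search over (state, action) pairs instead of states."""
    if start == target:
        return [start]
    # Entries: (priority, cost so far, path to parent, pending action).
    frontier = []
    for action, q in q_values(start, target).items():
        heapq.heappush(frontier, (q, 0, [start], action))
    seen = {start}
    while frontier:
        priority, cost, path, action = heapq.heappop(frontier)
        if math.isinf(priority):
            break  # only dead ends remain
        child = MOVES[action](path[-1])  # generated only when popped
        if child == target:
            return path + [child]
        if child in seen:
            continue
        seen.add(child)
        for next_action, q in q_values(child, target).items():
            entry = (cost + 1 + q, cost + 1, path + [child], next_action)
            heapq.heappush(frontier, entry)
    return None


solution = q_star_search(1, 10)
```

Compared with plain A*, the number of heuristic calls scales with the number of states popped rather than the number of children generated, which matters when the action space is large.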

comment by niknoble · 2023-11-23T03:38:35.441Z · LW(p) · GW(p)

Relevant quote from Altman after the firing:

“I think this will be the most transformative and beneficial technology humanity has yet invented,” Altman said, adding later, “On a personal note, four times now in the history of OpenAI, the most recent time was just in the last couple of weeks, I’ve gotten to be in the room when we push … the veil of ignorance back and the frontier of discovery forward.”

Replies from: Evan R. Murphy

↑ comment by Evan R. Murphy · 2023-11-23T05:54:30.252Z · LW(p) · GW(p)

Yes though I think he said this at APEC right before he was fired (not after).

Replies from: gwern

↑ comment by gwern · 2023-11-25T21:41:18.240Z · LW(p) · GW(p)

Yes, well before. Here is the original video: https://www.youtube.com/watch?v=ZFFvqRemDv8&t=815s

comment by Bijin Regi panicker (bijin-regi-panicker) · 2023-11-25T19:13:01.701Z · LW(p) · GW(p)

qstar.py created using Q-star algorithm from openai