Munk AI debate: confusions and possible cruxes

post by Steven Byrnes (steve2152) · 2023-06-27T14:18:47.694Z · 21 comments


There was a debate on the statement “AI research and development poses an existential threat” (“x-risk” for short), with Max Tegmark and Yoshua Bengio arguing in favor, and Yann LeCun and Melanie Mitchell arguing against. The YouTube link is here, and a previous discussion on this forum is here.

The first part of this blog post is a list of five ways that I think the two sides were talking past each other. The second part is some apparent key underlying beliefs of Yann and Melanie, and how I might try to change their minds.[1]

While I am very much on the “in favor” side of this debate, I didn’t want to make this just a “why Yann’s and Melanie’s arguments are all wrong” blog post. OK, granted, it’s a bit of that, especially in the second half. But I hope people on the “anti” side will find this post interesting and not-too-annoying.

Five ways people were talking past each other

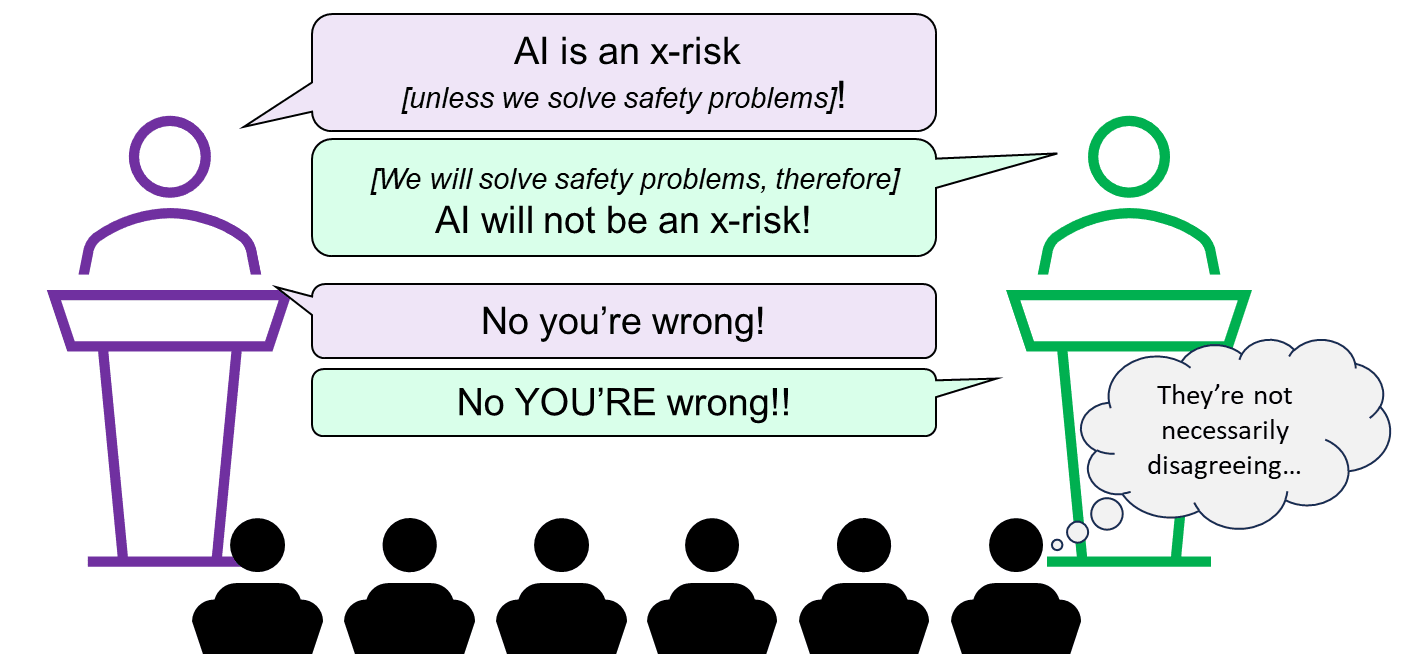

1. Treating efforts to solve the problem as exogenous or not

This subsection doesn’t apply to Melanie, who rejected the idea that there is any existential risk in the foreseeable future. But Yann suggested that there was no existential risk because we will solve it; whereas Max and Yoshua argued that we should acknowledge that there is an existential risk so that we can solve it.

By analogy, fires tend not to spread through cities because the fire department and fire codes keep them from spreading. Two perspectives on this are:

- If you’re an outside observer, you can say that “fires can spread through a city” is evidently not a huge problem in practice.

- If you’re the chief of the fire department, or if you’re developing and enforcing fire codes, then “fires can spread through a city” is an extremely serious problem that you’re thinking about constantly.

I don’t think this was a major source of talking-past-each-other, but it added a nonzero amount of confusion.

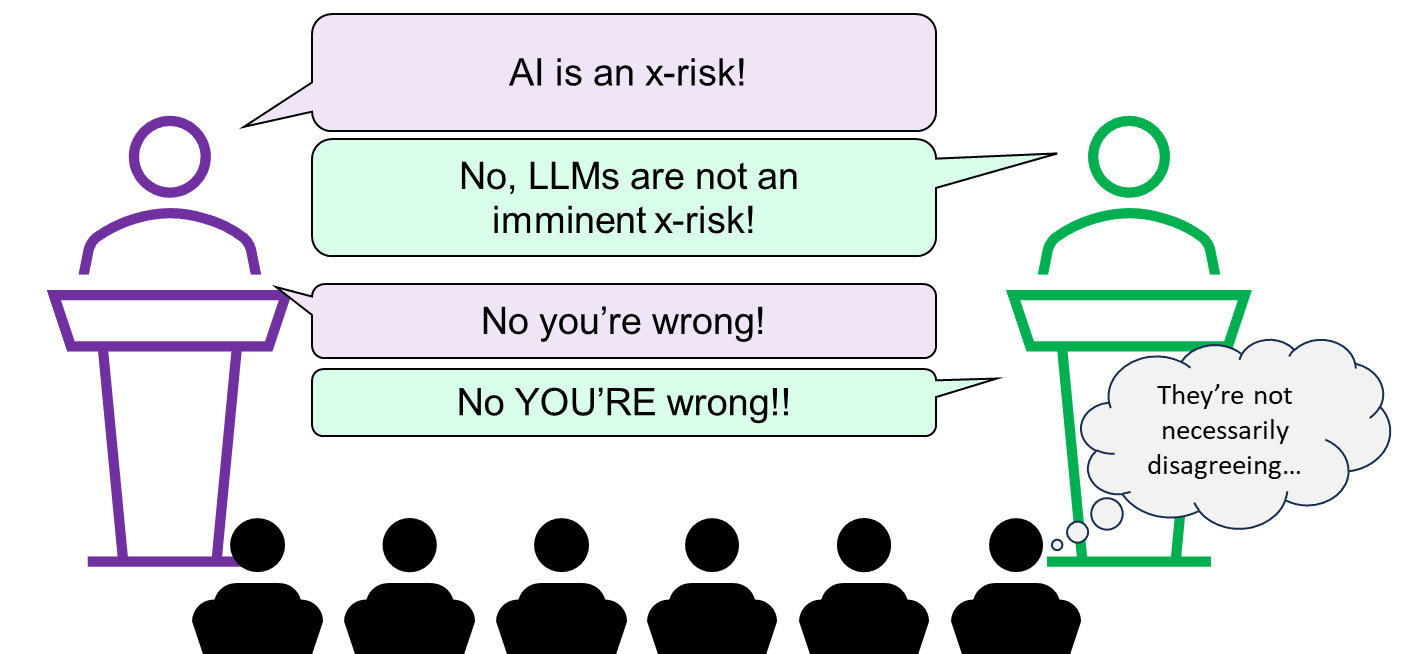

2. Ambiguously changing the subject to “timelines to x-risk-level AI”, or to “whether large language models (LLMs) will scale to x-risk-level AI”

The statement under debate was “AI research and development poses an existential threat”. This statement does not refer to any particular line of AI research, nor any particular time interval. The four participants’ positions in this regard seemed to be:

- Max and Yoshua: Superhuman AI might happen in 5-20 years, and LLMs have a lot to do with why a reasonable person might believe that.

- Yann: Human-level AI might happen in 5-20 years, but LLMs have nothing to do with that. LLMs have fundamental limitations. But other types of ML research could get there—e.g. my (Yann’s) own research program.

- Melanie: LLMs have fundamental limitations, and Yann’s research program is doomed to fail as well. The kind of AI that might pose an x-risk will absolutely not happen in the foreseeable future. (She didn’t quantify how many years is the “foreseeable future”.)

It seemed to me that all four participants (and the moderator!) were making timelines and LLM-related arguments, in ways that were both annoyingly vague, and unrelated to the statement under debate.

(If astronomers found a giant meteor projected to hit the earth in the year 2123, nobody would question the use of the term “existential threat”, right??)

As usual (see my post AI doom from an LLM-plateau-ist perspective), this area was where I had the most complaints about people “on my side”, particularly Yoshua getting awfully close to conceding that under-20-year timelines are a necessary prerequisite to being concerned about AI x-risk. (I don’t know if he literally believes that, but I think he gave that impression. Regardless, I strongly disagree, more on which later.)

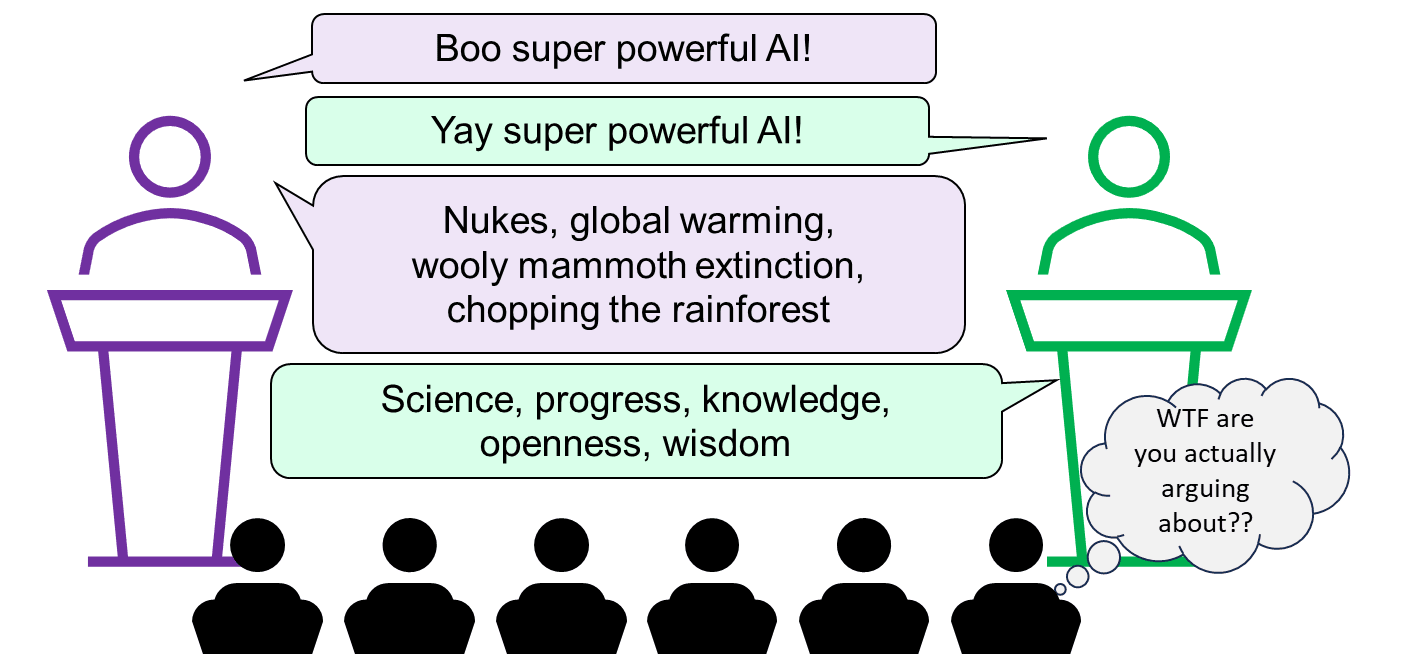

3. Vibes-based “meaningless arguments”

I recommend in the strongest possible terms that everyone read the classic Scott Alexander blog post Ethnic Tension And Meaningless Arguments. Both sides were playing this game. (By and large, they were not doing this instead of making substantive arguments, but rather they were making substantive arguments in a way that simultaneously conveyed certain vibes.) It’s probably a good strategy for winning a debate. It’s not a good way to get at the truth.

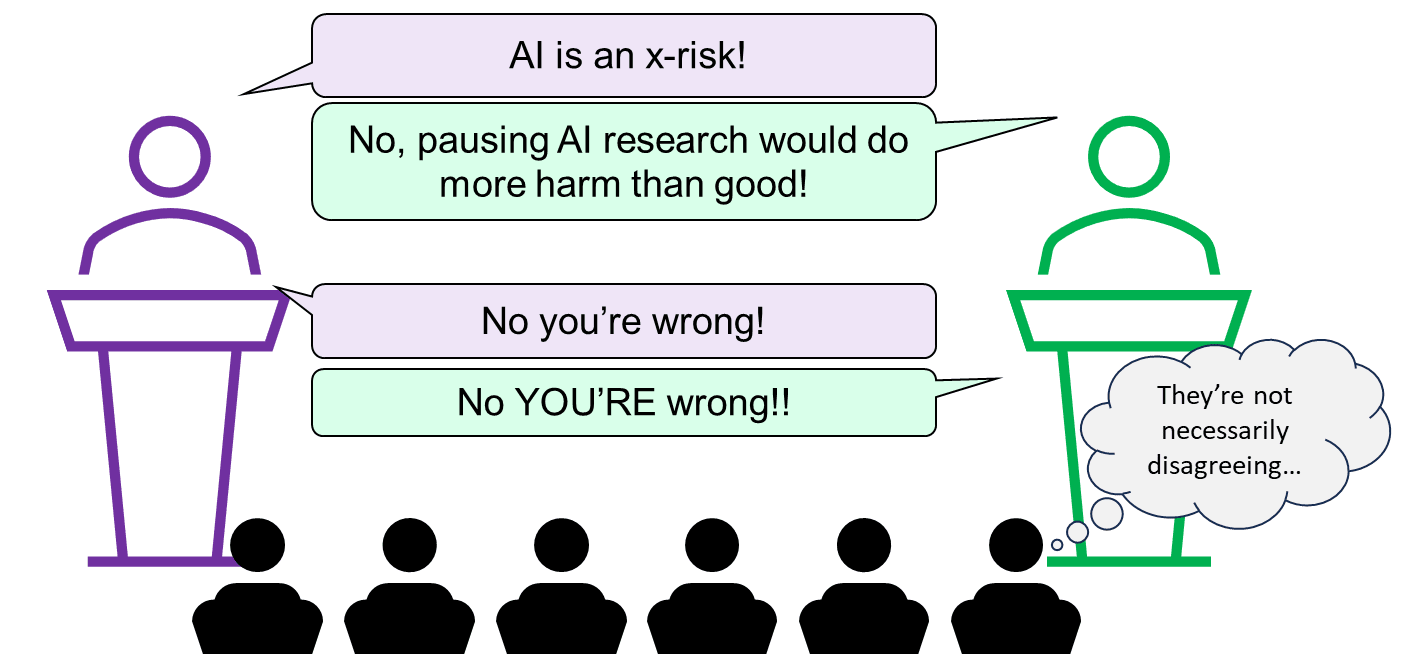

4. Ambiguously changing the subject to policy

The question of what, if anything, to do about AI x-risk really ought to be separate from, and downstream of, the question of whether AI x-risk exists in the first place. Alas, all four debaters and the moderator frequently changed the subject to what policies would be good or bad, without anyone seeming to notice.

Regrettably, in the public square, people often seem to treat the claim “I think X is a big problem” as all-but-synonymous with “I think the government needs to do something big and aggressive about X, like probably ban it”. But they’re different claims!

(I think alcoholism is a big problem, but I sure don’t want a return to Prohibition. I think misinformation is a big problem, but I sure don’t want an end to Free Speech. More to the point, I myself think AI x-risk is a very big problem, but I happen to be weakly against the recently-proposed ban on large ML training runs.)

All four debaters on the stage were brilliant scientists. They’re plenty smart enough to separately track beliefs about the world, versus desires about government policy. I wish they had done better on that.

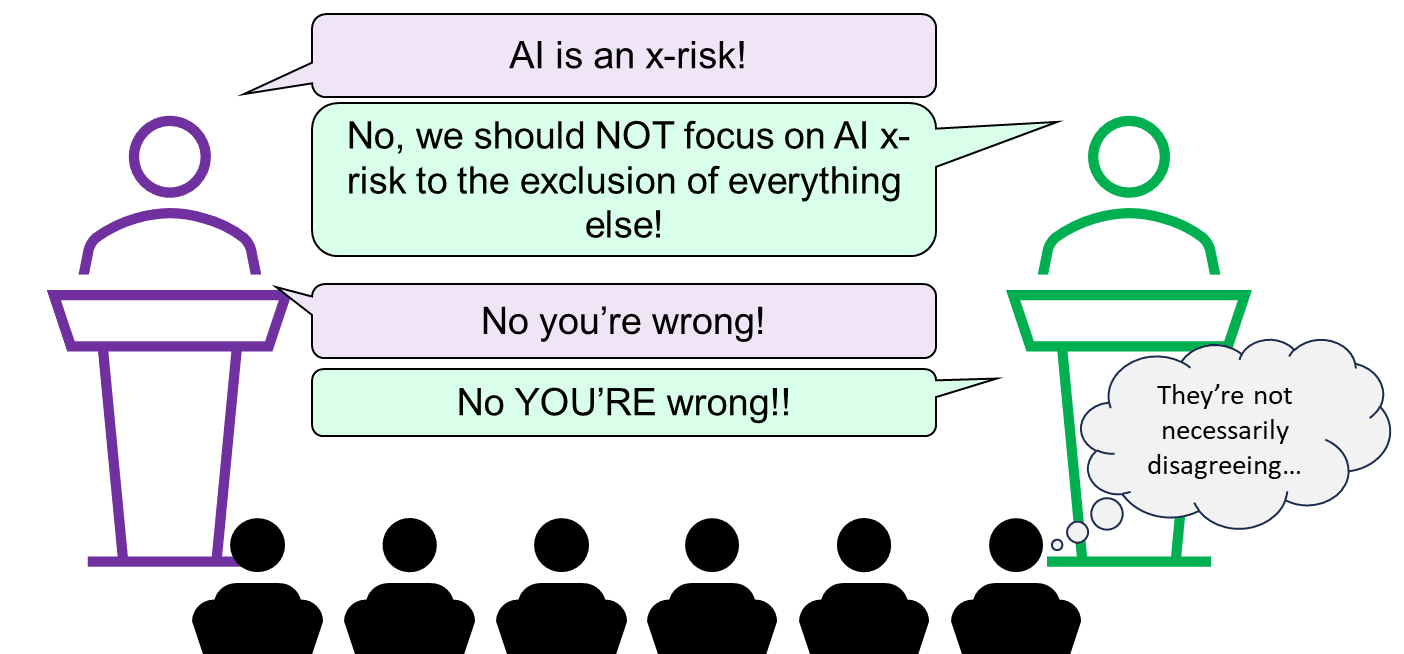

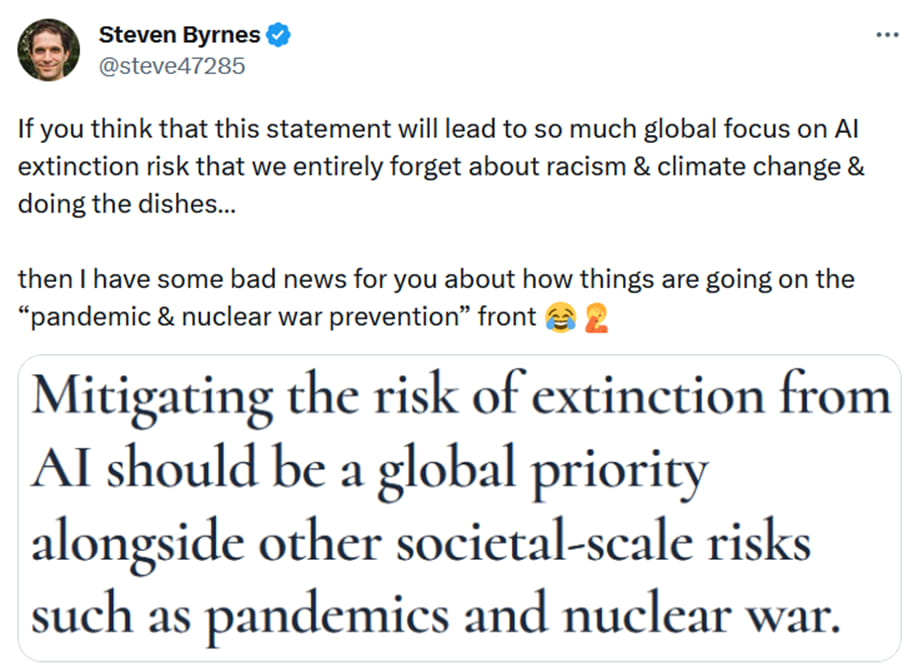

5. Ambiguously changing the subject to Cause Prioritization

Melanie pushed this line hard, suggesting in both her opening and closing statements that even talking about the possibility that AI might be an existential risk was a dangerous distraction from real, immediate problems such as AI misinformation and bias.

I really don’t like this argument. I’ll pause for three bullet points to say why.

- For one thing, if we use that logic, then everything distracts from everything. You could equally well say that climate change is a distraction from the obesity epidemic, and the obesity epidemic is a distraction from the January 6th attack, and so on forever. In reality, this is silly—there is more than one problem in the world! For my part, if someone tells me they’re working on nuclear disarmament, or civil society, or whatever, my immediate snap reaction is not to say “well that’s stupid, you should be working on AI x-risk instead”, rather it’s to say “Thank you for working to build a better future. Tell me more!”

- For another thing, immediate AI problems are not an entirely different problem from possible future AI x-risk. Some people think they’re extremely related; see, for example, Brian Christian’s book The Alignment Problem. I don’t go as far as he does, but I do see some overlap. For example, both social media recommendation algorithm issues and out-of-control-AI issues are (on my models) exacerbated by the fact that huge trained ML models are very difficult to interpret and inspect.

- And lastly, this argument is assuming the falsehood of the debate resolution, rather than arguing for it. If AI in fact has a >10% chance of causing human extinction in the next 50 years (as I believe) then, well, it sure isn’t crazy to treat that as a problem worthy of attention! Yes, even if it were a distraction from other extremely serious societal problems!! Conversely, if AI in fact has a 1-in-a-gazillion chance of causing human extinction in the next 50 years, then obviously there’s no reason to think about that possibility. So, what’s the right answer? Is it more like “>10%”, or more like “1-in-a-gazillion”? That’s the important question here.

Some possible cruxes of why I and Yann / Melanie disagree about x-risk

Yann LeCun

Probably the biggest single driver of why Yann and I disagree is: Yann thinks he knows, at least in broad outline, how to make a subservient human-level AI. And I think his proposed approach would not actually work, but would instead lead to human-level AIs that are pursuing their own interests with callous disregard for humanity. I have various technical reasons for believing that, spelled out in my post LeCun’s “A Path Towards Autonomous Machine Intelligence” has an unsolved technical alignment problem.

If Yann could be sold on that technical argument, then I think a lot of his other talking points would go away too. For example, he argues that good people will use their powerful good AIs to stop bad people from making powerful bad AIs. But that doesn’t work if there are no powerful good AIs, which is possible if nobody knows how to solve the technical alignment problem! More discussion in the epilogue of that post.

Melanie Mitchell

If I were trying to bridge the gap between me and Melanie, I think my priority would be to try to convince her of some or all of the following four propositions:

A. “The field of AI is more than 30 years away from X” is not the kind of claim that one should make with 99%+ confidence

I mean, 30 years is a long time. A lot can happen. Thirty years ago, deep learning was an obscure backwater within AI, and meanwhile people would brag about how their fancy new home computer had a whopping 8 MB of RAM.

Melanie thinks that LLMs are a dead end. That’s fine; as it happens, I agree. But it’s important to remember that the field of AI is broad. Not every AI researcher is working on LLMs, nor even on deep learning, even today! So, do you think getting to human-level intelligence requires a better understanding of causal inference? Well, Judea Pearl and a bunch of other people are working on that right now. Do you think it requires a hardcoded intuitive physics module? Well, Josh Tenenbaum and a bunch of other people are working on that right now. Do you think it requires embodied cognition and social interactions? Do you think it requires some wildly new paradigm beyond our current imagination? People are working on those things too! And all that is happening right now. Who knows what the AI research community is going to be doing in 2040?

To be clear, I am equally opposed to putting 99%+ confidence on the opposite kind of claim, “the field of AI is LESS than 30 years away from X”. I just think we should be pretty uncertain here.

B. …And 30 years is still part of the “foreseeable future”

For example, in climate change, people routinely talk about bad things that might happen in 2053—and even 2100 and beyond! And looking backwards, our current climate change situation would be even worse if not for prescient investments in renewable energy R&D made more than 30 years ago.

People also routinely talk 30 years out or more in the context of building infrastructure, city planning, saving for retirement, etc. Indeed, here is an article about a US military program that’s planning out into the 2080s!

C. “Common sense” has an ought-versus-is split, and it’s possible to get the latter without the former

For example, “mice are smaller than elephants” is an “is” aspect of common sense, and “it’s bad to murder your friend and take his stuff” is an “ought” aspect of common sense. I find it regrettable that the same phrase “common sense” refers to both these categories, because they don’t inevitably go together. High-functioning human sociopaths are an excellent example of how it is possible for there to be an intelligent agent who is good at the “is” aspects of common sense, and is aware of the “ought” aspects of common sense, but is not motivated by the “ought” aspects. The same can be true in AI; in fact, that’s what I expect without specific effort and new technical ideas.

D. It is conceivable for something to be an x-risk without there being any nice clean quantitative empirically-validated mathematical model proving that it is

This is an epistemology issue, I think best illustrated via example. So (bear with me): suppose that the astronomers see a fleet of alien spaceships in their telescopes, gradually approaching Earth, closer each year. I think it would be perfectly obvious to everyone that “the aliens will wipe us out” should be on the table as a possibility, in this hypothetical scenario. Don’t ask me to quantify the probability!! But “the probability is obviously negligible” is clearly the wrong answer.

And yet: can anyone provide a nice clean quantitative model, validated by existing empirical data, to justify the claim that “this incoming alien fleet, the one that we see in our telescopes right now, might wipe us out” is a non-negligible possibility? I doubt it![2] Doesn’t mean it’s not true.

Well anyway, forecasting the future is very hard (though not impossible). But to do it, we need to piece together whatever scraps of evidence and reason we have. We can’t restrict ourselves to one category of evidence, e.g. “neat mathematical models that have already been validated by empirical data”. I like those kinds of models as much as anyone! But sometimes we just don’t have one, and we need to do the best we can to figure things out anyway.

None of this is to say that we should treat future advanced AI as an x-risk on a lark for no reason whatsoever! See the epilogue here for one example: a pretty basic common-sense argument that we should be thinking about AI x-risk at all. That’s just a start, though. Getting to probabilities of extinction in different scenarios is much more of a slog, e.g. we have to start talking about the technical challenges of making aligned AIs, and the social and governance challenges, and then things like offense-defense balance, and compute requirements, and fast versus slow “takeoff”, and on and on. There are tons of reasons and arguments and evidence that can be brought to bear in every part of that discussion! But again, they are not the specific type of evidence that Melanie seems to be demanding, i.e. mathematical models that have already been validated by empirical data, and which can be directly applied to calculate a probability of human extinction.

(Thanks Karl von Wendt for critical comments on a draft.)

[1] Note: For the purpose of this post, I’m mostly assuming that all four debaters were by-and-large saying things that they believe, in good faith, as opposed to trying to win the debate at all costs. Call me naïve.

[2] Hmm, the best I can think of (in this hypothetical) is that we could note that “Earth species have sometimes wiped each other out”. And we could try to make a mathematical model based on that. And we could use the past history of extinctions as empirical data to validate that model. But the extraterrestrial UFO fleet is pretty different! We’re on shaky ground applying that same model in such a different situation.

And anyway, if someone makes a quantitative model based on “Earth species have sometimes wiped each other out”, and if that counts as “empirically validated” justification for calling the incoming alien fleet an x-risk, then I claim that very same quantitative model should count as “empirically validated” justification for calling future advanced AI an x-risk too! After all, future advanced AI will probably have a lot in common with a new intelligent nonhuman species, I claim.

21 comments


comment by Aryeh Englander (alenglander) · 2023-06-28T03:35:04.013Z

The more I think about this post, the more I think it captures my frustrations with a large percentage of the public discourse on AI x-risks, and not just this one debate event.

↑ comment by Seth Herd · 2023-06-29T01:14:53.856Z

I think so too, and I'm going to keep these points in mind when talking to risk skeptics. I wish others would too.

I couldn't bear to watch this particular debate, since the summary made it clear that little communication happened. Debates are a terrible way to arrive at the truth, since they put people into a soldier mindset. But even many conversations that don't start as debates turn into them, and investigating these cruxes could help turn them back into honest discussions in search of understanding.

comment by Ratios · 2023-06-28T12:38:39.555Z

> For one thing, if we use that logic, then everything distracts from everything. You could equally well say that climate change is a distraction from the obesity epidemic, and the obesity epidemic is a distraction from the January 6th attack, and so on forever. In reality, this is silly—there is more than one problem in the world! For my part, if someone tells me they’re working on nuclear disarmament, or civil society, or whatever, my immediate snap reaction is not to say “well that’s stupid, you should be working on AI x-risk instead”, rather it’s to say “Thank you for working to build a better future. Tell me more!”

Disagree with this point - cause prioritization is super important. For a radical example: imagine the government spending billions to rescue one man from Mars while neglecting much more cost-efficient causes. Bad actors use the trick of focusing on unimportant but controversial issues to keep everyone from noticing how they are being exploited routinely. Demanding sane prioritization of public attention is extremely important and valid. The problem is that we as a society don't have norms and common knowledge around it (and even have memes specifically against it, like whataboutism), but the fact that it's not being done consistently doesn't mean that we shouldn't do it.

↑ comment by Steven Byrnes (steve2152) · 2023-06-28T13:52:24.448Z

I once wrote a longer and more nuanced version that addresses this (copied from footnote 1 of my Response to Blake Richards post last year):

> One could object that I’m being a bit glib here. Tradeoffs between cause areas do exist. If someone decides to donate 10% of their income to charity, and they spend it all on climate change, then they have nothing left for heart disease, and if they spend it all on heart disease, then they have nothing left for climate change. Likewise, if someone devotes their career to reducing the risk of nuclear war, then they can’t also devote their career to reducing the risk of catastrophic pandemics and vice-versa, and so on. So tradeoffs exist, and decisions have to be made. How? Well, for example, you could just try to make the world a better place in whatever way seems most immediately obvious and emotionally compelling to you. Lots of people do that, and I don’t fault them for it. But if you want to make the decision in a principled, other-centered way, then you need to dive into the field of Cause Prioritization, where you (for example) try to guess how many expected QALYs could be saved by various possible things you can do with your life / career / money, and pick one at or near the top of the list. Cause Prioritization involves (among other things) a horrific minefield of quantifying various awfully-hard-to-quantify things like “what’s my best-guess probability distribution for when AGI will arrive?”, or “exactly how many suffering chickens are equivalently bad to one suffering human?”, or “how do we weigh better governance in Spain against preventing malaria deaths?”. Well anyway, I’d be surprised if Blake has arrived at his take here via one of these difficult and fraught Cause-Prioritization-type analyses. And I note that there are people out there who do try to do Cause Prioritization, and AFAICT they very often wind up putting AGI Safety right near the top of their lists.
>
> I wonder whether Blake’s intuitions point in a different direction than Cause Prioritization analyses because of scope neglect? As an example of what I’m referring to: suppose (for the sake of argument) that out-of-control AGI accidents have a 10% chance of causing 8 billion deaths in the next 20 years, whereas dumb AI has 100% chance of exacerbating income inequality and eroding democratic norms in the next 1 year. A scope-sensitive, risk-neutral Cause Prioritization analysis would suggest prioritizing the former, but the latter might feel intuitively more panic-inducing.
>
> Then maybe Blake would respond: “No you nitwit, it’s not that I have scope-neglect, it’s that your hypothetical is completely bonkers. Out-of-control AGI accidents do not have a 10% chance of causing 8 billion deaths in the next 20 years; instead, they have a 1-in-a-gazillion chance of causing 8 billion deaths in the next 20 years.” And then I would respond: “Bingo! That’s the crux of our disagreement! That’s the thing we need to hash out: is it more like 10% or 1-in-a-gazillion?” And this question is unrelated to the topic of bad actors misusing dumb AI.
>
> [For the record: The 10% figure was just an example. For my part, if you force me to pick a number, my best guess would be much higher than 10%.]

Why didn’t I put something like the above into this post? Because my sense was that this is a digression in this context—it’s not cutting to the heart of where Melanie was coming from. Like, I’m in favor of cause prioritization as much as anyone, but I didn’t have the impression that Melanie is signed onto the project of Cause Prioritization and trying in good faith to push forward that project.

I was thinking about it more last night and am now thinking it’s kinda political. In short:

Advocating for a cause is also implicitly raising the status of the group of people / movements associated with that cause, and that’s zero-sum just as much as philanthropic dollars are.

But whereas practically nobody cares about what it means for philanthropic dollars to be zero-sum (except us nerds who care about Cause Prioritization), everybody sure cares a whole lot about what it means for inter-group status competition to be zero-sum.

But that’s all kinda going on in the background, usually covered up by rationalizations, I think. Not sure what to do about it. :(

comment by dr_s · 2023-06-28T14:58:37.794Z

I find arguing about timelines also frustrating for the reason that it has a huge asymmetry.

Suppose you have a probability distribution over the times at which AGI might appear, which is the most you can honestly claim to have (if you claim to have a single precise date, either you're a prophet or you don't know what you're talking about).

The AI safety argument only needs to demonstrate that the total P(T < 20 years) is higher than, say, 5% to argue that AI safety isn't just a problem, it's a relatively urgent problem.

The AI optimism argument needs to argue that P(T < 20 years) or even P(T < 50 years) is essentially zero, because otherwise the consequences are potentially so massive it really takes very little to offset even a low probability. How do you do that? I understand thinking that AGI is probably kinda far off. How do you become absolutely sure that AGI can't possibly be around the corner? I mean willing-to-bet-and-be-shot-in-the-head-if-you-lose sure? Because that's what we're talking about here. I don't see how any intellectually honest technologist can be that confident about such a hard-to-predict thing.

There are very few things I'd be willing to bet my life on. Specific-ish dates for conceivably feasible technological developments are not one of them. And if you do accept that AGI is possible, and you do think that it's powerful and in fact worth seeking, and you justify your work by saying it will revolutionize the world, but you also deny that it can possibly be correspondingly dangerous, then you're just not a serious person.

↑ comment by Roman Leventov · 2023-06-30T10:22:16.681Z

"Willing-to-bet-and-be-shot-in-the-head-if-you-lose" starts to look a little too much like Pascal's Mugging. By the same token, we could make arguments that unimaginably high suffering (S-risk) is happening right now, which seems plausible enough that nobody would be willing to bet their life against it, and the implication of these arguments might in fact be that AI should mostly wipe out complex life on Earth and start from scratch. I.e., this would suggest that consciousness and ethics research is an even higher priority than, or at least an equal priority to, the more "orthodox" style of AI x-risk concern, which takes the existence of humans (or at least of complex life) on Earth as an unshakable assumption (prior).

But in general, I agree with the sentiment of your comment.

↑ comment by dr_s · 2023-06-30T21:50:00.854Z

I say it just to mean that the level of certainty required is quite high. Even if you count only human deaths and not all the rest of the value lost, with 8 billion people, a 0.0001% chance of extinction is an expected value of 8,000 deaths. People would usually be quite careful about boldly stating something that can get 8,000 people killed! Anyone who's trying to argue that "no worries, it'll be fine" has a much higher burden of proof for that reason alone, IMO, especially if they want to essentially dodge altogether the argument for why it might not, and simply rely on "there's no way we'll invent AGI that soon anyway", which many people do.
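For readers who want to check the figure, here is a minimal Python sketch of the expected-value arithmetic. It assumes, as the comment does, that only the roughly 8 billion people alive today count, ignoring future generations and any other lost value; `expected_deaths` and `percent_to_probability` are hypothetical helper names for illustration, not from any library.

```python
# Minimal sketch of the expected-value arithmetic in the comment above.
# Assumption: only people alive today count (8 billion, rounded); future
# generations and all other lost value are ignored, as in the comment.

WORLD_POPULATION = 8_000_000_000


def expected_deaths(p_extinction: float, population: int = WORLD_POPULATION) -> float:
    """Expected deaths from an event that kills everyone with probability p_extinction."""
    return p_extinction * population


def percent_to_probability(percent: float) -> float:
    """Convert a percentage such as 0.0001 (%) into a probability (here about 1e-6)."""
    return percent / 100


p = percent_to_probability(0.0001)  # 0.0001% is a one-in-a-million chance
print(round(expected_deaths(p)))    # 8000
```

Because the expected toll scales linearly with the probability, even a probability that sounds negligible leaves thousands of expected deaths once it is multiplied by the whole population, which is the comment's point about the burden of proof.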

comment by M. Y. Zuo · 2023-06-27T16:11:58.454Z

Thanks for the interesting writeup.

> It seemed to me that all four participants (and the moderator!) were making timelines and LLM-related arguments, in ways that were both annoyingly vague, and unrelated to the statement under debate.
>
> (If astronomers found a giant meteor projected to hit the earth in the year 2123, nobody would question the use of the term “existential threat”, right??)

The giant meteor likely wouldn't be questioned in that way if multiple independent groups (e.g. NASA, Roscosmos, ESA, CNSA) announced it with a decent probability (maybe at least a 5% to 10% chance?) and publicized their calculations.

It's a lot more difficult for LLM timeline arguments because there are no existing guaranteed-to-be-independent-from-each-other groups with a comparable level of reputation and credibility to stake.

↑ comment by Roman Leventov · 2023-06-30T10:26:18.931Z

Even if something precluded astronomers from making principled calculations of the impact probability up until five years before the date of the hit, and before that the maximum that they could say would be "we cannot confidently rule out the possibility of an impact", this would still be treated like an existential threat.

comment by Ilio · 2023-06-28T05:08:19.352Z

Great post, even from my not-necessarily-disagreeing position. It helps me consolidate the update that it’s not necessarily useless to start preparing in case another unexpected revolution hits.

It feels like it’s accelerating, right?

One disagreement I think we should be able to clear is: should we evaluate p(x-risk caused by AIs) in isolation? or should we normalize by p(x-risk avoided by AIs)?

Fun fact: I happen to suspect you might be the one LW regular closer to designing a true AGI, so maybe it’s good you play safe. 😉

↑ comment by Steven Byrnes (steve2152) · 2023-06-28T14:09:58.683Z

Thanks!

I think we should try to form correct beliefs about p(x-risk caused by AIs), and we should also try to form correct beliefs about p(x-risk avoided by AIs), and then we should make sensible decisions in light of those beliefs. I don’t see any reason to combine those two probabilities into a single “yay AI versus boo AI” axis—see Section 3 above! :)

For example, if p(x-risk caused by AIs) is high, and p(x-risk avoided by AIs) is even higher, then we should brainstorm ways to lower AI x-risk other than “stop doing AI altogether forever” (as if that were a feasible option in the first place!); and we should also talk about what those other x-risks are, and whether there are non-AI ways to mitigate them; and we should also talk about how both those probabilities might change if we somehow make AGI happen N years later (or N years sooner), etc. Whereas if p(x-risk caused by AIs) is ≈0, we would be asking different questions and facing different tradeoffs. Right?

↑ comment by Ilio · 2023-06-28T18:17:06.322Z

[on second thought some material removed for better usage]

> I think we should try to form correct beliefs about p(x-risk caused by AIs), and we should also try to form correct beliefs about p(x-risk avoided by AIs), and then we should make sensible decisions in light of those beliefs.

Hear! Hear!

> Whereas if p(x-risk caused by AIs) is ≈0, we would be asking different questions and facing different tradeoffs. Right?

I don’t know. It doesn’t feel like that’s why I choose to spend some time checking these questions in the first place.

You know what it feels like when your plane is accelerating and you start to feel like something is going to happen, then you remember: of course, it’s a takeoff.

Well, imagine you still feel acceleration after the takeoff. First, you guess it’s too hard to evaluate the distance, but you know it must stop once you reach cruising speed. Right? After one hour, you still feel acceleration, but that must be a mistake, because a Fermi estimate says you can’t be accelerating at this point. Right? Then it accelerates more. Right? This is how I have felt since I found out about convolution, ReLU, AlphaGo, AlphaZero, « Attention is all you need », and its descendants.

comment by Abhinav Srivastava (abhinav-srivastava) · 2023-07-24T14:32:10.606Z

> (If astronomers found a giant meteor projected to hit the earth in the year 2123, nobody would question the use of the term “existential threat”, right??)

I'm wondering if you believe that AGI risk is equivalent to a giant meteor hitting the Earth, or was that just a throwaway analogy? This helps me get a better idea of where x-risk concern-havers (?) stand on the urgency of the risk. /gen

Thanks

↑ comment by Steven Byrnes (steve2152) · 2023-07-24T18:31:47.621Z

I was just making a narrow point: the term “existential threat” does not generally have a connotation of “imminent”.

An analysis in The Precipice concludes that the risk of extinction via “natural” asteroid or comet impact is around 1 in 1,000,000 in the next century. I think the probability of human extinction via AI in the next century is much much much much higher than 1 in 1,000,000. If you force me to pick a number, I would say something above 50% in the next 30 years. That’s just my opinion though. There’s quite a range of opinions in the field. I generally think it’s pretty hard to say, within a pretty broad range, at least in my present state of knowledge. However, if someone says it’s below 1% in the next century, then I feel very strongly that they have not thought it through sufficiently carefully, and are overlooking important considerations.

comment by Jonah Wilberg (jrwilb@googlemail.com) · 2023-06-28T23:00:00.942Z

Very useful post, thanks. While the 'talking past each other' is frustrating, the 'not necessarily disagreeing' suggests the possibility of establishing surprising areas of consensus. And it might be interesting to explore further what exactly that consensus is. For example:

Yann suggested that there was no existential risk because we will solve it

I'm sure the air of paradox here (because you can't solve a problem that doesn't exist) is intentional, but if we drill down, should we conclude that Yann actually agrees that there is an existential risk (just that the probabilities are lower than other estimates, and less worth worrying about)? Yann sometimes compares the situation to the risk of designing a car without brakes, but if the car is big enough that crashing it would destroy civilization, that still kinda sounds like an existential risk.

I'm also not sure the fire department analogy helps here: as you note later, Yann thinks (in his paper) that he knows in outline how to solve the problem and 'put out the fires', so it's not an exogenous view. It seems more like the difference between a fire chief who thinks their job is easy and one who thinks it's hard, though everyone agrees that fires spreading would be a big problem.

↑ comment by Steven Byrnes (steve2152) · 2023-06-29T00:24:25.238Z

Yeah, like I said, I don’t think that one in particular was a major dynamic, just one thing I thought worth mentioning. I think one could rephrase what they said slightly to get a very similar disagreement minus the talking-past-each-other.

Like, for example, if every human jumped off a cliff simultaneously, that would cause extinction. Is that an “x-risk”? No, because it’s never going to happen. We don’t need any “let’s not all simultaneously jump off a cliff” activist movements, or any “let’s not all simultaneously jump off a cliff” laws, or any “let’s not all simultaneously jump off a cliff” fields of technical research, or anything like that.

That’s obviously a parody, but Yann is kinda in that direction regarding AI. I think his perspective is: We don’t need activists, we don’t need laws, we don’t need research. Without any of those things, AI extinction is still not going to happen, just because that’s the natural consequence of normal human behavior and institutions doing normal stuff that they’ve always done.

I think “Yann thinks he knows in outline how to solve the problem” is maybe giving the wrong impression here. I think he thinks the alignment problem is just a really easy problem with a really obvious solution. I don’t think he’s giving himself any credit. I think he thinks anyone looking at the source code for a future human-level AI would be equally capable of making it subservient with just a moment’s thought. His paper didn’t really say “here’s my brilliant plan for how to solve the alignment problem”, the vibe was more like “oh and by the way you should obviously choose a cost function that makes your AI kind and subservient” as a side-comment sentence or two. (Details here [LW · GW])

↑ comment by Noosphere89 (sharmake-farah) · 2023-06-29T01:45:17.283Z

Re how hard alignment is, I suspect it's harder in my median world than Yann LeCun thinks, but probably a lot easier than most LWers think, and in particular I assign some probability mass to the hypothesis that in practice, alignment of superhuman AI is a non-problem.

This means that while I disagree with Yann LeCun, and in general find my side (anti-doomerism/accelerationism) to be quite epistemically unsound at best, I do suspect that there are object level reasons to believe the alignment of superhuman systems is way easier than most LWers think, including you.

To give the short version, instrumental convergence is probably going to be constrained for purely capabilities-related reasons, and in particular, unconstrained instrumental goals learned via RL usually fail, or at best produce something useless. This means it will be reasonably easy to add constraints to instrumental goals, and the usual thought experiment of a squiggle maximizer basically collapses, because such a maximizer won't have the capabilities. This implies that we are dealing with an easier problem, since we aren't starting from zero, and in its strongest form, this argument basically transforms alignment into a non-adversarial problem like nuclear safety, which we solve easily and rather well.

Or in slogan form: "Fewer constraints on instrumental goals is usually not good for capabilities, and the human case is probably a result of just not caring about efficiency, plus time scales. We should expect AI systems to have more constraints on instrumental goals for both capability and alignment reasons."