Credence polls for 26 claims from the 2019 Review

post by Bird Concept (jacobjacob) · 2021-01-09T07:13:24.166Z · LW · GW · 2 comments

This post is a whirlwind tour of claims made in the LessWrong 2019 Review. In some cases, the claim is literally quoted from the post. In others, I have tried operationalising it into something more falsifiable. For example:

Book Review: The Secret of Our Success [LW · GW]

Overall, treat the claims in this post more like polls, and less like the full-blown forecasting questions you'd find on Metaculus or PredictIt. (The latter have extremely high bars for crisp definitions.) They point in a direction, but don't completely pin it down.

This is an experiment: I'm trying to find interesting ways for people to relate to the Review.

Maybe speeding through these questions gets you thinking good thoughts that you can then turn into full-blown reviews? Maybe others' answers allow you to find a discussion partner who disagrees on a core question? Maybe the data will be useful in the voting phase?

We'll see!

Feel free to leave a comment about how you found the experience, if you want.

If you want to discuss the questions with others over a call, you can do so during the Review forecasting sessions [LW · GW] we're organising this weekend (January 9-10).

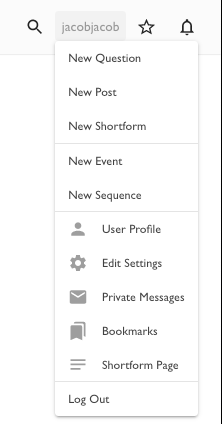

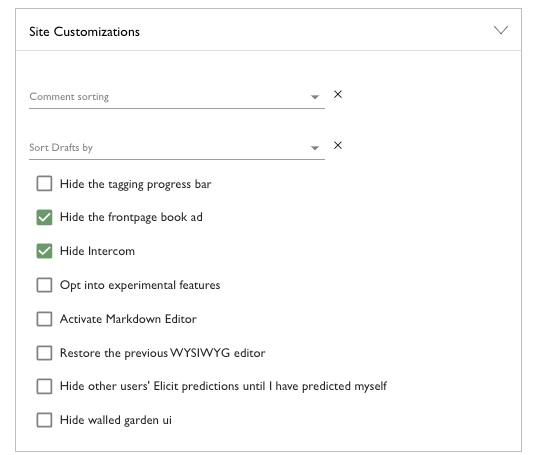

If you want to hide other users' predictions until you've made your own, here's how to do that:

Make More Land [LW · GW]

Making more land out of the about 50 mi² of shallow water in the San Francisco Bay, south of the Dumbarton Bridge, would...

Why Wasn't Science Invented in China? [LW · GW]

The Strategy-Stealing Assumption [LW · GW]

Becoming the Pareto-best in the World [LW · GW]

The Hard Work of Translation [LW · GW]

The Forces of Blandness and the Disagreeable Majority [LW · GW]

Bioinfohazards [LW · GW]

Two explanations for variation in human abilities [LW · GW]

Reframing Impact [? · GW]

These questions are quite technical, and might be hard to answer if you're unfamiliar with the terminology used in TurnTrout's sequence on Impact Measures [? · GW].

---

(Note that when you answer questions in this summary post, your answers will automatically update the prediction questions that I have linked in comments on each individual post. The distributions will later be visible when users are voting to rank the posts.)

2 comments

Comments sorted by top scores.

comment by TurnTrout · 2021-01-09T21:30:30.248Z · LW(p) · GW(p)

Speaking of claims made in 2019 review posts: Conclusion to 'Reframing Impact' [? · GW] (the final post of my nominated Reframing Impact [? · GW] sequence) contains the following claims and credences:

- AU theory describes how people feel impacted. I'm darn confident (95%) that this is true.

- Agents trained by powerful RL algorithms on arbitrary reward signals generally try to take over the world. Confident (75%). The theorems on power-seeking only apply to optimal policies in fully observable environments, which isn't realistic for real-world agents. However, I think they're still informative. There are also strong intuitive arguments for power-seeking.

- The catastrophic convergence conjecture [? · GW] is true. Fairly confident (70%). There seems to be a dichotomy between "catastrophe directly incentivized by goal" and "catastrophe indirectly incentivized by goal through power-seeking", although Vika provides intuitions in the other direction [LW(p) · GW(p)].

- AUP prevents catastrophe, assuming the catastrophic convergence conjecture. Very confident (85%).

- Some version of AUP solves side effect problems for an extremely wide class of real-world tasks and for subhuman agents. Leaning towards yes (65%).

- For the superhuman case, penalizing the agent for increasing its own AU is better than penalizing the agent for increasing other AUs. Leaning towards yes (65%).

- There exists a simple closed-form solution to catastrophe avoidance (in the outer alignment sense). Pessimistic (35%).

↑ comment by Bird Concept (jacobjacob) · 2021-01-09T23:44:16.629Z · LW(p) · GW(p)

Ey, awesome! I've updated the post to include them.