Two explanations for variation in human abilities

post by Matthew Barnett (matthew-barnett) · 2019-10-25T22:06:26.329Z · 28 comments


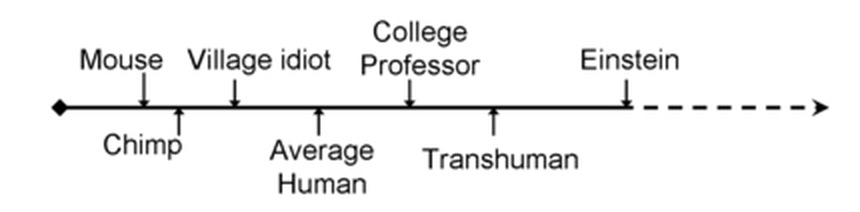

In "My Childhood Role Model," Eliezer Yudkowsky argues that people often think about intelligence like this, with the village idiot and chimps on the left and Einstein on the right.

However, he says, this view is too narrow. All humans have nearly identical hardware. Therefore, on the true scale of possible minds, the village idiot and Einstein sit almost on top of each other.

This alternative view has implications for how long an AI takeoff would take. If you imagine that AI will slowly crawl from village idiot to Einstein, then presumably we will have ample time to see powerful AI coming. On the other hand, if the second view is correct, then the intelligence of computers is more likely to swoosh right past human level once it reaches the village idiot stage. Or as Nick Bostrom put it, "The train doesn't stop at Humanville Station."

Katja Grace disagrees, finding little reason to believe that human abilities vary only slightly. Her evidence comes from measuring human performance on various strategically relevant indicators: chess, go, checkers, physical manipulation, and Jeopardy.

In this post, I argue that the debate is partly based on a misunderstanding: in particular, a conflation of learning ability and competence. I further posit that once the two are disentangled, the remaining variation we observe isn't that surprising. Similar machines regularly show large variation in performance when some of their parts are broken, despite having nearly identical components.

These ideas are not original to me (see the Appendix). I have simply put together two explanations which, in my opinion, account for a large fraction of the observed variation.

Explanation 1: Distinguish learning from competence

Humans, despite our great differences in other regards, still mostly learn things the same way. We listen to lectures at roughly the same pace, we read at mostly the same speeds, and we process thoughts in similar-sized chunks. To the extent that people speed up lectures to 2x on YouTube, or speed-read, they lose retention.

And putting aside tall tales of people learning quantum mechanics over a weekend, it turns out to be surprisingly difficult for humans to beat the strategy of long-term focused practice for becoming an expert at some task.

From this, we have an important insight: the range of learning abilities in humans is relatively small. There don't really seem to be humans who tower above us in terms of their ability to soak up new information and process it.

This prompts the following hypothetical objection:

Are you sure? If I walk into a graduate-level mathematics course without taking the prerequisites seriously, then I'm going to be far behind the other students. While they learn the material, I will be struggling to understand even the most basic concepts in the course.

Yes, but that's because you haven't taken the prerequisites. Doing well in a course is the product of learning ability and competence at connecting the material to prior knowledge. If you separate learning ability from competence, you will see that your learning ability is still quite similar to that of the other students.
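In symbols (the letters are introduced here for illustration and are not the author's notation), write $P$ for how well you do in the course, $L$ for learning ability, and $C$ for competence at connecting the material to prior knowledge. Comparing yourself with a classmate then splits cleanly into two factors:

$$P = L \times C \qquad\Longrightarrow\qquad \frac{P_{\text{you}}}{P_{\text{classmate}}} = \frac{L_{\text{you}}}{L_{\text{classmate}}} \times \frac{C_{\text{you}}}{C_{\text{classmate}}}$$

Skipping the prerequisites makes $C_{\text{you}}$ small, so the performance ratio can fall far below 1 even when $L_{\text{you}} \approx L_{\text{classmate}}$. A gap in $P$ on its own does not say which factor produced it.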

Eric Drexler makes this distinction in Reframing Superintelligence:

Since Good (1966), superhuman intelligence has been equated with superhuman intellectual competence, yet this definition misses what we mean by human intelligence. A child is considered intelligent because of learning capacity, not competence, while an expert is considered intelligent because of competence, not learning capacity. Learning capacity and competent performance are distinct characteristics in human beings, and are routinely separated in AI development. Distinguishing learning from competence is crucial to understanding both prospects for AI development and potential mechanisms for controlling superintelligent-level AI systems.

Let's consider this distinction in light of one of the cases that Katja pointed to above: chess. I find it easy to believe that Magnus Carlsen would beat me handily in chess, even while playing upside-down and blindfolded. But does this mean that Magnus Carlsen is vastly more intelligent than me?

Well, no, because Magnus Carlsen has spent several hours a day playing chess ever since he was 5, and I've spent roughly 0 hours a day. A fairer comparison is with other human experts who have spent the same amount of time practicing chess. There you might still see a lot of variation (see the next explanation), but not nearly as much as between Magnus Carlsen and me.
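A toy simulation makes the point concrete. It is a sketch under made-up assumptions, not data: learning rates are drawn from a narrow normal distribution (about 10% spread), hours of practice from a wide log-normal one, and competence is modeled as simply rate times hours. The function names and parameters are hypothetical.

```python
import random
import statistics

def coefficient_of_variation(xs):
    """Standard deviation divided by mean: a unit-free measure of spread."""
    return statistics.stdev(xs) / statistics.mean(xs)

def simulate(n_people=10_000, seed=0):
    rng = random.Random(seed)
    # Assumption: learning rates cluster tightly around 1.0 (roughly 10% spread).
    rates = [max(0.0, rng.gauss(1.0, 0.1)) for _ in range(n_people)]
    # Assumption: hours of practice vary enormously (log-normal, long right tail),
    # from people who barely played to people who played daily since age 5.
    hours = [rng.lognormvariate(5.0, 1.5) for _ in range(n_people)]
    # Toy model: competence = learning rate * hours practiced.
    competence = [r * h for r, h in zip(rates, hours)]
    # Controlling for practice: competence per hour gives back the learning rate.
    per_hour = [c / h for c, h in zip(competence, hours)]
    return (
        coefficient_of_variation(rates),
        coefficient_of_variation(competence),
        coefficient_of_variation(per_hour),
    )

rate_cv, competence_cv, per_hour_cv = simulate()
print(f"spread in learning rate:         {rate_cv:.2f}")
print(f"spread in competence:            {competence_cv:.2f}")
print(f"spread after dividing out hours: {per_hour_cv:.2f}")
```

Under these assumptions, competence varies many times more than learning rate, and dividing competence by hours practiced recovers the narrow spread. Whether real learning rates are actually this tightly clustered is the empirical question this theory raises.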

This theory can also be empirically tested. In my mind there are (at least) two formulations of the theory:

One version states, roughly, that when background knowledge and motivation are equal, humans will learn how to perform new tasks at roughly equal rates.

Another version of this theory states, roughly, that everything top humans can learn, most humans could learn too if they actually tried. That is, there is a psychological unity of humankind in what we can learn, but not necessarily in what we have learned. By contrast, a mouse really couldn't learn chess, even if it tried. And in turn, no human can learn to play 90-dimensional chess, unlike the hypothetical superintelligences that can.

You can also use this framework to cast the intelligence of machine learning systems in a new light. AlphaGo took a hundred million games to grow to the competence of Lee Sedol. But Lee Sedol has only played about 50,000 games in his life.
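The gap in sample efficiency fits in a single number. This uses only the post's own rough figures (a hundred million games for AlphaGo, about 50,000 for Lee Sedol), which are order-of-magnitude estimates:

```python
# Figures as stated in the post; both are rough, order-of-magnitude numbers.
alphago_games = 100_000_000
lee_sedol_games = 50_000

ratio = alphago_games / lee_sedol_games
print(f"AlphaGo needed roughly {ratio:,.0f}x as many games as Lee Sedol")
# prints: AlphaGo needed roughly 2,000x as many games as Lee Sedol
```

On these figures, Lee Sedol reached comparable competence with roughly 2,000 times fewer games, which is a difference in learning rather than in final competence.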

Point being, when we talk about AI getting more advanced, we're really mostly talking about what type of tasks computers can now learn, and how quickly. Hence the name machine learning...

Explanation 2: Similar architecture does not imply similar performance

Someone reading the above discussion might object, saying:

That seems right... but I still feel like there are some people who can't learn calculus or learn to code, no matter how hard they try. And I don't feel like this is just them not taking the prerequisites seriously, or a lack of motivation. I feel like it's a fundamental limitation in their brain, and this produces a large amount of variation in learning ability.

I agree with this to an extent. However, I think that there is a rather simple explanation for this phenomenon, in fact the same one that Katja Grace pointed to in her article:

Why should we not be surprised? [...] You can often make a machine worse by breaking a random piece, but this does not mean that the machine was easy to design or that you can make the machine better by adding a random piece. Similarly, levels of variation of cognitive performance in humans may tell us very little about the difficulty of making a human-level intelligence smarter.

In the extreme case, we can observe that brain-dead humans have cognitive architectures very similar to those of living humans. But this does not mean that it is easy to start from an AI at the level of a dead human and reach one at the level of a living human.

Imagine lining up 100 identical machines that produce widgets. At the beginning they all produce widgets at the same pace. But if I go to each machine and break a random part -- a different one for each machine -- some will stop working completely. Others will continue working, but will sometimes spontaneously fail. Others will slow down their performance, and produce fewer widgets. Others still will continue unimpeded since the broken part was unimportant. This is all despite the fact that the machines are nearly identical in design space!
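The thought experiment is easy to simulate. This is a toy under invented assumptions: every machine shares the same hypothetical 20-part design, and the share of parts whose failure stops, interrupts, slows, or does not affect the machine is made up for illustration, as are the 100-widgets-per-hour rate and the 50% hourly failure chance.

```python
import random
from collections import Counter

# Hypothetical design: every machine has the same 20 parts. What happens when
# each part breaks is an assumption made up for illustration.
PART_EFFECTS = (
    ["stops"] * 3            # output drops to zero
    + ["intermittent"] * 4   # spontaneously fails some of the time
    + ["slower"] * 5         # keeps working at reduced speed
    + ["harmless"] * 8       # the broken part was unimportant
)

BASE_RATE = 100  # widgets per hour for an intact machine (assumed)

def widgets_per_hour(effect, rng):
    """Output of a machine with the given broken part during one hour."""
    if effect == "stops":
        return 0
    if effect == "intermittent":
        # Assumed: a 50% chance of failing in any given hour.
        return BASE_RATE if rng.random() < 0.5 else 0
    if effect == "slower":
        return BASE_RATE // 2
    return BASE_RATE  # "harmless"

def run_line(n_machines=100, hours=8, seed=0):
    """Break one random part in each identical machine; return daily output."""
    rng = random.Random(seed)
    outputs = []
    for _ in range(n_machines):
        effect = PART_EFFECTS[rng.randrange(len(PART_EFFECTS))]
        outputs.append(sum(widgets_per_hour(effect, rng) for _ in range(hours)))
    return outputs

outputs = run_line()
print("min:", min(outputs), "max:", max(outputs))
print(Counter(outputs).most_common(5))
```

Every machine runs the identical design, yet one broken part per machine spreads a day's output from zero to full capacity. Nothing in that spread tells you how hard it would be to design a better machine, which is the point of the quoted argument.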

If a human cannot learn calculus, even after trying their hardest and putting in the hours, I would attribute this to a learning deficit. In other words, they have a 'broken part.' Learning deficits can be small things: I often procrastinate on Reddit rather than doing tasks that I should be doing. People's minds drift off when they're listening to lectures because they find the material boring.

The brightest people are the ones who can use the full capacity of their brains to learn the task. In other words, the baseline of human performance is set by people who have no 'broken parts.' Among those people, the people with no harmful mutations or cognitive deficits, I expect human learning ability to be extremely similar. Unfortunately there aren't really any humans who fit this description, and thus we see some variation, even for humans near the top.

Appendix

What about other theories?

I have seen numerous proposed theories. Here are a few posts:

Why so much variance in human intelligence? by Ben Pace. This question prompted the community to find reasons for the above phenomenon. None of the answers quite matched my response, which is why I wrote this post. Still, I think a few of the replies were onto something and are worth reading.

Where the Falling Einstein Meets the Rising Mouse, a post on SlateStarCodex. Scott Alexander introduces a few of his own theories, such as the idea that humans are simply light-years above animals in abilities. While this theory seems plausible in some domains, I did not ultimately find it compelling. In light of the learning ability distinction, however, I do think we are light-years above many animals in learning.

The range of human intelligence by Katja Grace. Her post prompted me to write this one. I agree that there is a large variation in human abilities, but I felt that the lack of a coherent distinction between learning and competence ultimately made the argument rest on a confusion. As for whether this alters our perception of a more continuous takeoff, I am not so sure. I think the implications are non-obvious.

Where did the learning/competence distinction come from?

To the best of my knowledge, Eric Drexler kindled the idea. However, I do know that many people have made the distinction in the past; I just haven't really seen it applied to this debate in particular.

Footnote: I do think that most people are probably able to learn calculus, and how to code. I agree with Sal Khan, who says:

If we were to go 400 years into the past to Western Europe, which even then, was one of the more literate parts of the planet, you would see that about 15 percent of the population knew how to read. And I suspect that if you asked someone who did know how to read, say a member of the clergy, "What percentage of the population do you think is even capable of reading?" They might say, "Well, with a great education system, maybe 20 or 30 percent." But if you fast forward to today, we know that that prediction would have been wildly pessimistic, that pretty close to 100 percent of the population is capable of reading. But if I were to ask you a similar question: "What percentage of the population do you think is capable of truly mastering calculus, or understanding organic chemistry, or being able to contribute to cancer research?" A lot of you might say, "Well, with a great education system, maybe 20, 30 percent."

Edit: I want to point out that this optimism is not a crux for my main argument.

28 comments


comment by avturchin · 2019-10-26T10:09:59.250Z

Another way to look at human intelligence is to assume that it is a property of humanity as a whole, and much less of individual humans. If Einstein had been born in a forest as a feral child, he would not have been able to demonstrate much of his intelligence. Only because he received special training was he able to build his theory of special relativity as an increment on already existing theories.

↑ comment by Viliam · 2019-10-26T22:47:09.164Z

If I take this literally, it should be relatively simple to generate hundreds of Einsteins or hundreds of John von Neumanns. I mean, taking a hundred random healthy kids and giving them the best education should be easily within the powers of any larger country.

Actually, any billionaire could easily do it, and there could even be a financial incentive for him to do so: offer these new Einsteins work when they finish their studies. (They would probably be happy to work alongside the other Einsteins.)

↑ comment by avturchin · 2019-10-27T11:15:14.137Z

This was tested in the chess world. A family decided to teach their kids chess from early childhood and created three grandmasters (the Polgár sisters) https://www.psychologytoday.com/intl/articles/200507/the-grandmaster-experiment (But not one Kasparov, so some variability in personal capabilities obviously exists.)


↑ comment by Viliam · 2019-10-27T17:21:57.405Z

Polgár was an awesome parent, but I believe he seriously underestimated (in fact, completely dismissed) the effect of IQ. He should have checked his genetic privilege. On the other hand, it seems like the "hundred Einsteins" experiment could still work if you started with kids above, e.g., IQ 130 (or kids of parents with high IQ, so you can start the interventions early without worrying about measuring IQ at a very young age). Two percent of the population is still a lot, in absolute numbers.

Unfortunately, I am not a billionaire, so my enthusiasm about this project is irrelevant.

↑ comment by avturchin · 2019-10-27T19:20:12.711Z

Yes, they had Hungarian-Jewish ancestry, the same background as the many geniuses nicknamed "the Martians."

↑ comment by leggi · 2019-10-28T06:15:01.482Z

I try and imagine what I'd have been like born into another life. How I'd act, what I'd think without various events in my life.

Exposure to ideas at a young age was a big factor in my brain, methinks... The example that's been kicking around in my head (enough for me to consider looking into this shortform thingy the cool kids seem to have...):

Kids should see the stars.

Seeing the real night sky blew my mind, another level of awareness and thinking at a very young age.

I do wonder, how often do people see the stars these days?

What level of light pollution disconnects and isolates us from the universe just out there?

The curiosity stage should be stuffed full; kids are sponges that can grasp a lot, but they need the exposure... basic physics while finger-painting, basic chemistry when baking, anatomy pyjamas! Laying the building blocks. Find a kid's interests, their links to learning more about the world.

The opportunity for the creation of truly great (free access) educational material to 'teach the world' excites me, more understanding = more progress in my mind.

comment by habryka (habryka4) · 2020-12-15T06:22:08.242Z

I really like the breakdown in this post of splitting disagreements about variations in abilities into learning rate + competence, and have used it a number of times since it came out. I also think the post is quite clear and to the point about dissolving the question it set out to dissolve.

comment by Ben Pace (Benito) · 2020-12-11T05:23:54.112Z

I appreciated this post as an answer to my question, it helped me understand things marginally better.

comment by Ben Pace (Benito) · 2019-10-26T04:48:40.435Z

Thanks for the thoughtful post!

Can I suggest that you leave a link to this post as an answer to my original post? Or if not, I will, so that people there can find their way here.

comment by riceissa · 2019-10-26T01:42:19.001Z

Regarding your footnote, literacy rates depend on the definition of literacy used. Under minimal definitions, "pretty close to 100 percent of the population is capable of reading" is true, but under stricter definitions, "maybe 20 or 30 percent" seems closer to the mark.

https://en.wikipedia.org/wiki/Literacy#United_States

https://en.wikipedia.org/wiki/Functional_illiteracy#Prevalence

https://en.wikipedia.org/wiki/Literacy_in_the_United_States

"Current literacy data are generally collected through population censuses or household surveys in which the respondent or head of the household declares whether they can read and write with understanding a short, simple statement about one's everyday life in any written language. Some surveys require respondents to take a quick test in which they are asked to read a simple passage or write a sentence, yet clearly literacy is a far more complex issue that requires more information." http://uis.unesco.org/en/topic/literacy

I'm not sure why you are so optimistic about people learning calculus.

↑ comment by Matthew Barnett (matthew-barnett) · 2019-10-26T01:50:39.919Z

Thanks for the information. My understanding was just based on my own experience, which is probably biased. I assume that most people can read headlines and parse text. I find it hard to believe that only 20 to 30 percent of people can read, given that more than 30 percent of people are on social media, which requires reading things (and usually responding to them in a coherent way).

I'm not sure why you are so optimistic about people learning calculus.

My own experience is that learning calculus isn't that much more difficult than learning a lot of other skills, including literacy. We start learning how to read while young and learn calculus later, which makes us think reading is easier. However, I think hypothetically, we could push the number of people who learn calculus to similar levels as reading (which, as you note, might still be limited to a basic form for most people). :)

↑ comment by CronoDAS · 2019-11-04T15:44:08.789Z

Calculus itself isn't inherently more difficult to learn than earlier math, but it is where many math students hit a wall because it has a lot of prerequisites; if you were a mediocre student in algebra and trigonometry, there is a good chance that you will have trouble with calculus because you never completely mastered other skills that you have to use over and over again when doing calculus.

comment by Bird Concept (jacobjacob) · 2021-01-09T21:16:07.175Z

I made some prediction questions for this, and as of January 9th, there interestingly seems to be some disagreement with the author on these.

Would definitely be curious for some discussion between Matthew and some of the people with low-ish predictions. Or perhaps for Matthew to clarify the argument made on these points, and see if that changes people's minds.

↑ comment by Matthew Barnett (matthew-barnett) · 2021-01-10T00:11:45.794Z

I think it's important to understand that the two explanations I gave in the post can work together. After more than a year, I would state my current beliefs as something closer to the following thesis:

Given equal background and motivation, there is a lot less inequality in the rates at which humans learn new tasks, compared to the inequality in how humans perform learned tasks. By "less inequality" I don't mean "roughly equal," as your prediction specifications would indicate, because human learning rates are still highly unequal, despite the fact that nearly all humans have similar neural architectures. As I explained in section two of the post, a similar architecture does not imply similar performance. A machine with a broken part is nearly structurally identical to a machine with no broken parts, yet it does not work.

↑ comment by Vaniver · 2021-01-15T00:14:00.259Z

Given equal background and motivation, there is a lot less inequality in the rates at which humans learn new tasks, compared to the inequality in how humans perform learned tasks.

Huh, my guess is the opposite. That is, all expert plumbers are similarly competent at performing tasks, and the thing that separates a bright plumber from a dull plumber is how quickly they become expert.

Quite possibly we're looking at different tasks? I'd be interested in examples of domains where this sort of thing has been quantified and you see the hypothesized relationship (where variation in learning speed is substantially smaller than variation in performance). Most of the examples I can think of that seem plausible are exercise-related, where you might imagine people learn proper technique with a tighter distribution than the underlying strength distribution, but this is cheating by using intellectual and physical faculties as separate sources of variation.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-03-12T21:18:12.748Z

Huh, my guess is the opposite. That is, all expert plumbers are similarly competent at performing tasks, and the thing that separates a bright plumber from a dull plumber is how quickly they become expert.

I don't know much about plumbing, but a possible explanation here is that there's a low skill ceiling in plumbing. If we were talking about chess, or Go, or other tasks with high skill ceilings, it definitely seems incorrect that the brightest players won't be much better than the dullest players.

↑ comment by habryka (habryka4) · 2021-01-15T00:20:18.304Z

This also strikes me as backwards, and the literature seems to back this up. Learning rates seem to differ a lot between different people, and also be heavily g-loaded.

↑ comment by Matthew Barnett (matthew-barnett) · 2022-03-12T21:19:54.240Z

Do you have thoughts on what I said in my reply to Vaniver? I agree that learning rates differ between people, but I don't think that contradicts what I said? Perhaps you disagree. From my perspective, what matters more is whether learning rates have more or less variance than skill on learned tasks (especially those with high skill ceilings).

↑ comment by Bird Concept (jacobjacob) · 2021-01-10T00:25:12.787Z

Formulations are basically just lifted from the post verbatim, so the response might be some evidence that it would be good to rework the post a bit before people vote on it.

I thought a bit about how to turn Katja's core claim into a poll question, but didn't come up with any great ideas. Suggestions welcome.

As for whether the claims are true or not --

The "broken parts" argument is one counter-argument.

But another is that it matters a lot what learning algorithm you use. Someone doing deliberate practice (in a field where that's possible) will vastly outperform someone who just does "guessing and checking", or who Goodharts very hard on short-term metrics.

Maybe you'd class that under "background knowledge"? Or maybe the claim is that, modulo broken parts, motivation, and background knowledge, different people can meta-learn the same effective learning strategies?

↑ comment by Matthew Barnett (matthew-barnett) · 2021-01-10T17:29:38.711Z

Formulations are basically just lifted from the post verbatim, so the response might be some evidence that it would be good to rework the post a bit before people vote on it.

But I think I already addressed the fundamental reply at the beginning of section 2. The theses themselves are lifted from the post verbatim; however, I state that they are incomplete.

Maybe you'd class that under "background knowledge"? Or maybe the claim is that, modulo broken parts, motivation, and background knowledge, different people can meta-learn the same effective learning strategies?

I would really rather avoid making strict claims about learning rates being "roughly equal" and would prefer to talk about how, given the same learning environment (say, a lecture) and backgrounds, human learning rates are closer to equal than human performance in learned tasks.

comment by Gary Barnes (gary-barnes-1) · 2019-11-04T17:21:28.603Z

An interesting thesis, but I think your optimism about people's learning capacities comes from lack of experience; and I don't think the hardware analogy gets us far.

"There don't really seem to be humans who tower above us in terms of their ability to soak up new information and process it." This has not been shown for people in general.

"My understanding was just based on my own experience, which is probably biased." Correct me if I am wrong, but I get the impression that you have not spent much time with people who have difficulty learning to read, or to add up, or to remember what they seemed to learn yesterday. Trying to learn calculus is worlds away from them — not because of lack of exposure, but because even much simpler things are very very hard for them.

I suppose you can cast them as having 'broken parts', but I don't think that helps. They are people. They have much lower abilities to learn. Therefore, humans have a wide range of abilities to learn.

Trying to understand human thought by looking at AI — and vice versa — is interesting. But the map is not the territory, in either case.

We do not know in what ways people's 'hardware' differs. It is not hardware + software as we understand those things: it is wetware, and most of how intelligence and learning emerge from it is unknown. So we cannot say that the 'same' hardware produces different results: we do not have a useful way of defining "the same".

↑ comment by Matthew Barnett (matthew-barnett) · 2019-11-04T01:31:02.759Z

I should point out that the comment I made about being optimistic was a minor part of my post (a footnote, in fact). Many people have trouble learning because learning disorders are common, and more generally because people have numerous difficulties with grasping material (lack of focus, direction, or motivation, getting distracted easily, aversion to the material, low curiosity, innumeracy, etc.).

I suppose you can cast them as having 'broken parts', but I don't think that helps. They are people.

Having broken parts is not a moral judgement. In the next sentences I showed how I have similar difficulties.

Regardless, the main point of my first explanation was less of saying that everyone has a similar ability to learn, and more of saying that previous analysis didn't take into account that differences in abilities can be explained by accounting for differences in training. This is a very important point to make. Measuring performance on Go, Chess and other games like that misses the point -- and for the very reason I outlined.

ETA: Maybe it helps if we restrict ourselves to the group of people who are college educated and have no mental difficulties focusing on hard intellectual work. That way we can see more clearly the claim that "There don't really seem to be humans who tower above us in terms of their ability to soak up new information and process it."

comment by Stefan_Schubert · 2019-10-26T00:52:25.215Z

One aspect may be that the issues we discuss and try to solve are often at the limit of human capabilities. Some people are way better at solving them than others, and since those issues are so often in the spotlight, it looks like the less able are totally incompetent. But actually, they're not; it's just that the issues they are able to solve aren't discussed.

Cf. https://www.lesswrong.com/posts/e84qrSoooAHfHXhbi/productivity-as-a-function-of-ability-in-theoretical-fields

comment by limerott · 2019-10-28T18:44:41.519Z

In an experiment, a group of people who had never programmed before were shown how to code. At the end, their skills were evaluated in a coding test. The expectation was that their scores would be roughly normally distributed. However, the students turned out to cluster in two groups (within each of which you see the expected normal distribution). The students in the first group fared relatively well, while those in the second struggled. The researchers figured out the cause: the students who did better had formed a mental model of what a variable is (a container or a box that can hold values), while those who struggled hadn't. Are the students from the second group inherently less intelligent?

Yes, because they did not manage to find the right model on their own. No, because once they were provided a straightforward explanation, they were able to code just as well as the students in the better group.

↑ comment by philh · 2019-10-28T22:56:26.327Z

Do you have a citation for this?

I've heard a similar story, but different in some details, and in that story the second group did not end up competent programmers. And I think I've also heard that the story I first heard didn't replicate or something like that. So I don't know what to think.

↑ comment by limerott · 2019-10-29T03:32:37.241Z

I couldn't find it quickly, but I think I read this on Coding Horror.

↑ comment by Vaniver · 2019-10-29T21:54:24.068Z

Probably this post; this claim has been highly controversial. The original blog post cites a 2006 paper that was retracted in 2014, and whose author had written a meta-analysis supporting its conclusions in 2009. Here's some previous discussion (in 2012) on LW. Many people left comments to the effect of "bimodal scores are common in education," with relatively few of them citing anything to back that up, in a way that makes me suspect they're drawing on the original retracted paper.

↑ comment by limerott · 2019-10-31T17:16:29.821Z

I really appreciate you looking this up. Now it is clear that these claims are controversial.

Nevertheless, the question remains what learning ability refers to: is it the ability to comprehend learning material that explains the topic well, or the ability to come up with the simple explanations yourself? It seems that the OP refers to the latter. The first kind probably has lower variation. Also, this means that when measuring learning ability, you should ensure that all the people involved have access to the same "source". I would be interested in hearing the OP's thoughts on that.