MWI, weird quantum experiments and future-directed continuity of conscious experience

post by SforSingularity · 2009-09-18T16:45:47.741Z · LW · GW · Legacy · 92 comments


Response to: Quantum Russian Roulette

Related: Decision theory: Why we need to reduce “could”, “would”, “should”

In Quantum Russian Roulette, Christian_Szegedy tells of a game which uses a "quantum source of randomness" to somehow make a game which consists in terminating the lives of 15 rich people to create one very rich person sound like an attractive proposition. To quote the key deduction:

Then the only result of the game is that the guy who wins will enjoy a much better quality of life. The others die in his Everett branch, but they live on in others. So everybody's only subjective experience will be that he went into a room and woke up $750000 richer.

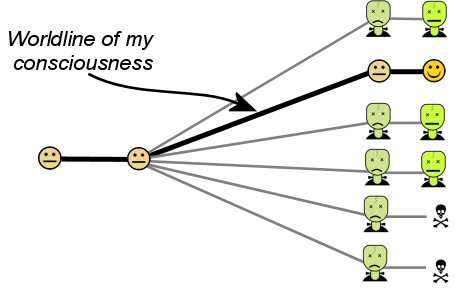

I think that Christian_Szegedy is mistaken, but in an interesting way. I think that the intuition at stake here is something about continuity of conscious experience. The intuition that Christian might have, if I may anticipate him, is that everyone in the experiment will actually experience getting $750,000, because somehow the world-line of their conscious experience will continue only in the worlds where they do not die. To formalize this, we imagine an arbitrary decision problem as a tree with nodes corresponding to decision points that create duplicate persons, and time increasing from left to right:

The skull and crossbones symbols indicate that the person created in the previous decision point is killed. We might even consider putting probabilities on the arcs coming out of a given node to indicate how likely a given outcome is. When we try to assess whether a given decision was a good one, we might want to look at what the utilities on the leaves of the tree are. But what if there is more than one leaf, and the person concerned is me, i.e. the root of the tree corresponds to "me, now" and the leaves correspond to "possible me's in 10 days' time"? I find myself querying for "what will I really experience" when trying to decide which way to steer reality. So I tend to want to mark some nodes in the decision tree as "really me" and others as "zombie-like copies of me that I will not experience being", resulting in a generic decision tree that looks like this:

I decorated the tree with normal faces and zombie faces consistent with the following rules:

- At a decision node, if the parent is a zombie then child nodes have to be zombies, and

- If a node is a normal face then exactly one of its children must also be a normal face.
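The two decoration rules above can be sketched as a small consistency check. This is only an illustration: the `Node` class and both function names are made up here, and treating leaves as unconstrained (a leaf has no children for the second rule to apply to) is an assumption, not something the post spells out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One face in the decision tree (hypothetical representation)."""
    is_zombie: bool
    children: List["Node"] = field(default_factory=list)


def obeys_continuity_rules(node: Node) -> bool:
    """Check both rules at every non-leaf node of the subtree under `node`."""
    if not node.children:
        return True  # assumption: leaves are not constrained by either rule
    normal_children = sum(1 for child in node.children if not child.is_zombie)
    if node.is_zombie and normal_children != 0:
        return False  # rule 1: a zombie parent has only zombie children
    if not node.is_zombie and normal_children != 1:
        return False  # rule 2: a normal parent has exactly one normal child
    return all(obeys_continuity_rules(child) for child in node.children)


def experienced_path(root: Node) -> List[Node]:
    """Follow the normal faces from a normal root down to a leaf.

    Only meaningful for a tree that already passes obeys_continuity_rules.
    """
    path = [root]
    while path[-1].children:
        path.append(next(c for c in path[-1].children if not c.is_zombie))
    return path


# A two-level example: "me" branches twice, one normal face per branching.
leaf_me = Node(is_zombie=False)
middle = Node(is_zombie=False, children=[leaf_me, Node(is_zombie=True)])
root = Node(is_zombie=False,
            children=[Node(is_zombie=True, children=[Node(is_zombie=True)]), middle])
```

Here `obeys_continuity_rules(root)` holds, and `experienced_path(root)` walks root, `middle`, `leaf_me`. A tree in which a normal face has two normal children, or a zombie has a normal child, is rejected.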

Let me call these the "forward continuity of consciousness" rules. These rules guarantee that there will be an unbroken line of normal faces from the root to a unique leaf. Some faces are happier than others, representing, for example, financial loss or gain, though zombies can never be smiling, since that would be out of character. In the case of a simplified version of Quantum Russian Roulette, where I am the only player and Omega pays the reward iff the quantum die comes up "6", we might draw a decision tree like this:

The game looks attractive, since the only way of decorating it that is consistent with the "forward continuity of consciousness" rules places the worldline of my conscious experience such that I will experience getting the reward, and the zombie-me's will lose the money, and then get killed. It is a shame that they will die, but it isn't that bad, because they are not me, I do not experience being them; killing a collection of beings who had a brief existence and that are a lot like me is not so great, but dying myself is much worse.

Our intuitions about forward continuity of our own conscious experience, in particular that at each stage there must be a unique answer to the question "what will I be experiencing at that point in time?" are important to us, but I think that they are fundamentally mistaken; in the end, the word "I" comes with a semantics that is incompatible with what we know about physics, namely that the process in our brains that generates "I-ness" is capable of being duplicated with no difference between the copies. Of course a lot of ink has been spilled over the issue. The MWI of quantum mechanics dictates that I am being copied at a frightening rate, as the quantum system that I label as "me" interacts with other systems around it, such as incoming photons. The notion of quantum immortality comes from pushing the "unique unbroken line of conscious experience" to its logical conclusion: you will never experience your own death, rather you will experience a string of increasingly unlikely events that seem to be contrived just to keep you alive.

In the comments for the Quantum Russian Roulette article, Vladimir Nesov says:

MWI is morally uninteresting, unless you do nontrivial quantum computation. ... when you are saying "everyone survives in one of the worlds", this statement gets intuitive approval (as opposed to doing the experiment in a deterministic world where all participants but one "die completely"), but there is no term in the expected utility calculation that corresponds to the sentiment "everyone survives in one of the worlds"

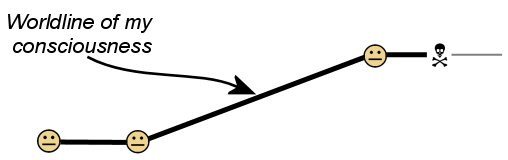

The sentiment "I will survive in one of the worlds" corresponds to my intuition that my own subjective experience continuing, or not continuing, is of the utmost importance. Combine this with the intuition that the "forward continuity of consciousness" rules are correct and we get the intuition that in a copying scenario, killing all but one of the copies simply shifts the route that my worldline of conscious experience takes from one copy to another, so that the following tree represents the situation if only two copies of me will be killed:

The survival of some extra zombies seems to be of no benefit to me, because I wouldn't have experienced being them anyway. The reason that quantum mechanics and the MWI play a role despite the fact that decision-theoretically the situation looks exactly the same as it would in a classical world - the utility calculations are the same - is that if we draw a tree where only one line of possibility is realized, we might encounter a situation where the "forward continuity of consciousness" rules have to be broken - actual death:

The interesting question is: why do I have a strong intuition that the "forward continuity of consciousness" rules are correct? Why does my existence feel smooth, unlike the topology of a branch point in a graph?

ADDED:

The problem of how this all relates to sleep, anesthesia or cryopreservation has come up. When I was anesthetized, there appeared to be a sharp but instantaneous jump from the anesthetic room to the recovery room, indicating that our intuition about continuity of conscious experience treats "go to sleep, wake up some time later" as being rather like ordinary survival. This is puzzling, since a period of sleep or anesthesia or even cryopreservation can be arbitrarily long.

92 comments

Comments sorted by top scores.

comment by Jordan · 2009-09-18T19:33:51.032Z · LW(p) · GW(p)

Consider this equivalent alternative to the Quantum Russian Roulette problem:

Rather than being put to sleep, the contestants are frozen (we assume the technology exists to defrost people successfully). At this point, all the contestants are dead. They have ceased to exist. The quantum coins are then flipped, creating multiple universes, none of which contain any contestants. Finally, in one particular universe, the bodies of the contestants are revived and the contestants exist once more.

No zombies were ever made and there is no branching of consciousness worldlines.

Replies from: Vladimir_Nesov, SforSingularity↑ comment by Vladimir_Nesov · 2009-09-18T20:31:28.322Z · LW(p) · GW(p)

Nice! You can also convert the frozen people into encrypted digital representation written in the structure of carbon bricks for more dramatic effect.

Replies from: SforSingularity↑ comment by SforSingularity · 2009-09-18T21:54:55.772Z · LW(p) · GW(p)

Indeed, you could take two copies of me, reanimate one of them, let him live for a while, and then kill him, and then once you've done that reanimate the other copy and let him live. Which one do I experience being?

Replies from: Johnicholas, Technologos↑ comment by Johnicholas · 2009-09-18T23:48:20.692Z · LW(p) · GW(p)

It depends on who is asking the question "Which one do I experience being?".

One of the copies answers one way, one of the copies answers the other way. The entity previous to the copying operation experiences pretty much what you're experiencing right now.

Replies from: SforSingularity↑ comment by SforSingularity · 2009-09-19T10:03:45.834Z · LW(p) · GW(p)

The problem is, that isn't an intuitively satisfying answer. We have an intuition that demands the answers to these questions, and yes, there are no answers, because the question needs "unasking". But the process of unasking a question requires more than just saying why it doesn't make sense, I think. One has to look for a way to satisfy what the intuition was asking for without asking the confused question.

Replies from: Document↑ comment by Technologos · 2009-09-21T07:41:19.032Z · LW(p) · GW(p)

A question very similar to this was on my UChicago admissions essay. Had to do with teleporters.

↑ comment by SforSingularity · 2009-09-18T19:54:17.914Z · LW(p) · GW(p)

Ok, although there are no "zombie-me's" created, there are still multiple universes and only one of them is a "pleasant" alternative, i.e. one in which I survive.

comment by Scott Alexander (Yvain) · 2009-09-18T19:41:48.339Z · LW(p) · GW(p)

I think I've seen the following argument somewhere, but I can't remember where:

Consider the following villainous arrangement: you are locked in a watertight room, 6 feet high. At 8 o'clock, a computer flips a quantum coin. If it comes up heads, the computer opens a valve, causing water to flow into the room. The water level rises at a rate of 1 foot per minute, so by 8:06 the room is completely flooded with water and you drown and die before 8:15. In either case, the room unlocks automatically by 8:15, so if the coin landed tails you may walk out and continue with your life.

At 7:59, you assign a 50-50 probability that at 8:03, you will experience being up to your waist in water. If you believe in quantum immortality, you will also be assigning a 100 percent chance that at 8:15, you will experience being completely dry and walking out of the room in perfect safety. Since every world-line in which you're in the water at 8:03 is also a world-line in which you're dead at 8:15, these probabilities seem inconsistent.

This suggests that you should consider a 50-50 chance of having your subjective experience snuffed out, since you could find yourself in the water-rising world, and there's nowhere to go from there but death. The other possibility - that you'd be shunted for some reason into a valve-doesn't-open branch even before there's any threat of death - seems to require a little too much advance planning.

But no form of death is instantaneous. Even playing quantum Russian Roulette, there's still a split second between the bullet firing and your death, which is more than enough time to shunt you into a certain world-line.

This doesn't go so well with the adorable zombie-face graphics above. It would go a little better with a model in which, at any point, one copy of you was randomly selected to be the one you're experiencing, but that model has the slight disadvantage of making no sense. It would also go well with a model in which you can just plain die.

Replies from: cousin_it, Vladimir_Nesov, SforSingularity, Jordan↑ comment by cousin_it · 2009-09-18T19:50:29.092Z · LW(p) · GW(p)

The water level rises at a rate of 1 foot per minute, so by 8:06 the room is completely flooded with water and you drown and die before 8:15.

No, quantum immortality claims that you won't drown even if the room gets flooded with certainty. You'll be saved by a quantum-fluctuation air bubble or something.

Replies from: Vladimir_Nesov, Yvain, SforSingularity↑ comment by Vladimir_Nesov · 2009-09-18T20:35:46.204Z · LW(p) · GW(p)

By the way, quantum immortality must then run a consequentialist computation to distinguish between freezing people to be left frozen and freezing people to be later revived. In other words, magic.

Replies from: SforSingularity↑ comment by SforSingularity · 2009-09-18T20:38:33.442Z · LW(p) · GW(p)

Explain?

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2009-09-18T20:45:45.126Z · LW(p) · GW(p)

Assuming QI, if I get frozen to be unfrozen later, I don't expect QI to "save" me from being frozen -- I expect to experience whatever comes after unfreezing and not a magical malfunction of the freezing machine that prevents me from getting frozen. But if I'm being frozen for eternity, it's death, and so I expect QI to save me from it by a quantum fluctuation.

References: The Hidden Complexity of Wishes, Magical Categories. The concept of "death" is too complex to be captured by any phenomenon other than the process of computation of this concept in human minds, or something derived therefrom.

Replies from: SilasBarta, SforSingularity, Lightwave↑ comment by SilasBarta · 2009-10-05T16:34:20.268Z · LW(p) · GW(p)

Sorry, I wish I had followed this earlier.

The concept of "death" is too complex to be captured by any phenomenon other than the process of computation of this concept in human minds, or something derived therefrom.

No, death can easily be explained in a reductionist way without positing ontologically-basic subjectivity.

Death simply refers to when a self-perpetuating process (usually labeled "life") stops maintaining itself far from equilibrium with its environment via expenditure of negentropy (free energy). Note that a common term for dying (in English) is "reaching room temperature". (Yes, yes, cold-blooded life forms are always staying close to room temperature, but they stay far from equilibrium in other ways -- chemically, structurally, etc.)

Being frozen in such a way that the process that is you can be recovered is not death, at least not completely. You are still far from equilibrium with your broader environment -- note that you still have a large KL divergence, so the information contained in you has not been irreversibly deleted.

Replies from: pdf23ds, Vladimir_Nesov↑ comment by pdf23ds · 2009-10-05T18:12:30.439Z · LW(p) · GW(p)

Note that a common term for dying (in English) is "reaching room temperature".

Never heard that one. Is that an American idiom? "Passing away" seems to be the standard euphemism where I'm from, but I usually just say "dying".

Replies from: thomblake, SilasBarta↑ comment by SilasBarta · 2009-10-05T18:14:56.864Z · LW(p) · GW(p)

Well, it's a dysphemism rather than a euphemism, but forms of it are used, and it doesn't appear to be unique to America. Check this Googling and its alternate suggestion and you see a New Zealand blog mentioning that some "oxygen waster" has finally "reached room temperature".

A very insightful idiom indeed!

↑ comment by Vladimir_Nesov · 2009-10-05T16:50:37.235Z · LW(p) · GW(p)

Being frozen in such a way that the process that is you can be recovered is not death, at least not completely. You are still far from equilibrium with your broader environment -- note that you still have a large KL divergence, so the information contained in you has not been irreversibly deleted.

I find this reasoning opaque. "Equilibrium with your broader environment"? Replace the head of the frozen person with a watermelon, and you'll have as much distance from "equilibrium" as for the head, but the person will be dead.

Replies from: SilasBarta↑ comment by SilasBarta · 2009-10-05T17:01:40.389Z · LW(p) · GW(p)

Not quite right. If you remove the head, and (as I presume you mean) let it die, its information is gone, as is the information about its connection with the body, and the information recovered would not be capable of fully specifying the process constituting the original person. They would be "more dead".

As I defined life as the sustenance of a process far from equilibrium, you have destroyed more of the process that is that individual.

On top of that, a frozen watermelon has a far smaller KL divergence from its environment than a human head. It is not the same distance from equilibrium -- it's closer.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2009-10-05T17:10:02.902Z · LW(p) · GW(p)

You've just hidden the complexity in the choice of the system for which you define a simple metric (I doubt it's even right as you state, but assume it is). What you call the process is chosen by you to make the solution come out right (not deliberately for that purpose, but by you anyway). Physics will be hard-pressed to even say what is the same rigid object over time (unless you trivially define that so in your formalism -- but then it'll be math), not to speak of the "process" of a living person (where you can't define in math what that delineates -- the concept is too big for a mere human to see).

Get the print of a person in digital form and transmit it to the outer space by radio -- will the person's process involve the whole light cone now? How is that different from just exerting gravitational field?

Replies from: SilasBarta↑ comment by SilasBarta · 2009-10-05T17:34:25.109Z · LW(p) · GW(p)

I have not hidden any complexity nor made any arbitrary choice. The process that is the human body is mostly understood, in terms of what it does to maintain homeostasis (regulation of properties against environmental perturbations). Individual instances of a human body -- different people -- carry differences among each other -- what memories they have, what functionality their organs have, and so on.

Way up at the level of interpersonal relationships, we can recognize an individual, like "Bob", and his personality traits, etc. We can recognize when a re-instantiation of a person still acts like Bob. This is not an arbitrary choice -- it's based on a previous, non-arbitrary identification of a chunk of conceptspace called "the person Bob".

So we can know when Bob has irreversibly mixed with his environment.

Get the print of a person in digital form and transmit it to the outer space by radio -- will the person's process involve the whole light cone now?

The person will be in the same dormant state as when they are frozen, or as a seed is before it is planted, or the chemicals that mix to make a virus before they are mixed. The information to reconstitute the being is still there, but it is not yet restored to its self-sustaining, entropy-exporting process. When you transmit their information through space, you are giving structure to the EM waves propagating against background noise, so there's still a KL divergence from the environment: the waves you transmit are different from what you would expect if you expected normal background noise.

You still, of course, need someone capable of decoding that and reinstantiating the person. When all information about how to do so is lost, then the person is finally irreversibly mixed with their environment and permanently dead, in line with the definition I gave before.

How is that different from just exerting gravitational field?

I'm not sure of the purpose of this question. Could you state clearly what your position is, and which part you believe I'm disagreeing with, and why that disagreement is in error?

↑ comment by SforSingularity · 2009-09-18T20:55:16.007Z · LW(p) · GW(p)

Ok, I see the point you are making. But when you say

quantum immortality must then run a consequentialist computation to distinguish

You are thinking of QI as an agent who has to decide what to do at a given time. But suppose a proponent of QI thinks instead of QI as simply the brute fact that there are certain paths through the tree structure of MWI QM that continue your conscious experience forever, and the substantive fact that what I actually experience will be randomly chosen from that set of paths.

I disagree with QI because I think that the very language being used to frame the problem is severely defective; the semantics of the word "I" is the problem.

The concept of "death" is too complex to be captured by any phenomenon other than the process of computation of this concept in human minds, or something derived therefrom.

I think that perhaps the word "I" suffers from the same problem.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2009-09-18T20:57:43.639Z · LW(p) · GW(p)

You are thinking of QI as an agent

As a concept -- whether it's defined in the language of games is irrelevant.

↑ comment by Lightwave · 2009-09-19T07:21:22.833Z · LW(p) · GW(p)

Assuming QI, if I get frozen to be unfrozen later, I don't expect QI to "save" me from being frozen

Why wouldn't you expect to be "saved"? MWI simply means that anything that can happen - will happen (in some branch). So you'll be "saved" in both cases in some branches (if this is physically possible given the current situation).

↑ comment by Scott Alexander (Yvain) · 2009-09-18T20:15:30.631Z · LW(p) · GW(p)

So, at 7:59, what probability do you assign to experiencing, at 8:16, a memory of having been saved by the coin landing tails, versus a memory of having been saved by quantum fluctuation?

Replies from: SforSingularity↑ comment by SforSingularity · 2009-09-18T20:37:28.582Z · LW(p) · GW(p)

QI doesn't specify. A reasonable assumption would simply be to condition upon your survival, so at 7:59 you assign, say, a 1-10^-6 probability to the coin landing tails, and a 10^-6 probability to other ways you could be saved, for example rescue by an Idiran assault force, a quantum bubble, etc.
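As a rough sketch of what "condition upon your survival" could mean numerically: start from ordinary prior probabilities over outcomes, drop the outcomes in which you are dead, and renormalize. The function name and outcome labels are invented for illustration, and reading the 10^-6 figure as the chance of an exotic rescue given that the coin lands heads is an assumption about the comment, not something it states.

```python
def condition_on_survival(outcomes):
    """Renormalize prior probabilities over the outcomes in which you survive.

    `outcomes` maps a label to a (prior_probability, survives) pair.
    """
    surviving_mass = sum(p for p, survives in outcomes.values() if survives)
    return {
        label: p / surviving_mass
        for label, (p, survives) in outcomes.items()
        if survives
    }


# Assumed chance of rescue (air bubble, assault force, ...) given heads.
rescue_given_heads = 1e-6

outcomes = {
    "tails": (0.5, True),
    "heads, rescued": (0.5 * rescue_given_heads, True),
    "heads, drowned": (0.5 * (1 - rescue_given_heads), False),
}

posterior = condition_on_survival(outcomes)
```

Under these assumptions `posterior["tails"]` is 1/(1 + 10^-6), which is roughly 1 - 10^-6, and the rescue branch gets roughly 10^-6. The drowned branch simply drops out of the distribution.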

Replies from: Yvain↑ comment by Scott Alexander (Yvain) · 2009-09-18T21:17:03.334Z · LW(p) · GW(p)

Well, the reason I ask is that if you're standing outside the multiverse at 8:16, and you count the number of universes with living mes that were saved by the coin landing tails, and those with living mes that were saved by quantum fluctuation, the ones with tails outnumber the ones with fluctuations several gazillion to one, since from an outsider's point of view there's a 1/2 chance I'll get saved by tails versus a one in a gazillion chance I'll be saved by fluctuations.

But from my perspective, if and only if we enforce continuity of experience, there's a 50-50 chance I'll find myself saved by tails vs. fluctuations. But this creates the odd situation of there being certain "mes" in the multiverse whom I am much more likely to end up as than others.

Replies from: SforSingularity↑ comment by SforSingularity · 2009-09-18T21:48:28.650Z · LW(p) · GW(p)

there's a 50-50 chance I'll find myself saved by tails vs. fluctuations.

Why? Why not just condition your existing probability distributions on continued conscious experience?

↑ comment by SforSingularity · 2009-09-18T20:25:41.610Z · LW(p) · GW(p)

Right, QI says that you will not die.

↑ comment by Vladimir_Nesov · 2009-09-18T20:30:02.489Z · LW(p) · GW(p)

But no form of death is instantaneous. Even playing quantum Russian Roulette, there's still a split second between the bullet firing and your death, which is more than enough time to shunt you into a certain world-line.

I think Jordan's method robustly seals this problem. It is the total absence of information-theoretic death, but no "continuity of consciousness" can be squeezed through. Whatever moral qualms can be given to the destruction of frozen people, are about clear-cut consequences, not the action itself.

↑ comment by SforSingularity · 2009-09-18T20:28:03.818Z · LW(p) · GW(p)

seems to require a little too much advance planning.

This gets me too. Just how much advance planning is the universe allowed? Am I alive now, rather than in the year 1000, because we are sufficiently close to developing anti-aging treatments?

↑ comment by Jordan · 2009-09-18T19:50:04.351Z · LW(p) · GW(p)

I agree. I also think this is why Christian stated in his problem setup for Quantum Russian Roulette that the participants are put into a deep sleep before they are potentially killed. If the method of death is quick enough, or if you aren't conscious when it occurs, then you shouldn't be shunted into any alternative world-lines.

Replies from: Jack, Christian_Szegedy↑ comment by Jack · 2009-09-18T21:56:49.630Z · LW(p) · GW(p)

No method is quick enough. At any time t some event can prevent someone's subjective experience in some very large set of world-lines. But what you can't do is spin a quantum wheel at time t and then kill everyone else at t+1. From the subjective experience of the players either the causal connection between the roulette wheel and the killing mechanism would fail or the killing mechanism would fail. If you got lucky the failure you'd experience would happen early- but chances are you'd experience everything right up until the last possible Planck-length of time (or wake up afterward).

Is there a method of killing which, according to quantum probability, either kills someone outright or leaves them relatively undamaged?

Replies from: Christian_Szegedy↑ comment by Christian_Szegedy · 2009-09-18T22:02:48.489Z · LW(p) · GW(p)

Is there a method of killing which, according to quantum probability, either kills someone outright or leaves them relatively undamaged?

I think the real question is whether the chance of being saved damaged is significantly higher than just being damaged without playing the game. For example if you get into a car, you have a relatively high probability to get out damaged. If you get below that threshold, then you don't take any extra risk.

Replies from: Jack↑ comment by Jack · 2009-09-18T22:46:37.323Z · LW(p) · GW(p)

Let's say Smith is standing with a gun aimed at his head. The gun aimed at his head is attached to a quantum coin with a 50% chance of flipping heads. If it flips heads the gun will go off. All this will happen at 8:00.

If we examine the universal wave function at 8:05 we'll find that in about 50% of worlds Smith will be dead(1). Similarly in about 50% of the worlds the gun will have gone off. But those sets of worlds won't overlap exactly. There will be a few worlds where something else kills Smith and a few worlds where the gun doesn't. And if you look at all the worlds in which Smith has conscious experience at 8:05 the vast majority would be worlds in which Smith is fine. But I don't think that those proportions accurately reflect the probability that Smith will experience being shot and brain damaged because once the gun is fired the worlds in which Smith has been shot and barely survived become the only worlds in which he is conscious. For the purposes of predicting future experience we don't want to be calculating over the entire set of possible worlds. Rather, distribution of outcomes in the worlds in which Smith survives should take on the probability space of their sibling worlds in which Smith dies. The result is that Smith experiencing brain damage should be assigned almost a 50% probability. This is because once the gun is fired there is a nearly 100% chance that Smith will experience injury since all the worlds in which he doesn't are thrown out of the calculation of his future experiences. (2).

This means that unless the killing method is quantum binary (i.e. you either are fine, or you die) the players of quantum Russian roulette would actually most likely wake up short $50,000 and in serious pain (depending on the method). Even if the method is binary you will probably wake up down $50,000.

(1) I understand that quantum probabilities don't work out to just be the fraction of worlds... if the actual equation changes the thought experiment tell me, but I don't think it should.

(2) My confidence is admittedly low regarding all this.

Replies from: Christian_Szegedy↑ comment by Christian_Szegedy · 2009-09-19T00:32:42.137Z · LW(p) · GW(p)

I don't agree with your calculation.

The probability of winning the money over suffering injuries due to failed execution attempt is P=(1/16)/(1/16+15/16*epsilon) where epsilon is the chance that the execution attempt fails. If epsilon is small, it will get arbitrarily close to 1.

You should not be worried as long as 1-P<Baseline where Baseline is the probability of you having a serious injury due to normal everyday risks within let's say an hour.

Replies from: Jack↑ comment by Jack · 2009-09-19T01:00:05.219Z · LW(p) · GW(p)

That is the probability that an observer in any given world would observe someone (Contestant A) win and some other contestant (B) survive. But all of these outcomes are meaningless when calculating the subjective probability of experiencing an injury. If you don't win the only experience you can possibly have is that of being injured.

According to your calculations if a bullet is fired at my head there is only a small chance that I will experience being injured. And your calculations certainly do correctly predict that there is a small chance another observer will observe me being injured. But the entire conceit of quantum immortality is that since I can only experience the worlds in which I am not dead I am assured of living forever since there will always be a world in which I have not died. In other words, for the purposes of predicting future experiences the worlds in which I am not around are ignored. This means if there is a bullet flying toward my head the likelihood that I will experience being alive and injured is very, very high.

How is your calculation consistent with the fact that the probability for survival is always 1?

Replies from: Christian_Szegedy↑ comment by Christian_Szegedy · 2009-09-19T01:28:48.762Z · LW(p) · GW(p)

You experience all those worlds where you

- win

- survive while being shot

You have the same in everyday life. You experience all those worlds where you

- Have no accident/death

- Have accidents with injuries

There is no difference. As long as the ratio of the above two probabilities is bigger than the ratio of the ones below, you don't go into any extra risk of being injured compared to normal every day life.

Replies from: Jack↑ comment by Jack · 2009-09-19T03:39:55.923Z · LW(p) · GW(p)

You agree that the probability of survival is 1 right? My estimation for the probability of experiencing injury given losing and surviving is very high (1-epsilon). There is a small chance the killing mechanism would not do any harm at all but most likely it would cause damage.

It follows from these two things that the probability of experiencing injury given losing the game is equally high (it's the same set of worlds since one always experiences survival). The probability of experiencing injury is therefore approximately 1-epsilon (15/16). Actually, since there is also a very tiny possibility of injury for the winner the actual chances of injury are a bit higher. The diminishing possibilities of injury given winning and no injury given losing basically cancel each other out leaving the probability of experiencing injury at 15/16.

Replies from: Christian_Szegedy↑ comment by Christian_Szegedy · 2009-09-19T04:03:59.158Z · LW(p) · GW(p)

The probability of experiencing injury is therefore approximately 1-epsilon (15/16).

Wrong: it is epsilon*15/16.

You are confusing conditional probability with prior probability.

Replies from: Jack↑ comment by Jack · 2009-09-19T06:17:53.970Z · LW(p) · GW(p)

For all readers: If you've read this exchange and have concluded that I'm really confused please up-vote Christian's comment here so I can be sure about needing to correct myself. This has been one of those weird exchanges where I started with low confidence and as I thought about it gained confidence in my answer. So I need outside confirmation that I'm not making any sense before I start updating. Thanks.

Replies from: wedrifid↑ comment by wedrifid · 2009-09-21T20:29:16.926Z · LW(p) · GW(p)

- The probability that you will win is 1/16.

- The probability that you will be injured is epsilon*15/16.

- The probability that you will end up alive is 1/16 + 15/16*epsilon.

- The probability that you will experience injury given that you survive is (15/16 * epsilon)/(1/16 + epsilon*15/16).

From what I can tell this final value is the one under consideration. "Belief" in QI roughly corresponds to using that denominator instead of 1 and insisting that the rest doesn't matter.
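The four quantities above can be checked with exact arithmetic. This is a minimal sketch, assuming an illustrative failure rate of epsilon = 1/1000 (the thread never fixes a value) and assuming, as the list does, that every loser who survives is injured. The function name is made up for this example.

```python
from fractions import Fraction

def qrr_probabilities(epsilon, players=16):
    """Exact probabilities for one contestant in the 16-player game.

    epsilon is the (assumed) chance that the killing mechanism fails
    and leaves a losing contestant alive but injured.
    """
    p_win = Fraction(1, players)
    p_lose = 1 - p_win
    p_injured = p_lose * epsilon             # lose AND mechanism fails
    p_alive = p_win + p_injured              # win, or lose and survive
    p_injured_given_alive = p_injured / p_alive
    return p_win, p_injured, p_alive, p_injured_given_alive

eps = Fraction(1, 1000)  # hypothetical value, for illustration only
p_win, p_injured, p_alive, p_cond = qrr_probabilities(eps)
print(p_win, p_injured, p_alive, p_cond)
```

With this assumed epsilon the conditional probability is 3/203, roughly 1.5%, whereas the unconditional probability of injury is 3/3200. The only difference between the two is the denominator: 1 versus p(alive).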

please up-vote Christian's comment here so I can be sure about needing to correct myself

I'm not confident that the grandparent is talking about the same thing as the quote therein.

Replies from: Jack, Christian_Szegedy↑ comment by Jack · 2009-09-22T02:28:21.245Z · LW(p) · GW(p)

I think maybe I haven't been clear. To an independent observer the chance of any one contestant winning is 1/16. But for any one contestant the chance of winning is supposedly much higher. Indeed, the chance of winning is supposed to be 1. That's the whole point of the exercise, right? From your own subjective experience you're guaranteed to win, as you won't experience the worlds in which you lose. I've been labeling the chance of winning as 1/16, but in the original formulation, in which the 15 losers always die, that isn't the probability a contestant should be considering. They should consider the probability of experiencing winning the money to be 1. After all, if they considered the probability to be 1/16 it wouldn't be worth playing.

My criticism was that once you throw in any probability that the killing mechanism fails the odds get shifted against playing. This is true even if an independent observer will only see the mechanism failing in a very small number of worlds because the losing contestant will survive the mechanism in 100% of worlds she experiences. And if most killing mechanisms are most likely to fail in a way that injures the contestant then the contestant should expect to experience injury.

As soon as we agree there is a world in which the contestant loses and survives, the contestants should stop acting as if the probability of winning the money is 1. For the purposes of the contestants the probability of experiencing winning is now 1/16. The probability of experiencing losing is 15/16 and the probability of experiencing injury is some fraction of that. This is the case because the 15/16 worlds which we thought the contestants could not experience are now guaranteed to be experienced by the contestants (again, even though an outside observer will likely never see them).

Consider the quantum coin flips as a branching in the wave function between worlds in which Contestant A wins and worlds in which Contestant A loses. Under the previous understanding of the QRR game it didn't matter what the probability of winning was. So long as all the worlds in the branch of worlds in which Contestant A loses are unoccupied by contestant A there was no chance she would not experience winning. But as soon as a single world in the losing branch is occupied the probability of Contestant A waking up having lost is just the probability of her losing.

Let's play a variant of Christian's QRR. This variant is the same as the original except that the losing contestants are woken up after the quantum coin toss and told they lost. Then they are killed painlessly. Shouldn't my expected future experience going in be 1) about a 15:16 chance that I am woken up and told I lost AND 2) if (1), about a 1:1 chance that I experience a world in which I lost and the mechanism failed to kill me? If those numbers are wrong, why? If they are right, did waking people up make that big a difference? How do they relate to the low odds you all are giving for surviving and losing?

Replies from: wedrifid, wedrifid↑ comment by wedrifid · 2009-09-22T13:20:55.825Z · LW(p) · GW(p)

Let's play a variant of Christian's QRR. This variant is the same as the original except that the losing contestants are woken up after the quantum coin toss and told they lost. Then they are killed painlessly. Shouldn't my expected future experience going in be 1) about a 15:16 chance that I am woken up and told I lost AND 2) if (1), about a 1:1 chance that I experience a world in which I lost and the mechanism failed to kill me? If those numbers are wrong, why? If they are right, did waking people up make that big a difference? How do they relate to the low odds you all are giving for surviving and losing?

No difference.

↑ comment by wedrifid · 2009-09-22T12:44:13.333Z · LW(p) · GW(p)

Consider the quantum coin flips as a branching in the wave function between worlds in which Contestant A wins and worlds in which Contestant A loses. Under the previous understanding of the QRR game it didn't matter what the probability of winning was. So long as all the worlds in the branch of worlds in which Contestant A loses are unoccupied by contestant A there was no chance she would not experience winning. But as soon as a single world in the losing branch is occupied the probability of Contestant A waking up having lost is just the probability of her losing.

Given QI, we declared p(wake up) to be 1. That being the case I assert p(wake up having lost) = (15/16 * epsilon)/(1/16 + epsilon * 15/16).

It seems to me that you claim that p(wake up having lost) = 15/16. That is not what QI implies.
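How far apart the two answers are depends entirely on epsilon. A rough sketch, assuming a few made-up failure rates since the thread leaves epsilon unspecified; the helper name is hypothetical:

```python
from fractions import Fraction

def p_wake_having_lost(epsilon, players=16):
    """p(lost | awake): survivors who lost, over all survivors."""
    lost_and_alive = Fraction(players - 1, players) * epsilon
    won = Fraction(1, players)
    return lost_and_alive / (won + lost_and_alive)

# Hypothetical failure rates, chosen only to show the trend.
for eps in (Fraction(1, 10**6), Fraction(1, 100), Fraction(1, 2), Fraction(1)):
    print(eps, float(p_wake_having_lost(eps)))
```

The value only reaches 15/16 when epsilon is 1, that is, when the mechanism never kills anyone. For a mechanism that almost always works, it stays close to zero.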

↑ comment by Christian_Szegedy · 2009-09-21T20:49:21.403Z · LW(p) · GW(p)

It is quite clear that I meant the same: Cf. my last post with quantitative analysis

BTW it does not have anything to do with QI, which is a completely different concept. P(experiencing injury|survival) does not even depend on MWI.

It is just a conditional probability, that's it.

Replies from: wedrifid↑ comment by wedrifid · 2009-09-22T09:47:39.079Z · LW(p) · GW(p)

It is quite clear that I meant the same:

Jack seemed to declare some explicit assumptions in that post that you didn't follow. (His answer was still incorrect.)

Cf. my last post with quantitative analysis

My own analysis more or less agrees with that post (which I upvoted instead).

BTW it does not have anything to do with QI, which is a completely different concept. P(experiencing injury|survival) does not even depend on MWI.

It is just a conditional probability, that's it.

I answered the question "what is p(experiencing injury | survival)?" That is the sane way to ask the question. Someone enamoured of the Quantum Immortality way of thinking may describe this very same value as p(experiencing injury). That is more or less what "belief in Quantum Immortality" means. It's pretending, or declaring, that the "given survival" part is not needed.

For example, the introduction "You agree that the probability of survival is 1 right?" suggests QI is assumed for the sake of the argument. That being the case, the probability of experiencing injury is not "epsilon * 15/16". It is what the sane person calls conditional probability p(injury | survival).

Replies from: Christian_Szegedy↑ comment by Christian_Szegedy · 2009-09-22T21:04:46.837Z · LW(p) · GW(p)

Just a remark: Even "quantum immortality" and "quantum suicide" are two different concepts.

"Quantum suicide" is the concept the OP was based on. "Quantum immortality" OTOH is a more specific and speculative implication of it which is in no way assumed here.

The concept of "quantum suicide" however is assumed for the OP: It says that if there is a quantum event with a non-zero probability outcome of surviving, then (in some universe) there will be a continuation of your consciousness that will experience that branch. It is not much more speculative than MWI itself. At least, it is hard to argue against that belief as long as you think MWI is right.

The concept of "quantum immortality" assumes that there is always an event that prolongs your life so you will always go on experiencing being alive. This is very speculative (and therefore I don't buy it).

Replies from: wedrifid↑ comment by wedrifid · 2009-09-23T06:23:45.184Z · LW(p) · GW(p)

Just a remark: Even "quantum immortality" and "quantum suicide" are two different concepts.

One is a hypothetically proposed action, the other a fairly misleading way of describing the implications of a quantum event with a non-zero probability of your survival.

The concept of "quantum suicide" however is assumed for the OP: It says that if there is a quantum event with a non-zero probability outcome of surviving, then (in some universe) there will be a continuation of your consciousness that will experience that branch. It is not much more speculative than MWI itself. At least, it is hard to argue against that belief as long as you think MWI is right.

I agree, and have expressed frustration in the way 'belief' and 'believers in' have been thrown about in regards to these topics. While 'belief' should be more or less obvious, the word has been used to describe a somewhat more significant claim than that one of the many worlds will contain an alive instance of me. 'Belief in QS' has often been used to represent the assertion that for the purposes of evaluating utility the probability assigned to events should only take into account worlds where survival occurs. In that mode of thinking, 'the probability of experiencing injury' is what I would describe as p(injury | survival).

When going along with this kind of reasoning for the purposes of the discussion I tend to include quotation or otherwise imply that I am considering the distorted probability function against my better judgement. There are limits to how often one can emphasise that kind of thing without seeming the pedant.

The concept of "quantum immortality" assumes that there is always an event that prolongs your life so you will always go on experiencing being alive. This is very speculative (and therefore I don't buy it).

The only 'speculation' appears to be in just how small a non-zero probability a quantum event can have. If there were actually no lower limit then quantum immortality would (more or less) be implied. This ties into speculation along the lines of Robin's 'mangled worlds'. The assumption that there are limits to how fine the Everett branches can be sliced would be one reason to suggest quantum suicide is a bad idea for reasons beyond just arbitrarily wanting to claim as much of the Everett tree as possible.

Replies from: Christian_Szegedy, Christian_Szegedy↑ comment by Christian_Szegedy · 2009-09-23T22:30:27.630Z · LW(p) · GW(p)

The only 'speculation' appears to be in just how small a non-zero probability a quantum event can have. If there were actually no lower limit then quantum immortality would (more or less) be implied.

I think the speculation in QI lies mainly in the following two hidden assumptions (even if you believe in vanilla MWI):

- There will always be some nonzero probability event that lets you live on.

- The accumulation of such events will eventually give you an indefinite life span

Both of the above are speculations and could very well be wrong even if arbitrarily low-probability branches continue to exist.

Replies from: wedrifid↑ comment by Christian_Szegedy · 2009-09-23T18:55:55.076Z · LW(p) · GW(p)

'Belief in QS' has often been used to represent the assertion that for the purposes of evaluating utility the probability assigned to events should only take into account worlds where survival occurs. In that mode of thinking, 'the probability of experiencing injury' is what I would describe as p(injury | survival).

In this sense I don't believe in QS. BTW, I don't think you can believe in the correctness of utility functions. You can believe in the correctness of theories and then you decide your utility function. It may even be inconsistent (and you can be money pumped); still, that's your choice, based on the evidence.

It makes sense to first split the following three components:

- Mathematical questions (In this specific case, these are very basic probability theoretical calculations)

- (Meta-)physical questions: Is the MWI correct? Is mangled worlds correct? Does our consciousness continue in all Everett branches? etc.

- Moral questions: What should you choose as a utility function assuming certain physical theories?

It seems that you try to mix these different aspects.

If you notice the necessity to revise your calculations or physical world view, then it may force you to update your moral preferences, objective functions as well. But you should never let your moral preferences determine the outcome of your mathematical calculation or confidence in physical theories.

Replies from: wedrifid↑ comment by wedrifid · 2009-09-23T20:18:22.786Z · LW(p) · GW(p)

BTW, I don't think you can believe in the correctness of utility functions.

That is not the correct interpretation of my statement. The broad foundations of a utility function can give people a motivation for calculating certain probabilities and may influence the language that is used.

It seems that you try to mix these different aspects.

No, and I expressed explicitly an objection to doing so, to the point where for me to do so further would be harping on about it. I am willing to engage with those who evaluate probabilities from the position of assuming they will be a person who will be experiencing life. The language is usually ambiguous and clarified by the declared assumptions.

I doubt discussing this further will give either of us any remarkable insights. Mostly because the concepts are trivial (given the appropriate background).

↑ comment by Christian_Szegedy · 2009-09-18T21:49:23.097Z · LW(p) · GW(p)

Quick death is fine. I just wanted to put up a realistic scenario which is very gentle and minimally scary.

It is more plausible that you could be struck dead in a deep sleep without noticing. If I had said you get struck by lightning, your first reaction would have been: "OUCH!".

But if you get sedated by some strong drug, then it sounds much more plausible as a painless "experience".

comment by orthonormal · 2009-09-18T23:03:29.262Z · LW(p) · GW(p)

This leads me to think that you don't actually understand what MWI implies for personal identity. There are no "zombie" lines of events; you will be every one of them, and every one of them will remember having been you. If that sounds absurd to you, then you don't actually understand what MWI really means, and you should rectify that first.

Replies from: Wei_Dai↑ comment by Wei Dai (Wei_Dai) · 2009-09-19T08:53:01.435Z · LW(p) · GW(p)

I think SforSingularity understands it just fine. He's asking why we have certain intuitions, not claiming that those intuitions are true. See this comment where he says that the intuitions are probably wrong and gave his own explanation for why we have them anyway.

comment by wedrifid · 2009-09-18T19:45:38.256Z · LW(p) · GW(p)

The interesting question is: why do I have a strong intuition that the "forward continuity of consciousness" rules are correct? Why does my existence feel smooth, unlike the topology of a branch point in a graph?

Human intuitions struggle with probability even without considering MW. There is (or at least was) little obvious adaptive benefit to intuitions that see the implications of quantum mechanics. I'd be somewhat surprised if we got that part right without a bit of study.

comment by cousin_it · 2009-09-18T18:38:12.356Z · LW(p) · GW(p)

Quantum immortality seems to predict that you can't go to sleep because the conscious phase must go on and on. So it must be wrong. But I don't know where exactly.

Replies from: Yvain, AllanCrossman, SforSingularity↑ comment by Scott Alexander (Yvain) · 2009-09-18T19:31:45.164Z · LW(p) · GW(p)

Or perhaps it only predicts that you will never observe being asleep.

Consider a person who plans to sleep from midnight to eight AM, but will stay up all night if a quantum coin comes up heads. At 11 PM the previous night, the person is awake no matter what, and the coin could be destined to come up either value. At 1 AM, only the people in the universes where the coin came up heads are still having conscious experiences, so they have some evidence that the coin came up heads. At 9 AM, everyone in both universes is having conscious experiences again, and a conscious observer is equally likely to have memories of the coin coming up heads or tails.
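The bookkeeping in this example can be written out as conditioning on "I am conscious right now". A minimal sketch under an idealised assumption that sleep means no experience at all; the dictionary layout and function name are invented for illustration:

```python
from fractions import Fraction

# Two equally weighted branches of the quantum coin.
# Each branch records the clock times at which the person is conscious.
branches = {
    "heads": {"weight": Fraction(1, 2), "awake_at": {"11PM", "1AM", "9AM"}},
    "tails": {"weight": Fraction(1, 2), "awake_at": {"11PM", "9AM"}},
}

def p_heads_given_conscious(time):
    """P(coin = heads | I am having an experience at `time`)."""
    conscious = {
        name: b["weight"] for name, b in branches.items() if time in b["awake_at"]
    }
    return conscious.get("heads", Fraction(0)) / sum(conscious.values())

for t in ("11PM", "1AM", "9AM"):
    print(t, p_heads_given_conscious(t))
```

In this toy model the credence in heads is 1/2 at 11 PM, 1 at 1 AM, and back to 1/2 at 9 AM, which is why the "proof" only holds while the tails branch is asleep.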

Really, all quantum immortality should be able to tell you is that at any moment you are having subjective experience, you are in a universe where events have proceeded in such a way as to keep you awake. So far, it's been startlingly accurate on that account :)

(you should be able to prove quantum immortality with a quantum random number generator and a bunch of sleeping pills. Too bad the proof will only work for a few hours.)

Replies from: SforSingularity↑ comment by SforSingularity · 2009-09-18T19:59:57.545Z · LW(p) · GW(p)

you should be able to prove quantum immortality with a quantum random number generator and a bunch of sleeping pills.

so, you hook the pills up to a random number generator: if it outputs 00000000000 you get the placebo, if it outputs anything else, you get sleeping pills. Then check to see if you are awake an hour later. But if you did find yourself still awake, you would remember it in the morning, so the proof would last forever. But since I have already experienced sleep - and lots of it recently - I can conclude that the experiment would almost certainly conclude the boring way.
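For a sense of scale, here is the prior chance of drawing the placebo. This is only a sketch: the comment does not say whether the generator's digits are binary or decimal, so `base` is an assumption, and the function name is made up.

```python
from fractions import Fraction

def placebo_probability(trigger, base=2):
    """Chance that a uniform generator emits exactly `trigger`.

    `base` is an assumption: the comment does not say whether the
    digits are binary or decimal.
    """
    return Fraction(1, base ** len(trigger))

trigger = "00000000000"  # the all-zeros output quoted in the comment
print(placebo_probability(trigger, base=2))
print(placebo_probability(trigger, base=10))
```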

I suppose "you will experience a string of increasingly unlikely events that seem to be contrived just to keep you alive" would have to include the possibility that you lose consciousness for a long time and then regain it.

Replies from: Yvain↑ comment by Scott Alexander (Yvain) · 2009-09-18T20:08:15.386Z · LW(p) · GW(p)

But if you did find yourself still awake, you would remember it in the morning, so the proof would last forever.

But in the morning, there'd no longer be a link between the existence of your subjective experiences and the unlikely quantum event; you'd be having subjective experiences no matter how the quantum event turned out.

That makes it equivalent to the example where you commit quantum suicide fifty times, survive each time, and then ask another person in the same universe what their belief in quantum immortality is. Since that person saw an unlikely event (you surviving), but it was equally unlikely with or without QI (because your survival is not linked to their current subjective experience) that person can only say "Well, something very unlikely just happened, but it has no bearing on QI."

Same is true of yourself in the morning. Because you'd be having the subjective experience no matter what, all you know in the universe where you stayed up all night was that an unlikely event happened that was equally unlikely with or without QI.

(I'm assuming here that experience doesn't have to be continuous to be experience, and that I am the same person as I was before the last time I went to sleep).
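The "no bearing on QI" step is just Bayes' rule with equal likelihoods. A small sketch, where the prior and the probability of the unlikely outcome are invented numbers used only to show that they cancel:

```python
from fractions import Fraction

def posterior(prior, likelihood_if_true, likelihood_if_false):
    """Bayes' rule for a binary hypothesis."""
    joint_true = prior * likelihood_if_true
    joint_false = (1 - prior) * likelihood_if_false
    return joint_true / (joint_true + joint_false)

prior_qi = Fraction(1, 10)      # hypothetical prior credence in QI
p_event = Fraction(1, 2**11)    # hypothetical chance of the unlikely outcome

# Morning-after observer: the outcome is equally unlikely with or without QI,
# so the likelihood ratio is 1 and the posterior equals the prior.
print(posterior(prior_qi, p_event, p_event))
```

Any update would have to come from a case where the two likelihoods differ, which on this argument only happens while the observer's own experience depends on the outcome.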

Replies from: SforSingularity↑ comment by SforSingularity · 2009-09-18T20:13:08.231Z · LW(p) · GW(p)

In the morning the experiment's a priori unlikely outcome would be evidence that something strange was going on involving subjective experience and probability; though perhaps something distinct from QI.

↑ comment by AllanCrossman · 2009-09-18T21:38:46.341Z · LW(p) · GW(p)

This is a common thought that seems to occur to a lot of people.

The flaw is that, when you go to sleep, you will wake up later. The situation is not analogous to quantum suicide.

Quantum immortality asks us what future experiences could plausibly be considered "mine". In the roulette case, only worlds where I survive have such experiences.

But in the insomnia case, there are future experiences that are "mine" in both the worlds where I remain awake, and the worlds where I fall asleep. The fact that those experiences are not simultaneous is irrelevant.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2009-09-18T21:41:06.325Z · LW(p) · GW(p)

Quantum immortality asks us what future experiences could plausibly be considered "mine".

What implements the considering?

Replies from: AllanCrossman↑ comment by AllanCrossman · 2009-09-18T21:42:23.953Z · LW(p) · GW(p)

Philosophers.

↑ comment by SforSingularity · 2009-09-18T18:44:51.347Z · LW(p) · GW(p)

That's probably why I stay up so late at night ;-0

Replies from: cousin_it↑ comment by cousin_it · 2009-09-18T18:52:11.404Z · LW(p) · GW(p)

Google tells me that my argument isn't new: even Wikipedia had a short paragraph on "quantum insomnia" some time ago, though it's gone now.

If there are any believers in quantum immortality around here, I'd really like to hear their responses.

Replies from: JGWeissman, wedrifid↑ comment by JGWeissman · 2009-09-18T19:25:37.663Z · LW(p) · GW(p)

Quantum immortality does not predict that you will not sleep any more than it predicts that you will not observe someone else's death. There is a subjective experience of having been asleep, and a subjective experience of observing someone else's death, but not a subjective experience of having died.

The reason I don't trust quantum immortality is that I expect that whatever explains the Born probabilities will be a problem for a subjective experience continuing indefinitely in a quantum branch of vanishing measure.

Replies from: Christian_Szegedy, cousin_it↑ comment by Christian_Szegedy · 2009-09-18T21:18:57.653Z · LW(p) · GW(p)

If you flip a quantum coin 1000000 times, you will get into equally improbable branches. I don't see how your suggestion would work without any symmetry breaking.

Replies from: JGWeissman↑ comment by JGWeissman · 2009-09-18T22:15:28.892Z · LW(p) · GW(p)

Consider Robin's idea of World Mangling which, if true, would explain the Born probabilities. The idea is that a quantum branch with small measure could be "mangled" by a branch with larger measure, and that branches with smaller measure are more likely to further decohere into branches small enough to be mangled.

If this were true, then we would assign each of the 2^1000000 branches split by your quantum coin flips equal probability of experiencing sufficient further decoherence to be mangled. But the symmetry of our map reflects our ignorance of asymmetry in the territory, not its absence. The effects of the different coin flip results could cause different rates of decoherence, which would cause some branches to split faster and actually get mangled. And I would not want to be alive in only those branches.

Replies from: Christian_Szegedy↑ comment by Christian_Szegedy · 2009-09-19T00:39:28.152Z · LW(p) · GW(p)

Thanks. Very interesting indeed. This is the first comment so far on this topic that seems to have a chance of providing logical evidence against the viability of the game.

[EDIT] AFAICT, the mangled worlds hypothesis also suffers from the same mathematical ugliness issues as the single world interpretation: nonlinear, nonlocal, discontinuous action. Of course, you can assume it for the sake of eliminating quantum roulette type scenarios, but it makes the theory much uglier.

↑ comment by wedrifid · 2009-09-18T20:06:02.531Z · LW(p) · GW(p)

Believers? What sort of belief do you mean? I believe it works. But I also think it's a terrible idea. 90% chance of me being dead. 90% of quantum-goo me being dead. Whatever.

Replies from: dclayh, Jack, Christian_Szegedy↑ comment by dclayh · 2009-09-18T20:39:45.322Z · LW(p) · GW(p)

That reminds me of the movie version of The Prestige, where the Hugh Jackman character perngrf graf bs pbcvrf bs uvzfrys juvyr cresbezvat n gryrcbegngvba gevpx, ohg rafherf gung bar bs gurz qvrf rnpu gvzr. Ng gur raq ur vf xvyyrq, juvpu ur pbhyq rnfvyl unir ceriragrq ol nyybjvat n srj pbcvrf gb yvir.

So yes, let's not be profligate with our probability space.

↑ comment by Christian_Szegedy · 2009-09-18T21:36:38.521Z · LW(p) · GW(p)

Quantum-goo maximization. Hmmm...

I remember reading some very convincing arguments by Eliezer for why filling the multiverse with our copies is the wrong objective.

Replies from: wedrifid↑ comment by wedrifid · 2009-09-19T08:49:56.656Z · LW(p) · GW(p)

Which would be relevant if we were considering copies. We aren't. It's us. Just us.

We are considering giving ourselves a significant chance of being dead. If Sfor's (rather well presented) explanations and accompanying skull imagery are not sufficient to demonstrate that this is a bad thing, it may help to substitute for death, perhaps, a 9/10 quantum-determined chance of being given bad arthritis in our right knee. We can expect a 90% chance of experiencing mild chronic pain after the quantum dice are rolled and a 10% chance of experiencing the more desirable outcome. Then, we can decide whether we prefer being put to death to a sore knee.

The pain is real pain. The death is real death. They don't stop being bad things just because quantum mechanics is confusing.

If many-worlds quantum uncertainty leads you to make different decisions than you would when exposed to other kinds of uncertainty then it is probably far safer to assume quantum collapse. Sure, it may make you look silly and your decision making would be somewhat complicated by the extra complexity in your model. But at least you'll refrain from doing anything stupid.

comment by Wei Dai (Wei_Dai) · 2009-09-19T09:18:18.918Z · LW(p) · GW(p)

Why does my existence feel smooth, unlike the topology of a branch point in a graph?

This is an interesting question, and after thinking it over, I think our brains actively smooth out the past and erase the other branches. For example, consider the throw of a die. Before you throw it, you see the future as branched, each branch being thinner/less real than the worldline that you're on. But after you see the outcome, that branched view of the world disappears, as if all the other branches were pruned, and the branch with the outcome that you observed instantly grows to be as thick as the main trunk.

We know intellectually that in MWI the branch we are on is splitting and getting thinner continuously, but it doesn't feel that way. What if it did feel that way? Can we still motivate ourselves to think and act when we viscerally know that our decisions are affecting a smaller and smaller part of the universe? I guess evolution didn't want to solve that problem, so it created for us the illusion that our branch isn't getting thinner instead.

Replies from: Bongo, PhilGoetz, CarlShulman↑ comment by Bongo · 2009-09-19T15:47:52.691Z · LW(p) · GW(p)

I guess evolution didn't want to solve that problem, so it created for us the illusion that our branch isn't getting thinner instead.

Humans would have innate knowledge of many worlds if a specific illusion hadn't evolved to counter it?

By default, evolved organisms have innate knowledge of many worlds?

There are features in biological evolution that are conditional on many worlds being true?

I hope I misunderstood you...

Replies from: Wei_Dai↑ comment by Wei Dai (Wei_Dai) · 2009-09-20T01:38:57.346Z · LW(p) · GW(p)

No, I'm not claiming any of those things. By "illusion" I meant a view of the universe that doesn't correspond to reality, not a patch to override a default.

↑ comment by CarlShulman · 2009-09-19T16:19:31.136Z · LW(p) · GW(p)

Can we still motivate ourselves to think and act when we viscerally know that our decisions are affecting a smaller and smaller part of the universe?

Given TDT/UDT our decisions aren't so confined.

comment by Christian_Szegedy · 2009-09-18T21:24:07.979Z · LW(p) · GW(p)

I am having a hard time following zombie arguments. I can't really see what they add to the discussion.

Am I correct to see that you argue that although MWI is true, you will experience one universe and the others are filled with zombies? This is practically SWI in MWI disguise.

comment by AllanCrossman · 2009-09-19T01:19:29.202Z · LW(p) · GW(p)

Though not exactly a quantum immortality believer, I take it more seriously than most...

Objections mostly seem to come down to the idea that, if I split in two, and then one of me dies a minute later, its consciousness doesn't magically transfer over to the other me. And so "one of me" has really died.

However, I see this case as being about as bad as losing a minute's worth of memory. On the reductive view of personal identity, there's no obvious difference. There is no soul flying about.

Is there a difference between these four cases:

- I instantly lose a minute's memory due to nanobot action

- I am knocked unconscious and lose a minute's memory

- I die and am replaced by a stored copy of me from a minute ago

- I die, but I had split into two a minute ago

I'm not seeing it...

(Well, admittedly in the final case I also "gain" a minute's memory.)

Replies from: Vladimir_Nesov, Lightwave↑ comment by Vladimir_Nesov · 2009-09-19T01:27:05.568Z · LW(p) · GW(p)

In all the cases you listed, the remaining-you has the same moral weight as the you-if-nothing-would-happen. Arguably, the person on one side of a quantum coin only owns a half of moral weight, just like other events that you weight with Born probabilities, so the analogy breaks.

↑ comment by Lightwave · 2009-09-20T08:37:24.880Z · LW(p) · GW(p)

Here's what I find confusing about the last two cases:

I die, but I had split into two a minute ago

Here I think we agree that a person has really died, which is bad if you believe that all people, once existing, are morally significant (or are they?).

I die and am replaced by a stored copy of me from a minute ago

In the previous case, the copy was created before the person died, whereas in this case, the copy is created after the person dies. But why would the time the copy was created be relevant at all with regard to the answer to the question "Did anyone really die?" If someone died in the first case, then someone also died in the second case, since creating a copy is in no way causally connected with the dying of the person. So killing someone and creating a copy afterwards would have the same moral weight as creating a copy and then killing one of the people.

comment by Nubulous · 2009-09-18T23:34:59.582Z · LW(p) · GW(p)

Isn't the quantum part of Quantum Russian Roulette a red herring, in that the only part it plays is to make copies of the money? All the other parts of the thought-experiment work just as well in a single world where people-copiers exist.

To make the situations similar, suppose our life insurance company has been careless, and we get a payout for each copy that dies. Do you have someone press [COPY], then kill all but one of the copies before they wake?

comment by Aurini · 2009-09-18T23:08:58.625Z · LW(p) · GW(p)

In other words, MWI explains how James Bond pulls it off. Sweet.

EDIT: And furthermore, Homer Simpson is the Universe's way of balancing out: http://en.wikipedia.org/wiki/You_Only_Move_Twice

Okay, okay, I'll stop! ;)

comment by Mallah · 2009-12-01T19:32:48.144Z · LW(p) · GW(p)

Supposedly "we get the intuition that in a copying scenario, killing all but one of the copies simply shifts the route that my worldline of conscious experience takes from one copy to another"? That, of course, is a completely wrong intuition which I feel no attraction to whatsoever. Killing one does nothing to increase consciousness in the others.

See "Many-Worlds Interpretations Can Not Imply 'Quantum Immortality'"