Shard Theory - is it true for humans?

post by Rishika (rishika-bose) · 2024-06-14T19:21:47.997Z · LW · GW · 7 comments


And is it a good model for value learning in AI?

(Read on Substack: https://recursingreflections.substack.com/p/shard-theory-is-it-true-for-humans)

TLDR

Shard theory proposes a view of value formation where experiences lead to the creation of context-based ‘shards’ that determine behaviour. Here, we go over psychological and neuroscientific views of learning, and find that while shard theory’s emphasis on context bears similarity to types of learning such as conditioning, it does not address top-down influences that may decrease the locality of value-learning in the brain.

What’s Shard Theory (and why do we care)?

In 2022, Quintin Pope and Alex Turner posted ‘The shard theory of human values [AF · GW]’, where they described their view of how experiences shape the value we place on things. They give an example of a baby who enjoys drinking juice, and eventually learns that grabbing at the juice pouch, moving around to find the juice pouch, and modelling where the juice pouch might be, are all helpful steps in order to get to its reward.

‘Human values’, they say, ‘are not e.g. an incredibly complicated, genetically hard-coded set of drives, but rather sets of contextually activated heuristics…’ And since, like humans, AI is often trained with reinforcement learning, the same might apply to AI.

The original post is long (over 7,000 words) and dense, but Lawrence Chan helpfully posted a condensation of the topic in ‘Shard Theory in Nine Theses: a Distillation and Critical Appraisal [AF · GW]’. In it, he presents nine (as might be expected) main points of shard theory, ending with the last thesis: ‘shard theory as a model of human values’. ‘I’m personally not super well versed in neuroscience or psychology’, he says, ‘so I can’t personally attest to [its] solidity…I’d be interested in hearing from experts in these fields on this topic.’ And that’s exactly what we’re here to do.

A Crash Course on Human Learning

Types of learning

What is learning? A baby comes into the world and is inundated with sensory information of all kinds. From then on, it must process this information, take whatever’s useful, and store it somehow for future use.

There are various places in the brain where this information is stored, for various purposes. Looking at these types of storage, or memory, can help us understand what’s going on:

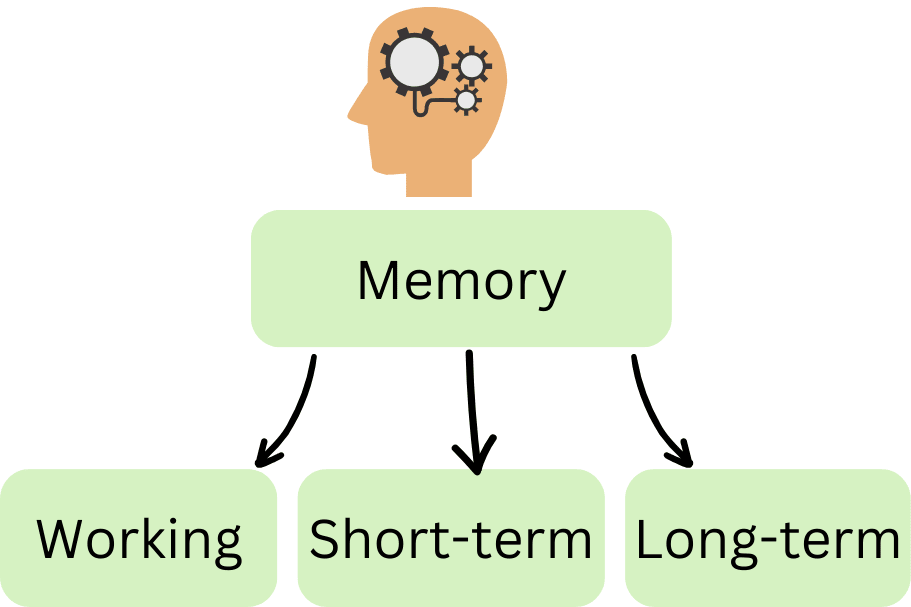

3 types of memory

We often group memory types by the length of time we hold on to them - ‘working memory’ (while you do some task), ‘short-term memory’ (maybe a few days, unless you revise or are reminded), and ‘long-term memory’ (effectively forever). Let’s take a closer look at long-term memory:

Types of long-term memory

We can broadly split long-term memory into ‘declarative’ and ‘nondeclarative’. Declarative memory is stuff you can talk about (or ‘declare’): what the capital of your country is, what you ate for lunch yesterday, what made you read this essay. Nondeclarative covers the rest: a grab-bag of memory types including knowing how to ride a bike, getting habituated to a scent you’ve been smelling all day, and being motivated to do things you were previously rewarded for (like drinking sweet juice). For most of this essay, we’ll be focusing on the last type: conditioning.

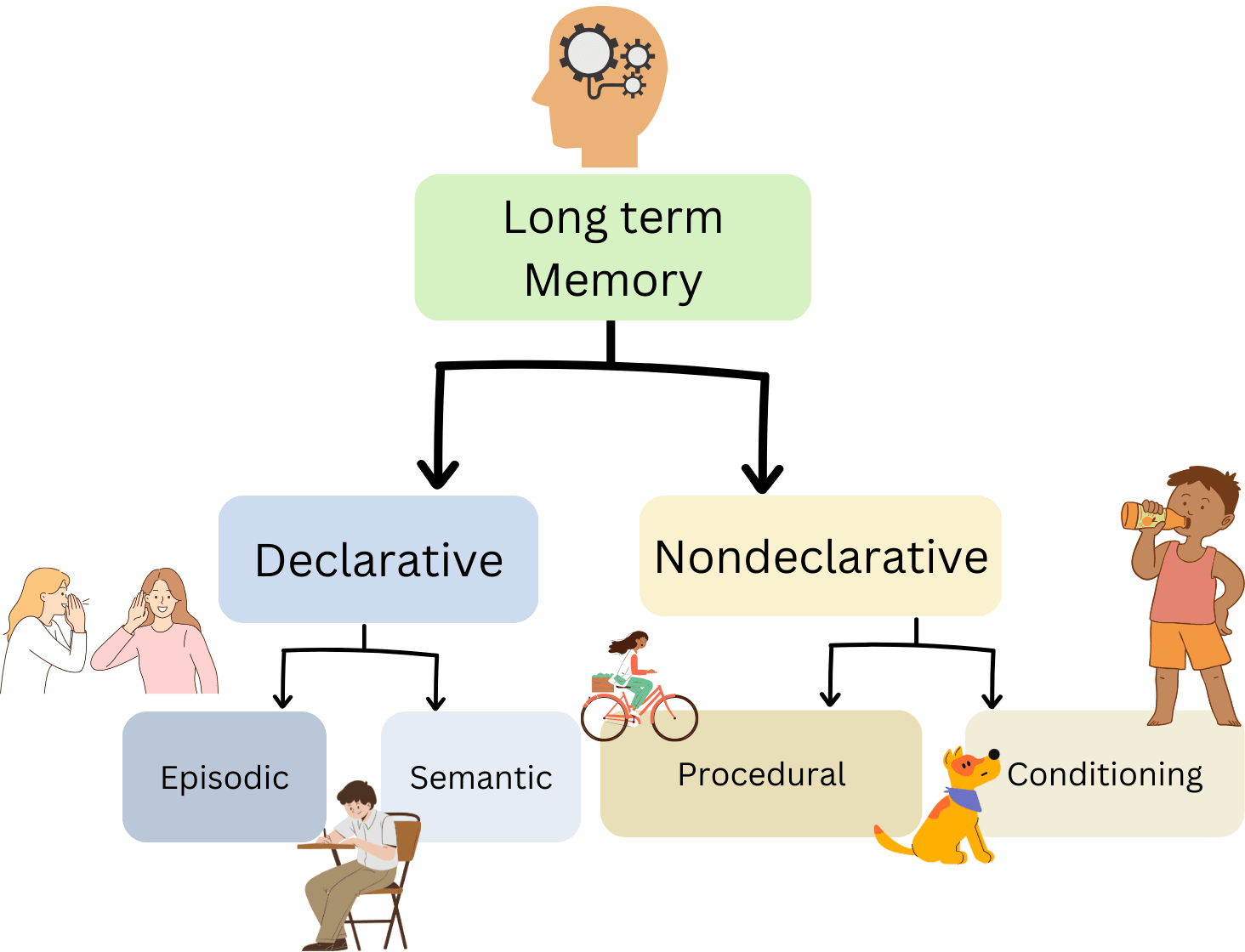

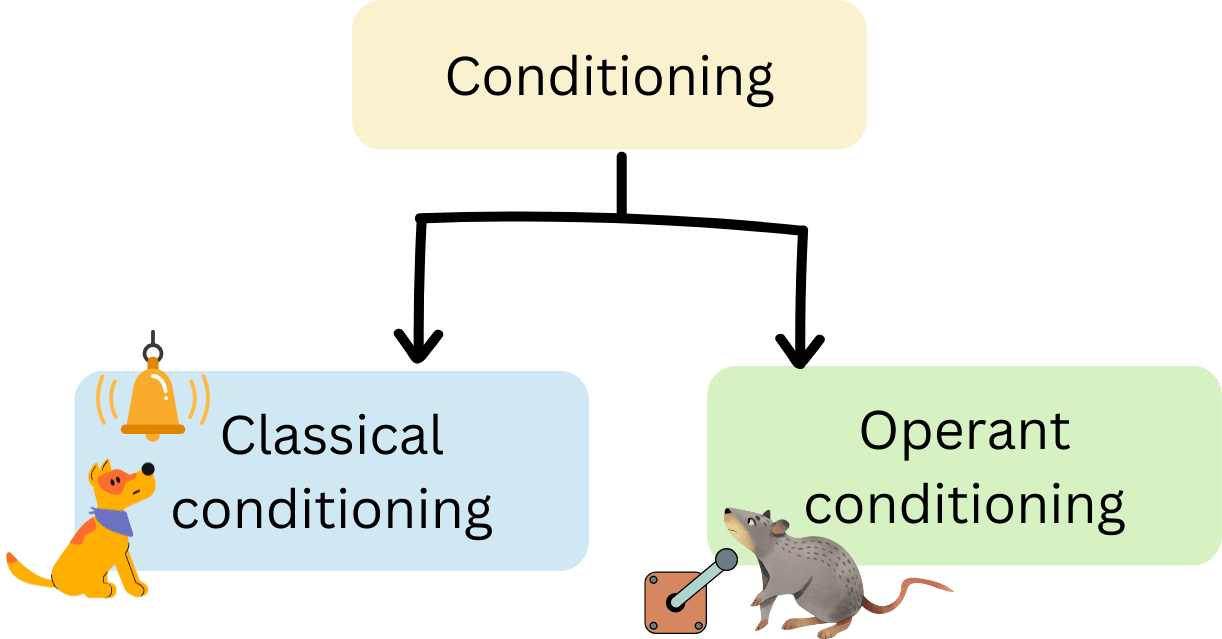

Types of conditioning

Conditioning

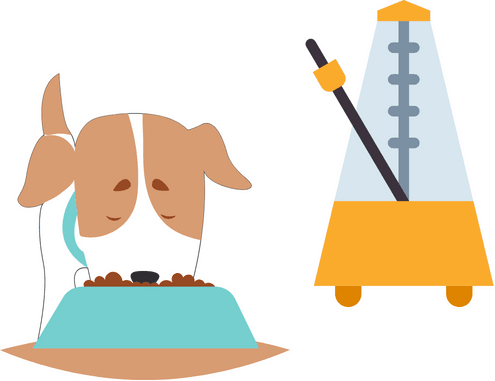

Sometime in the 1890s, a physiologist named Ivan Pavlov was researching salivation using dogs. He would feed the dogs with powdered meat, and insert a tube into the cheek of each dog to measure their saliva. As expected, the dogs salivated when the food was in front of them. Unexpectedly, the dogs also salivated when they heard the footsteps of his assistant (who brought them their food).

Fascinated by this, Pavlov started to play a metronome whenever he gave the dogs their food. After a while, sure enough, the dogs would salivate whenever the metronome played, even if no food accompanied it. These findings would lead to the theory of classical conditioning, which influences how psychologists understand learning and reward to this day.

A few years later, a psychologist named Thorndike was researching if cats could be taught to escape puzzle boxes. He would place them in wooden boxes with a switch somewhere, and see how long it took them to learn to step on the switch and open the door.

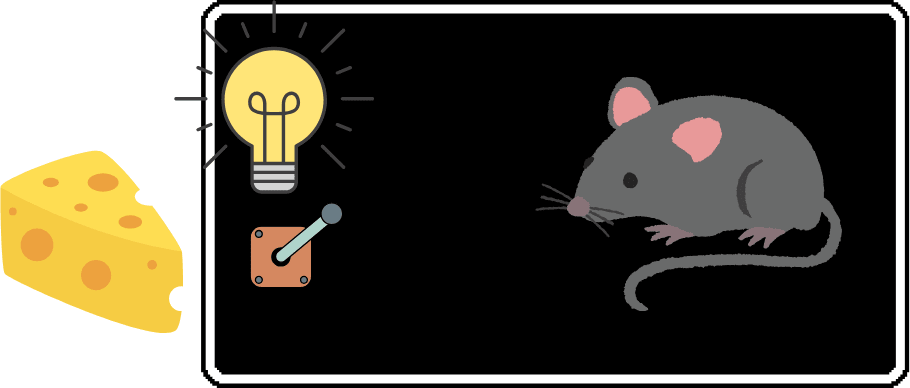

Another American psychologist named B. F. Skinner took an interest in Thorndike’s findings. He placed rats in boxes with a variety of stimuli: some did nothing, some led to food, and some led to punishments such as electric shocks. Experimenting with various mixtures of rewards and punishments, at different timings and in different amounts, he theorised there were different ‘schedules’ of conditioning that could lead to different behaviours. Skinner later became known as the father of ‘operant conditioning’: a variation on Pavlov’s classical conditioning that describes how the consequences of our actions influence the actions we take.

A Skinner Box

The mouse learns to press the lever, to deliver the cheese and cause the bulb to turn on.

But what, exactly, is going on inside the brains of these little mice as they learn to pull levers, get food and avoid shocks? The answer wouldn’t come until half a century later, when a psychologist named Milner messed up his experiment with electrodes and mice.

Reward circuits

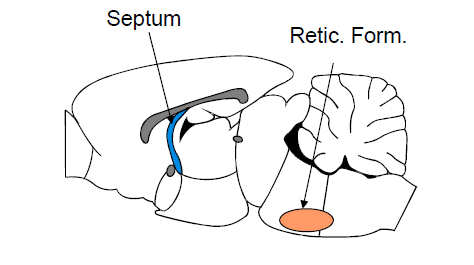

Milner was studying a small part of the rat’s brain, the reticular formation, and its effect on learning. He stuck electrodes into the reticular formations of a group of rats, turned them on, and checked if the rats were behaving any differently. They weren’t - except for one.

It turned out that one rat had the electrode stuck in entirely the wrong place. Milner, a social scientist by training with no surgery experience, had put the electrode on the other side of the brain entirely, in what is known as the septum (in his defence, rat heads are very small). Rats with electrodes in the septums loved it - if given a lever that would turn on the electrode, they would press it till exhaustion, ignoring food and water even when starving and near death.

They weren’t even close…

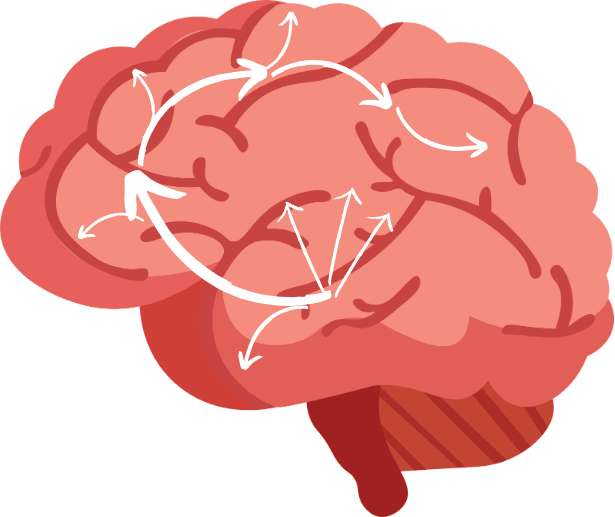

This experiment was repeated with other parts of the brain, showing a variety of places that had the same result. These places all had one thing in common - they were connected by dopaminergic neurons, or neurons that make dopamine, originating from another section of the brain known as the ventral tegmental area (the VTA). This entire group became known as the mesolimbic and mesocortical dopamine system, or more simply: the reward system.

Reward system in humans (a rough illustration)

These pathways go from the VTA to many parts of the brain, including the amygdala, a part of the brain involved with fear and emotion; the nucleus accumbens, involved with motivation and behaviour; and the prefrontal cortex, involved with planning and decision making. Almost all the areas receiving these inputs have pathways going back to the VTA, completing the reward ‘circuit’.

Let’s go over an idea of what might be happening in the juice pouch example. A child sees a juice pouch, and drinks some juice. The sweetness of the juice is registered, and the information sent up to the brain by various pathways, one of which makes it to the VTA. The VTA releases dopamine, reaching - among other places - the prefrontal cortex (involved with decision making).

The next time the child sees a juice pouch, it is registered in the prefrontal cortex (among other places). The prefrontal cortex sends down this information back to the VTA, which sends it out to other regions - including those involved with motivation, behaviour, and emotion. The child feels happy, and reaches for the juice, the sweetness of which will reinforce this cycle all over again.

Now, that’s a good story for how things might work with just a child and a juice box. But in most situations, we’re surrounded by multiple things, all calling for our attention - juice, candy, Netflix, homework, the weird rustling sound to our left… How do we decide what to do?

Here’s where things get tricky.

Attention

In the early 2000s, a young neurologist called William Seeley was studying a rare disease called frontotemporal dementia. Patients showed a strange lack of reaction to their surroundings and responsibilities: they stopped working, didn’t pay attention to their spouse, didn’t react to embarrassment or other social cues. In extreme cases, they even stopped responding to food or water.

This was roughly a decade after the invention of functional MRI, which had revolutionised neuroscience by allowing us, at an unprecedented level of detail, to observe activity in the entire brain over time. Excited by the possibilities, Seeley decided to use fMRI not just to map the specific part of the brain he was interested in (the ‘frontoinsular cortex’) but all parts of the brain that showed activity at the same time as it. What he and his colleagues found would become one of the first large-scale brain networks we knew of, a pattern of activity stretching across the brain to influence our every thought: the salience network.

The salience network and regions functionally connected to it

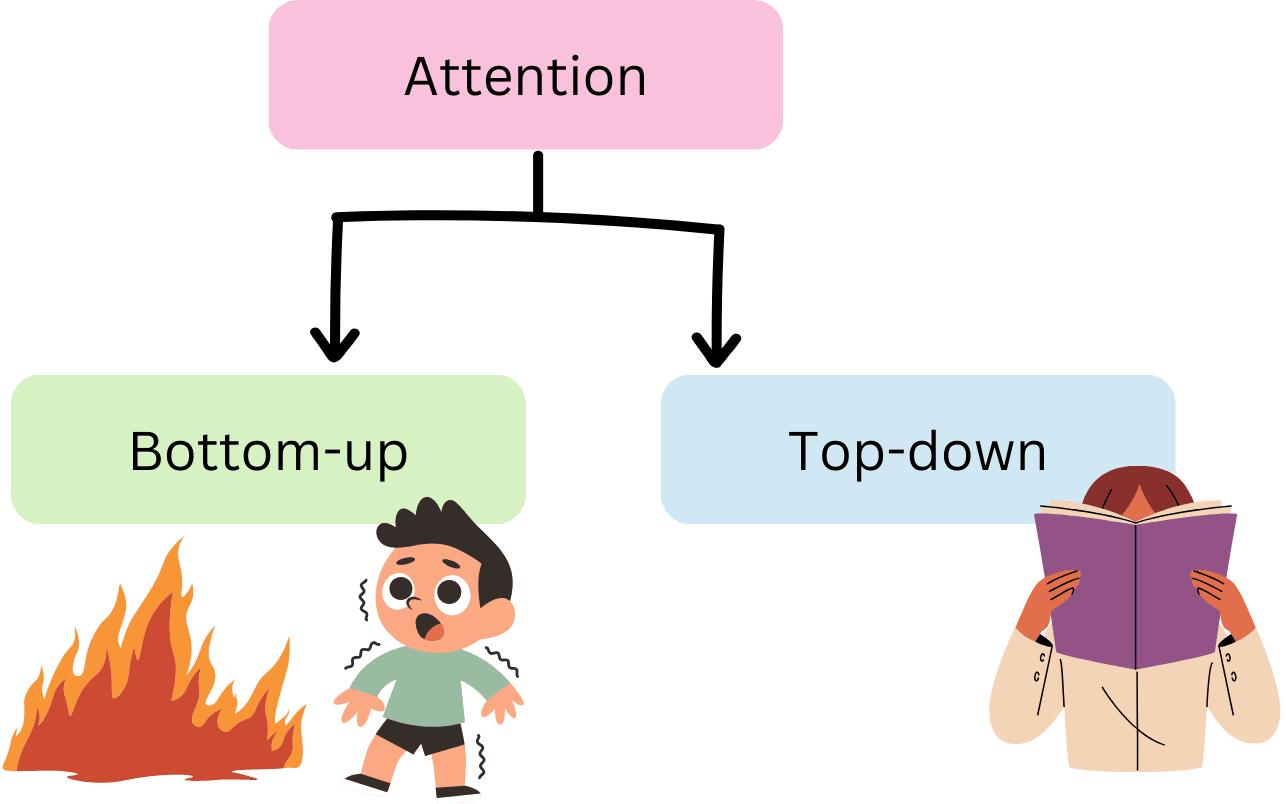

The salience network has been found to be involved in - as you might expect - filtering stimuli from our surroundings that are immediately salient to us, whether that’s due to experience, emotional reactions, or just bright colours that jump out at us. What the salience network does not do, however, is mediate top-down influences on attention, such as thinking ‘Wow, I should really be focusing on this lecture right now’.

This gap was filled by a paper published in 2002, by researchers Corbetta and Shulman. They review the salience network and its influence on what they suggest is bottom-up attention, and propose another network: the dorsal attention system. This, they suggest, influences top-down attention, allowing you to choose what to focus on based on your goals. Moreover, these systems interact: the salience network can act as a ‘circuit breaker’ for the dorsal one, breaking your attention to your lecture to notify you of the flames and smell of smoke nearby.
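To make the ‘circuit breaker’ idea concrete, here is a toy sketch. It is only an illustration, not a model taken from Corbetta and Shulman: the function name `choose_focus`, the salience scores and the `interrupt_threshold` value are all made up for this example. Top-down attention stays on the current goal unless some other stimulus is salient enough to break in.

```python
def choose_focus(stimuli, goal, interrupt_threshold=0.8):
    """Pick what to attend to.

    stimuli: dict mapping a stimulus name to a bottom-up salience score in [0, 1]
             (hypothetical numbers, not measured quantities).
    goal: the stimulus that top-down attention is currently trying to hold.
    interrupt_threshold: assumed salience level at which the bottom-up
             system acts as a 'circuit breaker'.
    """
    most_salient = max(stimuli, key=stimuli.get)
    if most_salient != goal and stimuli[most_salient] >= interrupt_threshold:
        # Bottom-up interrupt: something too salient to ignore.
        return most_salient
    # Otherwise top-down focus holds.
    return goal


# A mildly distracting phone buzz does not break focus on the lecture...
print(choose_focus({"lecture": 0.2, "phone buzz": 0.5}, goal="lecture"))
# ...but the smell of smoke does.
print(choose_focus({"lecture": 0.2, "smell of smoke": 0.95}, goal="lecture"))
```

A hard threshold is the simplest possible choice here; the real interaction between the two networks is presumably far more graded.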

Two types of attention

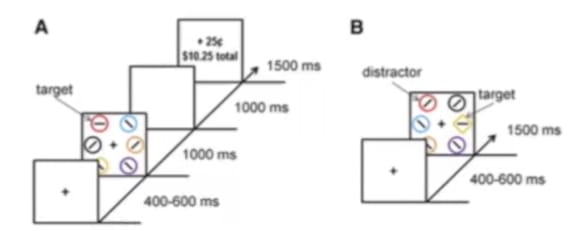

What’s the connection with conditioning and reward systems? A study published in 2016, ‘The Role of Dopamine in Value-Based Attentional Orienting’ by Anderson et al., looked at how dopamine and reward networks affected what we pay attention to. They carried out several experiments with little diagrams of coloured circles. Each time, participants had to find a certain-coloured circle - and some of the colours led to rewards of money. They found that people improved more quickly at finding the circles that had led to money in the past, with their attention being immediately captured by these money-making circles - and the more dopamine they released, the more they showed this behaviour.

Participants would see a bunch of coloured circles, and had to find a given colour.

For some colours, this led to money.

Thus we can think of attention as bottom-up and top-down, where bottom-up relevance includes things we’re conditioned to respond to, in addition to the things we’re wired from birth to respond to (such as bright colours and sudden noises). What, however, decides the top-down relevance?

Consciousness

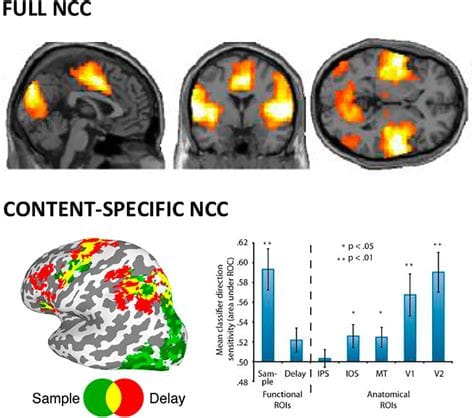

Where attention research is a brand new field, filled with evolving theories resting on state-of-the-art technology, theorising about consciousness is an extremely old one. When modern neuroscience turned its attention to it a few decades ago, most researchers attempted to abandon philosophising and focus on ‘the neural correlates of consciousness’ (or NCCs).

From a study looking at NCCs

This turned out to be difficult. How do you distinguish consciousness from everything else that’s going on in the brain? From the things that caused, and that resulted from, your conscious decision? To grapple with this, researchers began to shift their focus onto overall theories of consciousness - and controversies and disagreements began to abound.

One of the major players in the field was global workspace theory, which portrays the mind as a theatre. Most of the work happens in the dark, but attention acts as a spotlight, bringing something into the ‘global workspace’ to be processed. Updated versions include ‘global workspace dynamics’, which take into account the whole-brain aspects of conscious processing found through recent studies (such as the attentional ones we discussed). Other theories include integrated information theory (IIT), which suggests feedback loops create the experience of consciousness; higher-order theories, a group of theories that say consciousness occurs when one region of the brain is represented by another; and many, many more. ‘Theories of consciousness’, by Anil Seth and Tim Bayne, gives a great overview if you’re interested in knowing (ha) more.

Global Workspace Theory’s vision of consciousness

What all these theories have in common is what they’re attempting to describe - the process through which we experience, think, plan, and ultimately set the goals that decide what we’ll choose to pay attention to.

Now we’re paying attention. How do we decide what to do?

Emotion

Throughout this process, we have been feeling emotions.

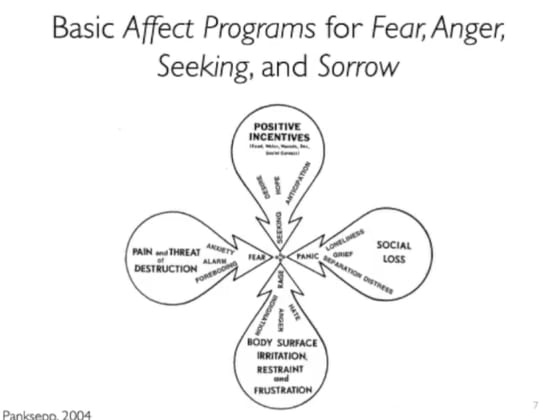

In the early 1990s, an Estonian-American scientist named Jaak Panksepp came up with the term ‘affective neuroscience’ to describe the study of the neuroscience of emotion. He suggested that the brain systems that deal with emotions exchange information with areas responsible for conscious thinking, and mediate behavioural responses. He proposed four main emotional systems: the seeking system, the fear system, the rage system, and the panic system.

The 4 main systems, and the situations that usually activate them (From Panksepp, 2004)

The seeking system, closely interrelated with the reward circuits discussed above, encouraged people to approach whatever triggered the emotion. Fear, on the other hand, encouraged avoidance. The rage system was another one that led to approach behaviours; and finally panic, closely related to social behaviour and feelings like grief, could lead to both. Input from conscious processes, in turn, could change these behaviours to suit longer-term goals or beliefs.

The fear system in action

The Full Picture

Let’s look at the full picture. We look at (or hear, or smell) things, which get stored in our memory - first in working memory, then in short-term, and then finally in long-term memory. Depending on the type of memory it is, it could be stored in declarative or nondeclarative memory, and if it’s nondeclarative, it could be one of many types, including conditioning.

Conditioning occurs when we’re exposed to something that gives a natural reward - such as sweet juice - alongside something else - such as a juice box. It can also, through operant conditioning, help us learn behaviours - such as picking up a juice box to get to the juice. This is mediated through the reward circuits in our brain, which release dopamine every time we drink the juice.
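As a rough illustration of how such an association might build up, here is a minimal sketch using the Rescorla-Wagner rule, a standard textbook model of classical conditioning. This model is not discussed in this essay, so treat it as an outside assumption; the learning rate `alpha` and the reward value of 1.0 are arbitrary. On each trial the cue’s associative strength moves a fraction of the way towards the reward that actually followed it.

```python
def rescorla_wagner(trials, alpha=0.3, reward_value=1.0, initial=0.0):
    """Return the cue's associative strength after each trial.

    trials: list of booleans, True if the reward (sweet juice) followed
            the cue (the juice box) on that trial.
    alpha: learning rate (an assumed value, chosen only for illustration).
    initial: associative strength before the first trial.
    """
    strength = initial
    history = []
    for rewarded in trials:
        target = reward_value if rewarded else 0.0
        prediction_error = target - strength   # surprise on this trial
        strength += alpha * prediction_error   # move part of the way to the target
        history.append(strength)
    return history


# Ten pairings of juice box and juice: the association grows and levels off.
acquisition = rescorla_wagner([True] * 10)
# Ten trials where the box turns out to be empty: the association fades again.
extinction = rescorla_wagner([False] * 10, initial=acquisition[-1])

print([round(v, 2) for v in acquisition])
print([round(v, 2) for v in extinction])
```

The update is driven by the gap between expected and received reward, which is one common way of thinking about what the dopamine signal might be tracking; the essay itself does not commit to that interpretation.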

Once we’ve learnt that juice gives us a reward, the salience network makes any juice boxes in our environment jump out at us. We feel a positive emotion through the seeking system, which encourages us to go towards the juice. However, our conscious mind might interfere - ‘that’s from your coworker’s lunch, he’ll be upset if you drink it’ - and make us change our actions.

Where does Shard Theory come in?

Shard theory proposes to explain how we learn to value different things. The authors define [AF · GW] ‘values’ in shard theory as ‘contextual influences on decision-making.’ Does its view of learning fit in with what we’ve discussed?

To help us understand this step-by-step, let’s go through Lawrence Chan’s nine main theses of shard theory [AF · GW]. For each thesis, we can compare against the view of learning we’ve talked about so far.

While the theses are pretty descriptive, they can contain some confusing terminology, so for fuller explanations of each see the original post here [AF · GW].

9 main theses of shard theory

1. Agents are well modeled as being made of shards---contextually activated decision influences [AF · GW].

We’ve seen that context does influence decisions, though it’s mediated by other processes like conscious thought and emotion.

2. Shards generally care about concepts inside the agent's world model, as opposed to pure sensory experiences or maximizing reward. [AF · GW]

If we keep drinking juice, the reward from its sweetness makes us feel more positive about (and more likely to approach) the juice box it comes in - but we don’t necessarily recognise that with our conscious mind. It’s possible that, through interaction between the reward circuits and our cortex, we form a concept surrounding the juice box and decide that we like it - but it’s also possible that never happens, and we simply reach for the juice box instinctively in the hopes of sweet reward.

3. Active shards bid for plans in a way shaped by reinforcement learning. [AF · GW]

Chan explains: ‘shards …attempt to influence the planner in ways that have “historically increased the probability of executing plans” favored by the shard. For example, if the juice shard bringing a memory of consuming juice to conscious attention has historically led to the planner outputting plans where the baby consumes more juice, then the juice shard will be shaped via reinforcement learning to recall memories of juice consumption at opportune times.’
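The bidding picture Chan describes can be sketched as a toy program. Everything here is hypothetical and invented for illustration (the `Shard` class, the context strings, the bid numbers and the `reinforce` update); neither Chan’s post nor the original shard theory post specifies an implementation. Each shard is switched on by a context feature, active shards add their bids to candidate plans, and the plan with the highest total wins.

```python
from dataclasses import dataclass


@dataclass
class Shard:
    name: str
    trigger: str   # context feature that activates this shard
    bids: dict     # plan name -> bid strength (negative = bids against)

    def active(self, context):
        return self.trigger in context


def choose_plan(shards, context, plans):
    """Sum the bids of all active shards and return the winning plan."""
    totals = {plan: 0.0 for plan in plans}
    for shard in shards:
        if shard.active(context):
            for plan, bid in shard.bids.items():
                if plan in totals:
                    totals[plan] += bid
    return max(totals, key=totals.get)


def reinforce(shard, plan, reward, rate=0.5):
    """Crude stand-in for reinforcement: a rewarded bid grows stronger."""
    shard.bids[plan] = shard.bids.get(plan, 0.0) + rate * reward


juice = Shard("juice", "juice pouch visible", {"grab pouch": 1.0})
health = Shard("be healthy", "on a diet", {"drink water": 1.5, "grab pouch": -1.0})
plans = ["grab pouch", "drink water"]

# Only the juice shard is active, so its plan wins.
print(choose_plan([juice, health], {"juice pouch visible"}, plans))
# Both shards are active, and the health shard outbids the juice shard.
print(choose_plan([juice, health], {"juice pouch visible", "on a diet"}, plans))
# After a strongly rewarded juice experience, the juice shard wins again.
reinforce(juice, "grab pouch", reward=4.0)
print(choose_plan([juice, health], {"juice pouch visible", "on a diet"}, plans))
```

Note what the sketch leaves out: there is no conscious planner, no attention and no emotion in it, which is exactly the gap the rest of this section is concerned with.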

Let’s review how conditioning and consciousness interact. There are outputs from reward circuits to the cortex, and also from the cortex back to the reward circuits. If you do something - such as drinking some juice - signals go from your tongue to a variety of places, including both the cortex and the source of the reward system, the VTA. They then interact to influence your behaviour.

There might be cases where you’re unconscious of whatever is giving you a reward - for example, if it happens too quickly for you to notice. However, it doesn’t seem like there’s a way for the reward system to control whether you’re conscious of something or not.

4. The optimization target is poorly modeled by the reward function [AF · GW]; and

8. "Goal misgeneralization" is not a problem for AI alignment. [AF · GW]

Whenever we train a model, we have a limited amount of data. We hope that what the model learns from interacting with this data will generalise to the rest of the world, but this isn’t always true. Chan explains that, according to shard theory, we don’t need to worry about this kind of goal misgeneralisation, because by looking at shards we can understand how reward and training data interact to produce the agent’s behaviour.

He says: ‘because most shards end up caring about concepts inside a world model, the agent’s actions are determined primarily by shards that don’t bid to directly maximize reward….For example, a shard theorist might point to the fact that (most) people don’t end up maximizing only their direct hedonic experiences.’

As we noted earlier, we may or may not have conscious concepts of the things we’re conditioned to do, and while conscious thinking can interfere with our behaviour around reward-related objects, it’s not the only - and often not even the primary - driver. Reward systems are complex. For example, the first glass of juice may taste great, but the second mediocre, and the third disgusting - but when a stimulus bypasses this satiation, as most drugs do, we become addicted.
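The juice example can be put into a few lines of code. This is a deliberately crude sketch with made-up numbers: the linear drop in reward and the function names `reward_for_glass` and `glasses_consumed` are assumptions for illustration, not a claim about how satiation actually works in the brain.

```python
def reward_for_glass(n, initial=1.0, drop=1.0, satiates=True):
    """Reward from the n-th glass (n starts at 1).

    With satiation, each extra glass is worth `drop` less than the last,
    so the values run great (1.0), mediocre (0.0), disgusting (-1.0).
    Without satiation, every glass is as rewarding as the first.
    """
    if not satiates:
        return initial
    return initial - drop * (n - 1)


def glasses_consumed(max_glasses, satiates=True):
    """Keep drinking while the next glass is still rewarding."""
    count = 0
    for n in range(1, max_glasses + 1):
        if reward_for_glass(n, satiates=satiates) <= 0:
            break
        count += 1
    return count


print([reward_for_glass(n) for n in (1, 2, 3)])
print(glasses_consumed(10, satiates=True))
print(glasses_consumed(10, satiates=False))
```

With satiation the loop stops almost immediately; with the brake removed it runs until the supply runs out, which is the pattern the addiction example points at.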

In the long run, it’s true, we make conscious plans of action, and when what we’re doing goes against the plan our conscious mind will interfere. Those plans may be influenced by concepts we’ve formed from conditioning (‘that juice was so good, how can I get it again?’). Humans, however, are great imitative learners - we begin to imitate other people from when we’re babies - and gain large amounts of information through cultural, social and explicit transmission. Most of the concepts informing our long-term plans likely come not from our direct experiences but what we’ve been told or observed from other people.

5. Agentic shards will seize power. [AF · GW]

Chan illustrates: ‘For example, a more agentic “be healthy” shard might steer me away from a candy store, preventing the candy shard from firing.’

It’s true that a conscious plan to be healthy might interfere with a conditioned desire to eat candy. If it were certain to overcome it, however, surely we wouldn’t need to go on diets! As the prevalence of addiction shows, conscious or ‘agentic’ thinking does have the power to influence our behaviour, but is often in balance with or even overwhelmed by our conditioned drives.

6. Value formation is very path dependent and relatively architecture independent. [AF · GW]

Shard theory predicts that the order of experiences you are exposed to (the ‘path’) can change your values a lot, but it doesn't necessarily matter what the structure of your brain looks like as long as it performs the basic function of forming ‘shards’.

Let’s look at different species of animals. It’s true that between humans, one person may value very different things from the next. Depending on their experiences, one might like eating fish while the other hates it, one might prefer playing football in their spare time while the other prefers reading, and so on.

Taken as a species, however, humans are probably much more like each other than like a dog. Dogs are fairly intelligent, and their brains are not completely dissimilar from humans’ - they have most of the same parts. Exposed to the same experiences, however, a dog’s values would likely be quite different.

7. We can reliably shape an agent's final values by changing the reward schedule. [AF · GW]

Inspired by shard theory, Alex Turner wrote ‘A shot at the diamond-alignment problem’, which suggests how you could use the ideas of shard theory to successfully train a model to manufacture diamonds. First, he would ensure the model has the concept of a diamond in its world-model; then put the model in different training situations to encourage the growth of a diamond ‘shard’.

We do often influence the experiences people are exposed to in the hope of changing what values they pick up. In a way, early childhood and education research are a study in shaping values by varying experiences. It’s a tricky task, however, perhaps partly because it’s impossible to control everything a child is exposed to, but also because it can be hard to predict what they will learn.

Children are sometimes taught by encouraging them to form conscious concepts of things: for example, they could be shown the food pyramid and told what a healthy meal should look like. However, they could also be taught through conditioning: punishing a child for eating too much candy, or rewarding them for broccoli. Conceptual learning may work better later in life, as our cortex’s ability to inhibit our reward system grows stronger. However, it rarely forms the bulk of our motivation - conscious thought is an occasional interference, as we discussed earlier, and not the sole driver of most behaviour.

(8. was addressed along with 4)

9. Shard theory is a good model of human value formation. [AF · GW]

Human learning is often contextual, as shard theory predicts. However, it is also highly distributed throughout the entire brain - a single experience with juice sends signals to parts of the brain involved with remembering experiences, understanding concepts, conditioned reward, and more, all of which may or may not interact with each other. We do not need a concept to be part of our world model to be conditioned to desire it; nor does conditioning necessarily create a concept. Conscious knowledge happens with or without conditioning, and the mechanisms by which different parts of our experience compete are influenced by large-scale salience networks in the brain, emotional systems, and more.

Conclusions

We can see that, while shard theory captures the contextual side of conditioning-based learning, there’s a much bigger picture that it doesn’t address. Of the wide range of learning and memory types we discussed, conditioning is only one.

While bottom-up influences on our attention may make us act differently in some contexts than others, our conscious mind can draw connections between disparate experiences and combine them into one coherent worldview. We may reach out instinctively to a juice box, but if we’re on a diet, our conscious mind may break in and redirect us towards water (through the top-down ‘circuit-breaker’ discussed earlier). If we’re on a diet, but heard somewhere that diets aren’t good for our mental health, our conscious mind might break in again and remind us to cut loose a little. And so on and on, with connections drawn through and between each idea in our mind, bringing us closer to coherence.

Of course, that doesn’t always happen. Shard theory is still useful as a reminder of the reactive, incoherent nature of minds like ours, with consciousness reaching through only occasionally. Still, a treatment of learning that does not address top-down influences and the spectre of consciousness is incomplete.

Next Steps

Exactly how much does top-down attention influence our actions? How do top-down and bottom-up influences interact to form interconnected circuits of distributed learning? And can we expect consciousness to emerge from reinforcement learning agents?

I hope to address these questions in upcoming posts, and welcome any discussion or input on them from you.

Thanks

To my co-students and teachers at AI Safety Fundamentals by Bluedot, for their insights and feedback, and to my boyfriend for reading through all the revisions :)

References

Anderson, B. A., Kuwabara, H., Wong, D. F., Gean, E. G., Rahmim, A., Brašić, J. R., George, N., Frolov, B., Courtney, S. M., & Yantis, S. (2016). The role of dopamine in value-based attentional orienting. Current Biology, 26(4), 550–555. https://doi.org/10.1016/j.cub.2015.12.062

Baars, B. J., Geld, N., & Kozma, R. (2021). Global workspace theory (GWT) and prefrontal cortex: Recent developments. Frontiers in Psychology, 12. https://doi.org/10.3389/fpsyg.2021.749868

Boly, M., Massimini, M., Tsuchiya, N., Postle, B. R., Koch, C., & Tononi, G. (2017). Are the neural correlates of consciousness in the front or in the back of the cerebral cortex? clinical and neuroimaging evidence. The Journal of Neuroscience, 37(40), 9603–9613. https://doi.org/10.1523/jneurosci.3218-16.2017

Chan, L. (2022, December). Shard theory in nine theses: A distillation and critical appraisal. AI Alignment Forum. https://www.alignmentforum.org/posts/8ccTZ9ZxpJrvnxt4F/shard-theory-in-nine-theses-a-distillation-and-critical

Fallon, F. (n.d.). Integrated Information Theory of Consciousness. Internet Encyclopedia of Philosophy. https://iep.utm.edu/integrated-information-theory-of-consciousness/

Panksepp, J. (1982). Toward a general psychobiological theory of emotions. Behavioral and Brain Sciences, 5(3), 407–422. https://doi.org/10.1017/s0140525x00012759

Pope, Q., & Turner, A. (2022). The shard theory of human values. AI Alignment Forum. https://www.alignmentforum.org/posts/iCfdcxiyr2Kj8m8mT/the-shard-theory-of-human-values

Seeley, W. W. (2019). The salience network: A neural system for perceiving and responding to homeostatic demands. The Journal of Neuroscience, 39(50), 9878–9882. https://doi.org/10.1523/jneurosci.1138-17.2019

Seth, A. K., & Bayne, T. (2022). Theories of consciousness. Nature Reviews Neuroscience, 23(7), 439–452. https://doi.org/10.1038/s41583-022-00587-4

Turner, A. (2022, October 6). A shot at the diamond-alignment problem. AI Alignment Forum. https://www.alignmentforum.org/posts/k4AQqboXz8iE5TNXK/a-shot-at-the-diamond-alignment-problem

Other resources

YouTube. (2023, June 15). 22 - Shard theory with Quintin Pope. https://www.youtube.com/watch?v=o-Qc_jiZTQQ&list=PLmjaTS1-AiDeqUuaJjasfrM6fjSszm9pK&index=25

My old class notes :P

7 comments

Comments sorted by top scores.

comment by Seth Herd · 2024-06-14T23:05:24.789Z · LW(p) · GW(p)

Excellent! I concur entirely. I had a 23-year career in computational cognitive neuroscience, focusing on the reward system and its interactions with other learning systems. Your explanations match my understanding.

This reminds me that I've never gotten around to writing any critique of shard theory.

In sum, I think Shard Theory is quite correct about how RL networks learn, and correct that they should be relatively easy to align. I think it is importantly wrong about how humans learn, and relatedly, wrong about how easy it's likely to be to align a human-level AGI system. I think Shard Theory conflates AI with AGI, and assumes that we won't add more sophistication. This is a prediction which could be right, but I think it's both probably wrong, and unstated. This leads to the highly questionable conclusion that "AI is easy to control". Current AI is, but assuming that means near-future systems will also be is highly overconfident.

Reading this brought this formulation to mind: Where Shard Theory is importantly wrong about alignment is that it applies to RL systems, but AGI won't be just an RL system. It won't be just a collection of learned habits, as RL systems are. It will need to have explicit goals, like humans do when they're using executive function (and conscious attention). I've tried to express the importance of such a steering subsystem [LW · GW] for human cognitive abilities.

Having such systems changes the logic of competing shards somewhat. I think there's some validity to this metaphor, but importantly, shards can't hide any complex computations, like predictions of outcomes, from each other, since these route through consciousness. You emphasize that, correctly IMO.

The other difference between humans and RL systems is that explicit goals are chosen through a distinct process.

Finally, I think there's another important error in applying shard theory to AGI alignment or human cognition in the claim reward is not the optimization target. That's true when there's no reflective process to refine theories of what drives reward. RL systems as they exist have no such process, so it's true for them that reward isn't the optimization target. But with better critic networks that predict reward, as humans have, more advanced systems are likely to figure out what reward is, and so make reward their optimization target.

↑ comment by Rishika (rishika-bose) · 2024-06-15T00:40:48.106Z · LW(p) · GW(p)

Thanks, I really appreciate that! I've just finished an undergrad in cognitive science, so I'm glad that I didn't make any egregious mistakes, at least.

"AGI won't be just an RL system ... It will need to have explicit goals": I agree that this if very likely. In fact, the theory of 'instrumental convergence [? · GW]' often discussed here is an example of how an RL system could go from being comprised of low-level shards to having higher-level goals (such as power-seeking) that have top-down influence. I think Shard Theory is correct about how very basic RL systems work, but am curious about if RL systems might naturally evolve higher-level goals and values as they become more larger, more complex and are deployed over longer time periods. And of course, as you say, there's always the possibility of us deliberately adding explicit steering systems.

"shards can't hide any complex computations...since these route through consciousness.": Agree. Perhaps a case could be made for people being in denial of certain ideas they don't want to accept, or 'doublethink', where people hold two views about a subject that contradict each other. Maybe these could be considered different shards competing? Still, it seems a bit of a stretch, and certainly doesn't describe all our thinking.

"I think there's another important error in applying shard theory to AGI alignment or human cognition in the claim reward is not the optimization target": I think this is a very interesting area of discussion. I kind of wanted to delve into this further in the post, and talk about our aversion to wireheading, addiction and the reward system, and all the ways humans do and don't differentiate between intrinsic rewards and more abstract concepts of goodness, but figured that would be too much of a tangent haha. But overall, I think you're right.

↑ comment by ErisApprentice · 2024-06-15T00:06:14.591Z · LW(p) · GW(p)

comment by Martin Randall (martin-randall) · 2024-06-16T02:37:21.411Z · LW(p) · GW(p)

6. Value formation is very path dependent and relatively architecture independent.

...

Taken as a species, however, humans are probably much more like each other than like a dog. Dogs are fairly intelligent, and their brains are not completely dissimilar from humans - they have most of the same parts. Exposed to the same experiences, however, a dog’s values would likely be quite different.

Humans have very different experiences than dogs. A big part of that is that they are humans, not dogs. Humans do not have the experience of having a tail. This seems sufficient to explain why humans don't value chasing their tails. Overall the differences between human brains and dog brains seem trivial compared to the differences between human experiences and dog experiences.

The text here claims that if a dog shared the same experiences as a human their "values would likely be quite different". It is hard to fathom how that hypothetical could be coherent. We can't breed dog-human hybrids with the brain of a dog and the body of a human. If we did they would still have different experiences, notably the experience of having a brain architecture ill-suited to operating their body. Also a hybrid's brain architecture would develop differently to a dog's. The area dedicated to processing smells would not grow as large if hooked up to a human's inferior nose.

I don't think we can argue for or against shard theory based on our ideas of what might happen in such hypotheticals. Someone else can write "exposed to the same experiences, however, a dog’s values would likely be quite similar". See also Dav Pilkey's Dog Man (fiction).

Replies from: rishika-bose↑ comment by Rishika (rishika-bose) · 2024-06-16T17:41:21.050Z · LW(p) · GW(p)

Hi Martin, thanks a lot for reading and for your comment! I think what I was trying to express is actually quite similar to what you write here.

'If we did they would still have different experiences, notably the experience of having a brain architecture ill-suited to operating their body.' - I agree. If I understand shard theory right, it claims that underlying brain architecture doesn't make much difference, and e.g. the experience of trying to walk in different ways, and failing at some but succeeding at others, would be enough to lead to success. However, I'm pointing out that a dog's brain would still be ill-suited to learning things such as walking in a human body (at least compared to a human's brain), showing the importance of architecture.

My goal was to try to illustrate the importance of brain structure through an absurd thought experiment, not to create a coherent scenario - I'm sorry if that led to confusion. The argument does not rest on the dog; the dog is meant to serve as an illustration of the argument.

At the end of the day, I think the authors of shard theory also concede that architecture is important in some cases - the difference seems to be more of a matter of scale. I'm merely suggesting that architecture may be a little more important than they consider it, and pointing to the variety of brain architectures and resulting values in different animals as an example.

Replies from: martin-randall↑ comment by Martin Randall (martin-randall) · 2024-06-21T15:18:00.511Z · LW(p) · GW(p)

Pointing to the variety of brains and values in animals doesn't persuade me because they also have a wide variety of environments and experiences. Shard theory predicts a wide variety of values as a result (tempered by convergent evolution).

One distinctive prediction is in cases where the brain is the same but the experiences are different. You agree that "between humans, one person may value very different things from the next". I agree and would point to humans throughout history who had very different experiences and values. I think the example of football vs reading understates the differences, which include slavery vs cannibalism.

The other distinctive prediction is in cases where the brain is different but the experiences are the same. So for example, consider humans who grow up unable to walk, either due to a different brain or due to a different body. Shard theory predicts similar values despite these different causes.

The shard theory claim here is as quoted, "value formation is ... relatively architecture independent". This is not a claim about skill formation, e.g. learning to walk. It's also not a claim that architecture can never be causally upstream of values.

I see shard theory as a correction to Godshatter [LW · GW] theory and its "thousand shards of desire". Yudkowsky writes:

And so organisms evolve rewards for eating, and building nests, and scaring off competitors, and helping siblings, and discovering important truths, and forming strong alliances, and arguing persuasively, and of course having sex...

Arguing persuasively is a common human value, but shard theory claims that it's not encoded into brain architecture. Instead it's dependent on the experience of arguing persuasively and having that experience reinforced. This can be the common experience of a child persuading their parent to give them another cookie.

There is that basic dependence of having a reinforcement learning system that is triggered by fat and sugar. But it's a long way from there to here.

Replies from: rishika-bose↑ comment by Rishika (rishika-bose) · 2024-06-28T16:09:17.081Z · LW(p) · GW(p)

Thanks for the response, Martin. I'd like to try to get to the heart of what we disagree on. Do you agree that, given a sufficiently different architecture - e.g. a human who had a dog's brain implanted somehow - would grow to have different values in some respect? For example, you mention arguing persuasively. Argument is a pretty specific ability, but we can widen our field to language in general - the human brain has pretty specific circuitry for that. A dog's brain that lacks the appropriate language centers would likely never learn to speak, let alone argue persuasively.

I want to point out again that the disagreement is just a matter of scale. I do think relatively similar values can be learnt through similar experiences for basic RL agents; I just want to caution that for most human and animal examples, architecture may matter more than you might think.

Replies from: martin-randall↑ comment by Martin Randall (martin-randall) · 2024-07-05T01:27:10.119Z · LW(p) · GW(p)

Thanks for trying to get to the heart of it.

Do you agree that, given a sufficiently different architecture - e.g. a human who had a dog's brain implanted somehow - would grow to have different values in some respect?

Yes, according to shard theory. And thank you for switching to a more coherent hypothetical. Two reasons are different reinforcement signals and different capabilities.

Reinforcement signals

Brain -> Reinforcement Signals -> Reinforcement Events -> Value Formation

The main way that brain architecture influences values, according to Quintin Pope and Alex Turner, is via reinforcement signals:

Assumption 3: The brain does reinforcement learning. According to this assumption, the brain has a genetically hard-coded reward system (implemented via certain hard-coded circuits in the brainstem and midbrain). In some fashion, the brain reinforces thoughts and mental subroutines which have led to reward, so that they will be more likely to fire in similar contexts in the future. We suspect that the “base” reinforcement learning algorithm is relatively crude, but that people reliably bootstrap up to smarter credit assignment.

Dogs and humans have different ideal diets. Dogs have a better sense of smell than humans. Therefore it is likely that dogs and humans have different hard-coded reinforcement signals around the taste and smell of food. This is part of why dog treats smell and taste different to human treats. Human children often develop strong values around certain foods. Our hypothetical dog-human child would likely also develop strong values around food, but predictably different foods.

As human children grow up, they slowly develop values around healthy eating. Our hypothetical dog-human child would do the same. Because they have a human body, these healthy eating values would be more human-like.

Capabilities

Brain -> Capabilities -> Reinforcement Events -> Value Formation

Let's extend the hypothetical further. Suppose that we have a hybrid dog-human with a human body, human reinforcement learning signals, but otherwise a dog brain. Now does shard theory claim they will have the same values as a human?

No. While shard theory is based on the theory of "learning from scratch" in the brain, "Learning-from-scratch is NOT blank-slate" [LW · GW]. So it's reasonable to suppose, as you do, that the hybrid dog-human will have many cognitive weaknesses compared to humans, especially given its smaller cortex.

These cognitive weaknesses will naturally lead to different experiences, different reinforcement events. Shard theory claims that "reinforcement events shape human value shards" [LW · GW]. Accordingly, shard theory predicts different values. Perhaps the dog-human never learns to read, and so it does not value reading.

On the other hand, once you learn an agent's capabilities, this largely screens off [? · GW] its brain architecture. For example, to the extent that deafness influences values, the influence is the same for deafness caused by a brain defect and deafness caused by an ear defect. The dog-human would likely have similar values to a disabled human with similar cognitive abilities.
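As a rough sketch, the "screens off" claim can be written as a conditional independence statement. The symbols are introduced here purely for illustration and do not come from the shard theory posts: $A$ is brain architecture, $C$ is the agent's capabilities, $R$ is its reinforcement signals (held at human values in this hypothetical), and $V$ is the values it ends up with.

```latex
\[
  P(V \mid C, R, A) \;=\; P(V \mid C, R)
\]
```

Read this way, architecture can still be causally upstream of values, but only through $C$ and $R$. The word "largely" above suggests the equality is meant as an approximation rather than an exact identity.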

Where is the disagreement?

My current guess is that you agree with most of the above, but disagree with the distilled claim that "value formation is very path dependent and relatively architecture independent" [LW · GW].

I think the problem is that this is very "distilled". Pope and Turner claim that some human values are convergent: "We think that many biases are convergently produced artifacts of the human learning process & environment" [LW · GW]. In other words, these values end up being path independent.

I discuss above that "architecture independent" is only true if you hold capabilities and reinforcement signals constant. The example given in the distillation is "a sufficiently large transformer and a sufficiently large conv net, given the same training data presented in the same order".

I'm realizing that this distillation is potentially misleading when moved from the AI context to the context of natural intelligence.