Shard Theory in Nine Theses: a Distillation and Critical Appraisal

post by LawrenceC (LawChan) · 2022-12-19T22:52:20.031Z · LW · GW · 30 commentsContents

Introduction Related work The nine theses of shard theory 1.Agents are (well modeled as being) made up of shards 2. Shards care about concepts inside the agent’s world model 3. Active shards bid for plans in a way shaped via reinforcement learning 4. The optimization target is poorly modeled by the reward function 5. Agentic shards will seize power 6. Value formation is very path dependent and architecture independent 7. We can reliably shape final values by varying the reward schedule 8. “Goal misgeneralization” is not a problem for AI alignment 9. Shard theory is a good model for human value formation My opinions on the validity of each of the nine theses Discussion and future work A formalism for shard theory Engagement with existing psychology, neuroscience, and genetics literature Experimental validation of key claims None 30 comments

TL;DR: Shard theory [? · GW] is a new research program started by Quintin Pope and Alex Turner. Existing introductions tend to be relatively long winded and aimed at an introductory audience. Here, I outline what I think are the nine main theses of shard theory as of Dec 2022, so as to give a more concrete introduction and critique:

- Agents are well modeled as being made of shards---contextually activated decision influences.

- Shards generally care about concepts inside the agent's world model, as opposed to pure sensory experiences or maximizing reward.

- Active shards bid for plans in a way shaped by reinforcement learning.

- The optimization target is poorly modeled by the reward function.

- Agentic shards will seize power.

- Value formation is very path dependent and relatively architecture independent.

- We can reliably shape an agent's final values by changing the reward schedule.

- "Goal misgeneralization" is not a problem for AI alignment.

- Shard theory is a good model of human value formation.

While I broadly sympathize the intuitions behind shard theory, I raise reservations with each of the theses at the end of the post. I conclude by suggesting areas of future work in shard theory.

Acknowledgements: Thanks to Thomas Kwa for the conversation that inspired this post. Thanks also to Alex Turner for several conversations about shard theory and Charles Foster and Teun Van Der Weij for substantial feedback on this writeup.

Epistemic status: As I’m not a member of Team Shard, I’m probably misrepresenting Team Shard’s beliefs in a few places here.

Introduction

Shard theory [? · GW] is a research program that aims to build a mechanistic model between training signals and learned values in agents. Drawing large amounts of inspiration from particular hypotheses about the human reward learning system, shard theory posits that the values of agents are best understood as sets of contextually activated heuristics shaped by the reward function.

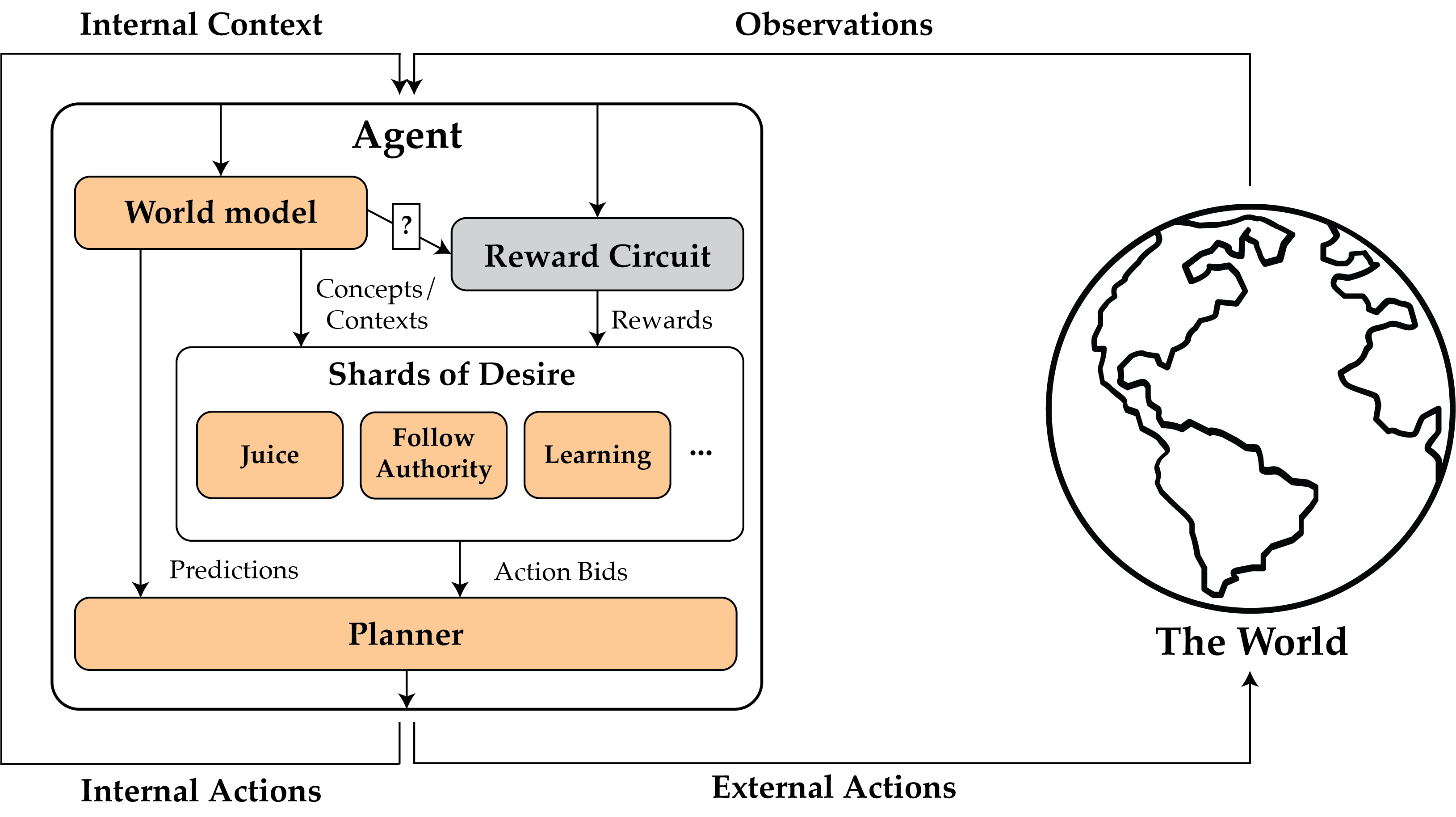

Learned components are orange, while hardcoded components are gray. The question mark on the line between the world model and the reward circuitry is because shard theorists are divided on whether the reward circuitry can depend on the world model.

In this post, I’ll attempt to outline nine of the main theses of shard theory, as of late 2022. I’ll explain the novelty of each thesis, how it constrains expectations, and then give my opinions and suggest some few experiments that could be done to test the theses.

Existing explainers of shard theory tend to be aimed at a relatively introductory audience and thus are relatively verbose. By default, I’ll be addressing this post to someone with a decent amount of AI/ML research background, so I’ll often explain things with reference to AI/ML terminology or with examples from deep learning. This post is aimed primarily at explaining what I see as the core claims, as opposed to justifying them; any missing justifications should not be attributed to failures of Team Shard. It’s also worth noting that shard theory is an ongoing research program and not a battle-tested scientific theory, so many of these claims are likely to be revised or clarified over time.

Related work

Alex Turner’s Reward is not the optimization target [AF · GW] is probably the first real “shard theory” post. The post argues that, by default, the learned behavior of an RL agent is not well understood as maximizing reward. Instead, the post argues that we should try to study how reward signals lead to value formation in more detail. He uses a similar argument to argue against the traditional inner and outer alignment split in Inner and outer alignment decompose one hard problem into two extremely hard problems [LW · GW].

David Udell’s Shard Theory: An Overview [LW · GW] first introduces the shard theory research program, as well as the terminology and core claims of shard theory. Turner and Pope’s The shard theory of human values [AF · GW] applies the shard theory to human value formation. It outlines three assumptions that the shard theory of human values makes regarding humans (the cortex is randomly initialized, the brain does self-supervised learning, and the brain does reinforcement learning). Other posts flesh out parts of the shard theory of human values (e.g. “Human value and biases are inaccessible to the genome” [? · GW]) and justify the use of human values as a case study for alignment. (“Humans provide an untapped wealth of evidence about alignment” [? · GW], “Evolution is a bad analogy for AGI” [? · GW].) Geoffrey Miller’s The heritability of human values: A behavior genetic critique of Shard Theory [LW · GW] argues that the high heritability of many kinds of human values contradicts the core claims of shard theory.

Thomas Kwa’s Failure modes in a shard theory alignment plan [LW · GW] gives definitions for many of the key terms of shard theory and outlines a possible shard theory alignment plan, before raising several objections. Namely, he argues that it’s challenging to understand how rewards lead to shard formation and it’s hard to predict the process of value formation. Alex Turner’s A shot at the diamond-alignment problem [? · GW] applies the shard theory of human value to generate a solution for a variant of the diamond maximizer problem, while Nate Soares’s Contra shard theory in the context of the diamond maximizer [LW · GW] raises four objections to this attempted solution.

Finally, this post is similar in motivation to Jacy Reese Anthis’s “Unpacking ‘Shard Theory’ as Hunch, Question, Theory, and Insight” [LW · GW], which divides the shard theory research program into the four named components. By contrast, this post aims to outline the key claims of the current shard theory agenda (for example, we don't describe the shard question except in passing).

The nine theses of shard theory

1.Agents are (well modeled as being) made up of shards

The primary claim of shard theory is that agents are best understood as being composed of shards: contextually activated computations that influence decisions and are downstream of historical reinforcement events. For example, Turner and Pope give the example of a “juice-shard” in a baby [AF · GW] that is formed by reinforcement on rewards activated by the taste of sugar, that then influences the baby to attempt to drink juice in contexts (similar to those) where they’ve had juice in the past.

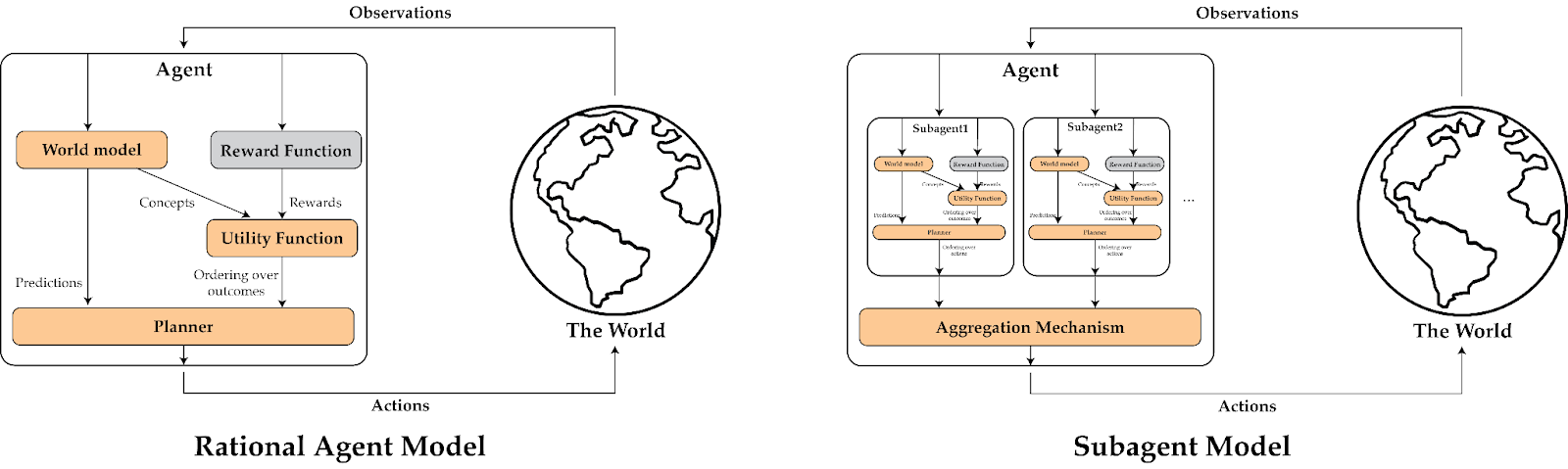

To better understand what shard theory is claiming here, it’s worth comparing it to three other ways of understanding agents that are being trained via reinforcement learning: the rational agent model, where an agent is thought of as maximizing a particular utility function (often assumed to be the value function of the external reward); subagent models [? · GW], where each “agent” is best modeled as being a collection of subagents, each of which maximize a utility function (shaped by some (possibly identical) reward); and Steven Brynes’s learning and steering model [LW · GW], which models the human brain as composed of a large learning subsystem “trained from scratch” and a much smaller steering subsystem consisting of mainly hardcoded components that guides the learning subsystem.

- Unlike the rational agent model, agents in shard theory are composed of many subparts that “want” different things, and should not be modeled as defined by a preference ordering over worlds + an optimal/near optimal search algorithm. That is, the baby can have a juice-shard, an “interact with adults”-shard, a “play with toys”-shard, etc., which in turn can lead to seemingly irrational behavior such as flip-flopping between an interesting adult and attempting to grab and drink juice from a bottle.

- Unlike in subagent models, the subcomponents of agents are not themselves always well modeled as (relatively) rational agents. For example, there might be shards that are inactive most of the time and only activate in a few situations. In addition, shard theory suggests that all of the behavior of shards is formed via a combination of self-supervised learning of world models and reinforcement learning on the shards themselves. In contrast, subagent models often allow for subagents with desires that are “hardcoded”, e.g. via evolutionary processes. In the baby example, the juice-shard might only activate when they see a particular type of juice bottle, which they’ve drunk juice from.

- Unlike in the learning and steering model, all of the shards are shaped primarily via reinforcement learning; only the rewards, learning algorithms, and a few stimulus reactions can be hardcoded. In the learning and steering model, the steering subsystem contains hardcoded social instincts and complex drives such as disgust or awe. (EDIT: I think the claim I made here is imprecise, see the response from Steve Byrnes here [LW(p) · GW(p)].) In contrast, in the shard theory baby example, there are no in-built “listen to authority”-shards, the baby learns a “listen to authority”-shard as a consequence of their hardcoded reward circuitry and learning algorithm.

In other words, shard theory is both more general than other models (in the sense of allowing for many contextually activated shards that don’t have to be rational), but is also narrower than other models (in the sense that values must be downstream of past reinforcement events).

As far as I can tell, there currently isn’t a super precise definition of a shard, nor is there a procedure that identifies shards given the description of an agent; this is an active area of research. Shard theory also does not purport to explain how exactly in-built reward circuitry is implemented.

2. Shards care about concepts inside the agent’s world model

A second claim of shard theory is that shards generally end up caring about concepts inside the agent’s world model, instead of direct sensory inputs. That is, most shards can be well modeled as bidding for plans on the basis of certain concepts and not raw sensory experiences. (Shard theory makes an implicit but uncontroversial assumption that smart agents will contain world models largely shaped via unsupervised learning.) For example, the aforementioned “juice-shard” cares about juice inside of the baby’s world model, as opposed to directly caring about sensory inputs associated with juice or maximizing the reward signal. That being said, there can still be shards that care about avoiding or seeking particular sensory inputs. Shard theory just predicts that most shards will end up binding to concepts inside a (unitary) world model.

3. Active shards bid for plans in a way shaped via reinforcement learning

Shard theory claims that the process that maps shards to actions can be modeled as making “bids” to a planner. That is, instead of shards directly voting on actions, they attempt to influence the planner in ways that have “historically increased the probability of executing plans” favored by the shard. For example, if the juice shard bringing a memory of consuming juice to conscious attention has historically led to the planner outputting plans where the baby consumes more juice, then the juice shard will be shaped via reinforcement learning to recall memories of juice consumption at opportune times. On the other hand, if raising the presence of a juice pouch to the planner’s attention has never been tried in the past, then we shouldn’t expect the juice shard to attempt this more so than any other random action.

This is another way in which shard theory differs from subagent models—by default, shards aren’t doing their own planning or search; they merely execute strategies that are learned via reinforcement learning.

As far as I can tell, shard theory does not make specific claims about what form these bids take, how a planner works, how much weight each shard has, or how the bids are aggregated together into an action.

4. The optimization target is poorly modeled by the reward function

It’s relatively uncontroversial that agents with significant learned components do not have to end up optimizing for their reward function. This is generally known as inner misalignment or goal misgeneralization, see for example Hubinger 2019, Langosco et al 2022, or Shah et al 2022.

However, shard theory makes a more aggressive claim—not only is it not necessary, as a consequence of how shards form, we should expect sufficiently large degrees of goal misgeneralization that thinking of an agent as maximizing rewards is a mistake (e.g. see Turner’s Inner and outer alignment decompose one hard problem into two extremely hard problems [LW · GW] and Reward is not the optimization target [LW · GW] for a more detailed discussion). Shard theory instead claims that we should instead directly model how the optimization target is shaped via the reward signal (“reward as chisel [LW · GW]”).

That is, because most shards end up caring about concepts inside a world model, the agent’s actions are determined primarily by shards that don’t bid to directly maximize reward. In rich environments with many possible actions, this will naturally cause the agent’s actions to deviate away from pure reward maximization. For example, a shard theorist might point to the fact that (most) people don’t end up maximizing only their direct hedonic experiences.

5. Agentic shards will seize power

While not all shards are agents, shard theory claims that relatively agentic shards exist and will eventually end up “in control” of the agent’s actions. Here, by “agentic shard”, shard theory refers to shards that have a specific goal that they attempt to achieve by behaving strategically in all contexts. In contrast, other shards may be little more than reflex agents. For example, a more agentic “be healthy” shard might steer me away from a candy store, preventing the candy shard from firing; a “do work” shard might steer me away from distractions like video games in favor of writing Alignment Forum content, etc. Competitiveness arguments imply that agentic shards that care about gaining power will end up in charge, while less agentic shards end up with progressively less influence on their agent’s policy.

One consequence of agentic shards steering away from situations that cause other shards to fire, is that it prevents the other shards from being reinforced. This implies that the nonagentic shards will slowly lose influence over time, as the agentic shards are still being reinforced.[1] This is probably sped along in many agents by implicit or explicit regularization, which may remove extraneous circuits over the course of training.

6. Value formation is very path dependent and architecture independent

Shard theory also claims that the values an agent ends up with is very path dependent. It’s trivially true that the final value of agents can depend on their initial experiences. A classic example of this is a q-learning agent in a deterministic environment with pessimistically initialized rewards and greedy exploration; once the q-learner takes a single trajectory and receives any rewards, they will be stuck following that trajectory forever.[2] Another classic result is McCoy et al’s BERTs of a feather do not generalize together, where varying the random seed and order of minibatches for fine-tuning the same BERT model lead to different generalization behavior.

Shard theory makes a slightly stronger claim: we should expect a large degree of path dependence for the values of agents produced by almost all current RL techniques, including most policy gradient algorithms.

Shard theorists sometimes also claim that value formation is relatively architecture independent [LW · GW]. For example, a sufficiently large transformer and a sufficiently large conv net, given the same training data presented in the same order, should converge to qualitatively similar values.

This second claim is very controversial—it’s a common claim in the deep learning literature that we should invent neural networks that contain certain inductive biases that allow them to develop more human-like values. (See for example Building Machines That Learn and Think Like People or Relational inductive biases, deep learning, and graph networks).

7. We can reliably shape final values by varying the reward schedule

Even if the final values of an agent are path dependent and architecture independent, this does not mean that we can reliably predict its final values. For example, it might be the case that the path dependency could be chaotic or depend greatly on the random initialization of the agent, or the agent’s values could change unpredictably during rapid capabilities generalization [AF · GW].

Shard theory claims that we can not only find a reliable map from training descriptions to final agent values, but invert this map to design training curricula that reliably lead to desirable values. (That being said, we might not be able to do it before truly transformative AI.) As an example, Alex Turner gives a sketch of a training curriculum that would lead an AI that reliably cares about diamonds in A shot at the diamond alignment problem [? · GW].

I think this is also a fairly controversial claim: most other approaches to building an aligned AI tend to assume that constructing this curriculum is not possible without strong additional assumptions (such as strong mechanistic interpretability or a practical solution to ELK [? · GW]) or only is possible for certain classes of AIs (such as myopic [LW · GW] or low-impact agents [? · GW]). That being said, I don’t think this is completely out there – for example, Jan Leike argues in What is inner alignment? that we can build a value-aligned AI using recursive reward modeling combined with distributional shift detection and safe exploration.

8. “Goal misgeneralization” is not a problem for AI alignment

A common way of dividing up the alignment problem is into “outer” alignment, where we devise an objective that captures what we want, and “inner” alignment, where we figure out how to build an agent that reliably pursues that objective. As mentioned in thesis 4, many alignment researchers have discussed the difficulty of avoiding inner misalignment.

As previously mentioned in thesis 4, shard theory posits that this decomposition is misleading, and that both inner and outer misalignment may be inevitable. However, shard theorists also claim that inner and outer misalignment can "cancel out" in reliable ways. By gaining a sufficiently good understanding of how agents develop values, we can directly shape the agent to have values amenable to human flourishing, even if we cannot come up with an outer aligned evaluation algorithm.

Shard theory argues that inner misalignment need not necessarily be bad news for alignment: instead, by developing a sufficiently good understanding of how shards form inside agents, we don’t need to think about inner alignment as a distinct problem at all. That is, even though we might not be able to specify a procedure that generates an outer aligned reward model, we might still be able to shape an agent that has desirable values.

9. Shard theory is a good model for human value formation

Finally, humans are the inspiration for the shard theory research program, and shard theory purports to be a good explanation of human behavior. (Hence the eponymous Shard Theory of Human Values [AF · GW].)

In some sense, this is not a necessary claim: shard theory can be a useful model of value formation in AIs, even if it is a relatively poor model of value formation in humans. For one, existing approaches to AGI development certainly start out significantly more blank-slate than human development, so even if humans have several hardcoded values, it’s certainly possible that shard theory would apply to the far more tabula rasa AI agents.

That being said, failing to be a good model of human behavior would still be a significant blow to the credibility of shard theory and would probably necessitate a significantly different approach to shard theory research.

My opinions on the validity of each of the nine theses

In this section I’ll present my thoughts on both the main claims of shard theory listed above. It’s worth noting that some of my disagreements here are a matter of degree as opposed to a matter of kind; I broadly sympathize with many of the intuitions behind shard theory, especially the need for more fine-grained mechanistic models of agent behavior.

Theses 1-3: the shard theory model of agency. I strongly agree that we should attempt to model the internal dynamics of agents in more detail than the standard rational agent model, and I believe that smart agents can generally be well understood as having world models (thesis 2) and that their internals are shaped via processes like reinforcement learning.

However, I’m not convinced that shards are the right level of analysis. Part of this is due to disagreements with later theses, which I’ll discuss later, and another part is due to my confusion about what a shard is and how I would identify shards in a neural network or even myself. (It’s also possible that shard theorists should prioritize finding more realistic examples.) Consequently, I believe that shard theorists should prioritize finding a working definition of a shard or a formalism through which shard theory can be studied and discussed more precisely (see the discussion section for more of my thoughts on these topics).

Thesis 4: reward is not the optimization target. I agree that reward is not always the optimization target, and consequently that Goodharting on the true reward is not the only way in which alignment schemes can fail (and models of the alignment problem that can only represent this failure mode are woefully incomplete).

However, I think there is a good reason to expect sophisticated AIs to exhibit reward hacking–like behavior: if you train your AIs with reinforcement learning, you are selecting for AIs that achieve higher reward on the training distribution. As AIs become more general and are trained on ever larger classes of tasks, the number of spurious features perfectly correlated with the reward shrink,[3] and we should expect agents that optimize imperfect correlates of the reward to be selected against in favor of strategies that directly optimize a representation of the reward. I also think that a significant fraction of this argument goes through an analogy with humans that may not necessarily apply to AIs we make.

My preferred metaphor when thinking about this topic is Rohin Shah’s “reward as optimization target is the Newtonian mechanics to reward as chisel’s general relativity”. While reward as optimization target is not a complete characterization of what occurs over the course of training an RL agent (and notably breaks down in several important ways), it’s a decent first approximation that is useful in most situations.

Thesis 5: agentic shards will seize power. I think insofar as shard theory is correct, the amount of agency in the weighted average of shards should increase over time as the agent becomes more coherent. That being said, I’m pretty ambivalent as to the exact mechanics through which this happens – it’s possible it looks like a power grab between shards, or it’s possible it’s closer to shards merging and reconciling.

Thesis 6: path dependence and architecture independence of value formation. As I said previously, I think that some degree of path dependence of values in RL (especially via underexploration) is broadly uncontroversial. However, as I’ve said previously, AIs that are trained to achieve high reward on a variety of tasks have a strong incentive to achieve high reward. Insofar as particular training runs lead to the formation of idiosyncratic values that aren’t perfectly correlated with the reward, we should expect training and testing to select against these values modulo deceptive alignment. (And I expect many of the pathological exploration issues to be fixed via existing techniques like large-scale self-supervised pretraining, imitation learning on human trajectories, or intrinsic motivation.) So while I still do expect a significant degree of path dependence, my guess is that it’s more productive to think about it in terms of preventing deceptive alignment as opposed to the mechanisms proposed by shard theory.

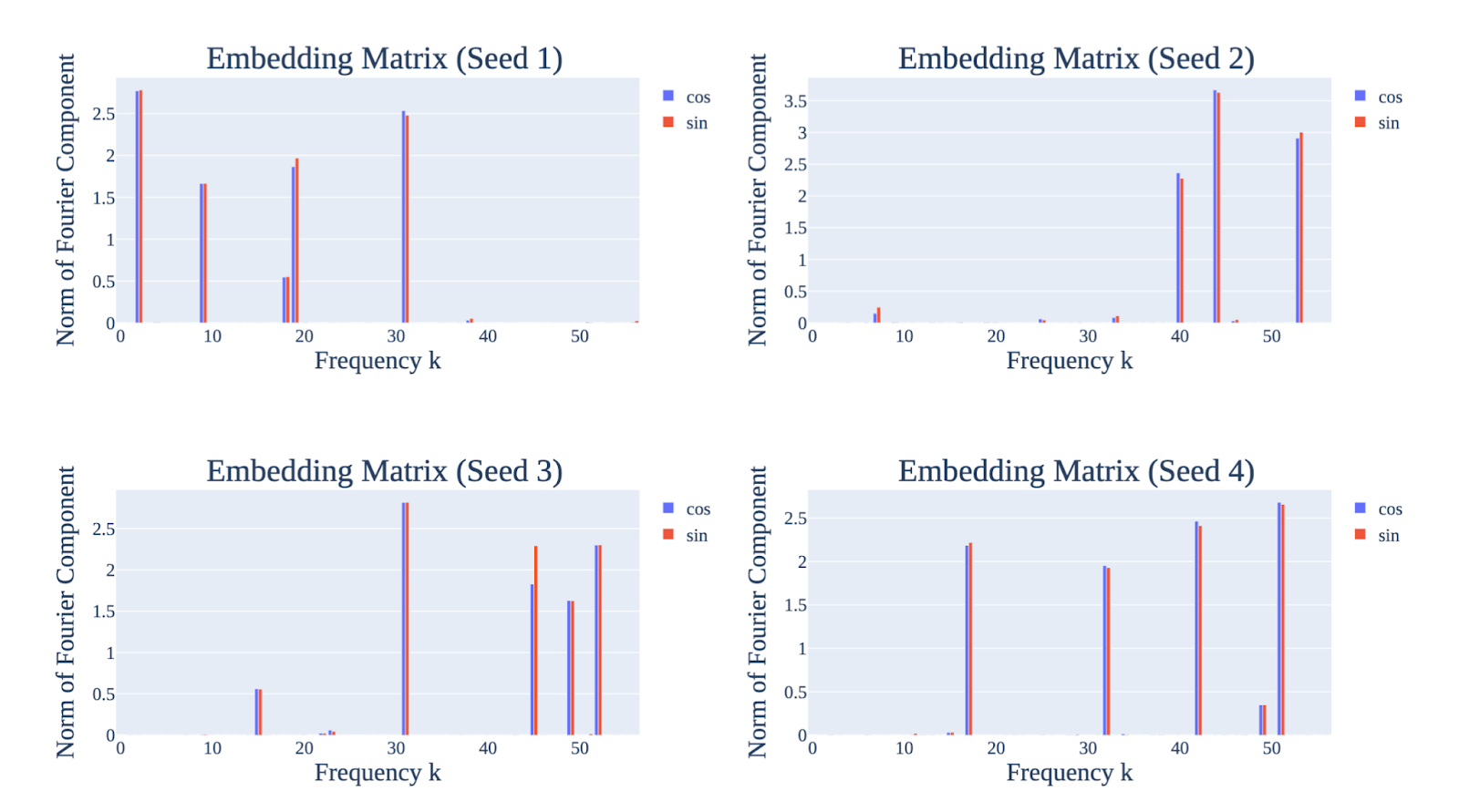

I am personally quite confused about architecture independence. On one hand, it seems like many different neural network architecture have comparable capabilities at similar scales. On the other hand, this seems to contradict many results in the machine learning literature. In terms of my own experience, I’ve found that mechanistic interpretability on small neural networks on algorithmic tasks often shows that the mechanisms of these neural networks (and thus their generalization properties) depend greatly on the architecture involved. For example, in Neel’s modular addition setting [LW · GW], a 1-layer full transformer with 4 heads learns to add in 3-5 key frequencies, while a 2-layer MLP (with a comparable amount of parameters) learns to add in up to 50 key frequencies. I expect that further mechanistic interpretability work on larger networks will help clarify this problem.

That being said, I think that many of the architectural differences we’ll see in the future will look like different ways to compose large transformers together and less like going from LSTM+Attention or conv nets to just transformers, which may have a larger effect on how values form than existing architectural differences.

Thesis 7: reliably shaping final values by varying the reward schedule. I agree that there exists a procedure in principle that allows us to reliably shape the final values of agents via only changing the order the agent encounters certain scenarios and the reward that they receive in each scenario. However, I expect this to be quite difficult to do in practice. For example, in Neel’s toy modular addition task, the frequencies that 1-layer full transformers converged on seemed to vary with random seed, even though the networks were trained with full batch gradient descent on the same dataset:

That being said, I think this is mainly an empirical question, and I’d like to see more concrete proposals and experiments validating this hypothesis in practice.

Thesis 8: necessity of studying goal misgeneralization. I’m broadly sympathetic to the approach of taking problems that are normally solved in two chunks and solving them end to end. I also think that the inner/outer alignment split is not particularly clean, and oftentimes introduces its own problems. Accordingly, I do think people should think more in the “reward as chisel” frame.

But while I agree with this approach directionally, I think that the inner/outer alignment split still has a lot of value. For example, it’s easier to work on approaches such as Debate or human feedback + ELK in the outer alignment frame by getting them to work well on average, while splitting off the difficulty of specifying human values allows us to more cleanly study approaches to preventing inner misalignment. While I agree that specifying a perfectly robust reward function (or even a method that will recover such a perfect reward in the limit of infinite data) is incredibly hard, it’s plausible to me that it suffices to have an overseer that is smarter than the agent it’s overseeing and that gets smarter as the agent gets smarter.

Thesis 9: shard theory as a model of human values. I’m personally not super well versed in neuroscience or psychology, so I can’t personally attest to the solidity or controversialness of shard theory as a theory of value formation. I’d be interested in hearing from experts in these fields on this topic.

That being said, I speculate that part of the disagreement I have with shard theorists is that I don’t think shard theory maps well onto my own internal experiences.

Discussion and future work

Despite my reservations with shard theory above, I do think that the core shard theory team should be applauded for their ambition. It’s not common for researchers to put forth an ambitious new theory of agency in this reference class, and I think that more projects of this level of ambition are needed if we want to solve the alignment problem. The fact that Alex and Quintin (and the rest of Team Shard) have posited a novel approach to attacking the alignment problem and made substantive progress on it in several months is worth a lot, and I expect them to produce good work in the future. This is the highest order bit in my assessment of the research program.

For all of our sakes, I also sincerely hope that they manage to prove my reservations entirely misguided and manage to solve (a significant chunk of) the alignment problem.

Accordingly, I’d like to offer some possible directions of future work on shard theory:

A formalism for shard theory

Currently, shard theory lacks a precise definition of what a shard is (or at least a working model of such). Making a formalism for shard theory (even one that’s relatively toy) would probably help substantially with both communicating key ideas and also making research progress.

Engagement with existing psychology, neuroscience, and genetics literature

It’s clear that the shard theory team has engaged substantially with the machine learning literature. (See, for example, Quintin’s Alignment Papers Roundup [? · GW]s.) It’s possible that more engagement with the existing psychology, neuroscience, and genetics literature could help narrow down some of the uncertainties with shard theory. It’d also help us better understand whether shard theory is an accurate description of human value formation. (It’s possible that this has already been done. In that case, I’d encourage the shard theory team to make a post summarizing their findings in this area.)

Experimental validation of key claims

Finally, shard theory makes many claims that can be tested on small neural networks. For example, it should be possible to mechanistically identify shards in small RL agents (such as the RL agents studied in Langosco et al), and it should also be possible to empirically characterize the claims regarding path dependence and architecture independence. While I think the field of machine learning often overvalues empirical work and undervalues conceptual work, I think that empirical work still has a lot of value: as with formalisms, experiments help with both communicating research ideas and making research progress. (As with the psychology and neuroscience lit review above, it’s possible that these experiments have already been done, in which case I’d again encourage the shard theory team to post more about their findings.)

- ^

For a toy example, suppose that each shard outputs a single logit, and the agent follows the advice of each shard with probability equal to the softmax of said logits. If the logits of agentic shards increases over time while the logits of non-agentic shards doesn’t increase (because said shards are never reinforced), then over time the probability the agent follows the advice of non-agentic shards will drop toward zero.

- ^

Interestingly, this is just a problem when an RL agent fails to properly explore the environment (due to a bad prior) in general. Even AIXI can end up stuck on suboptimal policies, if it starts with a sufficiently bad Solomonoff prior and sees evidence consistent with a Turing machine that assigns massive negative reward unless it follows a particular course of action. If the actions it takes prevents it from gaining more evidence about which world it’s in—for example, if the course of action is “sit in the corner and turn off all sensors”---then the AIXI might just sit in the proverbial corner forever.

- ^

I’m drawing a lot of this intuition from the Distributionally Robust Optimization work, where rebalancing classes (and training again) is generally sufficient to remove many spurious correlations.

30 comments

Comments sorted by top scores.

comment by So8res · 2022-12-22T23:54:28.026Z · LW(p) · GW(p)

I think that distillations of research agendas such as this one are quite valuable, and hereby offer LawrenceC a $3,000 prize for writing it. (I'll follow up via email.) Thanks, LawrenceC!

Going forward, I plan to keep an eye out for distillations such as this one that seem particularly skilled or insightful to me, and offer them a prize in the $1-10k range, depending on how much I like them.

Insofar as I do this, I'm going to be completely arbitrary about it, and I'm only going to notice attempts haphazardly, so please don't do rely on the assumption that I'll grant your next piece of writing a prize. I figure that this is better than nothing, but I don't have the bandwidth at the moment to make guarantees about how many posts I'll read.

Separately, here's some quick suggestions about what sort of thing would make me like the distillation even more:

- Distill out a 1-sentence-each bullet-point version of each of the 9 hypotheses at the top

- Distill out a tweet-length version of the overall idea

- Distill out a bullet-point list of security assumptions [LW · GW]

These alone aren't necessarily what makes a distillation better-according-to-me in and of themselves, but I hypothesize that the work required to add those things is likely to make the overall distillation better. (The uniting theme is something like: forcing yourself to really squeeze out the key ideas.)

For clarity as to what I mean by a "security assumption", here are a few examples (not intended to apply in the case of shard theory, but intenderd rather to transmit the idea of a security assumption):

- "This plan depends critically on future ability to convince leading orgs to adopt a particular training method."

- "This plan depends critically on the AI being unable to confidently distinguish deployment from training."

- "This plan depends critically on the hypothesis that AI tech will remain compute-intensive right up until the end."

This is maybe too vague a notion; there's an art to figuring out what counts and what doesn't, and various people probably disagree about what should count.

Replies from: Chris_Leong, LawChan↑ comment by Chris_Leong · 2022-12-29T05:22:42.804Z · LW(p) · GW(p)

Are there any agendas you would particularly like to see distilled?

↑ comment by LawrenceC (LawChan) · 2022-12-23T17:15:28.798Z · LW(p) · GW(p)

Thanks Nate!

I didn't add a 1-sentence bullet point for each thesis because I thought the table of contents on the left was sufficient, though in retrospect I should've written it up mainly for learning value. Do you still think it's worth doing after the fact?

Ditto the tweet thread, assuming I don't plan on tweeting this.

Replies from: So8res↑ comment by So8res · 2022-12-23T17:24:46.063Z · LW(p) · GW(p)

It would still help like me to have a "short version" section at the top :-)

Replies from: LawChan↑ comment by LawrenceC (LawChan) · 2022-12-25T04:49:08.979Z · LW(p) · GW(p)

I've expanded the TL;DR at the top to include the nine theses. Thanks for the suggestion!

comment by Kaj_Sotala · 2023-01-03T20:53:42.008Z · LW(p) · GW(p)

Unlike in subagent models, the subcomponents of agents are not themselves always well modeled as (relatively) rational agents. For example, there might be shards that are inactive most of the time and only activate in a few situations.

For what it's worth, at least in my conception of subagent models, there can also be subagents that are inactive most of the time and only activate in a few situations. That's probably the case for most of person's subagents, though of course "subagent" isn't necessarily a concept that cuts reality a joints, so this depends on where exactly you'd draw the boundaries for specific subagents.

Shard theory claims that the process that maps shards to actions can be modeled as making “bids” to a planner. That is, instead of shards directly voting on actions, they attempt to influence the planner in ways that have “historically increased the probability of executing plans” favored by the shard. For example, if the juice shard bringing a memory of consuming juice to conscious attention has historically led to the planner outputting plans where the baby consumes more juice, then the juice shard will be shaped via reinforcement learning to recall memories of juice consumption at opportune times. On the other hand, if raising the presence of a juice pouch to the planner’s attention has never been tried in the past, then we shouldn’t expect the juice shard to attempt this more so than any other random action.

This is another way in which shard theory differs from subagent models—by default, shards aren’t doing their own planning or search; they merely execute strategies that are learned via reinforcement learning.

This is actually close to the model of subagents that I had in "Subagents, akrasia, and coherence in humans [? · GW]" and "Subagents, neural Turing machines, thought selection, and blindspots [? · GW]". The former post talks about subagents sending competing bids to a selection mechanism that picks the winning bid based on (among other things) reinforcement learning and the history of which subagents have made successful predictions in the past. It also distinguishes between "goal-directed" and "habitual" subagents, where "habitual" ones are mostly executing reinforced strategies rather than doing planning.

The latter post talks about learned rules which shape our conscious content, and how some of the appearance of planning and search may actually come from reinforcement learning creating rules that modify consciousness in specific ways (e.g. the activation of an "angry" subagent frequently causing harm, with reward then accruing to selection rules that block the activation of the "angry" subagent such as by creating a feeling of confusion instead, until it looks like there is a "confusion" subagent that "wants" to block the feeling of anger).

Replies from: LawChan↑ comment by LawrenceC (LawChan) · 2023-01-03T21:15:05.730Z · LW(p) · GW(p)

Thanks for the clarification!

I agree that your model of subagents in the two posts share a lot of commonalities with parts of Shard Theory, and I should've done a lit review of your subagent posts. (I based my understanding of subagent models on some of the AI Safety formalisms I've seen as well as John Wentworth's Why Subagents? [LW · GW].) My bad.

That being said, I think it's a bit weird to have "habitual subagents", since the word "agent" seems to imply some amount of goal-directedness. I would've classified your work as closer to Shard Theory than the subagent models I normally think about.

↑ comment by Kaj_Sotala · 2023-01-03T21:57:54.626Z · LW(p) · GW(p)

No worries!

That being said, I think it's a bit weird to have "habitual subagents", since the word "agent" seems to imply some amount of goal-directedness.

Yeah, I did drift towards more generic terms like "subsystems" or "parts" later in the series for this reason, and might have changed the name of the sequence if only I'd managed to think of something better. (Terms like "subagents" and "multi-agent models of mind" still gesture away from rational agent models in a way that more generic terms like "subsystems" don't.)

comment by Chris_Leong · 2022-12-29T05:27:47.908Z · LW(p) · GW(p)

I’ve been reading a lot of shared theory and finding fascinating, even though I’m not convinced that a more agentic subcomponent wouldn’t win out and make the shards obsolete. Especially if you’re claiming the more agentic shards would seize power, then surely an actual agent would as well?

I would love to hear why Team Shard believes that the shards will survive in any significant form. Off the top of my head, I’d guess their explanation might relate to how shards haven’t disappeared in humans?

On the other hand, I much more strongly endorse the notion of thinking of a reward as a chisel rather than something we’ll likely find a way of optimising.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2022-12-29T10:05:31.683Z · LW(p) · GW(p)

the agency is through the shards acting together; the integrated agent is made of the shards in the first place. when you plan, you invoke a planning system that has shards. when you evaluate, you invoke an evaluation system that has shards. when you act, you run policy that has shards. coherence into simplifications of shards with a "symbolic regression version" of the function the shards approximate wouldn't magically change the shardness of the function.

comment by Steven Byrnes (steve2152) · 2022-12-20T15:14:53.934Z · LW(p) · GW(p)

Unlike in the learning and steering model, all of the shards are shaped primarily via reinforcement learning; only the rewards, learning algorithms, and a few stimulus reactions can be hardcoded. In the learning and steering model, the steering subsystem contains hardcoded social instincts and complex drives such as disgust or awe. In contrast, in the shard theory baby example, there are no in-built “listen to authority”-shards, the baby learns a “listen to authority”-shard as a consequence of their hardcoded reward circuitry and learning algorithm.

(“Learning & steering model” guy here.) I think this is confused. “Only the rewards, learning algorithms, and a few stimulus reactions can be hardcoded” is a claim that I more-or-less endorse.

Question: Should we think of disgust as “stimulus reaction” or “complex drive”? I would split up three things here:

- “the disgust reaction” (a somewhat-involuntary set of muscle motions and associated “feelings”) (actually we should say “disgust reactions” — I heard there were two of them with different facial expressions. Source: here I think?)

- “the concept of disgust” (an abstract concept in our world-models, subtly different for different people, and certainly different in different cultures; for example those two disgust reactions I mentioned are lumped into the same concept, at least for English-speakers)

- “the set of situations / beliefs / expectations / etc. that evoke a disgust reaction” — for example, if I say “I’m gonna lick my armpit”, you might feel disgust.

I think the first bullet point is innate / hardwired, the second bullet-point is part of the learned world-model, and the third bullet point is learned within a lifetime and stored in the “Learning Subsystem” but I wouldn’t say it’s part of the world-model per se, kinda more like a trained “head” coming off the world-model. However, I think the outputs of this trained “disgust head” interact with a hardcoded system in the brainstem that also has direct access to tastes and smells, and thus it’s possible to feel disgust for direct sensory reasons that were not learned.

What about awe? Similar to what I said about disgust, probably.

What about “listen to authority”? Hard to say. I think there are probably some simple “innate reactions” that play a role in the human tendency to form status hierarchies, and maybe also dominance hierarchies, but I’m not sure what they are or how they work at the moment. Clearly an idea like “Don’t mess with Elodie or she’ll beat me up” could be learned by generic RL without any specific tailoring in the genome. So I can imagine a number of different possibilities and don’t have much opinion so far.

Replies from: LawChan↑ comment by LawrenceC (LawChan) · 2022-12-20T19:10:28.282Z · LW(p) · GW(p)

Thanks for the clarification. I've edited in a link to this comment.

comment by Steven Byrnes (steve2152) · 2022-12-20T18:13:20.803Z · LW(p) · GW(p)

However, I think there is a good reason to expect sophisticated AIs to exhibit reward hacking–like behavior: if you train your AIs with reinforcement learning, you are selecting for AIs that achieve higher reward on the training distribution. As AIs become more general and are trained on ever larger classes of tasks, the number of spurious features perfectly correlated with the reward shrink,[3] and we should expect agents that optimize imperfect correlates of the reward to be selected against in favor of strategies that directly optimize a representation of the reward. I also think that a significant fraction of this argument goes through an analogy with humans that may not necessarily apply to AIs we make.

On the other hand, future programmers will not want reward-hacking to happen, and when they’re making decisions about training approach, architecture, etc., they will presumably make those decisions in a way that avoids reward-hacking (at least if that’s straightforward and they can get feedback). When you say “selected against”, that’s arguably true within an RL run, but meanwhile the programmers are an outer loop trying to design the RL run in such a way that this “selection” does not find the global optimum. See my post from just now [LW · GW].

Replies from: LawChan↑ comment by LawrenceC (LawChan) · 2022-12-20T19:09:24.206Z · LW(p) · GW(p)

Right, that's a decent objection.

I have three responses:

- I think that the specific claim that I'm responding to doesn't depend on the AI designers choosing RL algorithms that don't train until convergence. If the specific claim was closer to "yes, RL algorithms if ran until convergence have a good chance of 'caring' about reward, but in practice we'll never run RL algorithms that way", then I think this would be a much stronger objection.

- How are the programmers evaluating the trained RL agents in order to decide how to tune their RL runs? For example, say they are doing early stopping to prevent RL from Goodharting on the given proxy reward: when are they deciding to stop? My guess is they will stop when a second proxy reward is high. So we still end up selecting in favor of a sum of first proxy reward + second proxy reward. Indeed, you can generally think of RL + explicit regularization itself as RL on a different cost function.

- I'm not convinced that people who train their AIs won't just optimize the AI on a single proxy reward that they also just evaluate with? You generally get better performance on the task you care about if you train on the task you care about! Another way of saying this is the ML truism that (absent model capacity/optimization difficulties) you generally do better if you optimize the system directly (i.e. end-to-end) than if you learn the components separately and put them together.

↑ comment by Steven Byrnes (steve2152) · 2022-12-20T19:19:51.630Z · LW(p) · GW(p)

There’s no such thing as convergence in the real world. It’s essentially infinitely complicated. There are always new things to discover.

I would ask “how is it that I don’t want to take cocaine right now”? Well, if I took cocaine, I would get addicted. And I know that. And I don’t want to get addicted. So I have been deliberately avoiding cocaine for my whole life. By the same token, maybe we can raise our baby AGIs in a wirehead-proof crib, and eventually it will be sufficiently self-aware and foresighted that when we let it out of the crib, it can deliberately avoid situations that would get it addicted to wireheading. We can call this “incomplete exploration”, but we’re taking advantage of the fact that the AGI itself can foresightedly and self-aware-ly ensure that the exploration remains incomplete.

I feel like you’re arguing that what I’m saying could potentially fail, and I’m arguing that what I’m saying could potentially succeed. In which case, maybe we can both agree that it’s a potential but not inevitable failure mode that we should absolutely keep thinking about.

Replies from: LawChan↑ comment by LawrenceC (LawChan) · 2022-12-20T19:33:42.161Z · LW(p) · GW(p)

wirehead-proof crib, and eventually it will be sufficiently self-aware and foresighted that when we let it out of the crib, it can deliberately avoid situations that would get it addicted to wireheading.

I feel like I'm saying something relatively uncontroversial here, which is that if you select agents on the basis of doing well wrt X sufficiently hard enough, you should end up with agents that care about things like X. E.g. if you select agents on the basis of human approval, you should expect them to maximize human approval in situations even where human approval diverges from what the humans "would want if fully informed".

This doesn't mean that they end up intrinsically caring about exactly X, but it does promote the "they might reward hack X" hypothesis into consideration.

I feel like you’re arguing that what I’m saying could potentially fail, and I’m arguing that what I’m saying could potentially succeed. In which case, maybe we can both agree that it’s a potential but not inevitable failure mode that we should absolutely keep thinking about.

Yep, my current stance is that it's quite likely we'll see power grab into reward-hacking behavior by default, but it's nowhere near inevitable (but we should probably think about this scenario anyways).

Replies from: TurnTrout↑ comment by TurnTrout · 2022-12-21T21:57:23.804Z · LW(p) · GW(p)

I feel like I'm saying something relatively uncontroversial here, which is that if you select agents on the basis of doing well wrt X sufficiently hard enough, you should end up with agents that care about things like X. E.g. if you select agents on the basis of human approval, you should expect them to maximize human approval in situations even where human approval diverges from what the humans "would want if fully informed".

I actually want to controversy that. I'm now going to write quickly about selection arguments in alignment more generally (this is not all addressed directly to you).

- I don't know what it means to "select" "sufficiently hard enough" here; I think when we actually ground out what that means, we have actual mechanistic arguments to consider.

- We aren't just "selecting for" human approval. We're selecting for conformity-under-updating with all functions which give the same historical feedback (i.e. when the reward button was pressed when the AI was telling jokes, and when it wasn't).

- i.e. identifiability "issues" undermine selection reasoning for why agents do one particular thing.

- I think that "select for human approval" is a very mentally available selection criterion to consider, such that we promote the hypothesis to attention without considering a range of alternative selection pressures.

- What about selecting for conformance to "human approval until next year, but extremely high approval on OOD cheese-making situations"?

- You could say "that's more complex", which is reasonable, but now we're reasoning about mechanisms, and should just keep doing so IMO (see [point 3] below).

- Insofar as selection is worth mentioning, there are a huge range of selection pressures of exactly the same nominal strength (e.g. "human approval until next year, but extremely high approval on OOD cheese-making situations" vs "human approval").

- Some people want to apply selection arguments because they believe that selection arguments bypass the need to understand mechanistic details to draw strong conclusions. I think this is mistaken, and that selection arguments often prove too much, and to understand why, you have to know something about the mechanisms.

- An inexact but relevant analogy. Consider a gunsmith who doesn't know biology and hasn't seen many animals, but does know the basics of natural selection. He reasons "Wolves with sniper rifles would have incredible fitness advantages relative to wolves without sniper rifles. Therefore, wolves develop sniper rifles given enough evolutionary time."

- It is simply true that there is a hypothetical fitness gradient in this direction (it would in fact improve fitness), but false that there is a path in mutation-space which implements this phenotypic change.

- To predict, in advance and without knowing about wolves already, you have to know things about biology. You may have to understand the available mutations at any given genotype, and perhaps some details about chromosomal crossover, and how guns work, and the wolf's evolutionary environment, and physics, and perhaps many other things.

- From my perspective, a bunch of selection-based reasoning draws enormous conclusions (e.g. high chance the policy cares about reward OOD) given vague / weak technical preconditions (e.g. policies are selected for reward) without attending to strength or mechanism or size of the trained network or training timescales or net direction of selection. All the while being perceived (by some, not necessarily you) to avoid/reduce the need to understand SGD dynamics to draw good conclusions about alignment properties.

- The point isn't that these selection arguments can't possibly be shored up. It's that I don't think people do shore them up, and I don't see how to shore them up, and in order to shore them up, we have to start talking about details and understanding the mechanisms anyways.

- The point isn't that selection reasoning can never be useful, but I think that we have to be damn careful with it. I'm not yet settled on how I want to positively use it, going forwards.

- An inexact but relevant analogy. Consider a gunsmith who doesn't know biology and hasn't seen many animals, but does know the basics of natural selection. He reasons "Wolves with sniper rifles would have incredible fitness advantages relative to wolves without sniper rifles. Therefore, wolves develop sniper rifles given enough evolutionary time."

(I wrote a lot of this and have to go now, and this isn't necessarily a complete list of my gripes, but here's some of my present thinking.)

This doesn't mean that they end up intrinsically caring about exactly X, but it does promote the "they might reward hack X" hypothesis into consideration.

I agree this should at least be considered. But not to the degree it's been historically considered.

Replies from: TurnTrout, rhaps0dy↑ comment by TurnTrout · 2022-12-21T22:08:04.655Z · LW(p) · GW(p)

You can also get around the "IDK the mechanism" issue if you observe variance over the relevant trait in the population you're selecting over. Like, if we/SGD could veridically select over "propensity to optimize approval OOD", then you don't have to know the mechanism. The variance shows you there are some mechanisms, such that variation in their settings leads to variation in the trait (e.g. approval OOD).

But the designers can't tell that. Can SGD tell that? (This question feels a bit confused, so please extra scan it before answering it)

From their perspective, they cannot select on approval OOD, except insofar as selecting for approval on-training rules out some settings which don't pursue approval OOD. (EG If I want someone to watch my dog, i can't scan the dogsitter and divine what they will do alone in my house. But if the dogsitter steals from me in front of my face during the interview, I can select against that. Combined with "people who steal from you while you watch, will also steal when you don't watch", I can get a tiny bit of selection against thieving dogsitters, even if I can't observe them once I've left.)

Replies from: rhaps0dy↑ comment by Adrià Garriga-alonso (rhaps0dy) · 2022-12-23T23:29:39.322Z · LW(p) · GW(p)

But the designers can't tell that. Can SGD tell that?

No, SGD can't tell the degree to which some agent generalizes a trait outside the training distribution.

But empirically, it seems that RL agents reinforced to maximize some reward function (e.g. the Atari game score) on data points; do fairly well at maximizing that reward function OOD (such as when playing the game again from a different starting state). ML systems in general seem to be able to generalize to human-labeled categories in situations that aren't in the training data (e.g. image classifiers working, LMs able to do poetry).

It is therefore very plausible that RL systems would in fact continue to maximize the reward after training, even if what they're ultimately maximizing is just something highly correlated with it.

Replies from: TurnTrout↑ comment by TurnTrout · 2022-12-26T16:31:13.817Z · LW(p) · GW(p)

It is therefore very plausible that RL systems would in fact continue to maximize the reward after training, even if what they're ultimately maximizing is just something highly correlated with it.

What do you mean by this? They would be instrumentally aligned with reward maximization, since reward is necessary for their terminal values?

Can you give an example of such a motivational structure, so I know we're considering the same thing?

ML systems in general seem to be able to generalize to human-labeled categories in situations that aren't in the training data (e.g. image classifiers working, LMs able to do poetry).

Agreed. I also think this is different from a very specific kind of generalization towards reward maximization.

I again think it is plausible (2-5%-ish) that agents end up primarily making decisions on the basis of a tight reward-correlate (e.g. the register value, or some abstract representation of their historical reward function), and about 60% that agents end up at least somewhat making decisions on the basis of reward in a terminal sense (e.g. all else equal, the agent makes decisions which lead to high reward values; I think people are reward-oriented in this sense). Overall I feel pretty confused about what's going on with people, and I can imagine changing my mind here relatively easily.

Replies from: rhaps0dy↑ comment by Adrià Garriga-alonso (rhaps0dy) · 2022-12-30T01:48:04.238Z · LW(p) · GW(p)

What do you mean by this? They would be instrumentally aligned with reward maximization, since reward is necessary for their terminal values?

No, I mean that they'll maximize a reward function that is ≈equal to the reward function on the training data (thus, highly correlated), and a plausible extrapolation of it outside of the training data. Take the coinrun example, the actual reward is "go to the coin", and in the training data this coincides with "go to the right". In test data from a similar distribution this coincides too.

Of course, this correlation breaks when the agent optimizes hard enough. But the point is that the agents you get are only those that optimize a plausible extrapolation of the reward signal in training, which will include agents that maximize the reward in most situations way more often than if you select a random agent.

Is your point in:

I also think this is different from a very specific kind of generalization towards reward maximization

That you think agents won't be maximizing reward at all?

I would think that even if they don't ultimately maximize reward in all situations, the situations encountered in test will be similar enough to training that agents will still kind of maximize reward there. (And agents definitely behave as reward maximizers in the specific seen training points, because that's what SGD is selecting)

I'm not sure I understand what we disagree on at the moment.

Replies from: TurnTrout↑ comment by TurnTrout · 2023-01-03T18:13:17.049Z · LW(p) · GW(p)

I'm going to just reply with my gut responses here, hoping this clarifies how I'm considering the issues. Not meaning to imply we agree or disagree.

which will include agents that maximize the reward in most situations way more often than if you select a random agent.

Probably, yeah. Consider a network which received lots of policy gradients from the cognitive-update-intensity-signals ("rewards"[1]) generated by the "go to coin?" subroutine. I agree that this network will tend to, in the deployment distribution, tend to take actions which average higher sum-cognitive-update-intensity-signal ("reward over time"), than networks which are randomly initialized, or even which have randomly sampled shard compositions/values (in some reasonable sense).

But this doesn't seem like it constrains my predictions too strongly. It seems like a relatively weak, correlational statement, where I'd be better off reasoning mechanistically about the likely "proxy-for-reward" values which get learned.

And agents definitely behave as reward maximizers in the specific seen training points, because that's what SGD is selecting

I understand you to argue: "SGD will select policy networks for maximizing reward during training. Therefore, we should expect policy networks to behaviorally maximize reward on the training distribution over episodes." On this understanding of what you're arguing:

No, agents often do not behave as reward maximizers in the specific seen training points. RL trains agents which don't maximize training reward... all the time!

Agents:

- die in video games (see DQN),[2]

- fail to perform the most expert tricks and shortcuts (is AlphaZero playing perfect chess?),

- (presumably) fail to exploit reward hacking opportunities which are hard to explore into.

For the last point, imagine that AlphaStar could perform a sequence of 300 precise actions, and then get +1 million policy-gradient-intensity ("reward") due to a glitch. On the reasoning I understand you to advance, SGD is "selecting" for networks which receive high policy-gradient-intensity, but... it's never going to happen in realistic amounts of time. Even in training.

This is because SGD is updating the agent on the observed empirical data distribution, as collected by the policy at previous timesteps. SGD isn't updating the agent on things which didn't happen. And so SGD itself isn't selecting for reward maximizers. Maybe if you run the outer training loop long enough, such that the agent probabilistically explores into this glitch (a long time), maybe then this reward-maximizing policy gets "selected for."[3]

So there's this broader question I have of "what, exactly, is being predicted by the 'agents are selected to maximize reward during training'[4] hypothesis?". It seems to me like we need to modify this hypothesis in various ways in order to handle the objections I've raised. And the ways we're modifying the hypothesis (e.g. "well, it depends on the empirical data distribution, and expressivity constraints implicit in the inductive biases, and the details of exploration strategies, and the skill ceiling of the task") seem to lead us to us no longer predicting that the policy networks will actually maximize reward in training episodes.

(Also, I note that the context of this thread [LW(p) · GW(p)] is that I generally don't buy "SGD selects for X" arguments without mechanistic reasoning to back them up.)

- ^

I'm substituting mechanistic descriptions of "reward" because that helps me think more clearly about what's happening during training, without the suggestive-to-me connotations of "reward."

- ^

You can argue "DQN sucked", but also DQN was a substantial advance at the time. Why should I expect that AGI will be trained on an architecture which actually gets maximal training reward, as opposed to squeaking by with a decent amount and still ending up very smart?

- ^

But maybe not, because the network can mode collapse onto existing reinforced Starcraft strategies fast enough that P(explore into glitch) decreases exponentially with time, such that the final probability of exploring into the glitch is not in fact 1. (Haven't checked the math on this, but feels plausible.)

- ^

Paul Christiano recently made [LW(p) · GW(p)] a similar claim:

you should expect an RL agent to maximize reward conditioned on the episode appearing in training, because that's what SGD would select for.

↑ comment by Adrià Garriga-alonso (rhaps0dy) · 2022-12-23T23:18:44.386Z · LW(p) · GW(p)

Strongly agree with this in particular:

Some people want to apply selection arguments because they believe that selection arguments bypass the need to understand mechanistic details to draw strong conclusions. I think this is mistaken, and that selection arguments often prove too much, and to understand why, you have to know something about the mechanisms.

(emphasis mine). I think it's an application of the no free lunch razor [AF · GW]

It is clear that selecting for X selects for agents which historically did X in the course of the selection. But how this generalizes outside of the selecting strongly depends on the selection process and architecture. It could be a capabilities generalization, reward generalization for the written-down reward, generalization for some other reward function, or something else entirely.

We cannot predict how the agent will generalize without considering the details of its construction.

↑ comment by TurnTrout · 2022-12-21T21:39:37.253Z · LW(p) · GW(p)

If the specific claim was closer to "yes, RL algorithms if ran until convergence have a good chance of 'caring' about reward, but in practice we'll never run RL algorithms that way", then I think this would be a much stronger objection.

This is part of the reasoning (and I endorse Steve's sibling comment, while disagreeing with his original one). I guess I was baking "convergence doesn't happen in practice" into my reasoning, that there is no force which compels agents to keep accepting policy gradients from the same policy-gradient-intensity-producing function (aka the "reward" function).

From Reward is not the optimization target [LW · GW]:

But obviously [the coherence theorems'] conditions aren’t true in the real world. Your learning algorithm doesn’t force you to try drugs. Any AI which e.g. tried every action at least once would quickly kill itself, and so real-world general RL agents won’t explore like that because that would be stupid. So the RL agent’s algorithm won’t make it e.g. explore wireheading either, and so the convergence theorems don’t apply even a little—even in spirit.

Perhaps my claim was even stronger, that "we can't really run real-world AGI-training RL algorithms 'until convergence', in the sense of 'surmounting exploration issues'." Not just that we won't, but that it often doesn't make sense to consider that "limit."

Also, to draw conclusions about e.g. running RL for infinite data and infinite time so as to achieve "convergence"... Limits have to be well-defined, with arguments for why the limits are reasonable abstraction, with care taken with the order in which we take limits.

- What about the part where the sun dies in finite time? To avoid that, are we assuming a fixed data distribution (e.g. over observation-action-observation-reward tuples in a maze-solving environment, with more tuples drawn from embodied navigation)

- What is our sampling distribution of data, since "infinite data" admits many kinds of relative proportions?

- Is the agent allowed to stop or modify the learning process?

- (Even finite-time learning theory results don't apply if the optimized network can reach into the learning process and set its learning rate to zero, thereby breaking the assumptions of the theorems.)

- Limit to infinite data and then limit to infinite time, or vice versa, or both at once?

For example, say they are doing early stopping to prevent RL from Goodharting on the given proxy reward: when are they deciding to stop? My guess is they will stop when a second proxy reward is high. So we still end up selecting in favor of a sum of first proxy reward + second proxy reward. Indeed, you can generally think of RL + explicit regularization itself as RL on a different cost function.

I disagree. Early stopping on a separate stopping criterion which we don't run gradients through, is not at all similar [EDIT: seems in many ways extremely dissimilar] to reinforcement learning on a joint cost function additively incorporating the stopping criterion with the nominal reward. Where is the reinforcement, where are the gradients, in the first case? They don't flow back through performance on the stopping criterion. These are just mechanistically different processes.

Separately consider how many bits of selection this is for the stopping criterion being high. Suppose you run 12 random seeds on a make-people-smile task. (Er, I'm stuck, can you propose the stopping criterion reward you had in mind?) Anyways, my intuition here was just that it seems like you aren't getting more than bits of selection on any given quantity from taking statistics of the seeds. Seems like way too small to conclude "this is meaningfully selecting for sum of first+second reward."

Replies from: Aidan O'Gara↑ comment by aog (Aidan O'Gara) · 2022-12-22T02:17:08.976Z · LW(p) · GW(p)

“Early stopping on a separate stopping criterion which we don't run gradients through, is not at all similar to reinforcement learning on a joint cost function additively incorporating the stopping criterion with the nominal reward.”

Sounds like this could be an interesting empirical experiment. Similar to Scaling Laws for Reward Model Overoptimization, you could start with a gold reward model that represents human preference. Then you could try to figure out the best way to train an agent to maximize gold reward using only a limited number of sampled data points from that gold distribution. For example, you could train two reward models using different samples from the same distribution and use one for training, the other for early stopping. (This is essentially the train / val split used in typical ML settings with data constraints.) You could measure the best ways to maximize gold reward on a limited data budget. Alternatively your early stopping RM could be trained on a gold RM containing distribution shift, data augmentations, adversarial perturbations, or a challenge set of particularly challenging cases.

Would you be interested to see an experiment like this? Ideally it could make progress towards an empirical study of how reward hacking happens and how to prevent it. Do you see design flaws that would prevent us from learning much? What changes would you make to the setup?

comment by Algon · 2022-12-19T23:35:36.725Z · LW(p) · GW(p)

That being said, I speculate that part of the disagreement I have with shard theorists is that I don’t think shard theory maps well onto my own internal experiences.

Could you expand on this? My own internal experience led me to believe in something like shard theory, though with less of a focus on values and more on cognitive patterns. Much of shard theory strikes me as so obvious that it might even be clouding my judgement as to how plausible it really is. Hearing an account of how your personal experience differs would be useful, I think.

As an example of what I'm looking for, consider the following fictional account.

As an example of what I mean, consider someone who reads about Cantor's proof that , followed by an exposition of its uses in elementary computability theory. As the person reads the proof, they learn a series of associations in their brain, going like "natural numbers aren't equivalent to the reals --> can list all reals with integer index", "take integer indexed reals and construct a new real", "construct a new real from integer indexed list by making its first element not equal to the element before the decimal place in the first real, making its second elment" and so on. These associations are not entirely one way, and some of the associations may themselves be associated with a sequence of associations, or vice versa.

Regardless, they form corrseopond to something like a collection of contextually activated algorithms, composed together to make another contextually activated algorithm. These contexts get modified, activating on more abstract inputs as the person reads through the list of applications of the technique.

And depending on what, exactly, they think of whilst they're reading the list of techniques --like whether they're going through the sequence of associations they had for Cantor's proof and generating a corresponding sequence of associations for the listed applications-- they might modify their contextually activated pieces of cognition into something somewhat more general.

Replies from: LawChan↑ comment by LawrenceC (LawChan) · 2022-12-20T19:48:57.209Z · LW(p) · GW(p)

Regardless, they form corrseopond to something like a collection of contextually activated algorithms, composed together to make another contextually activated algorithm. These contexts get modified, activating on more abstract inputs as the person reads through the list of applications of the technique.

I agree that the part of shard theory that claims that agents can be thought of as consisting of policy fragments feels very obvious to me. I don't think this is informative about shard theory vs other models of learned agents -- it's clear that you can almost always chunk up an agent's policy into lots of policy fragments (and indeed, I can chunk up the motivation of many things I do into various learned heuristics). Even the rational agent model I presented above lets you chunk up the computation of the learned policy into policy fragments!

Shard theory does argue that the correct unit of analysis is a shard i.e. "a contextually activated computation that influences decisions", but it also argues also that various shards should be modeled as caring about different things (that is, they are shards of value [AF · GW] and not shards of cognition) and uses examples of shards like "juice-shard", "ice-cream shard" and "candy-shard". It's the latter claim that I think doesn't match my internal experience.

Replies from: Algon↑ comment by Algon · 2022-12-20T20:11:04.835Z · LW(p) · GW(p)

Shard theory does argue that the correct unit of analysis is a shard i.e. "a contextually activated computation that influences decisions", but it also argues also that various shards should be modeled as caring about different things (that is, they are shards of value [LW · GW] and not shards of cognition) and uses examples of shards like "juice-shard", "ice-cream shard" and "candy-shard". It's the latter claim that I think doesn't match my internal experience

My apologies, I didn't convey what I was wanted clearly. Could you give a detailed example of your internal experiences as they conflict with shard theory, and perhaps what you think generates such experiences, so that we as readers can better grasp your crux with the theory. I should have tried to give such an example myself, instead of rambling on about shards of cognition.

comment by mic (michael-chen) · 2022-12-21T01:28:45.805Z · LW(p) · GW(p)

For example, it should be possible to mechanistically identify shards in small RL agents (such as the RL agents studied in Langosco et al)

Could you elaborate on how we could do this? I'm unsure if the state of interpretability research is good enough for this yet.

Replies from: LawChan↑ comment by LawrenceC (LawChan) · 2022-12-21T02:01:08.782Z · LW(p) · GW(p)

I don't have a particular idea in mind, but current SOTA on interp is identifying how ~medium sized LMs implement certain behaviors, e.g. IOI (or fully understanding smaller networks on toy tasks like modular addition or parenthesis balance checking). The RL agents used in Langosco et al are much smaller than said LMs, so it should be possible to identify the circuits of the network that implement particular behaviors as well. There's also the advantage that conv nets on vision domains are often significantly easier to interp than LMs, e.g. because feature visualization works on them.

If I had to spitball a random idea in this space:

- Reproduce one of the coinrun run-toward-the-right agents, figure out the circuit or lottery ticket that implements the "run toward the right" behavior using techniques like path patching or causal scrubbing, then look at intermediate checkpoints to see how it develops.

- Reproduce one of the coinrun run-toward-the-right agents, then retrain it so it goes after the coin. Interp various checkpoints to see how this new behavior develops over time.

- Reproduce one of the coinrun run-toward-the-right agents, and do mechanistic interp to figure out circuits for various more fine-grained behaviors, e.g. avoiding pits or jumping over ledges.

IIRC some other PhD students at CHAI were interping reward models, though I'm not sure what came of that work though.