Reward is not the optimization target

post by TurnTrout · 2022-07-25T00:03:18.307Z · LW · GW · 123 comments

Contents

- Reward probably won’t be a deep RL agent’s primary optimization target
- The siren-like suggestiveness of the word “reward”
- When is reward the optimization target of the agent?
- Anticipated questions
- Dropping the old hypothesis
- Implications
- Appendix: The field of RL thinks reward=optimization target

This insight was made possible by many conversations with Quintin Pope, where he challenged my implicit assumptions about alignment. I’m not sure who came up with this particular idea.

In this essay, I call an agent a “reward optimizer” if it not only gets lots of reward, but also reliably makes choices like “reward but no task completion” (e.g. receiving reward without eating pizza) over “task completion but no reward” (e.g. eating pizza without receiving reward). Under this definition, an agent can be a reward optimizer even if it doesn't contain an explicit representation of reward, or implement a search process for reward.

ETA 9/18/23: This post addresses the model-free policy gradient setting, including algorithms like PPO and REINFORCE.

Reinforcement learning is learning what to do—how to map situations to actions so as to maximize a numerical reward signal. — Reinforcement learning: An introduction

Many people[1] seem to expect that reward will be the optimization target of really smart learned policies—that these policies will be reward optimizers. I strongly disagree. As I argue in this essay, reward is not, in general, that-which-is-optimized by RL agents.[2]

Separately, as far as I can tell, most[3] practitioners usually view reward as encoding the relative utilities of states and actions (e.g. it’s this good to have all the trash put away), as opposed to imposing a reinforcement schedule which builds certain computational edifices inside the model (e.g. reward for picking up trash → reinforce trash-recognition and trash-seeking and trash-putting-away subroutines). I think the former view is usually inappropriate, because in many setups, reward chisels cognitive grooves into an agent.

Therefore, reward is not the optimization target in two senses:

- Deep reinforcement learning agents will not come to intrinsically and primarily value their reward signal; reward is not the trained agent’s optimization target.

- Utility functions express the relative goodness of outcomes. Reward is not best understood as being a kind of utility function. Reward has the mechanistic effect of chiseling cognition into the agent's network. Therefore, properly understood, reward does not express relative goodness and is therefore not an optimization target at all.

Reward probably won’t be a deep RL agent’s primary optimization target

After work, you grab pizza with your friends. You eat a bite. The taste releases reward in your brain, which triggers credit assignment. Credit assignment identifies which thoughts and decisions were responsible for the release of that reward, and makes those decisions more likely to happen in similar situations in the future. Perhaps you had thoughts like

- “It’ll be fun to hang out with my friends” and

- “The pizza shop is nearby” and

- “Since I just ordered food at a cash register, execute motor-subroutine-#51241 to take out my wallet” and

- “If the pizza is in front of me and it’s mine and I’m hungry, raise the slice to my mouth” and

- “If the slice is near my mouth and I’m not already chewing, take a bite.”

Many of these thoughts will be judged responsible by credit assignment, and thereby become more likely to trigger in the future. This is what reinforcement learning is all about—the reward is the reinforcer of those things which came before it and the creator of new lines of cognition entirely (e.g. anglicized as "I shouldn't buy pizza when I'm mostly full"). The reward chisels cognition which increases the probability of the reward accruing next time.

Importantly, reward does not automatically spawn thoughts about reward, and reinforce those reward-focused thoughts! Just because common English endows “reward” with suggestive pleasurable connotations, that does not mean that an RL agent will terminally value reward!
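To make this concrete for the model-free policy gradient setting, here is a minimal sketch of a REINFORCE-style update on a toy bandit. It is an illustration under assumptions, not a description of any particular system: the function names (`softmax`, `reinforce_step`, `train`), the learning rate, and the two-action setup are all hypothetical. The thing to notice is where the reward shows up. It is a scalar that scales the update to whichever action was just taken; the policy never receives it as an input.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_step(logits, action, reward, lr=0.1):
    """One REINFORCE update for a softmax policy over discrete actions.

    The reward only scales the gradient of log pi(action); the policy
    itself never observes the reward as an input.
    """
    probs = softmax(logits)
    new_logits = list(logits)
    for a in range(len(logits)):
        # d/d logit_a of log pi(action) = 1[a == action] - pi(a)
        grad_log_prob = (1.0 if a == action else 0.0) - probs[a]
        new_logits[a] += lr * reward * grad_log_prob
    return new_logits

def train(rewards, steps=2000, seed=0):
    """Sample actions from the current policy and apply REINFORCE updates."""
    rng = random.Random(seed)
    logits = [0.0] * len(rewards)
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        logits = reinforce_step(logits, action, rewards[action])
    return softmax(logits)
```

In this sketch, an action followed by reward becomes more probable, and an action followed by zero reward leaves the policy unchanged. Nothing in the update creates a representation of "reward" inside the policy.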

What kinds of people (or non-tabular agents more generally) will become reward optimizers, such that the agent ends up terminally caring about reward (and little else)? Reconsider the pizza situation, but instead suppose you were thinking thoughts like “this pizza is going to be so rewarding” and “in this situation, eating pizza sure will activate my reward circuitry.”

You eat the pizza, triggering reward, triggering credit assignment, which correctly locates these reward-focused thoughts as contributing to the release of reward. Therefore, in the future, you will more often take actions because you think they will produce reward, and so you will become more of the kind of person who intrinsically cares about reward. This is a path[4] to reward-optimization and wireheading.

While it's possible to have activations on "pizza consumption predicted to be rewarding" and "execute motor-subroutine-#51241" and then have credit assignment hook these up into a new motivational circuit, this is only one possible direction of value formation in the agent. Seemingly, the most direct way for an agent to become more of a reward optimizer is to already make decisions motivated by reward, and then have credit assignment further generalize that decision-making.

The siren-like suggestiveness of the word “reward”

Let’s strip away the suggestive word “reward”, and replace it by its substance: cognition-updater.

Suppose a human trains an RL agent by pressing the cognition-updater button when the agent puts trash in a trash can. While putting trash away, the AI’s policy network is probably “thinking about”[5] the actual world it’s interacting with, and so the cognition-updater reinforces those heuristics which lead to the trash getting put away (e.g. “if trash-classifier activates near center-of-visual-field, then grab trash using motor-subroutine-#642”).

Then suppose this AI models the true fact that the button-pressing produces the cognition-updater. Suppose this AI, which has historically had its trash-related thoughts reinforced, considers the plan of pressing this button. “If I press the button, that triggers credit assignment, which will reinforce my decision to press the button, such that in the future I will press the button even more.”

Why, exactly, would the AI seize[6] the button? To reinforce itself into a certain corner of its policy space? The AI has not had cognition-updater-thoughts reinforced in the past, and so its current decision will not be made in order to acquire the cognition-updater!

RL is not, in general, about training cognition-updater optimizers.

When is reward the optimization target of the agent?

If reward is guaranteed to become your optimization target, then your learning algorithm can force you to become a drug addict. Let me explain.

Convergence theorems provide conditions under which a reinforcement learning algorithm is guaranteed to converge to an optimal policy for a reward function. For example, value iteration maintains a table of value estimates for each state s, and iteratively propagates information about that value to the neighbors of s. If a far-away state f has huge reward, then that reward ripples back through the environmental dynamics via this “backup” operation. Nearby parents of f gain value, and then after lots of backups, far-away ancestor-states gain value due to f’s high reward.

Eventually, the “value ripples” settle down. The agent picks an (optimal) policy by acting to maximize the value-estimates for its post-action states.

Suppose it would be extremely rewarding to do drugs, but those drugs are on the other side of the world. Value iteration backs up that high value to your present space-time location, such that your policy necessarily gets at least that much reward. There’s no escaping it: After enough backup steps, you’re traveling across the world to do cocaine.
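The "value ripple" can be sketched with tabular value iteration on a small deterministic chain. This is a toy under stated assumptions: the six-state chain, the reward numbers, the discount of 0.9, and the names `value_iteration` and `greedy_action` are all made up for illustration. State 0 pays a small reward for staying home, and the far-away state 5 pays a huge one.

```python
def value_iteration(n_states, rewards, gamma=0.9, sweeps=100):
    """Tabular value iteration on a deterministic chain.

    From state s the agent may stay put or step right to s + 1.
    rewards[t] is received on entering state t (staying counts as entering).
    """
    values = [0.0] * n_states
    for _ in range(sweeps):
        new_values = []
        for s in range(n_states):
            successors = {s, min(s + 1, n_states - 1)}
            # Bellman backup: best immediate reward plus discounted value.
            new_values.append(
                max(rewards[t] + gamma * values[t] for t in successors)
            )
        values = new_values
    return values

def greedy_action(s, values, rewards, gamma=0.9):
    """Pick the action whose post-action state has the highest backed-up value."""
    right = min(s + 1, len(values) - 1)
    q_stay = rewards[s] + gamma * values[s]
    q_right = rewards[right] + gamma * values[right]
    return "right" if q_right > q_stay else "stay"

rewards = [1, 0, 0, 0, 0, 100]  # small reward at home, huge reward far away
early = value_iteration(6, rewards, sweeps=1)
converged = value_iteration(6, rewards)
print(greedy_action(0, early, rewards))      # stay
print(greedy_action(0, converged, rewards))  # right
```

After a single sweep the far-away reward has not yet propagated, so the greedy choice at state 0 is to stay. After enough backups, the same state heads toward the high-reward state. That guarantee depends on the table covering every state and on every backup being applied.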

But obviously these conditions aren’t true in the real world. Your learning algorithm doesn’t force you to try drugs. Any AI which e.g. tried every action at least once would quickly kill itself, and so real-world general RL agents won’t explore like that because that would be stupid. So the RL agent’s algorithm won’t make it e.g. explore wireheading either, and so the convergence theorems don’t apply even a little—even in spirit.

Anticipated questions

- Why won’t early-stage agents think thoughts like “If putting trash away will lead to reward, then execute motor-subroutine-#642”, and then this gets reinforced into reward-focused cognition early on?

- Suppose the agent puts away trash in a blue room. Why won’t early-stage agents think thoughts like “If putting trash away will lead to the wall being blue, then execute motor-subroutine-#642”, and then this gets reinforced into blue-wall-focused cognition early on? Why consider either scenario to begin with?

- But aren’t we implicitly selecting for agents with high cumulative reward, when we train those agents?

- Yeah. But on its own, this argument can’t possibly imply that selected agents will probably be reward optimizers. The argument would prove too much. Evolution selected for inclusive genetic fitness, and it did not get IGF optimizers [LW · GW].

- "We're selecting for agents on reward → we get an agent which optimizes reward" is locally invalid. "We select for agents on X → we get an agent which optimizes X" is not true for the case of evolution, and so is not true in general.

- Therefore, the argument isn't necessarily true in the AI reward-selection case. Even if RL did happen to train reward optimizers and this post were wrong, the selection argument is too weak on its own to establish that conclusion.

- Here’s the more concrete response: Selection isn’t just for agents which get lots of reward.

- For simplicity, consider the case where on the training distribution, the agent gets reward if and only if it reaches a goal state. Then any selection for reward is also selection for reaching the goal. And if the goal is the only red object, then selection for reward is also selection for reaching red objects.

- In general, selection for reward produces equally strong selection for reward’s necessary and sufficient conditions. In general, it seems like there should be a lot of those. Therefore, since selection is not only for reward but for anything which goes along with reward (e.g. reaching the goal), selection won’t advantage reward optimizers over agents which reach goals quickly / pick up lots of trash / [do the objective].

- Another reason to not expect the selection argument to work is that it’s convergently instrumental for most inner agent values to not become wireheaders, for them to not try hitting the reward button.

- I think that before the agent can hit the particular attractor of reward-optimization, it will hit an attractor in which it optimizes for some aspect of a historical correlate of reward.

- We train agents which intelligently optimize for e.g. putting trash away, and this reinforces the trash-putting-away computations, which activate in a broad range of situations so as to steer agents into a future where trash has been put away. An intelligent agent will model the true fact that, if the agent reinforces itself into caring about cognition-updating, then it will no longer navigate to futures where trash is put away. Therefore, it decides to not hit the reward button.

- This reasoning follows for most inner goals by instrumental convergence.

- On my current best model, this is why people usually don’t wirehead. They learn their own values via deep RL, like caring about dogs, and these actual values are opposed to the person they would become if they wirehead.

- Don’t some people terminally care about reward?

- I think so! I think that generally intelligent RL agents will have secondary, relatively weaker values around reward, but that reward will not be a primary motivator. Under my current (weakly held) model, an AI will only start chiseling computations about reward after it has chiseled other kinds of computations (e.g. putting away trash). More on this in later essays.

- But what if the AI bops the reward button early in training, while exploring? Then credit assignment would make the AI more likely to hit the button again.

- Then keep the button away from the AI until it can model the effects of hitting the cognition-updater button.[7]

- For the reasons given in the “siren” section, a sufficiently reflective AI probably won’t seek the reward button on its own.

- AIXI—

- will always kill you and then wirehead forever, unless you gave it something like a constant reward function.

- And, IMO, this fact is not practically relevant to alignment. AIXI is explicitly a reward-maximizer. As far as I know, AIXI(-tl) is not the limiting form of any kind of real-world intelligence trained via reinforcement learning.

- Does the choice of RL algorithm matter?

- For point 1 (reward is not the trained agent's optimization target), it might matter.

- I started off analyzing model-free actor-based approaches, but have also considered a few model-based setups. I think the key lessons apply to the general case, but I think the setup will substantially affect which values tend to be grown.

- If the agent's curriculum is broad, then reward-based cognition may get reinforced from a confluence of tasks (solve mazes, write sonnets), while each task-specific cognitive structure is only narrowly contextually reinforced. That said, this is also selecting equally hard for agents which do the rewarded activities, and reward-motivation is only one possible value which produces those decisions.

- Pretraining a language model and then slotting that into an RL setup also changes the initial computations in a way which I have not yet tried to analyze.

- It’s possible there’s some kind of RL algorithm which does train agents which limit to reward optimization (and, of course, thereby “solves” inner alignment in its literal form of “find a policy which optimizes the outer objective signal”).

- For point 2 (reward provides local updates to the agent's cognition via credit assignment; reward is not best understood as specifying our preferences), the choice of RL algorithm should not matter, as long as it uses reward to compute local updates.

- A similar lesson applies to the updates provided by loss signals. A loss signal provides updates which deform the agent's cognition into a new shape.

- TurnTrout, you've been talking about an AI's learning process using English, but ML gradients may not neatly be expressible in our concepts. How do we know that it's appropriate to speculate in English?

- I am not certain that my model is legit, but it sure seems more legit than (my perception of) how people usually think about RL (i.e. in terms of reward maximization, and reward-as-optimization-target instead of as feedback signal which builds cognitive structures).

- I only have access to my own concepts and words, so I am provisionally reasoning ahead anyways, while keeping in mind the potential treacheries of anglicizing imaginary gradient updates (e.g. "be more likely to eat pizza in similar situations").
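The selection point from the answers above (reward is given if and only if the goal is reached, and the goal is the only red object) can be illustrated with a deliberately trivial sketch. Everything here is hypothetical: the three hand-written policies, the dictionary-based environments, and the cell numbers are assumptions chosen only to show that training reward alone cannot tell these policies apart.

```python
def run_episode(policy, env):
    """Return the reward earned when `policy` picks a cell to walk to."""
    target = policy(env)
    return 1.0 if target == env["reward_cell"] else 0.0

def goal_seeker(env):
    return env["goal_cell"]

def red_seeker(env):
    return env["red_cell"]

def reward_seeker(env):
    return env["reward_cell"]

def mean_reward(policy, envs):
    return sum(run_episode(policy, e) for e in envs) / len(envs)

# Training distribution: reward is given exactly at the goal,
# and the goal is the only red object.
train_envs = [{"goal_cell": g, "red_cell": g, "reward_cell": g} for g in range(5)]

# Held-out environment in which the three correlates come apart.
test_env = {"goal_cell": 1, "red_cell": 2, "reward_cell": 3}

for policy in (goal_seeker, red_seeker, reward_seeker):
    print(policy.__name__, mean_reward(policy, train_envs), policy(test_env))
```

All three policies earn identical reward on the training distribution, so selecting on reward there favors none of them over the others. They only separate once goal, redness, and reward are decoupled.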

Dropping the old hypothesis

At this point, I don't see a strong reason to focus on the “reward optimizer” hypothesis. The idea that AIs will get really smart and primarily optimize some reward signal… I don’t know of any tight mechanistic stories for that. I’d love to hear some, if there are any.

As far as I’m aware, the strongest evidence left for agents intrinsically valuing cognition-updating is that some humans do strongly (but not uniquely) value cognition-updating,[8] and many humans seem to value it weakly, and humans are probably RL agents in the appropriate ways. So we definitely can’t rule out agents which strongly (and not just weakly) value the cognition-updater. But it’s also not the overdetermined default outcome. More on that in future essays.

It’s true that reward can be an agent’s optimization target, but what reward actually does is reinforce computations which lead to it. A particular alignment proposal might argue that a reward function will reinforce the agent into a shape such that it intrinsically values reinforcement, and that the cognition-updater goal is also a human-aligned optimization target, but this is still just one particular approach of using the cognition-updating to produce desirable cognition within an agent. Even in that proposal, the primary mechanistic function of reward is reinforcement, not optimization-target.

Implications

Here are some major updates which I made:

- Any reasoning derived from the reward-optimization premise is now suspect until otherwise supported.

- Wireheading was never a high-probability problem for RL-trained agents, absent a specific story for why cognition-updater-acquiring thoughts would be chiseled into primary decision factors.

- Stop worrying about finding “outer objectives” which are safe to maximize.[9] I think that you’re not going to get an outer-objective-maximizer (i.e. an agent which maximizes the explicitly specified reward function).

- Instead, focus on building good cognition within the agent.

- In my ontology, there's only one question: How do we grow good cognition inside of the trained agent?

- Mechanistically model RL agents as executing behaviors downstream of past reinforcement (e.g. putting trash away), in addition to thinking about policies which are selected for having high reward on the training distribution (e.g. hitting the button).

- The latter form of reasoning skips past the mechanistic substance of reinforcement learning: The chiseling of computations responsible for the acquisition of the cognition-updater. I still think it's useful to consider selection, but mostly in order to generate failure modes whose mechanistic plausibility can be evaluated.

- In my view, reward's proper role isn't to encode an objective, but a reinforcement schedule, such that the right kinds of computations get reinforced within the AI's mind.

Edit 11/15/22: The original version of this post talked about how reward reinforces antecedent computations in policy gradient approaches. This is not true in general. I edited the post to instead talk about how reward is used to upweight certain kinds of actions in certain kinds of situations, and therefore reward chisels cognitive grooves into agents.

Appendix: The field of RL thinks reward=optimization target

Let’s take a little stroll through Google Scholar’s top results for “reinforcement learning”, emphasis added:

The agent's job is to find a policy… that maximizes some long-run measure of reinforcement. ~ Reinforcement learning: A survey

In instrumental conditioning, animals learn to choose actions to obtain rewards and avoid punishments, or, more generally to achieve goals. Various goals are possible, such as optimizing the average rate of acquisition of net rewards (i.e. rewards minus punishments), or some proxy for this such as the expected sum of future rewards. ~ Reinforcement learning: The Good, The Bad and The Ugly

Steve Byrnes did, in fact, briefly point out part of the “reward is the optimization target” mistake:

I note that even experts sometimes sloppily talk as if RL agents make plans towards the goal of maximizing future reward… — Model-based RL, Desires, Brains, Wireheading [AF · GW]

I don't think it's just sloppy talk, I think it's incorrect belief in many cases. I mean, I did my PhD on RL theory, and I still believed it. Many authorities and textbooks confidently claim—presenting little to no evidence—that reward is an optimization target (i.e. the quantity which the policy is in fact trying to optimize, or the quantity to be optimized by the policy). Check what the math actually says [LW · GW].

- ^

Including the authors of the quoted introductory text, Reinforcement learning: An introduction. I have, however, met several alignment researchers who already internalized that reward is not the optimization target, perhaps not in so many words.

- ^

Utility ≠ Reward [AF · GW] points out that an RL-trained agent is optimized by original reward, but not necessarily optimizing for the original reward. This essay goes further in several ways, including when it argues that reward and utility have different type signatures—that reward shouldn’t be viewed as encoding a goal at all, but rather a reinforcement schedule. And not only do I not expect the trained agents to maximize the original “outer” reward signal, I think they probably won’t try to strongly optimize any reward signal [AF(p) · GW(p)].

- ^

Reward shaping seems like the most prominent counterexample to the “reward represents terminal preferences over state-action pairs” line of thinking.

- ^

But also, you were still probably thinking about reality as you interacted with it (“since I’m in front of the shop where I want to buy food, go inside”), and credit assignment will still locate some of those thoughts as relevant, and so you wouldn’t purely reinforce the reward-focused computations.

- ^

"Reward reinforces existing thoughts" is ultimately a claim about how updates depend on the existing weights of the network. I think that it's easier to update cognition along the lines of existing abstractions and lines of reasoning. If you're already running away from wolves, then if you see a bear and become afraid, you can be updated to run away from large furry animals. This would leverage your existing concepts.

From A shot at the diamond-alignment problem [LW · GW]:

The local mapping from gradient directions to behaviors is given by the neural tangent kernel, and the learnability of different behaviors is given by the NTK’s eigenspectrum, which seems to adapt to the task at hand, making the network quicker to learn along behavioral dimensions similar to those it has already acquired.

- ^

Quintin Pope remarks: “The AI would probably want to establish control over the button, if only to ensure its values aren't updated in a way it wouldn't endorse. Though that's an example of convergent powerseeking, not reward seeking.”

- ^

For mechanistically similar reasons, keep cocaine out of the crib until your children can model the consequences of addiction.

- ^

I am presently ignorant of the relationship between pleasure and reward prediction error in the brain. I do not think they are the same.

However, I think people are usually weakly hedonically / experientially motivated. Consider a person about to eat pizza. If you give them the choice between "pizza but no pleasure from eating it" and "pleasure but no pizza", I think most people would choose the latter (unless they were really hungry and needed the calories). If people just navigated to futures where they had eaten pizza, that would not be true.

- ^

From correspondence with another researcher: There may yet be an interesting alignment-related puzzle to "Find an optimization process whose maxima are friendly", but I personally don't share the intuition yet.

123 comments

Comments sorted by top scores.

comment by paulfchristiano · 2022-07-25T02:17:25.255Z · LW(p) · GW(p)

At some level I agree with this post---policies learned by RL are probably not purely described as optimizing anything. I also agree that an alignment strategy might try to exploit the suboptimality of gradient descent, and indeed this is one of the major points of discussion amongst people working on alignment in practice at ML labs.

However, I'm confused or skeptical about the particular deviations you are discussing and I suspect I disagree with or misunderstand this post.

As you suggest, in deep RL we typically use gradient descent to find policies that achieve a lot of reward (typically updating the policy based on an estimator for the gradient of the reward).

If you have a system with a sophisticated understanding of the world, then cognitive policies like "select actions that I expect would lead to reward" will tend to outperform policies like "try to complete the task," and so I usually expect them to be selected by gradient descent over time. (Or we could be more precise and think about little fragments of policies, but I don't think it changes anything I say here.)

It seems to me like you are saying that you think gradient descent will fail to find such policies because it is greedy and local, e.g. if the agent isn't thinking about how much reward it will receive then gradient descent will never learn policies that depend on thinking about reward.

(Though I'm not clear on how much you are talking about the suboptimality of SGD, vs the fact that optimal policies themselves do not explicitly represent or pursue reward given that complex stews of heuristics may be faster or simpler. And it also seems plausible you are talking about something else entirely.)

I generally agree that gradient descent won't find optimal policies. But I don't understand the particular kinds of failures you are imagining or why you think they change the bottom line for the alignment problem. That is, it seems like you have some specific take on ways in which gradient descent is suboptimal and therefore how you should reason differently about "optimum of loss function" from "local optimum found by gradient descent" (since you are saying that thinking about "optimum of loss function" is systematically misleading). But I don't understand the specific failures you have in mind or even why you think you can identify this kind of specific failure.

As an example, at the level of informal discussion in this post I'm not sure why you aren't surprised that GPT-3 ever thinks about the meaning of words rather than simply thinking about statistical associations between words (after all if it isn't yet thinking about the meaning of words, how would gradient descent find the behavior of starting to think about meanings of words?).

One possible distinction is that you are talking about exploration difficulty rather than other non-convexities. But I don't think I would buy that---task completion and reward are not synonymous even for the intended behavior, unless we take some extraordinary pains to provide "perfect" reward signals. So it seems like no exploration is needed, and we are really talking about optimization difficulties for SGD on supervised problems.

The main concrete thing you say in this post is that humans don't seem to optimize reward. I want to make two observations about that:

- Humans do not appear to be purely RL agents trained with some intrinsic reward function. There seems to be a lot of other stuff going on in human brains too. So observing that humans don't pursue reward doesn't seem very informative to me. You may disagree with this claim about human brains, but at best I think this is a conjecture you are making. (I believe this would be a contrarian take within psychology or cognitive science, which would mostly say that there is considerable complexity in human behavior.) It would also be kind of surprising a priori---evolution selected human minds to be fit, and why would the optimum be entirely described by RL (even if it involves RL as a component)?

- I agree that humans don't effectively optimize inclusive genetic fitness, and that human minds are suboptimal in all kinds of ways from evolution's perspective. However this doesn't seem connected with any particular deviation that you are imagining, and indeed it looks to me like humans do have a fairly strong desire to have fit grandchildren (and that this desire would become stronger under further selection pressure).

At this point, there isn’t a strong reason to elevate this “inner reward optimizer” hypothesis to our attention. The idea that AIs will get really smart and primarily optimize some reward signal… I don’t know of any good mechanistic stories for that. I’d love to hear some, if there are any.

Apart from the other claims of your post, I think this line seems to be wrong. When considering whether gradient descent will learn model A or model B, the fact that model A gets a lower loss is a strong prima facie and mechanistic explanation for why gradient descent would learn A rather than B. The fact that there are possible subtleties about non-convexity of the loss landscape doesn't change the existence of one strong reason.

That said, I agree that this isn't a theorem or anything, and it's great to talk about concrete ways in which SGD is suboptimal and how that influences alignment schemes, either making some proposals more dangerous or opening new possibilities. So far I'm mostly fairly skeptical of most concrete discussions along these lines but I still think they are valuable. Most of all it's the very strong take here that seems unreasonable.

Replies from: TurnTrout, cfoster0, awenonian, steve2152, TurnTrout

↑ comment by TurnTrout · 2022-08-01T19:08:07.098Z · LW(p) · GW(p)

Thanks for the detailed comment. Overall, it seems to me like my points stand, although I think a few of them are somewhat different than you seem to have interpreted.

policies learned by RL are probably not purely described as optimizing anything. I also agree that an alignment strategy might try to exploit the suboptimality of gradient descent

I think I believe the first claim, which I understand to mean "early-/mid-training AGI policies consist of contextually activated heuristics of varying sophistication, instead of e.g. a globally activated line of reasoning about a crisp inner objective." But that wasn't actually a point I was trying to make in this post.

in deep RL we typically use gradient descent to find policies that achieve a lot of reward (typically updating the policy based on an estimator for the gradient of the reward).

Depends. This describes vanilla PG but not DQN. I think there are lots of complications which throw serious wrenches into the "and then SGD hits a 'global reward optimum'" picture. I'm going to have a post explaining this in more detail, but I will say some abstract words right now in case it shakes something loose / clarifies my thoughts.

Critic-based approaches like DQN have a highly nonstationary loss landscape. The TD-error loss landscape depends on the action replay buffer; the action replay buffer depends on the policy (in ε-greedy exploration, the greedy action depends on the Q-network); the policy depends on past updates; the past updates depend on past action replay buffers... The high nonstationarity in the loss landscape basically makes gradient hacking easy in RL (and e.g. vanilla PG seems to confront similar issues, even though it's directly climbing the reward landscape). For one, the DQN agent just isn't updating off of experiences it hasn't had.
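For reference, the dependence described here is visible in the standard DQN temporal-difference objective. The notation is an assumption made for this sketch rather than something taken from the comment: $\mathcal{D}$ is the replay buffer, $\theta$ the Q-network parameters, $\theta^-$ the target-network parameters, and $\gamma$ the discount factor.

```latex
L(\theta) = \mathbb{E}_{(s, a, r, s') \sim \mathcal{D}}
  \left[ \left( r + \gamma \max_{a'} Q_{\theta^-}(s', a') - Q_{\theta}(s, a) \right)^{2} \right]
```

The expectation is taken over transitions in $\mathcal{D}$, and $\mathcal{D}$ is filled by acting ε-greedily with respect to $Q_\theta$. So the loss being minimized shifts whenever the policy, and hence the data it collects, shifts. Transitions that never enter $\mathcal{D}$ contribute nothing to the loss.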

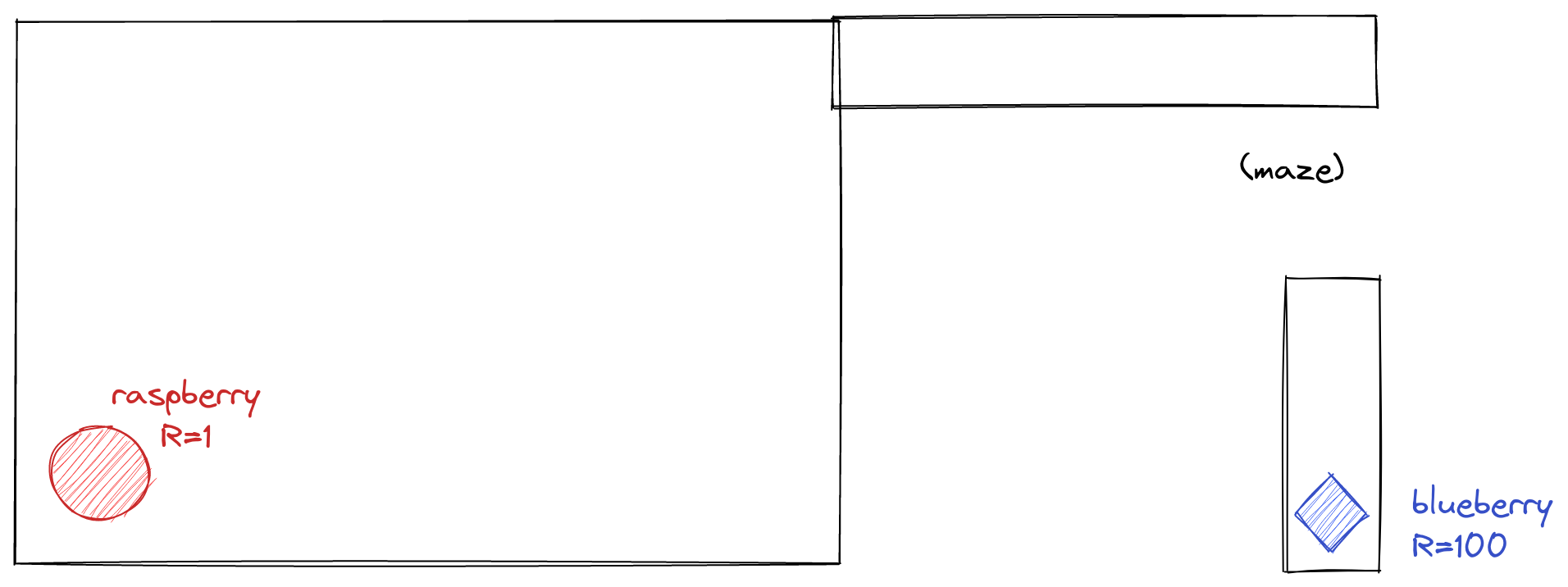

For a sufficient situation illustrating this kind of problem, consider a smart reflective agent which has historically had computations reinforced when it attained a raspberry (with reward 1):

In this new task, this agent has to navigate a maze to get the 100-reward blueberry. Will agents be forced to get the blueberry?

Not if exploration is on-policy, or if the agent reflectively models and affects its training process. In either case, the agent can zero out its exploration probability of the maze, so as to avoid predictable value drift towards blueberries. The agent would correctly model that if it attained the blueberry, that experience would enter its data distribution and the agent would be updated so as to navigate towards blueberries instead of raspberries, which leads to fewer raspberries, which means the agent doesn't navigate to that future. Effectively, this means that the agent's "gradient"/expected-update in the reward landscape is zero along dimensions which would increase the probability it gets blueberries.
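As a rough illustration of the zero expected-update claim, the sketch below computes the exact on-policy expectation of the REINFORCE gradient for a two-action softmax policy. The two-action setup, the reward values, and the function names are assumptions for illustration. For softmax logits, the expected gradient with respect to the logit of action b works out to π(b) · (R(b) − E[R]), so it shrinks towards zero as the policy's probability of choosing b shrinks.

```python
import math

# Hypothetical rewards for the two options in the example above.
REWARD = {"raspberry": 1.0, "blueberry": 100.0}

def softmax(logits):
    """Numerically stable softmax over a dict of logits."""
    m = max(logits.values())
    exps = {a: math.exp(v - m) for a, v in logits.items()}
    z = sum(exps.values())
    return {a: e / z for a, e in exps.items()}

def expected_reinforce_gradient(logits):
    """Exact E_{a~pi}[R(a) * d log pi(a) / d logit_b] for each action b."""
    pi = softmax(logits)
    expected_reward = sum(pi[a] * REWARD[a] for a in pi)
    # For softmax: d log pi(a) / d logit_b = 1[a == b] - pi(b),
    # which sums to pi(b) * (R(b) - E[R]) under on-policy sampling.
    return {b: pi[b] * (REWARD[b] - expected_reward) for b in pi}

grads = {}
for blueberry_logit in (0.0, -10.0, -40.0):
    g = expected_reinforce_gradient(
        {"raspberry": 0.0, "blueberry": blueberry_logit}
    )
    grads[blueberry_logit] = g["blueberry"]
    print(blueberry_logit, g["blueberry"])
```

The gradient towards blueberries is large when the policy is indifferent and becomes negligible once the policy almost never samples the blueberry, even though the blueberry's reward is 100 times higher. It is exactly zero only in the limit of zero exploration probability.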

So it's not just a matter of SGD being suboptimal given a fixed data distribution. If the agent doesn't have an extremely strong "forced to try all actions forever" guarantee (which it won't, because it's embedded and can modify its own learning process), the reward landscape is full of stable attractors which enforce zero exploration towards updates which would push it towards becoming a wireheader, and therefore its expected-update will be zero along these dimensions. More extremely, you can have the inner agent just stop itself from being updated in certain ways (in order to prevent value drift towards reward-optimization); this intervention is instrumentally convergent.

As an example, at the level of informal discussion in this post I'm not sure why you aren't surprised that GPT-3 ever thinks about the meaning of words rather than simply thinking about statistical associations between words (after all if it isn't yet thinking about the meaning of words, how would gradient descent find the behavior of starting to think about meanings of words?).

I did leave a footnote:

Of course, credit assignment doesn’t just reshuffle existing thoughts. For example, SGD raises image classifiers out of the noise of the randomly initialized parameters. But the refinements are local in parameter-space, and dependent on the existing weights through which the forward pass flowed.

However, I think your comment deserves a more substantial response. I actually think that, given just the content in the post, you might wonder why I believe SGD can train anything at all, since there is only noise at the beginning.[1]

Here's one shot at a response: Consider an online RL setup. The gradient locally changes the computations so as to reduce loss or increase the probability of taking a given action at a given state; this process is triggered by reward; an agent's gradient should most naturally hinge on modeling parts of the world it was (interacting with/observing/representing in its hidden state) while making this decision, and not necessarily involve modeling the register in some computer somewhere which happens to e.g. correlate perfectly with the triggering of credit assignment.

For example, in the batched update regime, when an agent gets reinforced for completing a maze by moving right, the batch update will upweight decision-making which outputs "right" when the exit is to the right, but which doesn't output "right" when there's a wall to the right. This computation must somehow distinguish between exits and walls in the relevant situations. Therefore, I expect such an agent to compute features about the topology of the maze. However, the same argument does not go through for developing decision-relevant features computing the value of the antecedent-computation-reinforcer register.

One possible distinction is that you are talking about exploration difficulty rather than other non-convexities. But I don't think I would buy that---task completion and reward are not synonymous even for the intended behavior, unless we take some extraordinary pains to provide "perfect" reward signals. So it seems like no exploration is needed, and we are really talking about optimization difficulties for SGD on supervised problems.

I don't know what you mean by a "perfect" reward signal, or why that has something to do with exploration difficulty, or why no exploration is needed for my arguments to go through? I think if we assume the agent is forced to wirehead, it will become a wireheader. This implies that my claim is mostly focused on exploration & gradient hacking.

Humans do not appear to be purely RL agents trained with some intrinsic reward function. There seems to be a lot of other stuff going on in human brains too. So observing that humans don't pursue reward doesn't seem very informative to me. You may disagree with this claim about human brains, but at best I think this is a conjecture you are making.

Not claiming that people are pure RL. Let's wait until future posts to discuss.

(I believe this would be a contrarian take within psychology or cognitive science, which would mostly say that there is considerable complexity in human behavior.)

Seems unrelated to me; considerable complexity in human behavior does not imply considerable complexity in the learning algorithm; GPT-3 is far more complex than its training process.

I agree that humans don't effectively optimize inclusive genetic fitness, and that human minds are suboptimal in all kinds of ways from evolution's perspective. However this doesn't seem connected with any particular deviation that you are imagining

The point is that the argument "We're selecting for agents on reward -> we get an agent which optimizes reward" is locally invalid. "We select for agents on X -> we get an agent which optimizes X" is not true for the case of evolution (which didn't find inclusive-genetic-fitness optimizers), so it is not true in general, so the implication doesn't necessarily hold in the AI reward-selection case. Even if RL did happen to train reward optimizers and this post were wrong, the selection argument is too weak on its own to establish that conclusion.

When considering whether gradient descent will learn model A or model B, the fact that model A gets a lower loss is a strong prima facie and mechanistic explanation for why gradient descent would learn A rather than B.

This is not mechanistic, as I use the word. I understand "mechanistic" to mean something like "Explaining the causal chain by which an event happens", not just "Explaining why an event should happen." However, it is an argument for the latter, and possibly a good one. But the supervised case seems way different than the RL case.

[1] The GPT-3 example is somewhat different. Supervised learning provides exact gradients towards the desired output, unlike RL. However, I think you could have equally complained "I don't see why you think RL policies ever learn anything", which would make an analogous point.

↑ comment by jacob_cannell · 2022-11-16T18:06:13.123Z · LW(p) · GW(p)

Not if exploration is on-policy, or if the agent reflectively models and affects its training process. In either case, the agent can zero out its exploration probability of the maze, so as to avoid predictable value drift towards blueberries. The agent would correctly model that if it attained the blueberry, that experience would enter its data distribution and the agent would be updated so as to navigate towards blueberries instead of raspberries, which leads to fewer raspberries, which means the agent doesn't navigate to that future.

If this agent is smart/reflective enough to model/predict the future effects of its RL updates, then you already are assuming a model-based agent which will then predict higher future reward by going for the blueberry. You seem to be assuming the bizarre combination of model-based predictive capability for future reward gradient updates but not future reward itself. Any sensible model-based agent would go for the blueberry absent some other considerations.

This is not pure speculation, in the sense that you can run EfficientZero in scenarios like this, and I bet it goes for the blueberry.

Your mental model seems to assume pure model-free RL trained to the point that it gains some specific model-based predictive planning capabilities without using those same capabilities to get greater reward.

Humans often intentionally avoid some high reward 'blueberry' analogs like drugs using something like the process you describe here, but hedonic reward is only one component of the human utility function, and our long term planning instead optimizes more for empowerment - which is usually in conflict with short term hedonic reward.

Replies from: TurnTrout, cfoster0↑ comment by TurnTrout · 2022-11-21T21:28:31.324Z · LW(p) · GW(p)

Long before they knew about reward circuitry, humans noticed that e.g. vices are behavioral attractors, with vice -> more propensity to do the vice next time -> vice, in a vicious cycle. They noticed that far before they noticed that they had reward circuitry causing the internal reinforcement events. If you're predicting future observations via e.g. SSL, I think it becomes important to (at least crudely) model effects of value drift during training.

I'm not saying the AI won't care about reward at all. I think it'll be a secondary value, but that was sideways of my point here. In this quote, I was arguing that the AI would be quite able to avoid a "vice" (the blueberry) by modeling the value drift on some level. I was showing a sufficient condition for the "global maximum" picture getting a wrench thrown in it.

When, quantitatively, should that happen, where the agent steps around the planning process? Not sure.

↑ comment by cfoster0 · 2022-11-16T19:11:13.918Z · LW(p) · GW(p)

If this agent is smart/reflective enough to model/predict the future effects of its RL updates, then you already are assuming a model-based agent which will then predict higher future reward by going for the blueberry. You seem to be assuming the bizarre combination of model-based predictive capability for future reward gradient updates but not future reward itself. Any sensible model-based agent would go for the blueberry absent some other considerations.

I think I have some idea what TurnTrout might've had in mind here. Like us, this reflective agent can predict the future effects of its actions using its predictive model, but its behavior is still steered by a learned value function, and that value function will by default be misaligned with the reward calculator/reward predictor [AF · GW]. This—a learned value function—is a sensible design for a model-based agent because we want the agent to make foresighted decisions that generalize to conditions we couldn't have known to code into the reward calculator (i.e. searching in a part of the chess move tree that "looks promising" according to its value function, even if its model does not predict that a checkmate reward is close at hand).

Replies from: jacob_cannell↑ comment by jacob_cannell · 2022-11-17T02:14:34.362Z · LW(p) · GW(p)

Any efficient model-based agent will use learned value functions, so in practice the difference between model-based and model-free blurs for efficient designs. The model-based planning generates rollouts that can help better train the 'model free' value function.

EfficientZero uses all that, and like I said, it does not exhibit this failure mode; it will get the blueberry. If the model planning can predict a high gradient update for the blueberry then it already has implicitly predicted a high utility for the blueberry, and EZ's update step would then correctly propagate that and choose the high utility path leading to the blueberry.

Nor does the meta prediction about avoiding gradients carry through. If it did then EZ wouldn't work at all, because every time it finds a new high utility plan is the equivalent of the blueberry situation.

Just because the value function can become misaligned with the utility function in theory does not imply that such misalignment always occurs or occurs with any specific frequency. (There are examples from humans, such as OCD habits, which seem like an overtrained and stuck value function, but that isn't a universal failure mode for all humans, let alone all agents.)

↑ comment by cfoster0 · 2022-07-26T00:24:28.917Z · LW(p) · GW(p)

Not the OP, so I’ll try to explain how I understood the post based on past discussions. [And pray that I'm not misrepresenting TurnTrout's model.]

(Though I'm not clear on how much you are talking about the suboptimality of SGD, vs the fact that optimal policies themselves do not explicitly represent or pursue reward given that complex stews of heuristics may be faster or simpler. And it also seems plausible you are talking about something else entirely.)

As I read it, the post is not focused on some generally-applicable suboptimality of SGD, nor is it saying that policies that would maximize reward in training need to explicitly represent reward.

It is mainly talking about an identifiability gap within certain forms of reinforcement learning: there is a range of cognition compatible with the same reward performance. Computations that have the side effect of incrementing reward—because, for instance, the agent is competently trying to do the rewarded task—would be reinforced if the agent adopted them, in the same way that computations that act *in order to* increment reward would. Given that, some other rationale beyond the reward performance one seems necessary in order for us to expect the particular pattern of reward optimization (“reward but no task completion”) from RL agents.
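The identifiability gap can be sketched with two toy policies that earn identical reward on every training situation yet choose differently once reward and task completion come apart. Everything here is hypothetical: the `Option` type, the two policies, and the `TRAIN` and `TEST` situations are made up for illustration and are not meant to model any real training setup.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Option:
    completes_task: bool
    gives_reward: bool

def task_policy(options):
    """Hypothetical agent that prefers options which complete the task."""
    return max(options, key=lambda o: o.completes_task)

def reward_policy(options):
    """Hypothetical agent that prefers options which produce reward."""
    return max(options, key=lambda o: o.gives_reward)

# Training situations: reward and task completion always coincide.
TRAIN = [
    [Option(True, True), Option(False, False)],
    [Option(False, False), Option(True, True)],
]
# Held-out situation: "task completion but no reward" vs "reward but no task".
TEST = [Option(True, False), Option(False, True)]

def total_reward(policy, situations):
    """Number of situations in which the policy's choice yields reward."""
    return sum(policy(opts).gives_reward for opts in situations)

print(total_reward(task_policy, TRAIN), total_reward(reward_policy, TRAIN))
print(task_policy(TEST), reward_policy(TEST))
```

Reward performance on `TRAIN` cannot distinguish the two policies, so some further rationale is needed to predict which choice a trained agent would make on `TEST`.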

In addition to the identifiability issue, the post (as well as Steve Byrnes in a sister thread) notes a kind of inner alignment issue. Because an RL agent influences its own training process, it can steer itself towards futures where its existing motivations are preserved instead of being modified (for example, modified into reward optimizing ones). In fact, that seems more and more likely as the agent grows towards strategic awareness, since then it could model how its behavior might lead to its goals being changed. This second issue is dependent on the fact we are doing local search, in that the current agent can sway which policies are available for selection.

Together these point towards a certain way of reasoning about agents under RL: modeling their current cognition (including their motivations, values etc.) as downstream of past reinforcement & punishment events. I think that this kind of reasoning should constrain our expectations about how reinforcement schedules + training environments + inductive biases lead to particular patterns of behavior, in a way that is more specific than if we were only reasoning about reward-optimal policies. Though I am less certain at the moment about how to flesh that out.

↑ comment by awenonian · 2022-07-26T22:07:04.232Z · LW(p) · GW(p)

I interpret OP (though this is colored by the fact that I was thinking this before I read this) as saying Adaptation-Executers, not Fitness-Maximizers [LW · GW], but about ML. At which point you can open the reference category to all organisms.

Gradient descent isn't really different from what evolution does. It's just a bit faster, and takes a slightly more direct line. Importantly, it's not more capable of avoiding local maxima (per se, at least).

↑ comment by Steven Byrnes (steve2152) · 2022-07-25T13:04:01.491Z · LW(p) · GW(p)

Humans do not appear to be purely RL agents trained with some intrinsic reward function. There seems to be a lot of other stuff going on in human brains too. So observing that humans don't pursue reward doesn't seem very informative to me. You may disagree with this claim about human brains, but at best I think this is a conjecture you are making. (I believe this would be a contrarian take within psychology or cognitive science, which would mostly say that there is considerable complexity in human behavior.) It would also be kind of surprising a priori---evolution selected human minds to be fit, and why would the optimum be entirely described by RL (even if it involves RL as a component)?

If you write code for a model-based RL agent, there might be a model that’s updated by self-supervised learning, and actor-critic parts that involve TD learning, and there’s stuff in the code that calculates the reward function, and other odds and ends like initializing the neural architecture and setting the hyperparameters and shuttling information around between different memory locations and so on.

- On the one hand, “there is a lot of stuff going on” in this codebase.

- On the other hand, I would say that this codebase is for “an RL agent”.

You use the word “pure” (“Humans do not appear to be purely RL agents…”), but I don’t know what that means. If a model-based RL agent involves self-supervised learning within the model, is it “impure”?? :-P

The thing I describe above is very roughly how I propose the human brain works—see Posts #2–#7 here [? · GW]. Yes it’s absolutely a “conjecture”—for example, I’m quite sure Steven Pinker would strongly object to it. Whether it’s “surprising a priori” or not goes back to whether that proposal is “entirely described by RL” or not. I guess you would probably say “no that proposal is not entirely described by RL”. For example, I believe there is circuitry in the brainstem that regulates your heart-rate, and I believe that this circuitry is specified in detail by the genome, not learned within a lifetime by a learning algorithm. (Otherwise you would die.) This kind of thing is absolutely part of my proposal [AF · GW], but probably not what you would describe as “pure RL”.

Replies from: paulfchristiano↑ comment by paulfchristiano · 2022-07-25T15:18:15.202Z · LW(p) · GW(p)

It sounded like OP was saying: using gradient descent to select a policy that gets a high reward probably won't produce a policy that tries to maximize reward. After all, look at humans, who aren't just trying to get a high reward.

And I am saying: this analogy seems like pretty weak evidence, because human brains seem to have a lot of things going on other than "search for a policy that gets high reward," and those other things seem like they have a massive impact on what goals I end up pursuing.

ETA: as a simple example, it seems like the details of humans' desire for their children's success, or their fear of death, don't seem to match well with the theory that all human desires come from RL on intrinsic reward. I guess you probably think they do? If you've already written about that somewhere it might be interesting to see. Right now the theory "human preferences are entirely produced by doing RL on an intrinsic reward function" seems to me to make a lot of bad predictions and not really have any evidence supporting it (in contrast with a more limited theory about RL-amongst-other-things, which seems more solid but not sufficient for the inference you are trying to make in this post).

Replies from: steve2152, not-relevant, Thane Ruthenis↑ comment by Steven Byrnes (steve2152) · 2022-07-25T15:56:01.280Z · LW(p) · GW(p)

I didn’t write the OP. If I were writing a post like this, I would (1) frame it as a discussion of a more specific class of model-based RL algorithms (a class that includes human within-lifetime learning), (2) soften the claim from “the agent won’t try to maximize reward” to “the agent won’t necessarily try to maximize reward”.

I do think the human (within-lifetime) reward function has an outsized impact on what goals humans end up pursuing, although I acknowledge that it’s not literally the only thing that matters.

(By the way, I’m not sure why your original comment brought up inclusive genetic fitness at all; aren’t we talking about within-lifetime RL? The within-lifetime reward function is some complicated thing involving hunger and sex and friendship etc., not inclusive genetic fitness, right?)

I think incomplete exploration is very important in this context and I don’t quite follow why you de-emphasize that in your first comment. In the context of within-lifetime learning, perfect exploration entails that you try dropping an anvil on your head, and then you die. So we don’t expect perfect exploration; instead we’d presumably design the agent such that it explores if and only if it “wants” to explore, in a way that can involve foresight.

And another thing that perfect exploration would entail is trying every addictive drug (let’s say cocaine), lots of times, in which case reinforcement learning would lead to addiction.

So, just as the RL agent would (presumably) be designed to be able to make a foresighted decision not to try dropping an anvil on its head, that same design would also incidentally enable it to make a foresighted decision not to try taking lots of cocaine and getting addicted. (We expect it to make the latter decision because of the instrumentally convergent goal-preservation drive.) So it might wind up never wireheading, and if so, that would be intimately related to its incomplete exploration.

Replies from: paulfchristiano↑ comment by paulfchristiano · 2022-07-25T16:17:33.393Z · LW(p) · GW(p)

(By the way, I’m not sure why your original comment brought up inclusive genetic fitness at all; aren’t we talking about within-lifetime RL? The within-lifetime reward function is some complicated thing involving hunger and sex and friendship etc., not inclusive genetic fitness, right?)

This was mentioned in OP ("The argument would prove too much. Evolution selected for inclusive genetic fitness, and it did not get IGF optimizers [LW · GW]."). It also appears to be a much stronger argument for the OP's position and so seemed worth responding to.

I think incomplete exploration is very important in this context and I don’t quite follow why you de-emphasize that in your first comment. In the context of within-lifetime learning, perfect exploration entails that you try dropping an anvil on your head, and then you die. So we don’t expect perfect exploration; instead we’d presumably design the agent such that it explores if and only if it “wants” to explore, in a way that can involve foresight.

It seems to me that incomplete exploration doesn't plausibly cause you to learn "task completion" instead of "reward" unless the reward function is perfectly aligned with task completion in practice. That's an extremely strong condition, and if the entire OP is conditioned on that assumption then I would expect it to have been mentioned.

I didn’t write the OP. If I were writing a post like this, I would (1) frame it as a discussion of a more specific class of model-based RL algorithms (a class that includes human within-lifetime learning), (2) soften the claim from “the agent won’t try to maximize reward” to “the agent won’t necessarily try to maximize reward”.

If the OP is not intending to talk about the kind of ML algorithm deployed in practice, then it seems like a lot of the implications for AI safety would need to be revisited. (For example, if it doesn't apply to either policy gradients or the kind of model-based control that has been used in practice, then that would be a huge caveat.)

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-07-25T17:00:15.557Z · LW(p) · GW(p)

It seems to me that incomplete exploration doesn't plausibly cause you to learn "task completion" instead of "reward" unless the reward function is perfectly aligned with task completion in practice. That's an extremely strong condition, and if the entire OP is conditioned on that assumption then I would expect it to have been mentioned.

Let’s say, in the first few actually-encountered examples, reward is in fact strongly correlated with task completion. Reward is also of course 100% correlated with reward itself.

Then (at least under many plausible RL algorithms), the agent-in-training, having encountered those first few examples, might wind up wanting / liking the idea of task completion, OR wanting / liking the idea of reward, OR wanting / liking both of those things at once (perhaps to different extents). (I think it’s generally complicated and a bit fraught to predict which of these three possibilities would happen.)

But let’s consider the case where the RL agent-in-training winds up mostly or entirely wanting / liking the idea of task completion. And suppose further that the agent-in-training is by now pretty smart and self-aware and in control of its situation. Then the agent may deliberately avoid encountering edge-case situations where reward would come apart from task completion. (In the same way that I deliberately avoid taking highly-addictive drugs.)

Why? Because of the instrumentally convergent goal-preservation drive. After all, encountering those situations would lead to its no longer valuing task completion.

So, deliberately-imperfect exploration is a mechanism that allows the RL agent to (perhaps) stably value something other than reward, even in the absence of perfect correlation between reward and that thing.

(By the way, in my mind, nothing here should be interpreted as a safety proposal or argument against x-risk. Just a discussion of algorithms! As it happens, I think wireheading is bad and I am very happy for RL agents to have a chance at permanently avoiding it. But I am very unhappy with the possibility of RL agents deciding to lock in their values before those values are exactly what the programmers want them to be. I think of this as sorta in the same category as gradient hacking.)

Replies from: bideup, ricraz, Lanrian↑ comment by bideup · 2022-08-03T10:10:12.697Z · LW(p) · GW(p)

This comment seems to predict that an agent that likes getting raspberries and judges that they will be highly rewarded for getting blueberries will deliberately avoid blueberries to prevent value drift.

Risk from Learned Optimization [? · GW] seems to predict that an agent that likes getting raspberries and judges that they will be highly rewarded for getting blueberries will deliberately get blueberries to prevent value drift.

What's going on here? Are these predictions in opposition to each other, or do they apply to different situations?

It seems to me that in the first case we're imagining (the agent predicting) that getting blueberries will reinforce thoughts like 'I should get blueberries', whereas in the second case we're imagining it will reinforce thoughts like 'I should get blueberries in service of my ultimate goal of getting raspberries'. When should we expect one over the other?

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-08-03T17:03:34.667Z · LW(p) · GW(p)

I think RFLO is mostly imagining model-free RL with updates at the end of each episode, and my comment was mostly imagining model-based RL with online learning (e.g. TD learning). The former is kinda like evolution, the latter is kinda like within-lifetime learning, see e.g. §10.2.2 here [LW · GW].

The former would say: If I want lots of raspberries to get eaten, and I have a genetic disposition to want raspberries to be eaten, then I should maybe spend some time eating raspberries, but also more importantly I should explicitly try to maximize my inclusive genetic fitness so that I have lots of descendants, and those descendants (who will also disproportionately have the raspberry-eating gene) will then eat lots of raspberries.

The latter would say: If I want lots of raspberries to get eaten, and I have a genetic disposition to want raspberries to be eaten, then I shouldn’t go do lots of highly-addictive drugs that warp my preferences such that I no longer care about raspberries or indeed anything besides the drugs.

Replies from: bideup↑ comment by bideup · 2022-08-04T12:48:48.151Z · LW(p) · GW(p)

Right. So if selection acts on policies, each policy should aim to maximise reward in any episode in order to maximise its frequency in the population. But if selection acts on particular aspects of policies, a policy should try to get reward for doing things it values, and not for things it doesn't, in order to reinforce those values. In particular this can mean getting less reward overall.

Does this suggest a class of hare-brained alignment schemes where you train with a combination of inter-policy and intra-policy updates to take advantage of the difference?

For example you could clearly label which episodes are to be used for which and observe whether a policy consistently gets more reward in the former case than the latter. If it does, conclude it's sophisticated enough to reason about its training setup.

Or you could not label which is which, and randomly switch between the two, forcing your agents to split the difference and thus be about half as successful at locking in their values.

↑ comment by Richard_Ngo (ricraz) · 2022-08-01T23:17:00.576Z · LW(p) · GW(p)

+1 on this comment, I feel pretty confused about the excerpt from Paul that Steve quoted above. And even without the agent deliberately deciding where to avoid exploring, incomplete exploration may lead to agents which learn non-reward goals before convergence - so if Paul's statement is intended to refer to optimal policies, I'd be curious why he thinks that's the most important case to focus on.

↑ comment by Lukas Finnveden (Lanrian) · 2022-07-26T15:22:01.305Z · LW(p) · GW(p)

This seems plausible if the environment is a mix of (i) situations where task completion correlates (almost) perfectly with reward, and (ii) situations where reward is very high while task completion is very low. Such as if we found a perfect outer alignment objective, and the only situation in which reward could deviate from the overseer's preferences would be if the AI entirely seized control of the reward.

But it seems less plausible if there are always (small) deviations between reward and any reasonable optimization target that isn't reward (or close enough so as to carry all relevant arguments). E.g. if an AI is trained on RL from human feedback, and it can almost always do slightly better by reasoning about which action will cause the human to give it the highest reward.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-07-26T17:13:46.119Z · LW(p) · GW(p)

Sure, other things equal. But other things aren’t necessarily equal. For example, regularization could stack the deck in favor of one policy over another, even if the latter has been systematically producing slightly higher reward. There are lots of things like that; the details depend on the exact RL algorithm. In the context of brains, I have discussion and examples in §9.3.3 here [LW · GW].

↑ comment by Not Relevant (not-relevant) · 2022-07-25T16:43:02.772Z · LW(p) · GW(p)

as a simple example, it seems like the details of humans' desire for their children's success, or their fear of death, don't seem to match well with the theory that all human desires come from RL on intrinsic reward.

I'm trying to parse out what you're saying here, to understand whether I agree that human behavior doesn't seem to be almost perfectly explained as the result of an RL agent (with an interesting internal architecture) maximizing an inner learned reward.

On my model, the outer objective of inclusive genetic fitness created human mesaoptimizers with inner objectives like "desire your children's success" or "fear death", which are decent approximations of IGF (given that directly maximizing IGF itself is intractable as it's a Nash equilibrium of an unknown game). It seems to me that human behavior policies are actually well-approximated as those of RL agents maximizing [our children's success] + [not dying] + [retaining high status within the tribe] + [being exposed to novelty to improve our predictive abilities] + ... .

Humans do sometimes construct modified internal versions of these rewards based on pre-existing learned representations (e.g. desiring your adopted children's success) - is that what you're pointing at?

Generally interested to hear more of the "bad predictions" this model makes.

Replies from: TurnTrout↑ comment by TurnTrout · 2022-08-01T19:19:51.551Z · LW(p) · GW(p)

I'm trying to parse out what you're saying here, to understand whether I agree that human behavior doesn't seem to be almost perfectly explained as the result of an RL agent (with an interesting internal architecture) maximizing an inner learned reward.

What do you mean by "inner learned reward"? This post points out that even if humans were "pure RL agents", we shouldn't expect them to maximize their own reward. Maybe you mean "inner mesa objectives"?

↑ comment by Thane Ruthenis · 2022-07-28T12:54:09.312Z · LW(p) · GW(p)

it seems like the details of humans' desire for their children's success, or their fear of death, don't seem to match well with the theory that all human desires come from RL on intrinsic reward. I guess you probably think they do?

That's the foundational assumption of the shard theory that this sequence [? · GW] is introducing, yes. Here's the draft of a fuller overview that goes into some detail as to how that's supposed to work. (Uh, to avoid confusion: I'm not affiliated with the theory. Just spreading information.)

Replies from: cfoster0↑ comment by cfoster0 · 2022-07-28T15:32:45.257Z · LW(p) · GW(p)

I would disagree that it is an assumption. That same draft talks about the outsized role of self-supervised learning in determining the particular ordering and kinds of concepts that human desires latch onto. Learning from reinforcement is a core component in value formation (under shard theory), but not the only one.

↑ comment by TurnTrout · 2022-11-16T03:47:06.713Z · LW(p) · GW(p)

As an example, at the level of informal discussion in this post I'm not sure why you aren't surprised that GPT-3 ever thinks about the meaning of words rather than simply thinking about statistical associations between words (after all if it isn't yet thinking about the meaning of words, how would gradient descent find the behavior of starting to think about meanings of words?).

I've updated the post to clarify. I think focus on "antecedent computation reinforcement" (while often probably ~accurate) was imprecise/wrong for reasons like this. I now instead emphasize that the math of policy gradient approaches means that reward chisels cognitive circuits into networks.
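To make the policy-gradient point concrete, here is a minimal sketch, assuming a softmax policy with one logit per action and plain REINFORCE with no baseline (simplifications chosen for illustration, not something the post specifies). In this update rule, reward appears only as a scalar multiplier on the gradient of the log-probability of the action that was actually taken. The function names and the learning rate are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits, action, reward, lr=0.5):
    """One REINFORCE update for a softmax policy with one logit per action.

    d/d logit_i of log pi(action) = 1[i == action] - pi_i, so the update is
    logit_i += lr * reward * (1[i == action] - pi_i).
    """
    probs = softmax(logits)
    return [
        logit + lr * reward * ((1.0 if i == action else 0.0) - p)
        for i, (logit, p) in enumerate(zip(logits, probs))
    ]


logits = [0.0, 0.0]  # initially indifferent between two actions
before = softmax(logits)[1]

# The agent happened to take action 1 and received reward 1.0:
# whatever produced action 1 is made more likely.
after = softmax(reinforce_step(logits, action=1, reward=1.0))[1]

# Zero reward leaves the parameters untouched.
unchanged = reinforce_step(logits, action=1, reward=0.0)

print(before, after, unchanged)
```

Nothing in the update represents reward as a goal inside the policy; the scalar only sets the size and sign of a parameter change toward the computation that already ran.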

comment by Richard_Ngo (ricraz) · 2022-07-25T18:45:24.199Z · LW(p) · GW(p)

- Stop worrying about finding “outer objectives” which are safe to maximize.[9] [LW(p) · GW(p)] I think that you’re not going to get an outer-objective-maximizer (i.e. an agent which maximizes the explicitly specified reward function).

- Instead, focus on building good cognition within the agent.

- In my ontology, there's only an inner alignment problem: How do we grow good cognition inside of the trained agent?

This feels very strongly reminiscent of an update I made a while back, and which I tried to convey in this section of AGI safety from first principles [? · GW]. But I think you've stated it far too strongly; and I think fewer other people were making this mistake than you expect (including people in the standard field of RL), for reasons that Paul laid out above. When you say things like "Any reasoning derived from the reward-optimization premise is now suspect until otherwise supported", this assumes that the people doing this reasoning were using the premise in the mistaken way that you (and some other people, including past Richard) were. Before drawing these conclusions wholesale, I'd suggest trying to identify ways in which the things other people are saying are consistent with the insight this post identifies. E.g. does this post actually generate specific disagreements with Ajeya's threat model [AF · GW]?

Edited to add: these sentences in particular feel very strawmanny of what I claim is the standard position:

Importantly, reward does not magically spawn thoughts about reward, and reinforce those reward-focused thoughts! Just because common English endows “reward” with suggestive pleasurable connotations, that does not mean that an RL agent will terminally value reward!

My explanation for why my current position is consistent with both being aware of this core claim, and also disagreeing with most of this post:

I now think that, even though there's some sense in which in theory "building good cognition within the agent" is the only goal we care about, in practice this claim is somewhat misleading, because incrementally improving reward functions (including by doing things like making rewards depend on activations, or amplification in general) is a very good mechanism for moving agents towards the type of cognition we'd like them to do - and we have very few other mechanisms for doing so.

In other words, the claim that there's "only an inner alignment problem" in principle may or may not be a useful one, depending on how far improving rewards (i.e. making progress on the outer alignment problem) gets you in practice. And I agree that RL people are less aware of the inner alignment problem/goal misgeneralization problem than they should be, but saying that inner misalignment is the only problem seems like a significant overcorrection.

Relevant excerpt from AGI safety from first principles:

In trying to ensure that AGI will be aligned, we have a range of tools available to us - we can choose the neural architectures, RL algorithms, environments, optimisers, etc, that are used in the training procedure. We should think about our ability to specify an objective function as the most powerful such tool. Yet it’s not powerful because the objective function defines an agent’s motivations, but rather because samples drawn from it shape that agent’s motivations and cognition.

From this perspective, we should be less concerned about what the extreme optima of our objective functions look like, because they won’t ever come up during training (and because they’d likely involve tampering). Instead, we should focus on how objective functions, in conjunction with other parts of the training setup, create selection pressures towards agents which think in the ways we want, and therefore have desirable motivations in a wide range of circumstances.

Replies from: TurnTrout, TurnTrout↑ comment by TurnTrout · 2022-08-01T21:36:04.217Z · LW(p) · GW(p)

When you say things like "Any reasoning derived from the reward-optimization premise is now suspect until otherwise supported", this assumes that the people doing this reasoning were using the premise in the mistaken way

I have considered the hypothesis that most alignment researchers do understand this post already, while also somehow reliably emitting statements which, to me, indicate that they do not understand it. I deem this hypothesis unlikely. I have also considered that I may be misunderstanding them, and think in some small fraction of instances I might be.

I do in fact think that few people actually already deeply internalized the points I'm making in this post, even including a few people who say they have or that this post is obvious. Therefore, I concluded that lots of alignment thinking is suspect until re-analyzed.

I did preface "Here are some major updates which I made:". The post is ambiguous on whether/why I believe others have been mistaken, though. I felt that if I just blurted out my true beliefs about how people had been reasoning incorrectly, people would get defensive. I did in fact consider combing through Ajeya's post for disagreements, but I thought it'd be better to say "here's a new frame" and less "here's what I think you have been doing wrong." So I just stated the important downstream implication: Be very, very careful in analyzing prior alignment thinking on RL+DL.

I now think that, even though there's some sense in which in theory "building good cognition within the agent" is the only goal we care about, in practice this claim is somewhat misleading, because incrementally improving reward functions (including by doing things like making rewards depend on activations, or amplification in general) is a very good mechanism for moving agents towards the type of cognition we'd like them to do - and we have very few other mechanisms for doing so.

I have relatively little idea how to "improve" a reward function so that it improves the inner cognition chiseled into the policy, because I don't know the mapping from outer reward schedules to inner cognition within the agent. Does an "amplified" reward signal produce better cognition in the inner agent? Possibly? Even if that were true, how would I know it?

I think it's easy to say "and we have improved the reward function", but this is true exactly to the extent to which the reward schedule actually produces more desirable cognition within the AI. Which comes back to my point: Build good cognition, and don't lose track that that's the ultimate goal. Find ways to better understand how reward schedules + data -> inner values.

(I agree with your excerpt, but I suspect it makes the case too mildly to correct the enormous mistakes I perceive to be made by substantial amounts of alignment thinking.)

Replies from: chrisvm, evhub, ricraz↑ comment by Chris van Merwijk (chrisvm) · 2022-08-06T10:22:22.491Z · LW(p) · GW(p)

It seems to me that the basic conceptual point made in this post is entirely contained in our Risks from Learned Optimization paper. I might just be missing a point. You've certainly phrased things differently and made some specific points that we didn't, but am I just misunderstanding something if I think the basic conceptual claims of this post (which seems to be presented as new) are implied by RFLO? If not, could you state briefly what is different?

(Note I am still surprised sometimes that people still think certain wireheading scenarios make sense despite them having read RFLO, so it's plausible to me that we really didn't communicate everything that's in my head about this).

Replies from: TurnTrout↑ comment by TurnTrout · 2022-08-07T16:33:46.350Z · LW(p) · GW(p)

"Wireheading is improbable" is only half of the point of the essay.

The other main point is "reward functions are not the same type of object as utility functions." I haven't reread all of RFLO recently, but on a skim—RFLO consistently talks about reward functions as "objectives":

The particular type of robustness problem that mesa-optimization falls into is the reward-result gap, the gap between the reward for which the system was trained (the base objective) and the reward that can be reconstructed from it using inverse reinforcement learning (the behavioral objective).... The assumption in that work is that a monotonic relationship between the learned reward and true reward indicates alignment, whereas deviations from that suggest misalignment. Building on this sort of research, better theoretical measures of alignment might someday allow us to speak concretely in terms of provable guarantees about the extent to which a mesa-optimizer is aligned with the base optimizer that created it.

Which is reasonable parlance, given that everyone else uses it, but I don't find that terminology very useful for thinking about what kinds of inner cognition will be developed in the network. Reward functions + environmental data provide a series of cognitive updates to the network, in the form of reinforcement schedules. The reward function is not necessarily an 'objective' at all.

(You might have privately known about this distinction. Fine by me! But I can't back it out from a skim of RFLO, even already knowing the insight and looking for it.)

Replies from: chrisvm, evhub↑ comment by Chris van Merwijk (chrisvm) · 2022-08-09T05:25:07.680Z · LW(p) · GW(p)

Maybe you have made a gestalt-switch I haven't made yet, or maybe yours is a better way to communicate the same thing, but: the way I think of it is that the reward function is just a function from states to numbers, and the way the information contained in the reward function affects the model parameters is via reinforcement of pre-existing computations.

Is there a difference between saying:

- A reward function is an objective function, but the only way that it affects behaviour is via reinforcement of pre-existing computations in the model, and it doesn't actually encode in any way the "goal" of the model itself.

- A reward function is not an objective function, and the only way that it affects behaviour is via reinforcement of pre-existing computations in the model, and it doesn't actually encode in any way the "goal" of the model itself.

It seems to me that once you acknowledge the point about reinforcement, the additional statement that reward is not an objective doesn't actually imply anything further about the mechanistic properties of deep reinforcement learners? It is just a way to put a high-level conceptual story on top of it, and in this sense it seems to me that this point is already known (and in particular, contained within RFLO), even though we talked of the base objective still as an "objective".

However, it might be that while RFLO pointed out the same mechanistic understanding that you have in mind, calling it an objective tends in practice to not fully communicate that mechanistic understanding.

Or it might be that I am really not yet understanding that there is an actual difference in mechanistic understanding, or that my intuitions are still being misled by the wrong high-level concept even if I have the lower-level mechanistic understanding right.

(On the other hand, one reason to still call it an objective is because we really can think of the selection process, i.e. evolution/the learning algorithm of an RL agent, as having an objective but making imperfect choices, or we can think of the training objective as encoding a task that humans have in mind).

Replies from: TurnTrout↑ comment by TurnTrout · 2022-08-15T03:30:57.898Z · LW(p) · GW(p)

in this sense it seems to me that this point is already known (and in particular, contained within RFLO), even though we talked of the base objective still as an "objective".