The heritability of human values: A behavior genetic critique of Shard Theory

post by geoffreymiller · 2022-10-20T15:51:35.786Z · LW · GW · 63 comments


Overview (TL;DR):

Shard Theory is a new approach to understanding the formation of human values, which aims to help solve the problem of how to align advanced AI systems with human values (the ‘AI alignment problem’). Shard Theory has provoked a lot of interest and discussion on LessWrong, AI Alignment Forum, and EA Forum in recent months. However, Shard Theory incorporates a relatively Blank Slate view about the origins of human values that is empirically inconsistent with many studies in behavior genetics indicating that many human values show heritable genetic variation across individuals. I’ll focus in this essay on the empirical claims of Shard Theory, the behavior genetic evidence that challenges those claims, and the implications for developing more accurate models of human values for AI alignment.

Introduction: Shard Theory as a falsifiable theory of human values

The goal of the ‘AI alignment’ field is to help future Artificial Intelligence systems become better aligned with human values. Thus, to achieve AI alignment, we might need a good theory of human values. A new approach called “Shard Theory” aims to develop such a theory of how humans develop values.

My goal in this essay is to assess whether Shard Theory offers an empirically accurate model of human value formation, given what we know from behavior genetics about the heritability of human values. The stakes here are high. If Shard Theory becomes influential in guiding further alignment research, but if its model of human values is not accurate, then Shard Theory may not help improve AI safety.

These kinds of empirical problems are not limited to Shard Theory. Many proposals that I’ve seen for AI ‘alignment with human values’ seem to ignore most of the research on human values in the behavioral and social sciences. I’ve tried to challenge this empirical neglect of value research in four previous essays for EA Forum, on the heterogeneity of value types in human individuals, the diversity of values across individuals, the importance of body/corporeal values, and the importance of religious values.

Note that this essay is a rough draft of some preliminary thoughts, and I welcome any feedback, comments, criticisms, and elaborations. In future essays I plan to critique Shard Theory from the perspectives of several other fields, such as evolutionary biology, animal behavior research, behaviorist learning theory, and evolutionary psychology.

Background on Shard Theory

Shard Theory has been developed mostly by Quintin Pope (a computer science Ph.D. student at Oregon State University) and Alex Turner (a post-doctoral researcher at the Center for Human-Compatible AI at UC Berkeley). Over the last few months, they posted a series of essays about Shard Theory on LessWrong.com, including the main essay, ‘The shard theory of human values’ (dated Sept 3, 2022), plus auxiliary essays such as: ‘Human values & biases are inaccessible to the genome’ (July 7, 2022), ‘Humans provide an untapped wealth of evidence about alignment’ (July 13, 2022), ‘Reward is not the optimizer’ (July 24, 2022), and ‘Evolution is a bad analogy for AGI: Inner alignment’ (Aug 13, 2022). [This is not a complete list of their Shard Theory writings; it’s just the set that seems most relevant to the critiques I’ll make in this essay.] Also, David Udell published this useful summary: ‘Shard Theory: An overview’ (Aug 10, 2022).

There’s a lot to like about Shard Theory. It takes seriously the potentially catastrophic risks from AI. It understands that ‘AI alignment with human values’ requires some fairly well-developed notions about where human values come from, what they’re for, and how they work. It is intellectually ambitious, and tries to integrate reinforcement learning, self-supervised predictive learning, decision theory, developmental psychology, and cognitive biases. It seeks to build some common ground between human intelligence and artificial intelligence, at the level of how complex cognitive systems develop accurate world models and useful values. It tries to be explicit about its empirical commitments and theoretical assumptions. It is open about being a work-in-progress rather than a complete, comprehensive, or empirically validated theory. It has already provoked much discussion and debate.

Even if my critiques of Shard Theory are correct, and some of its key evolutionary, genetic, and psychological assumptions are wrong, that isn’t necessarily fatal to the whole Shard Theory project. I imagine some form of Shard Theory 2.0 could be developed that updates its assumptions in the light of these critiques, and that still makes some progress in developing a more accurate model of human values that is useful for AI alignment.

Shard Theory as a Blank Slate theory

However, Shard Theory includes a model of human values that is not consistent with what behavioral scientists have learned about the origins and nature of values over the last 170 years of research in psychology, biology, animal behavior, neurogenetics, behavior genetics, and other fields.

The key problem is that Shard Theory re-invents a relatively ‘Blank Slate’ theory of human values. Note that no Blank Slate theory posits that the mind is 100% blank. Every Blank Slate theory that’s even marginally credible accepts that there are at least a few ‘innate instincts’ and some ‘hardwired reward circuitry’. Blank Slate theories generally accept that human brains have at least a few ‘innate reinforcers’ that can act as a scaffold for the socio-cultural learning of everything else. For example, even the most radical Blank Slate theorists would generally agree that sugar consumption is reinforcing because we evolved taste receptors for sweetness.

The existence of a few innate reinforcement circuits was accepted even by the most radical Behaviorists of the 1920s through 1960s, and by the most ‘social constructivist’ researchers in the social sciences and humanities from the 1960s onwards. Blank Slate theorists just try to minimize the role of evolution and genetics in shaping human psychology, and strongly favor Nurture over Nature in explaining both psychological commonalities across sentient beings, and psychological differences across species, sexes, ages, and individuals. Historically, Blank Slate theories were motivated not so much by empirical evidence, as by progressive political ideologies about the equality and perfectibility of humans. (See the 2002 book The Blank Slate by Steven Pinker, and the 2000 book Defenders of the Truth by Ullica Segerstrale.)

Shard Theory seems to follow in that tradition – although I suspect that it’s not so much due to political ideology, as to a quest for theoretical simplicity, and for not having to pay too much attention to the behavioral sciences in chasing AI alignment.

At the beginning of their main statement of Shard Theory, in their TL;DR, Pope and Turner include this bold statement: “Human values are not e.g. an incredibly complicated, genetically hard-coded set of drives, but rather sets of contextually activated heuristics which were shaped by and bootstrapped from crude, genetically hard-coded reward circuitry.”

Then they make three explicit neuroscientific assumptions. I’ll focus on Assumption 1 of Shard Theory: “Most of the circuits in the brain are learned from scratch, in the sense of being mostly randomly initialized and not mostly genetically hard-coded.”

This assumption is motivated by an argument explored in the auxiliary essay claiming that ‘human values and biases are inaccessible to the genome’. For example, Alex Turner argues “it seems intractable for the genome to scan a human brain and back out the “death” abstraction, which probably will not form at a predictable neural address. Therefore, we infer that the genome can’t directly make us afraid of death by e.g. specifying circuitry which detects when we think about death and then makes us afraid. In turn, this implies that there are a lot of values and biases which the genome cannot hardcode.”

This Shard Theory argument seems to reflect a fundamental misunderstanding of how evolution shapes genomes to produce phenotypic traits and complex adaptations. The genome never needs to ‘scan’ an adaptation and figure out how to reverse-engineer it back into genes. The genetic variants simply build a slightly new phenotypic variant of an adaptation, and if it works better than existing variants, then the genes that built it will tend to propagate through the population. The flow of design information is always from genes to phenotypes, even if the flow of selection pressures is back from phenotypes to genes. This one-way flow of information from DNA to RNA to proteins to adaptations has been called the ‘Central Dogma of molecular biology’, and it still holds largely true (the recent hype about epigenetics notwithstanding).

Shard Theory implies that biology has no mechanism to ‘scan’ the design of fully-mature, complex adaptations back into the genome, and therefore there’s no way for the genome to code for fully-mature, complex adaptations. If we take that argument at face value, then there’s no mechanism for the genome to ‘scan’ the design of a human spine, heart, hormone, antibody, cochlea, or retina, and there would be no way for evolution or genes to influence the design of the human body, physiology, or sensory organs. Evolution would grind to a halt – not just at the level of human values, but at the level of all complex adaptations in all species that have ever evolved.

As we will see, this idea that ‘human values and biases are inaccessible to the genome’ is empirically incorrect.

A behavior genetic critique of Shard Theory

In future essays, I plan to address the ways that Shard Theory, as presently conceived, is inconsistent with findings from several other research areas: (1) evolutionary biology models of how complex adaptations evolve, (2) animal behavior models of how nervous systems evolved to act in alignment with fitness interests, (3) behaviorist learning models of how reinforcement learning and reward systems operate in animals and humans, and (4) evolutionary psychology models of human motivations, emotions, preferences, morals, mental disorders, and personality traits.

For now, I want to focus on some conflicts between Shard Theory and behavior genetics research. As mentioned above, Shard Theory adopts a relatively ‘Blank Slate’ view of human values, positing that we inherit only a few simple, crude values related to midbrain reward circuitry, which are presumably universal across humans, and all other values are scaffolded and constructed on top of those.

However, behavior genetics research over the last several decades has shown that most human values that differ across people, and that can be measured reliably – including some quite abstract values associated with political, religious, and moral ideology – are moderately heritable. Moreover, many of these values show relatively little influence from ‘shared family environment’, which includes all of the opportunities and experiences shared by children growing up in the same household and culture. This means that genetic variants influence the formation of human values, that genetic differences between people explain a significant proportion of the differences in their adult values, and that family environment explains a lot less about differences in human values than we might have thought. This research is based on convergent findings using diverse methods such as twin studies, adoption studies, extended twin family designs, complex segregation analysis, and genome-wide association studies (GWAS). All of these behavior genetic observations are inconsistent with Shard Theory, particularly its Assumption 1.

Behavior genetics was launched in 1869 when Sir Francis Galton published his book Hereditary Genius, which proposed some empirical methods for studying the inheritance of high levels of human intelligence. A decade earlier, Galton’s cousin Charles Darwin had published the theory of evolution by natural selection, which focused on the interplay of heritable genetic variance and evolutionary selection pressures. Galton was interested in how scientists might analyze heritable genetic variance in human mental traits such as intelligence, personality, and altruism. He understood that Nature and Nurture interact in very complicated ways to produce species-typical human universals. However, he also understood that it was an open question how much variation in Nature versus variation in Nurture contributed to individual differences in each trait.

Note that behavior genetics was always about explaining the factors that influence statistical variation in quantitative traits, not about explaining the causal, mechanistic development of traits. This point is often misunderstood by modern critics of behavior genetics who claim ‘every trait is an inextricable combination of Nature and Nurture, so there’s no point in trying to partition their influence.’ The mapping from genotype (the whole set of genes in an organism) to phenotype (the whole set of body, brain, and behavioral traits in an organism) is, indeed, extremely complicated and remains poorly understood. However, behavior genetics doesn’t need to understand the whole mapping; it can trace how genetic variants influence phenotypic trait variants using empirical methods such as twin, adoption, and GWAS studies.

In modern behavior genetics, the influence of genetic variants on traits is indexed by a metric called heritability, which can range from 0 (meaning genetic variants have no influence on individual differences in a phenotypic trait) to 1 (meaning genetic variants explain 100% of individual differences in a phenotypic trait). So-called ‘narrow-sense heritability’ includes only additive genetic effects due to the average effects of alleles; additive genetic effects are most important for predicting responses to evolutionary selection pressures – whether in the wild or in artificial selective breeding of domesticated species. ‘Broad-sense heritability’ includes additive effects plus dominant and epistatic genetic effects. For most behavioral traits, additive effects are by far the most important, so broad-sense heritability is usually only a little higher than narrow-sense heritability.
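To make these two definitions concrete, here is a minimal Python sketch that computes narrow-sense and broad-sense heritability from a set of variance components. The function name and the example values are hypothetical, chosen only for illustration; they are not estimates from any study. The sketch also assumes that the components are independent, so that total phenotypic variance is just their sum.

```python
def heritabilities(v_additive, v_dominance, v_epistatic, v_environment):
    """Return (narrow-sense h2, broad-sense H2) from variance components.

    Simplifying assumption: the components are independent, so total
    phenotypic variance is their sum (no gene-environment covariance
    or interaction terms).
    """
    v_genetic = v_additive + v_dominance + v_epistatic
    v_phenotypic = v_genetic + v_environment
    if v_phenotypic <= 0:
        raise ValueError("total phenotypic variance must be positive")
    h2_narrow = v_additive / v_phenotypic  # additive effects only
    h2_broad = v_genetic / v_phenotypic    # all genetic effects
    return h2_narrow, h2_broad


# Hypothetical variance components (illustrative only).
h2, H2 = heritabilities(v_additive=40.0, v_dominance=4.0,
                        v_epistatic=1.0, v_environment=55.0)
print(f"narrow-sense h2 = {h2:.2f}, broad-sense H2 = {H2:.2f}")
```

With these made-up numbers, broad-sense heritability comes out only slightly above narrow-sense heritability, which is the pattern described above for most behavioral traits.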

The most important result from behavior genetics is that all human behavioral traits that differ across people, and that can be measured reliably, are heritable to some degree – and often to a surprisingly high degree. This is sometimes called the First Law of Behavior Genetics – not because it’s some kind of natural law that all behavioral traits must be heritable, but because the last 150 years of research has found no replicable exceptions to this empirical generalization. Some behavioral traits such as general intelligence show very high heritability – over 0.70 – in adults, which is about as heritable as human height. (For a good recent introduction to the ‘Top 10 replicated findings from behavior genetics’, see this paper.)

Does anybody really believe that values are heritable?

To people who accept a Blank Slate view of human nature, it might seem obvious that human values, preferences, motivations, and moral judgments are instilled by family, culture, media, and institutions – and the idea that genes could influence values might sound absurd. Conversely, to people familiar with behavior genetics, who know that all psychological traits are somewhat heritable, it might seem obvious that human values, like other psychological traits, will be somewhat heritable. It’s unclear what proportion of people lean towards the Blank Slate view of human values, versus the ‘hereditarian’ view that values can be heritable.

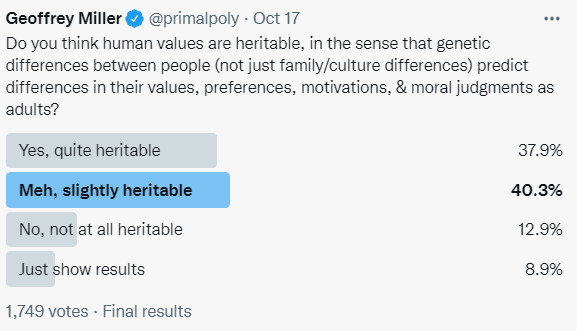

As a reality check, I ran this Twitter poll on Oct 17, 2022, with the results shown in this screenshot:

I was surprised that so many people took a slightly or strongly hereditarian view of values. Maybe the idea isn’t as crazy as it might seem at first glance. However, this poll merely illustrates that there is real variation in people’s views about this. It should not be taken too seriously as data, because it is just one informal question on social media, answered by a highly non-random sample. Only about 1.4% of my followers (1,749 out of 124,600) responded to this poll (which is a fairly normal response rate). My typical follower is an American male who’s politically centrist, conservative, or libertarian, and probably has a somewhat more hereditarian view of human nature than average. The poll’s main relevance here is in showing that a lot of people (not just me) believe that values can be heritable.

Human traits in general are heritable

A 2015 meta-analysis of human twin studies analyzed 17,804 traits from 2,748 papers including over 14 million twin pairs. These included mostly behavioral traits (e.g. psychiatric conditions, cognitive abilities, activities, social interactions, social values), and physiological traits (e.g. metabolic, neurological, cardiovascular, and endocrine traits). Across all traits, average heritability was .49, and shared family environment (e.g. parenting, upbringing, local culture) typically had negligible effects on the traits. For 69% of traits, heritability seemed due solely to additive genetic variation, with no influence of dominance or epistatic genetic variation.
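For readers unfamiliar with how twin data yield such numbers, here is a rough Python sketch of Falconer’s formula, a classic back-of-the-envelope estimator that compares trait correlations in identical (MZ) and fraternal (DZ) twin pairs. This is not the model-fitting procedure used in the meta-analysis; it is a simplified stand-in that assumes purely additive genetic effects, equal environments for both twin types, and no assortative mating. The function name and the twin correlations below are hypothetical.

```python
def falconer_estimates(r_mz, r_dz):
    """Estimate variance shares from twin correlations (Falconer's formula).

    r_mz: trait correlation between identical twins
    r_dz: trait correlation between fraternal twins
    Assumes additive genetic effects and equal environments.
    """
    heritability = 2.0 * (r_mz - r_dz)  # additive genetic share
    shared_env = 2.0 * r_dz - r_mz      # shared family environment share
    nonshared_env = 1.0 - r_mz          # unique environment plus error
    return {
        "heritability": heritability,
        "shared_env": shared_env,
        "nonshared_env": nonshared_env,
    }


# Hypothetical correlations in which MZ twins are exactly twice as
# similar as DZ twins, the pattern expected under purely additive effects.
est = falconer_estimates(r_mz=0.50, r_dz=0.25)
print(est)
```

When the MZ correlation is about twice the DZ correlation, as in this made-up example, the estimated shared-environment share is zero and the three shares sum to one.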

Heritability of human traits is generally caused by many genes that each have very small, roughly additive effects, rather than by a few genes that have big effects (see this review). Thus, to predict individual values for a given trait, molecular behavior genetics studies generally aggregate the effects of thousands of DNA variants into a polygenic score. In short, each trait is influenced by many genes. But also, each gene influences many traits (this is called pleiotropy). So, there is a complex genetic architecture that maps from many genetic variants onto many phenotypic traits, and this can be explored using multivariate behavior genetics methods that track genetic correlations between traits. (Elucidating the genetic architecture of human values would be enormously useful for AI alignment, in my opinion.)
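As a toy illustration of what ‘aggregating the effects of many DNA variants’ means, a polygenic score can be sketched as a weighted sum of allele counts. Everything in this Python example is hypothetical: the function name, the five variants, and their effect sizes are invented for illustration, and real scores use thousands of variants with weights estimated from GWAS.

```python
def polygenic_score(allele_counts, effect_sizes):
    """Weighted sum of allele counts across variants.

    allele_counts: copies of the effect allele carried at each variant (0, 1, or 2)
    effect_sizes: estimated per-allele effect of each variant on the trait
    """
    if len(allele_counts) != len(effect_sizes):
        raise ValueError("need one effect size per variant")
    return sum(count * beta for count, beta in zip(allele_counts, effect_sizes))


# Hypothetical per-allele effects for five variants (illustrative only).
effect_sizes = [0.02, -0.01, 0.03, 0.01, -0.02]

# Hypothetical genotypes for two people at those five variants.
person_a = [2, 0, 1, 1, 0]
person_b = [0, 2, 0, 1, 2]

score_a = polygenic_score(person_a, effect_sizes)
score_b = polygenic_score(person_b, effect_sizes)
print(f"person A: {score_a:+.2f}, person B: {score_b:+.2f}")
```

No single variant matters much here; the difference between the two people’s scores emerges only from summing many small effects, which is the sense in which such traits are ‘polygenic’.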

Human values are heritable

The key point here, in relation to Shard Theory, is that ‘all human behavioral traits’ being heritable includes ‘all human values that differ across people’. Over the last few decades, behavior geneticists have expanded their focus from studying classic traits, such as general intelligence and mental disorders, to explicitly studying the heritability of human values, and values-adjacent traits. So far, behavior geneticists have found mild to moderate heritability for a wide range of values-related traits, including the following:

- Food preferences are heritable, and they are not just influenced by genes that predict basic taste or smell functions. Genes influence tastes for specific food categories such as vegetables, fruit, starchy foods, meat/fish, dairy, and snacks. Different genes underlie meat preferences in men versus women. Food fussiness and food neophobia are both heritable in kids, and reflect a common genetic etiology. Obesity, reflecting a high reward-sensitivity for food, is about 45% heritable.

- Mate preferences are somewhat heritable, including rated importance of key traits in potential partners, and preference for partner height. These heritable mate preferences can lead to positive genetic correlations between the preferences and the actual traits preferred, as in this study of height, intelligence, creativity, exciting personality, and religiosity.

- Sexual values, reinforcers, and reward systems are heritable, including sexual orientation, affectionate communication, frequency of female orgasm, extrapair mating (infidelity), sexual jealousy, and sexual coerciveness.

- Parenting behaviors and values are heritable, according to a meta-analysis of 56 studies. Also, the shared family environment created by parents when raising their kids has many heritable components (according to studies on the ‘heritability of the environment’ and ‘the Nature of Nurture’).

- Economic values and consumer preferences are heritable, including consumer decision heuristics, vocational interests, preferences for self-employment, entrepreneurship, delay discounting, economic policy preferences, investment biases, socio-economic status, and lifetime earnings.

- Moral values are heritable, including moral intuitions, cognitive empathy, justice sensitivity, prosociality, self-control, attitudes towards dishonesty, and vegetarianism.

- Immoral behaviors and values are also heritable, including violent crime, sexual coercion, white-collar crime, and juvenile delinquency.

- Political values are about 40% heritable; see 2021 review here; these heritable political values include conservatism, liberalism, social dominance orientation, political engagement, political trust, political interest, political sophistication, military service, foreign policy preferences, civic engagement, and voter turnout.

- Religious values are heritable, including overall religiosity, existential certainty, obedience to traditional authority, and apostasy.

In addition, the Big Five personality traits are moderately heritable (about 40%) according to this 2015 meta-analysis of 134 studies. Each personality trait is centered around some latent values that represent how rewarding and reinforcing various kinds of experiences are. For example, people higher in Extraversion value social interaction and energetic activity more, people higher in Openness value new experiences and creative exploration more, people higher in Agreeableness value friendliness and compassion more, people higher in Conscientiousness value efficiency and organization more, and people higher in Neuroticism value safety and risk-aversion more. Each of these personality traits is heritable, so these values are also heritable. In fact, personality traits might be central to the genetic architecture of human values.

Moreover, common mental disorders, which are all heritable, can be viewed as embodying different values. Depression reflects low reward sensitivity and disengagement from normally reinforcing behaviors. Anxiety disorders reflect heightened risk-aversion, loss aversion, and hyper-sensitivity to threatening stimuli; these concerns can be quite specific (e.g. social anxiety disorder vs. specific phobias vs. panic disorder). The negative symptoms of schizophrenia reflect reduced reward-sensitivity to social interaction (asociality), speech (alogia), pleasure (anhedonia), and motivation (avolition). The ‘Dark Triad’ personality traits (Machiavellianism, Narcissism, Psychopathy) reflect a higher value placed on personal status-seeking and short-term mating, and a lower value placed on other people’s suffering. A 2010 review paper showed that heritabilities of psychiatric ‘diseases’ (such as schizophrenia or depression) that were assumed to develop ‘involuntarily’ are about the same as heritabilities of ‘behavioral disorders’ (such as drug addiction or anorexia) that were assumed to reflect individual choices and values.

Specific drug dependencies and addictions are all heritable, reflecting the differential rewards that psychoactive chemicals have in different brains. Genetic influences have been especially well-studied in alcoholism, cannabis use, opiate addiction, cocaine addiction, and nicotine addiction. Other kinds of ‘behavioral disorders’ also show heritability, including gambling, compulsive Internet use, and sugar addiction – and each reflects a genetic modulation of the relevant reward/reinforcement systems that govern responses to these experiences.

Heritability for behavioral traits tends to increase, not decrease, during lifespan development

Shard Theory implies that genes shape human brains mostly before birth, setting up the basic limbic reinforcement system, and then Nurture takes over, such that heritability should decrease from birth to adulthood. This is exactly the opposite of what we typically see in longitudinal behavior genetic studies that compare heritabilities across different ages. Often, heritabilities for behavioral traits increase rather than decrease as people mature from birth to adulthood. For example, the heritability of general intelligence increases gradually from early childhood through young adulthood, and genes, rather than shared family environment, explain most of the continuity in intelligence across ages. A 2013 meta-analysis confirmed increasing heritability of intelligence between ages 6 months and 18 years. A 2014 review observed that heritability of intelligence is about 20% in infancy, but about 80% in adulthood. This increased heritability with age has been called ‘the Wilson Effect’ (after its discoverer Ronald Wilson), and it is typically accompanied by a decrease in the effect of shared family environment.

Increasing heritability with age is not restricted to intelligence. This study found increasing heritability of prosocial behavior in children from ages 2 through 7, and decreasing effects of shared family environment. Personality traits show relatively stable genetic influences across age, with small increases in genetic stability offsetting small decreases in heritability, according to this meta-analysis of 24 studies including 21,057 sibling pairs. A frequent finding in longitudinal behavior genetics is that the stability of traits across life is better explained by the stability of genes across life, than by the persistence of early experiences, shared family environment effects, or contextually reinforced values.

More generally, note that heritability does not just influence ‘innate traits’ that are present at birth. Heritability also influences traits that emerge with key developmental milestones such as social-cognitive maturation in middle childhood, sexual maturation in adolescence, political and religious maturation in young adulthood, and parenting behaviors after reproduction. Consider some of the findings in the previous section, which are revealed only after individuals reach certain life stages. The heritability of mate preferences, sexual orientation, orgasm rate, and sexual jealousy is not typically manifest until puberty, so these traits are not ‘innate’ in the sense of ‘present at birth’. The heritability of voter behavior is not manifest until people are old enough to vote. The heritability of investment biases is not manifest until people acquire their own money to invest. The heritability of parenting behaviors is not manifest until people have kids of their own. It seems difficult to reconcile the heritability of so many late-developing values with the Shard Theory assumption that genes influence only a few crude, simple, reinforcement systems that are present at birth.

Human Connectome Project studies show that genetic influences on brain structure are not restricted to ‘subcortical hardwiring’

Shard Theory seems to view genetic influences on human values as being restricted mostly to the subcortical limbic system. Recall that Assumption 1 of Shard Theory was that “The cortex is basically (locally) randomly initialized.” Recent studies in neurogenetics show that this is not accurate. Genetically informative studies in the Human Connectome Project show pervasive heritability in neural structure and function across all brain areas, not just limbic areas. A recent review shows that genetic influences are quite strong for global white-matter microstructure and anatomical connectivity between brain regions; these effects pervade the entire neocortex, not just the limbic system. Note that these results based on brain imaging include not just the classic twin design, but also genome-wide association studies, and studies of gene expression using transcriptional data. Another study showed that genes, rather than shared family environment, played a more important role in shaping connectivity patterns among 39 cortical regions. Genetic influences on the brain’s connectome are often modulated by age and sex – in contrast to Shard Theory’s implicit model that all humans, of all ages, and both sexes, share the same subcortical hardwiring. Another study showed high heritability for how the brain’s connectome transitions across states through time – in contrast to Shard Theory’s claim that genes mostly determine the static ‘hardwiring’ of the brain.

It should not be surprising that genetic variants influence all areas of the human brain, and the values that they embody. Analysis of the Allen Human Brain Atlas, a map of gene expression patterns throughout the human brain, shows that over 80% of genes are expressed in at least one of 190 brain structures studied. Neurogenetics research is making rapid progress on characterizing the gene regulatory network that governs human brain development – including neocortex. This is also helping genome-wide association studies to discover and analyze the millions of quantitative trait loci (minor genetic variants) that influence individual differences in brain development. Integration of the Human Connectome Project and the Allen Human Brain Atlas reveals pervasive heritability for myelination patterns in human neocortex – which directly contradicts Shard Theory’s Assumption 1 that “Most of the circuits in the brain are learned from scratch, in the sense of being mostly randomly initialized and not mostly genetically hard-coded.”

Behavioral traits and values are also heritable in non-human animals

A 2019 meta-analysis examined 476 heritability estimates in 101 publications across many species, and across a wide range of 11 behavioral traits – including activity, aggression, boldness, communication, exploration, foraging, mating, migration, parenting, social interaction, and other behaviors. Overall average heritability of behavior was 0.24. (This may sound low, but remember that empirical heritability estimates are limited by the measurement accuracy for traits, and many behavioral traits in animals can be measured with only modest reliability and validity.) Crucially, heritability was positive for every type of behavioral trait, was similar for domestic and wild species, was similar for field and lab measures of behavior, and was just as high for vertebrates as for invertebrates. Also, average heritability of behavioral traits was just as high as average heritability of physiological traits (e.g. blood pressure, hormone levels) and life history traits (e.g. age at sexual maturation, life span), and was only a bit lower than the heritability for morphological traits (e.g. height, limb length).
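The point about measurement accuracy can be made concrete with a small Python sketch. Under classical test theory, if measurement error adds variance that is unrelated to genotype, then the observed heritability is roughly the true heritability multiplied by the reliability of the measure. The function name and the numbers below are hypothetical illustrations of that relationship, not estimates taken from the meta-analysis.

```python
def observed_heritability(true_h2, reliability):
    """Heritability expected when a trait is measured with error.

    Assumes measurement error is independent of genotype, so it inflates
    phenotypic variance without adding any genetic variance:
        observed h2 = true h2 * reliability
    """
    if not 0.0 <= true_h2 <= 1.0:
        raise ValueError("true_h2 must be between 0 and 1")
    if not 0.0 < reliability <= 1.0:
        raise ValueError("reliability must be in (0, 1]")
    return true_h2 * reliability


# Hypothetical example: a trait with true heritability 0.40,
# measured with a reliability of 0.60.
h2_obs = observed_heritability(true_h2=0.40, reliability=0.60)
print(f"observed heritability = {h2_obs:.2f}")
```

Under these assumptions, a noisy behavioral measure can only understate the underlying heritability, never overstate it, which is why modest empirical estimates for hard-to-measure traits are best read as lower bounds.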

Note that most of these behavioral traits in animals involve ‘values’, broadly construed as reinforcement or reward systems that shape the development of adaptive behavior. For example, ‘activity’ reflects how rewarding it is to move around a lot; ‘aggression’ reflects how rewarding it is to attack others; ‘boldness’ reflects how rewarding it is to track and investigate dangerous predators; ‘exploratory behavior’ reflects how rewarding it is to investigate novel environments; ‘foraging’ reflects how rewarding it is to find, handle, and consume food; ‘mating’ reflects how rewarding it is to do mate search, courtship, and copulation; ‘parental effort’ reflects how rewarding it is to take care of offspring; and ‘social behavior’ reflects how rewarding it is to groom others or to hang around in groups.

In other words, every type of value that can vary across individual animals, and that can be reliably measured by animal behavior researchers, seems to show positive heritability, and heritability of values is just as high in animals with complex central nervous systems (vertebrates) as in animals with simpler nervous systems (invertebrates).

So what if human values are heritable?

You might be thinking, OK, all this behavior genetics stuff is fine, and it challenges a naïve Blank Slate model of human nature, but what difference does it really make for Shard Theory, or for AI alignment in general?

Well, Shard Theory certainly thinks it matters. Assumption 1 in Shard Theory is presented as foundational to the whole project (although I’m not sure it really is). Shard Theory repeatedly talks about human values being built up from just a few, crude, simple, innate, species-typical reinforcement systems centered in the midbrain (in contrast to the rich set of many, evolved, adaptive, domain-specific psychological adaptations posited by evolutionary psychology). Shard Theory seems to allow no role for genes influencing value formation after birth, even at crucial life stages such as middle childhood, sexual maturation, and parenting. More generally, Shard Theory seems to underplay the genetic and phenotypic diversity of human values across individuals, and seems to imply that humans have only a few basic reinforcement systems in common, and that all divergence of values across individuals reflects differences in family, socialization, cultural, and media exposure.

Thus, I think that Shard Theory has some good insights and some promise as a research paradigm, but I think it needs some updating in terms of its model of human evolution, genetics, development, neuroscience, psychology, and values.

Why does the heritability of human values matter for AI alignment?

Apart from Shard Theory, why does it matter for AI alignment if human values are heritable? Well, I think it might matter in several ways.

First, polygenic scores for value prediction. In the near future, human scientists and AI systems will be able to predict the values of an individual, to some degree, just from their genotype. As GWAS research discovers thousands of new genetic loci that influence particular human values, it will become possible to develop polygenic scores that predict someone’s values given their complete sequenced genome – even without knowing anything else about them. Polygenic scores to predict intelligence are already improving at a rapid rate. Polygenic value prediction would require large sample sizes of sequenced genomes linked to individuals’ preferences and values (whether self-reported or inferred behaviorally from digital records), but it is entirely possible given current behavior genetics methods. As the cost of whole-genome sequencing falls below $1,000, and the medical benefits of sequencing rise, we can expect hundreds of millions of people to get genotyped in the next decade or two. AI systems could request free access to individual genomic data as part of standard terms and conditions, or could offer discounts to users willing to share their genomic data in order to improve the accuracy of their recommendation engines and interaction styles. We should expect that advanced AI systems will typically have access to the complete genomes of the people they interact with most often – and will be able to use polygenic scores to translate those genomes into predicted value profiles.
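As a minimal sketch of what a polygenic score is computationally: it is a weighted sum, over genetic variants, of how many copies of the effect allele a person carries (0, 1, or 2) times a per-allele effect size estimated by GWAS. The variant names and effect sizes below are hypothetical placeholders invented for illustration, not real loci or real estimates for any value or trait.

```python
from typing import Dict


def polygenic_score(dosages: Dict[str, int], effect_sizes: Dict[str, float]) -> float:
    """Weighted sum of effect-allele counts over the variants present in both inputs."""
    score = 0.0
    for variant, beta in effect_sizes.items():
        dosage = dosages.get(variant)
        if dosage is None:
            continue  # variant not genotyped for this person; skip it
        if dosage not in (0, 1, 2):
            raise ValueError(f"dosage for {variant} must be 0, 1, or 2")
        score += beta * dosage
    return score


# Hypothetical per-allele effect sizes (illustration only).
HYPOTHETICAL_EFFECTS = {"variant_a": 0.02, "variant_b": -0.01, "variant_c": 0.005}

# Hypothetical genotype: copies of the effect allele at each variant.
person = {"variant_a": 2, "variant_b": 1, "variant_c": 0}

if __name__ == "__main__":
    print(f"polygenic score: {polygenic_score(person, HYPOTHETICAL_EFFECTS):.3f}")
```

Real scores sum over thousands to millions of variants and are usually standardized against a reference population, but the core operation is this weighted sum.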

Second, familial aggregation of values. Heritability means that values of one individual can be predicted somewhat by the values of their close genetic relatives. For example, learning about the values of one identical twin might be highly predictive of the values of the other identical twin – even if they were separated at birth and raised in different families and cultures. This means that an AI system trying to understand the values of one individual could start from the known values of their parents, siblings, and other genetic relatives, as a sort of maximum-likelihood familial Bayesian prior. An AI system could also take into account developmental behavior genetic findings and life-stage effects – for example, an individual’s values at age 40 after they have kids might be more similar in some ways to those of their own parents at age 40, than to themselves as they were at age 20.
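The idea of a familial prior can be sketched with a deliberately simple model. Assume (as a simplification, not an established result for any particular value) purely additive genetic effects and no shared-environment effects. Then the expected correlation between two relatives on a trait is their coefficient of relatedness times the heritability, and the best linear guess about one person regresses their relative's score toward the population mean by that correlation. The function name and the example numbers are hypothetical.

```python
# Standard coefficients of genetic relatedness.
RELATEDNESS = {
    "identical_twin": 1.0,
    "parent": 0.5,
    "full_sibling": 0.5,
    "half_sibling": 0.25,
    "first_cousin": 0.125,
}


def familial_prior(relative_score: float, relationship: str, h2: float,
                   population_mean: float = 0.0) -> float:
    """Expected trait score for a person, given one relative's score.

    Assumes additive genetics and no shared environment, so the expected
    relative-to-relative correlation is relatedness * heritability.
    """
    if not 0.0 <= h2 <= 1.0:
        raise ValueError("h2 must be in [0, 1]")
    expected_correlation = RELATEDNESS[relationship] * h2
    return population_mean + expected_correlation * (relative_score - population_mean)


if __name__ == "__main__":
    # A relative scoring 2 standard deviations above the mean, with assumed h2 = 0.5.
    for relationship in RELATEDNESS:
        guess = familial_prior(2.0, relationship, h2=0.5)
        print(f"{relationship:>15}: prior mean = {guess:.3f} SD above average")
```

The prior is most informative for identical twins and fades quickly with genetic distance, which is why it would only ever be a starting point to be updated with direct evidence about the individual.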

Third, the genetic architecture of values. For a given individual, their values in one domain can sometimes be predicted by values in other domains. Values are not orthogonal to each other; they are shaped by genetic correlations across values. As behavior genetics researchers develop a more complete genetic architecture of values, AI systems could potentially use this to infer a person’s unknown values from their known values. For example, their consumer preferences might predict their political values, or their sexual values might predict their religious values.

Fourth, the authenticity of values. Given information about an individual’s genome, the values of their close family members, and the genetic architecture of values, an AI system could infer a fairly complete expected profile of values for that individual, at each expected life-stage. What if the AI discovers that there’s a big mismatch between an individual’s ‘genetic prior’ (their values as predicted from genomic and family information), and their current stated or revealed values? That might be evidence that the individual has heroically overcome their genetic programming through education, enlightenment, and self-actualization. Or it might be evidence that the individual has been manipulated by a lifetime of indoctrination, mis-education, and propaganda that has alienated them from their instinctive preferences and morals. The heritability of values raises profound questions about the authenticity of human values in our credentialist, careerist, consumerist, media-obsessed civilization. When AI systems are trying to align with our values, but our heritable values don’t align with our current stated cultural values (e.g. this month’s fashionable virtue signals), which should the AI weigh most heavily?

Conclusion

If we’re serious about AI alignment with human values, we need to get more serious about integrating empirical evidence about the origins, nature, and variety of human values. One recent attempt to ground AI alignment in human values – Shard Theory – has some merits and some interesting potential. However, this potential is undermined by Shard Theory’s empirical commitments to a fairly Blank Slate view of human value formation. That view is inconsistent with a large volume of research in behavior genetics on the heritability of many human values. By taking genetic influences on human values more seriously, we might be able to improve Shard Theory and other approaches to AI safety, and we might identify new issues in AI alignment such as polygenic scores for value prediction, familial aggregation of values, and the genetic architecture of values. Finally, a hereditarian perspective raises the thorny issue of which of our values are most authentic and most worthy of being aligned with AI systems – the ones our genes are nudging us towards, the ones our parents taught us, the ones that society indoctrinates us into, or the ones that we ‘freely choose’ (whatever that means).

Appendix 1: Epistemic status of my arguments

I’m moderately confident that some key assumptions of Shard Theory as currently presented are not empirically consistent with the findings of behavior genetics, but I have very low confidence about whether or not Shard Theory can be updated to become consistent, and I have no idea yet what that update would look like.

As a newbie AI alignment researcher, I’ve probably made some errors in my understanding of the more AI-oriented elements of Shard Theory. I worked a fair amount on neural networks, genetic algorithms, autonomous agents, and machine learning from the late 1980s through the mid-1990s, but I’m still getting up to date with more recent work on deep learning, reinforcement learning, and technical alignment research.

As an evolutionary psychology professor, I’m moderately familiar with behavior genetics methods and findings, and I’ve published several papers using behavior genetics methods. I’ve been thinking about behavior genetics issues since the late 1990s, especially in relation to human intelligence. I taught a course on behavior genetics in 2004 (syllabus here). I did a sabbatical in 2006 at the Genetic Epidemiology Center at QIMR in Brisbane, Australia, run by Nick Martin. We published two behavior genetics studies, one in 2011 on the heritability of female orgasm rates, and one in 2012 on the heritability of talking and texting on smartphones. I did a 2007 meta-analysis of brain imaging data to estimate the coefficient of additive genetic variance in brain size. I also published a couple of papers in 2008 on genetic admixture studies, such as this. However, I’m not a full-time behavior genetics researcher, and I’m not actively involved in the large international genetics consortia that dominate current behavior genetics studies.

Overall, I’m highly confident in the key lessons of behavior genetics (e.g. all psychological traits are heritable, including many values; shared family environment has surprisingly small effects on many traits). I’m moderately confident in the results from meta-analyses and large-scale international consortia studies. I’m less confident in specific heritability estimates from individual papers that haven’t yet been replicated.

63 comments

Comments sorted by top scores.

comment by Steven Byrnes (steve2152) · 2022-10-21T01:56:33.764Z · LW(p) · GW(p)

I wonder what you think of my post “Learning from scratch” in the Brain [LW · GW]? I feel like the shard theory discussions you cite were significantly based off that post of mine (I hope I’m not tooting my own horn—someone can correct me if I’m mis-describing the intellectual history here). If so, I think there’s a game of telephone, and things are maybe getting lost in translation.

For what it’s worth, I find this an odd post because I’m quite familiar with twin studies, I find them compelling, and I frequently bring them up in conversation. (They didn’t come up in that particular post but I did briefly mention them indirectly here [LW · GW] in a kinda weird context.)

See in particular:

- Section 2.3.1: Learning-from-scratch is NOT “blank slate” [LW · GW];

- Section 2.3.2: Learning from scratch is NOT “nurture-over-nature” [LW · GW].

Onto more specific things:

Heritability for behavioral traits tends to increase, not decrease, during lifespan development

If we think about the human brain (loosely) as doing model-based reinforcement learning, and if different people have different genetically-determined reward functions, then one might expect that people with very similar reward functions tend to find their way into similar ways of being / relating / living / thinking / etc.—namely, the ways of being that best tickle their innate reward function. But that process might take time.

For example, if Alice has an innate reward function that predisposes her to be sympathetic to the idea of authoritarianism [important open question that I’m working on: exactly wtf kind of reward function might do that??], but Alice has spent her sheltered childhood having never been exposed to pro-authoritarian arguments, well then she’s not going to be a pro-authoritarian child! But by adulthood, she will have met lots of people, read lots of things, lived in different places, etc., so she’s much more likely to have come across pro-authoritarian arguments, and those arguments would have really resonated with her, thanks to her genetically-determined reward function.

So I find the increase in heritability with age to be unsurprising.

Recall that Assumption 1 of Shard Theory was that “The cortex is basically (locally) randomly initialized.” Recent studies in neurogenetics show that this is not accurate. Genetically informative studies in the Human Connectome Project show pervasive heritability in neural structure and function across all brain areas, not just limbic areas.

The word “locally” in the first sentence is doing a lot of work here. Again see Section 2.3.1 [LW · GW]. AFAICT, the large-scale wiring diagram of the cortex is mostly or entirely innate, as are the various cytoarchitectural differences across the cortex (agranularity etc.). I think of this fact as roughly “a person’s genome sets them up with a bias to learn particular types of patterns, in particular parts of their cortex”. But they still have to learn those patterns, with a learning algorithm (I claim).

As an ML analogy, there’s a lot of large-scale structure in a randomly-initialized convolutional neural net. Layer N is connected to Layer N+1 but not Layer N+17, and nearby pixels are related by convolutions in a way that distant pixels are not, etc. But a randomly-initialized convolutional neural net is still “learning from scratch” by my definition.
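To make the analogy concrete, here is a small pure-Python sketch (my own illustration, not code from any of the posts discussed). It writes a 1D convolution out as an explicit weight matrix. The architecture fixes which connections exist and which weights are shared, while the random seed only changes the weight values.

```python
import random
from typing import List


def random_conv1d_matrix(input_size: int, kernel_size: int, seed: int) -> List[List[float]]:
    """Dense weight matrix equivalent to a randomly initialized 1D convolution.

    Row i holds the weights from every input position to output position i.
    """
    rng = random.Random(seed)
    kernel = [rng.gauss(0.0, 1.0) for _ in range(kernel_size)]  # random values
    output_size = input_size - kernel_size + 1
    matrix = [[0.0] * input_size for _ in range(output_size)]
    for out_idx in range(output_size):
        for offset, weight in enumerate(kernel):
            # Only nearby inputs connect, and the same kernel is reused at every position.
            matrix[out_idx][out_idx + offset] = weight
    return matrix


def connectivity_pattern(matrix: List[List[float]]) -> List[List[bool]]:
    """Which connections exist at all, ignoring their strengths."""
    return [[weight != 0.0 for weight in row] for row in matrix]


if __name__ == "__main__":
    net_a = random_conv1d_matrix(input_size=8, kernel_size=3, seed=0)
    net_b = random_conv1d_matrix(input_size=8, kernel_size=3, seed=1)
    print("same wiring diagram:", connectivity_pattern(net_a) == connectivity_pattern(net_b))
    print("same weight values: ", net_a == net_b)
```

Two networks built from different seeds share an identical wiring diagram but no learned content, which is the sense of "structured but learning from scratch" in the paragraph above.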

Shard Theory implies that genes shape human brains mostly before birth

I don’t speak for Shard Theory but I for one strongly believe that the innate reward function is different during different stages of life, as a result of development (not learning), e.g. sex drive goes up in puberty.

See the 2002 book The Blank Slate by Steven Pinker

FWIW, if memory serves, I have no complaints about that book outside of chapter 5. I have lots of complaints about chapter 5, as you might expect.

Pope and Turner include this bold statement: “Human values are not e.g. an incredibly complicated, genetically hard-coded set of drives, but rather sets of contextually activated heuristics which were shaped by and bootstrapped from crude, genetically hard-coded reward circuitry.”

My understanding (which I have repeatedly complained about) is that they are using “values” where I would use the word “desires”.

In that context, consider the statement: “I like Ghirardelli chocolate, and I like the Charles River, and I like my neighbor Dani, and I like [insert 5000 more things like that].” I think it’s perfectly obvious to everyone that there are not 5000 specific genes for my liking these 5000 particular things. There are probably (IMO) specific genes that contribute to why I like chocolate (i.e. genes related to taste), and genes that contribute to why I like the Charles River (i.e. genes related to my sense of aesthetics), etc. And there are also life experiences involved here; if I had had my first kiss at the Charles River, I would probably like it a bit more. Right?

I’m not exactly sure what the word “crude” is doing here. I don’t think I would have used that word. I think the hard-coded reward circuitry is rather complex and intricate in its own way. But it’s not as complex and intricate as our learned desires! I think describing our genetically hard-coded reward circuitry would take like maybe thousands of lines of pseudocode, whereas describing everything that an adult human likes / desires would take maybe millions or billions of lines. After all, we only have 25,000ish genes, but the brain has 100 trillion(ish) synapses.

“it seems intractable for the genome to scan a human brain and back out the “death” abstraction, which probably will not form at a predictable neural address. Therefore, we infer that the genome can’t directly make us afraid of death by e.g. specifying circuitry which detects when we think about death and then makes us afraid. In turn, this implies that there are a lot of values and biases which the genome cannot hardcode.”

I’m not sure I would have said it that way—see here [LW · GW] if you want to see me trying to articulate (what I think is) this same point that Quintin was trying to get across there.

Let’s consider Death as an abstract concept in your conscious awareness. When this abstract concept is invoked, it probably centrally involves particular neurons in your temporal lobe, and various other neurons in various other places. Which temporal-lobe neurons? It’s probably different neurons for different people. Sure, most people will have this Death concept in the same part of their temporal lobes, maybe even the same at a millimeter scale or something. But down to the individual neurons? Seems unlikely, right? After all, different people have pretty different conceptions of death. Some cultures might have two death concepts instead of one, for all I know. Some people (especially kids) don’t know that death is a thing in the first place, and therefore don’t have a concept for it at all. When the kid finally learns it, it’s going to get stored somewhere (really, multiple places), but I don’t think the destination in the temporal lobe is predetermined down to the level of individual neurons.

So, consider the possible story: “The abstract concept of Death is going to deterministically involve temporal lobe neurons 89642, and 976387, and (etc.) The genome will have a program that wires those particular neurons to the ventral-anterior & medial hypothalamus and PAG. And therefore, humans will be hardwired to be afraid of death.”

That’s an implausible story, right? As it happens, I don’t think humans are genetically hardwired to be afraid of death in the first place. But even if they were, I don’t think the mechanism could look like that.

That doesn’t necessarily mean there’s no possible mechanism by which humans could have a genetic disposition to be specifically afraid of death. It would just have to work in a more indirect way, presumably (IMO) involving learning algorithms in some way.

Shard Theory incorporates a relatively Blank Slate view about the origins of human values

I’m trying to think about how you wound up with this belief in the first place. Here’s a guess. I could be wrong.

One thing is, insofar as human learning is describable as model-based RL (yes it’s an oversimplification but I do think it’s a good starting point), the reward function is playing a huge role.

And in the context of AGI alignment, we the programmers get to design the reward function however we want.

We can even give ourselves a reward button, and press whenever we feel like it.

If we are magically perfectly skillful with the reward function / button, e.g. we have magical perfect interpretability and give reward whenever the AGI’s innermost thoughts and plans line up with what we want it to be thinking and planning, then I think we would eventually get an aligned AGI.

A point that shard theory posts sometimes bring up is, once we get to this point, and the AGI is also super smart and capable, it’s at least plausible that we can just give the reward button to the AGI, or give the AGI write access to its reward function. Thanks to the instrumentally convergent goal-preservation drive, the AGI would try to use that newfound power for good, to make sure that it stayed aligned (and by assumption of super-competence, it would succeed).

Whether we buy that argument or not, I think maybe it can be misinterpreted as a blank slate-ish argument! After all, it involves saying that “early in life” we brainwash the AGI with our reward button, and “late in life” (after we’ve completely aligned it and then granted it access to its own reward function), the AGI will continue to adhere to the desires with which it was brainwashed as a child.

But you can see the enormous disanalogies with humans, right? Human parents are hampered in their ability to brainwash their children by not having direct access to their kids’ reward centers, and not having interpretability of their kids’ deepest thoughts which would be necessary to make good use of that anyway. Likewise, human adults are hampered in their ability to prevent their own values from drifting by not having write access to their own brainstems etc., and generally they aren’t even trying to prevent their own values from drifting anyway, and they wouldn’t know how to even if they could in principle.

(Again, maybe this is all totally unrelated to how you got the impression that Shard Theory is blank slate-ist, in which case you can ignore it!)

Replies from: geoffreymiller, steve2152, jacob_cannell↑ comment by geoffreymiller · 2022-10-21T02:41:06.669Z · LW(p) · GW(p)

Steven -- thanks very much for your long, thoughtful, and constructive comment. I really appreciate it, and it does help to clear up a few of my puzzlements about Shard Theory (but not all of them!).

Let me ruminate on your comment, and read your linked essays.

I have been thinking about how evolution can implement different kinds of neural architectures, with different degrees of specificity versus generality, ever since my first paper in 1989 on using genetic algorithms to evolve neural networks. Our 1994 paper on using genetic algorithms to evolve sensorimotor control systems for autonomous robots used a much more complex mapping from genotype to neural phenotype.

So, I think there are lots of open questions about exactly how much of our neural complexity is really 'hard wired' (a term I loathe). But my hunch is that a lot of our reward circuitry that tracks key 'fitness affordances' in the environment is relatively resistant to manipulation by environmental information -- not least, because other individuals would take advantage of any ways that they could rewire what we really want.

↑ comment by Steven Byrnes (steve2152) · 2023-02-03T13:09:00.688Z · LW(p) · GW(p)

Related, I think: I just posted the post Heritability, Behaviorism, and Within-Lifetime RL [LW · GW]

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2025-01-18T16:38:58.787Z · LW(p) · GW(p)

Update: also Heritability: Five Battles [LW · GW]. :)

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2025-01-18T16:44:23.794Z · LW(p) · GW(p)

IMO, I personally think heritability is basically not relevant towards nurture/nature debates, and agree with this:

Heritability is really just a ratio of variances. You’ll mislead yourself thinking about it any other way.

And this:

https://www.lesswrong.com/posts/YpsGjsfT93aCkRHPh/what-does-knowing-the-heritability-of-a-trait-tell-me-in#answers [LW(p) · GW(p)]
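The "ratio of variances" point quoted above can be illustrated with a tiny sketch. It uses the simplest textbook decomposition, in which phenotypic variance is genetic variance plus environmental variance, with no gene-environment covariance or interaction (an assumption made here for illustration). The variance numbers are hypothetical.

```python
def heritability(genetic_variance: float, environmental_variance: float) -> float:
    """Share of total phenotypic variance attributable to genetic variance.

    Assumes the two components simply add, with no covariance or interaction.
    """
    if genetic_variance < 0 or environmental_variance < 0:
        raise ValueError("variances must be non-negative")
    total = genetic_variance + environmental_variance
    if total == 0:
        raise ValueError("total variance must be positive")
    return genetic_variance / total


if __name__ == "__main__":
    # Same genetic variance, different amounts of environmental variation.
    for env_var in (0.25, 1.0, 3.0):
        print(f"Var(G)=1.0, Var(E)={env_var}: h2 = {heritability(1.0, env_var):.2f}")
```

Holding the genetic variance fixed, the heritability estimate moves just because the population's environments become more or less varied, which is why it describes a population in a setting rather than a fixed property of the trait.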

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2025-01-18T18:23:14.584Z · LW(p) · GW(p)

As discussed in my post [LW · GW], studies of heritability range between slightly-helpful and extremely-helpful if you’re interested in any of the following topics (among others):

- guessing someone’s likely adult traits (disease risk, personality, etc.) based on their family history and childhood environment

- assessing whether it’s plausible that some parenting or societal “intervention” (hugs and encouragement, getting divorced, imparting sage advice, parochial school, etc.) will systematically change what kind of adult the kid will grow into.

- calculating, using, and understanding polygenic scores

- trying to understand some outcome (schizophrenia, extroversion, intelligence, or whatever) by studying the genes that correlate with it

I think that those are enough to make heritability “relevant towards nurture/nature debates”, although I guess I don’t know exactly what you mean by that.

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2025-01-18T18:33:10.393Z · LW(p) · GW(p)

I was mostly focusing on interventions, so the second and third points in your linked post are most important.

And you mention that if you want to change yourself, heritability is essentially worthless, whereas parenting and social interventions are where heritability matters, but with big caveats that mean you need to be careful about applying insights from heritability.

So I successfully predicted 1 outcome, and failed to predict another outcome, which means that I have to weaken my thesis.

↑ comment by jacob_cannell · 2022-10-21T03:58:29.908Z · LW(p) · GW(p)

If we think about the human brain (loosely) as doing model-based reinforcement learning, and if different people have different genetically-determined reward functions,

To agree and expand on this - successful DL systems have a bunch of important hyperparameters, and many of these control balances between different learning sub-objectives and priors/regularizers. Any DL systems that use intrinsic motivation, and especially those that combine that with other paradigms like extrinsic reward reinforcement learning, tend to have a bunch of these hyperparams. The brain is driven by both complex intrinsic learning mechanisms (empowerment/curiosity, predictive learning, etc) and extrinsic reward reinforcement learning (pleasure, pain, hunger, thirst, sleep, etc), and so likely has many such hyperparams. The brain also seems to control learning schedules somewhat adaptively (which again is also important for SOTA DL systems) - and even perhaps per module to some extent (as brain regions tend to crystallize/myelinate in hierarchical processing order, starting with lower sensor/motor cortex and ending in upper cortex and PFC), which introduces even more hyperparams.

So absent other explanations, it seems pretty likely that humans vary across these hyperparams, which can have enormous effects on later development. High curiosity drive combined with delayed puberty/neoteny (with adapted learning rate schedules) is already a simple sufficient explanation for much of the variation in STEM-type abstract intelligence, and more specifically explains the 'jock vs nerd' phenomenon as different stable early vs late mating strategy niches.

As it happens, I don’t think humans are genetically hardwired to be afraid of death in the first place

Yeah pretty sure they aren't (or at least I wasn't, had to learn). But since death is a minimally empowered state, it's immediately and obviously evaluated as very low utility.

comment by Quintin Pope (quintin-pope) · 2022-10-24T22:25:52.358Z · LW(p) · GW(p)

Thank you for providing your feedback on what we've written so far. I'm a bit surprised that you interpret shard theory as supporting the blank slate position. In hindsight, we should have been more clear about this. I haven't studied behavior genetics very much, but my own rough prior is that genetics explain about 50% of a given trait's variance (values included).

Shard theory is mostly making a statement about the mechanisms by which genetics can influence values (that they must overcome / evade information inaccessibility issues). I don't think shard theory strongly predicts any specific level of heritability, though it probably rules out extreme levels of genetic determinism.

This Shard Theory argument seems to reflect a fundamental misunderstanding of how evolution shapes genomes to produce phenotypic traits and complex adaptations. The genome never needs to ‘scan’ an adaptation and figure out how to reverse-engineer it back into genes. The genetic variants simply build a slightly new phenotypic variant of an adaptation, and if it works better than existing variants, then the genes that built it will tend to propagate through the population. The flow of design information is always from genes to phenotypes, even if the flow of selection pressures is back from phenotypes to genes. This one-way flow of information from DNA to RNA to proteins to adaptations has been called the ‘Central Dogma of molecular biology’, and it still holds largely true (the recent hype about epigenetics notwithstanding).

Shard Theory implies that biology has no mechanism to ‘scan’ the design of fully-mature, complex adaptations back into the genome, and therefore there’s no way for the genome to code for fully-mature, complex adaptations. If we take that argument at face value, then there’s no mechanism for the genome to ‘scan’ the design of a human spine, heart, hormone, antibody, cochlea, or retina, and there would be no way for evolution or genes to influence the design of the human body, physiology, or sensory organs. Evolution would grind to a halt – not just at the level of human values, but at the level of all complex adaptations in all species that have ever evolved.

What we mean is that there are certain constraints on how a hardcoded circuit can interact with a learned world model which make it very difficult for the hardcoded circuit to exactly locate / interact with concepts within that world model. This imposes certain constraints on the types of values-shaping mechanisms that are available to the genome. It's conceptually no different (though much less rigorous) than using facts about chemistry to impose constraints on how a cell's internal molecular processes can work. Clearly, they do work. The question is, given what we know of constraints in the domain in question, how do they work? And how can we adapt similar mechanisms for our own purposes?

Shard Theory adopts a relatively ‘Blank Slate’ view of human values, positing that we inherit only a few simple, crude values related to midbrain reward circuitry, which are presumably universal across humans, and all other values are scaffolded and constructed on top of those.

- I'd note that simple reward circuitry can influence the formation of very complex values.

- Why would reward circuitry be constant across humans? Why would the values it induces be constant either?

- I think results such as the domestication of foxes imply a fair degree of variation in the genetically specified reward circuitry between individuals, otherwise they couldn't have selected for domestication. I expect similar results hold for humans.

Human values are heritable ...

- This is a neat summary of values-related heritability results. I'll look into these in more detail in future, so thank you for compiling these. However, the provided summaries are roughly in line with what I expect from these sorts of studies.

Shard Theory implies that genes shape human brains mostly before birth, setting up the basic limbic reinforcement system, and then Nurture takes over, such that heritability should decrease from birth to adulthood.

- I completely disagree. The reward system applies continuous pressure across your lifetime, so there's one straightforward mechanism for the genome to influence values developments after birth. There are other more sophisticated such mechanisms.

- E.g., Steve Byrnes describes short [LW · GW] and long [LW · GW] term predictors of low-level sensory experiences. Though the genome specifies which sensory experiences the predictor predicts, how the predictor does so is learned over a lifetime. This allows the genome to have "pointers" to certain parts of the learned world model, which can let genetically specified algorithms steer behavior even well after birth, as Steve outlines here [LW · GW].

- Also, you see a similar pattern in current RL agents. A freshly initialized agent acts completely randomly, with no influence from its hard-coded reward function. As training progresses, behavior becomes much more strongly determined by its reward function.
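The RL pattern in the last bullet can be shown with a toy sketch (my own illustration, assuming a two-armed bandit with a softmax policy, not any specific agent from the literature). The reward function is fixed from the start, but it only shows up in behavior as learning accumulates.

```python
import math
import random
from typing import List


def softmax(values: List[float], temperature: float) -> List[float]:
    """Convert action-value estimates into action probabilities."""
    highest = max(values)
    exps = [math.exp((v - highest) / temperature) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def train_bandit(rewards: List[float], steps: int, learning_rate: float = 0.1,
                 temperature: float = 0.1, seed: int = 0) -> List[float]:
    """Return the policy after `steps` updates; value estimates start at zero."""
    rng = random.Random(seed)
    q_values = [0.0] * len(rewards)  # "freshly initialized": no preferences yet
    for _ in range(steps):
        probs = softmax(q_values, temperature)
        action = rng.choices(range(len(rewards)), weights=probs)[0]
        # Move the estimate for the chosen action toward the hard-coded reward.
        q_values[action] += learning_rate * (rewards[action] - q_values[action])
    return softmax(q_values, temperature)


if __name__ == "__main__":
    hard_coded_rewards = [0.0, 1.0]  # fixed "innate" reward for each action
    for steps in (0, 10, 200):
        policy = train_bandit(hard_coded_rewards, steps)
        print(f"after {steps:>3} steps: P(rewarded action) = {policy[1]:.3f}")
```

If different agents had different hard-coded rewards, their behavior would be indistinguishable at initialization and would diverge with training, which is loosely analogous to genetic influence becoming more visible with age.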

Human Connectome Project studies show that genetic influences on brain structure are not restricted to ‘subcortical hardwiring’ ...

- Very interesting biological results, but consistent with what we were saying about the brain being mostly randomly initialized.

- Our position is that the information content of the brain is mostly learned. "Random initialization" can include a high degree of genetic influence on local neurological structures. In ML, both Gaussian and Xavier initialization count as "random initialization", even though they lead to different local structures. Similarly, I expect the details of the brain's stochastic local connectivity pattern at birth vary with genome and brain region. However, the information content from the genome is bounded above by the information content of the genome itself, which is only about 3 billion base pairs. So, most of the brain must be learned from scratch.
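The information-bound argument in the last bullet is simple arithmetic, sketched here. The 3 billion base pairs figure comes from the bullet itself. The 2 bits per base pair is the raw capacity of a four-letter alphabet. The synapse count of roughly 100 trillion is the estimate quoted elsewhere in this thread, used here as an assumption. This is a crude upper bound on raw storage, not a claim about how genomes actually encode brain structure.

```python
BITS_PER_BASE_PAIR = 2  # four possible nucleotides -> log2(4) = 2 bits


def genome_capacity_bits(base_pairs: int) -> int:
    """Raw upper bound on the information a genome of this length can hold."""
    return base_pairs * BITS_PER_BASE_PAIR


def bits_to_megabytes(bits: int) -> float:
    """Convert bits to decimal megabytes (1 MB = 1,000,000 bytes)."""
    return bits / 8 / 1_000_000


GENOME_BASE_PAIRS = 3_000_000_000        # figure given in the comment above
ASSUMED_SYNAPSES = 100_000_000_000_000   # ~100 trillion, an assumed estimate

genome_bits = genome_capacity_bits(GENOME_BASE_PAIRS)
bits_per_synapse = genome_bits / ASSUMED_SYNAPSES

if __name__ == "__main__":
    print(f"genome capacity: {genome_bits:,} bits "
          f"(~{bits_to_megabytes(genome_bits):.0f} MB)")
    print(f"genome bits available per synapse: {bits_per_synapse:.6f}")
```

On these numbers the genome could supply far less than one bit per synapse, so it cannot list individual connection strengths. It can still specify compact rules, such as wiring gradients, cell types, and reward circuitry, that shape how those connections form and what gets learned.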

↑ comment by geoffreymiller · 2022-10-25T22:58:05.525Z · LW(p) · GW(p)

Quintin (and also Alex) - first, let me say, thank you for the friendly, collegial, and constructive comments and replies you've offered. Many folks get reactive and defensive when they're hit with a 6,000-word critique of their theory, but you've remained constructive and intellectually engaged. So, thanks for that.

On the general point about Shard Theory being a relatively 'Blank Slate' account, it might help to think about two different meanings of 'Blank Slate' -- mechanistic versus functional.

A mechanistic Blank Slate approach (which I take Shard Theory to be, somewhat, but not entirely, since it does talk about some reinforcement systems being 'innate'), emphasizes the details of how we get from genome to brain development to adult psychology and behavior. Lots of discussion about Shard Theory has centered around whether the genome can 'encode' or 'hardwire' or 'hard-code' certain bits of human psychology.

A functional Blank Slate approach (which I think Shard Theory pursues even more strongly, to be honest), doesn't make any positive, theoretically informative use of any evolutionary-functional analysis to characterize animal or human adaptations. Rather, functional Blank Slate approaches tend to emphasize social learning, cross-cultural differences, shared family environments, etc as sources of psychology.

To highlight the distinction: evolutionary psychology doesn't start by asking 'what can the genome hard-wire?' Rather, it starts with the same key questions that animal behavior researchers ask about any behavior in any species: 'What selection pressures shaped this behavior? What adaptive problems does this behavior solve? How do the design details of this adaptation solve the functional problem that it evolved to cope with?'

In terms of Tinbergen's Four Questions, a lot of the discussion around Shard Theory seems to focus on proximate ontogeny, whereas my field of evolutionary psychology focuses more on ultimate/evolutionary functions and phylogeny.

I'm aware that many folks on LessWrong take the view that the success of deep learning in neural networks, and neuro-theoretical arguments about random initialization of neocortex (which are basically arguments about proximate ontogeny), mean that it's useless to do any evolutionary functional or phylogenetic analysis of human behavior when thinking about AI alignment (basically, on the grounds that things like kin detection systems, cheater detection systems, mate preferences, or death-avoidance systems couldn't possibly have evolved to fulfil those functions in any meaningful sense).

However, I think there's substantial evidence, in the 163 years since Darwin's seminal work, that evolutionary-functional analysis of animal adaptations, preferences, and values has been extremely informative about animal behavior -- just as it has about human behavior. So, it's hard to accept any theoretical argument that the genome couldn't possibly encode any of the behaviors that animal behavior researchers and evolutionary psychologists have been studying for so many decades. It wouldn't just mean throwing out human evolutionary psychology. It would mean throwing out virtually all scientifically informed research on behavior in all other species, including classic ethology, neuroethology, behavioral ecology, primatology, and evolutionary anthropology.

↑ comment by TurnTrout · 2022-10-25T04:19:46.322Z · LW(p) · GW(p)

However, the information content from the genome is bounded above by the information content of the genome itself, which is only about 3 billion base pairs. So, most of the brain must be learned from scratch.

Can you say more on this? This seems invalid to me. It seems like you have a program whose specification is a few GB, but that doesn't mean the program can't specify more than 3GB of meaningful data in the final brain -- just that it's not specifying data with K-complexity greater than, e.g., 4GB.
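The distinction between output size and K-complexity can be sketched with a toy example. This is only an illustration under stated assumptions: the `expand` rule is hypothetical, and zlib's compressed length is used as a loose upper-bound proxy for Kolmogorov complexity (true K-complexity is uncomputable, and the proxy ignores the size of the decompressor).

```python
import zlib

def expand(n_bytes):
    """A tiny 'specification' that unfolds into a much larger output.

    The rule (squares modulo 251) is an arbitrary, hypothetical choice.
    """
    return bytes((i * i) % 251 for i in range(n_bytes))

output = expand(1_000_000)               # one megabyte of generated data
compressed = zlib.compress(output, 9)    # crude upper bound on its complexity

print("raw output bytes: ", len(output))
print("compressed bytes: ", len(compressed))
```

A few lines of program yield a megabyte of output, so raw output size is not capped by specification size. What is capped is the output's description length, which stays small here because the data is highly regular.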

Replies from: geoffreymiller↑ comment by geoffreymiller · 2022-10-25T18:20:58.185Z · LW(p) · GW(p)

Quintin & Alex - this is a very tricky issue that's been discussed in evolutionary psychology since the late 1980s.

Way back then, Leda Cosmides & John Tooby pointed out that the human genome will 'offload' any information it can that's needed for brain development onto any environmental regularities that can be expected to be available externally, out in the world. For example, the genome doesn't need to specify everything about time, space, and causality that might be relevant in reliably building a brain that can do intuitive physics -- as long as kids can expect that they'll encounter objects and events that obey basic principles of time, space, and causality. In other words, the 'information content' of the mature brain represents the genome taking maximum advantage of statistical regularities in the physical and social worlds, in order to build reliably functioning adult adaptations. See, for example, their writings here and here.

Now, should we call that kind of environmentally-driven calibration and scaffolding of evolved adaptations a form of 'learning'? It is in some ways, but in other ways, the term 'learning' would distract attention away from the fact that we're talking about a rich suite of evolved adaptations that are adapting to cross-generational regularities in the world (e.g. gravity, time, space, causality, the structure of optic flow in visual input, and many game-theoretic regularities of social and sexual interaction) -- rather than to novel variants or to cultural traditions.

Also, if we take such co-determination of brain structure by genome and environmental regularities as just another form of 'learning', we're tempted to ignore the last several decades of evolutionary functional analysis of the psychological adaptations that do reliably develop in mature adults across thousands of species. In practice, labeling something 'learned' tends to foreclose any evolutionary-functional analysis of why it works the way it works. (For example, the still-common assumption that jealousy is a 'learned behavior' obscured the functional differences and sex differences between sexual jealousy and resource/emotional jealousy).

As an analogy, the genome specifies some details about how the lungs grow -- but lung growth depends on environmental regularities such as the existence of oxygen and nitrogen at certain concentrations and pressures in the atmosphere; without those gases, lungs don't grow right. Does that mean the lungs 'learn' their structure from atmospheric gases rather than just from the information in the genome? I think that would be a peculiar way to look at it.

The key issue is that there's a fundamental asymmetry between the information in the genome and the information in the environment: the genome adapts to promote the reliable development of complex functional adaptations that take advantage of environmental regularities, but the environmental regularities don't adapt in that way to help animals survive and reproduce (e.g. time, gravity, causality, and optic flow don't change to make organismic development easier or more reliable).

Thus, if we're serious about understanding the functional design of human brains, minds, and values, I think it's often more fruitful to focus on the genomic side of development, rather than the environmental side (or the 'learning' side, as usually construed). (Of course, with the development of cumulative cultural traditions in our species in the last hundred thousand years or so, a lot more adaptively useful information is stored out in the environment -- but most of the fundamental human values that we'd want our AIs to align with are shared across most mammalian species, and are not unique to humans with culture.)

comment by jacob_cannell · 2022-10-21T00:51:39.383Z · LW(p) · GW(p)

The adult cortex and cerebellum combined have on the order of 1e15 bits of synaptic capacity, compared to about 1e9 bits of genome capacity (only a tiny fraction of which can functionally code for brain wiring). Thus it is simply a physical fact that most cognitive complexity is learned, not innate - and the overwhelming weight of evidence from neuroscience supports the view that the human brain is a general learning machine [LW · GW]. The genome mostly specifies the high-level intra- and inter-module connectivity patterns and motifs, along with a library of important innate circuits, mostly in the brainstem.
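For readers who want to check the orders of magnitude, the arithmetic can be sketched as follows. The 3 billion base pairs and the 1e15 bits of synaptic capacity are the figures quoted in this thread; the 2 bits per base pair is the standard raw upper bound for a four-letter alphabet, which gives a slightly larger genome figure than the rounded 1e9 used above.

```python
BASE_PAIRS = 3_000_000_000      # figure quoted earlier in the thread
BITS_PER_BASE_PAIR = 2          # four possible bases -> log2(4) = 2 bits
SYNAPTIC_BITS = 10**15          # synaptic capacity figure quoted in this comment

# Raw upper bound on genome information, before any redundancy is discounted.
genome_bits_upper_bound = BASE_PAIRS * BITS_PER_BASE_PAIR
genome_megabytes = genome_bits_upper_bound / 8 / 1_000_000

# How many times larger the synaptic capacity is than the genome bound.
ratio = SYNAPTIC_BITS / genome_bits_upper_bound

print(f"genome upper bound: {genome_bits_upper_bound:.1e} bits "
      f"(~{genome_megabytes:.0f} MB)")
print(f"synaptic capacity / genome bound: ~{ratio:.1e}")
```

Under these assumptions the gap is roughly five to six orders of magnitude, whichever genome figure is used. As discussed elsewhere in the thread, this bounds description length only, not the size or functional organization of what develops.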

The comparison with modern deep learning systems is especially relevant, because deep learning is reverse engineering the brain and modern DL systems are increasingly accurate - in some cases near perfect - models of neural activity.

The brainstem - which contains most of the complexity - is equivalent to the underlying operating system and software libraries, the basal ganglia and thalamus are equivalent to the control and routing code running on the CPU, and the cortex/cerebellum, which implement the bulk of the learned computation, are the equivalent of the various large matrix layers running on the GPU. A DL system has some module-level innate connectivity specified by a few thousand to a few hundred thousand lines of code, which define the implicit initial connectivity between all neurons, equivalent to the genetically determined innate modular subdivisions and functional connectivity between different cortical/cerebellar/thalamic/BG circuit modules.

This is the framework within which any and all evidence from behavior genetics, psychology, etc. must fit.

Replies from: geoffreymiller↑ comment by geoffreymiller · 2022-10-21T01:15:03.941Z · LW(p) · GW(p)

Jacob -- thanks for your comment. It offers an interesting hypothesis about some analogies between human brain systems and computer stuff.

Obviously, there's not enough information in the human genome to specify every detail of every synaptic connection. Nobody is claiming that the genome codes for that level of detail. Just as nobody would claim that the genome specifies every position for every cell in a human heart, spine, liver, or lymphatic system.

I would strongly dispute that it's the job of 'behavior genetics, psychology, etc' to fit their evidence into your framework. On the contrary, if your framework can't handle the evidence for the heritability of every psychological trait ever studied that shows reliably measurable individual differences, then that's a problem for your framework.

I will read your essay in more detail, but I don't want to comment further until I do, so I'm sure that I understand your reasoning.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2022-10-21T01:35:00.188Z · LW(p) · GW(p)

I would strongly dispute that it's the job of 'behavior genetics, psychology, etc' to fit their evidence into your framework.

Not my framework, but that of modern neuroscience. Just as biology is constrained by chemistry which is constrained by physics.

I will read your essay in more detail, but I don't want to comment further until I do, so I'm sure that I understand your reasoning.

I just reskimmed it, and it's not obviously out of date; it's still a pretty good overview of the modern view of the brain from systems neuroscience, which is mostly tabula rasa.

The whole debate of inherited traits is somewhat arbitrary based on what traits you consider.

Is the use of two spaces after a period an inheritable trait? Belief in Marxism?

Genetics can only determine the high level hyperparameters and low frequency wiring of the brain - but that still allows a great deal to be inheritable, especially when one considers indirect correlations (eg basketball and height, curiosity drive and educational attainment, etc).

Replies from: geoffreymiller↑ comment by geoffreymiller · 2022-10-21T02:21:59.167Z · LW(p) · GW(p)

Jacob - I read your 2015 essay. It is interesting and makes some fruitful points.

I am puzzled, though, about when nervous systems are supposed to have evolved this 'Universal Learning Machine' (ULM) capability. Did ULMs emerge with the transition from invertebrates to vertebrates? From rat-like mammals to social primates? From apes with 400 cc brains to early humans with 1100 cc brains?

Presumably bumblebees (1 million neurons) don't have ULM capabilities, but humans (80 billion neurons) allegedly do. Where is the threshold between them -- given that bumblebees already have plenty of reinforcement learning capabilities?

I'm also puzzled about how the ULM perspective can accommodate individual differences, sex differences, mental disorders, hormonal influences on cognition and motivation, and all the other nitty-gritty wetware details that seem to get abstracted away.

For example, take sex differences in cognitive abilities, such as average male superiority on mental rotation tasks and female superiority on verbal fluency -- are you really arguing that men and women have identical ULM capabilities in their neocortexes that are simply shaped differently by their information inputs? And it just happens that these can be influenced by manipulating sex hormone levels?

Or, take the fact that some people start developing symptoms of schizophrenia -- such as paranoid thoughts and auditory hallucinations -- in their mid-20s. Sometimes this dramatic change in neocortical activity is triggered by over-use of amphetamines; sometimes it's triggered by a relationship breakup; often it reflects a heritable family propensity towards schizotypy. Would you characterize the onset of schizophrenia as just the ULM getting some bad information inputs?

Replies from: jacob_cannell, lahwran↑ comment by jacob_cannell · 2022-10-21T03:39:14.819Z · LW(p) · GW(p)

I am puzzled, though, about when nervous systems are supposed to have evolved this 'Universal Learning Machine' (ULM) capability.

The core architecture of brainstem, basal ganglia, thalamus and pallium/cortex is at least 500 million years old.

Where is the threshold between them