AIS terminology proposal: standardize terms for probability ranges

post by eggsyntax · 2024-08-30T15:43:39.857Z · LW · GW · 12 comments


Summary: The AI safety research community should adopt standardized terms for probability ranges, especially in public-facing communication and especially when discussing risk estimates. The terms used by the IPCC are a reasonable default.

Science communication is notoriously hard. It's hard for a lot of reasons, but one is that laypeople aren't used to thinking in numerical probabilities or probability ranges. One field that's had to deal with this more than most is climatology; climate change has been rather controversial, and a non-trivial aspect of that has been lay confusion about what climatologists are actually saying[1].

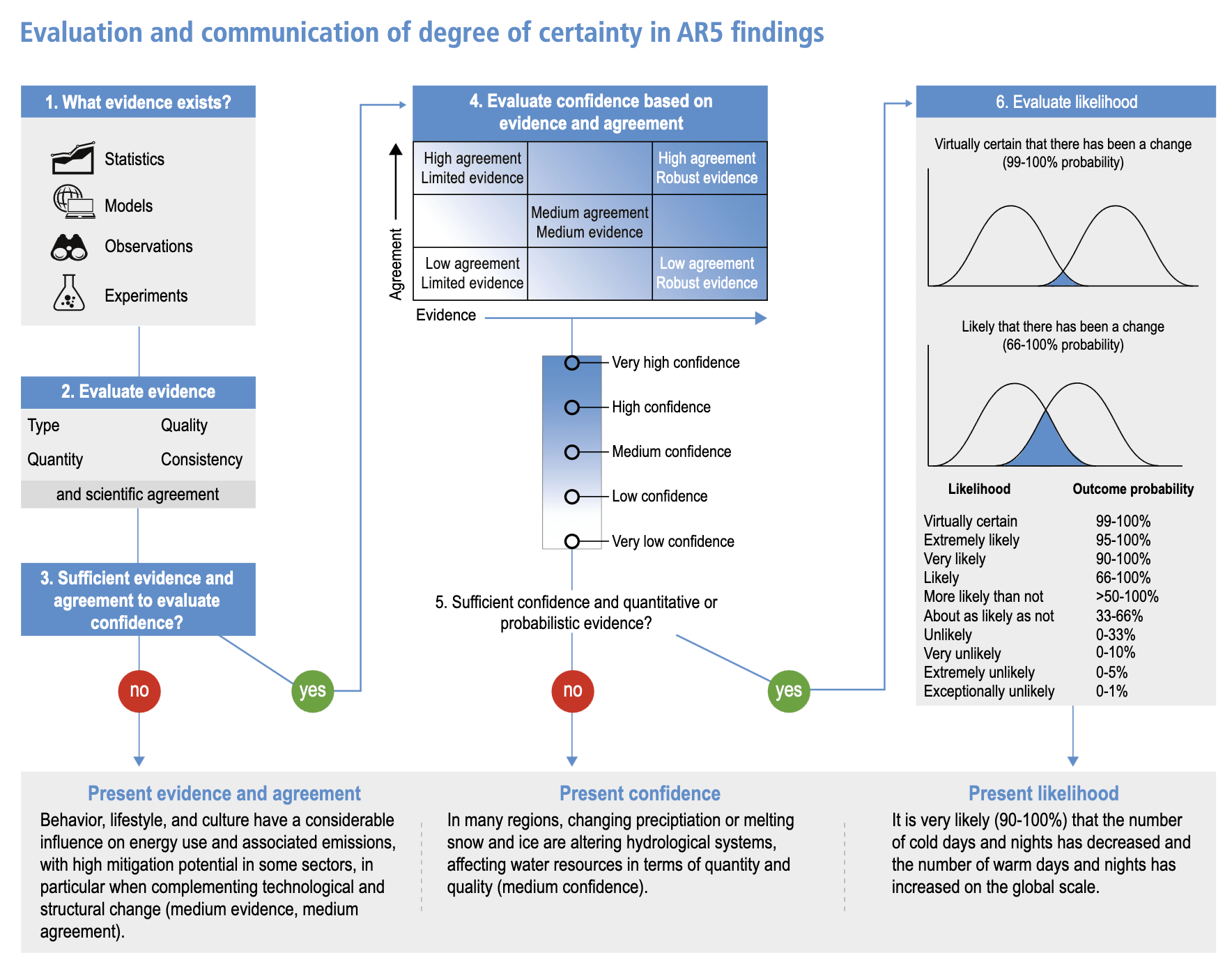

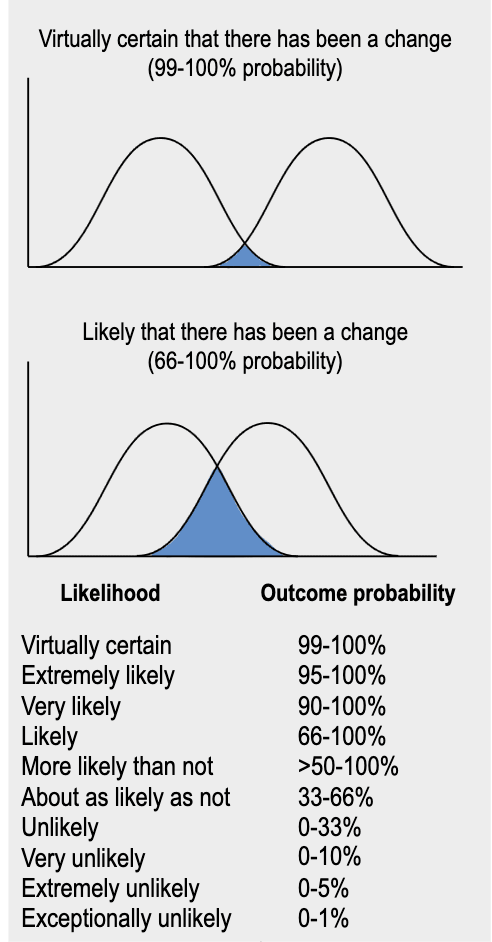

As a result, the well-known climate assessment reports from the UN's Intergovernmental Panel on Climate Change (IPCC) have, since the 1990s, used explicitly defined terms for probability ranges[2]:

(see below for full figure[3])

Like climatology, AI safety research has become a topic of controversy. In both cases, the controversy includes a mix of genuine scientific disagreement, good-faith confusion, and bad-faith opposition. Scientific disagreement comes from people who can deal with numerical probability ranges. Those who are arguing in bad faith from ulterior motives generally don't care about factual details. But I suspect that the large majority of those who disagree, especially laypeople, are coming from a place of genuine, good-faith confusion. For those people, anything we as practitioners can do to communicate more clearly is quite valuable.

Also like climatology, AI safety research, especially assessments of risk, fundamentally involves communicating about probabilities and probability ranges. Therefore I propose that the AIS community follow climatologists in adopting standard terms for probability ranges, especially in position papers and public-facing communication. In less formal and less public-facing contexts, using standard terminology still adds some value but is less important; in sufficiently informal contexts it's probably not worth the hassle of looking up the standard terminology.

Of course, in many cases it's better to just give the actual numerical range! But especially in public-facing communication it can be more natural to use natural language terms, and in fact this is already often done. I'm only proposing that when we do use natural language terms for probability ranges, we use them in a consistent and interpretable way (feel free to link to this post as a reference for interpretation, or point to the climatology papers cited below[2]).

Should the AIS community use the same terms? That's a slightly harder question. The obvious first-pass answer is 'yes'; it's a natural Schelling point, and terminological consistency across fields is generally preferable when practically possible. The IPCC terms also have the significant advantage of being battle-tested; they've been used over a thirty-year period in a highly controversial field, and terms have been refined when they were found to be insufficiently clear.

The strongest argument I see against using the same terms is that the AIS community sometimes needs to deal with more extreme (high or low) risk estimates than these. If we use 'virtually certain' to mean 99 - 100%, what terms can we use for 99.9 - 100.0%, or 99.99 - 100.00%? On the other hand, plausibly once we're dealing with such extreme risk estimates, it's increasingly important to communicate them with actual numeric ranges.

My initial proposal is to adopt the IPCC terms, but I'm very open to feedback, and if someone has an argument I find compelling (or which gets strong agreement in votes) for a different or extended set of terms, I'll add it to the proposal. If no such argument emerges, I'll clarify the proposal to be more clearly in favor of the IPCC terms.

For ease of copy/paste, here is the initial proposed terminology in table form:

| Term | Probability range |
| --- | --- |
| Virtually certain | 99-100% |
| Extremely likely | 95-100% |
| Very likely | 90-100% |
| Likely | 66-100% |
| More likely than not | >50-100% |
| About as likely as not | 33-66% |
| Unlikely | 0-33% |
| Very unlikely | 0-10% |
| Extremely unlikely | 0-5% |
| Exceptionally unlikely | 0-1% |
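For anyone who wants to apply the table mechanically, here is a minimal Python sketch. The ranges are copied from the table above; the names `Term`, `IPCC_TERMS`, `matching_terms`, and `narrowest_term` are hypothetical and purely illustrative. Because the ranges overlap, a given probability usually matches several terms, so picking the narrowest matching range is one possible convention (my assumption, not something the IPCC guidance prescribes).

```python
from typing import NamedTuple


class Term(NamedTuple):
    name: str
    low: float  # lower bound of the range, in percent
    high: float  # upper bound of the range, in percent
    low_exclusive: bool = False  # True only for ">50%"


# Ranges copied from the proposed terminology table.
IPCC_TERMS = [
    Term("Virtually certain", 99, 100),
    Term("Extremely likely", 95, 100),
    Term("Very likely", 90, 100),
    Term("Likely", 66, 100),
    Term("More likely than not", 50, 100, low_exclusive=True),
    Term("About as likely as not", 33, 66),
    Term("Unlikely", 0, 33),
    Term("Very unlikely", 0, 10),
    Term("Extremely unlikely", 0, 5),
    Term("Exceptionally unlikely", 0, 1),
]


def _matches(percent: float) -> list:
    """Return every Term whose range contains `percent` (0 to 100)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    found = []
    for term in IPCC_TERMS:
        above_low = percent > term.low if term.low_exclusive else percent >= term.low
        if above_low and percent <= term.high:
            found.append(term)
    return found


def matching_terms(percent: float) -> list:
    """Names of all terms that apply, in table order."""
    return [term.name for term in _matches(percent)]


def narrowest_term(percent: float) -> str:
    """Assumed convention: prefer the matching term with the smallest range."""
    return min(_matches(percent), key=lambda term: term.high - term.low).name


print(matching_terms(97))
print(narrowest_term(97))
```

Note that values sitting exactly on a shared boundary (33% or 66%) match terms on both sides, which is one more reason to give the number itself when precision matters.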

I look forward to your feedback.

Thanks to @davidad and Stephen Casper for inspiring this proposal.

- [1] Eg: "It's so cold this week! Ha ha so much for global warming!" or "It's been proved climate change is going to kill everyone in the next twenty years, right?" Compare eg "Ha ha why would AI suddenly become conscious and start to hate humans?" or (pace EY) "So you're saying AI will definitely kill everyone, right?"

- [2] From figure 1.6 (page 157) of the most recent IPCC assessment report (2022), using terminology drawn from a number of papers from Katharine J. Mach and/or Michael D. Mastrandrea, eg "Unleashing expert judgment in assessment" (2017) and "Guidance Note...on Consistent Treatment of Uncertainties" (2010).

- [3] (note that this figure is labeled with 'AR5' but in fact is used in both AR5 and the current AR6)

12 comments


comment by eggsyntax · 2024-09-10T15:37:43.793Z · LW(p) · GW(p)

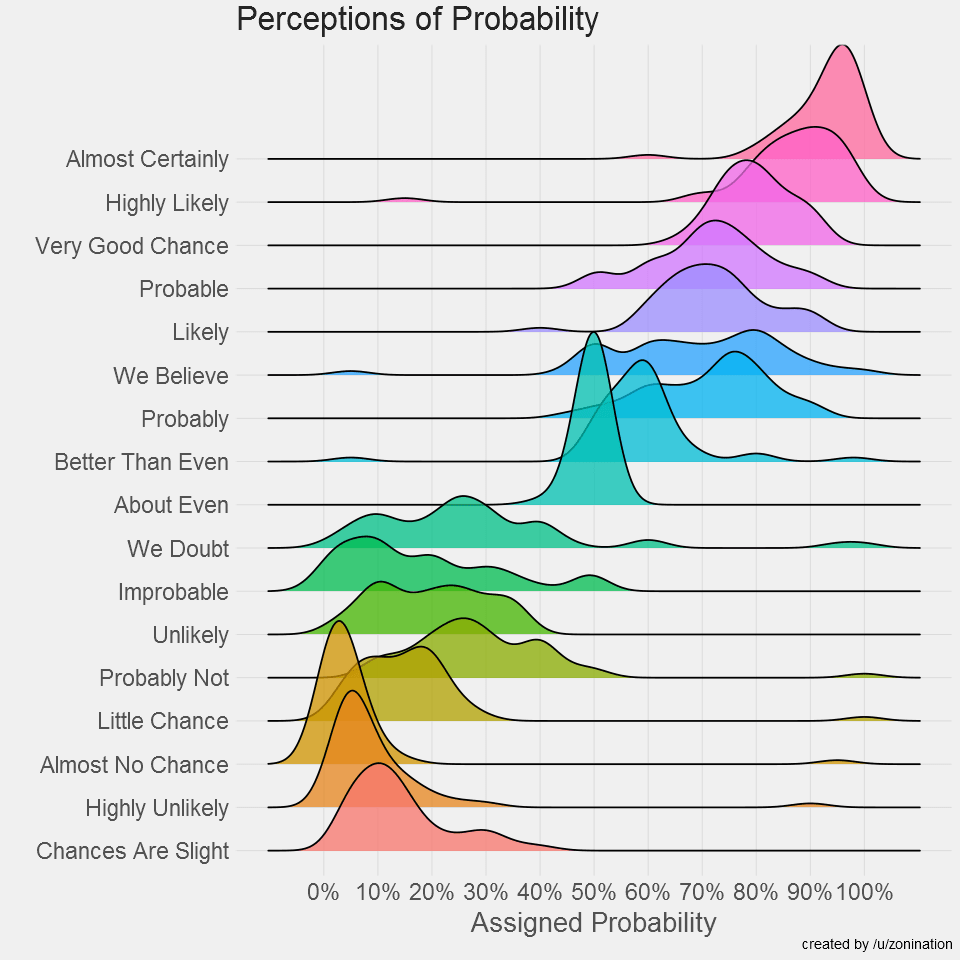

I've just run across this data visualization, with data gathered through polling /r/samplesize (approximately reproducing a 1999 CIA analysis, CIA chart here), showing how people tend to translate terms like 'highly likely' into numerical probabilities. I'm showing the pretty joyplot version because it's so very pretty, but see the source for the more conventional and arguably more readable whisker plots.

comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2024-09-11T09:35:04.561Z · LW(p) · GW(p)

I further suggest that if using these defined terms, instead of including a table of definitions somewhere you include the actual probability range or point estimate in parentheses after the term. This avoids any need to explain the conventions, and makes it clear at the point of use that the author had a precise quantitative definition in mind.

For example: it's likely (75%) that flipping a pair of fair coins will get less than two heads, and extremely unlikely (0-5%) that most readers of AI safety papers are familiar with the quantitative convention proposed above - although they may (>20%) be familiar with the general concept. Note that the inline convention allows for other descriptions if they make the sentence more natural!
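The coin-flip figure can be checked by enumeration, and the inline convention is easy to sketch in code. The following is only an illustration; `annotate` is a hypothetical helper, not part of any existing library.

```python
from fractions import Fraction
from itertools import product


def annotate(term, low, high=None):
    """Format a term with its point estimate or range in parentheses."""
    if high is None:
        return f"{term} ({low:g}%)"
    return f"{term} ({low:g}-{high:g}%)"


# Enumerate the four equally likely outcomes of flipping two fair coins.
outcomes = list(product("HT", repeat=2))
fewer_than_two_heads = [o for o in outcomes if o.count("H") < 2]
probability = Fraction(len(fewer_than_two_heads), len(outcomes))  # 3/4

print(annotate("likely", float(probability * 100)))  # likely (75%)
print(annotate("extremely unlikely", 0, 5))  # extremely unlikely (0-5%)
```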

↑ comment by eggsyntax · 2024-09-11T13:42:03.627Z · LW(p) · GW(p)

Agreed that that's often an improvement! And as I say in the post, I do think that more often than not (> 50%) the best choice is to just give the numbers. My intended proposal is just to standardize in cases where the authors find it more natural to use natural language terms.

comment by eggsyntax · 2024-09-08T15:50:21.668Z · LW(p) · GW(p)

Somewhat relevant: 'The Effects of Communicating Uncertainty on Public Trust in Facts and Numbers' and @Jeffrey Heninger's discussion of it on the AI Impacts blog.

comment by Jeffrey Heninger (jeffrey-heninger) · 2024-09-12T00:39:25.127Z · LW(p) · GW(p)

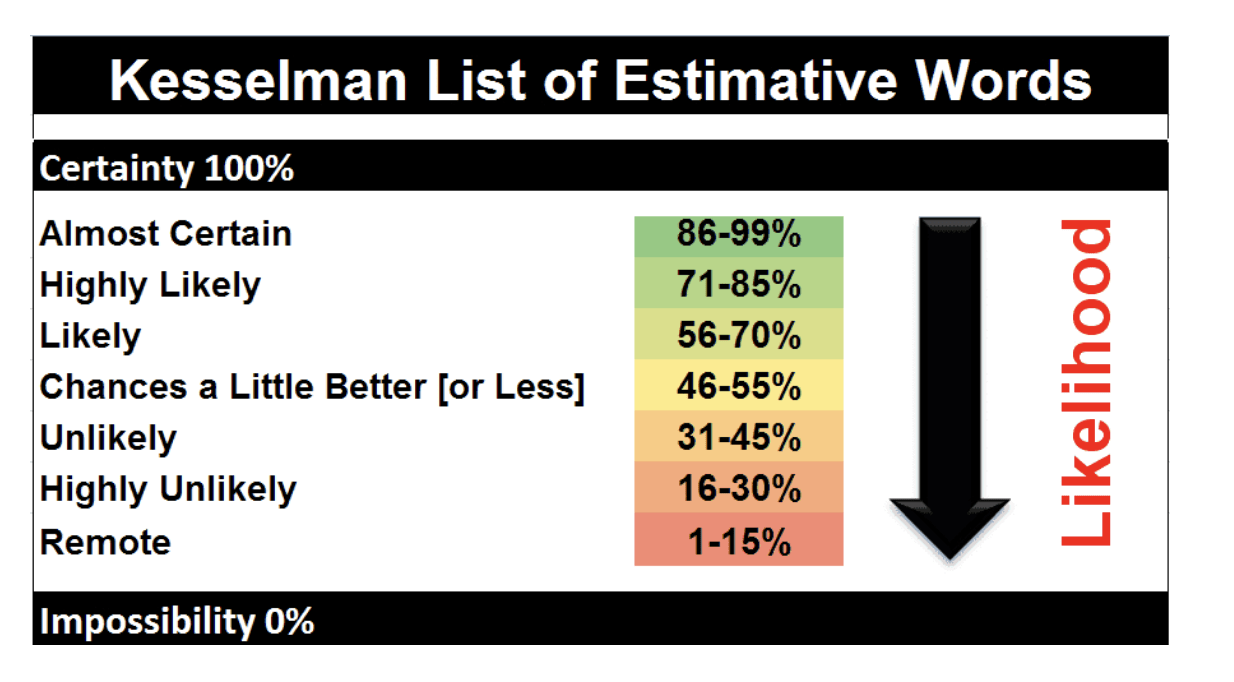

Climate change is not the only field to have defined words for specific probability ranges. The intelligence community has looked into this as well. They're called words of estimative probability.

comment by Jett Janiak (jett) · 2024-08-30T17:55:15.339Z · LW(p) · GW(p)

Scott: "In Continued Defense Of Non-Frequentist Probabilities"

comment by Adam Jones (domdomegg) · 2024-09-13T18:27:34.426Z · LW(p) · GW(p)

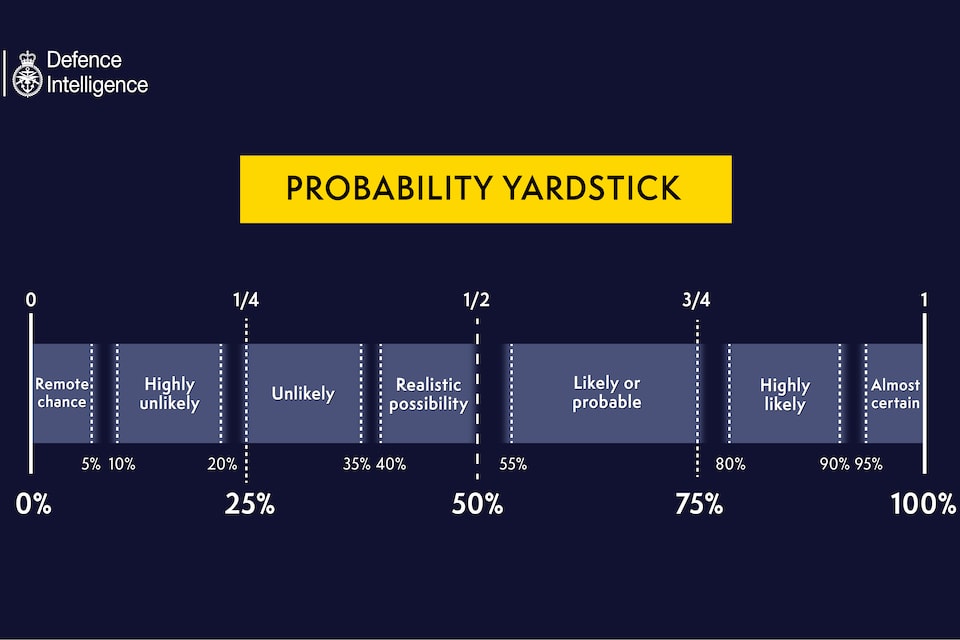

The UK Government tends to use the PHIA probability yardstick in most of its communications.

This is used very consistently in national security publications. It's also commonly used by other UK Government departments as people frequently move between departments in the civil service, and documents often get reviewed for clearance by national security bodies before public release.

It is less granular than the IPCC terms at the extremes, but the ranges don't overlap. I don't know which is actually better to use in AI safety communications, but I think being clear if you are using either in your writing seems a good way to go! In any case being aware it's a thing you'll see in UK Government documents might be useful.

↑ comment by eggsyntax · 2024-09-15T21:01:15.236Z · LW(p) · GW(p)

Thanks! Quite similar to the Kesselman tags that @gwern uses (reproduced in this comment below), and I'd guess that one is descended from the other. Although it has somewhat different range cutoffs for each because why should anything ever be consistent.

Here are the UK ones in question (for ease of comparison):

comment by corruptedCatapillar · 2024-09-04T18:39:34.382Z · LW(p) · GW(p)

"The beginning of wisdom is the definition of terms." - (attributed to) Socrates

I'd argue that for any conversation between people to make progress, they have to have some agreement on what they're talking about. As a counterexample: if I were to order a 1/4" bolt from someone and we had different measurement standards for what 1/4" is, I wouldn't be able to build on what I received from them. Consistency is the key characteristic that allows interoperability between multiple parties.

I appreciate greatly that Gwern uses confidence tags to convey this aspect, as it gives me a relative sense of how much I can trust a piece of evidence.

Maybe most important, I'd also argue that even if standardized terms were used only within the AIS community, that would be worthwhile in itself. Living in the Bay and participating in a lot of doom conversations, I believe a lot of nuance is missed, and people update their priors unjustifiably, when a standardized set of terms would allow them to notice that something seems off about another person's claim.

That said, even if this wouldn't help with informal discussions within the community, having more discrete terms to share would allow for higher quality information transfer between researchers, similar to the 1/4" bolt example above.

↑ comment by eggsyntax · 2024-09-05T23:52:59.670Z · LW(p) · GW(p)

Oh, I hadn't seen Gwern's confidence tags. There's an interesting difference between the Kesselman list that Gwern draws from (screenshotted below) and my proposal above. The Kesselman terms refer to non-overlapping, roughly equal ranges, whereas the climatology usage I give above describes overlapping ranges of different sizes, with one end of the range at 0 or 100%.

Both are certainly useful. I think I'd personally lean toward the climatology version as the better one to adopt, especially for talking about risk; I think in general researchers are less likely to want to say that something is 56-70% likely than that it is 66-100% likely, ie that it's at least 66% likely. If I were trying to identify a relatively narrow specific range, I think I'd be more inclined to just give an actual approximate number. The climatology version, it seems to me, is primarily for indicating a level of uncertainty. When saying something is 'likely' (66-100%) vs 'very likely' (90-100%), in both cases you're saying that you think something will probably happen, but you're saying different things about your confidence level.

(@gwern, I'd love to get your take on that question as well, since you settled on the Kesselman list)

I've just posted a quick take getting a bit further into the range of possible things that probabilities can mean; our ways of communicating about probabilities and probability ranges seem hideously inadequate to me.