Comments

I would also make the same prediction for Q > 10. Or when CFS first sells electricity to the grid. These will be farther into the future, but I do not think that this culture will have changed by then.

I think that I predict the opposite (conditional on what exactly is being predicted).

What exactly would count as a GPT-3 moment for fusion? How about an experiment demonstrating reactor-like conditions? This is roughly equivalent to what I referred to as 'getting fusion' in my book review.

My prediction is that, after Commonwealth Fusion Systems gets Q > 5 on SPARC, they will continue to supply or plan to supply HTS tape to at least 3 other fusion startups.

I agree that this is plausibly a real and important difference, but I do not think that it is obvious.

The most recent augmentative technological change was the industrial revolution. It has reshaped virtually every activity. It allowed for the majority of the population to not work in agriculture for the first time since the agricultural revolution.

The industrial revolution centered on energy. Having much cheaper, much more abundant energy allowed humans to use that energy for all sorts of things.

If fusion ends up being similar in cost to existing electricity production, it will be a substitutional technology. This is the thing that we are working on now (well, also making it work at all). People who work in fusion focus on this because it is the reasonable near/medium term projection. If fusion ends up being substantially cheaper, it will be an augmentative technology. It is not at all clear that this will happen, because we can't know how the costs will change between the first and thousandth fusion power plant.

Notably, we don't know if foom is going to be a thing either.

The narrative around the technology is at least as important as what has happened in the technology itself. The fusion community could frequently talk about how incredible the industrial revolution was, and how it powered Britain to global dominance for two centuries. A new source of energy might do the same thing! But this is more hype than we feel we ought to offer, and the community's goal is not to create a dominant superpower.

Even if foom is going to happen, things would look very different if the leaders credibly committed to helping others foom if they are first. I don't know if this would be better or worse from an existential risk perspective, but it would change the nature of the race a lot.

Before. The 2022 survey responses were collected from June-August. ChatGPT came out at the end of November.

A few more thoughts on Ord's paper:

Despite the similarities, I think that there is some difference between Ord's notion of hyperbolation and what I'm describing here. In most of his examples, the extra dimension is given. In the examples I'm thinking of, what the extra dimension ought to be is not known beforehand.

There is a situation in which hyperbolation is rigorously defined: analytic continuation. This takes analytic functions defined on the real axis (smooth functions that equal their own Taylor series) and extends them into the complex plane. The first two examples Ord gives in his paper are examples of analytic continuation, so his intuition that these are the simplest hyperbolations is correct.
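A minimal sketch of analytic continuation, using the exponential function as the example (this choice is mine for illustration, and is not necessarily one of Ord's examples). The Taylor coefficients are fixed entirely by the function's behavior on the real axis, yet the same series can be evaluated at a complex argument. The function name `exp_series` and the number of terms are assumptions made for this sketch.

```python
import cmath
import math


def exp_series(z, terms=40):
    """Sum the Taylor series of exp about 0.

    The coefficients 1/n! come from the real function alone,
    but the sum also makes sense for complex z.
    """
    total = 0
    term = 1  # current term z**n / n!, starting at n = 0
    for n in range(terms):
        total += term
        term = term * z / (n + 1)
    return total


# On the real axis the series reproduces the familiar function.
real_value = exp_series(1.0)

# Off the real axis the same series gives the extended function.
complex_value = exp_series(1j * cmath.pi)

print(abs(real_value - math.e))   # a very small number
print(abs(complex_value - (-1)))  # a very small number (Euler's identity)
```

Nothing new had to be chosen to move off the real axis: the extra dimension was fixed by the function itself, which is what makes this case rigorous.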

More generally, solving a PDE from boundary conditions could be considered to be a kind of hyperbolation, although the result can be quite different depending on which PDE you're solving. This feels like substantially less of a new ability than e.g. inventing language.

Climate change is not the only field to have defined words for specific probability ranges. The intelligence community has looked into this as well. They're called words of estimative probability.

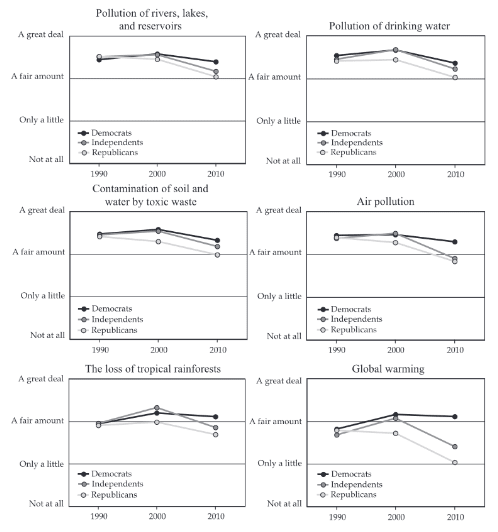

A lot of the emphasis is on climate change, which has become more partisan than other environmental issues. But other environmental issues have become partisan as well. Here's some data from a paper from 2013 by D.L. Guber, "A cooling climate for change? Party polarization and the politics of global warming."

The poll you linked indicates that Republicans in the Mountain West are more concerned with the environment than Republicans in the rest of the country. There is a 27 p.p. partisan gap on the energy vs environment question (p. 17) - much less than the 55 p.p. partisan gap for the country as a whole. The partisan gap for whether "a public official's position on conservation issues will be an important factor in determining their support" is 22 p.p. (p. 13), with clear majorities in both parties. Climate change is somewhat less of a concern than other issues, which I would guess is because it is more partisan, but not by that much (p. 21).

In the Mountain West, it looks like there is some partisanship for environmental issues, but only the amount we would expect for a generic issue in the US, or for environmentalism in another country. This is consistent with environmentalism being extremely partisan on average over the entire country. The Mountain West is less than a tenth of the country's population and has an unusually impressive natural environment.

Environmentalism started to become partisan around 1990. Nixon & Reagan both spoke of the environment in these terms.

I think that this is a coincidence. Japan has low partisanship for environmentalism and has less nuclear power than most developed countries (along with low overall partisanship). The association would be between three things: (1) low partisanship for environmentalism, (2) high overall partisanship, and (3) lots of nuclear power plants. There aren't enough countries to do this kind of correlation.

From the introduction to the last post in this sequence:

Environmentalists were not the only people making significant decisions here. Fossil fuel companies and conservative think tanks also had agency in the debate – and their choices were more blameworthy than the choices of environmentalists. Politicians choose who they do and do not want to ally with. My focus is on the environmental movement itself, because that is similar to what other activist groups are able to control.

The motivation for this report was to learn what the AI safety movement should do to keep from becoming partisan. 'Meta doesn't lobby the government' isn't an action the AI safety movement can take.

http://aiimpacts.org/wp-content/uploads/2023/04/Why-Did-Environmentalism-Become-Partisan-1.pdf

Thank you!

The links to the report are now fixed.

The 4 blog posts cover most of the same ground as the report. The report goes into more detail, especially in sections 5 & 6.

I think this is true of an environmentalist movement that wants there to be a healthy environment for humans; I'm not sure this is true of an environmentalist movement whose main goal is to dismantle capitalism.

I talk about mission creep in the report, section 6.6.

Part of 'making alliances with Democrats' involved environmental organizations adopting leftist positions on other issues.

Different environmental organizations have seen more or less mission creep. The examples I give in the report are the women's issues for the World Wildlife Fund:

In many parts of the developing world, women of all ages play a critical role in managing natural resources, which they rely on for food, water, medicine, and fuel wood for their families. Yet they often are excluded from participating in decisions about resource use.[1]

and the Sierra Club:

The Sierra Club is a pro-choice organization that endorses comprehensive, voluntary reproductive health care for all. Sexual and reproductive health and rights are inalienable human rights that should be guaranteed for all people with no ulterior motive. A human rights-based approach to climate justice centers a person’s bodily autonomy and individual choice.[2]

It's hard to date exactly when many of these positions were adopted by major environmental organizations, but my impression is sometime in the 1990s or 2000s. That's when the Sierra Club started making presidential endorsements and when several major environmental organizations started promoting environmental justice.

This mission creep is part of the story. Allowing mission creep into controversial positions that are not directly related to the movement’s core goals makes it harder to build bipartisan coalitions.

- ^

“Women and girls,” World Wildlife Fund, Accessed: March 28, 2024. https://www.worldwildlife.org/initiatives/women-and-girls.

- ^

“The Sierra Club and population issues,” Sierra Club, Accessed: March 28, 2024. https://www.sierraclub.org/sierra-club-and-population-issues. The title for this page is not explicitly about gender, but to get to this page from the “People & Justice” page, you click on “Read more” in the section: “And our future depends on gender equity.”

This is trying to make environmentalism become partisan, but in the other direction.

Environmentalists could just not have positions on most controversial issues, and instead focus more narrowly on the environment.

There is also the far right in France, which is not the same as the right wing in America, but is also not Joe Biden. From what I can tell, the far right in France supports environmentalism.[1]

Macron & Le Pen seem to have fairly similar climate policies. Both want France's electricity to be mostly nuclear – Le Pen more so. Both are not going to raise fuel taxes – Macron reluctantly. Le Pen talks more about hydrogen and reshoring manufacturing from countries which emit more (and claims that immigration is bad for France's environmental goals). Macron supports renewables in addition to nuclear power. The various leftists seem to be interested in phasing out nuclear & replacing it with renewables. None of the parties dismiss climate change as an issue and all are committed to following international climate agreements.

- ^

Kate Aronoff. Marine Le Pen’s Climate Policy Leans Ecofascist. The New Republic. (2022) https://newrepublic.com/article/166097/marine-le-pens-climate-policy-whiff-ecofascism.

I think it was possible for the environmental movement to form alliances with politicians in both parties, and for environmentalism to have remained bipartisan.

Comparing different countries and comparing the same country at different times is not the same thing as a counterfactual, but it can be very helpful for understanding counterfactuals. In this case, the counterfactual US is taken to be similar to the US in the 1980s or to the UK, France, or South Korea today.

I think you should ask the author of the song if it's referring to someone using powerful AI to do something transformative to the sun.

This is extremely obvious to me. The song is opposed to how the sun currently is, calling it "wasteful" and "distasteful" - the second word is a quote from a fictional character, but the first is not. It later talks about when "the sun's a battery," so something about the sun is going to change. I really don't know what "some big old computer" could be referring to if not powerful AI.

Thank you for responding! I am being very critical, both in foundational and nitpicky ways. This can be annoying and make people want to circle the wagons. But you and the other organizers are engaging constructively, which is great.

The distinction between Solstice representing a single coherent worldview vs. a series of reflections also came up in comments on a draft. In particular, the Spinozism of Songs Stay Sung feels a lot weirder if it is taken as the response to the darkness, which I initially did, rather than one response to the darkness.

Nevertheless, including something in Solstice solidly establishes it as a normal / acceptable belief for rationalists: within the local Overton Window. You might not be explicitly telling people that they ought to believe something, but you are telling them that it is acceptable for high status people in their community to believe it. I am concerned that some of these beliefs are even treated as acceptable.

Take Great Transhumanist Future. It has "a coder" dismantling the sun "in another twenty years with some big old computer." This is a call to accelerate AI development, and use it for extremely transformative actions. Some of the organizers believe that this is the sort of thing that will literally kill everyone. Even if it goes well, it would make life as it currently exists on the surface of the Earth impossible. Life could still continue in other ways, but some of us might want to still live here in 20 years.[1] I don't think that reckless AI accelerationism should be treated as locally acceptable.

The line in Brighter Than Today points in the same way. It's not only anti-religious. It is also disparaging towards people who warn about the destructive potential of a new technology. Is that an attitude we want to establish as normal?

If the main problem with changing the songs is in making them scan and rhyme, then I can probably just pay that cost. This isn't a thing I'm particularly skilled at, but there are people adjacent to the community who are. I'm happy to ask them to rewrite a few lines, if the new versions will plausibly be used.

If the main problem with changing the songs is that many people in this community want to sing about AI accelerationism and want the songs to be anti-religious, then I stand by my criticisms.

- ^

Is this action unilateral? Unclear. There might be a global consensus building phase, or a period of reflection. They aren't mentioned in the song. These processes can't take very long given the timelines.

The London subway was private and returned enough profit to slowly expand while it was coal powered. Once it electrified, it became more profitable and expanded quickly.

The Baltimore tunnel was and is part of an intercity line that is mostly above ground. It was technologically similar to London, but operationally very different.

I chose the start date of 1866 because that is the first time the New York Senate appointed a committee to study rapid transit in New York, which concluded that New York would be best served by an underground railroad. It's also the start date that Katz uses.

The technology was available. London opened its first subway line in 1863. There is a 1.4 mi railroad tunnel from 1873 in Baltimore that is still in active use today. These early tunnels used steam engines. This did cause ventilation challenges, but they were resolvable. The other reasonable pre-electricity option would be to have stationary steam engines at a few places, open to the air, that pulled cables that pulled the trains. There were also some suggestions of dubious power mechanisms, like the one you described here. None of the options were as good as electric trains, but some of them could have been made to work.

This is not a global technological overhang, because there continued to be urban railroad innovation in other cities. It would only be overhang for New York City. This is a more restrictive definition of overhang than I used in my previous post, but it might still be interesting to see what happened with local overhang.

The original version of the song reads to me as being deist or pantheist. You could replace 'God' with 'Nature' and the meaning would be almost the same. My view of Divinely Guided Evolution has a personal God fiddling with random mutations and randomly determined external factors to create the things He wants.

It is definitely anti-Young-Earth-Creationism, but it is also dismissive of the Bible. Even if you don't think that Genesis 1 should be treated as a chronology, I think that you should take the Bible seriously. Its commentary on what it means to be human is important.

Many of these seem reasonable. The "book of names" sounds to me like the Linnaean taxonomy, while the "book of night" sounds like astronomical catalogues. I don't know as much about geology, but the "book of earth" could be geological surveys.

This kind of science is often not exciting. Rutherford referred to it as "stamp collecting." It is very useful for the practice of future generations of scientists. For example, if someone wants to do a survey of various properties of binary star systems, they don't have to find a bunch of examples themselves (and worry about selection effects) because someone else has already done it and listed them in a catalogue. It is nice to celebrate this kind of thankless work.

The closing lines are weird: "Humans write the book of truth... Truth writes the world." This sounds like constructivist epistemology. The rest of the song has empiricist epistemology: Truth is determined by the external world, not written by humans. Maybe something like "Humans can read the book of truth.... Truth comes from the world." (Although this adds syllables...)

If it were done at Lighthaven, it would have to be done outdoors. This does present logistical problems.

I would guess that making Lighthaven's outdoor space usable even if it rains would cost much less (an order of magnitude?) than renting out an event space, although it might cost other resources like planning time that are in more limited supply.

If Lighthaven does not want to subsidize Solstice, or have the space reserved a year in advance, then that would make this option untenable.

It's also potentially possible to celebrate Solstice in January, when event spaces are more available.

Staggering the gathering in time also works. Many churches repeat their Christmas service multiple times over the course of the day, to allow more people to come than can fit in the building.

There's another reason for openness that I should have made clearer. Hostility towards Others is epistemically and ethically corrosive. It makes it easier to dismiss people who do agree with you, but have different cultural markers. If a major thing that unifies the community is hostility to an outgroup, then it weakens the guardrails against actions based on hate or spite. If you hope to have compassion for all conscious creatures, then a good first step is to try to have compassion for the people close to you who are really annoying.

Christianity seems to be unusually open to everyone, but I think this is partially a side effect of evangelism.

I endorse evangelism broadly. If you think that your beliefs are true and good, then you should be trying to share them with more people. I don't think that this openness should be unusual, because I'd hope that most ideologies act in a similar way.

So I think the direction in which you would want Solstice to change -- to be more positive towards religion, to preach humility/acceptance rather than striving/heroism -- is antithetical to one of Solstice's core purposes.

While I would love to see the entire rationalist community embrace the Fulness of the Gospel of Christ, I am aware that this is not a reasonable ask for Solstice, and not something I should bet on in a prediction market. While I criticize the Overarching Narrative, I am aware that this is not something that I will change.

My hopes for changing Solstice are much more modest:

- Remove the inessential meanness directed towards religion. There already has been some of this, which is great! Time Wrote the Rocks no longer falsely claims that the Church tortured Galileo. The Ballad of Smallpox Gone no longer has a verse claiming that preachers want to "Screw the body, save the soul // Bring new deaths off the shelves". Now remove the human villains from Brighter Than Today, and you've improved things a lot.

- Once or twice, acknowledge that some of the moral giants whose shoulders we're standing on were Christian. The original underrated reasons to be thankful had one point about Quaker Pennsylvania. Unsong's description of St. Francis of Assisi also comes to mind. If you're interested, I could make several other suggestions of things that I think could be mentioned without disrupting the core purposes of Solstice.

also it’s a lot more work to setup

How hard would it be to project them? There was a screen, and it should be possible to project at least two lines with music large enough for people to read. Is the problem that we don't have sheet music that's digitized in a way to make this feasible for all of the songs?

This is more volunteer-based than I was expecting. I would have guessed that Solstice had a lot of creative work, the choir, and day-of work done by volunteers, but that the organizers and most of the performers were paid (perhaps below market rates). As it is, it is probably more volunteer-based than most Christmas programs.

I'll edit the original post to say that this suggestion is already being followed.

This kind of situation is dealt with in Quine's Two Dogmas of Empiricism, especially the last section, "Empiricism Without the Dogmas." This is a short (~10k words), straightforward, and influential work in the philosophy of science, so it is really worth reading the original.

Quine describes science as a network of beliefs about the world. Experimental measurements form a kind of "boundary conditions" for the beliefs. Since belief space is larger than the space of experiments which have been performed, the boundary conditions meaningfully constrain but do not fully determine the network.

The totality of our so-called knowledge or beliefs, from the most casual matters of geography and history to the profoundest laws of atomic physics or even of pure mathematics and logic, is a man-made fabric which impinges on experience only along the edges. Or, to change the figure, total science is like a field of force whose boundary conditions are experience. A conflict with experience at the periphery occasions readjustments in the interior of the field.

Some beliefs are closer to the core of the network: changing them would require changing lots of other beliefs. Some beliefs are closer to the periphery: changing them would change your beliefs about a few contingent facts about the world, but not much else.

In this example, the belief in Newton's laws is much closer to the core than the belief in the stability of this particular pendulum.[1]

When an experiment disagrees with our expectations, it is not obvious where the change should be made. It could be made close to the edges, or it could imply that something is wrong with the core. It is often reasonable for science (as a social institution) to prefer changes made in the periphery over changes made in the core. But this is not always the implication the experiment makes.

A particular example that I am fond of involves the perihelion drifts of Uranus and Mercury. By the early 1800s, there was good evidence that the orbits of both planets were different from what Newtonian mechanics predicted. Both problems would be resolved by the mid 1900s, but the resolutions were very different. The unexpected perihelion drift of Uranus was explained by the existence of another planet in our solar system: Neptune. The number of planets in our solar system is a periphery belief: changing it does not require many other beliefs to change. People then expected that Mercury's unexpected perihelion drift would have a similar cause: a yet undiscovered planet close to the sun, which they named Vulcan. This was wrong.[2] Instead, the explanation was that Newtonian mechanics was wrong and had to be replaced by general relativity. Even though the evidence in both cases was the same, they implied that there should be changes made at different places in the web of beliefs.

- ^

Also, figuring things out in hindsight is totally allowed in science. Many of our best predictions are actually postdictions. Predictions are more impressive, but postdictions are evidence too.

The biggest problem these students have is being too committed to not using hindsight.

- ^

I would say that this planet was not discovered, except apparently in 1859 a French physician / amateur astronomer named Lescarbault observed a black dot transiting the sun which looked like a planet with an orbital period of 19 days.

I would say that this observation was not replicated, except it was. Including by professional astronomers (Watson & Swift) who had previously discovered multiple asteroids and comets. It was not consistently replicated, and photographs of solar eclipses in 1901, 1905, and 1908 did not show it.

What should we make of these observations?

There's always recourse to extremely small changes right next to the empirical boundary conditions. Maybe Lescarbault, Watson, Swift, & others were mistaken about what they saw. Or maybe they were lying. Or maybe you shouldn't even believe my claim that they said this.

These sorts of dismissals might feel nasty, but they are an integral part of science. Some experiments are just wrong. Maybe you figure out why (this particular piece of equipment wasn't working right), and maybe you don't. Figuring out what evidence should be dismissed, what evidence requires significant but not surprising changes, and what evidence requires you to completely overhaul your belief system is a major challenge in science. Empiricism itself does not solve the problem because, as Quine points out, the web of beliefs is underdetermined by the totality of measured data.

I'm currently leaning towards

- kings and commonwealths and all

Tokamaks have been known for ages. We plausibly have gotten close to the best performance out of them that we could, without either dramatically increasing the size (ITER) or making the magnets significantly stronger. The high temperature superconducting[1] 'tape' that Commonwealth Fusion has pioneered has allowed us to make stronger magnetic fields, and made it feasible to build a fusion power plant using a tokamak the size of JET.

After SPARC, Commonwealth Fusion plans to build ARC, which should actually ship electricity to customers. ARC should have a plasma energy gain of Q~13, an engineering energy gain of about 3, and produce about 250 MWe. They haven't made any public commitments about when they expect ARC to be built and selling electricity to the grid, but there has been some talk about the early 2030s.[2]

- ^

The higher temperature is not really what we care about. What we really want is higher magnetic field. These two properties go together, so we talk about 'high temperature superconductors', even if we're planning on running it at the same temperature as before and making use of the higher magnetic fields.

- ^

You don't need to have any insider information to make this estimate. Construction of SPARC is taking about 4 years. If we start when SPARC achieves Q>5 (2026?), add one year to do the detailed engineering design for ARC, and then 4 years to construct ARC, and maybe a year of initial experiments to make sure it works as expected, then we're looking at something around 2032. You might be able to trim this timeline a bit and get it closer to 2030, or some of these steps might take longer. Conditional on SPARC succeeding at Q>5 by 2028, it seems pretty likely that ARC will be selling electricity to the grid by 2035.
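The estimate in this footnote is just addition, so it can be sketched in a few lines. The step lengths are the ones given above; the function name `arc_grid_year` and its parameter names are my own labels for illustration, and each step could easily be off by a year or more.

```python
def arc_grid_year(sparc_q5_year,
                  design_years=1,         # detailed engineering design for ARC
                  construction_years=4,   # assumed similar to SPARC's construction time
                  commissioning_years=1): # initial experiments before selling power
    """Rough year in which ARC might start selling electricity to the grid."""
    return sparc_q5_year + design_years + construction_years + commissioning_years


baseline = arc_grid_year(2026)  # SPARC reaches Q>5 in 2026
late = arc_grid_year(2028)      # SPARC reaches Q>5 in 2028

print(baseline)  # 2032
print(late)      # 2034
```

Even the later starting point lands before 2035, which is why the conditional claim above leaves a little slack for individual steps to run long.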

OpenAI has to face off against giants like Google and Facebook, as well as other startups like Anthropic. There are dozens of other organizations in this space, although most are not as competitive as these.

Commonwealth Fusion has to face off against giants like ITER (funding maybe $22B, maybe $65B, estimates vary) and the China National Nuclear Corporation (building CFETR at ?? cost, while a much smaller experiment in China cost ~$1B), as well as other startups like Helion. The Fusion Industry Association has 37 members, which are all private companies trying to get fusion.

There's probably currently more private investment in AI, and more public investment in fusion. Many of the investments are not publicly available, so a direct comparison between the entire fields is difficult. I choose to focus on two startups with available data that seem to be leading in their respective fields.

I thought about including valuation in the table as well, but decided against it:

- I'm not sure how accurate startup valuations are. It may be less clear how to interpret what the funding received means, but the number is easier to measure accurately.

- These are young companies, so the timing of the valuation matters a lot. OpenAI's valuation is recent, or 8 years after the company was founded. Commonwealth Fusion's valuation is from 2 years ago, or 4 years after the company was founded. If each had multiple valuations, then I would have made a graph like Figure 1 for this.

The cost to build a tokamak that is projected to reach Q~10 has fallen by more than a factor of 10 in the last 6 years. CFS is building for $2B what ITER is building for maybe $22B, maybe $65B (cost estimates vary).
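As a quick check on the "factor of 10" claim, here is the ratio for both ends of the ITER cost range quoted above. The variable names are mine, and the dollar figures are the rough ones from this comment, not audited numbers.

```python
cfs_cost_billion = 2.0  # CFS figure quoted above
iter_cost_estimates_billion = {"low": 22.0, "high": 65.0}  # estimates vary

# Ratio of ITER's estimated cost to the CFS cost, for each estimate.
cost_ratios = {
    label: estimate / cfs_cost_billion
    for label, estimate in iter_cost_estimates_billion.items()
}

for label, ratio in cost_ratios.items():
    print(f"{label} estimate: ITER costs about {ratio:.1f}x as much")
```

The low estimate gives a factor of 11 and the high estimate a factor of 32.5, so "more than a factor of 10" holds across the whole range.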

It's really not clear what the cost of fusion will end up being once it becomes mass produced.

Helion has raised a similar amount of capital as Commonwealth: $2.2B. Helion also has hundreds of employees: their LinkedIn puts them in the 201-500 employees category. It was founded in 2013, so it is a bit older than CFS or OpenAI.

My general sense is that there's more confidence in the plasma physics community that CFS will succeed than that Helion will succeed.

SPARC is a tokamak, and tokamaks have been extensively studied. SPARC is basically JET with a stronger magnetic field, and JET has been operational since the 1980s and has achieved Q=0.67. It's only a small extrapolation to say that SPARC can get Q>1. Getting to Q~10 involves more extrapolating of the empirical scaling laws and trusting numerical simulations, but these are scaling laws and simulations that much of the plasma physics community has been working on for decades.

Helion uses a different design. This design has been tested much less, and far fewer plasma physicists have worked on it. They also haven't published as much data from their most recent experiment: last time I checked, they had published the temperature they had reached on Trenta, but not the density or confinement time. Maybe the unpublished results are really good, and suggest that the scaling that has worked so far will continue to work for Polaris, but maybe they're not. It's plausible that Polaris will get Q>1 when it is built (planned for 2024), but I'm not as confident about it.

Also, Helion uses D-He3 rather than D-T. This means that they produce far fewer and less energetic neutrons, but it means that their targets for temperature and density / confinement time are an order of magnitude higher. Even if you think D-He3 is better in the long term (and it's not clear that it is), using D-T for initial experiments is easier.

I have now looked into this example, and talked to Bean at Naval Gazing about it.

I found data for the total tonnage in many countries' navies from 1865-2011. It seems to show overhang for the US navy during the interwar years, and maybe also for the Italian navy, but not for any of the other great powers.

Bean has convinced me that this data is not to be trusted. It does not distinguish between warships and auxiliary ships, or between ships in active duty and in reserve. It has some straightforward errors in the year the last battleship was decommissioned and the first nuclear submarine was commissioned. It seems to be off by a factor of 10 at some points. There seems to not be a dataset covering this time period that is even accurate to within a factor of 2 for me to do this analysis with.

Even if the data were reliable, it's not clear how relevant they would be. It seems as though the total tonnage in the navy of an industrialized country is mostly determined by the international situation, rather than anything about the technology itself. If the trend is going up, that mostly means that the country is spending a larger fraction of its GDP on the navy.

I don't think that this changes the conclusions of the post much. Overhang is uncommon. It happened partially for land speed records, but it was not sustained and this is not a technology that people put much effort into. Something that looks sort of similar might have happened with the US navy during the interwar period, but not for the navies of any of the other great powers, and the underlying dynamics seem to be different.

The examples are things that look sort of like overhang, but are different in important ways. I did not include the hundreds of graphs I looked through that look nothing like overhang.

Your post reads, to me, as saying, "Better algorithms in AI may add new s-curves, but won't jump all the way to infinity, they'll level off after a while."

The post is mostly not about either performance s-curves or market size s-curves. It's about regulation imposing a pause on AI development, and whether this would cause catch-up growth if the pause is ended.

Stacked s-curves can look like a pause + catch-up growth, but they are a different mechanism.

The Soviet Union did violate the Biological Weapons Convention, which seems like an example of "an important, binding, ratified arms treaty." It did not lead to nuclear war.

I did not look at the Washington Naval Conference as a potential example. It seems like it might be relevant. Thank you!

It seems to me that governments now believe that AI will be significant, but not extremely advantageous.

I don't think that many policy makers believe that AI could cause GDP growth of 20+% within 10 years. Maybe they think that powerful AI would add 1% to GDP growth rates, which is definitely worth caring about. It wouldn't be enough for any country which developed it to become the most powerful country in the world within a few decades, and would be an incentive in line with some other technologies that have been rejected.
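To put a rough number on the 1% case, here is a minimal sketch of the compounding arithmetic. The 2% baseline growth rate and the 30-year horizon are illustrative assumptions of mine, not figures from any policy document, and `relative_gdp_ratio` is a hypothetical helper name.

```python
def relative_gdp_ratio(baseline_growth: float, extra_growth: float, years: int) -> float:
    """Ratio of GDP with the extra growth to GDP without it, after `years`.

    Both economies start at the same size; one grows at `baseline_growth`
    per year, the other at `baseline_growth + extra_growth`.
    """
    boosted = (1 + baseline_growth + extra_growth) ** years
    baseline = (1 + baseline_growth) ** years
    return boosted / baseline


# Assumed numbers: 2% baseline growth, one extra percentage point, 30 years.
ratio = relative_gdp_ratio(baseline_growth=0.02, extra_growth=0.01, years=30)
print(f"Relative GDP after 30 years: {ratio:.2f}x")
```

Under these assumptions the boosted economy ends up about a third larger than it otherwise would have been. That is worth caring about, but it does not by itself reorder the great powers.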

The UK has AI as one of their "priority areas of focus", along with quantum technologies, engineering biology, semiconductors and future telecoms in their International Technology Strategy. In the UK's overall strategy document, 'AI' is mentioned 15 times, compared to 'cyber' (45 times), 'nuclear' (43), 'energy' (37), 'climate' (30), 'space' (17), 'health' (15), 'food' (8), 'quantum' (7), 'green' (6), and 'biology' (5). AI is becoming part of countries' strategies, but I don't think it's at the forefront. The UK government is more involved in AI policy than most governments.

The impression of incuriosity is probably just because I collapsed my thoughts into a few bullet points.

The causal link between human intelligence and neurons is not just because they're both complicated. My thought process here is something more like:

- All instances of human intelligence we are familiar with are associated with a brain.

- Brains are built out of neurons.

- Neurons' dynamics look very different from the dynamics of bits.

- Maybe these differences are important for some of the things brains can do.

It feels pretty plausible that the underlying architecture of brains is important for at least some of the things brains can do. Maybe we will see multiple realizability where similar intelligence can be either built on a brain or on a computer. But we have not (yet?) seen that, even for extremely simple brains.

I think both that we do not know how to build a superintelligence and that if we knew how to model neurons, silicon chips would run it extremely slowly. Both things are missing.

Brains do these kinds of things because they run algorithms designed to do these kinds of things.

If by 'algorithm', you mean thing-that-does-a-thing, then I think I agree. If by 'algorithm', you mean thing-that-can-be-implemented-in-python, then I disagree.

Perhaps a good analogy comes from quantum computing.* Shor's algorithm is not implementable on a classical computer. It can be approximated by a classical computer, at very high cost. Qubits are not bits, or combinations of bits. They have different underlying dynamics, which makes quantum computers importantly distinct from classical computers.

The claim is that the brain is also built out of things which are dynamically distinct from bits. 'Chaos' here is being used in the modern technical sense, not in the ancient Greek sense to mean 'formless matter'. Low dimensional chaotic systems can be approximated on a classical computer, although this gets harder as the dimensionality increases. Maybe this grounds out in some simple mesoscopic classical system, which can be easily modeled with bits, but it seems likely to me that it grounds out in a quantum system, which cannot.

* I'm not an expert in quantum computing, so I'm not super confident in this analogy.
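To make the technical sense of 'chaos' used above concrete, here is a minimal sketch using the logistic map, a standard one-dimensional chaotic system. It is my own illustrative choice and is not meant as a model of neurons. The function name, starting values, and step count are all assumptions. The rule is fully deterministic and trivial to run on a classical computer, yet two trajectories that start a tiny distance apart stop resembling each other after a few dozen steps.

```python
def logistic_trajectory(x0: float, steps: int, r: float = 4.0) -> list[float]:
    """Iterate the logistic map x -> r * x * (1 - x), starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


# Two starting points that differ by one part in ten billion (assumed values).
a = logistic_trajectory(0.2, steps=60)
b = logistic_trajectory(0.2 + 1e-10, steps=60)

# Gap between the two trajectories at each step.
gaps = [abs(x - y) for x, y in zip(a, b)]
print(f"initial gap: {gaps[0]:.1e}")
print(f"largest gap over 60 steps: {max(gaps):.2f}")
```

Each step is cheap to compute. What is hard is keeping the simulation close to a specific real system over long times, because any error in the initial state grows roughly exponentially.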

I don't believe that "current AI is at human intelligence in most areas". I think that it is superhuman in a few areas, within the human range in some areas, and subhuman in many areas - especially areas where the things you're trying to do are not well specified tasks.

I'm not sure how to weight people who think most about how to build AGI vs more general AI researchers (median says HLAI in 2059, p(Doom) 5-10%) vs forecasters more generally. There's a difference in how much people have thought about it, but also selection bias: most people who are skeptical of AGI soon are likely not going to work in alignment circles or an AGI lab. The relevant reference class is not the Wright Brothers, since hindsight tells us that they were the ones who succeeded. One relevant reference class is the Society for the Encouragement of Aerial Locomotion by means of Heavier-than-Air Machines, founded in 1863, although I don't know what their predictions were. It might also make sense to include many groups of futurists focusing on many potential technologies, rather than just on one technology that we know worked out.

From Yudkowsky's description of the AI-Box Experiment:

The Gatekeeper party may resist the AI party’s arguments by any means chosen – logic, illogic, simple refusal to be convinced, even dropping out of character – as long as the Gatekeeper party does not actually stop talking to the AI party before the minimum time expires.

One of the tactics listed on RationalWiki's description of the AI-box experiment is:

Jump out of character, keep reminding yourself that money is on the line (if there actually is money on the line), and keep saying "no" over and over

The Lord of the Rings tells us that the hobbit’s simple notion of goodness is more effective at resisting the influence of a hostile artificial intelligence than the more complicated ethical systems of the Wise.

The miscellaneous quotes at the end are not directly connected to the thesis statement.

In practice, smoothness interacts with measurement: we can usually measure the higher-order bits without measuring lower-order bits, but we can’t easily measure the lower-order bits without the higher-order bits. Imagine, for instance, trying to design a thermometer which measures the fifth bit of temperature but not the four highest-order bits. Probably we’d build a thermometer which measured them all, and then threw away the first four bits! Fundamentally, it’s because of the informational asymmetry: higher-order bits affect everything, but lower-order bits mostly don’t affect higher-order bits much, so long as our functions are smooth. So, measurement in general will favor higher-order bits.

There are examples of measuring lower-order bits without measuring higher-order bits. If something is valuable to measure, there's a good chance that someone has figured out a way to measure it. Here is the most common example of this that I am familiar with:

When dealing with lasers, it is often useful to pass the laser through a beam splitter, so part of the beam travels along one path and part of the beam travels along a different path. These two beams are often brought back together later. The combination might have either constructive or destructive interference. It has constructive interference if the difference in path lengths is an integer multiple of the wavelength, and destructive interference if the difference in path lengths is a half-integer multiple of the wavelength. This allows you to measure changes in the difference in path lengths, without knowing how many wavelengths long either path is.

One place this is used is in LIGO. LIGO is an interferometer with two multi-kilometer-long arms. It measures extremely small ($10^{-19}$ m) changes in the difference between the two arm lengths caused by passing gravitational waves.
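The interference argument can be sketched numerically. This is an idealized toy model, assuming two equal-amplitude beams and perfect coherence, in which the recombined intensity is $\cos^2(\pi \Delta / \lambda)$ for a path difference $\Delta$. The function name and the 1064 nm wavelength are illustrative assumptions.

```python
import math


def recombined_intensity(path_difference_m: float, wavelength_m: float) -> float:
    """Relative intensity (0 to 1) of two equal beams recombined after a path difference.

    Idealized model: I = cos^2(pi * path_difference / wavelength).
    """
    phase = math.pi * path_difference_m / wavelength_m
    return math.cos(phase) ** 2


WAVELENGTH_M = 1064e-9  # assumed laser wavelength, for illustration only

# The same quarter-wavelength offset, with and without 1000 extra whole wavelengths.
small = recombined_intensity(0.25 * WAVELENGTH_M, WAVELENGTH_M)
large = recombined_intensity(1000.25 * WAVELENGTH_M, WAVELENGTH_M)
print(small, large)
```

The two printed intensities agree up to floating-point error. The detector only sees the path difference modulo the wavelength, which is the low-order information, and learns nothing about how many whole wavelengths (the high-order information) separate the two paths.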

It seems like your comment is saying something like:

These restrictions are more relevant to an Oracle than to other kinds of AI.

Unfortunately, decisions about units are made by a bunch of unaccountable bureaucrats. They would rather define the second in terms that only the techno-aristocracy can understand instead of using a definition that everyone can understand. It's time to turn control over our systems of measurement back to the people!

#DemocratizeUnits

Adding a compass is unlikely to also make the bird disoriented when exposed to a weak magnetic field which oscillates at the right frequency. Which means that the emulated bird will not behave like the real bird in this scenario.

You could add this phenomenon in by hand. Attach some detector to your compass and have it turn off the compass when these fields are measured.

More generally, adding in these features ad hoc will likely work for the things that you know about ahead of time, but is very unlikely to work like the bird outside of its training distribution. If you have a model of the bird that includes the relevant physics for this phenomenon, it is much more likely to work outside of its training distribution.