Frames in context

post by Richard_Ngo (ricraz) · 2023-07-03T00:38:52.078Z · LW · GW · 9 comments

In my previous post [LW · GW], I introduced meta-rationality and frames, and described some examples of frames and some of their properties. In this post I’ll outline some of the limitations of existing ways of thinking about cognition, and some of the dynamics that they can’t describe which meta-rationality can. This post (especially the second half) can be seen as a summary of the key ideas from the rest of the sequence; if you find it too dense, feel free to skip it and come back after reading the next five posts. To quickly list my main claims:

- Unlike logical propositions, frames can’t be evaluated as discretely true or false.

- Unlike Bayesian hypotheses, frames aren’t mutually exclusive, and can overlap with each other. This (along with point #1) means that we can’t define probability distributions of credences over frames.

- Unlike in critical rationalism, we evaluate frames (partly) in terms of how true they are (based on their predictions) rather than just whether they’ve been falsified or not.

- Unlike Garrabrant traders and Rational Inductive Agents, frames can output any combination of empirical content (e.g. predictions about the world) and normative content (e.g. evaluations of outcomes, or recommendations for how to act).

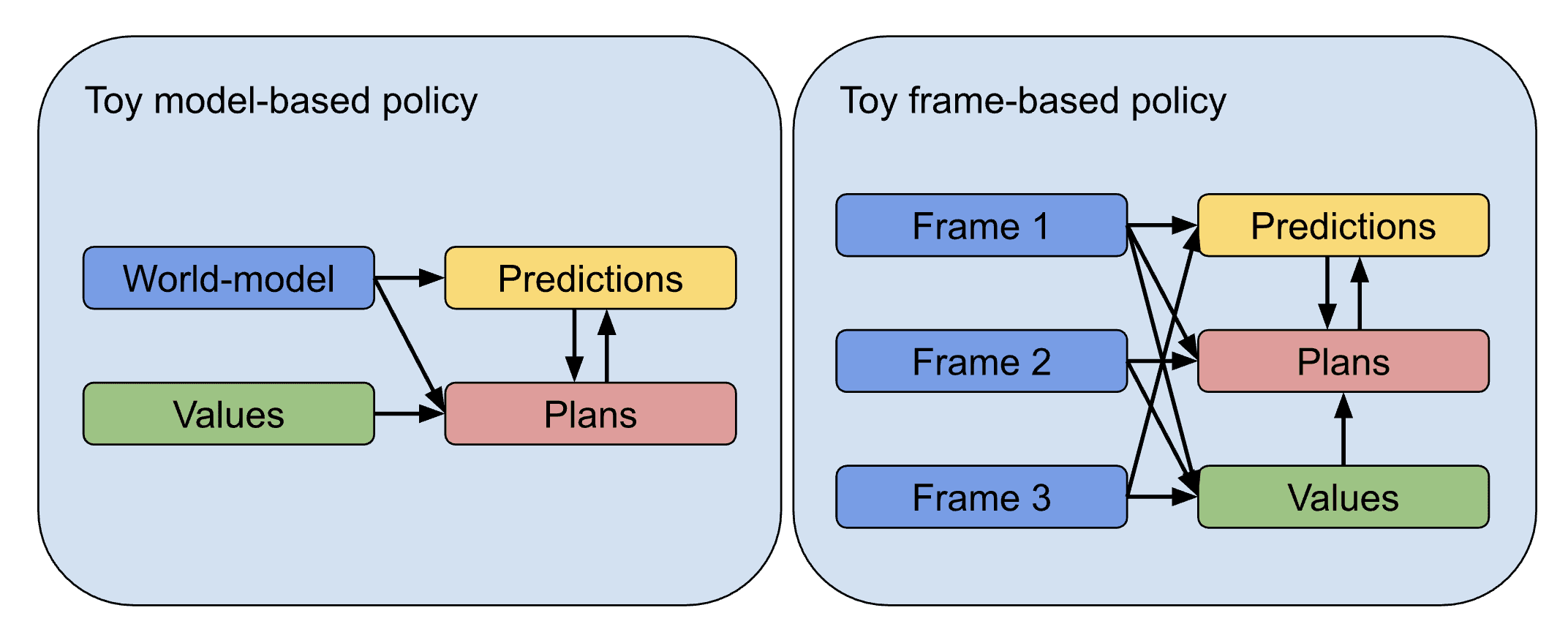

- Unlike model-based policies, policies composed of frames can’t be decomposed into modules with distinct functions, because each frame plays multiple roles.

- Unlike in multi-agent RL, frames don’t interact independently with their environment, but instead contribute towards choosing the actions of a single agent.

I’ll now explain these points in more detail. Epistemology typically focuses on propositions which can (at least in principle) be judged true or false. Traditionally, truth and knowledge are both taken as binary criteria: each proposition is either true or false, and we either know which it is or we don’t. Intuitively speaking, though, this doesn’t match very well with our everyday experience. There are many propositions which are kinda true, or which we kinda know: cats are (mostly) carnivorous (I think); Bob is tall(ish, if I’m looking at the right person); America is beautiful (in some ways, by my current tastes).

The most straightforward solution to the problem of uncertainty is to assign credences based on how much evidence we have for each proposition. This is the bayesian approach, which solves a number of “paradoxes” in epistemology. But there’s still the question: what are we assigning credences to, if not to the proposition being discretely true or false? You might think that we can treat propositions which are “kinda true” (aka fuzzily true) as edge cases—but they’re omnipresent not only in everyday life, but also when thinking about more complex topics. Consider a scientific theory like Darwinian evolution. Darwin got many crucial things right, when formulating his theory; but there were also many gaps and mistakes. So applying a binary standard of truth to the theory as a whole is futile: even though some parts of Darwin’s original theory were false or too vague to evaluate, the overall theory was much better than any other in that domain. The mental models which we often use in our daily lives (e.g. our implicit models of how bicycles work), and all the other examples of frames I listed at the beginning of this post, can also be seen as “kinda but not completely true”. (From now on I’ll use “models” as a broad term which encompasses both scientific theories and informal mental models.)

Not being “completely true” isn’t just a limitation of our current models, but a more fundamental problem. Perhaps we can discover completely true theories in physics, mathematics, or theoretical CS. But in order to describe high-level features of the real world, it’s always necessary to make simplifying assumptions and use somewhat-leaky abstractions, and so those models will always face a tradeoff between accuracy and usefulness. For example, Newtonian mechanics is less true than Einsteinian relativity, but still “close enough to true” that we use it in many cases, whereas Aristotelian physics isn’t.
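To make "close enough to true" concrete, here is a small sketch comparing Newtonian and relativistic kinetic energy at different speeds. The function name and the chosen speeds are illustrative assumptions rather than anything from the post; the formulas themselves are standard physics.

```python
import math

def newtonian_relative_error(beta: float) -> float:
    """Fraction by which Newtonian kinetic energy underestimates the
    relativistic value, for a speed v = beta * c (0 < beta < 1)."""
    gamma = 1.0 / math.sqrt(1.0 - beta ** 2)
    relativistic = gamma - 1.0      # kinetic energy in units of m * c^2
    newtonian = 0.5 * beta ** 2     # same units
    return 1.0 - newtonian / relativistic

# Illustrative speeds, expressed as fractions of the speed of light.
for beta in (0.01, 0.1, 0.5, 0.9):
    print(f"v = {beta:>4}c  relative error = {newtonian_relative_error(beta):.2%}")
```

At everyday speeds the error is negligible, which is the sense in which the Newtonian model is less true but still usable; the error grows smoothly as speeds approach c.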

I’m implicitly appealing here to the idea that we can categorize and compare “how true” different models are. This often makes intuitive sense—as Isaac Asimov put it: “When people thought the earth was flat, they were wrong. When people thought the earth was spherical, they were wrong. But if you think that thinking the earth is spherical is just as wrong as thinking the earth is flat, then your view is wronger than both of them put together.” We could also treat uncertainty over wrongness from a bayesian perspective: e.g. we might place a high credence on our current understanding of evolution being fairly close to the truth, and low credences on it being almost entirely true or mostly false. And it also applies to more prosaic examples: e.g. it seems reasonable to expect that my mental model of a close friend Alice (including things like her appearance, life story, personality traits, etc) is probably more accurate than my model of Alice’s partner Bob, but unlikely to be more accurate than Bob’s model of Alice.

Unfortunately, even if we accept continuous degrees of truth in theory, it turns out to be very hard to describe clearly what it means for a model to have a given degree of truth, or to be more or less true than another model. Philosophers of science have searched extensively for a principled account of this, especially in the case where two models predict all the same phenomena. But I think the point is better illustrated by comparing two models which both make accurate predictions, but where the questions they predict don’t fully overlap. For example, one model might have made more accurate predictions overall, but skew towards trivial predictions. Even if we excluded those, models would still have better average accuracy if they only made predictions that they were very confident about. Concretely, imagine an economist and an environmentalist debating climate change. The economist might do much better at predicting the specific consequences of many policies; the environmentalist might focus instead on a few big predictions, like “these measures won’t keep us below 450 ppm of atmospheric carbon dioxide” or “we’ll need significant cultural or technological progress to solve climate change”, and think that most of the metrics the economist is forecasting are basically irrelevant.
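The selection effect described above can be illustrated with a toy calculation. This is a sketch under invented assumptions: the forecasts and outcomes below are made up, the 0.3 confidence threshold is arbitrary, and the Brier score is only one of several possible accuracy measures.

```python
def brier(forecasts):
    """Mean squared error between stated probabilities and 0/1 outcomes
    (lower is better)."""
    return sum((p - outcome) ** 2 for p, outcome in forecasts) / len(forecasts)

# Hypothetical (probability, outcome) pairs for one forecaster.
all_forecasts = [
    (0.95, 1), (0.90, 1), (0.05, 0),   # easy, confident calls
    (0.60, 0), (0.55, 1), (0.40, 1),   # hard, uncertain calls
]

# The same forecaster, reporting only the calls they were confident about.
confident_only = [(p, o) for p, o in all_forecasts if abs(p - 0.5) >= 0.3]

print("all questions: ", round(brier(all_forecasts), 3))
print("confident only:", round(brier(confident_only), 3))
```

The forecaster's underlying judgment is identical in both rows; only the choice of which questions to answer differs. That is one reason average accuracy alone is a poor measure of how true a model is.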

In cases like these, the idea that we can compare multiple models on a single scale of “truth” is much less compelling. What alternatives do we have? One is given by critical rationalism, an epistemology developed by Karl Popper and, later, David Deutsch. Critical rationalism holds that we don’t ever have reasons to accept a theory as true; we can only ever reject theories after they’ve been falsified. However, there are at least two reasons to be skeptical of this position. Firstly, the claim that there’s no reason to believe more strongly in models which have made good predictions is very counterintuitive (as I argue more extensively in post #5). Secondly, critical rationalism is very much focused on science, where it’s common for new theories to conclusively displace old ones, sometimes on the basis of only a few datapoints. But other domains are much less winner-takes-all. In the example above, the economist and the environmentalist probably each have some good points and also some big mistakes or oversights. Our primary aim is not to discard the one that’s less true, but rather to form reasonable judgments by taking both perspectives into account.

Garrabrant induction provides a framework for doing so, by thinking of models as traders on a prediction market, who buy and sell shares in a set of propositions. Traders accrue wealth by “beating the market” in predicting which propositions will turn out to be true. Intuitively speaking, this captures the idea that successful predictions should be rewarded in proportion to how surprising they are—meaning that it matters less what the specific questions are, since a trader only makes money by beating existing predictions, and makes more money the more it beats. A trader’s wealth can therefore be seen as an indicator of how true it is compared with the other models it’s trading against.[1]
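The intuition that a trader only profits by beating the market can be sketched with a toy, single-proposition market. This is not the Garrabrant induction algorithm itself: the function names, the wealth-weighted price rule, and the `stake_fraction` parameter are all simplifying assumptions made for illustration.

```python
def market_price(wealth, beliefs):
    """Wealth-weighted average of the traders' probabilities (an assumed
    pricing rule, chosen for simplicity)."""
    total = sum(wealth.values())
    return sum(wealth[t] * beliefs[t] for t in wealth) / total

def settle(wealth, beliefs, outcome, stake_fraction=0.5):
    """Return each trader's wealth after one proposition resolves.

    Each trader buys (or sells, if negative) shares in proportion to its
    wealth and to its disagreement with the market price. A share pays
    `outcome` (1 if true, 0 if false) and costs the market price.
    """
    price = market_price(wealth, beliefs)
    updated = {}
    for trader, w in wealth.items():
        shares = stake_fraction * w * (beliefs[trader] - price)
        updated[trader] = w + shares * (outcome - price)
    return updated

# Hypothetical traders with equal starting wealth.
wealth = {"bold": 1.0, "cautious": 1.0, "contrarian": 1.0}
beliefs = {"bold": 0.9, "cautious": 0.6, "contrarian": 0.3}
print(settle(wealth, beliefs, outcome=1))
```

In this toy version a trader whose belief matches the price neither gains nor loses, whatever the question was, and gains grow with how far a correct trader sat from the price. Total wealth is conserved, so one trader's profit is another's loss.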

Garrabrant traders capture many key intuitions about how frames work—but unlike most of the examples of frames above, they don’t directly influence actions, only beliefs. In other words, the traders in a classic Garrabrant inductor only trade on epistemic, not normative, claims. However, a variant of Garrabrant inductors called Rational Inductive Agents (RIAs) has been defined where the traders make bids on a decision market rather than a prediction market. My current best guess for how to formalize frames is as a combination of Garrabrant inductors, RIAs, and some kind of voting system: traders would earn wealth from a combination of making predictions and directly influencing decisions, and then spend that wealth to determine which values should be used as evaluation criteria for the decisions (as I’ll explore in post #6; see also the diagram below).

The idea of a single system which makes predictions, represents values, and makes decisions is common in the field of model-based reinforcement learning. Model-based RL policies often use a world-model to predict how different sequences of actions will play out, and then evaluate the quality of the resulting trajectories using a reward model (or some other representation of goals or values). However, crucially, these model-based policies are structured so that each module has a distinct role—as in the (highly simplified) diagram on the left. By contrast, a (hypothetical) frame-based policy would be a type of ensemble where each frame has multiple different types of output—as in the diagram on the right.[2] This distinction is important because in general we can’t cleanly factorize a frame into different components. In particular, both a frame’s empirical and its normative claims typically rely on the same underlying ontology, so that it’s hard to change one without changing the other.
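The structural contrast can be sketched in code. The post gives no formalization of a frame-based policy, so everything here is hypothetical: the `Frame` class, the fixed weights, and the weighted-average aggregation rule are assumptions chosen only to show frames that each carry several output types, some of which may be absent.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Frame:
    """One frame: any subset of the three output types may be missing."""
    name: str
    weight: float
    predict: Optional[Callable[[str], float]] = None   # P(claim is true)
    evaluate: Optional[Callable[[str], float]] = None  # how good an outcome is
    recommend: Optional[Callable[[str], str]] = None   # suggested action

def weighted_average(frames, attr, arg):
    """Average one numeric output type over the frames that provide it.
    Assumes at least one frame provides that output."""
    voters = [f for f in frames if getattr(f, attr) is not None]
    total = sum(f.weight for f in voters)
    return sum(f.weight * getattr(f, attr)(arg) for f in voters) / total

# Hypothetical frames with made-up constant outputs.
economist = Frame("economist", 2.0,
                  predict=lambda claim: 0.7,
                  evaluate=lambda outcome: 0.4)
environmentalist = Frame("environmentalist", 1.0,
                         predict=lambda claim: 0.2,
                         evaluate=lambda outcome: 0.9,
                         recommend=lambda situation: "cut emissions")
frames = [economist, environmentalist]

print(weighted_average(frames, "predict", "policy X meets its target"))
print(weighted_average(frames, "evaluate", "policy X is adopted"))
```

Unlike a model-based policy, there is no single world-model or reward-model object here: predictions and evaluations both come from the same frames, and a frame such as the hypothetical `economist` can simply abstain from recommending actions.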

The way I’ve portrayed frames here makes them seem in some ways like agents in their own right—which suggests that we could reason about the interactions between them using concepts from multi-agent RL, economics, politics, and other social sciences. I do think this is a useful perspective on frames, and will draw on it more strongly in the second half of this sequence. But a first-pass understanding of frames should focus on the fact that they’re controlling the predictions, plans and values of a single agent, rather than being free to act independently.[3]

As a final point: this section focused on ways in which frames are more general than concepts used in other epistemologies. However, the broader our conception of frames, the harder it is to identify how meta-rationality actually constrains our expectations or guides our actions. So this post should be seen mainly as an explanation for why there’s potentially a gap to be filled—but the question of whether meta-rationality actually fills that gap needs to be answered by subsequent posts.

- ^ [1] It may seem weird for this indicator to depend on the other models that already exist, but I think that’s necessary if we lack a way to compare models against each other directly.

- ^ [2] Note that in this diagram not all of the frames are weighing in on all three of predictions, plans and values. This is deliberate: as I’ve defined frames, they don’t need to have opinions on everything.

- ^ [3] This helps reconcile meta-rationality with Feyerabend’s epistemological anarchism, which claims that there are no rules for how scientific progress should be made. They are consistent in the sense that “anything goes” when constructing frames: frame construction often happens in chaotic or haphazard ways (as I’ll explore further in post #4). But meta-rationality then imposes restrictions on what frames are meant to do, and how we evaluate them.

9 comments

Comments sorted by top scores.

comment by Jan_Kulveit · 2023-07-04T08:57:16.621Z · LW(p) · GW(p)

So far it seems like you are broadly reinventing concepts which are natural and understood in predictive processing and active inference.

Here is a rough attempt at a translation / pointer to what you are describing: what you call frames are usually called predictive models or hierarchical generative models in the PP literature.

- Unlike logical propositions, frames can’t be evaluated as discretely true or false.

Sure: predictive models are evaluated based on prediction error, which is roughly a combination of ability to predict outputs of lower level layers, not deviating too much from predictions of higher order models, and being useful for modifying the world.

- Unlike Bayesian hypotheses, frames aren’t mutually exclusive, and can overlap with each other. This (along with point #1) means that we can’t define probability distributions of credences over frames.

Sure: predictive models overlap, and it is somewhat arbitrary where you would draw boundaries of individual models. E.g. you can draw a very broad boundary around a model called microeconomics, and a very broad boundary around a model called Buddhist philosophy, but both models likely share some parts modelling something like human desires.

- Unlike in critical rationalism, we evaluate frames (partly) in terms of how true they are (based on their predictions) rather than just whether they’ve been falsified or not.

Sure: actually science roughly is "cultural evolution rediscovered active inference". Models are evaluated based on prediction error.

- Unlike Garrabrant traders and Rational Inductive Agents, frames can output any combination of empirical content (e.g. predictions about the world) and normative content (e.g. evaluations of outcomes, or recommendations for how to act).

Sure: actually, the "any combination" goes even further. In active inference, there is no strict type difference between predictions about stuff like "what photons hit photoreceptors in your eyes" and stuff like "what should be a position of your muscles". Recommendations how to act are just predictions about your actions conditional of wishful oriented beliefs about future states. Evaluations of outcomes are just prediction errors between wishful models and observations.

- Unlike model-based policies, policies composed of frames can’t be decomposed into modules with distinct functions, because each frame plays multiple roles.

Mostly, but this description seems a bit confused. "This has distinct function" is a label you slap on a computation using design stance, if the design stance description is much shorter than the alternatives (e.g. physical stance description). In case of hierarchical predictive models, you can imagine drawing various boundaries around various parts of the system (e.g., you can imagine alternatives of including or not including layers computing edge detection in a model tracking whether someone is happy, and in the other direction you can imagine including and not including layers with some abstract conceptions of hedonic utilitarianism vs. some transcendental purpose). Once you select a boundary, you can sometimes assign "distinct function" to it, sometimes more than one, sometimes "distinct goal", etc. It's just a question of how useful are physical/design/intentional stances.

- Unlike in multi-agent RL, frames don’t interact independently with their environment, but instead contribute towards choosing the actions of a single agent.

Sure: this is exactly what hierarchical predictive models do in PP. All the time different models are competing for predictions about what will happen, or what will do.

Assuming this more or less shows that what you are talking about is mostly hierarchical generative models from active inference, here are more things the same model predicts:

a. Hierarchical generative models are the way people do perception. Predictive error is minimized between a stream of predictions from upper layers (containing deep models like "the world has gravity" or "communism is good") and a stream of errors from the direction of the senses. Given that, what is naively understood as "observations" is ... a more complex phenomenon, where e.g. a leaf flying sideways is interpreted given strong priors like there is gravity pointing downward, and an atmosphere, and given that, the model predicting "wind is blowing" decreases the sensory prediction error. Similarly, someone being taken into custody by the KGB is, under the upstream model of a "soviet communism is good" prior, interpreted as the person likely being a traitor. In this case a competing broad model, "soviet communism is an evil totalitarian dictatorship", could actually predict the same person being taken into custody, just interpreting it as the system prosecuting dissidents.

b. It is possible to look at parts of this modelling machinery wearing an intentional stance hat. If you do this, the system looks like a multi-agent mind [LW · GW], and you can

- derive a bunch of IFC/ICF style of intuitions

- see parts of it as econ interaction or market - the predictive models compete for making predictions, "pay" a complexity cost, are rewarded for making "correct" predictions (correct here meaning minimizing error between the model and the reality, which can include changing the reality, aka pursuing goals)

The main difference from naive/straightforward multi-agent mind models is that the "parts" live within a generative model, and interact with it and through it, not through the world. They don't have any direct access to reality, and compete at the same time for interpreting sensory inputs and predicting actions.

↑ comment by Richard_Ngo (ricraz) · 2023-07-04T10:14:56.137Z · LW(p) · GW(p)

Useful comment, ty! I'm planning to talk about predictive processing in the next two posts, and I do think that predictive processing is a very useful frame here. I will probably edit this post and the previous post too to highlight the connection.

I don't want to fully round off "frames = hierarchical generative models" though, for a couple of reasons.

1. I feel pretty uncertain about the "Recommendations how to act are just predictions about your actions conditional of wishful oriented beliefs about future states. Evaluations of outcomes are just prediction errors between wishful models and observations" thing. Maybe that's true at a low level but it doesn't seem true at the level which makes it useful for doing epistemology. E.g. my high-level frames know the difference between acting and predicting, and use sophisticated planning to do the former. Now, you could say that explicit planning is still "just making a prediction", but I think it's a noncentral example of prediction.

2. Furthermore it seems like the credit assignment for actions needs to work differently from the credit assignment for predictions, otherwise you get the dark room problem.

3. More generally I think hierarchical generative models underrate the "reasoning" component of thinking. E.g. suppose I'm in a dark room and I have a sudden insight that transforms my models. Perhaps you could describe this naturally in HGM terms, but I suspect it's tricky.

So right now I'm thinking of HGMs as "closely related to frames, but with a bunch of connotations that make it undesirable to start talking about them instead of about frames themselves". But I'm still fairly uncertain about these points.

Replies from: Jan_Kulveit

↑ comment by Jan_Kulveit · 2023-07-04T16:43:19.649Z · LW(p) · GW(p)

I broadly agree with something like "we use a lot of explicit S2 algorithms built on top of the modelling machinery described", so yes, what I mean applies more directly to the low level than to humans explicitly thinking about what steps to take.

I think practically useful epistemology for humans needs to deal with both "how is it implemented" and "what's the content". To use an ML metaphor: human cognition is built out of both "trained neural nets" and "chain-of-thought type inferences in language" running on top of such nets. All S2 reasoning is a prediction in a somewhat similar way as all GPT3 reasoning is a prediction - the NN predictor learns how to make "correct predictions" of language, but because the domain itself is a partially symbolic world model, this maps to predictions about the world.

In my view some parts of traditional epistemology are confused in trying to do epistemology for humans basically only at the level of language reasoning, which is a bit like trying to fix LLM cognition just by writing smart prompts, while ignoring that there is this huge underlying computation which does the heavy lifting.

I'm certainly in favour of attempts to do epistemology for humans which are compatible with what the underlying computation actually does.

I do agree you can go too far in the opposite direction, ignoring the symbolic reasoning ... but that seems rare when people think about humans?

2. My personal take on the dark room problem is that, in the case of humans, it is mostly fixed by "fixed priors" on interoceptive inputs. I.e. your body has evolutionarily older machinery to compute hunger. This gets fed into the predictive processing machinery as input, and the evolutionarily sensible belief ("not hungry") gets fixed. (I don't think calling this "priors" was a good choice of terminology...).

This setup at least in theory rewards both prediction and action, and avoids dark room problems for practical purposes: let's assume I have this really strong belief ("fixed prior") I won't be hungry 1 hour in future. Conditional on that, I can compute what are my other sensory inputs half an hour from now. Predictive model of me eating a tasty food in half an hour is more coherent with me being not hungry than predictive model of me reading a book - but this does not need to be hardwired, but can be learned.

Given that evolution has good reasons to "fix priors" on multiple evolutionarily relevant inputs, I would not expect actual humans to seek dark rooms, but I would expect the PP system to occasionally seek a way to block or modify the interoceptive signals.

3. My impression about how you use 'frames' is ... the central examples are more like somewhat complex model ensembles including some symbolic/language based components, rather than e.g. "there is gravity" frame or "model of apple" frame. My guess is this will likely be useful for practical use, but with attempts to formalize it, I think a better option is to start with the existing HGM maths.

↑ comment by Richard_Ngo (ricraz) · 2023-07-04T17:35:06.374Z · LW(p) · GW(p)

let's assume I have this really strong belief ("fixed prior") I won't be hungry 1 hour in future. Conditional on that, I can compute what are my other sensory inputs half an hour from now. Predictive model of me eating a tasty food in half an hour is more coherent with me being not hungry than predictive model of me reading a book - but this does not need to be hardwired, but can be learned.

I still think you need to have multiple types of belief here, because this fixed prior can't be used to make later deductions about the world. For example, suppose that I'm stranded in the desert with no food. It's a new situation, I've never been there before. If my prior strongly believes I won't be hungry 10 hours in the future, I can infer that I'm going to be rescued; and if my prior strongly believes I won't be sleepy 10 hours from now, then I can infer I'll be rescued without needing to do anything except take a nap. But of course I can't (and won't) infer that.

(Maybe you'll say "well, you've learned from previous experience that the prior is only true if you can actually figure out a way of making it true"? But then you may as well just call it a "goal", I don't see the sense in which it's a belief.)

This type of thing is why I'm wary about "starting with the existing HGM maths". I agree that it's rare for humans to ignore symbolic reasoning... but the HGM math might ignore symbolic reasoning! And it could happen in a way that's pretty hard to spot. If this were my main research priority I'd do it anyway (although even then maybe I'd write this sequence first) but as it is my main goal here is to have a minimum viable epistemology which refutes bayesian rationalism, and helps rationalists reason better about AI.

I'd be interested in your favorite links to the HGM math though, sounds very useful to read up more on it.

comment by dxu · 2023-07-03T22:57:14.329Z · LW(p) · GW(p)

Can you say more about how a “frame” differs from a “model”, or a “hypothesis”?

(I understand the distinction between those three and “propositions”. It’s less clear to me how they differ from each other. And if they don’t differ, then I’m pretty sure you can just integrate over different “frames” in the usual way to produce a final probability/EV estimate on whatever proposition/decision you’re interested in. But I’m pretty sure you don’t need Garrabrant induction to do that, so I mostly think I don’t understand what you’re talking about.)

Replies from: ricraz

↑ comment by Richard_Ngo (ricraz) · 2023-07-04T09:54:59.240Z · LW(p) · GW(p)

In the bayesian context, hypotheses are taken as mutually exclusive. Frames aren't mutually exclusive, so you can't have a probability distribution over them. For example, my physics frame and my biology frame could both be mostly true, but have small overlaps where they disagree (e.g. cases where the physics frame computes an answer using one approximation, and the biology frame computes an answer using another approximation). A tentative example: the physics frame endorses the "calories in = calories out" view on weight loss, whereas this may be a bad model of it under most practical circumstances.
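The contrast with Bayesian hypotheses can be written out as a short worked example. The credence values below are invented for illustration. For hypotheses $h_1, \dots, h_n$ that are mutually exclusive and exhaustive, the credences form a distribution and can be integrated over in the usual way:

$$
\sum_{i=1}^{n} P(h_i) = 1, \qquad P(x) = \sum_{i=1}^{n} P(h_i)\, P(x \mid h_i)
$$

If instead two overlapping frames are each judged "mostly true", say with hypothetical credences $c_{\text{physics}} = 0.9$ and $c_{\text{biology}} = 0.9$, then

$$
c_{\text{physics}} + c_{\text{biology}} = 1.8 > 1,
$$

so these numbers cannot serve as the weights $P(h_i)$ in the sum above without first being forced into a mutually exclusive partition.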

By contrast, the word "model" doesn't have connotations of being mutually exclusive, you can have many models of many different domains. The main difference between frames and models is that the central examples of models are purely empirical (i.e. they describe how the world works) rather than having normative content. Whereas the frames that many people find most compelling (e.g. environmentalism, rationalism, religion, etc) have a mixture of empirical and normative content, and in fact the normative content is compelling in part due to the empirical content. Very simple example: religions make empirical claims about god existing, and moral claims too, but when you no longer believe that god exists, you typically stop believing in their (religion-specific) moral claims.

comment by johnswentworth · 2023-07-03T20:29:14.171Z · LW(p) · GW(p)

My current best guess for how to formalize frames is as a combination of Garrabrant inductors, RIAs, and some kind of voting system: traders would earn wealth from a combination of making predictions and directly influencing decisions, and then spend that wealth to determine which values should be used as evaluation criteria for the decisions

My two cents: I think the phenomena you're emphasizing are roughly-accurately characterized in terms of uncertainty over binding: i.e. uncertainty over the preconditions under which various Bayesian submodels apply, and how to map the "variables" in those submodels to stuff-in-the-world. Examples:

- "Cats are carnivorous" -> ... usually binds to a given cat in given circumstances, but we're not confident that it binds to all cats in all circumstances.

- "Bob is tall" -> ... assuming you're looking at the right person, but you're not confident that "Bob" in this model binds to the person you're looking at. (I think the part about "tall" being relative is a separate phenomenon from the main thing you're gesturing at.)

- "America is beautiful" -> (I think this is a qualitatively different thing which is getting conflated with the main category of stuff you're gesturing at.)

- "When people thought the earth was flat, they were wrong. When people thought the earth was spherical, they were wrong. ..." -> The flat-model does make accurate predictions locally, i.e. over small enough chunks of the Earth's surface, so it's useful insofar as we restrict its binding to that range. The sphere model binds accurately over a much wider range.

- "two models which both make accurate predictions, but where the questions they predict don’t fully overlap" -> The two models bind to different real-world situations.

↑ comment by Richard_Ngo (ricraz) · 2023-07-03T20:49:56.400Z · LW(p) · GW(p)

Just a quick response: I don't really understand what you mean by "binding", got a link to where you discuss it more? But just going off my intuitive reading of it: the "binding" thing feels like a kinda Kripkean way of thinking about reference—something either binds or it doesn't—which I am not a fan of. I think in more Russellian terms: we have some concept, which has some properties, and there's a pretty continuous spectrum over how well those properties describe some entity in the world.

For example, when I'm uncertain about "cats are carnivorous", it's not that I have a clear predicate "carnivorous" and I'm uncertain which cats it "binds" to. Rather, I think that probably almost all cats are kinda carnivorous, e.g. maybe they strongly prefer meat, and if they never get meat they'll eventually end up really unhealthy, but maybe they'd still survive, idk...

comment by Kevin Shen (kevin-shen) · 2024-01-26T08:08:54.804Z · LW(p) · GW(p)

Great article, but I might be biased since I’m also a fan of Chapman. I find the comments to be fascinating.

It seems to me that people read your article and think, “oh, that’s not new, I have a patch for that in my system.”

However, I think the point you and Chapman are trying to make is that we should think about these patches at the interface of rationality and the real world more carefully. The missing connection, then, is people wondering, why? Are you trying to:

1. simply argue that the problem exists?

2. propose a comprehensive approach?

3. propose a specific instance of the approach?

4. connect stronger to the operations of human hardware?

And, would 3) end up looking itself like a patch?

Also, very curious to hear your thoughts on normativity. That’s usually the subject of ethics, so would the discussion become meta ethical?